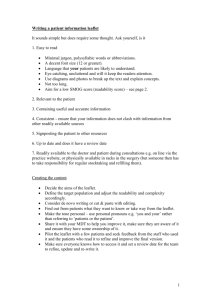

Neural Approaches to Text Readability: Supervised & Unsupervised Methods
