Contents

Task 1.1
(P1) Design a relational database system using appropriate design tools and techniques, containing at least four interrelated tables, with clear statements of user and system requirements.
a. Identify the user and system requirements to design a database for the above scenario.
b. Identify entities and attributes of the given scenario and design a relational database system using conceptual design (ER Model) by including identifiers (primary key) of entities and cardinalities, participations of relationships.
c. Convert the ER Model into logical database design using relational database model including primary keys, foreign keys and referential integrities. It should contain at least five interrelated tables.
Task 1.2
a. Explain data normalization with examples. Check whether the provided logical design in task 1.1 is normalized. If not, normalize the database by removing the anomalies.
b. Design set of simple interfaces to input and output for the above scenario using Wireframe or any interface-designing tool.
c. Explain the validation mechanisms to validate data in the tables with examples.
Task 1.3
(D1) Assess the effectiveness of the design in relation to user and system requirements
a. Check whether the given design (ERD and logical design) represents the identified user and system requirements, to assess the effectiveness of the design.
Database tables, screenshots, table design
1) Admin user registration
Task 2
Task 2.1
a. Develop a relational database system according to the ER diagram you have created (use SQL DDL statements).
b. Provide evidence of the use of a suitable IDE to create a simple interface to insert, update and delete data in the database
c. Implement the validation methods explained in task 1.2 part c.
d. Display the payment details with the job details and the customer details using join queries
Task 2.2
a. Explain the usage of DML with the queries mentioned below by giving at least one example per case from the developed database.
Select
Task 2.3
a. Explain how security mechanisms have been used and the importance of these mechanisms for the security of the database. Implement proper security mechanisms (e.g. user groups, access permissions) in the developed database.
Training
Setting standards
Go live
Task 2.4
a. Explain the usage of the SQL statements below with examples from the developed database
Group by
Order by
Having
Between
Where
Task 3
Task 3.1
a. Provide a suitable test plan to test the system against user and system requirements
b. Provide relevant test cases for the database you have implemented
Task 3.2
a. Explain how the selected test data in task 3.1 b) can be used to improve the effectiveness of testing.
Task 3.3
a. Get independent feedback on the SmartMovers database solution from non-technical users and some developers (use surveys, questionnaires, interviews or any other feedback collecting method) and make recommendations and suggestions for improvements in a separate conclusion/recommendations section.
Task 4
Task 4.1
a. Prepare a simple users' guide and a technical documentation for the support and maintenance of the software.
Task 4.2
a. SmartMovers technical documentation should include some of the UML diagrams (use case diagram, class diagram, etc.), flow charts for the important functionalities, context level DFD and the Level 1 DFD
Task 4.3
a. Suggest the future improvements that may be required to ensure the continued effectiveness of the database system.
Conclusion
5. Reference
Bibliography

Task 1.1

(P1) Design a relational database system using appropriate design tools and techniques,

containing at least four interrelated tables, with clear statements of user and system

requirements.

a.

Identify the user and system requirements to design a database for the above

scenario.

In later stages, this model may be converted into a physical data model.

Data model

The term data model refers to two quite different things: a description of data structure, and the way data are organized using, for example, a database management system.

Data structure

A data model describes the structure of the data within a given domain and, by implication, the underlying structure of that domain itself. This means that a data model in fact specifies a dedicated grammar for a dedicated artificial language for that domain. Since there is little standardization of data models, each data model is different. This means that data structured according to one data model is difficult to integrate with data structured according to another data model. A data model may represent classes of entities (kinds of things) about which a company wishes to hold information, the attributes of that information, relationships among those entities, and (often implicit) relationships among those attributes. The model describes the organization of the data to some extent irrespective of how data might be represented in a computer system.

A generic data model obeys the following rules:

1. Candidate attributes are treated as representing relationships to other entity types.

2. Entity types are represented, and are named after, the underlying nature of a thing, not the role it plays in a particular context.

3. Entities have a local identifier within a database or exchange file. These should be artificial and managed to be unique. Relationships are not used as part of the local identifier.

4. Activities, relationships and event effects are represented by entity types (not attributes).

5. Entity types are part of a sub-type/super-type hierarchy of entity types, in order to define a universal context for the model. As types of relationships are also entity types, they are also arranged in a sub-type/super-type hierarchy of types of relationship.

6. Types of relationships are defined on a high (generic) level, being the highest level where the type of relationship is still valid. For example, a composition relationship (indicated by the phrase 'is composed of') is defined as a relationship between an 'individual thing' and another 'individual thing' (and not just between, for example, an order and an order line). This generic level means that the type of relation may in principle be applied between any individual thing and any other individual thing. Additional constraints are defined in the 'reference data', being standard instances of relationships between kinds of things.

Data organization

Another kind of data model describes how to organize data using a database management system or other data management technology. It describes, for example, relational tables and columns or object-oriented classes and attributes. Such a data model is sometimes referred to as the physical data model, but in the original ANSI three-schema architecture it is called "logical". In that architecture, the physical model describes the storage media (cylinders, tracks, and tablespaces). Ideally, this model is derived from the more conceptual data model described previously. It may differ, however, to account for constraints such as processing capacity and usage patterns.

While data analysis is a common term for data modeling, the activity actually has more in common with the ideas and methods of synthesis (inferring general concepts from particular instances) than it does with analysis (identifying component concepts from more general ones). Data modeling strives to bring the data structures of interest together into a cohesive, inseparable whole by eliminating unnecessary data redundancies and by relating data structures with relationships.

Database design

This section covers the basis of relational database design and explains how to make a good database design. Designing a database is in fact fairly easy, but there are a few rules to stick to. It is important to know what these rules are, but it is more important to know why they exist, otherwise you will tend to make mistakes.

Normalization makes the SmartMovers data model flexible, and that makes working with SmartMovers data much easier. It is worth taking the time to learn these rules and apply them. The database used in this section is designed with the database design and modeling tool DeZign for Databases.

A good database design starts with a list of the data that you want to include in the SmartMovers database and what you want to be able to do with the database later on. This can all be written in plain language, without any SQL. At this stage you should try not to think in tables or columns, but just ask: "What do I need to know?" Do not take this too lightly, because if you find out later that you forgot something, you usually need to start all over again. Adding things to the SmartMovers database afterwards is mostly a lot of work.

b.

Identify entities and attributes of the given scenario and design a relational database

system using conceptual design (ER Model) by including identifiers (primary Key) of

entities and cardinalities, participations of relationships.

In this database design section, the entities, their attributes and the relationships between them are identified. The Entity Relationship diagram has been drawn according to them.

Identify entities, relationships and attributes

Entity       Attribute            Data type     Key function
Lorry        Lorry_ID_Number      int           Primary key
             Lorry_Name           varchar(50)
             Position             varchar(50)
             Home_Town            varchar(50)
             Lorry_Year           datetime
             Driver_id            varchar(50)   Foreign key
             Lorry_size           int
Driver       Driver_ID            int           Primary key
             Driver_Name          varchar(50)
             Position             varchar(50)
             Home_Town            varchar(50)
             Lorry_id             int           Foreign key
             Age                  varchar(50)
Employees    EMP_ID_Number        int           Primary key
             EMP_Name
             Position
             Home_Town
             Job Year
             EMP_Address
             EMP_Tell
             B_ID                               Foreign key
Department   DEP_ID_Number        int           Primary key
             DEP_Name             varchar(50)
             DEPHome_Town         varchar(50)
             Tell number          int
Customers    Customers ID         int           Primary key
             Customers NAME       varchar(50)
             Customers ADDRESS    varchar(50)
             Customers            varchar(50)
Booking      Booking_ID           int           Primary key
             Booking_Name         varchar(50)
             Type_Name            int
             level
             EMP_ID_Numbers                     Foreign key

The main relationships between these entities need to be identified in the entity relationship diagram.

c.

Convert the ER Model into logical database design using relational database model

including primary keys foreign keys and referential Integrities. It should contain at least

five interrelated tables.

Lorry (Lorry_ID_Number, Lorry_Name, Position, Home_Town, Lorry_Year, Driver_id, Lorry_size)
Driver (Driver_ID, Driver_Name, Position, Home_Town, Lorry_id, Age)
Employees (EMP_ID_Number, EMP_Name, Position, Home_Town, Job Year, EMP_Address, EMP_Tell, B_ID)
Department (DEP_ID_Number, DEP_Name, DEPHome_Town, Tell number)
Customers (Customers ID, Customers NAME, Customers ADDRESS, Customers)
Booking (Booking_ID, Booking_Name, Type_Name, level, EMP_ID_Numbers)
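
As a rough illustration of how primary keys, foreign keys and referential integrity from the logical design could be declared, the sketch below creates two of the tables in an in-memory SQLite database through Python's sqlite3 module. The column types, the reduced column lists, the sample rows and the direction of the foreign key (Lorry referencing Driver) are assumptions made for this example only, not the report's final schema.

```python
import sqlite3

# Hypothetical DDL for two of the tables; types and the foreign key
# direction are assumptions made for illustration.
SCHEMA = """
CREATE TABLE Driver (
    Driver_ID   INTEGER PRIMARY KEY,
    Driver_Name VARCHAR(50) NOT NULL,
    Home_Town   VARCHAR(50)
);
CREATE TABLE Lorry (
    Lorry_ID_Number INTEGER PRIMARY KEY,
    Lorry_Name      VARCHAR(50) NOT NULL,
    Lorry_size      INTEGER,
    Driver_id       INTEGER REFERENCES Driver(Driver_ID)
);
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(SCHEMA)

# Made-up sample rows: one driver and one lorry that references that driver.
conn.execute("INSERT INTO Driver VALUES (1, 'Sample Driver', 'Sample Town')")
conn.execute("INSERT INTO Lorry VALUES (10, 'Sample Lorry', 5, 1)")

# Referential integrity: a lorry that points at a missing driver is rejected.
try:
    conn.execute("INSERT INTO Lorry VALUES (11, 'Orphan Lorry', 5, 999)")
    fk_rejected = False
except sqlite3.IntegrityError:
    fk_rejected = True

lorry_count = conn.execute("SELECT COUNT(*) FROM Lorry").fetchone()[0]
print("orphan row rejected:", fk_rejected, "| lorries stored:", lorry_count)
```

Declaring the reference in the table definition lets the database itself refuse orphan rows, so the check does not depend on every application screen remembering to do it.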

Task 1.2

a.

Explain data normalization with examples. Check whether the provided logical

design in task 1.1 is normalized. If not, normalize the database by removing the

anomalies.

Consider a table with four attributes: lorry_id for storing the employee's id, lorry_name for storing the employee's name, lorry_address for storing the employee's address and lorry_dept for storing the details of the department in which the employee works. At some point in time the table looks like this:

lorry_id  lorry_name  lorry_address  lorry_dept
101       Rick        Delhi          D001
101       Rick        Delhi          D002
123       Maggie      Agra           D890
166       Glenn       Chennai        D900
166       Glenn       Chennai        D004

The above table is not normalized. The problems that arise when a table is not normalized are described below.

Update anomaly: In the above table there are two rows for employee Rick, as he belongs to two departments of the company. To update Rick's address, the same change has to be made in two rows or the data will become inconsistent. If the correct address is updated in one department but not in the other, then according to the database Rick would have two different addresses, which is not correct and leads to inconsistent data.

Insert anomaly: Suppose a new employee joins the company who is under training and currently not assigned to any department. It would not be possible to insert the data into the table if the lorry_dept field does not allow nulls.

Delete anomaly: Suppose that at some point the company closes department D890. Deleting the rows that have lorry_dept as D890 would also delete the information of employee Maggie, since she is assigned only to this department.

To overcome these anomalies the data needs to be normalized. Normalization is discussed in the next section.
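
The update and delete anomalies described above can be reproduced on the sample rows. The following is a minimal sketch using Python's sqlite3 module; the table name lorry_details and the new address 'Mumbai' are made up for the demonstration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE lorry_details (lorry_id INTEGER, lorry_name TEXT, "
    "lorry_address TEXT, lorry_dept TEXT)"
)
rows = [
    (101, "Rick", "Delhi", "D001"),
    (101, "Rick", "Delhi", "D002"),
    (123, "Maggie", "Agra", "D890"),
    (166, "Glenn", "Chennai", "D900"),
    (166, "Glenn", "Chennai", "D004"),
]
conn.executemany("INSERT INTO lorry_details VALUES (?, ?, ?, ?)", rows)

# Update anomaly: changing the address in only one of Rick's two rows
# leaves him with two different addresses.
conn.execute(
    "UPDATE lorry_details SET lorry_address = 'Mumbai' "
    "WHERE lorry_id = 101 AND lorry_dept = 'D001'"
)
rick_addresses = sorted(
    r[0] for r in conn.execute(
        "SELECT DISTINCT lorry_address FROM lorry_details WHERE lorry_id = 101"
    )
)

# Delete anomaly: closing department D890 also removes every trace of Maggie.
conn.execute("DELETE FROM lorry_details WHERE lorry_dept = 'D890'")
maggie_rows = conn.execute(
    "SELECT COUNT(*) FROM lorry_details WHERE lorry_name = 'Maggie'"
).fetchone()[0]

print(rick_addresses, maggie_rows)
```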

Normalization

Here are the most commonly used normal forms:

• First normal form (1NF)
• Second normal form (2NF)
• Third normal form (3NF)
• Boyce-Codd normal form (BCNF)

First normal form (1NF)

According to the rule of first normal form, an attribute (column) of a table cannot hold multiple values. It should hold only atomic values.

Example: Suppose a company wants to store the names and contact details of its employees. It creates a table that looks like this:

lorry_id  lorry_name  lorry_address  lorry_mobile
101       Herschel    New Delhi      8912312390
102       Jon         Kanpur         8812121212
                                     9900012222
103       Ron         Chennai        7778881212
104       Lester      Bangalore      9990000123
                                     8123450987

Two employees (Jon and Lester) have two mobile numbers each, so the company stored them in the same field, as the table above shows.

This table is not in 1NF. The rule says "each attribute of a table must have atomic (single) values", and the lorry_mobile values for employees Jon and Lester violate that rule.

To make the table comply with 1NF, the data should look like this:

lorry_id  lorry_name  lorry_address  lorry_mobile
101       Herschel    New Delhi      8912312390
102       Jon         Kanpur         8812121212
102       Jon         Kanpur         9900012222
103       Ron         Chennai        7778881212
104       Lester      Bangalore      9990000123
104       Lester      Bangalore      8123450987
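
The conversion to 1NF shown above amounts to emitting one row per single value. A small sketch in Python follows; the list-based representation of the multi-valued field and the function name to_first_normal_form are assumptions made for illustration.

```python
# Non-1NF rows: the last field holds a list of mobile numbers.
unnormalized = [
    (101, "Herschel", "New Delhi", ["8912312390"]),
    (102, "Jon", "Kanpur", ["8812121212", "9900012222"]),
    (103, "Ron", "Chennai", ["7778881212"]),
    (104, "Lester", "Bangalore", ["9990000123", "8123450987"]),
]


def to_first_normal_form(rows):
    """Emit one row per (employee, mobile number) so every value is atomic."""
    return [
        (lorry_id, name, address, mobile)
        for lorry_id, name, address, mobiles in rows
        for mobile in mobiles
    ]


normalized = to_first_normal_form(unnormalized)
for row in normalized:
    print(row)
```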

Second normal form (2NF)

A table is said to be in 2NF if both of the following conditions hold:

• The table is in 1NF (first normal form).
• No non-prime attribute is dependent on a proper subset of any candidate key of the table.

An attribute that is not part of any candidate key is known as a non-prime attribute.

Example: Suppose a school wants to store data about its teachers (identified here by driver_id) and the subjects they teach. Since a teacher can teach more than one subject, the table can have multiple rows for the same teacher. The subject column, which is part of the candidate key, is not shown in the listing below.

driver_id  driver_age
111        38
111        38
222        38
333        40
333        40

Candidate Keys: {driver_id, subject}

Non prime attribute: driver_age

The table is in 1 NF because each attribute has atomic values. However, it is not in 2NF because

non prime attribute driver_age is dependent on driver_id alone which is a proper subset of

candidate key. This violates the rule for 2NF as the rule says “no non-prime attribute is

dependent on the proper subset of any candidate key of the table”.

To make the table comply with 2NF we can break it into two tables like this:

driver_details table:

driver_id  driver_age
111        38
222        38
333        40
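
The dependency behind the 2NF violation, driver_id -> driver_age, can be checked against the sample rows. The helper below is a hypothetical sketch. Sample data can only refute a functional dependency, never prove that it holds in general.

```python
def determines(rows, lhs, rhs):
    """Return True if equal `lhs` values always come with equal `rhs` values."""
    seen = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        value = tuple(row[c] for c in rhs)
        # The first value seen for a key is remembered; any later mismatch
        # refutes the dependency.
        if seen.setdefault(key, value) != value:
            return False
    return True


rows = [
    {"driver_id": 111, "driver_age": 38},
    {"driver_id": 111, "driver_age": 38},
    {"driver_id": 222, "driver_age": 38},
    {"driver_id": 333, "driver_age": 40},
    {"driver_id": 333, "driver_age": 40},
]

id_determines_age = determines(rows, ["driver_id"], ["driver_age"])
age_determines_id = determines(rows, ["driver_age"], ["driver_id"])
print(id_determines_age, age_determines_id)
```

Because driver_age depends on driver_id alone, it can be stored once per driver_id in its own table, which is what the driver_details table does.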

Third Normal form (3NF)

A table design is said to be in 3NF if both of the following conditions hold:

• The table must be in 2NF.
• Transitive functional dependency of a non-prime attribute on any super key should be removed.

An attribute that is not part of any candidate key is known as a non-prime attribute.

In other words, 3NF can be explained like this: a table is in 3NF if it is in 2NF and, for each functional dependency X -> Y, at least one of the following conditions holds:

• X is a super key of the table
• Y is a prime attribute of the table

An attribute that is a part of one of the candidate keys is known as a prime attribute.

Example: Suppose a company wants to store the complete address of each employee. It creates a table named employee_details that looks like this:

lorry_id  lorry_name  lorry_zip  lorry_state  lorry_city  lorry_district
1001      John        282005     UP           Agra        Dayal Bagh
1002      Ajeet       222008     TN           Chennai     M-City
1006      Lora        282007     TN           Chennai     Urrapakkam
1101      Lilly       292008     UK           Pauri       Bhagwan
1201      Steve       222999     MP           Gwalior     Ratan

Super keys: {lorry_id}, {lorry_id, lorry_name}, {lorry_id, lorry_name, lorry_zip}…so on

Candidate Keys: {lorry_id}

Non-prime attributes: all attributes except lorry_id are non-prime as they are not part of any

candidate keys.

Here, lorry_state, lorry_city and lorry_district are dependent on lorry_zip, and lorry_zip is dependent on lorry_id. That makes the non-prime attributes (lorry_state, lorry_city and lorry_district) transitively dependent on the super key (lorry_id). This violates the rule of 3NF.

To make this table comply with 3NF we have to break it into two tables to remove the transitive dependency:

employee table:

lorry_id  lorry_name  lorry_zip
1001      John        282005
1002      Ajeet       222008
1006      Lora        282007
1101      Lilly       292008
1201      Steve       222999

employee_zip table:

lorry_zip  lorry_state  lorry_city  lorry_district
282005     UP           Agra        Dayal Bagh
222008     TN           Chennai     M-City
282007     TN           Chennai     Urrapakkam
292008     UK           Pauri       Bhagwan
222999     MP           Gwalior     Ratan
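
The 3NF decomposition loses no information: joining the two tables on lorry_zip rebuilds the original rows. The sketch below shows this with Python's sqlite3 module. The table names employee and employee_zip and the TEXT/INTEGER column types are choices made for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employee_zip (
    lorry_zip      TEXT PRIMARY KEY,
    lorry_state    TEXT,
    lorry_city     TEXT,
    lorry_district TEXT
);
CREATE TABLE employee (
    lorry_id   INTEGER PRIMARY KEY,
    lorry_name TEXT,
    lorry_zip  TEXT REFERENCES employee_zip(lorry_zip)
);
""")
conn.executemany("INSERT INTO employee_zip VALUES (?, ?, ?, ?)", [
    ("282005", "UP", "Agra", "Dayal Bagh"),
    ("222008", "TN", "Chennai", "M-City"),
    ("282007", "TN", "Chennai", "Urrapakkam"),
    ("292008", "UK", "Pauri", "Bhagwan"),
    ("222999", "MP", "Gwalior", "Ratan"),
])
conn.executemany("INSERT INTO employee VALUES (?, ?, ?)", [
    (1001, "John", "282005"),
    (1002, "Ajeet", "222008"),
    (1006, "Lora", "282007"),
    (1101, "Lilly", "292008"),
    (1201, "Steve", "222999"),
])

# Joining on the zip code reconstructs the original six-column rows.
joined = conn.execute("""
    SELECT e.lorry_id, e.lorry_name, e.lorry_zip,
           z.lorry_state, z.lorry_city, z.lorry_district
    FROM employee AS e
    JOIN employee_zip AS z ON z.lorry_zip = e.lorry_zip
    ORDER BY e.lorry_id
""").fetchall()

for row in joined:
    print(row)
```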

Boyce Codd normal form (BCNF)

It is an advanced version of 3NF, which is why it is also referred to as 3.5NF. BCNF is stricter than 3NF. A table complies with BCNF if it is in 3NF and, for every functional dependency X -> Y, X is a super key of the table.

Example: Suppose there is a company in which employees work in more than one department. The data is stored like this:

lorry_id  lorry_nationality  lorry_dept                    dept_type  dept_no_of_lorry
1001      Austrian           Production and planning       D001       200
1001      Austrian           stores                        D001       250
1002      American           design and technical support  D134       100
1002      American           Purchasing department         D134       600

Functional dependencies in the table above:

lorry_id -> lorry_nationality

lorry_dept -> {dept_type, dept_no_of_lorry}

Candidate key: {lorry_id, lorry_dept}

The table is not in BCNF, as neither lorry_id nor lorry_dept alone is a key.

To make the table comply with BCNF we can break it into three tables like this:

lorry_nationality table:

lorry_id  lorry_nationality
1001      Austrian
1002      American

lorry_dept table:

lorry_dept                    dept_type  dept_no_of_lorry
Production and planning       D001       200
stores                        D001       250
design and technical support  D134       100
Purchasing department         D134       600

lorry_dept_mapping table:

lorry_id  lorry_dept
1001      Production and planning
1001      stores
1002      design and technical support
1002      Purchasing department

Functional dependencies:

lorry_id -> lorry_nationality

lorry_dept -> {dept_type, dept_no_of_lorry}

Candidate keys:

For first table: lorry_id

For second table: lorry_dept

For third table: {lorry_id, lorry_dept}
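
The BCNF test ("the left side of every functional dependency must be a super key") can be automated with an attribute closure computation. The following is a minimal sketch under the two functional dependencies listed above. The function names are hypothetical, and the check assumes every listed dependency is non-trivial.

```python
def closure(attributes, dependencies):
    """Return every attribute functionally determined by `attributes`."""
    result = set(attributes)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in dependencies:
            # If the left side is already covered, the right side follows.
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


ALL = {"lorry_id", "lorry_nationality", "lorry_dept",
       "dept_type", "dept_no_of_lorry"}
FDS = [
    (frozenset({"lorry_id"}), frozenset({"lorry_nationality"})),
    (frozenset({"lorry_dept"}), frozenset({"dept_type", "dept_no_of_lorry"})),
]


def bcnf_violations(attributes, dependencies):
    """List the dependencies whose left side is not a super key."""
    return [
        (lhs, rhs) for lhs, rhs in dependencies
        if closure(lhs, dependencies) != attributes
    ]


violations = bcnf_violations(ALL, FDS)
key_closure = closure({"lorry_id", "lorry_dept"}, FDS)
print(len(violations), key_closure == ALL)
```

Both dependencies violate BCNF in the original table, while the closure of {lorry_id, lorry_dept} covers every attribute, which confirms it as the candidate key.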


b.

Design set of simple interfaces to input and output for the above scenario using

Wireframe or any interface-designing tool.

c.

Explain the validation mechanisms to validate data in the tables with examples.

Stored procedures are typically used for data validation or to encapsulate large, complex processing instructions that combine several SQL queries.

Data validation occurs because data is passed into a stored procedure through parameters that are explicitly set to SQL data types (or user-defined types, which are also based on SQL data types). Only validation of the data type occurs. More in-depth validation has to be built if it is needed (for example, checking for decimals in a NUMBER data type). Parameterized queries are generally safer from SQL injection, but it really depends on what the parameters are and what the query is doing.

For example, if a procedure parameter IN_VALUE is declared as NUMBER, submitting a VARCHAR/string results in an error, as does anything other than what NUMBER supports. An error also occurs if the IN_VALUE data type cannot be implicitly converted to the data type of the target table column.

A stored procedure encapsulates a transaction, which is what allows complex processing instructions (meaning more than one SQL query). Transaction handling (that is, having to explicitly state "COMMIT" or "ROLLBACK") depends on settings.
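
The validation ideas above can be sketched with Python's sqlite3 module. SQLite has no stored procedures, so this example swaps in a plain Python function (add_driver, a hypothetical name) that plays the role of the procedure. It combines a type check on the parameter, bound parameters instead of string concatenation, and CHECK / NOT NULL constraints in the table. The age range 18 to 70 and the sample values are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE Driver (
        Driver_ID   INTEGER PRIMARY KEY,
        Driver_Name TEXT NOT NULL CHECK (length(trim(Driver_Name)) > 0),
        Age         INTEGER CHECK (Age BETWEEN 18 AND 70)
    )
""")


def add_driver(driver_id, name, age):
    """Validate the parameter type, then insert using bound parameters."""
    if not isinstance(age, int):
        raise ValueError("age must be a whole number")
    try:
        conn.execute("INSERT INTO Driver VALUES (?, ?, ?)",
                     (driver_id, name, age))
    except sqlite3.IntegrityError as exc:
        # Raised when a CHECK, NOT NULL or PRIMARY KEY constraint fails.
        raise ValueError(f"rejected by the database: {exc}") from exc


add_driver(1, "Sample Driver", 35)  # valid row

errors = []
for bad in [(2, "Too Young", 12), (3, "", 40), (4, "Text Age", "forty")]:
    try:
        add_driver(*bad)
    except ValueError as exc:
        errors.append(str(exc))

stored = conn.execute("SELECT COUNT(*) FROM Driver").fetchone()[0]
print(stored, errors)
```

Keeping the range and presence rules in the table definition means they are enforced no matter which interface writes to the table, while the function adds the type check that the parameter declaration would provide in a stored procedure.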

Task 1.3

(D1) Assess the effectiveness of the design in relation to user and system requirements

a.

Check whether the given design (ERD and logical design) represents the identified user and system requirements, to assess the effectiveness of the design.

Database tables, Screen shots, table design

SELECT * FROM lorry;

1) Admin user registration

Lorry info

Task 2

Task 2.1

A.

Develop a relational database system according to the ER diagram you have created

(Use SQL DDL statements).

Data manipulation language (DML) is a structured language used in databases to manipulate the data in some way. A few of the basic operations in data manipulation language include adding to the database, changing a record, deleting a record, and moving data from one position to another. DML commands are simple and start with a keyword such as "SELECT" or "UPDATE". DML can be split into procedural and nonprocedural code, with the user specifying either what data is needed and how to reach it, or just what is needed, respectively. Without DML, there would be no way to manipulate the data in the database.

One of the main reasons for using a database is to store information, but the data is usually useless or of limited use if it cannot be manipulated. DML is the standard language used to interact with the information stored in the database. Through this list of commands, a user can make a range of changes to the database to increase its usefulness.
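
The basic DML operations named above (insert, update, delete, select) can be sketched as follows with Python's sqlite3 module. The reduced lorry table and the sample rows are made up for the demonstration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE lorry (Lorry_ID_Number INTEGER PRIMARY KEY, "
    "Lorry_Name TEXT, Lorry_size INTEGER)"
)

# INSERT: add two records.
conn.executemany("INSERT INTO lorry VALUES (?, ?, ?)",
                 [(1, "Lorry A", 5), (2, "Lorry B", 10)])

# UPDATE: change one field of an existing record.
conn.execute("UPDATE lorry SET Lorry_size = ? WHERE Lorry_ID_Number = ?",
             (12, 2))

# DELETE: remove a record.
conn.execute("DELETE FROM lorry WHERE Lorry_ID_Number = ?", (1,))

# SELECT: read back what is left.
remaining = conn.execute("SELECT * FROM lorry").fetchall()
print(remaining)
```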

In ITIL, the abbreviation DML also stands for the Definitive Media Library, which is a different concept from data manipulation language. In general, the change management process is responsible for ensuring that the relevant records in the CMS are updated accordingly. By doing this we know which CIs were drawn from the DML to create which production systems, and we understand the exact configuration of what is in production. This is very important information because it helps virtually all of the other processes, including Incident, Problem, and Asset, since they must know exactly what is in production at any given time.

A given DML will grow with time. In the same way that procedures are needed to add CIs, there also need to be DML procedures for the review of the CIs it contains. In certain cases we will want to keep only the most current copy of a given CI, and for others we may want to store all versions. At issue are the amount of storage space required and also the need to reduce human error around using obsolete versions. There should be a plan to decommission CIs from the DML, with proper disposition including long-term archives, deletion, etc.

All things considered, the DML is a useful ITIL concept. By defining proper procedures and controls, the risks associated with unauthorized software entering production can be greatly reduced. The implementation of one or more DMLs, coupled with solid Change and Release and Deployment Management processes, creates a solid solution for groups looking to manage what is released into their production environment.

• With some of the Advantage data access mechanisms, SQL provides the only mechanism for executing stored procedures.
• SQL provides a mechanism for joining tables from multiple data dictionaries with a single connection.
• SQL reduces the amount of coding required to perform some complex tasks, especially if these tasks involve multiple tables.
• SQL makes code more portable to other RDBMS (relational database management systems).
• Since Advantage 7.1, you can control almost every aspect of the SmartMovers database, including tables, indexes, users, groups, views, triggers, publications and subscriptions, user-defined functions, and even the SmartMovers data dictionary properties, through SQL.

EXPLAIN SELECT * FROM `lorry`;

b.

Provide evidence of the use of a suitable IDE to create a simple interface to insert,

update and delete data in the database

insert

update

delete

c.

Implement the validation methods explained in task 1.2-part c.

This section presents an overview of the verification and validation activities performed

throughout the life cycle for complex electronics. The activities and tasks are described in more

detail in the corresponding phase sub-section. This page covers:

1. Requirements

2. Design Entry (preliminary design)

3. Design Synthesis (detailed design)

4. Implementation

5. Testing

6. Process Verification

Requirements

At the requirements phase, the system or sub-system level requirements are flowed down to the

complex electronics. This flow down is primarily the responsibility of the systems engineer,

though hopefully the design engineer for the complex electronics will be involved, to prevent

requirements being imposed on the hardware that it cannot meet!

Verification activity                                 Performed by
Evaluate requirements for the complex electronics     Quality assurance, systems engineers
Safety assessment                                     System safety engineer
Requirements review (e.g. PDR)                        All
Identification of applicable standards                Quality assurance, safety, design engineers
Formal methods                                        IV&V or knowledgeable practitioner

Quality assurance engineers should review the requirements for correctness, completeness, and
consistency. High-quality requirements are very valuable. Good requirements make everyone's
life easier, because bad requirements are hard to verify, are often interpreted differently by
different people, and may not implement the functions that are desired. Discovering during
testing that the device is missing important functionality, or is too slow, is something you
really want to avoid.

For safety-critical or mission-critical devices, formal methods may be used as a verification
tool. The requirements can be described in a specific language that allows a mathematical proof
that the device will not violate certain properties. Formal methods can be used at just the
requirements phase (to make sure you get those right), or also be used to help verify the design
once it is created. Most projects will not use formal methods.

Design Entry

During the design entry phase, the complex electronics functionality is described in a hardware
description language (HDL). The HDL code can be simulated in a test bench and its behavior
observed. This is an important verification task that is usually performed only by the design
engineer. Quality assurance engineers may review the simulation plans (if these are produced) or
results, and for critical devices they may witness some of the simulation runs.

Verification activity                                 Performed by
Evaluate design (HDL) against requirements            Quality assurance engineer
Functional simulation                                 Design engineer
Safety assessment                                     System safety engineer
Design review (e.g. CDR, peer review)                 All
Static analysis of HDL code                           IV&V, quality assurance engineer

Functional simulation involves simulating the functionality of a device to verify that it is
working per the specification and that it will produce correct results. This type of simulation
is good at finding errors or bugs in the design. Functional simulation is also used after the
design synthesis step, where the gate-level design is simulated.

The HDL code should be reviewed by one or more engineers who can evaluate the design. A good
reviewer needs to understand the system within which the device will operate, know the HDL
language being used, and be able to compare what the device is designed to do against its
requirements. This means that not just anyone can adequately review the design. Lack of
knowledge or experience will hamper the review, and often cause the designer to think it is a
waste of time.

For very complex or safety-critical devices, Independent Verification and Validation (IV&V) may
be called in to evaluate the design. One tool they can use is static analysis software for the
HDL code. This can look for problems or potential errors in the code. This tool is very similar
to some static analysis tools for software that look for possible logic or coding errors.

d.

Display the payment details with the job details and the customer details using Join

queries

-- Actors and their roles for the lorry titled 'TEST'
SELECT FIRSTNAME, NAME
FROM CUS_COL.ACTORS A, CUS_COL.Roles R, CUS_COL.LORYs F, CUS_COL.LORYsActorsRoles AR
WHERE A.ACTORID = AR.ACTORID
AND R.ROLEID = AR.ROLEID
AND AR.LORYID = F.LORYID
AND F.TITLE = 'TEST';

-- Titles of lorries that have a certificate
SELECT F.TITLE FROM CUS_COL.LORYs F WHERE CertificateID IS NOT NULL;

-- Producers linked to the lorry titled 'JURASIC'
SELECT P.NAME
FROM CUS_COL.Producers P, CUS_COL.LORYsProducers FP, CUS_COL.LORYs F
WHERE P.ProducerID = FP.ProducerID
AND FP.LORYID = F.LORYID
AND F.Title = 'JURASIC';
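
The task asks for payment details together with job and customer details. The sketch below shows one way such a join could look. It is an illustration only: the tables customer, job and payment, their columns, and the sample rows are assumptions and are not taken from the developed database. Python's built-in sqlite3 module is used so that the query can be run without a database server.

```python
import sqlite3

# Hypothetical schema and data: table and column names are assumptions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    CREATE TABLE job (
        job_id      INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
        description TEXT NOT NULL
    );
    CREATE TABLE payment (
        payment_id  INTEGER PRIMARY KEY,
        job_id      INTEGER NOT NULL REFERENCES job(job_id),
        amount      REAL NOT NULL
    );
    INSERT INTO customer VALUES (1, 'Perera'), (2, 'Silva');
    INSERT INTO job VALUES (10, 1, 'House move'), (11, 2, 'Office move');
    INSERT INTO payment VALUES (100, 10, 250.0), (101, 11, 900.0);
""")

# Each payment is joined to its job, and each job to its customer.
join_rows = conn.execute("""
    SELECT p.payment_id, p.amount, j.description, c.name
    FROM payment AS p
    INNER JOIN job AS j ON j.job_id = p.job_id
    INNER JOIN customer AS c ON c.customer_id = j.customer_id
    ORDER BY p.payment_id
""").fetchall()

for row in join_rows:
    print(row)
```

Explicit INNER JOIN ... ON clauses are used here instead of the comma-separated FROM list so that each join condition sits next to the table it belongs to.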

Task 2.2

a.

Explain the usage of DML with below mentioned queries by giving at least one

single example per each case from the developed database.

Select

update

delete
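
As a minimal sketch of the three DML statements listed above, the example below runs SELECT, UPDATE and DELETE against a small driver table. The columns id, name and address follow the driver queries used elsewhere in this report. The sample rows are invented for illustration, and SQLite (through Python's sqlite3 module) stands in for the real database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE driver (id INTEGER PRIMARY KEY, name TEXT, address TEXT)")
# Illustrative rows only; not data from the developed database.
conn.executemany(
    "INSERT INTO driver VALUES (?, ?, ?)",
    [(1, "Nimal", "Colombo"), (2, "Kamal", "Kandy"), (3, "Sunil", "Galle")],
)

# SELECT: read the rows that match a condition.
kandy_drivers = conn.execute(
    "SELECT id, name FROM driver WHERE address = ?", ("Kandy",)
).fetchall()

# UPDATE: change column values in the rows that match a condition.
updated = conn.execute(
    "UPDATE driver SET address = ? WHERE id = ?", ("Matara", 3)
).rowcount

# DELETE: remove the rows that match a condition.
deleted = conn.execute("DELETE FROM driver WHERE id = ?", (1,)).rowcount
conn.commit()

remaining = conn.execute(
    "SELECT id, name, address FROM driver ORDER BY id"
).fetchall()
print(kandy_drivers, updated, deleted, remaining)
```

The UPDATE and DELETE statements both carry a WHERE clause. Without one, every row in the table would be changed or removed.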

Task 2.3

a.

Explain how security mechanisms have been used and the importance of these

mechanisms for the security of the database. Implement proper security mechanisms

(EX: -User groups, access permissions) in the developed database.
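
User groups and access permissions can be sketched as follows. On a server RDBMS this is normally done with CREATE USER or CREATE ROLE together with GRANT and REVOKE. SQLite has no such statements, so this sketch uses the authorizer callback of Python's sqlite3 module as a stand-in. The group names, the permission sets and the customer table are assumptions made for illustration.

```python
import sqlite3

WRITE_ACTIONS = {sqlite3.SQLITE_INSERT, sqlite3.SQLITE_UPDATE, sqlite3.SQLITE_DELETE}

# Hypothetical user groups and the write actions each group may perform.
GROUP_PERMISSIONS = {
    "admin": set(WRITE_ACTIONS),
    "clerk": {sqlite3.SQLITE_INSERT},
    "viewer": set(),
}

def make_authorizer(group):
    """Return a callback that denies write actions the group does not hold."""
    allowed = GROUP_PERMISSIONS[group]

    def authorizer(action, arg1, arg2, db_name, source):
        if action in WRITE_ACTIONS and action not in allowed:
            return sqlite3.SQLITE_DENY
        return sqlite3.SQLITE_OK

    return authorizer

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO customer VALUES (1, 'Perera')")
conn.commit()

# A member of the viewer group can read but cannot delete.
conn.set_authorizer(make_authorizer("viewer"))
viewer_rows = conn.execute("SELECT name FROM customer").fetchall()
try:
    conn.execute("DELETE FROM customer")
    delete_denied = False
except sqlite3.DatabaseError:
    delete_denied = True

# A member of the admin group holds the delete permission.
conn.set_authorizer(make_authorizer("admin"))
admin_deleted = conn.execute("DELETE FROM customer WHERE id = 1").rowcount
print(viewer_rows, delete_denied, admin_deleted)
```

Granting permissions to groups rather than to individual users keeps the rules in one place: moving a person to another group changes everything they may do in a single step.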

Training

Most people in the SmartMovers organization probably get by with basic software. Do not expect
that they can do the same with the SmartMovers database. You need everyone to use it the same
way (more or less).

Investment in training is key to a successful system because:

1. People are happier with the system and are 'bought in' to its success

2. People work more effectively with it, and processes are done the 'organizational' way,
consistently

3. People are more efficient and better able to get on with their work

Setting standards

The more people who use a system, the more you need standards and agreed procedures. Set these
in advance and make sure everyone is signed up and agreed. A simple set of rules will make a huge
difference to data quality and performance. Databases work best when they are well set up and
maintained, and they depend entirely on quality data. You must agree how to manage data.

Go live

'Go live' is the day you let users loose on the system with real data to use for their everyday
work. If you can, let one department get to grips with the database at a time, perhaps staging
the implementation over a few days or even weeks. That way, if there are any last-minute
problems, they can be fixed before everyone finds them.

There comes a point when the whole organization will switch over to the new system. Make sure
you are ready and have a fallback position in case things go wrong.

Just as an ICT system should have a support contract, any serious investment in database
technology will need expert support, almost always from the supplier. It can be expensive but is
worth it. If the system breaks, you may end up with nothing.

Task 2.4

a.

Explain the usage of the below SQL statements with the examples from the

developed database

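Group by

The SQL GROUP BY clause groups rows that share the same value in one or more columns, so that
aggregate functions such as COUNT can be applied to each group.

SELECT name, COUNT(*)

FROM driver

[WHERE condition]

GROUP BY name;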
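
A small runnable sketch of GROUP BY is given below. It counts drivers per address in a driver table with the columns id, name and address. The sample rows are assumptions for illustration, and SQLite (through Python's sqlite3 module) stands in for the real database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE driver (id INTEGER PRIMARY KEY, name TEXT, address TEXT)")
# Illustrative rows only.
conn.executemany(
    "INSERT INTO driver VALUES (?, ?, ?)",
    [(1, "Nimal", "Colombo"), (2, "Kamal", "Kandy"), (3, "Sunil", "Colombo")],
)

# One output row per distinct address, with the number of drivers in it.
drivers_per_address = conn.execute("""
    SELECT address, COUNT(*)
    FROM driver
    GROUP BY address
    ORDER BY address
""").fetchall()
print(drivers_per_address)
```

Every column in the SELECT list is either named in GROUP BY or wrapped in an aggregate function, which is what most database systems require.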

Order by

The SQL ORDER BY clause is used to sort the data in ascending or descending order,

based on one or more columns. Some databases sort query results in ascending order

by default.

Syntax:

The basic syntax of ORDER BY clause which would be used to sort result in ascending

or descending order is as follows:

SELECT *

FROM driver

[WHERE condition]

[ORDER BY id, name, address] [ASC | DESC];
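
The following sketch illustrates ORDER BY with one and with two sort keys on a driver table. The sample rows are invented, and SQLite (through Python's sqlite3 module) is used only so that the example can be run.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE driver (id INTEGER PRIMARY KEY, name TEXT, address TEXT)")
# Illustrative rows only.
conn.executemany(
    "INSERT INTO driver VALUES (?, ?, ?)",
    [(1, "Nimal", "Colombo"), (2, "Kamal", "Kandy"), (3, "Sunil", "Colombo")],
)

# Single sort key, ascending.
by_name = conn.execute("SELECT id, name FROM driver ORDER BY name ASC").fetchall()

# Two sort keys: address descending first, then name ascending within each address.
by_address_then_name = conn.execute(
    "SELECT name, address FROM driver ORDER BY address DESC, name ASC"
).fetchall()
print(by_name)
print(by_address_then_name)
```

ASC or DESC is written after each sort key separately, so different keys can be sorted in different directions.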

Having

HAVING filters the summarized results produced by GROUP BY.

HAVING applies to summarized group records, whereas WHERE applies to individual

records.

Only the groups that meet the HAVING criteria will be returned.

HAVING is normally used together with a GROUP BY clause.

WHERE and HAVING can be in the same query.

The general syntax is:

SELECT column_names
FROM driver
WHERE condition
GROUP BY column_names
HAVING condition

The general syntax with ORDER BY is:

SELECT column_names
FROM driver
WHERE condition
GROUP BY column_names
HAVING condition
ORDER BY column_names

List the number of customers in each country, except the USA, sorted high to low.

Only include countries with 9 or more customers.

SELECT COUNT(Id), Country
FROM Customer
WHERE Country <> 'USA'
GROUP BY Country
HAVING COUNT(Id) >= 9
ORDER BY COUNT(Id) DESC

Results: 3 records

Count    Country
11       France
11       Germany
9        Brazil
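
The same pattern can be tried on a small data set. The sketch below is an assumption-laden illustration: it builds a tiny Customer table with invented rows and lowers the threshold from 9 to 2 so that the effect of HAVING is visible with only a few rows. SQLite (through Python's sqlite3 module) stands in for the real database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Customer (Id INTEGER PRIMARY KEY, Country TEXT)")
# Invented sample: 3 in France, 2 in Germany, 1 in Brazil, 4 in the USA.
countries = ["France"] * 3 + ["Germany"] * 2 + ["Brazil"] + ["USA"] * 4
conn.executemany("INSERT INTO Customer (Country) VALUES (?)", [(c,) for c in countries])

# WHERE removes USA rows before grouping; HAVING removes small groups afterwards.
having_rows = conn.execute("""
    SELECT COUNT(Id), Country
    FROM Customer
    WHERE Country <> 'USA'
    GROUP BY Country
    HAVING COUNT(Id) >= 2
    ORDER BY COUNT(Id) DESC
""").fetchall()
print(having_rows)
```

Brazil is dropped by HAVING because its group has only one customer, while the USA rows never reach the grouping step because WHERE filters them first.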

Between

Problem: List all moves priced between $10 and $20

SELECT Id, Name, UnitPrice
FROM Movers
WHERE UnitPrice BETWEEN 10 AND 20
ORDER BY UnitPrice
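
A runnable sketch of the BETWEEN query is shown below. The Movers table with the columns Id, Name and UnitPrice follows the query above. The rows are invented for illustration, and SQLite (through Python's sqlite3 module) stands in for the real database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Movers (Id INTEGER PRIMARY KEY, Name TEXT, UnitPrice REAL)")
# Illustrative rows only.
conn.executemany(
    "INSERT INTO Movers VALUES (?, ?, ?)",
    [
        (1, "Small van", 8.0),
        (2, "Medium lorry", 10.0),
        (3, "Large lorry", 20.0),
        (4, "Container", 35.0),
    ],
)

# BETWEEN includes both end points, so prices of exactly 10 and 20 are returned.
between_rows = conn.execute("""
    SELECT Id, Name, UnitPrice
    FROM Movers
    WHERE UnitPrice BETWEEN 10 AND 20
    ORDER BY UnitPrice
""").fetchall()
print(between_rows)
```

BETWEEN 10 AND 20 is equivalent to UnitPrice >= 10 AND UnitPrice <= 20.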

Where

The SQL WHERE clause is used to specify a condition while fetching data from a

single table or from several joined tables. Only the rows that satisfy the condition are

returned. Use the WHERE clause to filter the records and fetch only the records that are

needed.

The WHERE clause is not only used in the SELECT statement; it is also used in

UPDATE and DELETE statements.

Syntax:

The basic syntax of the SELECT statement with a WHERE clause is as follows:

SELECT column1, column2, columnN
FROM table_name
WHERE [condition]

The SQL INSERT INTO statement is used to add new rows of data to a table in the

database.

Syntax:

There are two basic syntaxes of the INSERT INTO statement. The first names the columns:

INSERT INTO TABLE_NAME (column1, column2, column3,...columnN)
VALUES (value1, value2, value3,...valueN);

The second omits the column list and supplies a value for every column in table order:

INSERT INTO TABLE_NAME
VALUES (value1, value2, value3,...valueN);
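
The sketch below illustrates INSERT INTO with and without a column list, followed by a SELECT that uses WHERE. The driver table and its rows are assumptions for illustration, and SQLite (through Python's sqlite3 module) is used only to make the statements runnable.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE driver (id INTEGER PRIMARY KEY, name TEXT, address TEXT)")

# INSERT with an explicit column list.
conn.execute(
    "INSERT INTO driver (id, name, address) VALUES (?, ?, ?)",
    (1, "Nimal", "Colombo"),
)
# INSERT without a column list: values must follow the table's column order.
conn.execute("INSERT INTO driver VALUES (?, ?, ?)", (2, "Kamal", "Kandy"))
conn.commit()

# WHERE keeps only the rows whose address matches the parameter.
colombo_drivers = conn.execute(
    "SELECT id, name FROM driver WHERE address = ?", ("Colombo",)
).fetchall()
total_drivers = conn.execute("SELECT COUNT(*) FROM driver").fetchone()[0]
print(colombo_drivers, total_drivers)
```

Values are passed as parameters (the ? placeholders) rather than pasted into the SQL text, which avoids quoting mistakes and SQL injection.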

Task 3 –

Task 3.1

a.

Provide a suitable test plan to test the system against user and system requirements

Black box testing


b.

Provide relevant test cases for the database you have implemented

Test Case | Test Description                          | Expected Result | Actual Result       | Status
Test1     | Put the client id to the client Table     | Error message   | Get error message   | Put the VARCHAR value in the database
Test2     | Put the CID to the Customer Table         | Success message | Get success message | Put the INT value in the database
Test3     | Put the Pay Type to the Payment Table     | Error message   | Get error message   | Put the INT value in the database
Test4     | Put the Telephone No to the Direct table  | Success message | Get success message | Put the INT value in the database
Test5     | Put the Agent name to the Repeat Table    | Error message   | Get error message   | Put the Real value in the database
Test6     | Put the Agent Address to the Table        | Success message | Success message     | Put the VARCHAR value in database
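
Test cases of this kind can also be run automatically. The sketch below is an illustration under stated assumptions: the client table, its CHECK constraint and the three inputs are hypothetical and only mirror the idea of Test1 and Test2 (a wrong data type is rejected, a correct one is accepted). SQLite (through Python's sqlite3 module) is used, and because SQLite does not enforce column types by itself, the type rule is expressed as a CHECK constraint.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table: the CHECK constraint rejects non-integer client ids.
conn.execute("""
    CREATE TABLE client (
        client_id INTEGER NOT NULL CHECK (typeof(client_id) = 'integer'),
        address   TEXT NOT NULL
    )
""")

INSERT_SQL = "INSERT INTO client (client_id, address) VALUES (?, ?)"

def run_case(params):
    """Run one insert and report 'success' or 'error'."""
    try:
        conn.execute(INSERT_SQL, params)
        return "success"
    except sqlite3.IntegrityError:
        return "error"

# (test case, input values, expected result)
cases = [
    ("text in client_id", ("abc", "Kandy"), "error"),
    ("integer in client_id", (1, "Kandy"), "success"),
    ("missing client_id", (None, "Galle"), "error"),
]

results = [(name, expected, run_case(params)) for name, params, expected in cases]
for name, expected, actual in results:
    status = "PASS" if expected == actual else "FAIL"
    print(f"{name}: expected={expected} actual={actual} {status}")
```

Each row pairs an input with the expected result, so the comparison that the table above records by hand is made by the script.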

Task 3.2

a.

Explain how the selected test data in task 3.1 b) can be used to improve the

effectiveness of testing.

SQL Server 2012 provides an enhancement to FILESTREAM storage by allowing more than one
filegroup to be used to store FILESTREAM data. This can improve I/O performance and
scalability for FILESTREAM data by providing the ability to store the data on multiple drives.
FILESTREAM storage, which was introduced in SQL Server 2008, integrates the SQL Server
Database Engine with the NTFS file system, providing a way of storing unstructured data (such as
documents, images, and videos) with the database storing a pointer to the data. Although the
actual data resides outside the database in the NTFS file system, you can still use T-SQL
statements to insert, update, query, and back up FILESTREAM data, while maintaining
transactional consistency between the unstructured data and the corresponding structured data
with the same level of security.

This is a great feature for people who have to go through the pain of SQL Server database
migration again and again. One of the biggest pains in migrating databases is user accounts. A
SQL Server user resides either in Windows ADS or at the SQL Server level as a SQL Server user.
So when we migrate a SQL Server database from one server to another, these users have to be
recreated. If you have lots of users, you would need one dedicated person just to create them.

MS SQL Server provides results and messages after executing queries. More specifically, the
results part displays the output of the queries, while the messages part shows whether the
queries executed successfully. Messages also provide information about any errors found. This
is useful for the designer, who can see whether the query statements are correct or contain
errors.

Error

Validation

Task 3.3

a.

Get independent feedback on SmartMovers database solution from the non-

technical users and some developers (use surveys, questionnaires, interviews or any other

feedback collecting method) and make recommendations and suggestions for

improvements in a separate conclusion/recommendations section.

Database User Management

Database users are the access paths to the information in a SQL database. Therefore, tight
security should be maintained for the management of database users. Depending on the size of a
database system and the amount of work required to manage database users, the security
administrator may be the only user with the privileges required to create, alter, or drop
database users. On the other hand, there may be several administrators with privileges to manage
database users. Regardless, only trusted individuals should have the powerful privileges to
administer database users.

User Authentication

Database users can be authenticated (verified as the correct person) by SQL using the host
operating system, network services, or the database. Generally, user authentication via the host
operating system is preferred for the following reasons:

• Users can connect to SQL faster and more conveniently without specifying a username or
password.

• Control over user authorization is centralized in the operating system: SQL need not store or
manage user passwords and usernames if the operating system and database correspond.

• User entries in the database and operating system audit trails correspond.

User authentication by the database is normally used when the host operating system cannot
support user authentication.

Password Security

If user authentication is managed by the database, security administrators should develop a
password security policy to maintain database access security. For example, database users
should be required to change their passwords at regular intervals, and of course, when their
passwords are revealed to others. By forcing a user to change passwords in such situations,
unauthorized database access can be reduced.

Secure Connections with Encrypted Passwords

To better protect the confidentiality of your password, SQL can be configured to use encrypted
passwords for client/server and server/server connections.

By setting the following values, you can require that the password used to verify a connection
always be encrypted:

• Set the ORA_ENCRYPT_LOGIN environment variable to TRUE on the client machine.

• Set the DBLINK_ENCRYPT_LOGIN server initialization parameter to TRUE.

When enabled at both the client and the server, passwords will not be sent across the network
"in the clear", but will be encrypted using a modified DES (Data Encryption Standard) algorithm.

The DBLINK_ENCRYPT_LOGIN parameter is used for connections between two SQL servers (for
example, when performing distributed queries). If you are connecting from a client, SQL checks
the ORA_ENCRYPT_LOGIN environment variable.

Whenever you attempt to connect to a server using a password, SQL encrypts the password before
sending it to the server. If the connection fails and auditing is enabled, the failure is noted
in the audit log. SQL then checks the appropriate DBLINK_ENCRYPT_LOGIN or
ORA_ENCRYPT_LOGIN value. If it is set to FALSE, SQL attempts the connection again using an
unencrypted version of the password. If the connection is successful, the connection replaces
the previous failure in the audit log, and the connection proceeds. To prevent malicious users
from forcing SQL to re-attempt a connection with an unencrypted version of the password, you
should set the appropriate values to TRUE.

INFORMATION_SCHEMA

Task 4

Task 4.1

a.

Prepare a simple users’ guide and a technical documentation for the support and

maintenance of the software.

Benefit

Stored procedures are so popular and have become so widely used, and therefore expected of
Relational Database Management Systems (RDBMS), that even MySQL finally caved to
developer peer pressure and added the ability to use stored procedures to its very
popular open source database.

Task 4.2

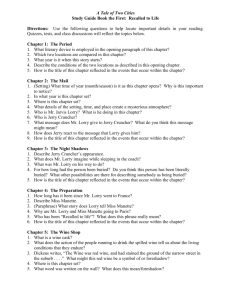

a.

SmartMovers technical documentation should include some of the UML diagrams

(Use case diagram Class diagram, etc.), flow charts for the important functionalities,

context level DFD and the Level 1 DFD

Use case diagram

[Use case diagram: admin user login creation. Actors: ADMIN, USER. Use cases: request to create
a user login, get the information, create user login, update the database, publish the user
login, send the user login information, log in to the system.]

Class diagram

Task 4.3

a.

Suggest the future improvements that may be required to ensure the continued

effectiveness of the database system.

Is your database server healthy?

First and foremost, make sure that the host that’s serving your database process has sufficient

resources available. This includes CPU, memory, and disk space.

CPU

CPU will most likely not be a bottleneck, but database servers induce continuous base load on

machines. To keep the host responsive, make sure that it has at the very least two CPU cores

available.

I will assume that at least some of your hosts are virtualized. As a general rule of thumb, when

monitoring virtual machines, also monitor the virtual host that the machines run on. CPU metrics

of individual virtual machines won’t show you the full picture. Numbers like CPU ready time

are of particular importance.

CPU ready time is a considerable factor when assigning CPU time to virtual machines

Memory

Keep in mind that memory usage is not the only metric to keep an eye on. Memory usage does
not tell you how much additional memory may be needed. The important number to look at is
page faults per second.

Page faults are the real indicator when it comes to your host's memory requirements

Having thousands of page faults per second indicates that your hosts are out of memory (this is

when you start to hear your server’s hard drive grinding away).

Disk space

Because of indices and other performance improvements, databases use up a LOT more disk
space than what the actual data itself requires. NoSQL databases in particular (Cassandra and
MongoDB for instance) eat up a lot more disk space than you would expect. Mongo takes up less
RAM than a common SQL database, but it's a real disk space hog.

I can't emphasize this too much: make sure you have lots of disk space available on your hard
drive. Also, make sure your database runs on a dedicated hard drive, as this should keep disk
fragmentation caused by other processes to a minimum.

Disk latency is an indicator for overloaded hard drives

One number to keep an eye on is disk latency. Depending on hard drive load, disk latency will

increase, leading to a decrease in database performance. What can you do about this? Firstly, try

to leverage your application’s and database’s caching mechanisms as much as possible. There is

no quicker and more cost-effective way of moving the needle.

If that still does not yield the expected performance, you can always add additional hard drives.

Read performance can be multiplied by simply mirroring your hard drives. Write performance

really benefits from using RAID 1 or RAID 10 instead of, let’s say, RAID 6. If you want to get

your hands dirty on this subject, read up on disk latency and I/O issues.

3. Understand the load and individual response time of each service

4. Do you have enough database connections?

Even if the way you query your database is perfectly fine, you may still experience inferior

database performance. If this is your situation, it’s time to check that your application’s database

connection is correctly sized.

Check to see if Connection Acquisition time comprises a large percentage of your database’s

response time.

When configuring a connection pool there are two things to consider:

1) What is the maximum number of connections the database can handle?

2) What is the correct size connection pool required for your application?

Why shouldn’t you just set the connection pool size to the maximum? Because your application

may not be the only client that’s connected to the database. If your application takes up all the

connections, the database server won’t be able to perform as expected. However, if your

application is the only client connected to the database, then go for it!

How to find out the maximum number of connections

You already confirmed in Step #1 that your database server is healthy. The maximum number of

connections to the database is a function of the resources on the database. So to find the

maximum number of connections, gradually increase load and the number of allowed

connections to your database. While doing this, keep an eye on your database server’s metrics.

Once they max out—either CPU, memory, or disk performance—you know you’ve reached the

limit. If the number of available connections you reach is not enough for your application, then

it’s time to consider upgrading your hardware.

Determine the correct size for your application’s connection pool

The number of allowed concurrent connections to your database is equivalent to the amount of

parallel load that your application applies to the database server. There are tools available to help

you in determining the correct number here. For Java, you might want to give a try.

Increasing load will lead to higher transaction response times, even if your database server is

healthy. Measure the transaction response time from end-to-end to see if Connection Acquisition

time takes up increasingly more time under heavy load. If it does, then you know that

your connection pool is exhausted. If it doesn’t, have another look at your database server’s

metrics to determine the maximum number of connections that your database can handle.

By the way, a good rule of thumb to keep in mind here is that a connection pool’s size should be

constant, not variable. So set the minimum and maximum pool sizes to the same value.
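
The fixed-size rule can be sketched as a small pool in which the minimum and maximum size are the same number. This is an illustration only, not a production pool: the class FixedSizePool and its attribute names are hypothetical, SQLite connections stand in for real database connections, and the timing only shows where a Connection Acquisition time measurement could be taken.

```python
import queue
import sqlite3
import time
from contextlib import contextmanager

class FixedSizePool:
    """Hypothetical pool: all connections are opened up front and never resized."""

    def __init__(self, database, size):
        self.size = size
        self.last_acquisition_seconds = 0.0
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(sqlite3.connect(database))

    @contextmanager
    def connection(self, timeout=1.0):
        started = time.perf_counter()
        # Blocks until a connection is free; raises queue.Empty on timeout.
        conn = self._idle.get(timeout=timeout)
        self.last_acquisition_seconds = time.perf_counter() - started
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always hand the connection back

pool = FixedSizePool(":memory:", size=2)

with pool.connection() as conn:
    probe = conn.execute("SELECT 1").fetchone()

# Hold both connections, then ask for a third: the pool is exhausted.
with pool.connection(), pool.connection():
    try:
        with pool.connection(timeout=0.05):
            pool_exhausted = False
    except queue.Empty:
        pool_exhausted = True

idle_after = pool._idle.qsize()
print(probe, pool_exhausted, idle_after)
```

If the measured acquisition time grows under load, or requests start to time out as in the last step, the pool is too small for the parallel load the application applies.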

5. Don’t forget about the network

We tend to forget about the physical constraints faced by our virtualized infrastructure.

Nonetheless, there are physical constraints: cables fail and routers break. Unfortunately, the gap

between works and doesn’t work usually varies. This is why you should keep an eye on your

network metrics. If problems suddenly appear after months or even years of operating flawlessly,

chances are that your infrastructure is suffering from a non-virtual, physical problem. Check

your routers, check your cables, and check your network interfaces. It’s best to do this as early as

possible following the first sign that there may be a problem because this may be the point in

time when you can fix a problem before it impacts your business.

Retransmissions seriously impact network performance

Quite often, over-stressed processes start to drop packets because of depleted resources. In
case your network issue is not a hardware problem, process-level visibility can come in handy
for identifying a failing component.

Database performance wrap up

Databases are sophisticated applications that are not built for bad performance or failure.
Make sure your databases are securely hosted and resourced so that they can perform at their
best. To do this, you need:

• Server data to check host health

• Hypervisor and virtual machine metrics to ensure that your virtualization is okay

• Application data to optimize database access

• Network data to analyze the network impact of database communication.

Conclusion

Data analysis is the process of evaluating data using analytical and logical reasoning to
examine each component of the data provided. This form of analysis is just one of the many
steps that must be completed when conducting a research experiment. Data from various sources
is gathered, reviewed, and then analyzed to form some sort of finding or conclusion. There are a
variety of specific data analysis methods, some of which include data mining, text analytics,
business intelligence, and data visualizations.

In general, scientific data analysis usually involves one or more of the following three tasks:

• Generating tables,

• Converting data into graphs or other visual displays, and/or

• Using statistical tests.

Tables are used to organize data in one place. Relevant column and row headings make it easier
to find information quickly. One of the greatest advantages of tables is that when data is
organized, it can be easier to spot trends and anomalies. Another advantage is their
versatility. Tables can be used to summarize either quantitative or qualitative data, or even a
combination of the two. Data can be shown in its raw form, or organized into data summaries
with corresponding statistics.

Graphs are a visual means of representing data. They allow complex data to be represented in a
way that makes trends easier to spot by eye. There are many different types of graphs, the most
common of which are reviewed in this basic guide to graphs: Data Analysis and Graphs.

You may think of graphs as the primary way to present the SmartMovers data to other people. Graphs are excellent for that purpose (see the Science Buddies guide Data Presentation Tips for Advanced Science Competitions for more details), but they are also a good analytical tool. The process of arranging the data into different visual forms often draws attention to different aspects of the data and broadens your thinking about the big picture. Along the way, you may stumble upon a pattern or trend that suggests something new about the SmartMovers project that you had not considered before. Seeing the SmartMovers data in different graphical formats may highlight new conclusions, new questions, or the need to go back and gather additional data. It can also help you identify outliers. These are data points that appear to be inconsistent with the other data points. Outliers can result from experimental error, such as a malfunctioning measuring instrument, from data-entry errors, or from rare events that actually happened but do not reflect what is normal. When analyzing the SmartMovers data statistically, identify outliers and deal with them (see the Bibliography, below, for articles discussing how to deal with outliers) so that they do not disproportionately affect the conclusions. Identifying outliers also allows you to go back and assess whether they reflect rare events and whether such events are informative for the overall conclusions.
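
One common convention for flagging candidate outliers is the 1.5 × IQR rule (Tukey fences). The sketch below is only an illustration of that convention, not a method prescribed by this report or by the articles in the Bibliography; the delivery times and the function name find_outliers are assumptions made for the example.

```python
from statistics import quantiles


def find_outliers(values, k=1.5):
    """Return the values lying outside the fences Q1 - k*IQR and Q3 + k*IQR."""
    if len(values) < 4:
        raise ValueError("need at least 4 values to estimate quartiles sensibly")
    q1, _median, q3 = quantiles(values, n=4)  # the three quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical delivery times in minutes (assumed data); the value 120 could be
# a breakdown, a data-entry error, or a genuine but rare event.
delivery_minutes = [42, 45, 44, 47, 43, 46, 120, 44]
outliers = find_outliers(delivery_minutes)
print("Flagged for review:", outliers)
```

A flagged value is a prompt to go back to the source record, not an instruction to delete it: whether it is an error or a real but rare event still has to be judged case by case.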

If you are unsure which types of graphs would best exemplify the SmartMovers data, go back to published articles with similar types of data. Observe how the authors graph and present their data. Try analyzing the SmartMovers data using the same methods.

Statistics are the third general way of examining data. Often, statistical tests are used in combination with tables and/or graphs. There are two broad categories of statistics: descriptive statistics and inferential statistics. Descriptive statistics are used to summarize the data and include measures such as the average, range, standard deviation, and frequency. For a review of some basic descriptive statistical calculations, consult the general guides to Summarizing Your Data and to Variance and Standard Deviation. Inferential statistics rely on samples (the data you collect) to make inferences about a population. They are used to determine whether it is possible to draw general conclusions about a population, or to make predictions about the future, based on the SmartMovers sample data. Inferential statistics cover a wide variety of statistical concepts, such as hypothesis testing, correlation, estimation, and modeling.
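
The descriptive measures named above (average, range, standard deviation, frequency) and one simple measure of relationship (the Pearson correlation coefficient) can be sketched in a few lines of Python. All data values and names below (distance_km, fuel_litres, job_types, describe, pearson_r) are hypothetical and chosen only for illustration.

```python
from collections import Counter
from math import sqrt
from statistics import mean, stdev


def describe(values):
    """Return common descriptive statistics for a list of numbers."""
    return {
        "mean": mean(values),
        "range": max(values) - min(values),
        "stdev": stdev(values),  # sample standard deviation (n - 1 denominator)
    }


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples.

    Raises ZeroDivisionError if either sample has no variation.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with at least 2 points")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical sample data (assumed for illustration only).
distance_km = [12.0, 18.0, 8.0, 10.0, 12.0]
fuel_litres = [4.1, 6.0, 2.9, 3.4, 4.0]
job_types = ["local", "local", "long-haul", "local"]

stats = describe(distance_km)
job_frequency = Counter(job_types)  # frequency of each category
r = pearson_r(distance_km, fuel_litres)

print(stats)
print(dict(job_frequency))
print(f"correlation between distance and fuel: {r:.3f}")
```

The first two results only describe the sample itself. The correlation coefficient is a first step toward inference, but deciding whether a relationship holds for the wider population still requires an appropriate statistical test.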

Beyond basic descriptive statistics such as the mean (average) and mode, you may not have had much exposure to statistics. So how do you know which statistical tests to apply to the SmartMovers data? A good starting point is to refer back to published scientific articles in the same field. The "Methods" sections of papers with similar kinds of data sets will discuss the statistical tests the authors used. Other tests may be referred to within data tables or figures. Try evaluating the SmartMovers data using similar tests. You may also find it useful to consult statistics textbooks, math teachers, your project tutor, and other science or engineering professionals. The Bibliography, below, also contains a list of resources for learning about statistics and their applications.

5. Reference

1. "OGC – Annex 1". Office of Government Commerce (OGC). Retrieved 05, 03, 2024.

2. Mike Goodland; Karel Riha (20 January 1999). "History of SSADM". SSADM – an Introduction. Retrieved 05, 03, 2024.

3. "Model Systems and SSADM". Model Systems Ltd. 2002. Retrieved 05, 03, 2024.

4. SSADM foundation. Business Systems Development with SSADM. The Stationery Office. 2000. p. v. ISBN 0-11-330870-1. Retrieved 05, 03, 2024.

Bibliography

This paper provides a discussion of how to choose the correct statistical test:

Windish, D.M. and Diener-West, M. (2006). A Clinician-Educator's Roadmap to Choosing and Interpreting Statistical Tests. Journal of General Internal Medicine 21 (6): 656-660. Retrieved 05, 03, 2024, from http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pubmed&pubmedid=16808753

These resources provide additional information on what to do with outliers:

Fallon, A. and Spada, C. (1997). Detection and Accommodation of Outliers in Normally Distributed Data Sets. Retrieved 05, 02, 2024, from http://www.cee.vt.edu/ewr/environmental/teach/smprimer/outlier/outlier.html

High, R. (2000). Dealing with 'Outliers': How to Maintain Your Data's Integrity. Retrieved 05, 03, 2024, from http://rfd.uoregon.edu/files/rfd/StatisticalResources/outl.txt