CT Brain Image Synthesis via CycleGAN: MRI to CT Conversion

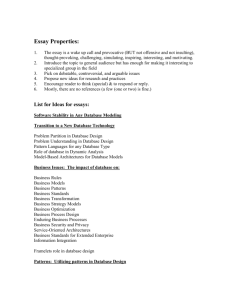

2023 IEEE International Conference on Consumer Electronics (ICCE) | 978-1-6654-9130-3/23/$31.00 ©2023 IEEE | DOI: 10.1109/ICCE56470.2023.10043572

CT Brain Image Synthesization from MRI Brain Images Using CycleGAN

Kazuki Takamiya, Graduate School of Information Science and Engineering, Ritsumeikan University, Shiga, Japan, is0416ir@ed.ritsumei.ac.jp
Yutaro Iwamoto, Faculty of Information and Communication Engineering, Osaka Electro-Communication University, Osaka, Japan, yiwamoto@osakac.ac.jp
Masahiro Nonaka, Kansai Medical University, Osaka, Japan, nonakamasa65@gmail.com
Yen-Wei Chen, Graduate School of Information Science and Engineering, Ritsumeikan University, Shiga, Japan, chen@is.ritsumei.ac.jp

Abstract: Medical images from multiple modalities are essential for the diagnosis and effective treatment of various diseases. However, acquiring these images separately is time-consuming and costly for both patients and physicians, and CT scanning additionally exposes patients to radiation. Generating CT scans from radiation-free MR images is therefore highly desirable. Synthesized brain CT scans are useful for obtaining cranial information that is difficult to obtain from MR imaging but is crucial for brain surgery and treatment. In this study, we present a cycle GAN-based deep learning method to synthesize CT brain images from MR brain images. We also propose a novel normalization method, called range-of-interest (ROI) normalization, that emphasizes the tissue and bone regions. With this method, the synthesized CT image can be treated in the same way as an actual CT image. Additionally, we evaluate the impact of intensity normalization, an important image preprocessing step, by comparing three different intensity normalizations for MR-CT image synthesis. Our proposed ROI normalization for CT images combined with max-min normalization for MR images generates the highest-quality synthetic images, with a Mean Absolute Error (MAE) of approximately 94.60 HU over the synthesized 3D CT volumes.

Index Terms: MR image, CT image, image synthesization, cycle GAN, max-min normalization, z-score normalization, ROI normalization, brain image, cranium

I. INTRODUCTION

Medical images are essential for the diagnosis and treatment of various diseases. For example, computed tomography (CT) provides detailed anatomical information about bones and other structures but offers insufficient soft-tissue contrast. Magnetic resonance imaging (MRI), on the other hand, provides high soft-tissue contrast but less anatomical information. Because CT and MR images carry complementary information, combining them supports better diagnosis and treatment. MR images are usually the primary modality in the diagnosis of brain diseases, but identifying anatomical information also requires CT images. Acquiring multi-modal medical images separately is time-consuming and costly, and CT scanning exposes the human body to radiation. In medical applications, it is therefore desirable to generate CT images from MR images.

In recent years, deep learning has been widely applied to a range of medical imaging tasks. In particular, methods based on Generative Adversarial Networks (GANs) have achieved great success and attracted considerable attention in cross-modality medical image synthesization [1]. Emami et al. [2] generated CT images from T1-weighted MR images using a GAN learning scheme with a ResNet network as the generator. Their architecture achieved better results than conventional CNN models. Wolterink et al.
[3] also showed that CT brain images can be synthesized from unaligned and unpaired MR brain images using Cycle GAN [4]. Inspired by these works [2], [3], we introduce a cycle GAN-based method to synthesize CT brain images from MR brain images. We train the cycle GAN model on paired MR and CT images. The trained model generates the corresponding CT image from an input MR image, and the cranium information can be obtained from the generated CT image.

Further, because MR image intensities vary substantially between acquisition devices, intensity normalization is an important preprocessing step in MR-CT image synthesization. Although several intensity normalization methods (e.g., z-score normalization and max-min normalization) have been used in MR-CT synthesization [2], [3], selecting the optimal intensity normalization method remains a challenging task. In this study, in addition to z-score normalization and max-min normalization, we propose a new normalization method, called range-of-interest (ROI) normalization, that emphasizes the tissue and bone regions of CT images. We analyze and assess the effects of these three intensity normalizations on MR-CT synthesis accuracy. According to both objective and subjective evaluation, our proposed ROI normalization for CT images combined with max-min normalization for MR images yields better synthesis results than the other combinations. The Mean Absolute Error of the estimated 3D CT volumes is about 94.60 HU.

Authorized licensed use limited to: Indira Gandhi Delhi Technical University for Women. Downloaded on October 27, 2023 at 05:34:11 UTC from IEEE Xplore. Restrictions apply.

II. METHOD

A. Cycle GAN

Cycle GAN is an image generation method based on domain translation. It does not require paired datasets for network training. Training follows a cycle: an image from a domain "A" (here, MR) is translated into a domain "B" (here, CT), and the generated image is then translated from domain "B" back to the original domain "A". Although we trained the model using paired CT and MR images, rigid registration cannot align the images perfectly. Therefore, we employ cycle GAN to generate CT images from MR images. Fig. 1 depicts the cycle GAN used in our CT image synthesization process, with the forward and backward cycles shown in Fig. 1(a) and 1(b), respectively. The cycle GAN framework includes two generator-discriminator pairs: MR to CT ($G_{MRtoCT}$, $D_{CT}$) and CT to MR ($G_{CTtoMR}$, $D_{MR}$), written as ($G_{XY}$, $D_Y$) and ($G_{YX}$, $D_X$) in the loss functions. The generators are encoder-decoder style feature extraction networks with residual blocks, whose skip connections pass the input data on to subsequent layers [4].

[Figure 1. Panel (a): Real MR, Generated CT, Reconstructed MR, Real CT, Real/Fake, cycle consistency loss. Panel (b): Real CT, Generated MR, Reconstructed CT, Real MR, Real/Fake, cycle consistency loss.]

Fig. 1. CycleGAN for synthesis of CT images from MR images. (a) Forward cycle; (b) backward cycle.

The loss functions of cycle GAN are shown in (1)–(4):

$$\mathcal{L}(G_{XY}, G_{YX}, D_X, D_Y) = \mathcal{L}_{XY}(G_{XY}, D_Y, X, Y) + \mathcal{L}_{YX}(G_{YX}, D_X, Y, X) + \lambda \mathcal{L}_{cyc}(G_{XY}, G_{YX}), \tag{1}$$

$$\mathcal{L}_{XY}(G_{XY}, D_Y, X, Y) = \mathbb{E}_{x \sim p_{data}(x)}\left[\log\left(1 - D_Y(G_{XY}(x))\right)\right] + \mathbb{E}_{y \sim p_{data}(y)}\left[\log D_Y(y)\right], \tag{2}$$

$$\mathcal{L}_{YX}(G_{YX}, D_X, Y, X) = \mathbb{E}_{y \sim p_{data}(y)}\left[\log\left(1 - D_X(G_{YX}(y))\right)\right] + \mathbb{E}_{x \sim p_{data}(x)}\left[\log D_X(x)\right], \tag{3}$$

$$\mathcal{L}_{cyc}(G_{XY}, G_{YX}) = \mathbb{E}_{x \sim p_{data}(x)}\left[\lVert G_{YX}(G_{XY}(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim p_{data}(y)}\left[\lVert G_{XY}(G_{YX}(y)) - y \rVert_1\right]. \tag{4}$$

In (1)–(4), $X$ and $Y$ represent MR and CT images, respectively. $G_{XY}$ and $G_{YX}$ represent the generators from MR to CT and from CT to MR. $D_X$ and $D_Y$ indicate the discriminators for MR and CT images, respectively. $\lambda$ is a weight hyperparameter.

B. Intensity Normalization

Intensity normalization of medical images is an important preprocessing step in medical image synthesization. Several intensity normalization methods have recently been used in MR-CT synthesization [2], [3], but it is not easy to determine which type of intensity normalization is optimal. In this study, we investigate and evaluate synthesis accuracy using three different intensity normalizations: z-score normalization, max-min normalization, and a novel range-of-interest (ROI) normalization that we formulate.

1) Z-score normalization: The first intensity normalization method we investigate is z-score normalization, which has been widely used for MR image normalization. The normalization scheme is given in (5):

$$x' = \frac{x - mean}{std}, \tag{5}$$

where $x$ and $x'$ are the image intensities before and after normalization, respectively, and $mean$ and $std$ are the mean and standard deviation of the raw image (before normalization).

2) Max-min normalization: The second method is max-min normalization, in which the intensity is normalized to a range $[a, b]$ as shown in (6):

$$x' = (b - a)\,\frac{x - min}{max - min} + a, \tag{6}$$

where $min$ and $max$ are the minimum and maximum intensity values of the raw image (before normalization), and $a$ and $b$ are the lower and upper limits of the normalized image (after normalization). We set $[a, b]$ to $[-1, 1]$ in this research.

3) Range-of-interest (ROI) normalization: The third method is our newly proposed range-of-interest normalization, which can be considered an improved version of max-min normalization. We focus only on a specific range $[LL, UL]$, where $LL$ and $UL$ are the lower and upper limits of the range of interest (ROI). The intensity within the ROI is normalized to a range $[a, b]$ using (7):

$$x' = (b - a)\,\frac{x - LL}{UL - LL} + a. \tag{7}$$
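As an illustration, the three normalizations of this section can be sketched in NumPy. This is a minimal sketch and not the authors' implementation: the function names, the use of the population standard deviation, the reuse of $[a, b] = [-1, 1]$ for ROI normalization, and the `roi_denormalize` helper are assumptions made for the example. The default ROI bounds of [−600, 1800] HU and the clipping of out-of-range intensities follow the description of ROI normalization in this section.

```python
import numpy as np

def zscore_normalize(x):
    """Z-score normalization, Eq. (5): (x - mean) / std of the raw image.
    Assumption: population standard deviation (NumPy default, ddof=0)."""
    x = np.asarray(x, dtype=np.float64)
    return (x - x.mean()) / x.std()

def maxmin_normalize(x, a=-1.0, b=1.0):
    """Max-min normalization, Eq. (6): map [min, max] of the raw image onto [a, b]."""
    x = np.asarray(x, dtype=np.float64)
    lo, hi = x.min(), x.max()
    return (b - a) * (x - lo) / (hi - lo) + a

def roi_normalize(x, ll=-600.0, ul=1800.0, a=-1.0, b=1.0):
    """ROI normalization, Eq. (7): clip intensities to [LL, UL], then map onto [a, b].
    Assumption: the same [a, b] = [-1, 1] as for max-min normalization."""
    x = np.clip(np.asarray(x, dtype=np.float64), ll, ul)
    return (b - a) * (x - ll) / (ul - ll) + a

def roi_denormalize(x_norm, ll=-600.0, ul=1800.0, a=-1.0, b=1.0):
    """Hypothetical helper: invert Eq. (7) to map a normalized CT image back to HU."""
    x_norm = np.asarray(x_norm, dtype=np.float64)
    return (x_norm - a) / (b - a) * (ul - ll) + ll

# Usage: CT intensities in HU; values outside [LL, UL] saturate at a or b.
ct_hu = np.array([-1000.0, -600.0, 600.0, 1800.0, 3000.0])
ct_norm = roi_normalize(ct_hu)
```

Unlike max-min normalization, the ROI variant uses fixed bounds, so the mapping between HU and normalized values is the same for every volume and can be inverted for values inside the ROI.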
In this study, ROI normalization is used only for CT images. The range of interest $[LL, UL]$ is set to $[-600, 1800]$ HU. Note that if the intensity of the raw image is lower than $LL = -600$ or higher than $UL = 1800$, it is set to $LL$ or $UL$, respectively.

III. EXPERIMENTS AND RESULTS

We conduct experiments to demonstrate the effectiveness of our proposed CycleGAN framework for 3D CT brain image synthesis from MR images. We also evaluate the impact of intensity normalization, an important image preprocessing step, on MR-CT synthesization. Three intensity normalization methods (z-score normalization, max-min normalization, and ROI normalization) are used for evaluation, and only CT images are normalized by the ROI normalization method. For MR and CT images, we conducted four tests using different combinations of the normalization techniques (5)–(7), which are detailed in Section III-D.

[Figure 2. Panels (a) Sample 1 and (b) Sample 2, each showing a CT image and an MR image with axial, sagittal, and coronal views.]

Fig. 2. Two typical sample pairs of CT and MR images from the private datasets used for the experiments. The left figure shows the CT image, and the right figure shows the MR image.

[Figure 3. Rows of MR images and CT images. Panels: (a) Original; (b) Z-score; (c) Max-min; (d) ROI (−600 to 1800 HU).]

Fig. 3. Examples of normalized MR and CT images. (a) Original MR and CT images; MR and CT images normalized by (b) z-score normalization, (c) max-min normalization, and (d) ROI normalization.

A. Data

We used a private dataset for the experiments, collected at Kansai Medical University, Japan.
The dataset contains 19 paired CT and MR brain images collected from 18 subjects (patients). We use 15 pairs for training, 1 pair for validation, and 3 pairs for testing. Only MR images are used as input during testing, and the corresponding CT images are used as ground truth to assess the accuracy of the proposed framework. Examples of the paired MR and CT images are shown in Fig. 2.

B. Preprocessing

First, rigid transformation is used to register the paired CT and MR images. Each slice image is resized to 256 × 256 pixels. Since the CycleGAN model we used is a 2D model, axial slices are used in the experiments. The 3D volume of the CT image is reconstructed from the synthetic axial CT slice images. All images are normalized. Examples of MR and CT images normalized by z-score normalization (5), max-min normalization (6), and ROI normalization (7) are shown in Fig. 3(b), 3(c), and 3(d), respectively.

C. Evaluation Measures

The reconstructed 3D CT volume and the actual CT volume (ground truth) are compared using the Mean Absolute Error (MAE) and the Peak Signal-to-Noise Ratio (PSNR) as metrics for quantitative evaluation. The MAE is given in (8) and the PSNR in (9) and (10):

$$MAE = \frac{1}{N} \sum_{i=1}^{N} \left| y(i) - y'(i) \right|, \tag{8}$$

$$PSNR = 10 \log_{10} \frac{MAX_{image}^2}{MSE}, \tag{9}$$

$$MSE = \frac{1}{N} \sum_{i=1}^{N} \left( y(i) - y'(i) \right)^2, \tag{10}$$

where $y$ and $y'$ are the original CT image (ground truth) and the synthesized CT image, respectively, and $N$ is the number of voxels.

D. Results

We performed four experiments with different combinations of the normalization methods (5)–(7) for MR and CT images. The experimental results are given in Table I.

TABLE I. COMPARISON OF ACCURACY OF SYNTHETIC CT IMAGES WITH DIFFERENT INTENSITY NORMALIZATION (Z-SCORE: (5); MAX-MIN: (6); ROI: (7)).

| Experiment | MR normalization | CT normalization | MAE (HU) | PSNR (dB) |
|---|---|---|---|---|
| 1 | Z-score | Z-score | 296.95 ± 34.73 | 9.18 ± 6.84 |
| 2 | Z-score | Max-min | 122.28 ± 7.24 | 21.38 ± 3.51 |
| 3 | Max-min | Max-min | 148.12 ± 10.54 | 23.20 ± 3.25 |
| 4 | Max-min | ROI | 94.60 ± 1.47 | 20.69 ± 7.88 |

As shown in Table I, the synthesis results with z-score normalization are poor, especially in experiment 1, where both CT and MR images are normalized by z-score normalization. Max-min normalization for both MR and CT images (experiment 3) achieves the best PSNR, while the proposed ROI normalization for CT images combined with max-min normalization for MR images (experiment 4) achieves the best MAE.

In addition to the objective evaluation above, we also perform a subjective evaluation. Fig. 4 shows the craniums of the three synthetic CT images visualized by volume rendering. Fig. 4(a) shows the original CT images, and Fig. 4(b)–4(d) show the synthesized CT images of experiments 2–4, respectively. In experiment 1, a CT image could not be generated, so Fig. 4 does not show visualization results for experiment 1. The best synthesis result is obtained by the proposed ROI normalization for CT images and max-min normalization for MR images (experiment 4), as shown in Fig. 4. The synthetic CT images obtained with the proposed ROI normalization (Fig. 4(d)) have a smooth cranium and some information about a surgical wound. Synthesis of a clear and precise surgical wound will be our future work.

[Figure 4. Columns: Example 1, Example 2, Example 3. Rows: (a) Original CT images (200 to 1000 HU); (b) MR: z-score, CT: max-min (200 to 800 HU); (c) MR: max-min, CT: max-min (−100 to 400 HU); (d) MR: max-min, CT: ROI (200 to 1000 HU).]

Fig. 4. The craniums of the three synthetic CT images visualized by volume rendering over the specified range of HU values. Results of experiments 2–4 are shown in (b)–(d). The result in (d) has a smooth cranium reconstruction, but the surgical wound contained in (a) is not reconstructed.

IV. CONCLUSION

We proposed a cycle GAN-based method to synthesize CT brain images from MR brain images. Three types of intensity normalization (z-score normalization, max-min normalization, and our proposed range-of-interest (ROI) normalization) were compared in terms of the accuracy of the synthesized images. According to both objective and subjective evaluation, our proposed ROI normalization for CT images combined with max-min normalization for MR images gives the best synthesis result. We also observed that the proposed method performs effectively on the public dataset CERMEP-IDB-MRXFDG [5].

ACKNOWLEDGMENT

The authors would like to thank Dr. Rahul Jain for his kind English proofreading. This work was supported in part by the Grant-in-Aid for Scientific Research from the Japanese Ministry for Education, Science, Culture and Sports (MEXT) under Grants No. 20KK0234, No. 21H03470, and No. 20K21821.

© Copyright CERMEP – Imagerie du vivant, www.cermep.fr and Hospices Civils de Lyon. All rights reserved.

REFERENCES

[1] A. Shokraei Fard, D. C. Reutens, and V. Vegh, “From CNNs to GANs for cross-modality medical image estimation,” Computers in Biology and Medicine, vol. 146, p. 105556, 2022. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0010482522003481

[2] H. Emami, M. Dong, S. P. Nejad-Davarani, and C. K. Glide-Hurst, “Generating synthetic CTs from magnetic resonance images using generative adversarial networks,” Medical Physics, vol. 45, no. 8, pp. 3627–3636, 2018. [Online]. Available: https://aapm.onlinelibrary.wiley.com/doi/abs/10.1002/mp.13047

[3] J. M. Wolterink, A. M. Dinkla, M. H. F. Savenije, P. R. Seevinck, C. A. T. van den Berg, and I. Išgum, “Deep MR to CT Synthesis Using Unpaired Data,” in Simulation and Synthesis in Medical Imaging, S. A. Tsaftaris, A. Gooya, A. F. Frangi, and J. L. Prince, Eds. Cham: Springer International Publishing, 2017, pp. 14–23.

[4] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks,” in 2017 IEEE International Conference on Computer Vision (ICCV), 2017, pp. 2242–2251.

[5] I. Mérida, J. Jung, S. Bouvard, D. Le Bars, S. Lancelot, F. Lavenne, C. Bouillot, J. Redouté, A. Hammers, and N. Costes, “CERMEP-IDB-MRXFDG: a database of 37 normal adult human brain [18F]FDG PET, T1 and FLAIR MRI, and CT images available for research,” EJNMMI Research, vol. 11, no. 1, p. 91, Sep 2021. [Online]. Available: https://doi.org/10.1186/s13550-021-00830-6
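As a supplementary illustration of the evaluation measures in Section III-C, the MAE (8), PSNR (9), and MSE (10) can be sketched in NumPy as follows. This is a minimal sketch, not the authors' evaluation code: the function names are hypothetical, and because the paper does not state how $MAX_{image}$ is chosen, the sketch takes it as an explicit `max_value` argument supplied by the caller.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean Absolute Error over all voxels, Eq. (8)."""
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    return float(np.mean(np.abs(y_true - y_pred)))

def mse(y_true, y_pred):
    """Mean Squared Error over all voxels, Eq. (10)."""
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    return float(np.mean((y_true - y_pred) ** 2))

def psnr(y_true, y_pred, max_value):
    """Peak Signal-to-Noise Ratio in dB, Eq. (9).
    Assumption: max_value (MAX_image) is chosen by the caller."""
    err = mse(y_true, y_pred)
    if err == 0.0:
        return float("inf")  # identical volumes
    return float(10.0 * np.log10(max_value ** 2 / err))

# Usage on a toy "volume" in HU: ground truth vs. synthesized CT.
ground_truth = np.array([0.0, 100.0, 200.0, 300.0])
synthesized = np.array([10.0, 90.0, 210.0, 290.0])
toy_mae = mae(ground_truth, synthesized)
toy_psnr = psnr(ground_truth, synthesized, max_value=1000.0)
```

In practice the same functions would be applied to the reconstructed 3D CT volume and the ground-truth CT volume of each test subject, after mapping normalized intensities back to HU.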