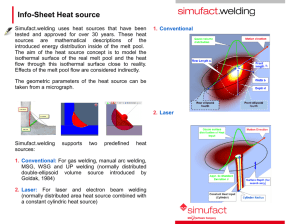

Optics & Laser Technology 174 (2024) 110707

Journal homepage: www.elsevier.com/locate/optlastec

Full length article

Real-time monitoring of weld surface morphology with lightweight semantic segmentation model improved by attention mechanism during laser keyhole welding

Wang Cai a, LeShi Shu b, ShaoNing Geng b, Qi Zhou c, LongChao Cao a,*

a Hubei Key Laboratory of Digital Textile Equipment, School of Mechanical Engineering and Automation, Wuhan Textile University, 430200 Wuhan, PR China
b State Key Laboratory of Intelligent Manufacturing Equipment and Technology, School of Mechanical Science and Engineering, Huazhong University of Science and Technology, 430074 Wuhan, PR China
c School of Aerospace Engineering, Huazhong University of Science and Technology, 430074 Wuhan, PR China

* Corresponding author.
E-mail addresses: wcai@wtu.edu.cn (W. Cai), shuleshi@hust.edu.cn (L. Shu), sngeng@hust.edu.cn (S. Geng), qizhou@hust.edu.cn (Q. Zhou), clc@wtu.edu.cn (L. Cao).

https://doi.org/10.1016/j.optlastec.2024.110707
Received 1 December 2023; Received in revised form 31 January 2024; Accepted 5 February 2024; Available online 13 February 2024
0030-3992/© 2024 Elsevier Ltd. All rights reserved.

Keywords: Weld surface morphology; Molten pool contour; Semantic segmentation; Model lightweight; Attention mechanism

Abstract

During the welding process, the molten metal continuously solidifies along the trailing edge of the molten pool to form the weld seam, so changes in the weld surface morphology can be monitored through the molten pool profile characteristics. In this study, an innovative weld surface morphology diagnosis strategy based on a lightweight semantic segmentation model improved by an attention mechanism is proposed. Considering the characteristics of molten pool morphology change, an automatic generation method for semantic segmentation labels is proposed, and a large number of high-precision training labels are quickly obtained. The constructed lightweight semantic segmentation model runs more than four times faster than the classical Unet, PSPnet, and Deeplabv3+. The molten pool segmentation accuracy of the constructed model reaches 94.95 % on the new dataset obtained from the test weld. A weld surface morphology reconstruction method is proposed, and monitoring of weld morphology size failure defects is realized based on the molten pool contour features. The validation results show that the constructed model has strong resistance to optical noise interference and good generalization ability, and that the reconstructed weld surface morphology is consistent with the actual morphological changes.

Nomenclature

CNN: Convolutional Neural Networks
FPS: Frames Per Second
PS: Penetration Status
TR: Transition Region
FCN: Fully Convolutional Network
CRF: Conditional Random Field
BFEN: Backbone Feature Extraction Network
ASPP: Atrous Spatial Pyramid Pooling
MPA: Mean Pixel Accuracy
ROI: Region of Interest
CBAM: Convolutional Block Attention Mechanism
MIoU: Mean Intersection over Union

1. Introduction

Laser welding has the advantages of a large weld depth-to-width ratio, high energy density, fast welding speed, and easy automation [1–3], and is an important means of high-quality manufacturing of stainless steel components in rail transportation, offshore equipment, aerospace, and other fields [4–6]. The stability of the laser welding process is affected by the processing environment, workpiece deformation, assembly state, and other factors [7], which makes it prone to weld surface size (width and height) failure defects [8]. The height and width of the weld seam surface are closely related to the weld formation quality [9]. Dramatic fluctuations in the weld surface size can easily lead to stress concentrations at the location of the unqualified size, which affects the service performance of the weld seam and causes safety hazards [10]. In recent years, real-time monitoring of the welding process has become a research frontier and hotspot with broad application prospects [11,12]; it can accurately sense the state of the welding process and control the process parameters to suppress defect generation based on real-time feedback from the sensed information. The monitoring method is the key to guaranteeing welding process stability and improving welding quality [13,14]. Accurate and rapid monitoring of weld surface morphology is the basis for solving size failure defects.

A skilled human welder can determine the welding status from the surface characteristics of the molten pool during the welding process [15,16]. The molten metal continuously solidifies along the trailing edge of the molten pool to form the weld seam [17]. The molten pool profile is directly related to the shape of the weld seam, so changes in the weld seam shape are reflected in the characteristics of the molten pool profile [18]. However, during the laser welding process, metal vapors are emitted strongly and may partially obscure the molten pool [19]. In addition, the visual signal has uneven brightness distribution at the front and rear of the molten pool, serious reflective interference in the molten pool, and low differentiation of the boundary between the molten pool and the base material, which makes it difficult to accurately and quickly extract the molten pool contour by traditional image processing methods [20]. To accurately observe the molten pool, Luo et al. [21] built a coaxial image acquisition system based on a green auxiliary light source. The molten pool front end is bright, while the tail end is dark. Therefore, the monitoring images were divided into two regions to detect the edge. Meng et al. [22] paraxially acquired molten pool images with strong optical noise interference and metal vapor plume. A threshold segmentation method was used to process the images, but the segmentation accuracy was susceptible to interference. Chen et al. [23] applied a Fourier transform-based homomorphic filtering algorithm to obtain molten pool features. Results showed that the obtained features can reflect the process stability of welding. Zhang et al. [24] proposed a molten pool surface 3D reconstruction method based on laser dot matrix data. Results showed that the 3D molten pool can provide richer information.

Deep learning has extremely strong feature learning, generalization, and anti-interference abilities, and its application in welding visual signal processing is gradually increasing [25,26]. In 2014, Long et al. [27] proposed Fully Convolutional Networks (FCN), which enable semantic segmentation at the pixel level. Nguyen et al. [28] investigated a semantic segmentation method for interaction region images to obtain typical regions, such as the molten pool and weld seam geometry. The results showed good agreement between the predicted image and the ground truth image. Wang et al. [29] devised a molten pool image segmentation network, EPNet, to extract the width feature. The molten pool width is controlled by an active disturbance rejection control algorithm to ensure that the weld width is within the specified range. Knaak et al. [30] proposed a Convolutional Neural Network (CNN) based semantic segmentation method to accurately distinguish the keyhole, molten pool, weld plate, and weld seam. Cai et al. [31] established a segmentation model based on the U-shaped architecture (U-net), and the keyhole and molten pool contours were accurately extracted by semantically segmenting the monitoring images. Baek et al. [32] applied a residual neural network for semantic segmentation of the acquired molten pool image to accurately extract the molten pool shape, which was fed into a back-propagation neural network to predict the penetration depth. Yu et al. [33] investigated a deep learning-based image processing method to obtain molten pool features. The proposed method can achieve end-to-end visual signal processing and accurate detection of molten pool boundaries under various disturbance conditions. Wang et al. [34] designed a multi-scale feature fusion network for semantic segmentation of molten pool contours. The results demonstrate that the constructed network has high accuracy compared with traditional edge detection algorithms and other semantic segmentation networks. The above research indicates that image processing methods based on deep learning have the potential to increase the monitoring accuracy and model robustness by extracting comprehensive, complex, and deep features from the welding process monitoring image.

The most important physical characteristic of the weldment is the weld geometry [35]. A weld seam with qualified dimensions is uniformly formed, and each dimension varies within a preset range. When the weld surface width is not qualified, a nail head weld forms easily [36]. When the weld residual height is abnormal, defects such as hump and collapse are produced [37]. Li et al. [38] studied the correspondence between welding parameters and the molten pool flow state. The results showed that molten metal gathering at the surface can form a nail head weld, and that the amount of defocusing can change the flow state and thereby affect the weld formation. Ai et al. [39] proposed a new three-dimensional model for simulating the weld formation process to predict the weld width, height, and depth. Results showed that the simulation model can accurately predict the weld shape and the corresponding weld size data. Artificial intelligence techniques are widely used to predict weld geometry due to their high accuracy and short delay [40]. Chandrasekhar et al. [41] predicted the weld width and depth by using a meta cellular automata image processing algorithm to segment the image hot spot region. The results showed that the predicted results were in high agreement with the actual measurements. Lei et al. [42] proposed a multi-information fusion neural network combining weld parameters and molten pool features to predict the weld geometric features. The model's average absolute percentage error was less than 1 %. Oh et al. [43] investigated a deep learning-based method for optical microscopic image prediction of weld cross-sections. Accurate high-resolution optical microscopy images were successfully generated for all 39 sets of process parameters in the model validation. Li et al. [44] proposed an in-situ weld geometry monitoring system based on molten pool features. A novel multi-task CNN model was established to simultaneously predict the weld width and depth with a mean absolute percentage error of 1.9 % (width) and 3.0 % (depth) and an average processing time of 23.35 ms. Ali et al. [45] applied artificial intelligence techniques to predict the weld bead geometry. The radial basis function neural network showed an outstanding level of accuracy in predicting weld penetration, width, and reinforcement.

Accurate molten pool features are the foundation for achieving high-precision monitoring of weld morphology. In this paper, a weld surface morphology monitoring method for laser welding based on a lightweight semantic segmentation model improved by an attention mechanism is proposed. Firstly, the correlation between the weld surface morphology and the molten pool contour features was analyzed. Subsequently, an automatic generation method for molten pool semantic segmentation labels was proposed, achieving rapid acquisition of high-precision semantic segmentation labels under strong interference. Then, a lightweight semantic segmentation model, Deeplab-M, was constructed with a molten pool segmentation accuracy of 97.43 % and a running speed 4.23 times higher than that of commonly used semantic segmentation models. Finally, a method for reconstructing weld surface morphology was proposed considering the solidification characteristics of molten metal. Experimental validation results show that the introduced weld surface morphology monitoring method has the advantages of high accuracy, fast speed, strong anti-interference, and good generalization capability.

The remainder of the paper is structured as follows. Section 2 introduces the welding platform and the detailed analysis of the weld surface morphology. Section 3 presents the background, structure, training process, and validation results of the lightweight semantic segmentation model. Section 4 provides a comprehensive analysis and validation of the performance of the proposed weld surface morphology monitoring method. Finally, conclusions are given in Section 5.

2. Experiment setup and the analysis of weld surface morphology

2.1. Experiment setup

The experiment setup of the laser welding platform is shown in Fig. 1. The laser is produced by an IPG YLR-4000 fiber laser device with a maximum power of 4 kW. A PRECITEC (YW50) laser head is mounted on an ABB robot (IRB 4400 M 2004). The laser head is equipped with a focusing lens with a focal length of 250 mm. 316L austenitic stainless steel (022Cr17Ni12Mo2) is used as the welding material; the plate is designed with an appropriate shape for obtaining different penetration states and weld surface morphologies, and the specific dimensions are shown in Fig. 1 (c). The laser power, welding speed, and shielding gas flow rate are 3 kW, 16 mm/s, and 20 L/min (argon), respectively. A high-speed camera (Phantom V611) and a pulsed laser-assisted light source (CAVILUX Smart) with a wavelength of 808 nm are applied to monitor the welding zone. The images are acquired at a rate of 5000 frames per second (FPS) with an image resolution of 640 pixels × 480 pixels. Approximately 32,000 monitoring images were acquired during the welding process. A total of 6400 fused images are obtained by using the proposed adaptive fusion method [46]. The PyTorch framework [47] and Matlab are used to process and analyze the acquired original and fused monitoring images.

Fig. 1. The experiment setup of laser welding: (a) The schematic of monitoring platform and equipment, (b) The laser welding process, and (c) The schematic of the weld plate.

2.2. Analysis of weld surface morphology

In this paper, the penetration state (PS) of the weld seam is divided into four categories: PS I, II, III, and IV. Fig. 2 shows the different characteristics of weld surface morphology at different locations. In PS I, due to regular changes in the morphology of the molten pool tail, the weld surface produces regular bulges and depressions, the weld is wider at the bulges than at the depressions, and the weld width and residual height fluctuate. In PS II, the change in the morphology of the molten pool tail is reduced, and the weld surface shows no obvious bulges or depressions. In PS III and IV, the weld surface morphology fluctuates very little and the surface forms uniformly. In PS III, the weld width is uniform and the widest among the four PSs, while in PS IV the weld width is significantly reduced. The weld surface morphology changes substantially in the transition region (TR) between different PSs. For example, in TR ④, the width of the top surface of the weld gradually decreases from 3.14 mm to 2.71 mm (0.43 mm). The width of the back surface of the weld decreases by 0.28 mm.

Fig. 2. Weld surface morphology in penetration state and transition region.

Fig. 3 shows the monitoring images in different TRs, with an interval of 0.08 s between images. In TR ①, the molten pool tail size gradually decreases. When the tail shrinkage is completed, the tail closes rapidly and the molten pool morphology changes. In image No. 5226, the molten pool becomes significantly shorter. In TR ②, the PS of the weld gradually changes from II to III, and the molten pool grows, especially in length. The molten pool gradually becomes wider, and the width of the solidified weld seam increases correspondingly. In TR ③, the weld width becomes significantly narrower. The molten pool width becomes significantly narrower, and the solidification of the molten pool starts from both sides. The internal molten metal solidifies almost simultaneously, as shown in image No. 18276. In TR ④, the weld surface gradually produces bulges and depressions. The corresponding molten pool length gradually increases and decreases.

Fig. 3. Fusion images extracted at equal intervals (0.08 s) in the TR ①, ②, ③, and ④.

In summary, the maximum width of the molten pool becomes the width of the weld once the pool solidifies. The molten pool tail shrinks to form a weld bulge, and the molten pool solidifies to form a dense pattern. After the tail of the molten pool has shrunk, the molten metal has difficulty entering the tail, so a depression forms together with a sparse pattern. Previous studies have also shown that the molten pool has different morphological characteristics at different penetration states. Therefore, the weld surface morphology and penetration state can be predicted from the molten pool profile characteristics, especially the width and tail shape features of the molten pool.

In this paper, the time interval between adjacent monitoring images is 0.0002 s. In the acquired monitoring images, the morphology of the molten pool changes very slowly. It can be assumed that the quality of the weld is the same over a length range of 0.1 mm and that the molten pool morphology images obtained in this interval are essentially the same.

Fig. 4. Molten pool morphology and brightness: (a) fused image, (b) molten pool contour, and (c) distribution of brightness values.

Fig. 4 shows the luminance values of each pixel in the fused image. The luminance value of the keyhole reaches 255, while the brightness of the pixels at the tail contour of the molten pool is in the range of 60 to 70. The brightness difference between the front and rear ends of the molten pool is large, and the brightness value at the tail contour differs only slightly (5 to 10) from that of the neighboring region. Therefore, it is difficult to extract the molten pool contour by the traditional threshold segmentation method.
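The sampling figures quoted in this section follow directly from the stated camera rate and welding speed. The short Python sketch below reproduces that arithmetic. The variable names are illustrative, and the "one image per 0.1 mm segment" rate is an inference from the constant-quality assumption rather than a value quoted in this section.

```python
# Values taken from the experiment setup described above.
FRAME_RATE_HZ = 5000      # camera acquisition rate (frames per second)
WELD_SPEED_MM_S = 16.0    # welding speed
RAW_IMAGES = 32_000       # approximate number of monitoring images
FUSED_IMAGES = 6_400      # fused images produced by the adaptive fusion method
SEGMENT_MM = 0.1          # weld length over which quality is assumed constant

# Time between adjacent monitoring images.
frame_interval_s = 1.0 / FRAME_RATE_HZ
# Distance the laser travels between two adjacent images.
travel_per_frame_mm = WELD_SPEED_MM_S * frame_interval_s
# Number of raw images captured while the laser covers one 0.1 mm segment.
frames_per_segment = SEGMENT_MM / travel_per_frame_mm
# Raw images combined into each fused image (assuming an even split).
raw_per_fused = RAW_IMAGES / FUSED_IMAGES
# Inferred rate if one image per 0.1 mm segment has to be processed.
segments_per_second = WELD_SPEED_MM_S / SEGMENT_MM
# Approximate weld length covered by the whole recording.
weld_length_mm = RAW_IMAGES * travel_per_frame_mm

print(f"frame interval:        {frame_interval_s:.4f} s")
print(f"travel per frame:      {travel_per_frame_mm:.4f} mm")
print(f"frames per 0.1 mm:     {frames_per_segment:.2f}")
print(f"raw images per fused:  {raw_per_fused:.1f}")
print(f"segments per second:   {segments_per_second:.0f}")
print(f"weld length recorded:  {weld_length_mm:.1f} mm")
```

Under these assumptions, roughly 31 raw frames fall within each 0.1 mm segment, which is consistent with treating neighboring frames as sharing the same molten pool morphology.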
3. The construction process of the lightweight semantic segmentation model

3.1. The proposed semantic segmentation model

In 2014, Chen et al. [48] proposed the atrous (dilated) convolution operation in the Deeplabv1 model. In 2016, the Deeplabv2 [49] model was proposed, in which the Backbone Feature Extraction Network (BFEN) was changed from VGG [50] to ResNet [51]. The Atrous Spatial Pyramid Pooling (ASPP) module was proposed to apply four parallel atrous convolution branches to the output feature map of the BFEN. The Deeplabv3 [52] model was proposed in 2017; it improved the structure of the ASPP module by proposing a multi-grid-based method for selecting the dilation rates. The model's mean intersection over union on the Pascal VOC 2012 test dataset improved by 6.6 % over the Deeplabv2 model. In 2018, Chen et al. [53] further optimized the Deeplab model by proposing the Deeplabv3+ model, which uses an encoder-decoder structure to obtain finer segmentation boundaries. The Deeplabv3+ model is considered a new peak among semantic segmentation models, combining an encoder-decoder structure, a pyramid pooling module, and atrous convolution.

The Deeplabv3+ model has many parameters, is computationally intensive in image segmentation tasks, and demands high-performance hardware. Based on the welding speed and sampling frequency, it can be concluded that the monitoring model needs to process about 160 images per second. Therefore, the model must be lightweight while maintaining accuracy. In this paper, a lightweight Deeplab-M semantic segmentation model is proposed to extract the molten pool contours, considering both running speed and accuracy. The model uses MobileNetv2 as the BFEN, which reduces the number of model parameters by nearly 10 times, and adopts atrous convolution at the locations where the effective feature layers are obtained to ensure that spatial information is not lost. In addition, to ensure the segmentation accuracy, the Convolutional Block Attention Mechanism (CBAM) [54] module is used in the shallow feature acquisition part of the model and after feature fusion, so that the model can learn the key features during training.

The structure of the constructed Deeplab-M semantic segmentation model is shown schematically in Fig. 5. The model is mainly composed of two parts: an encoder and a decoder. Monitoring images are input into the encoding part of the model after cropping the ROI. First, two effective feature layers are obtained by the BFEN: a shallow feature layer compressed twice and a preliminary effective feature layer compressed three times. The preliminary effective feature layer compressed three times is fed into the parallel ASPP module for feature extraction and merging, and the features are then compressed using a 1 × 1 convolution to obtain deeper features.

The acquisition of shallow and deep features is the task of the encoding part, while the decoding part fuses and analyzes the acquired features to predict the pixels in the molten pool region. The decoding part first passes the shallow features through the CBAM module to ensure the feature learning effect and adjusts the channels using a 1 × 1 convolution. The deep features are then upsampled by a factor of 2 so that they have the same size as the shallow features. The two features are fused by concatenation (Concat), and the fused features are passed through the CBAM module and a 3 × 3 convolution to obtain the final condensed features. Finally, the condensed features are used to obtain the predicted class of each pixel in the image. This prediction has two main steps: first, the number of feature channels is adjusted by a 1 × 1 convolution to match the number of pixel classes; second, the output is upsampled so that its width and height match the input image. The model can take an input image of any size and output a semantic segmentation image of the same size.

Fig. 5. Schematic diagram of Deeplab-M model structure.

3.2. Semantic segmentation label automatic acquisition method

In this paper, the time interval between adjacent monitoring images is 0.0002 s. In the acquired monitoring images, the morphology of the molten pool changes very slowly. It can be assumed that the quality of the weld is the same over a length range of 0.1 mm and that the molten pool images (30) obtained in this interval have essentially the same morphology. Therefore, this paper proposes an automatic generation method for semantic segmentation labels based on high-precision label sharing. As shown in Fig. 6, a fusion image is first obtained by the image adaptive fusion method. Then the high-precision semantic segmentation label of the molten pool region is obtained by manual labeling with the labeling tool LabelMe. Finally, the obtained high-precision semantic segmentation label is used as the label for the 10 corresponding monitoring images.

Fig. 6. Flowchart of the automatic generation method of semantic segmentation labels.

The proposed method can quickly obtain a large number of accurate labels, enrich the training set samples, and improve the model accuracy and anti-interference ability. A total of 700 fused images are randomly extracted from the fused image dataset (6400). Manually labeling the molten pool region with the LabelMe tool takes about 11 h.
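The label-sharing idea can be sketched in a few lines of Python. This is a minimal illustration, not the authors' Matlab implementation. The mapping from a fused image to its raw frames (five consecutive raw frames per fused image, inferred from 32,000 / 6400) and the choice of the 10 raw frames centred on that group are assumptions, and `shared_frame_indices` and `build_training_pairs` are hypothetical helper names.

```python
import random


def shared_frame_indices(fused_index, frames_per_fused=5, share_count=10,
                         total_frames=32_000):
    """Return the raw-frame indices that inherit the label of one fused image.

    Assumption: fused image k is built from raw frames
    [k * frames_per_fused, (k + 1) * frames_per_fused), and the label is shared
    with the `share_count` raw frames centred on that group.
    """
    center = fused_index * frames_per_fused + frames_per_fused // 2
    start = center - share_count // 2
    # Shift the window (rather than truncate it) at the ends of the recording.
    start = max(0, min(start, total_frames - share_count))
    return list(range(start, start + share_count))


def build_training_pairs(fused_labels):
    """Expand {fused_index: label} into (raw_frame_index, label) pairs."""
    pairs = []
    for fused_index, label in sorted(fused_labels.items()):
        for frame_index in shared_frame_indices(fused_index):
            pairs.append((frame_index, label))
    return pairs


# 700 manually labelled fused images drawn at random from 6400 (file names are placeholders).
random.seed(0)
labelled = {i: f"label_{i:04d}.png" for i in random.sample(range(6_400), 700)}
pairs = build_training_pairs(labelled)
print(len(pairs))  # 7000 (image, label) training pairs
```

Each manual label is reused ten times, so the labeling cost grows with the number of fused images while the training set grows ten times faster.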
The Matlab software is used to automatically generate semantic segmentation labels for the 7000 monitoring images in about 2 min. The 7000 monitoring images and the corresponding labels are used to train the Deeplab-M model, while the 700 fused images and the corresponding labels are used for model validation.

3.3. The model training and validation

To shorten the training time of the semantic segmentation model, the pre-trained weights of the BFEN (MobileNetv2) were transferred. In addition, the size of the input image is closely related to the speed and accuracy of the model. In this paper, four input image sizes are used to train the Deeplab-M model separately. The input images are also augmented before training. The optimizer of Deeplab-M is stochastic gradient descent with a momentum of 0.9 and a weight decay of 0.0001, and the learning rate is reduced by cosine annealing. A cross-entropy loss function is applied to evaluate the training process by computing the error between the prediction results and the true labels. The mean intersection over union (MIoU) and mean pixel accuracy (MPA) are applied to measure the model performance.

The model is trained on the training set for 100 epochs, and MIoU values are calculated on the validation set every 5 epochs. The loss and MIoU of the training process are used to determine whether the model is adequately trained. Fig. 7 shows the variation of the loss and MIoU during training (192 pixels × 72 pixels). Due to the sufficient amount of data in the dataset and the data augmentation during training, the loss of the model drops to a low level after 20 epochs of training and gradually converges, while the MIoU of the model on the validation set exceeds 90 %. The model is close to convergence after 65 epochs of training, with MIoU values fluctuating above 93 %. The model parameters at the highest MIoU value are saved for subsequent model validation and testing. The Deeplab-M model was trained by the same method for the other three input image sizes.

Fig. 7. Deeplab-M model training process: (a) loss variation graph and (b) MIoU value variation graph.

After the models are trained, the MIoU and MPA values are calculated on the prepared validation set to evaluate the model accuracy, and the real-time performance is evaluated by calculating the FPS values. The model performance with different input image sizes is shown in Table 1.

Table 1. The Deeplab-M performance with different input size images.

| Image width × height (pixels) | Input size | Model calculation | MIoU | MPA | FPS |
|---|---|---|---|---|---|
| 256 × 96 | 0.28 MB | 7.46 GB | 93.2 % | 96.46 % | 102.22 |
| 192 × 72 | 0.16 MB | 4.2 GB | 94.85 % | 97.43 % | 157.34 |
| 128 × 48 | 0.07 MB | 1.87 GB | 93.27 % | 96.81 % | 200.58 |
| 64 × 24 | 0.02 MB | 466.37 MB | 85.31 % | 92.11 % | 261.75 |

The input image size is closely related to the model FPS value: the smaller the input image, the better the real-time performance. The Deeplab-M model has the highest accuracy when the input image size is 192 pixels × 72 pixels. When the size is 128 pixels × 48 pixels, the accuracy of the model decreases by about 1 %, but the running speed of the model improves significantly, and it can process nearly 55 more monitoring images per second, which gives higher real-time performance. When the size is reduced to 64 pixels × 24 pixels, the feature size of the model is very small after downsampling 8 times, and it is difficult to learn sufficient features, so the accuracy of the model is significantly reduced. Considering both the accuracy and the real-time performance of the Deeplab-M model, the model trained with an input size of 192 pixels × 72 pixels is selected for subsequent analysis and validation.
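The MIoU and MPA metrics used above can be computed from a pixel-level confusion matrix. The sketch below uses the standard definitions of these metrics, which are assumed here because the section does not spell out the formulas. It is written in plain Python for clarity rather than speed, and the function names are illustrative.

```python
def confusion_matrix(truth, pred, num_classes=2):
    """Count pixels: cm[t][p] is the number of pixels of true class t predicted as p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for truth_row, pred_row in zip(truth, pred):
        for t, p in zip(truth_row, pred_row):
            cm[t][p] += 1
    return cm


def mean_pixel_accuracy(cm):
    """Average, over classes, of correctly classified pixels / pixels of that class."""
    per_class = [cm[c][c] / sum(cm[c]) for c in range(len(cm)) if sum(cm[c]) > 0]
    return sum(per_class) / len(per_class)


def mean_iou(cm):
    """Average, over classes, of TP / (TP + FP + FN)."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                          # class c pixels missed
        fp = sum(cm[r][c] for r in range(n)) - tp     # other pixels labelled c
        union = tp + fp + fn
        if union > 0:
            ious.append(tp / union)
    return sum(ious) / len(ious)


# Toy 2 x 4 masks: 0 = background, 1 = molten pool.
truth = [[0, 0, 1, 1],
         [0, 1, 1, 1]]
pred = [[0, 1, 1, 1],
        [0, 1, 1, 0]]

cm = confusion_matrix(truth, pred)
print(cm)                                   # [[2, 1], [1, 4]]
print(round(mean_pixel_accuracy(cm), 4))    # 0.7333
print(round(mean_iou(cm), 4))               # 0.5833
```

In the toy example, MIoU is lower than MPA because each misclassified pixel counts against two classes at once (as a false negative for one and a false positive for the other), which is why MIoU is usually the stricter of the two metrics.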
The commonly used Unet [55], PSPnet [56], and Deeplabv3 + classic semantic segmentation models are trained separately using the same size input images (192 pixels × 72 pixels). The performance of the different models on the validation set after sufficient training is shown in Table 2. The semantic segmentation models all have high MIoU and MPA values on the validation set, and the models can accurately segment the molten pool region in the monitored images. Among them, the PSPnet has the lowest segmentation accuracy, while the Deeplabv3 + model has the highest segmentation accuracy, and the segmentation accuracy of the constructed Deeplab-M model is better than PSPnet, slightly worse than the Deeplabv3 + model, and similar to the Unet model. The classic semantic segmentation models usually need to segment dozens of categories of targets in the segmentation task, so the model has a huge number of parameters, which leads to a long model operation time and the FPS of the model is less than 40. The Deeplab-M model designed with lightweight and optimization has slightly lower accuracy compared with the Deeplabv3 + model, but the model calcu­ lation is reduced by nearly 6 times. The model runs at a significantly higher speed, with an FPS about 4 times higher than that of Unet, PSPnet, and Deeplabv3 + models, which better meets the real-time re­ quirements of monitoring. Fig. 8 shows the molten pool semantic segmentation results obtained by the four models processing the randomly extracted monitoring im­ ages. The monitoring images with No. 10661, No. 12472 and No. 20259 all have different degrees of strong metal vapor plume interference, and all four models obtain accurate molten pool contours. Among them, the segmentation results of the Deeplabv3 + model have smoother molten pool contours, and the predicted results at the molten pool contours obscured by strong interference are closest to the actual contours. 
The segmentation results of the PSPnet model have the roughest molten pool contours, especially poor in molten pool contour details. The Deeplab-M and Unet models obtained the molten pool contours consistent with the actual molten pool contour, and the Deeplab-M model is better in the segmentation of the contour details, which can also reasonably predict the masking area when the metal vapor plume interference is strong. this paper. The molten pool is the target region that needs to be ac­ quired, therefore the higher the classification accuracy of the molten pool, the more accurate the molten pool region acquired by the model. As a result, the molten pool segmentation accuracy of the Deeplab-M model can reach 94.95 %, which is only slightly lower than that of the Deeplabv3 + model. Fig. 10 shows the morphological variation of the test weld seam and the semantic segmentation images of the fused images (interval 50 im­ ages). The weld surface morphology size fluctuates very much, espe­ cially in the TR between different PSs. The molten pool morphology in the corresponding region also changes substantially in the regions where the weld morphology fluctuates a lot. Dimensional changes in the weld surface are consistent with changes in the molten pool contour. Several fusion images with strong interference were selected to analyze the prediction ability of the Deeplab-M model. The input images and the corresponding semantic segmentation results are shown in Fig. 11. The contours of the molten pool in the fused image are not obscured. Although there is strong optical noise interference near the molten pool contour, the Deeplab-M model can accurately predict the pixels in the molten pool region. The molten pools in the semantic segmentation images have almost identical morphology and contours as those in the monitoring images. As shown in Fig. 
12, among the randomly selected original monitoring images, the brightness reaches the maximum value of 255 when the metal vapor plume interference is very strong, as in monitoring images No. 1860, No. 12025, and No. 24964. The occluded portion can be accurately predicted by the Deeplab-M model, and the predicted molten pool contours are close to those in the neighboring images with weaker interference. As shown in images No. 7709, No. 9369, and No. 15427, the molten pool region can also be accurately predicted for monitoring images with weak metal vapor plume interference. The semantic segmentation results of the monitoring images in Fig. 11 and Fig. 12 show that the constructed semantic segmentation model can accurately acquire the molten pool contours under different disturbances. There are three main reasons for the high accuracy of the model. 1) The proposed automatic label generation method enables the molten pool region obscured by interference to be accurately labeled. 2) Abundant training data allows the model to be adequately trained. 3) The attention mechanism of the model improves its feature learning ability. In summary, the constructed Deeplab-M model is highly resistant to metal vapor plume, molten pool reflection, and optical noise interference.

3.4. Analysis of the image segmentation results

The test weld seam was obtained under the same experimental conditions, and the corresponding 30,000 monitoring images and 6000 fused images were used to further evaluate the generalization capability of the Deeplab-M model. 200 fused images were extracted at equal intervals (30) to form a model performance test set, and the molten pool regions were then labeled with the LabelMe software. The segmentation accuracy of the different semantic segmentation models on these 200 fused images is shown in Table 3. The mean pixel accuracy of the constructed lightweight semantic segmentation model can reach 96.46 % on the test set.
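The MIoU and MPA values reported for the test set can be derived from a per-class confusion matrix such as the one in Fig. 9. The following is a minimal sketch, assuming the standard definitions: per-class pixel accuracy is the diagonal entry divided by its row sum, and per-class IoU is the diagonal entry divided by row sum plus column sum minus the diagonal. The function name `segmentation_metrics` and the pixel counts are hypothetical and are not taken from the paper.

```python
def segmentation_metrics(conf):
    """Compute per-class PA and IoU plus their means (MPA, MIoU).

    conf[i][j] is the number of pixels whose true class is i and whose
    predicted class is j. Assumption: every class occurs at least once,
    so no row sum is zero.
    """
    n = len(conf)
    pa, iou = [], []
    for i in range(n):
        tp = conf[i][i]
        row = sum(conf[i])                        # pixels that truly belong to class i
        col = sum(conf[r][i] for r in range(n))   # pixels predicted as class i
        pa.append(tp / row)
        iou.append(tp / (row + col - tp))
    return {"PA": pa, "IoU": iou, "MPA": sum(pa) / n, "MIoU": sum(iou) / n}


# Hypothetical two-class counts: index 0 = background, index 1 = molten pool.
conf = [[900, 100],
        [50, 950]]
metrics = segmentation_metrics(conf)
print(metrics)
```

In this two-class setting, `metrics["PA"][1]` corresponds to the molten pool classification accuracy, while MPA and MIoU average over both the molten pool and the background.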
The semantic segmentation accuracy of the model is close to that of the classical models Unet and Deeplabv3+, and better than that of PSPnet. Fig. 9 shows the confusion matrix of the classification accuracy of the different semantic segmentation models on the test set. Semantic segmentation implements pixel-level classification, whereas it is required to classify the pixels in an image as either a molten pool or background in

Table 2 Performance comparison of different semantic segmentation models (192 pixels × 72 pixels).

| Model name | BFEN | Model calculation | MIoU | MPA | FPS |
| --- | --- | --- | --- | --- | --- |
| Unet | VGG 16 | 26.48 GB | 94.65 % | 97.45 % | 37.95 |
| PSPnet | ResNet 50 | 19.55 GB | 93.31 % | 96.06 % | 39.57 |
| Deeplabv3+ | Xception | 25.57 GB | 95.13 % | 97.46 % | 37.22 |
| Deeplab-M | MobileNet v2 | 4.2 GB | 94.85 % | 97.43 % | 157.34 |

Fig. 8. Comparison of semantic segmentation images obtained by different models.

Table 3 Performance comparison of different semantic segmentation models on the test set.

| Model name | MIoU | MPA |
| --- | --- | --- |
| Unet | 93.48 % | 96.54 % |
| PSPnet | 92.10 % | 96.01 % |
| Deeplabv3+ | 93.27 % | 96.81 % |
| Deeplab-M | 93.13 % | 96.46 % |

Fig. 9. The confusion matrix of different semantic segmentation models on the test set: (a) Unet, (b) PSPnet, (c) Deeplabv3+, and (d) Deeplab-M.

Fig. 10. Test weld surface morphology and semantic segmentation images.

Fig. 11. The Deeplab-M molten pool prediction results of fusion images on test weld.

Fig. 12. The Deeplab-M molten pool prediction results of original monitoring images on test weld.

4. Weld surface morphology monitoring

4.1. The molten pool contour feature extraction method

The molten pool length (Lm), width (Wm), waist width (Wwm), and tail morphology are closely related to the weld surface morphology [57]. Fig. 13 shows the schematic diagram of the definition of the molten pool morphology features and the feature extraction process. The width of the molten pool is directly related to the weld width, while the variation of the molten pool length can be used to analyze fluctuations in the weld seam height. The molten pool waist width feature can reflect the continuous occurrence of bumps and depressions in the weld seam. The waist features are extracted at 300 pixels from the left side of the image. In addition, the molten pool features are not independent of each other, and all features vary to some degree when the weld surface shape changes. The feature extraction process can be divided into three steps: first, extract the molten pool contour from the semantic segmentation image; second, convert the contour image to coordinates to obtain six key points (orange); finally, calculate the length, width, and waist width features from the coordinates of the six points.

Fig. 14 shows the variation of the molten pool morphological features in 6000 semantic segmentation images on the test weld seam. The weld width can reflect the variation of the weld surface morphology. The extracted weld width data fluctuates significantly, especially in PS I and in the transition regions between different PSs. When the penetration state is III or IV, the weld surface width data fluctuates less, and the surface morphology of the corresponding weld area is uniform. In PS I, the weld surface produces multiple consecutive bumps and depressions, the weld surface morphology fluctuates, and multiple consecutive peaks and valleys appear in the corresponding weld width data. As shown in Fig. 14, the variation pattern of the extracted molten pool width feature is in full agreement with the variation pattern of the weld width, indicating that the width feature of the molten pool is highly correlated with the weld width. The length feature of the molten pool changes more dramatically during the welding process, especially when the PS changes, such as in TR ② and TR ③. The molten pool length is shorter in PS II than in PS I. The width, length, and waist width features of the molten pool reflect the change in the molten pool morphology; the waist feature is the main feature for analyzing the molten pool tail morphology and accurately reflects how the tail morphology changes during the welding process. When the weld produces a bulge, the molten pool tail gradually shrinks and the feature becomes smaller. When the necking is completed, the feature becomes significantly larger. When the weld surface is well formed, the shape of the molten pool in the welding process is stable. The extracted length, width, and waist width features do not change significantly and fluctuate slightly within a certain range, which can be set as the threshold interval for assessing the quality of the weld surface morphology. When a feature value continuously exceeds the threshold value, it indicates that the weld surface morphology quality is fluctuating strongly, which realizes real-time monitoring.

Fig. 13. Schematic of molten pool feature definition and extraction process.

Fig. 14. Changes in weld surface morphology, weld width, and molten pool characteristics.

4.2. The weld surface morphology reconstruction method

During the welding process, the molten metal continuously solidifies along the molten pool sides and trailing edge to form the weld, which therefore has a solidification pattern consistent with the contour of the molten pool. Based on the welding speed and sampling frequency, it can be concluded that about 30 monitoring images, i.e. 6 fusion images, are acquired within a 0.1 mm weld length, over which the molten pool moves forward by 5 pixels. Since the weld quality can be regarded as the same within a 0.1 mm weld length, one of the 6 fused images can be selected to reflect the corresponding molten pool characteristics. To analyze the weld surface morphology more intuitively, a weld surface morphology reconstruction method is proposed in this paper. As shown in Fig. 15, the proposed weld surface reconstruction method is based on the semantic segmentation image. The key points (orange) for calculating the molten pool width feature are obtained during the feature extraction process. Because the molten metal solidifies along the sides and trailing edge of the molten pool, the molten pool profile between the widest part and the tail is extracted based on the key points. Finally, at the time of reconstruction, the profile extracted from every 6th fusion image is moved forward by 5 pixels relative to the previously used profile. The reconstructed weld surface morphology image consists of the molten pool contours, and the sparsity between the contours of the molten pool reflects the change in the weld surface morphology. When the quality of the weld changes, the molten pool morphology changes significantly, and the reconstructed weld surface morphology reflects the quality of the weld visually. The proposed reconstruction method was used to predict the weld surface morphology of the test weld seam, and the result is shown in

Fig. 15. Schematic diagram of weld surface morphology reconstruction process.

Fig. 16. Comparison result between scanned and reconstructed images of weld surface morphology.

molten pool length and waist width can be used to monitor changes in weld height, which is very evident when there are bumps and depressions, as shown in the blue circle area in the figure.
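The feature extraction, threshold assessment, and contour stacking described in Sections 4.1 and 4.2 can be sketched as follows. This is an illustrative sketch only, not the authors' implementation. It assumes a binary mask (1 = molten pool) with the welding direction along the image columns, measures the features directly from pixel extents instead of from the six key points, and treats "forward" as increasing x. The names `pool_features`, `out_of_range_runs`, and `reconstruct_surface`, as well as the waist column, threshold interval, and run length in the usage lines, are hypothetical; the defaults `step=6` and `shift=5` follow the text.

```python
def pool_features(mask, waist_col):
    """Return (length, width, waist_width) in pixels for a binary mask.

    mask is a list of rows with 1 = molten pool and 0 = background.
    Assumption: the welding direction runs along the columns.
    """
    n_rows, n_cols = len(mask), len(mask[0])
    extents = []  # vertical extent of the pool in each column
    for c in range(n_cols):
        rows = [r for r in range(n_rows) if mask[r][c]]
        extents.append(rows[-1] - rows[0] + 1 if rows else 0)
    cols = [c for c, e in enumerate(extents) if e > 0]
    if not cols:
        return 0, 0, 0  # no molten pool pixels in this image
    length = cols[-1] - cols[0] + 1  # extent along the welding direction
    width = max(extents)             # widest cross-section
    waist = extents[waist_col]       # cross-section at a fixed column
    return length, width, waist


def out_of_range_runs(values, low, high, min_run):
    """Indices at which a feature has stayed outside [low, high]
    for at least min_run consecutive images."""
    alarms, run = [], 0
    for i, v in enumerate(values):
        run = run + 1 if (v < low or v > high) else 0
        if run >= min_run:
            alarms.append(i)
    return alarms


def reconstruct_surface(profiles, step=6, shift=5):
    """Overlay the profile of every step-th image, each moved shift
    pixels further along x than the previously used profile."""
    points = set()
    for k, profile in enumerate(profiles[::step]):
        for x, y in profile:
            points.add((x + k * shift, y))
    return sorted(points)


# Hypothetical 5 x 8 segmentation mask.
mask = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 1, 1, 1, 0],
    [0, 0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]
features = pool_features(mask, waist_col=5)
alarms = out_of_range_runs([10, 10, 14, 15, 16, 10, 15], low=8, high=12, min_run=3)
surface = reconstruct_surface([[(0, 0), (1, 1)]] * 13)
print(features, alarms, surface)
```

Because the shift between stacked profiles is constant, any difference in spacing between neighbouring contours in the output comes only from changes in the extracted profiles themselves, which is the quantity the text relates to changes in the weld surface morphology.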
In summary, the proposed weld surface morphology monitoring method can quickly and accurately predict the weld morphology size through the molten pool characteristics and the reconstructed weld morphology image. It provides reliable data support for controlling the process parameters so as to keep the weld morphology size (height and width) within the specified deviation and to effectively prevent weld morphology size failure defects.

Fig. 16. The predicted weld surface morphology is almost identical to the actual surface profile of the weld in the scanned image. The weld surface bumps and depressions that occur in PS I and II are reflected by the sparseness between the molten pool contours. When the penetration state is III or IV, the weld shape is uniform, the molten pool contours in the reconstructed weld surface morphology are evenly spaced, and the changes in weld width and height are very small. In the TR between different PSs, the changes in the molten pool width and tail profile accurately reflect the dynamics of the weld surface morphology. The reconstructed image can visually reflect the change in the quality of the weld surface profile.

4.3. Validation of the weld surface morphology monitoring method

Another test weld was obtained on the same experimental platform with a weld plate size of 200 mm × 100 mm × 4 mm. The laser power and welding speed during the welding process are 3 kW and 16 mm/s, respectively. 24,000 monitoring images and 4800 fusion images are taken to evaluate the capability of the weld surface morphology monitoring method. As shown in Fig. 17, the PS of the entire weld belongs to the third category (PS III). It can be seen that there are small-sized spatter defects near the weld seam and small fluctuations in the weld surface morphology, with larger bumps and depressions forming in the areas marked by blue circles. Monitoring images are extracted at equal intervals (1500), and the corresponding semantic segmentation images are obtained by the Deeplab-M model. Strong spatter, metal vapor plume, and optical noise interference are seen in the monitoring images. For example, spatter obscures the molten pool contour in image No. 2000, and the metal vapor plume obscures larger areas of the molten pool in images No. 17000 and No. 18500. There is obvious reflection interference inside the molten pool and strong optical noise interference at the molten pool tail. As shown in images No. 17000 and No. 18500, the molten pool contours in the obtained segmentation images closely match those in the monitoring images, and accurate results are obtained even for regions with strong interference.

Fig. 18 illustrates the monitoring results on the test weld using the proposed weld surface morphology monitoring method. The variation pattern of width and height in the reconstructed image of the weld surface morphology is consistent with the weld in the scanned image. From the sparseness of the contour lines at the molten pool tail in the blue circle, the presence of bulges and depressions in the weld at that location can be judged. The weld width variation can be monitored by analyzing the molten pool width feature, which shows that the weld width varies within a small range without any defective weld width. The

5. Conclusions

This paper presents a detailed study of the weld surface morphology monitoring method based on the pixel-level semantic segmentation approach. A semantic segmentation label automatic generation method is proposed, a lightweight molten pool semantic segmentation model is constructed, and a weld surface morphology reconstruction method is studied. The main conclusions can be drawn as follows.

(1) The correlation between the molten pool profile characteristics and the weld surface morphology was analyzed, and the molten pool width, length, and tail profile characteristics were found to be closely related to the weld surface morphology size (width and height).
(2) An automatic generation method for semantic segmentation labels based on high-precision label sharing of fused images is proposed to achieve batch acquisition of high-precision semantic segmentation labels under strong interference and to improve the depth and breadth of the training data.
(3) A lightweight semantic segmentation model, Deeplab-M, based on the attention mechanism was constructed. The segmentation accuracy of the molten pool is 94.95 % on the test set, and the model runs more than four times faster than the classical Unet, PSPnet, and Deeplabv3+.
(4) A method for reconstructing the weld surface morphology was proposed considering the solidification of the molten metal. Through the molten pool contour features and the reconstructed image, fast and accurate monitoring of the weld surface morphology (width and height) was achieved.

The monitoring method proposed in this paper enables fast and highly accurate monitoring of weld surface morphology. Obtaining high-precision signal features is the basis for realizing high-accuracy weld quality monitoring, and the application of deep learning in signal processing, feature extraction, and welding state analysis deserves to be further investigated in future work.

Fig. 17. The scanned image, monitoring images, and semantic segmentation images in test weld.

Fig. 18. Results of weld surface morphology monitoring on test weld.

[4] K.-M. Hong, Y.C. Shin, Prospects of laser welding technology in the automotive industry: A review, J. Mater. Process. Technol. 245 (2017) 46–69. [5] L. Cao, J. Li, J. Hu, H. Liu, Y. Wu, Q. Zhou, Optimization of surface roughness and dimensional accuracy in LPBF additive manufacturing, Opt. Laser Technol. 142 (1) (2021) 107246. [6] Y. Cai, J. Xiong, H. Chen, G. Zhang, A review of in-situ monitoring and process control system in metal-based laser additive manufacturing, J. Manuf. Syst. 70 (2023) 309–326. [7] T. Lei, Y. Rong, H.
Wang, Y. Huang, M. Li, A review of vision-aided robotic welding, Comput. Ind. 123 (2020) 103326. [8] ISO 6520-1: 2007 (E/F): Welding and allied processes—Classification of geometric imperfections in metallic materials—Part 1: Fusion welding, International Standards Organization, Switzerland, 2007. [9] Y. Kawahito, H. Wang, S. Katayama, D. Sumimori, Ultra high power (100 kW) fiber laser welding of steel, Opt. Lett. 43 (19) (2018) 4667–4670. [10] Y. Zhang, C. Zhang, L. Tan, S. Li, Coaxial monitoring of the fibre laser lap welding of Zn-coated steel sheets using an auxiliary illuminant, Opt. Laser Technol. 50 (2013) 167–175. [11] W. Cai, J.Z. Wang, P. Jiang, L.C. Cao, G.Y. Mi, Q. Zhou, Application of sensing techniques and artificial intelligence-based methods to laser welding real-time monitoring: A critical review of recent literature, J. Manuf. Syst. 57 (2020) 1–18. [12] G. Ma, L. Yu, H. Yuan, W. Xiao, Y. He, A vision-based method for lap weld defects monitoring of galvanized steel sheets using convolutional neural network, J. Manuf. Process. 64 (2021) 130–139. [13] S.Y. Zou, Z.J. Wang, S.S. Hu, W.D. Wang, Y. Cao, Control of weld penetration depth using relative fluctuation coefficient as feedback, J. Intell. Manuf. 31 (5) (2019) 1203–1213. [14] M. Gashi, P. Ofner, H. Ennsbrunner, S. Thalmann, Dealing with missing usage data in defect prediction: A case study of a welding supplier, Comput. Ind. 132 (2021) 103505. [15] Y.-K. Liu, Y.-M. Zhang, Supervised Learning of Human Welder Behaviors for Intelligent Robotic Welding, IEEE Trans. Autom. Sci. Eng. 14 (3) (2017) 1532–1541. [16] Z. Zhao, L. Deng, L. Bai, Y. Zhang, J. Han, Optimal imaging band selection mechanism of weld pool vision based on spectrum analysis, Opt. Laser Technol. 110 (2019) 145–151. [17] W. Hao, Z. Jundong, B. Zhenglan, H. Anduo, C. Fenghong, M. Yue, Study of weld pool monitoring system based on spatial filtering, J. Manuf. Process. 76 (2022) 638–645. [18] Y. Zhang, Z. Zhao, Y. Zhang, L. Bai, K. 
Wang, J. Han, Online weld pool contour extraction and seam width prediction based on mixing spectral vision, Opt. Rev. 26 (1) (2018) 65–76. [19] Y. Kawahito, M. Mizutani, S. Katayama, High quality welding of stainless steel with 10 kW high power fibre laser, Sci. Technol. Weld. Join. 14 (4) (2013) 288–294. [20] D.Y. You, X.D. Gao, S. Katayama, Review of laser welding monitoring, Sci. Technol. Weld. Join. 19 (3) (2013) 181–201. [21] M. Luo, Y.C. Shin, Vision-based weld pool boundary extraction and width measurement during keyhole fiber laser welding, Opt. Lasers Eng. 64 (2015) 59–70. [22] W. Meng, X.H. Yin, J.F. Fang, L.J. Guo, Q.S. Ma, Z.G. Li, Dynamic features of plasma plume and molten pool in laser lap welding based on image monitoring and processing techniques, Opt. Laser Technol. 109 (2019) 168–177. [23] Z.Q. Chen, X.D. Gao, Detection of weld pool width using infrared imaging during high-power fiber laser welding of type 304 austenitic stainless steel, Int. J. Adv. Manuf. Technol. 74 (9–12) (2014) 1247–1254.

CRediT authorship contribution statement

Wang Cai: Conceptualization, Data curation, Formal analysis, Investigation, Software, Writing – original draft. Leshi Shu: Project administration, Validation, Writing – review & editing. Shaoning Geng: Supervision, Writing – review & editing. Qi Zhou: Writing – review & editing. Longchao Cao: Funding acquisition, Data curation, Supervision, Validation, Writing – review & editing.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Data availability

Data will be made available on request.

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant No.
52105446, the Natural Science Foundation of Hubei Province under Grant No. 2023AFB878, the Open Project of the State Key Laboratory of Intelligent Manufacturing Equipment and Technology under Grant No. IMETKF2023011, and the Knowledge Innovation Program of Wuhan-Shuguang Project under Grant No. 2022010801020252.

References

[1] A.K. Dubey, V. Yadava, Laser beam machining—A review, Int. J. Mach. Tools Manuf. 48 (6) (2008) 609–628. [2] J. Chen, T. Wang, X. Gao, L. Wei, Real-time monitoring of high-power disk laser welding based on support vector machine, Comput. Ind. 94 (2018) 75–81. [3] Z. Tang, L. Wan, H. Yang, P. Ren, C. Zhu, Y. Wu, H. Wang, H. Wang, Stable conduction mode welding of conventional high-reflectivity metals with 2000 W blue laser, Opt. Laser Technol. 168 (2024). [40] G.X.Y. Zhang, S. Katayama, Weld appearance prediction with BP neural network improved by genetic algorithm during disk laser welding, J. Manuf. Syst. 34 (2015) 53–59. [41] N. Chandrasekhar, M. Vasudevan, A.K. Bhaduri, T. Jayakumar, Intelligent modeling for estimating weld bead width and depth of penetration from infra-red thermal images of the weld pool, J. Intell. Manuf. 26 (1) (2013) 59–71. [42] Z.L. Lei, J.X. Shen, Q. Wang, Y.B. Chen, Real-time weld geometry prediction based on multi-information using neural network optimized by PCA and GA during thin-plate laser welding, J. Manuf. Process. 43 (2019) 207–217. [43] S. Oh, H. Ki, Cross-section bead image prediction in laser keyhole welding of AISI 1020 steel using deep learning architectures, IEEE Access 8 (2020) 73359–73372. [44] H. Li, H. Ren, Z. Liu, F. Huang, G. Xia, Y. Long, In-situ monitoring system for weld geometry of laser welding based on multi-task convolutional neural network model, Measurement 204 (2022). [45] A.N. Ahmed, C.W.M. Noor, M.F. Allawi, A. El-Shafie, RBF-NN-based model for prediction of weld bead geometry in Shielded Metal Arc Welding (SMAW), Neural Comput.
& Applic. 29 (3) (2016) 889–899. [46] W. Cai, P. Jiang, L. Shu, S. Geng, Q. Zhou, Real-time laser keyhole welding penetration state monitoring based on adaptive fusion images using convolutional neural networks, J. Intell. Manuf. (2021). [47] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z.M. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Köpf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J.J. Bai, S. Chintala, PyTorch: an imperative style, high-performance deep learning library, Mach. Learn. (2019). [48] L.C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, A.L. Yuille, Semantic image segmentation with deep convolutional nets and fully connected CRFs, arXiv (2014). [49] L.C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, A.L. Yuille, DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs, IEEE Trans. Pattern Anal. Mach. Intell. 40 (4) (2018) 834–848. [50] K. Simonyan, A. Zisserman, Very deep convolutional networks for large-scale image recognition, Computer Vision and Pattern Recognition (2014). [51] K. He, X. Zhang, S. Ren, J. Sun, Deep Residual Learning for Image Recognition, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016. [52] L.C. Chen, G. Papandreou, F. Schroff, H. Adam, Rethinking atrous convolution for semantic image segmentation, (2017). [53] L.C. Chen, Y.K. Zhu, G. Papandreou, F. Schroff, H. Adam, Encoder-decoder with atrous separable convolution for semantic image segmentation, ECCV (2018). [54] S. Woo, J. Park, J.Y. Lee, I.S. Kweon, CBAM: convolutional block attention module, ECCV (2018). [55] O. Ronneberger, P. Fischer, T. Brox, U-Net: convolutional networks for biomedical image segmentation, ArXiv abs/1505.04597 (2015). [56] H. Zhao, J. Shi, X. Qi, X. Wang, J. Jia, Pyramid scene parsing network, in: IEEE Computer Society, 2016. [57] W. Cai, P. Jiang, L. Shu, S. Geng, Q. 
Zhou, Real-time monitoring of laser keyhole welding penetration state based on deep belief network, J. Manuf. Process. 72 (2021) 203–214. [24] W.J. Zhang, X. Zhang, Y.M. Zhang, Robust pattern recognition for measurement of three dimensional weld pool surface in GTAW, J. Intell. Manuf. 26 (4) (2013) 659–676. [25] R. Yu, Y. Cao, H. Chen, Q. Ye, Y. Zhang, Deep learning based real-time and in-situ monitoring of weld penetration: Where we are and what are needed revolutionary solutions? J. Manuf. Process. 93 (2023) 15–46. [26] W. Cai, L. Shu, S. Geng, Q. Zhou, L. Cao, Weld beads and defects automatic identification, localization, and size calculation based on a lightweight fully convolutional neural network, Opt. Laser Technol. 170 (2024). [27] J. Long, E. Shelhamer, T. Darrell, Fully convolutional networks for semantic segmentation, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, 2015, pp. 3431–3440. [28] N.P. Nguyen, C. Nayak, M. Brosda, A. Olowinsky, H. Leitte, A. Gillner, Process Monitoring in Absorber-Free Laser Transmission Welding of Plastics by Using Deep Learning Algorithms, J. Laser Micro Nanoeng. 16 (3) (2021) 166–172. [29] Y. Wang, J. Lu, Z. Zhao, W. Deng, J. Han, L. Bai, X. Yang, J. Yao, Active disturbance rejection control of layer width in wire arc additive manufacturing based on deep learning, J. Manuf. Process. 67 (2021) 364–375. [30] C. Knaak, G. Kolter, F. Schulze, M. Kroger, P. Abels, Deep learning-based semantic segmentation for in-process monitoring in laser welding applications, Conference on Applications of Machine Learning, San Diego, CA, 2019, p. 11139. [31] W. Cai, P. Jiang, L. Shu, S. Geng, Q. Zhou, Real-time identification of molten pool and keyhole using a deep learning-based semantic segmentation approach in penetration status monitoring, J. Manuf. Process. 76 (2022) 695–707. [32] D. Baek, H.S. Moon, S.H.
Park, In-process prediction of weld penetration depth using machine learning-based molten pool extraction technique in tungsten arc welding, (2022). [33] R. Yu, J. Kershaw, P. Wang, Y. Zhang, Real-time recognition of arc weld pool using image segmentation network, J. Manuf. Process. 72 (2021) 159–167. [34] Y. Wang, J. Han, J. Lu, L. Bai, Z. Zhao, TIG Stainless Steel Molten Pool Contour Detection and Weld Width Prediction Based on Res-Seg, Metals 10 (11) (2020). [35] S. Liu, G.Y. Mi, F. Yan, C.M. Wang, P. Jiang, Correlation of high power laser welding parameters with real weld geometry and microstructure, Opt. Laser Technol. 94 (2017) 59–67. [36] V.V. Semak, B. Damkroger, S. Kempka, Temporal evolution of the temperature field in the beam interaction zone during laser material processing, J. Phys. D Appl. Phys. 32 (15) (1999) 455–470. [37] Y.W. Ai, P. Jiang, C.M. Wang, G.Y. Mi, S.N. Geng, W. Liu, C. Han, Investigation of the humping formation in the high power and high speed laser welding, Opt. Lasers Eng. 107 (2018) 102–111. [38] S. Li, G. Chen, M. Zhang, Y. Zhou, Y. Zhang, Dynamic keyhole profile during high-power deep-penetration laser welding, J. Mater. Process. Technol. 214 (3) (2014) 565–570. [39] Y.W. Ai, P. Jiang, X.Y. Shao, P. Li, C.M. Wang, G.Y. Mi, S.N. Geng, Y. Liu, W. Liu, The prediction of the whole weld in fiber laser keyhole welding based on numerical simulation, Appl. Therm. Eng. 113 (2017) 980–993.