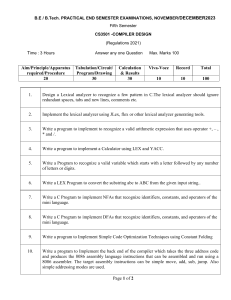

Ex. No.: 1

Lexical Analyzer Using C

Aim:

To write a program to implement a lexical analyzer in C.

Algorithm:

STEP 1: Create files for storing the keywords, the operators, and the input program.

STEP 2: Read the source file as input.

STEP 3: Scan the first character.

STEP 4: Check whether the character begins with a letter or a digit, using the functions isalpha() and isdigit().

STEP 5: If isdigit() returns true, print the number as a constant.

STEP 6: If isalpha() returns true, compare the word with the keywords in the keyword file.

STEP 7: If the word matches any keyword in the file, print it as a keyword; otherwise, print it as an identifier.

Pseudo code:

BEGIN

IMPORT headers

DECLARE POINTERS fsur, fkey, fopr

SET set TO 0

ASSIGN source.txt FILE to fsur

ASSIGN keyword.txt FILE to fkey

ASSIGN operator.txt FILE to fopr

READ keywords AND identifiers

WHILE input IS a LETTER OR DIGIT

ASSIGN input character c TO str[i] AND INCREMENT i

END WHILE

ASSIGN ‘\0’ TO str[i]

REWIND fkey

FOR each keyword key IN fkey

IF strcmp(key, str) EQUALS 0

THEN SET set EQUAL TO 1

BREAK

END IF

END FOR

IF set EQUALS 1

PRINT “keyword”

ELSE

PRINT “identifier”

END IF

IF input IS a DIGIT

PRINT “numeric literal”

IF input IS a STRING

PRINT “string literal”

IF input IS a CHARACTER

PRINT “character literal”

IF input IS a preprocessor symbol

PRINT “preprocessor symbol”

IF input IS a bracket

PRINT “open symbol” OR “close symbol”

IF input IS a punctuation

PRINT “punctuation”

IF input BEGINS WITH “//”

PRINT “comment line”

IF input IS an OPERATOR

PRINT “operator”

RETURN 0
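The word-classification steps above (STEPs 3 to 7) can be sketched in C as follows. This is a minimal sketch, not the lab program itself: it assumes the keyword list is held in an in-memory array (the hypothetical KEYWORDS) instead of being read from keyword.txt, and the helper names next_word() and classify_word() are illustrative. Operators, brackets, and literals are simply skipped here.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Assumption: keywords are kept in memory instead of being read from keyword.txt. */
static const char *const KEYWORDS[] = {
    "define", "int", "main", "float", "char",
    "printf", "return", "if", "while", "for"
};

/* STEPs 5-7: label a word that begins with a digit or a letter. */
const char *classify_word(const char *word)
{
    if (isdigit((unsigned char)word[0]))
        return "numeric literal";
    for (size_t i = 0; i < sizeof KEYWORDS / sizeof KEYWORDS[0]; i++)
        if (strcmp(word, KEYWORDS[i]) == 0)
            return "keyword";
    return "identifier";
}

/* STEPs 3-4: copy the next word or number from *src into buf (cap should hold
   the longest word), skipping any other characters first; returns 0 at end of input. */
int next_word(const char **src, char *buf, size_t cap)
{
    const char *p = *src;
    size_t n = 0;

    while (*p && !isalnum((unsigned char)*p) && *p != '_')
        p++;                                /* skip spaces, operators, punctuation */

    int numeric = isdigit((unsigned char)*p) != 0;  /* '.' allowed only in numbers */
    while (*p && (isalnum((unsigned char)*p) || *p == '_' || (numeric && *p == '.'))) {
        if (n + 1 < cap)
            buf[n++] = *p;                  /* truncate silently if buf is too small */
        p++;
    }
    buf[n] = '\0';
    *src = p;
    return n > 0;
}
```

For example, repeatedly calling next_word() on "float a = 61.37;" yields float (keyword), a (identifier), and 61.37 (numeric literal), after which it returns 0.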

Explanation:

A compiler is responsible for converting a high-level language into machine language. This involves several phases, and lexical analysis is the first of them. The lexical analyzer is the part of the compiler that identifies the tokens of the program and sends them to the syntax analyzer.

A token is the smallest meaningful unit of the code: a keyword, identifier, constant, string literal, or symbol. The process of forming tokens from an input stream of characters is called tokenization.

Different types of tokens in C:

Keywords: for, if, int, etc.

Identifiers: variables, functions, etc.

Separators: ‘,’, ‘;’, etc.

Operators: ‘-’, ‘=’, ‘++’, etc.

Example 1:

Consider the statement Sum=3+2; in the C programming language:

Lexeme    Token category
Sum       identifier
=         assignment operator
3         integer
+         addition operator
2         integer
;         end of the statement

Example 2:

Consider the expression if ( x > 3.1 in the C programming language:

Lexeme    Token category
if        keyword
(         open parenthesis
x         identifier
>         relational operator
3.1       floating-point constant

Sample Input and Output:

Input:

// demo program- source.txt

# define N 30

int main()

{

float a = 61.37;

char str1 = 's';

printf("%c", str1);

return 0;

}

Keyword.txt

define int main float char printf return if while for

Operator.txt

+ - * /

= % ==

> < >= <=

Output:

# is a preprocessor symbol
define is a keyword
N is an identifier
30 is a numeric literal
int is a keyword
main is a keyword
( is an open parenthesis
) is a close parenthesis
{ is an open brace
float is a keyword
a is an identifier
= is an operator
61.37 is a numeric literal
; is end of statement
char is a keyword
str1 is an identifier
= is an operator
s is a character literal
printf is a keyword
( is an open parenthesis
"%c" is a string literal
, is a punctuation
str1 is an identifier
) is a close parenthesis
; is end of statement
return is a keyword
0 is a numeric literal
} is a close brace

Result:

Thus, the program to implement lexical analyzer using C was created and its output was verified.


Ex. No.: 2

To Ignore Redundant Spaces, Tabs, and New Lines Using C Program

Aim:

To write a program in C to ignore redundant spaces, tabs, and new lines.

Algorithm:

STEP 1: Read the source file.

STEP 2: Recognize numbers using isdigit() function

STEP 3: Recognize keywords and Identifiers using isalpha() function

STEP 4: Write a function to ignore redundant spaces, tabs, and newlines.

STEP 5: Close the files and print the output.

STEP 6: End.

Pseudo code:

BEGIN

IMPORT libraries

DECLARE input variable

DECLARE a flag variable previous, SET TO FALSE

READ source FILE

WHILE next character FROM input IS NOT end of file

IF character EQUALS space OR tab OR newline

IF previous IS FALSE

PRINT space

END IF

SET previous TO TRUE

ELSE

PRINT character

SET previous TO FALSE

END IF

END WHILE

END


Explanation:

In C programming, white space refers to characters such as space, tab, and newline. These characters are mainly used for formatting and for separating tokens; beyond that, they are not significant in the logic of a program. However, redundant white space can make reading and analyzing the code more difficult. A scanner reads the source program one character at a time, carving it into a sequence of atomic units called tokens.

The lexical analyzer is the first phase of a compiler. Its main task is to read the input characters and produce as output a sequence of tokens that the parser uses for syntax analysis. Upon receiving a “get next token” command from the parser, the lexical analyzer reads input characters until it can identify the next token.

The lexical analyzer also performs secondary tasks. One such task is stripping comments and white space (blanks, tabs, and newline characters) out of the source program. Another is correlating error messages from the compiler with the source program. Removing redundant white space can also make code more compact and consistent, which makes it easier to read and modify over time.

Sample Input and Output:

Input:

Enter the C program:

int main()

{

int a=10,20;

char ch;

float f;

}

Output:

The numbers in the program are: 10 20

The keywords and identifiers are:

int is a keyword

main is an identifier


int is a keyword

a is an identifier

char is a keyword

ch is an identifier

float is a keyword

f is an identifier

Special characters are ( ) { = , ; ; ; }

Total no. of lines are:5

Result:

Thus, the program to ignore redundant spaces, tabs and new lines was executed successfully.


Ex. No.: 3

Lexical Analyzer Using LEX Tool

Aim:

To write a program to implement Lexical Analyzer using LEX tool.

Algorithm:

STEP 1: Define and include the necessary declarations and regular definitions.

STEP 2: Define the translation rules section for keywords, identifiers, constants, operators, and literal strings.

STEP 3: Read the input from a file.

STEP 4: The Lex tool compares the input with the patterns defined in the translation rules section.

STEP 5: If any of the patterns matches the input, the respective action is performed.

STEP 6: End.

Pseudo code:

BEGIN

IMPORT headers

DECLARE lex symbols

DECLARE id

READ input FROM file

CALL yylex()

DEFINE translation rules WITH patterns AND actions

COMPARE using lex tool

DEFINE auxiliary function()

SET integer expression

SET float expression

SET operator expression

SET identifier expression

SET punctuation expression


SET preprocessor expression

SET string literal expression

IF pattern MATCHES

PERFORM actions

PRINT output

END

Explanation:

LEX is a tool that automatically generates a lexical analyzer (a finite automaton). It takes a LEX source program as its input and produces a lexical analyzer as its output. The lexical analyzer then converts the input string entered by the user into tokens.

In programs with structured input, two tasks occur over and over: dividing the input into meaningful units, and then discovering the relationships among the units. For a C program, the units are variable names, constants, and strings. This division into units (called tokens) is known as lexical analysis, or lexing. LEX helps by taking a set of descriptions of possible tokens and producing a routine called a lexical analyzer, lexer, or scanner.

Lex file format

A Lex program is separated into three sections by %% delimiters. The format of Lex source is

as follows:

{ definitions }

%%

{ rules }

%%

{ user subroutines }

• Definitions include declarations of constants, variables, and regular definitions.

• Rules are statements of the form p1 {action1} p2 {action2} ... pn {actionn}, where each pi is a regular expression and actioni describes what the lexical analyzer should do when pattern pi matches a lexeme.

• User subroutines are auxiliary procedures needed by the actions.

• The user subroutines can be compiled separately and loaded with the lexical analyzer.

Sample Lex program:

Lex program for Sum of two numbers

%{
#include <stdio.h>
%}
%%
%%
int main(void)
{
    int a = 5, b = 10;
    printf("sum=%d\n", a + b);
    return 0;
}

Commands to execute:

$ lex filename.l

// will create a file called lex.yy.c

$ cc lex.yy.c -lfl // compile and link with the flex library

$ ./a.out

Sample Input and Output:

Input:

source.c

# include<stdio.h>

void main()

{

int a;

a = 10 + 2;

printf("%d", a);

}

Output:

# include<stdio.h> is a preprocessor
A keyword: void
A keyword: main
( is a punctuation
) is a punctuation
{ is a punctuation
A keyword: int
An identifier: a
; is a punctuation
An identifier: a
An operator: =
An integer: 10
An operator: +
An integer: 2
; is a punctuation
A keyword: printf
( is a punctuation
"%d" is a literal string
, is a punctuation
An identifier: a
) is a punctuation
; is a punctuation
} is a punctuation

Result:

Thus, Program to implement Lexical Analyzer using ‘lex’ tool was executed and verified.


Ex. No.: 4

Predictive Parser

Aim:

To write a program to implement a predictive parser.

Algorithm:

STEP 1: Initialize stack and push starting symbol onto it.

STEP 2: Initialize index for input string

STEP 3: Repeat until stack is empty

STEP 4: Get top of stack

STEP 5: If symbol is a terminal, match it with current input token

STEP 6: If the symbols match, move to the next input token

STEP 7: If the symbols don't match, the input is not in the language

STEP 8: If the symbol is a non-terminal, use the prediction table to get the production to use: production = predictionTable[symbol][input[inputIndex]]

STEP 9: If a production is found, push its symbols onto the stack in reverse order

STEP 10: If no production is found, the input is not in the language

STEP 11: If the stack is empty and the input has been fully processed, the input is in the language: return "Input is in the language"; otherwise, return "Error: Input not in language"

Pseudo code:

BEGIN

IMPORT headers

INITIALIZE stack AND PUSH start symbol

INITIALIZE index

REPEAT until stack IS EMPTY

GET top OF stack

IF symbol EQUALS terminal

MATCH WITH current token

IF symbol EQUALS match

MOVE TO next token

IF symbol NOT MATCHED


RETURN “input is not in the language”

IF symbol IS a non-terminal

SET production = predictionTable[symbol][input[inputIndex]]

IF production FOUND

PUSH ONTO stack IN reverse order

IF production NOT FOUND

RETURN “input is not in the language”

IF stack IS EMPTY

RETURN “Input is in the language”

ELSE

RETURN “Error: Input not in language”

Explanation:

The parser is the phase of the compiler that takes a token string as input and, with the help of the grammar, converts it into the corresponding Intermediate Representation (IR). The parser is also known as the Syntax Analyzer.

A predictive parser is a top-down parser that never needs to backtrack: at each step, the production to expand is chosen by looking at the next input (terminal) symbol. It can be written as a recursive-descent parser without backtracking, or driven by an explicit stack and a parsing table, as in the algorithm above.

Example problem:

Construct a predictive (LL(1)) parser for the grammar:

E -> E + T | T

T -> T * F | F

F -> (E) | id

Solution:

Step 1: Remove left recursion

E -> TE’

E’ -> +TE’ | ε

T -> FT’

T’ -> *FT’ | ε

F -> (E) | id

Step 2: Find FIRST( ) and FOLLOW( )

FIRST(E) = { (, id }

FIRST(T) = { (, id }

FIRST(F) = { (, id }

FIRST(E’) = { +, ε }

FIRST(T’) = { *, ε }

FOLLOW(E) = { ), $ }

FOLLOW(E’) = { ), $ }

FOLLOW(T) = { +, ), $ }

FOLLOW(T’) = { +, ), $ }

FOLLOW(F) = { +, *, ), $ }

Step 3: Construct the predictive parsing table:

Non-terminal | id      | +         | *         | (       | )      | $
E            | E->TE’  |           |           | E->TE’  |        |
E’           |         | E’->+TE’  |           |         | E’->ε  | E’->ε
T            | T->FT’  |           |           | T->FT’  |        |
T’           |         | T’->ε     | T’->*FT’  |         | T’->ε  | T’->ε
F            | F->id   |           |           | F->(E)  |        |
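A table-driven predictive parser for this grammar can be sketched in C as below, following STEPs 1 to 11 of the algorithm. Assumptions not taken from the original: each grammar symbol is a single character, with i standing for id, e for E’ and t for T’; the table is hard-coded in the hypothetical function table(), where "" is an ε-production and NULL an empty (error) entry; and the input string must end with $.

```c
#include <string.h>

/* Assumed single-character encoding: 'i' = id, 'e' = E', 't' = T'.
   Returns the right-hand side to push, "" for an epsilon production,
   or NULL for an empty (error) table entry. */
static const char *table(char nonterminal, char lookahead)
{
    switch (nonterminal) {
    case 'E':
        return (lookahead == 'i' || lookahead == '(') ? "Te" : NULL;
    case 'e':
        if (lookahead == '+')
            return "+Te";
        return (lookahead == ')' || lookahead == '$') ? "" : NULL;
    case 'T':
        return (lookahead == 'i' || lookahead == '(') ? "Ft" : NULL;
    case 't':
        if (lookahead == '*')
            return "*Ft";
        return (lookahead == '+' || lookahead == ')' || lookahead == '$') ? "" : NULL;
    case 'F':
        if (lookahead == 'i')
            return "i";
        return lookahead == '(' ? "(E)" : NULL;
    default:
        return NULL;
    }
}

static int is_nonterminal(char c)
{
    return c != '\0' && strchr("EeTtF", c) != NULL;
}

/* Returns 1 if input (which must end with '$') is in the language, else 0. */
int ll1_parse(const char *input)
{
    char stack[128];
    int top = 0;
    size_t ip = 0;

    stack[top++] = '$';
    stack[top++] = 'E';                      /* STEP 1: push the start symbol */
    while (top > 0) {                         /* STEP 3 */
        char symbol = stack[--top];           /* STEP 4: pop the top of the stack */
        char lookahead = input[ip];
        if (!is_nonterminal(symbol)) {        /* STEP 5: terminal or $ */
            if (symbol != lookahead)
                return 0;                     /* STEP 7: mismatch */
            if (symbol == '$')
                return 1;                     /* STEP 11: stack and input both used up */
            ip++;                             /* STEP 6: advance the input */
        } else {
            const char *rhs = table(symbol, lookahead);   /* STEP 8 */
            if (rhs == NULL)
                return 0;                     /* STEP 10: no production */
            size_t n = strlen(rhs);
            if ((size_t)top + n > sizeof stack)
                return 0;                     /* guard against stack overflow */
            for (size_t k = n; k > 0; k--)    /* STEP 9: push in reverse order */
                stack[top++] = rhs[k - 1];
        }
    }
    return 0;
}
```

With this encoding, ll1_parse("i+i*i$") accepts, while ll1_parse("i+*i$") is rejected because the table has no entry for T under *.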

Sample Input and Output:

Input (@ denotes ε):

S->A

A->Bb

A->Cd

B->aB

B->@

C->Cc

C->@

Output:

Predictive parsing table:

--------------------------------------------------------------------------------------------

a

b

c

d

$

--------------------------------------------------------------------------------------------

S

S->A

S->A

S->A

S->A

--------------------------------------------------------------------------------------------

A

A->Bb

A->Bb

A->Cd

A->Cd

--------------------------------------------------------------------------------------------

B

B->aB

B->@

B->@

B->@

--------------------------------------------------------------------------------------------

C

C->@

C->@

C->@

--------------------------------------------------------------------------------------------

Result:

Thus, Program to implement Predictive parser was executed and verified successfully.


Ex. No.: 5

Arithmetic Calculator Using LEX and YACC

Aim:

To write a program to implement an Arithmetic Calculator using LEX and YACC

Algorithm:

Create a lex Program named “sample.l”

STEP 1: Include the necessary library files for token definitions and ‘C’ declarations

STEP 2: Define the rules for regular definitions and patterns

STEP 3: Define the functions invoked in the translation rules

Create a yacc program named “sample.y”

STEP 1: Include the necessary declarations for yacc specifications and ‘C’ headers

STEP 2: Define the translation rules and the input structure specifications for the

grammar rules

STEP 3: Define the functions that are invoked in the rules

STEP 4: Read the input

STEP 5: If the given input matches the defined rules, the yacc tool executes the respective actions.

STEP 6: End.

Pseudo code:

File Name: sample.l

BEGIN

IMPORT libraries

DEFINE rules FOR patterns

DEFINE functions

INVOKE translation rules

END


File Name: sample.y

BEGIN

DECLARE headers

DEFINE translation rules

AND input structure

DECLARE tokens

DEFINE lex AND yacc specifications

CALL yyparse(), WHICH CALLS yylex() FOR each token

CHECK input WITH rules

EXECUTE matching actions

END

Explanation:

Yacc (for “yet another compiler compiler”) is the standard parser generator for the Unix operating system. From a grammar specification, yacc generates a parser written in the C programming language; widely used open-source implementations include Berkeley Yacc and GNU Bison. The name is usually written in lowercase but is occasionally seen as YACC or Yacc.
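Yacc builds an LALR (bottom-up) parser from the grammar, so the following is not what yacc generates. It is a hand-written recursive-descent sketch in C, for comparison, of the arithmetic part of a calculator grammar with the usual precedence (* and / bind tighter than + and -) and left associativity. The function names are hypothetical, error handling is minimal, and the scientific functions (log, tan) from the sample run are not covered.

```c
#include <ctype.h>
#include <stdlib.h>

/* Hand-written recursive-descent evaluator (not yacc output) for
     expr   : expr '+' term | expr '-' term | term
     term   : term '*' factor | term '/' factor | factor
     factor : '(' expr ')' | '-' factor | NUMBER
   Left recursion is replaced by loops, which keeps left associativity. */
static const char *pos;                 /* current position in the input */

static double expr(void);

static void skip_spaces(void)
{
    while (isspace((unsigned char)*pos))
        pos++;
}

static double factor(void)
{
    skip_spaces();
    if (*pos == '(') {
        pos++;
        double value = expr();
        skip_spaces();
        if (*pos == ')')
            pos++;                      /* a missing ')' is silently tolerated */
        return value;
    }
    if (*pos == '-') {
        pos++;
        return -factor();
    }
    char *end;
    double value = strtod(pos, &end);   /* NUMBER; yields 0 if no number is present */
    pos = end;
    return value;
}

static double term(void)
{
    double value = factor();
    for (;;) {
        skip_spaces();
        if (*pos == '*') { pos++; value *= factor(); }
        else if (*pos == '/') { pos++; value /= factor(); }
        else return value;
    }
}

static double expr(void)
{
    double value = term();
    for (;;) {
        skip_spaces();
        if (*pos == '+') { pos++; value += term(); }
        else if (*pos == '-') { pos++; value -= term(); }
        else return value;
    }
}

/* Evaluates an arithmetic expression such as "2 + 3 * 4". */
double evaluate(const char *text)
{
    pos = text;
    return expr();
}
```

In a yacc version, the same precedence would normally be expressed with %left declarations instead of separate term and factor levels.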

How to execute:

$ yacc -d sample.y

$ lex sample.l

$ cc lex.yy.c y.tab.c -ll -ly -lm

$./a.out

Sample Input and Output:

Input:

Enter the Expression: log100

Output:

Answer = 2


Input:

Enter the Expression: tan45

Output:

Answer = 0.999954

Result:

Thus, the Program to implement scientific calculator using LEX and YACC was executed

successfully.


Generate Three Address Code for a C Program Using LEX and YACC

Ex. No.: 6

Aim:

To write a program to generate three address code for a simple program.

Algorithm:

STEP 1: Read the input expression from the user using the fgets() function.

STEP 2: Read and check each character of the given input in order to find the highest-precedence operator.

STEP 3: After finding the highest-precedence operator, assign the subexpression involving that operator to a temporary name and print it.

STEP 4: Similarly, find the next highest-precedence operator and repeat Step 3 until ‘\0’ is reached.

STEP 5: END

Pseudo code:

READ string

READ character y

IF y EQUALS ‘+’ OR ‘-’ OR ‘*’ OR ‘/’ OR ‘%’

IMPLEMENT function compute()

CHECK precedence

ADVANCE pointer

PERFORM arithmetic operation

ASSIGN subexpression TO temp

DECREMENT stpos

INCREMENT endpos

ASSIGN end EQUAL TO start

CONCAT buff, expr, stpos

ASSIGN ‘t’ TO buff[stpos]


COPY expr, buff

REPEAT until ‘\0’ IS reached

END

Explanation:

A compiler can broadly be divided into two phases, based on the way it compiles:

1. Analysis Phase

2. Synthesis Phase

Analysis Phase (Front end of the Compiler):

Known as the front end of the compiler, the analysis phase reads the source program, divides it into its core parts, and checks for lexical, syntax, and semantic errors. It generates an intermediate representation of the source program and a symbol table, which are fed to the synthesis phase as input.

For example, a C compiler front end takes an input file containing C statements and translates it into some intermediate form; a compiler back end then converts that form into a specific machine language.

Three-address code is a type of intermediate code that is easy to generate and can easily be converted to machine code.

Each instruction uses at most three addresses and one operator, and the value computed by each instruction is stored in a temporary variable generated by the compiler. The sequence of three-address instructions fixes the order in which the operations are evaluated.

General representation: a = b op c

where a, b, and c are operands such as names, constants, or compiler-generated temporaries, and op is the operator.


Example 1:

Source statement: x = a + b* c + d

Three address code:

t1 = b* c

t2 = a + t1

x = t2 + d

Example 2:

Source statement: (a+b)*(c+d) -(a+b+c)

Three address code:

t1 = a + b

t2 = c + d

t3 = t1 * t2

t4= a + b

t5 = t4 + c

t6 = t3 - t5

Sample Input and Output:

Input:

Enter the Expression

a=a+(3-(b-2)*4)

Output:

t1 = b - 2

t2 = t1 * 4

t3 = 3 - t2

t4 = a + t3

a = t4
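The expression-splitting idea can be sketched in C with a small recursive-descent translator that emits one three-address instruction per operator, innermost and highest-precedence first. This is an illustrative sketch, not the lab program: it assumes input of the form name = expression with alphanumeric operands of at most 15 characters and the operators + - * / %, and the helper names and the fixed 1 KB output buffer are hypothetical. For the sample input above it produces the same five lines.

```c
#include <ctype.h>
#include <stdio.h>
#include <string.h>

#define NAME_LEN 16                      /* assumed maximum operand length + 1 */

static const char *src;                  /* current input position */
static int temp_count;                   /* last temporary number used (t1, t2, ...) */
static char out[1024];                   /* generated three-address code */

static void expr(char *result);

/* Appends one instruction "dst = lhs op rhs" to out. */
static void emit(const char *dst, const char *lhs, char op, const char *rhs)
{
    size_t used = strlen(out);
    snprintf(out + used, sizeof out - used, "%s = %s %c %s\n", dst, lhs, op, rhs);
}

static void skip_spaces(void)
{
    while (*src == ' ')
        src++;
}

/* Operand: a name, a number, or a parenthesised expression. */
static void primary(char *result)
{
    size_t n = 0;
    skip_spaces();
    if (*src == '(') {
        src++;
        expr(result);                    /* innermost parentheses are emitted first */
        skip_spaces();
        if (*src == ')')
            src++;
        return;
    }
    while (isalnum((unsigned char)*src) && n < NAME_LEN - 1)
        result[n++] = *src++;
    result[n] = '\0';
}

/* Handles one precedence level: operand (op operand)*, left to right. */
static void binary(char *result, const char *ops, void (*operand)(char *))
{
    char lhs[NAME_LEN], rhs[NAME_LEN], temp[NAME_LEN];
    operand(lhs);
    for (;;) {
        skip_spaces();
        if (*src == '\0' || strchr(ops, *src) == NULL)
            break;
        char op = *src++;
        operand(rhs);
        snprintf(temp, sizeof temp, "t%d", ++temp_count);
        emit(temp, lhs, op, rhs);        /* assign the subexpression to a temporary */
        strcpy(lhs, temp);
    }
    strcpy(result, lhs);
}

static void term(char *result) { binary(result, "*/%", primary); }
static void expr(char *result) { binary(result, "+-", term); }

/* Translates "name = expression" and returns the generated code. */
const char *gen_tac(const char *statement)
{
    char target[NAME_LEN], value[NAME_LEN];
    src = statement;
    temp_count = 0;
    out[0] = '\0';
    primary(target);                     /* left-hand side */
    while (*src == ' ' || *src == '=')
        src++;
    expr(value);
    size_t used = strlen(out);
    snprintf(out + used, sizeof out - used, "%s = %s\n", target, value);
    return out;
}
```

Note that this sketch always ends with a plain copy (x = t3 for x = a + b*c + d), whereas Example 1 above folds the last operation into the assignment (x = t2 + d).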

Result:

Thus, the program to implement the Front end of a Compiler was successfully executed.


Code Optimization Technique – Constant Folding

Ex. No.: 7

Aim:

To write a program to implement the constant folding code optimization technique.

Algorithm:

STEP 1: Identify the tokens in the program and store them in a separate array.

STEP 2: Read through the tokens to identify any assignment of a constant to a variable.

STEP 3: Scan through the tokens to find any occurrence, in an arithmetic expression, of a variable that has been assigned a constant.

STEP 4: Replace the occurrence of the token with the constant.

STEP 5: Finally write the set of tokens after replacement of the variable with the constant.

Pseudo Code:

//Constant folding

BEGIN

INCLUDE header files

DEFINE struct with variables

READ input

GET tokens by get_tokens

OPEN input file inside MAIN

WHILE true

READ char by getc and store in ch

WHILE ch true

BUFFER ++

ELSE break

DEFINE readinput


DECLARE variables

COPY buffer to temp by strcpy

READ tokens and compare

DEFINE gettokens

READ char in token

AND

COMPARE with DEFINED operator

IF match replace

END
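The replacement steps of the algorithm can be sketched in C over an array of token strings. Strictly speaking, substituting a variable's known constant value is constant propagation; folding the resulting constant expressions would be a follow-up pass. The function name propagate_constants(), the 32-entry table, and the rule that a later non-constant assignment forgets the variable are assumptions of this sketch.

```c
#include <ctype.h>
#include <string.h>

#define MAX_CONSTANTS 32                 /* assumed table capacity */

/* A variable currently known to hold a constant value. */
struct constant {
    const char *name;
    const char *value;
};

static int is_number(const char *s) { return isdigit((unsigned char)s[0]); }
static int is_ident(const char *s) { return isalpha((unsigned char)s[0]) || s[0] == '_'; }

/* Replaces, in place, every use of a variable that was assigned a
   constant (pattern: name = number followed by ',' or ';'). */
void propagate_constants(const char *tok[], int n)
{
    struct constant table[MAX_CONSTANTS];
    int count = 0;

    for (int i = 0; i < n; i++) {
        if (!is_ident(tok[i]))
            continue;
        int k = 0;                                   /* look the name up */
        while (k < count && strcmp(table[k].name, tok[i]) != 0)
            k++;
        if (i + 1 < n && strcmp(tok[i + 1], "=") == 0) {
            if (k < count)                           /* reassigned: forget old value */
                table[k] = table[--count];
            if (i + 3 < n && is_number(tok[i + 2]) &&
                (strcmp(tok[i + 3], ",") == 0 || strcmp(tok[i + 3], ";") == 0) &&
                count < MAX_CONSTANTS) {
                table[count].name = tok[i];          /* STEP 2: record name = constant */
                table[count].value = tok[i + 2];
                count++;
                i += 2;                              /* skip "= constant" */
            }
        } else if (k < count) {
            tok[i] = table[k].value;                 /* STEPs 3-4: substitute the constant */
        }
    }
}
```

Given the tokens of "float pi = 3.14 , r , a ; a = pi * r * r ;", the pi in the second statement becomes 3.14, as in the sample output, while the declaration itself is left unchanged.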

Explanation:

Code optimization is a program transformation that improves the intermediate code so that the program uses less memory, takes less CPU time, and runs faster. It is essential for improving the performance and efficiency of the generated code; its goal is to deliver efficient target code, for example by reducing the number of instructions executed.

Code Optimization Techniques:


Examples:

Compile Time Evaluation:

x = 12.4

y = x/2.3

Evaluate x/2.3 as 12.4/2.3 at compile time.


Constant Propagation:

x = 12.4

y = x/2.3

Evaluates x/2.3 as 12.4/2.3 at compile time.

Copy Propagation:

c=a*b

x=a

till

d=x*b+4

//After Optimization

c=a*b

x=a

till

d=a*b+4

Dead Code Elimination:

c=a*b


x=a

till

d=a*b+4

//After elimination:

c=a*b

till

d=a*b+4

Unreachable Code Elimination:

#include <iostream>

using namespace std;

int main()

{

int num;

num=10;

cout << "GFG!";

return 0;

cout << num; //unreachable code

}

//after elimination of unreachable code

int main()

{

int num;

num=10;

cout << "GFG!";

return 0;

}

Induction Variable and Strength Reduction:

i = 1;
while (i < 10)
{
    y = i * 4;
    i = i + 1;
}

//After reduction (assuming i is not used after the loop)

t = 4;
while (t < 40)
{
    y = t;
    t = t + 4;
}

Sample Input and Output:

Input:

main()

{

float pi=3.14,r,a;

scanf("%f",&r);

a=pi*r*r;

printf("a = %f", a);

return 0;

}

Output:

$ ./a.out

main()

{

float pi = 3.14 , r , a ;

scanf("%f" , &r) ;

a = 3.14 * r * r ;

printf("a = %f" , a) ;

return 0 ;

}

Result:

Thus, the program to implement Constant folding Code Optimization is executed and verified.


Machine Code Generation – Back End Compiler

Ex. No.: 8

Aim:

To write a program to implement the Back end of the compiler.

Algorithm:

STEP 1: Read the intermediate codes as input from the user.

STEP 2: Read and check each intermediate code in order to find the operator involved in it.

STEP 3: Based on the operator, print the mnemonic of the corresponding assembly instruction; e.g., if the operator is ‘+’, print the mnemonic ADD.

STEP 4: Similarly do steps 2 & 3 for all the intermediate codes given.

STEP 5: END.

Pseudo code:

BEGIN

DEFINE function isopr(c)

IF c EQUALS ‘+’ OR ‘-’ OR ‘*’ OR ‘/’

THEN RETURN 1

ELSE

RETURN 0

ASSIGN string TO tmp

IF tmp[0] IS a DIGIT

PRINT newline PLUS “MOV #” CONCAT tmp

CONCAT “, R” WITH rno1

ELSE IF tmp[0] EQUALS ‘t’

THEN

CALL function addtoreg AND RETURN rno2

PRINT newline PLUS “MOV R”

CONCAT rno1 AND “, R”

CONCAT rno2

ELSE

PRINT newline PLUS “MOV ” CONCAT tmp

CONCAT “, R” WITH rno1

END IF

END

Explanation:

A compiler can broadly be divided into two phases, based on the way it compiles:

1. Analysis Phase

2. Synthesis Phase

Synthesis Phase (Back End of the Compiler):

Known as the back end of the compiler, the synthesis phase generates the target program with the help of the intermediate representation of the source code and the symbol table.

A compiler back end takes the intermediate representation and produces object code. For example, a C compiler front end takes an input file containing C statements and translates it into some intermediate form; the back end then converts that form into a specific machine language.

Example:

x = a + b * 50

Intermediate Code (id1, id2, and id3 stand for x, a, and b):

temp1 = inttoreal(50)

temp2 = id3 * temp1

temp3 = id2 + temp2

id1 = temp3

Machine Code:

MOVF id3, R2

MULF #50.0, R2

MOVF id2, R1

ADDF R2, R1

MOVF R1, id1

Sample Input and Output:

Input:

Enter the number of Intermediate Code Entries : 3

Enter the Intermediate Code

t1 = a + b

t2 = 25 * t1

ans = t2

Output:

MOV a, R0

ADD b, R0

MOV #25, R1

MUL R0,R1

MOV R1, ans
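The operator-to-mnemonic translation can be sketched in C as follows. Assumptions of this sketch (not from the lab program): every intermediate code line has the form dst = x op y or dst = x with tokens separated by spaces, names starting with t are temporaries kept in registers (mirroring the tmp[0] == ‘t’ test in the pseudo code), each new result gets the next free register, and at most eight registers are used. gen_code() and its helpers are hypothetical names.

```c
#include <ctype.h>
#include <stdio.h>
#include <string.h>

#define MAX_REGS 8                        /* assumed number of registers R0..R7 */

static char reg_of[MAX_REGS][16];         /* temporary currently held in Rk */
static int next_reg;                      /* next free register */

static const char *mnemonic(char op)
{
    switch (op) {
    case '+': return "ADD";
    case '-': return "SUB";
    case '*': return "MUL";
    case '/': return "DIV";
    default:  return "???";
    }
}

/* Formats an operand: #constant, Rk for a temporary in a register, or the name itself. */
static void operand(const char *name, char *buf, size_t cap)
{
    if (isdigit((unsigned char)name[0])) {
        snprintf(buf, cap, "#%s", name);
        return;
    }
    for (int k = 0; k < next_reg; k++)
        if (strcmp(reg_of[k], name) == 0) {
            snprintf(buf, cap, "R%d", k);
            return;
        }
    snprintf(buf, cap, "%s", name);
}

/* Translates one line "dst = x op y" or "dst = x", appending assembly to out. */
void gen_code(const char *line, char *out, size_t cap)
{
    char dst[16], x[16], y[16], ox[24], oy[24], op = 0;
    size_t used = strlen(out);
    int fields = sscanf(line, "%15s = %15s %c %15s", dst, x, &op, y);

    if (fields < 2)
        return;                                        /* malformed line: ignored */
    operand(x, ox, sizeof ox);
    if (fields == 2) {                                 /* copy: dst = x */
        snprintf(out + used, cap - used, "MOV %s, %s\n", ox, dst);
        return;
    }
    if (fields != 4 || next_reg == MAX_REGS)
        return;                                        /* incomplete line or no free register */
    operand(y, oy, sizeof oy);
    int r = next_reg++;                                /* fresh register for the result */
    snprintf(out + used, cap - used, "MOV %s, R%d\n%s %s, R%d\n", ox, r, mnemonic(op), oy, r);
    if (dst[0] == 't') {
        snprintf(reg_of[r], sizeof reg_of[r], "%s", dst);   /* temporary stays in Rr */
    } else {
        used = strlen(out);
        snprintf(out + used, cap - used, "MOV R%d, %s\n", r, dst);
    }
}
```

Calling gen_code() on the three sample lines in order, with out initialised to an empty string, reproduces the sample output above, apart from the spacing in "MUL R0, R1".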

Result:

Thus, the program to implement the back end of a Compiler was successfully executed.


Ex. No.: 9

Recursive Descent Parser

Aim:

To write a program to implement a Recursive Descent Parser.

Algorithm:

STEP 1: Read the number of productions.

STEP 2: Read the productions, Start Symbol and input string to be parsed.

STEP 3: Check each character of the input string against the right-hand side of the start symbol's production.

STEP 4: If a non-terminal is present in the production, substitute one of its alternatives from the remaining productions.

STEP 5: If all the characters of the input string match the right-hand-side symbols of the productions, print that parsing has completed successfully.

STEP 6: Otherwise, print that parsing did not complete successfully.

STEP 7: End

Pseudo code:

IMPORT headers

DEFINE TRUE 1

DEFINE FALSE 0

READ number of productions

READ productions, start symbol, string

SEARCH start symbol

PERFORM left factoring

DEFINE function TO parse single symbol

GET NEXT token FROM token stream

IF token MATCHES symbol

THEN MOVE TO next symbol

ELSE

PRINT “Input does not match”

END IF

IF all symbols MATCHED

PRINT “Input is processed successfully”

END IF

END

Explanation:

A Recursive Descent Parser is a top-down parser built from a set of recursive procedures that process the input string. It is straightforward to write in any language that supports recursion. In its general form it may backtrack: in the sample run below, the alternative A → ab fails and the parser backtracks to try A → a. When the grammar is suitable (for example, LL(1)), the first symbol of each alternative's right-hand side uniquely determines which alternative to choose, and no backtracking is needed; this special case is the predictive parser.

The main approach of recursive-descent parsing is to associate each non-terminal with a procedure. The objective of each procedure is to read a sequence of input characters that can be produced by the corresponding non-terminal and return a pointer to the root of the parse tree for that non-terminal. The structure of the procedure is prescribed by the productions for the corresponding non-terminal.

Sample Input and Output:

Input:

Enter the no.of production rules : 3

Enter the production rules

S → cAd

A → ab

A→a

Output:

Enter the starting symbol : S

Enter the input string: cad

Current Production Symbol c

Input: c matches with the Production S → cAd

Current Production Symbol A

A is a non terminal, so expand with A → ab

Current Production Symbol a

Input: a matches with the Production A → ab

Current Production Symbol b

Input: d does not match with Production A → ab

So, Backtrack and find the next alternative

Taking Next Alternative A → a

Current Production Symbol a

Input: a matches with the Production A → a

Current Production Symbol d

Input: d matches with the Production S → cAd

Input is processed successfully
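The sample grammar S → cAd, A → ab | a can be parsed by a hand-written recursive-descent parser in C with one procedure per non-terminal. In this sketch the grammar is hard-coded (the lab program reads the productions at run time), the function names are illustrative, and backtracking is limited to restoring the input position inside A(), which is enough for this grammar but not for grammars in general.

```c
#include <stddef.h>

/* Hard-coded grammar for this sketch:  S -> c A d,   A -> a b | a */
static const char *input;
static size_t pos;

/* Consumes c if it is the next input character. */
static int match(char c)
{
    if (input[pos] == c) {
        pos++;
        return 1;
    }
    return 0;
}

static int A(void)
{
    size_t saved = pos;                 /* remember where A started */
    if (match('a') && match('b'))       /* first alternative: A -> ab */
        return 1;
    pos = saved;                        /* backtrack */
    return match('a');                  /* next alternative: A -> a */
}

static int S(void)
{
    return match('c') && A() && match('d');
}

/* Returns 1 if the whole string is derived from S. */
int rd_parse(const char *text)
{
    input = text;
    pos = 0;
    return S() && input[pos] == '\0';
}
```

For the input cad, A() first tries A → ab, fails on d, restores the position, and succeeds with A → a, mirroring the trace above.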

Result:

Thus, the program to implement Recursive Descent Parser was executed successfully.


Ex. No.: 10

Symbol Table Generation

Aim:

To write a program to generate Symbol Table.

Algorithm:

STEP 1: Initialize an empty symbol table.

STEP 2: Parse the input source code and extract all identifiers (such as variable names,

function names, etc.).

STEP 3: For each identifier, check whether it already exists in the symbol table.

i. If it does, report an error (such as a redeclaration error).

ii. If it does not, add the identifier to the symbol table with its scope level, data type, and initial value (if applicable).

STEP 4: Repeat steps 2-3 for all source code files being compiled.

STEP 5: Return the symbol table for use by subsequent compiler phases.

Pseudo code:

BEGIN

INITIALIZE empty ST

PARSE input

EXTRACT identifiers

IF identifier EXISTS IN ST

REPORT ERROR AS REDECLARATION

ELSE

ADD identifier TO ST

END IF

REPEAT FOR all identifiers

RETURN ST

END

Explanation:

A symbol table is an important data structure created and maintained by the compiler to keep track of the semantics of names: it stores scope and binding information about names, and information about instances of entities such as variable and function names, classes, and objects.

It is built during the lexical and syntax analysis phases. The information is collected by the analysis phases of the compiler and used by the synthesis phases to generate code. The compiler uses it to achieve compile-time efficiency.

Items stored in Symbol table:

Variable names and constants

Procedure and function names

Literal constants and strings

Compiler generated temporaries

Labels in source languages

Information used by the compiler from Symbol table:

Data type and name

Declaring procedures

Offset in storage

For a structure or record, a pointer to the structure table

For parameters, whether they are passed by value or by reference

Number and type of arguments passed to function

Base Address

Example:

main()

{


int counter;

int starPoint;

}

Symbol Table:

Name        Type   Address   Scope
counter     int    0         main
starPoint   int    4         main

Sample Input and Output:

Input:

Enter the number of symbols: 4

Enter the symbol names:

x

y

z

s

Output:

Symbol table:

Name   Address
x      0
y      4
z      8
s      12
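A minimal symbol table following the algorithm above can be sketched in C. The entry layout (name, type, address, scope), the fixed capacity of 64 entries, and the function names st_lookup() and st_insert() are assumptions of this sketch. Addresses are assigned by adding each symbol's size, which gives 0, 4, 8, 12 for four 4-byte ints as in the sample output. Redeclaration is checked only within the same scope.

```c
#include <stdio.h>
#include <string.h>

#define MAX_SYMBOLS 64                    /* assumed table capacity */

struct symbol {
    char name[32];
    char type[16];
    int address;                          /* byte offset from the start of storage */
    char scope[32];
};

struct symtab {
    struct symbol entries[MAX_SYMBOLS];
    int count;
    int next_address;
};

/* Returns the index of name declared in scope, or -1 if absent. */
int st_lookup(const struct symtab *st, const char *name, const char *scope)
{
    for (int i = 0; i < st->count; i++)
        if (strcmp(st->entries[i].name, name) == 0 &&
            strcmp(st->entries[i].scope, scope) == 0)
            return i;
    return -1;
}

/* STEP 3: adds a new identifier; returns its index, or -1 on a
   redeclaration in the same scope or when the table is full. */
int st_insert(struct symtab *st, const char *name, const char *type,
              int size, const char *scope)
{
    if (st_lookup(st, name, scope) != -1) {
        fprintf(stderr, "error: redeclaration of '%s'\n", name);
        return -1;
    }
    if (st->count == MAX_SYMBOLS)
        return -1;
    struct symbol *s = &st->entries[st->count];
    snprintf(s->name, sizeof s->name, "%s", name);
    snprintf(s->type, sizeof s->type, "%s", type);
    snprintf(s->scope, sizeof s->scope, "%s", scope);
    s->address = st->next_address;
    st->next_address += size;             /* next symbol starts after this one */
    return st->count++;
}
```

A zero-initialised struct symtab (for example, a static one) is an empty table, matching STEP 1.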

Result:

Thus, the program to generate symbol table was executed successfully.
