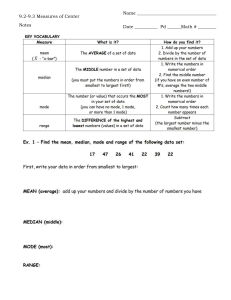

Topic 2 Turning Numbers into Information

Key Objectives for Topic 2
• Understanding the importance of standardizing data
• Capturing the main features of a big set of data - central tendency and spread
• Understanding the pitfalls with various measures of central tendency and spread in data
• Using Excel to produce basic visualisations of numerical data - frequency distributions, histograms, box & whisker
• Using Excel to calculate probabilities from the normal distribution

Remember the different types of data we introduced in Topic 1: Numerical Data and Categorical Data. Topic 1 explored Categorical Data, and here we discuss Numerical Data. What we are most interested in in this section is two key aspects of the data:
1. Some way of measuring where the data is centred
2. Some way of measuring how spread out it is

But we also need to think about whether the data is shown with the right units for comparison. Maybe it needs to be standardised first, and that is where we will start.

2.1 Standardising Data

Often an important issue in exploring data is to make sure that data are comparable. This may require scaling the data in some particular way so that data from different sources can be compared.

A good example of this is carbon dioxide (CO2) emissions. In Figure 2.1, the first two columns show the total CO2 emissions for the 20 countries which have the highest emissions. China is at the top of the list, followed by the United States, India and so forth. Australia comes in at 15th place.

While it may be of interest to examine total emissions, depending upon the precise question that is being addressed it will often be more meaningful to normalize the data by population. This makes the quantity of emissions more comparable across countries. China has the largest emissions in large part because it has a very large population. However, each Chinese person's emissions are actually relatively low.
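As a rough sketch of what normalising by population does, the Python snippet below ranks two countries by total emissions and then by emissions per person. The country names and all the numbers are hypothetical, invented purely for illustration; they are not the figures from Figure 2.1.

```python
# Hypothetical totals and populations, invented for illustration only.
countries = {
    "Country A": {"emissions_tonnes": 9_000_000_000, "population": 1_400_000_000},
    "Country B": {"emissions_tonnes": 100_000_000, "population": 2_500_000},
}

# Per capita emissions: total emissions divided by population.
per_capita = {
    name: data["emissions_tonnes"] / data["population"]
    for name, data in countries.items()
}

# Rank from highest to lowest on each measure.
ranked_total = sorted(
    countries, key=lambda name: countries[name]["emissions_tonnes"], reverse=True
)
ranked_per_capita = sorted(per_capita, key=per_capita.get, reverse=True)

print("By total:     ", ranked_total)
print("By per capita:", ranked_per_capita)
```

In this made-up example the country with the larger total is not the one with the larger per-person figure, which is the same kind of reversal described for the real data.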
If we calculate CO2 emissions per capita then we get the data in the two right hand columns of the table. We can see that this tells quite a different story. China is no longer even on this list: the country's per capita emissions do not place it in the top 20 countries. Qatar heads the list along with some smaller countries: Curacao, Trinidad and Tobago, and Kuwait. The US and Australia rank highly on this table, indicating that not only do they have high total emissions but their emissions per person are also high.

There are many different ways in which we may want to scale or adjust data in order to better understand it or prepare it for analysis. Some of these include relative measures such as:
• percentages
• proportions
• rates of return
• per person
• per hectare
• per employee
• per dollar invested

Adjusting for Inflation

A particularly important way of scaling data, and one that we will now spend some time discussing, is to adjust for inflation. This is relevant for data which is measured across time as monetary values. We often need to correct for the effects of inflation, or put differently, the change in the value of money across time. For example, $1 in 1990 is worth more (in the sense that you could buy more goods and services with it) than $1 is now.

Put differently, suppose you were asked to choose between two options: either being gifted $100 now or $100 in 10 years' time. Most people would choose the first option, because prices tend to rise over time and so in 10 years' time $100 will be worth less than it is now.

This is a really important observation because a lot of series are measured in dollar values. One of the most important is Gross Domestic Product (GDP). This measures the total value of all goods and services produced in the economy in a given period. In Figure 2.2, Australia's GDP per capita is shown (we have divided GDP by the population to make it more meaningful, along the lines of the previous discussion).
This shows a massive rise in nominal GDP per capita. It has risen from around $25,000 in 1990 to almost $80,000 in 2018. This is a rise of around 3 times. But does this mean we are 3 times wealthier than in 1990? No, because prices also rose over this period. It turns out that prices have more than doubled over that period, so most of the increase in GDP has just been keeping up with price increases.

The Statistics Office collects a lot of information about prices each quarter, and constructs what is called a “Price Index”, which tells us about the change in prices from one quarter to the next. We won’t go into the details of how that price index is constructed. There are a few of them, but the most common is the “Consumer Price Index” (CPI), which tracks average prices for the everyday goods and services people buy and is used to calculate the annual inflation rate.

Figure 2.2: Australia's Nominal and Real GDP Per Capita

In order to standardise the GDP data to allow for price increases over time, we take the value of GDP in a given quarter and divide it by the price index for that quarter. This removes the effect of inflation from the GDP value. Because the price index is set to 100 in the base period, we will use the formula:

\[ \text{Real GDP} = \frac{\text{Nominal GDP}}{\text{Price Index}} \times 100 \]

Now look at the “real” GDP values in Figure 2.2: real GDP per capita has risen from $25,000 in 1990 to about $40,000 in 2018. This is a much less spectacular rise than we first thought, because now we have removed the effect of inflation. GDP per capita has increased, but it has less than doubled over 28 years.

The standardisation of data to remove the effect of inflation is a common task. We will do it when looking at wages, share prices and any monetary measure that evolves over time. Here is some of the jargon people might use to describe the variables used in these situations.

Nominal/Actual/Current Prices: the $ value of a series as actually measured at each point in time.
Real/Constant Prices: the value a series would have taken if prices remained fixed at some point in history, the 'base' period (this is March 1990 in Figure 2.2).

Price Index (e.g. Consumer Price Index (CPI)): a weighted average of prices of goods and services, indexed to 100 in the 'base' period (this is 1990 in Figure 2.2).

2.2 Where is the Centre of the Data?

The most important way to summarise numerical data is to calculate some kind of measure of average or typical value. What are our options?

Mean: (often referred to as the 'average'). This is calculated using the formula below:

\[ \bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i = \frac{X_1 + X_2 + \dots + X_n}{n} \]

It is worthwhile getting a bit familiar with this sort of notation as it will come up quite often in the unit. We use \(\bar{X}\) to denote the value of the mean we are calculating. The term in the middle, i.e. between the two equals signs, uses summation notation to write the process of calculating the average compactly. What the summation notation means is reflected in the expression on the right hand side. That is, there are n observations in our data. We think of each of our n observations as being indexed by i = 1, 2, . . . , n. So the first observation can be denoted by \(X_1\), the second by \(X_2\) and so forth up to the last observation \(X_n\). The formula tells us these are summed and then divided by the number of observations to calculate the average.

In Excel: You can calculate the mean by entering the following formula: =AVERAGE(A2:A5086). Here the 'AVERAGE()' part is the Excel function and 'A2:A5086' denotes the particular set of cells that you want to average. The outcome of this formula in Excel is $33,161.

When does the mean not provide a suitable measure of the centre of a set of data? The problem is that the mean can be influenced by a few extreme values that are not at all typical of the rest of the data. For example, a few really expensive houses in your city make the average house price quite high, higher than the price most houses actually sell for.
The mean overstates where the “centre” of most of the data is located.

Median: Another measure of the central tendency of the data is the median. The median is the number such that 50% of values are equal to or higher than the median, and 50% are equal to or lower. In other words, the median is the 'middle' value or 'typical' value.

In Excel: the median is calculated as: =MEDIAN(A2:A5086). In the case of our data this gives $25,000.

The median has an advantage over the mean in that it is not affected by a few extreme values. It does a good job of telling us what the 'typical' income of a household is in that particular suburb. But the median can also be misleading: it takes no account of how the data is distributed around the middle.

e.g. Consider 5 housing properties that are up for sale in Clayton with prices at $1,270,000; $1,320,000; $1,460,000; $1,470,000 and $1,480,000. In Wantirna South, 5 similar housing properties up for sale are priced at $1,450,000; $1,450,000; $1,460,000; $1,850,000 and $2,000,000. Both suburbs have a median price of $1,460,000, so we would say a 'typical' housing property has the same price in each suburb. But clearly, most housing properties in Wantirna South are more costly than those in Clayton. The median does not give the full picture. Information about the range of property prices within each suburb does not enter into the computation of the median.

Mode: This is the most commonly occurring value. Note that if values do not repeat very often, then the mode is often not very interesting. A couple of repeated values may be the mode, but they may be nowhere near the centre of the data. The mode can be calculated in Excel as: =MODE(A2:A5086).

e.g. Say you had data on the number of people in each household in a given suburb. If the mode is 4 it is telling us "the most common household size is 4 people".

2.3 What is the shape of the Data Distribution?

Often the mean will be different from the median. Why? The reason is skewness.
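To make the gap between the mean and the median concrete, here is a minimal Python sketch using the five Clayton and five Wantirna South prices quoted in Section 2.2. Python's standard `statistics` module is used here only as a stand-in for Excel's AVERAGE, MEDIAN and MODE; the variable names are our own.

```python
import statistics

# Property prices quoted in Section 2.2 (dollars).
clayton = [1_270_000, 1_320_000, 1_460_000, 1_470_000, 1_480_000]
wantirna_south = [1_450_000, 1_450_000, 1_460_000, 1_850_000, 2_000_000]

clayton_mean = statistics.mean(clayton)
clayton_median = statistics.median(clayton)

wantirna_mean = statistics.mean(wantirna_south)
wantirna_median = statistics.median(wantirna_south)
wantirna_mode = statistics.mode(wantirna_south)  # most common value

print(f"Clayton:        mean ${clayton_mean:,.0f}, median ${clayton_median:,.0f}")
print(f"Wantirna South: mean ${wantirna_mean:,.0f}, median ${wantirna_median:,.0f}")
print(f"Wantirna South mode: ${wantirna_mode:,.0f}")
```

The two medians are identical, but the Wantirna South mean sits well above its median because of the two expensive properties at the top, while the Clayton mean sits below its median.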
The relationship between the mean and the median will often tell us something about the distribution of the data. When the mean is above the median it is likely that the distribution of values exhibits positive skewness. To understand why, think about how the mean and the median are calculated. The median is the middle value (half the observations lie above it and half below it). The mean, on the other hand, is calculated as the sum of all the values divided by the number of values.

Consider the impact of the highest value on the mean and the median. If the highest value increases (all other values unchanged) then this will increase the sum of all the values and the mean will rise. On the other hand, the median will not change. When the mean is above the median it is often because there are a small number of large values in the right tail of the distribution, i.e. the distribution is positively skewed. This leads to a higher mean relative to the median. In the reverse case, the mean is below the median and this indicates negative skewness. Of course, if the mean is equal to the median then this indicates that the distribution is likely symmetric.

Some examples are shown in Figure 2.3. Figure 2.3a shows a negatively skewed distribution, Figure 2.3b a symmetric distribution and Figure 2.3c a positively skewed distribution, as we found for income.

2.4 How Spread out is the Data?

Now to the task of measuring the dispersion or spread in the values. While the most important characteristic of a set of data is the mean or median (where is it centred?), the next most useful is to know how spread out the data is.

Range

One measure of the spread of the data is the range. This is calculated as the difference between the maximum and the minimum value.

In Excel: We calculate the range as: =MAX(A2:A5086) - MIN(A2:A5086). For our data this yields a value of $601,000. That is, the maximum income value was $601,000 and the minimum value was $0.
The range is the difference between these two.

The range is sometimes a useful number but we must note that it can be distorted by one extreme observation. The range can change significantly if one particularly large observation is added to or removed from our sample. Hence the range is quite an unstable and somewhat unreliable measure of spread in the data. In addition, usually most of our data is clustered fairly closely around the mean and the range does not reflect this; it relies just on the two most extreme values in the dataset.

Variance & Standard Deviation

A better measure of the spread in the data is provided by the variance (\(s^2\)) and the standard deviation (\(s\)), which is simply the square root of the variance. The sample variance is calculated using the formula below.

\[ s^2 = \frac{1}{n-1}\sum_{i=1}^{n} \left(X_i - \bar{X}\right)^2 \]

While this formula looks, and is, quite complicated, the intuition is clear. What we want to do is measure the average deviation from the average (i.e. the mean) across the observations. The deviation for observation i is \(X_i - \bar{X}\). We can calculate this for each observation. But if we simply averaged these deviations then we would get zero. To see this note that,

\[ \sum_{i=1}^{n} \left(X_i - \bar{X}\right) = \sum_{i=1}^{n} X_i - n\bar{X} = n\bar{X} - n\bar{X} = 0 \]

To avoid this problem, we square the differences and average these. Squaring turns all the negative deviations into positive numbers. We sum across all the squared deviations and divide by n - 1. You may think we should divide by n because we have n observations. But there is a good reason for dividing by n - 1 which you can learn about in future study.

The variance gives us an estimate of the average squared difference between each observation and the mean. Because we have squared the differences, the variance is measured in squared units (for example, dollars squared) rather than in the units of the data itself. It is useful to 'undo' the squaring by taking the square root of the variance, which gives us the standard deviation, \(s = \sqrt{s^2}\).
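The steps in the variance calculation can be traced in a few lines of Python. This is only a sketch: the five income values are hypothetical, invented for illustration, and the `statistics` functions are used as a cross-check in the same way one might use Excel's VAR.S and STDEV.S.

```python
import math
import statistics

# Hypothetical incomes in dollars, invented purely for illustration.
incomes = [12_000, 25_000, 31_000, 48_000, 84_000]

n = len(incomes)
mean = sum(incomes) / n

# Deviation of each observation from the mean.
deviations = [x - mean for x in incomes]

# The raw deviations always cancel out, so their sum is zero.
sum_of_deviations = sum(deviations)

# Square the deviations, add them up, and divide by n - 1.
sample_variance = sum(d ** 2 for d in deviations) / (n - 1)

# The standard deviation is the square root of the variance.
sample_sd = math.sqrt(sample_variance)

print("Sum of deviations:", sum_of_deviations)
print("Sample variance:  ", sample_variance)
print("Sample std dev:   ", round(sample_sd, 2))

# Cross-check against the library's sample (n - 1) versions.
print(statistics.variance(incomes), statistics.stdev(incomes))
```

The hand calculation and the library functions should agree, because `statistics.variance` and `statistics.stdev` also divide by n - 1.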
Interpreting the Standard Deviation is important:
• In simple terms, we may say that the standard deviation measures “the average amount that the values vary above and below the mean”.
• More precisely, it is “the square root of the average of the squared deviations from the mean”. This is a bit complicated for everyday use, so we often explain the standard deviation using the simple interpretation.

In Excel: the calculation of the sample standard deviation uses the following formula: =STDEV.S(A2:A5086). This gives $36,001. The sample variance can be calculated by squaring this figure or by using the formula: =VAR.S(A2:A5086).

Quartiles and the Interquartile Range

Suppose we take our data and sort it from smallest to biggest. The median referred to earlier is the “middle value”, the 50% value, where half the numbers exceed the median and half are below it. We can also call the median the 50th percentile. Following the same idea, if we go to the ¼ and ¾ marks in this sorted data, we find the 25th percentile and the 75th percentile, also known as the 1st Quartile (Q1) and the 3rd Quartile (Q3). The 1st Quartile (25th percentile) has ¼ of values less than it, and ¾ bigger than it. Similarly, the 3rd Quartile (75th percentile) has ¾ below it, and ¼ above it. These are useful additional values to report to indicate the location of the data and the range over which half of the data lies.

The Interquartile Range is the measure of spread associated with these Quartiles. Take Q3 - Q1, and you have it! It measures the spread of the “middle 50% of the data”.

In Excel: the first and third quartiles can be obtained via this function: =QUARTILE(A2:A584,1) and =QUARTILE(A2:A584,3).

Here’s an example: I have a dataset of scores for a recent semester’s results in one of our 1st Year Statistics units. Here are the first few rows of the data.
And now to some summary statistics:

You see in this example that the two measures of spread are quite similar: a standard deviation of 15.3 tells us the average variation around the mean mark of 63.8. And the Interquartile Range of 16 says the middle 50% of students scored between 58 and 74, a range of 16 marks.

2.5 Descriptive Statistics in Excel

As an alternative to using the specific formulas for each of these measures, there is a way to get most of the useful summary statistics in one place. Use the 'Data' tab, then select 'Data Analysis' and 'Descriptive Statistics', and tick the 'Summary Statistics' box. Using the data on incomes discussed earlier, we get the output shown in Figure 2.4:

Figure 2.4: Descriptive Statistics from Excel for Income

This table covers most of the statistics we are interested in, except the Quartiles and Interquartile Range. It also includes a few others that you can learn about in the future 😊.

2.6 More on Data Visualisation

Visualisation is a useful approach to summarizing data. It is more flexible than the measures used so far, and can show unusual features or general patterns. In addition, visualization is an important communication tool: people can absorb information better from a well-designed graph or table.

Let’s look at the income data we considered earlier. Before producing any tables or graphs, we need to work out the question that we want to answer or the information we want to convey. For example, using this data we may be interested in the income distribution. You could ask a question like: "are individuals in this data mostly affluent, or is there an uneven distribution of income?"

Frequency Distribution

One of the best ways of answering these types of questions is by creating a frequency distribution: a table which shows the number of households earning income within particular ranges. This is shown in Figure 2.5. We look at the incomes of these individuals and count the number of individuals with incomes in each class range.
The value in the first column titled 'Bins' gives the upper value of the range. The results show that 1,509 individuals earned $10,000 or less, 939 individuals earned between $10,001 and $20,000 and so forth. Frequency distributions like this can be created in Excel using 'Data Analysis' and 'Histogram' from the 'Data' tab.

Figure 2.5: Frequency Distribution of Individual Income

Histograms

We can also take this information and present it in a more visually appealing manner as a histogram. This is shown in Figure 2.6. A histogram will be produced by Excel if you tick "Chart Output" when you create a histogram using the 'Data Analysis' option.

Make sure your histogram is well presented. Well presented histograms have:
• Headings and labels for axes, including units of data.
• No (or minimal) gaps between the bars. This is not the default Excel output. You will see in tutorials how to fix this.

The problem with the table and chart above is that in their current form, the numbers are not particularly meaningful. Is 1,509 individuals a large or a small number? A more meaningful table or chart would be one that presents the frequency column as a percentage of all the individuals. In Figure 2.7 we have asked Excel to calculate the "Cumulative %" in each category and from this we can calculate the "Percentage %" in each category (we simply calculate the change in the cumulative value). This allows us to say things like "29.68% of households earn between $0 and $10,000 per annum."

Figure 2.7: Frequency Distribution of Individual Income (With % of Total)

Some points about creating frequency distributions and histograms:
• Do not have too few classes (ranges are too broad, and useful information is lost), but do not have too many (too much detail, hard to form overall impressions). Somewhere between 5 and 15 is normal, depending on how much original data you have, and how much it varies.
• Select sensible class boundaries.
Nice round numbers usually make the most sense (you will find that Excel's automatic class (bin) ranges do not do this, and so it is better to enter your own).
• Giving cumulative frequencies and cumulative percentages is also a useful addition. It allows us to say things like "72.31% of households earn $40,000 or less per annum."

Box and Whisker Plots

Box and Whisker Plots are a nice visual way to show information about the mean, median, quartiles and range of data. Let’s look again at the results for our recent Business Statistics Unit. The summary measures we calculated were as follows:

Here is the Box and Whisker Plot Excel gives us for the same data:

The Box shows the middle 50% and hence the first and third Quartiles. We also see the median and the mean, plus the extreme values: a top score of 91%, a lowest score in the “normal” range of 34%, and 9 scores scattered below that, all the way to zero. In one graph we have a lot of information about the centre of the data, the spread, and the “extreme” outcomes. I produced this chart using the Insert tab in Excel, then Charts, under the Histogram button.

Finally, this example illustrates the first point about visualization: you have to know what questions you are asking, and design the graph to suit. This Box and Whisker Plot is interesting, but in terms of unit results, people are more interested in questions like: how many failed? How many high distinctions were there? To present a graph that gives that kind of information, we need a histogram with carefully chosen Bin ranges. I will choose Bin ranges of 49, 59, 69 and 79, since Excel counts the number of values up to and including each Bin value. After tidying up the labels and gaps between bars, converting to percentages, etc., I get the following histogram:

Now we have a clearer picture of the distribution of marks, and a visual way of showing the answers to the questions we are interested in answering.
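The counting rule behind these frequency distributions (each Bin collects the values up to and including its upper value) can be sketched in Python as follows. This is an illustration rather than a description of how Excel works internally: the function name `frequency_table` and the list of marks are hypothetical, and the final "More" bin is our assumption for values above the last Bin.

```python
from bisect import bisect_left
from itertools import accumulate


def frequency_table(values, upper_bounds):
    """Count values per bin. Each bin holds the values up to and including
    its upper bound; a final 'More' bin catches anything larger."""
    counts = [0] * (len(upper_bounds) + 1)
    for value in values:
        # Index of the first upper bound that is >= value.
        counts[bisect_left(upper_bounds, value)] += 1
    total = len(values)
    percents = [100 * count / total for count in counts]
    cumulative = list(accumulate(percents))
    labels = [f"<= {bound}" for bound in upper_bounds] + ["More"]
    return list(zip(labels, counts, percents, cumulative))


# Hypothetical marks, invented purely for illustration.
marks = [34, 49, 50, 58, 62, 66, 69, 71, 74, 80, 85, 91]

table = frequency_table(marks, [49, 59, 69, 79])
for label, count, percent, cumulative_percent in table:
    print(f"{label:>6}  {count:>3}  {percent:6.2f}%  {cumulative_percent:7.2f}%")
```

With Bins of 49, 59, 69 and 79, a mark of exactly 49 is counted in the first bin and a mark of 50 in the second, which is why those Bin values line up with the usual grade boundaries.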
2.7 Probability and Probability Distributions

The previous discussion has been mostly descriptive. But it is useful to extend our discussion by thinking about distributions of random variables and probabilities. We introduced probability briefly in Topic 1: now we will extend it to the idea of a random variable.

What is the basic idea? We describe uncertainty in anything we measure by saying there is an element of randomness in what happens. Nothing is totally predictable. So measurable quantities (variables) are random. But there is usually some structure to the randomness. Some values of the random variable are more likely to occur than others. We describe this structure with a probability distribution: a formula or a table of values of the random variable and the probabilities of those values occurring.

Recall the frequency distribution for incomes of households shown in various ways in the previous section, such as Figure 2.7. From the frequency distribution we know that 11.39% of individuals earn between $30,001 and $40,000 per annum. So, if we were to select one individual randomly, the chance of that individual earning between $30,001 and $40,000 would be 0.1139. In other words, the probability that a randomly selected person earns between $30,001 and $40,000 per annum is 0.1139.

The probability of an event (a particular outcome in some process or phenomenon, such as drawing a household that earns between $30,001 and $40,000 p.a.) is usually understood as the proportion of times that event would occur if the process were repeated many times. The table above represents the case where all individuals have been selected. When all 5,085 people have been selected, there would be 579 of these 5,085 individuals with an income in the range of $30,001 to $40,000.

A table of events (e.g. income ranges) along with their associated probabilities of occurrence (frequency/percentage of occurrence) can be thought of as a probability distribution for a random variable.
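The arithmetic linking the frequency table to the probability quoted above is just a proportion. As a small Python check, using the two counts given in the text (the variable names are our own):

```python
# Counts quoted in the text.
count_in_range = 579       # individuals earning $30,001 to $40,000
total_individuals = 5085   # all individuals in the data set

# Probability as a relative frequency: favourable cases over all cases.
probability = count_in_range / total_individuals

print(round(probability, 4))          # 0.1139
print(f"{probability:.2%}")           # 11.39%
```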
A random variable is a characteristic or quantity of interest that can take a range of values. Its probability distribution is the set of all possible values of that variable together with the probability of each of those values occurring. An example is shown in Figure 2.8.

Figure 2.8: Probability Distribution of Individual Income

To be a proper probability distribution, the outcomes/events listed in a probability distribution should have two characteristics:
1. Mutually exclusive: that is, no two outcomes in the list can be true at the same time. e.g. If we get a head, we can't get a tail! This is true in the example above, as the income ranges/categories do not overlap.
2. Exhaustive: that is, the list includes all possible outcomes. e.g. We must get either a head or a tail. There are no other possibilities. This is true in the example above, because the ranges/categories cover all possible values.

This is why the sum of probabilities in a probability distribution always equals one.

The probability distributions that we have seen earlier (binomial and multinomial) are distributions for discrete or categorical random variables: there are a small number of outcomes that the random variable can take. With variables that can take a large number of values (sometimes infinitely many), it makes more sense to talk about the chances of the variable falling within a particular range. E.g. Nobody wants to know “what is the probability of an income of $10,750?” More interesting is “what is the probability of earning between $10,500 and $11,000, say?”

The probability density function (PDF) provides a graphical representation of the probability distribution that allows us to calculate such probabilities. The probability of being in a particular range is given by the area under the curve over that range. We now consider one distribution that is very useful in practice and which you should become familiar with.
2.7.1 The Normal Distribution

One PDF that you may already be familiar with is the normal distribution. In statistics, we often assume that data follows a normal distribution. A particular version of the normal distribution is the standard normal PDF. This is shown in Figure 2.9. The shape of this probability distribution is often described as “bell-shaped”. The area under the curve is equal to 1, the mean is 0 and the variance is 1.

What this curve illustrates is the relative likelihood of obtaining different values for the random variable X, which is shown on the x-axis. The random variable X can take on any value. But the curve tells us that values around 0 (zero) are much more likely than values such as -4 or 4. This is because the curve is higher around zero. The y-axis measures the likelihood of different values of X.

In high school maths you may have seen how to work out probabilities from a normal distribution.

e.g. You could work out the probability of X being greater than a particular value, say 2, on the standard normal distribution (X ~ N(0, 1)) as shown in Figure 2.10.

e.g. You could also work out the probability of X being less than a particular value, say 5, when X is not standard normal, e.g. X has a mean of 10 and variance of 9: X ~ N(10, 9). See Figure 2.11. This would be equivalent to standardising X ~ N(10, 9) so that it maps onto a standard normal Z ~ N(0, 1). You would do this through a transformation, i.e. we subtract the mean (µ = 10) from X to get something with a mean of zero, and divide by its standard deviation (σ = 3, the square root of the variance of 9) to get something with a standard deviation of 1. That is, we calculate:

\[ z = \frac{X - \mu}{\sigma} \]

In Excel, we can work out probabilities for the normal distribution using the function: =NORM.DIST(X value, mean, standard deviation, TRUE). This calculates the probability that a random variable from the normal distribution with the given mean and standard deviation will be BELOW the 'X value' entered.
For example, =NORM.DIST(0, 0, 1, TRUE) gives an answer of 0.5, and =NORM.DIST(1.96, 0, 1, TRUE) gives us 0.975.

Sometimes we want to work out what the 'X value' is for a given probability. In this case we use: =NORM.INV(probability, mean, standard deviation). This tells us the 'X value' such that the probability of getting a number lower than it is equal to the entered probability.

For example, =NORM.INV(0.5, 0, 1) would give us a value of 0, and =NORM.INV(0.975, 0, 1) gives a z value of 1.96.
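For readers who want to check these numbers outside Excel, the same calculations can be sketched with `NormalDist` from Python's standard `statistics` module, whose `cdf` and `inv_cdf` methods play the roles of NORM.DIST(..., TRUE) and NORM.INV. The variable names are our own, and the N(10, 9) example assumes, as in the text, that 9 is the variance, so the standard deviation passed in is 3.

```python
from statistics import NormalDist

standard_normal = NormalDist(mu=0, sigma=1)

# Probability of being BELOW a value, like =NORM.DIST(x, 0, 1, TRUE).
p_below_0 = standard_normal.cdf(0)
p_below_1_96 = standard_normal.cdf(1.96)

# Probability of being ABOVE 2 on the standard normal: one minus the area below.
p_above_2 = 1 - standard_normal.cdf(2)

# X ~ N(10, 9): mean 10, variance 9, so the standard deviation is 3.
x_dist = NormalDist(mu=10, sigma=3)
p_x_below_5 = x_dist.cdf(5)

# The same probability via standardising: z = (X - mu) / sigma.
z = (5 - 10) / 3
p_z = standard_normal.cdf(z)

# Inverse lookup, like =NORM.INV(0.975, 0, 1).
z_for_975 = standard_normal.inv_cdf(0.975)

print(round(p_below_0, 3), round(p_below_1_96, 3))  # 0.5 0.975
print(round(p_above_2, 4))
print(round(p_x_below_5, 4), round(p_z, 4))
print(round(z_for_975, 2))                          # 1.96
```

The two routes to P(X < 5) agree, which is the point of standardising: any normal probability can be read off the standard normal once X has been converted to a z value.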