Image Processing Questions: Systems, Histograms, Enhancement


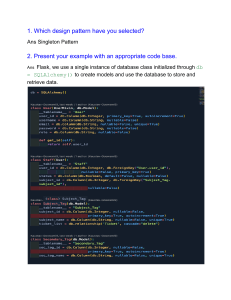

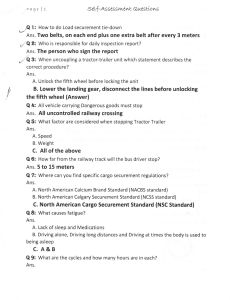

Question Set-01

(a) Illustrate the image processing system with a block diagram. Explain its two most crucial steps.

4

Ans:

(b) Why is sampling required? Explain the effects of quantization on the quality of a digital image.

3

Ans:

We have to convert an analog signal into a digital signal.

To create a digital image, we need to convert continuous data into digital form.

This is done in two steps:

Sampling

Quantization

Quantization in Digital Image Processing:

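Quantization is the counterpart of sampling: sampling digitizes the coordinate values (along the x-axis of the signal), while quantization digitizes the amplitude values (along the y-axis).

Digitizing the amplitudes is quantization. In this, we divide the signal amplitude into quanta (partitions). The fewer the quanta (intensity levels), the coarser the result: with too few levels, smooth regions of the image show visible steps known as false contouring.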
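As a minimal pure-Python sketch (the function name `quantize` and the test ramp are illustrative), requantizing 8-bit intensities to k bits shows the effect of quantization: neighbouring intensities collapse into the same level, which is what produces false contouring in smooth regions.

```python
def quantize(pixels, k, in_bits=8):
    """Requantize integer intensities in [0, 2**in_bits - 1] to 2**k levels.

    Each value is mapped to the lower edge of its quantization bin, so the
    output stays on the original scale for easy comparison.
    """
    step = 2 ** (in_bits - k)          # width of one quantization bin
    return [(p // step) * step for p in pixels]


# A smooth 8-bit ramp from 0 to 255 in steps of 17 (16 distinct values).
ramp = list(range(0, 256, 17))
for k in (8, 3, 1):
    q = quantize(ramp, k)
    print(k, "bits:", len(set(q)), "distinct levels ->", q)
```

With k = 1 the ramp becomes a single hard step, the extreme case of false contouring.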

(c) State the model of grayscale and color image representation. 1.75

Ans:

Grayscale:

Grayscale is a range of shades of gray without apparent color. The darkest

possible shade is black, which is the total absence of transmitted or reflected

light. The lightest possible shade is white, the total transmission or reflection of

light at all visible wavelengths. Intermediate shades of gray are represented by

equal brightness levels of the three primary colors (red, green and blue) for

transmitted light, or equal amounts of the three primary pigments (cyan,

magenta and yellow) for reflected light.

(a) Illustrate the mathematical model to represent gray-scale and color images. 2

(b) Define non-uniform sampling. Explain the effects of sampling and quantization on the quality of the digital image.

4

(c) Differentiate between gray-scale and B/W images. Suppose you have a color image in which the maximum intensity levels of the Red, Green and Blue channels are 2^5, 2^6 and 2^7 respectively. How much memory is required to store an image of size 200×200?

2.75
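A worked sketch, assuming the maximum levels are 2^5, 2^6 and 2^7 (so 5, 6 and 7 bits per channel) and ignoring any file-format overhead:

$$
\begin{aligned}
k &= 5 + 6 + 7 = 18 \text{ bits per pixel} \\
b &= M \times N \times k = 200 \times 200 \times 18 = 720{,}000 \text{ bits} \\
  &= 720{,}000 / 8 = 90{,}000 \text{ bytes} \approx 87.9 \text{ KiB}
\end{aligned}
$$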

(a) Define the terms: brightness, contrast, dynamic range.

3

Ans:

Contrast:

The term contrast refers to the amount of color or grayscale differentiation that exists

between various image features in both analog and digital images.

Brightness:

Image brightness (or luminous brightness) is a measure of intensity after the

image has been acquired with a digital camera or digitized by an analog-to-digital

converter.

Dynamic range:

Dynamic Range (DR) is the range of exposure, i.e., scene brightness, over which a camera responds with good contrast and/or Signal-to-Noise Ratio (SNR).

(b) Discuss the model of the image degradation and restoration process.

4

Ans:

i. The principal goal of restoration techniques is to improve an image in some

predefined sense. Restoration attempts to recover an image that has been degraded,

by using a priori knowledge of the degradation phenomenon.

ii. Thus restoration techniques are oriented towards modeling the degradation and

applying the inverse process in order to recover the original image.

iii. As Fig. 1 shows, the degradation process is modeled as a degradation function that,

together with an additive noise term, operates on an input image f(x, y) to produce a

degraded image g(x, y).

iv. Given g(x, y), some knowledge about the degradation function H, and some knowledge about the additive noise term η(x, y), the objective of restoration is to obtain an estimate f̂(x, y) of the original image.

v. We want the estimate to be as close as possible to the original input image and, in general, the more we know about H and η, the closer f̂(x, y) will be to f(x, y).

vi. The restoration approach used most often is based on various types of image restoration filters.

vii. We know that if H is a linear, position-invariant process, then the degraded image is

given in the spatial domain by

$$g(x,y) = h(x,y) \ast f(x,y) + \eta(x,y) \qquad \text{(1)}$$

Where h(x, y) is the spatial representation of the degradation function and the symbol

"*" indicates convolution.

viii. Now, convolution in the spatial domain is analogous to multiplication in the

frequency domain, so we may write the model in Eq. (1) in an equivalent frequency

domain representation:

$$G(u,v) = H(u,v)\,F(u,v) + N(u,v) \qquad \text{(2)}$$

ix. Where the terms in capital letters are the Fourier transforms of the corresponding

terms in Eq. (1). These two equations are the bases for most of the restoration

material.

x. This is the basic image restoration model.
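As a small 1-D sketch (circular convolution and a naive DFT are written out by hand; the signal, blur kernel and noise level are arbitrary choices), the spatial model of Eq. (1) and the frequency model of Eq. (2) agree term by term:

```python
import cmath
import random


def circular_convolve(h, f):
    """Spatial-domain (circular) convolution h * f of two equal-length signals."""
    n = len(f)
    return [sum(h[m] * f[(x - m) % n] for m in range(n)) for x in range(n)]


def dft(signal):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(signal)
    return [sum(signal[x] * cmath.exp(-2j * cmath.pi * u * x / n) for x in range(n))
            for u in range(n)]


random.seed(0)
f = [0, 0, 10, 10, 10, 10, 0, 0]              # "original" 1-D image row
h = [1 / 3, 1 / 3, 0, 0, 0, 0, 0, 1 / 3]      # 3-tap moving-average blur centred at x = 0
eta = [random.gauss(0, 0.5) for _ in f]       # additive noise term

# Eq. (1): g = h * f + eta in the spatial domain
g = [b + e for b, e in zip(circular_convolve(h, f), eta)]

# Eq. (2): G = H F + N in the frequency domain
G, H, F, N = dft(g), dft(h), dft(f), dft(eta)
print(max(abs(G[u] - (H[u] * F[u] + N[u])) for u in range(len(f))))   # tiny rounding error
```

The 2-D case works the same way with a 2-D convolution and a 2-D DFT.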

(c) Illustrate the mathematical model for grayscale and binary image representation in terms of incident and reflected light intensity. 1.75

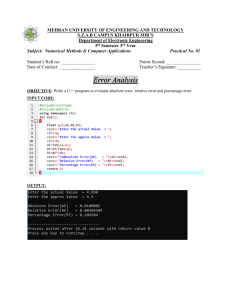

(a) Define digital image. Explain its interpretation with example.

3

Ans:

Digital image:

A digital image is a representation of a two-dimensional image as a finite set of digital

values, called picture elements or pixels.

► Interpolation — Process of using known data to estimate unknown values

e.g., zooming, shrinking, rotating, and geometric correction

► Interpolation (sometimes called resampling) — an imaging method to

increase (or decrease) the number of pixels in a digital image.

Some digital cameras use interpolation to produce a larger image than the sensor captured or to create digital zoom.

Bilinear interpolation:

$$f_2(x,y) = (1-a)(1-b)\,f(l,k) + a(1-b)\,f(l+1,k) + (1-a)\,b\,f(l,k+1) + a\,b\,f(l+1,k+1)$$

where $l = \lfloor x \rfloor$, $k = \lfloor y \rfloor$, $a = x - l$, $b = y - k$.
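A minimal sketch of the bilinear formula above, applied to a tiny image stored as a list of rows (here x indexes rows and y indexes columns, and the "+1" neighbours are clamped at the border; both are assumptions). `bilinear` and `zoom` are illustrative names:

```python
import math


def bilinear(img, x, y):
    """Bilinear interpolation of img (img[l][k]) at the real-valued point (x, y)."""
    l, k = math.floor(x), math.floor(y)
    a, b = x - l, y - k
    # Clamp the "+1" neighbours so points on the last row/column stay in range.
    l1 = min(l + 1, len(img) - 1)
    k1 = min(k + 1, len(img[0]) - 1)
    return ((1 - a) * (1 - b) * img[l][k] + a * (1 - b) * img[l1][k]
            + (1 - a) * b * img[l][k1] + a * b * img[l1][k1])


def zoom(img, factor):
    """Enlarge img by mapping each output pixel back to input coordinates."""
    rows, cols = len(img), len(img[0])
    return [[bilinear(img, min(i / factor, rows - 1), min(j / factor, cols - 1))
             for j in range(int(cols * factor))]
            for i in range(int(rows * factor))]


img = [[0, 10],
       [20, 30]]
print(bilinear(img, 0.5, 0.5))   # 15.0, the average of the four neighbours
print(zoom(img, 2))
```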

Bicubic interpolation:

► The intensity value assigned to point (x, y) is obtained by the following equation:

$$v(x,y) = \sum_{i=0}^{3}\sum_{j=0}^{3} a_{ij}\,x^i y^j$$

► The sixteen coefficients are determined by using the sixteen nearest neighbors.

(b) Define 4-adjacency, 8-adjacency, m-adjacency with example.

3

Ans:

► Adjacency

Let V be the set of intensity values used to define adjacency.

4-adjacency: Two pixels p and q with values from V are 4-adjacent if q is in the

set N4(p).

8-adjacency: Two pixels p and q with values from V are 8-adjacent if q is in the

set N8(p).

m-adjacency: Two pixels p and q with values from V are m-adjacent if

(i) q is in the set N4(p), or

(ii) q is in the set ND(p) and the set N4(p) ∩ N4(q) has no pixels whose values

are from V.
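The three definitions can be checked mechanically. A sketch with pixels as (row, column) tuples; the helper names and the small binary example (V = {1}) are illustrative:

```python
def n4(p):
    r, c = p
    return {(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)}


def nd(p):
    r, c = p
    return {(r - 1, c - 1), (r - 1, c + 1), (r + 1, c - 1), (r + 1, c + 1)}


def n8(p):
    return n4(p) | nd(p)


def in_v(img, p, V):
    """True if p lies inside img and its intensity belongs to V."""
    r, c = p
    return 0 <= r < len(img) and 0 <= c < len(img[0]) and img[r][c] in V


def adjacent_4(img, p, q, V):
    return in_v(img, p, V) and in_v(img, q, V) and q in n4(p)


def adjacent_8(img, p, q, V):
    return in_v(img, p, V) and in_v(img, q, V) and q in n8(p)


def adjacent_m(img, p, q, V):
    if not (in_v(img, p, V) and in_v(img, q, V)):
        return False
    if q in n4(p):
        return True                                        # condition (i)
    # condition (ii): diagonal neighbour and N4(p) ∩ N4(q) has no pixel with value in V
    return q in nd(p) and not any(in_v(img, s, V) for s in n4(p) & n4(q))


img = [[0, 1, 1],
       [0, 1, 0],
       [0, 0, 1]]
V = {1}
print(adjacent_8(img, (0, 2), (1, 1), V), adjacent_m(img, (0, 2), (1, 1), V))
```

The pair (0, 2), (1, 1) is 8-adjacent but not m-adjacent, because their shared 4-neighbour (0, 1) is also in V; m-adjacency removes exactly this kind of ambiguous double path.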

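(c) Explain the image digitization process briefly. 2.75

Ans:

► The representation of an M×N numerical array as

$$f(x,y) = \begin{bmatrix} f(0,0) & f(0,1) & \cdots & f(0,N-1) \\ f(1,0) & f(1,1) & \cdots & f(1,N-1) \\ \vdots & \vdots & & \vdots \\ f(M-1,0) & f(M-1,1) & \cdots & f(M-1,N-1) \end{bmatrix}$$

► Discrete intensity interval [0, L-1], L = 2^k

► The number b of bits required to store an M × N digitized image:

b = M × N × k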
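The storage formula b = M × N × k in a few lines of Python; `storage_bits` is a hypothetical helper name and the image sizes are arbitrary examples (no file-format overhead is counted):

```python
def storage_bits(M, N, k):
    """Bits needed to store an M x N image with L = 2**k intensity levels: b = M*N*k."""
    return M * N * k


# Example: a 1024 x 1024 image at several bit depths.
for k in (1, 4, 8):
    b = storage_bits(1024, 1024, k)
    print(f"k = {k} (L = {2 ** k} levels): {b} bits = {b // 8} bytes")
```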

(a) Discuss the major application areas of digital image processing.

4

Ans:

Advantages:

Digital image processing can reduce or remove noise in digital images.

Digital images are easy to store and use, which is why computers use them.

Mathematical operations such as addition, subtraction and logical operations can be applied.

Image compression reduces the amount of data required to represent a digital image.

Digital imaging allows the electronic transmission of images to third-party providers.

Visual quality can be improved, since images are easy to process.

An image can easily be processed so that the result is more suitable than the original image for a specific application.

Disadvantages:

The initial cost can be high depending on the system used.

Digital communications require greater bandwidth than analogue to transmit the

same information.

People need knowledge in many fields to develop an application or part of an

application using image processing.

Calculations and computations can be complex and computationally demanding.

(b) Define connectivity. Show the difference between 8-connectivity and m-connectivity with an example. 4.75

Question Set-02

(a) Define image histogram. Is it possible to use a histogram as a feature for image classification? Explain. 3

Ans:

Image histogram:

An image histogram is a graphical representation of the number of pixels in an

image as a function of their intensity. Histograms are made up of bins, each bin

representing a certain intensity value range.
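A minimal pure-Python sketch of the histogram defined above, using one bin per intensity level (the helpers `histogram` and `normalized_histogram` are illustrative and assume integer intensities in [0, L-1]). Dividing by the pixel count gives a normalized histogram that does not depend on image size, which is one reason a histogram can serve as a simple feature vector:

```python
def histogram(pixels, L=256):
    """h(r_k) = n_k: number of pixels with intensity r_k, for k = 0 .. L-1."""
    h = [0] * L
    for p in pixels:
        h[p] += 1
    return h


def normalized_histogram(pixels, L=256):
    """p(r_k) = n_k / n: fraction of pixels with intensity r_k."""
    n = len(pixels)
    return [count / n for count in histogram(pixels, L)]


# A tiny 2x3 image with L = 4 levels, flattened row by row.
img = [0, 1, 1, 3, 3, 3]
print(histogram(img, L=4))    # [1, 2, 0, 3]
```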

(b) What does the histogram of each of the following images look like?

2

i) Dark image, ii) Bright image, iii) Low-contrast image, iv) High-contrast image.

Ans:

(b) Explain the histogram equalization process for image enhancement with an example.

3.75

Ans:

Histogram equalization is used to enhance contrast. However, contrast is not always increased: in some cases histogram equalization makes the result worse, and the contrast decreases.
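A sketch of the discrete equalization transform commonly used in textbooks, s_k = (L - 1) Σ_{j=0}^{k} n_j / n, rounded to the nearest level (the function name `equalize` is illustrative). Because several input levels can round to the same output level and empty levels are skipped, the output histogram is only approximately flat:

```python
def equalize(pixels, L):
    """Histogram-equalize a flat list of integer intensities in [0, L-1].

    Uses s_k = round((L - 1) * sum_{j<=k} n_j / n).
    Returns (equalized_pixels, mapping) where mapping[r] = s.
    """
    n = len(pixels)
    counts = [0] * L
    for p in pixels:
        counts[p] += 1
    mapping, cumulative = [], 0
    for count in counts:
        cumulative += count
        # int(x + 0.5) rounds halves up (Python's round() rounds halves to even).
        mapping.append(int((L - 1) * cumulative / n + 0.5))
    return [mapping[p] for p in pixels], mapping


# Usage: a low-contrast row of 3-bit pixels crowded into levels 3..5.
row = [3, 3, 4, 4, 4, 4, 5, 5]
out, mapping = equalize(row, L=8)
print(out)
```

The three crowded input levels are spread out to levels 2, 5 and 7.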

(a) Define histogram and illustrate its applications in image understanding. 3

Ans:

Application of image histogram:

Histograms have many uses in image processing. The first use, as discussed above, is the analysis of the image. We can predict properties of an image just by looking at its histogram, much like looking at an X-ray of a bone.

The second use of histograms is for brightness adjustment. Histograms are widely applied in adjusting image brightness, and also in adjusting the contrast of an image.

Another important use of the histogram is to equalize an image.

Last but not least, histograms are widely used in thresholding, which is mostly used in computer vision.

(b) Explain the process of histogram equalization in image enhancement. Differentiate between histogram equalization and histogram specification methods for image enhancement. 3

Ans:

(c) Perform histogram equalization of a 5×5 image having the following data. 2.75

| Gray level    | 0 | 1 | 2 | 3 | 4  | 5 | 6 | 7 |
|---------------|---|---|---|---|----|---|---|---|
| No. of pixels | 0 | 0 | 0 | 8 | 12 | 6 | 0 | 0 |
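A worked sketch of the mapping s_k = (L - 1) Σ_{j≤k} n_j / n with L = 8 gray levels (0 to 7). The pixel counts as printed sum to 8 + 12 + 6 = 26 rather than 25, so this sketch divides by the printed total n = 26; if one count is a typo, the values shift slightly. Only the occupied levels matter:

$$
\begin{aligned}
s_3 &= 7 \cdot \tfrac{8}{26} \approx 2.15 \;\rightarrow\; 2 \\
s_4 &= 7 \cdot \tfrac{8+12}{26} \approx 5.38 \;\rightarrow\; 5 \\
s_5 &= 7 \cdot \tfrac{8+12+6}{26} = 7 \;\rightarrow\; 7
\end{aligned}
$$

After equalization, 8 pixels sit at level 2, 12 at level 5 and 6 at level 7, so the occupied range widens from [3, 5] to [2, 7].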

(a) What is resolution? Explain spatial resolution and intensity level resolution.

2

Ans:

Resolution:

Resolution refers to the number of pixels in an image. Resolution is sometimes identified by the width and height of the image as well as the total number of pixels in the image. For example, an image that is 2048 pixels wide and 1536 pixels high (2048 × 1536) contains 2048 × 1536 = 3,145,728 pixels (about 3.1 megapixels).

Spatial resolution:

Spatial resolution reflects the fact that the clarity of an image cannot be determined by the pixel resolution alone: the number of pixels by itself does not determine how much detail is visible.

Spatial resolution can be defined as the smallest discernible detail in an image.

Intensity level resolution:

Intensity level resolution refers to the smallest discernible change in intensity level. It is usually stated as the number of bits k used per pixel, which gives L = 2^k gray levels (for example, 8 bits give 256 levels). The gray-level resolution of a digital image is related to the gray-level intensity of the optical image and the accuracy of the digitizing device used to capture the optical image.

(b) What type of image information does a histogram represent? Can it be used for image recognition? Explain.

2.75

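(c) Obtain the histogram equalization of the following 5×5 image segment. Write the inference on the image segment before and after equalization.

$$\begin{bmatrix} 4 & 4 & 4 & 4 & 4 \\ 3 & 4 & 5 & 4 & 3 \\ 3 & 5 & 5 & 5 & 3 \\ 3 & 4 & 5 & 4 & 3 \\ 4 & 4 & 4 & 4 & 4 \end{bmatrix}$$

4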

(a) Define image histogram. Explain its interpretation with example.

3

(b) Consider a 4×4 image segment with gray levels in [0, 9]. Find the histogram-equalized image and draw the image histogram before and after equalization.

3.75

$$\begin{bmatrix} 2 & 3 & 3 & 2 \\ 4 & 3 & 4 & 2 \\ 2 & 3 & 3 & 5 \\ 2 & 4 & 2 & 4 \end{bmatrix}$$
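A worked sketch with L = 10 levels ([0, 9]) and n = 16 pixels, using s_k = (L - 1) Σ_{j≤k} n_j / n rounded to the nearest integer. The counts are n_2 = 6, n_3 = 5, n_4 = 4, n_5 = 1:

$$
\begin{aligned}
s_2 &= 9 \cdot \tfrac{6}{16} = 3.375 \;\rightarrow\; 3 \\
s_3 &= 9 \cdot \tfrac{11}{16} = 6.1875 \;\rightarrow\; 6 \\
s_4 &= 9 \cdot \tfrac{15}{16} = 8.4375 \;\rightarrow\; 8 \\
s_5 &= 9 \cdot \tfrac{16}{16} = 9 \;\rightarrow\; 9
\end{aligned}
$$

$$g = \begin{bmatrix} 3 & 6 & 6 & 3 \\ 8 & 6 & 8 & 3 \\ 3 & 6 & 6 & 9 \\ 3 & 8 & 3 & 8 \end{bmatrix}$$

Before equalization the histogram occupies only levels 2 to 5; afterwards it spreads over levels 3 to 9 (counts 6, 5, 4 and 1 at levels 3, 6, 8 and 9).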

(a) Discuss the process of image acquisition using sensor strips.

4

(b) How can digital images be represented? Explain.

4.75

Question Set-03

(a) How does point processing work for image enhancement? Discuss.

2.75

Ans:

The simplest spatial domain operations occur when the neighbourhood is simply the pixel itself.

In this case T is referred to as a grey level transformation function or a point processing operation.

Point processing operations take the form

s = T(r)

where s refers to the processed image pixel value and r refers to the original image pixel value.

Negative images are useful for enhancing white or grey detail embedded in dark regions of an image.

Note how much clearer the tissue is in the negative image of the mammogram below.

Thresholding transformations are particularly useful for segmentation, in which we want to isolate an object of interest from a background.
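A minimal sketch of point processing, where each output pixel depends only on the input pixel at the same position. The negative uses the standard form s = (L - 1) - r, the threshold value 128 is arbitrary, and the helper names are illustrative:

```python
def apply_point_transform(img, T):
    """Apply s = T(r) to every pixel independently (no neighbours involved)."""
    return [[T(r) for r in row] for row in img]


def negative(L):
    """Image negative for intensities in [0, L-1]: s = (L - 1) - r."""
    return lambda r: (L - 1) - r


def threshold(t, L):
    """Thresholding: r > t becomes L-1 (object), everything else becomes 0."""
    return lambda r: L - 1 if r > t else 0


img = [[10, 200],
       [90, 250]]
print(apply_point_transform(img, negative(256)))         # [[245, 55], [165, 5]]
print(apply_point_transform(img, threshold(128, 256)))   # [[0, 255], [0, 255]]
```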

(b) Why is the log transform used in image enhancement? Explain with a proper example.

3

Ans:

The general form of the log transformation is

s = c * log(1 + r)

The log transformation maps a narrow range of low input grey level values into a wider range of output values.

The inverse log transformation performs the opposite transformation.

Log functions are particularly useful when the input grey level values may have an extremely large range of values.

In the following example the Fourier transform of an image is put through a log transform to reveal more detail.
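A sketch of s = c·log(1 + r), choosing c so that the largest input maps to the top of the display range (that choice of c and the helper name `log_scale` are assumptions; it also assumes the maximum input is greater than 0). A Fourier spectrum whose values run up to about 10^6 is the typical case:

```python
import math


def log_scale(values, out_max=255):
    """Map values in [0, R] to [0, out_max] with s = c * log(1 + r), c = out_max / log(1 + R)."""
    R = max(values)
    c = out_max / math.log(1 + R)
    return [c * math.log(1 + r) for r in values]


print(log_scale([0, 15, 255]))        # the 16 darkest levels already fill half the range
print(log_scale([0, 9, 999_999]))     # spectrum-like data: 9 maps to about 42.5, not about 0
```

With linear scaling the value 9 in the second example would map to roughly 0.002 and be invisible; the logarithm compresses the huge range so that small components remain visible.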

(c) How is a negative image obtained? Explain the gray-level slicing method.

3

Ans:

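The negative of an image with intensity levels in the range [0, L-1] is obtained with the transformation s = L - 1 - r, which reverses the intensity levels. Negative images are useful for enhancing white or grey detail embedded in dark regions of an image.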
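The answer does not cover gray-level slicing. The two variants usually described in textbooks highlight a chosen range [A, B] and either suppress or preserve everything else. A sketch, with `A`, `B` and `high` as illustrative parameter names:

```python
def gray_level_slice(img, A, B, high, preserve_background=True):
    """Highlight intensities in [A, B].

    preserve_background=True : pixels in [A, B] -> high, others unchanged.
    preserve_background=False: pixels in [A, B] -> high, others -> 0 (binary output).
    """
    return [[high if A <= r <= B else (r if preserve_background else 0)
             for r in row] for row in img]


img = [[10, 120, 150],
       [200, 130, 90]]
print(gray_level_slice(img, 100, 160, 255))
print(gray_level_slice(img, 100, 160, 255, preserve_background=False))
```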

(a) Is it possible to reduce the noise content by adding (averaging) a set of noisy images? Justify your answer. 3.75

Ans:

Noiseless image: f(x,y)

Noise: n(x,y) (at every pair of coordinates (x,y), the noise is uncorrelated and has zero

average value)

Corrupted image: g(x,y)

g(x,y) = f(x,y) + n(x,y)

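Reducing the noise by adding a set of noisy images, {g_i(x,y)}:

$$\bar{g}(x,y) = \frac{1}{K}\sum_{i=1}^{K} g_i(x,y)$$

$$E\{\bar{g}(x,y)\} = E\left\{\frac{1}{K}\sum_{i=1}^{K} g_i(x,y)\right\} = E\left\{\frac{1}{K}\sum_{i=1}^{K}\big[f(x,y) + n_i(x,y)\big]\right\} = f(x,y) + E\left\{\frac{1}{K}\sum_{i=1}^{K} n_i(x,y)\right\} = f(x,y)$$

$$\sigma^2_{\bar{g}(x,y)} = \sigma^2_{\frac{1}{K}\sum_{i=1}^{K} g_i(x,y)} = \sigma^2_{\frac{1}{K}\sum_{i=1}^{K} n_i(x,y)} = \frac{1}{K}\,\sigma^2_{n(x,y)}$$

Because the expected value of the average stays equal to f(x,y) while the noise variance falls as 1/K, averaging more noisy images reduces the noise, so the answer is yes.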

► In astronomy, imaging under very low light levels frequently causes sensor noise to render single images virtually useless for analysis.

In astronomical observations, the same sensors are used to observe the same scene over long periods of time, and image averaging is then used to reduce the noise.
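A quick numerical check of the averaging result, assuming zero-mean Gaussian noise with σ = 10 and K = 16 (all values arbitrary and seeded for repeatability; `average_images` is a hypothetical helper). The measured noise variance of the average should come out close to 1/K of a single image's:

```python
import random
import statistics


def average_images(images):
    """g_bar(x, y) = (1/K) * sum_i g_i(x, y) for a list of equally sized flat images."""
    K = len(images)
    return [sum(pixels) / K for pixels in zip(*images)]


random.seed(1)
f = [100.0] * 2000                   # noiseless image (flattened, constant for simplicity)
sigma, K = 10.0, 16
noisy = [[p + random.gauss(0, sigma) for p in f] for _ in range(K)]

single_var = statistics.pvariance([g - p for g, p in zip(noisy[0], f)])
avg_var = statistics.pvariance([g - p for g, p in zip(average_images(noisy), f)])
print(f"single image noise variance ~ {single_var:.1f}, average of {K}: ~ {avg_var:.2f}")
```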

(b) Assume that the fingerprint shown in Figure-3(a) is corrupted by noise. Write down the steps to eliminate the noise and its effects on the fingerprint while distorting it as little as possible, so that we can get an image as shown in Figure-3(b). 5

(a) Explain gray-level and bit-plane slicing. Mention their major application areas. 3

(b) Discuss in detail the homomorphic and derivative filters.

3

(c) Suppose you have an X-ray image. How can its intelligibility be increased? Explain.

2.75

(a) Explain the general model of image enhancement with point processing. 2

(b) With necessary graphs, explain the log transformation and the power-law transformation for spatial domain image enhancement. 4

(c) Suppose you have an image of a landscape and you would like to increase the intensity of specific gray levels. 2.75

(a) When do you need sharpening spatial filters? Discuss unsharp masking and high-boost filtering in the spatial domain. 4.75

(b) Derive the Sobel operators using the gradient. 4

Question Set-04

(a) What are the different causes of image degradation?

(b) Discuss the method of the image degradation and restoration process.

(c) Mention the drawbacks of inverse filtering.

(a) Mention the effects of the power-law transformation. Explain the outcomes of the power-law transformation with γ = 3 and γ = 0.3 in the general equation s = c·r^γ.
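A sketch comparing the two exponents, assuming r is normalized to [0, 1] and c = 1 (both assumptions). γ < 1 lifts dark values (a brighter image), while γ > 1 pushes them down and stretches the bright end (a darker image):

```python
def power_law(r, gamma, c=1.0):
    """Power-law (gamma) transformation s = c * r**gamma for r normalized to [0, 1]."""
    return c * r ** gamma


for r in (0.1, 0.25, 0.5, 0.75, 1.0):
    print(f"r = {r:4}: gamma=0.3 -> {power_law(r, 0.3):.3f}, gamma=3 -> {power_law(r, 3):.3f}")
```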

(b) Differentiate between point processing and neighborhood operations for image enhancement in the spatial domain. Illustrate the general model of spatial filtering.

(c) Explain the weighted smoothing filter operation with an example.

(a) Illustrate the general model of spatial filtering for image enhancement. Is there any relationship between the spatial resolution and the size of the mask? Explain.

(b) Define cross-correlation and autocorrelation with an example. Mention the uses of autocorrelation.

(c) When is edge detection necessary? Explain how image enhancement is performed using histogram-based thresholding.

(a) Define the spatial filtering method with its general equation. Explain the effects of mask size.

(b) Define correlation and convolution. Consider the following functions f(x) and g(y), and draw the outcome of their convolution.

(c) Explain the use of the Laplacian operator in edge detection of an image.

(a) Write the transfer functions of the Butterworth and Gaussian low-pass filters and specify the parameters. Also show the radial cross sections of different orders.

(b) What is Homomorphic filtering?

Question Set-05

(a) Why is an image sharpening filter required for image enhancement? Explain.

(b) Write down the steps of frequency domain image filtering. Compare it with spatial filtering.

Ans:

Steps of frequency domain image filtering:

a) Find the Fourier transform of the input signal

b) Find the Fourier transform of the impulse response of the filter

c) Multiply the two FTs to compute the FT of output signal

d) Find the inverse FT of the FT of the output signal.
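A 1-D sketch of steps (a) to (d) using a naive DFT written out by hand (no FFT library is assumed). Here the filter is specified directly by its transfer function, an ideal low-pass H_lp with cutoff `d0` (both illustrative choices), so step (b) reduces to evaluating H; the matching high-pass filter is formed as 1 - H_lp:

```python
import cmath


def dft(x, inverse=False):
    """Naive DFT (or inverse DFT when inverse=True) of a list of numbers."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * u * t / n) for t in range(n))
           for u in range(n)]
    return [v / n for v in out] if inverse else out


def ideal_lowpass(n, d0):
    """H_lp(u) = 1 if the frequency distance D(u) <= d0, else 0 (1-D, unshifted layout)."""
    return [1.0 if min(u, n - u) <= d0 else 0.0 for u in range(n)]


def filter_frequency_domain(signal, H):
    F = dft(signal)                                   # step (a): FT of the input
    G = [Hu * Fu for Hu, Fu in zip(H, F)]             # step (c): multiply by the filter
    return [v.real for v in dft(G, inverse=True)]     # step (d): inverse FT, keep real part


signal = [0, 0, 8, 8, 8, 8, 0, 0]
H_lp = ideal_lowpass(len(signal), d0=1)
H_hp = [1 - h for h in H_lp]                          # H_hp = 1 - H_lp
smooth = filter_frequency_domain(signal, H_lp)
edges = filter_frequency_domain(signal, H_hp)
print([round(v, 2) for v in smooth])
print([round(v, 2) for v in edges])
```

Because H_hp = 1 - H_lp, the low-pass and high-pass outputs add back up to the original signal, which is a quick sanity check on the implementation.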

Difference between spatial domain and frequency domain:

There are many differences between the spatial domain and the frequency domain in image enhancement. Some of them are given below:

The spatial domain deals with the image plane itself, whereas the frequency domain deals with the rate of pixel change.

The spatial domain works by direct manipulation of pixels, whereas the frequency domain works by modifying the Fourier transform.

The spatial domain is usually easier to understand than the frequency domain.

For small masks, spatial-domain filtering is usually cheaper and takes less time to compute; for large masks, frequency-domain filtering with the FFT can be faster.

(b) Explain the problem of using an ideal low-pass filter. Explain the equation H_hp(u,v) = 1 - H_lp(u,v).

Ans:

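We can thus identify the following major difficulties faced with ideal low-pass filters:

1. INFINITELY NON-CAUSAL: the impulse response h(t) = sin(ω_c t)/(πt) extends over all negative t, so it can't be made causal by a finite delay.

2. UNSTABLE: in mathematical language, the impulse response is not absolutely integrable:

$$\int_{-\infty}^{\infty} |h(t)|\,dt = \infty$$

Challenging Problem: Prove that the ideal filter is unstable.

Proof: Substituting x = ω_c t,

$$\int_{-\infty}^{\infty} \left|\frac{\sin(\omega_c t)}{\pi t}\right| dt = \frac{2}{\pi}\int_{0}^{\infty} \frac{|\sin x|}{x}\,dx = \frac{2}{\pi}\sum_{k=0}^{\infty}\int_{k\pi}^{(k+1)\pi} \frac{|\sin x|}{x}\,dx$$

which is greater than

$$\frac{2}{\pi}\sum_{k=0}^{\infty}\frac{1}{(k+1)\pi}\int_{k\pi}^{(k+1)\pi} |\sin x|\,dx = \frac{4}{\pi^2}\sum_{k=0}^{\infty}\frac{1}{k+1}$$

and we know that this (harmonic) series diverges. Hence it is established that the ideal low-pass filter is unstable.

This means that a bounded input does not imply a bounded output: if we build a system with an ideal low-pass filter, a bounded input may result in an unbounded output.

In image filtering, the sharp cutoff of the ideal low-pass filter also shows up as ringing artifacts around edges. For the equation H_hp(u,v) = 1 - H_lp(u,v): a high-pass filter passes exactly the frequencies that the corresponding low-pass filter attenuates, so its transfer function is obtained by subtracting the low-pass transfer function from 1.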

a. Derive the Laplacian mask using the 2nd derivative in the spatial domain. 3

b. Consider the following image with 4-bit gray levels and construct the vector x consisting of the intensities along the scanning line AB. Then derive the 1st and 2nd derivatives of x.

c. Differentiate between 1st and 2nd derivatives for image sharpening.

d. Explain point processing and neighborhood operations for image enhancement. How are they related?

e. Consider the following image and draw the signal representing the gray-level values along the scanning line AB. Then illustrate the Fourier transform of the signal.

f. How is frequency domain image filtering implemented? Explain the ideal filter for image sharpening.

g. Define image sharpening filter and compare 1st and 2nd derivatives.

h. Discuss the process of frequency domain image filtering.

i. Explain and compare the ideal low-pass filter and the Butterworth filter for image smoothing.

j. Discuss the model of the image degradation and restoration process.

k. Briefly discuss the adaptive median filter.

l. What is high-boost filtering?
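For items (a) to (c), a sketch of the standard finite-difference approximations ∂f/∂x ≈ f(x+1) - f(x) and ∂²f/∂x² ≈ f(x+1) + f(x-1) - 2f(x). Adding the second-derivative stencils in x and y gives the 4-neighbour Laplacian mask. The scan-line values are hypothetical (4-bit, 0 to 15), since the figure for line AB is not reproduced here:

```python
def first_derivative(x):
    """df/dx ~ f(x+1) - f(x), for x = 0 .. n-2."""
    return [x[i + 1] - x[i] for i in range(len(x) - 1)]


def second_derivative(x):
    """d2f/dx2 ~ f(x+1) + f(x-1) - 2 f(x), for interior samples x = 1 .. n-2."""
    return [x[i + 1] + x[i - 1] - 2 * x[i] for i in range(1, len(x) - 1)]


# Summing the 2nd-derivative stencils [1, -2, 1] along x and y gives
# f(x+1,y) + f(x-1,y) + f(x,y+1) + f(x,y-1) - 4 f(x,y):
LAPLACIAN_4 = [[0, 1, 0],
               [1, -4, 1],
               [0, 1, 0]]

# Hypothetical scan line: a ramp down, a flat segment, then a step up.
line = [6, 5, 4, 3, 2, 1, 1, 1, 1, 1, 6, 6, 6]
print(first_derivative(line))
print(second_derivative(line))
```

The first derivative is non-zero along the whole ramp, while the second derivative is non-zero only where the ramp ends and gives a double response (+5, -5) at the step. This is why second derivatives respond more strongly to fine detail and produce thinner edges.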

Question Set-06

a. Mention the sources of noise. Explain the model of a noisy image.

3

Ans:

Sources of Image noise:

During transmission, while an image is being sent electronically from one place to another.

Sensor heat while capturing an image.

Varying ISO factor, which changes the camera's sensitivity to light.

1. Gaussian Noise:

Gaussian noise is statistical noise whose probability density function equals the normal distribution, also known as the Gaussian distribution. A random Gaussian function is added to the image function to generate this noise. It is also called electronic noise because it arises in amplifiers or detectors.

2. Impulse Noise:

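In the discrete world, an impulse function has a value of 1 at a single location; in the continuous world, an impulse function is an idealized function having unit area. Impulse (salt-and-pepper) noise appears as isolated pixels set to very dark or very bright values.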

3. Poisson Noise:

This noise appears due to the statistical nature of electromagnetic waves such as X-rays, visible light and gamma rays. X-ray and gamma-ray sources emit a number of photons per unit time. In medical X-ray and gamma-ray imaging systems, these rays are directed into the patient's body from the source. The sources have random fluctuations in the number of photons, so the gathered image has spatial and temporal randomness. This noise is also called quantum (photon) noise or shot noise.

4. Speckle Noise

A fundamental problem in optical and digital holography is the presence of speckle

noise in the image reconstruction process. Speckle is a granular noise that

inherently exists in an image and degrades its quality. Speckle noise can be

generated by multiplying random pixel values with different pixels of an image.
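A sketch of three of the noise models above applied to a grayscale image stored as a list of rows (helper names are illustrative; seeds are only for repeatability). The additive models follow g = f + η; speckle is modeled here as multiplicative, g = f + f·n, which is one common formulation. Poisson noise is left out to keep the sketch to the standard library:

```python
import random


def add_gaussian(img, sigma, seed=None):
    """Additive Gaussian noise: g = f + eta, eta ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    return [[p + rng.gauss(0, sigma) for p in row] for row in img]


def add_salt_and_pepper(img, prob, low=0, high=255, seed=None):
    """Impulse noise: each pixel becomes low or high with total probability prob."""
    rng = random.Random(seed)
    out = []
    for row in img:
        new_row = []
        for p in row:
            u = rng.random()
            new_row.append(low if u < prob / 2 else high if u < prob else p)
        out.append(new_row)
    return out


def add_speckle(img, sigma, seed=None):
    """Multiplicative noise: g = f + f * n, with n ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    return [[p + p * rng.gauss(0, sigma) for p in row] for row in img]


flat = [[100] * 50 for _ in range(50)]
sp = add_salt_and_pepper(flat, 0.1, seed=0)
print(sum(p != 100 for row in sp for p in row), "of 2500 pixels hit by impulses")
```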

b. Discuss the model for suppressing periodic noise in an image.

3

Ans:

c. Explain the geometric mean and midpoint filters for removing noise.

2.75

Ans:

Geometric Mean filter:

Noise is an unavoidable side effect. Fig. 2 describes the filtering process. It separates the red, green and blue channels, and then introduces a gain to compensate for the attenuation resulting from the filter. The filtered channels are then combined to form the resulting color image.

Here f̂(x, y) is the filtered image and g(s, t) is the input image. In the geometric mean filter, each restored pixel is given by the product of the pixels in the m×n sub-image window S_xy, raised to the power 1/(mn), as described in (1):

$$\hat{f}(x,y) = \left[\prod_{(s,t)\in S_{xy}} g(s,t)\right]^{1/mn} \qquad (1)$$

The purpose is to produce more objective images (ideally noiseless) for particular

application than the original images and hence, increase the accuracy of further algorithms,

making them more similar to the characteristics of human visual recognition system.

Midpoint filter:

In the midpoint method, the color value of each pixel is replaced with the average of the maximum and minimum (i.e. the midpoint) of the color values of the pixels in a surrounding region. A larger region (filter size) yields a stronger effect.

The midpoint filter is typically used to filter images containing short-tailed noise such as Gaussian and uniform noise.
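A sketch of both filters on a single grayscale channel (for color, apply them per channel as the text describes). Border pixels are handled by replicating the edge, which is an assumption, and the helper names are illustrative:

```python
import math


def window(img, x, y, size=3):
    """Pixels of the size x size neighbourhood S_xy, replicating border pixels."""
    half = size // 2
    rows, cols = len(img), len(img[0])
    return [img[min(max(x + i, 0), rows - 1)][min(max(y + j, 0), cols - 1)]
            for i in range(-half, half + 1) for j in range(-half, half + 1)]


def geometric_mean_filter(img, size=3):
    """f_hat(x, y) = [prod_{(s,t) in S_xy} g(s, t)] ** (1 / mn)."""
    n = size * size
    return [[math.prod(window(img, x, y, size)) ** (1 / n)
             for y in range(len(img[0]))] for x in range(len(img))]


def midpoint_filter(img, size=3):
    """f_hat(x, y) = (max + min) / 2 over S_xy."""
    out = []
    for x in range(len(img)):
        row = []
        for y in range(len(img[0])):
            w = window(img, x, y, size)
            row.append((max(w) + min(w)) / 2)
        out.append(row)
    return out


img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
print(midpoint_filter(img)[1][1])          # (9 + 1) / 2 = 5.0
print(geometric_mean_filter(img)[1][1])    # (1*2*...*9) ** (1/9)
```

One design consequence worth noting: a single zero in the window drives the geometric mean to zero, so this filter is a poor choice for pepper noise.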

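d. Assume a 4-bit 6×6 image f(x, y) has the following intensity matrix:

$$f = \begin{bmatrix} 15 & 5 & 15 & 15 & 5 & 6 \\ 6 & 15 & 2 & 2 & 14 & 4 \\ 5 & 15 & 2 & 2 & 12 & 2 \\ 4 & 15 & 4 & 4 & 10 & 1 \\ 3 & 15 & 3 & 4 & 15 & 0 \\ 15 & 2 & 15 & 15 & 0 & 0 \end{bmatrix}$$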

What are the 1st, 2nd, 3rd and 4th bit planes of f(x, y)? What kind of information do the higher-order and lower-order bit planes contain?
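A sketch of bit-plane slicing for the matrix above, assuming the extracted values are read row by row and that the "1st" plane is the least significant bit:

```python
f = [
    [15, 5, 15, 15, 5, 6],
    [6, 15, 2, 2, 14, 4],
    [5, 15, 2, 2, 12, 2],
    [4, 15, 4, 4, 10, 1],
    [3, 15, 3, 4, 15, 0],
    [15, 2, 15, 15, 0, 0],
]


def bit_plane(img, n):
    """Bit plane n of img (n = 1 is the least significant bit, n = 4 the most for 4-bit data)."""
    return [[(p >> (n - 1)) & 1 for p in row] for row in img]


for n in range(1, 5):
    print(f"bit plane {n}:")
    for row in bit_plane(f, n):
        print(" ".join(map(str, row)))
```

Plane 4 (the most significant bit) is simply a threshold at 8, so it carries the coarse, visually dominant structure; plane 1 flips with every odd/even step and carries fine detail that often looks like noise.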

e. What will be the output image (intensity matrix) g(x, y) after applying the transformation function T(r) shown in Figure-6(a) and Figure-6(b) on f(x, y)?

Here r and s denote the gray levels of f(x, y) and g(x, y), respectively.

a. Define the Sobel operator. Write the Sobel horizontal and vertical edge detection masks. 3.75

Ans:

The Sobel operator is very similar to the Prewitt operator. It is also a derivative mask and is used for edge detection. Like the Prewitt operator, the Sobel operator is used to detect two kinds of edges in an image:

Vertical direction

Horizontal direction

Following is the vertical mask of the Sobel operator:

$$\begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix}$$

This mask works exactly like the Prewitt vertical mask, with one difference: it has the values -2 and 2 in the center of the first and third columns. When applied to an image, this mask highlights the vertical edges.

How it works

When we apply this mask to an image, it makes the vertical edges prominent. It works like a first-order derivative and calculates the difference of pixel intensities in an edge region. As the center column is zero, it does not include the original values of the image; rather, it calculates the difference between the right and left pixel values around the edge. Also, the center values of the first and third columns are -2 and 2 respectively.

This gives more weight to the pixel values around the edge region, which increases the edge intensity and enhances it relative to the original image.

Following is the horizontal mask of the Sobel operator:

$$\begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix}$$

The above mask finds edges in the horizontal direction because its row of zeros lies in the horizontal direction. When you convolve this mask with an image, it makes the horizontal edges prominent. The only difference from the Prewitt horizontal mask is that it has -2 and 2 as the center elements of the first and third rows.

How it works

This mask makes the horizontal edges in an image prominent. It works on the same principle as the mask above and calculates the difference among the pixel intensities of a particular edge. As the center row of the mask consists of zeros, it does not include the original values of the edge in the image; rather, it calculates the difference between the pixel intensities above and below the edge, thus increasing the sudden change of intensities and making the edge more visible.
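A sketch that applies both masks by correlation at interior pixels and combines them with the common |Gx| + |Gy| approximation of the gradient magnitude (the helper names and the test image are illustrative; border pixels are simply set to 0):

```python
SOBEL_VERTICAL = [[-1, 0, 1],      # responds to vertical edges (change across columns)
                  [-2, 0, 2],
                  [-1, 0, 1]]
SOBEL_HORIZONTAL = [[-1, -2, -1],  # responds to horizontal edges (change across rows)
                    [0, 0, 0],
                    [1, 2, 1]]


def apply_mask(img, mask, r, c):
    """Correlate a 3x3 mask with img centred at interior pixel (r, c)."""
    return sum(mask[i][j] * img[r + i - 1][c + j - 1] for i in range(3) for j in range(3))


def sobel(img):
    """Gradient magnitude approximation |Gx| + |Gy| at interior pixels (borders set to 0)."""
    rows, cols = len(img), len(img[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = apply_mask(img, SOBEL_VERTICAL, r, c)
            gy = apply_mask(img, SOBEL_HORIZONTAL, r, c)
            out[r][c] = abs(gx) + abs(gy)
    return out


# A vertical edge: dark on the left, bright on the right.
img = [[0, 0, 10, 10] for _ in range(4)]
for row in sobel(img):
    print(row)
```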

b. Explain line detection technique. 3

Ans:

The masks shown below can be used to detect lines at various orientations.

In practice, we run every mask over the image and combine the responses:

R(x, y) = max(|R1(x, y)|, |R2(x, y)|, |R3(x, y)|, |R4(x, y)|)

If R(x, y) > T, a discontinuity (line point) is detected at (x, y).
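A sketch of the combined response, using the four standard 3×3 line masks found in common textbooks (the masks themselves are not reproduced in this text, so they are an assumption here). The diagonal masks are named by direction to avoid the ±45° sign convention, which differs between references, and T is an arbitrary threshold:

```python
LINE_MASKS = {
    "horizontal": [[-1, -1, -1], [2, 2, 2], [-1, -1, -1]],
    "vertical": [[-1, 2, -1], [-1, 2, -1], [-1, 2, -1]],
    "diagonal (top-left to bottom-right)": [[2, -1, -1], [-1, 2, -1], [-1, -1, 2]],
    "diagonal (top-right to bottom-left)": [[-1, -1, 2], [-1, 2, -1], [2, -1, -1]],
}


def response(img, mask, r, c):
    """Correlation of a 3x3 mask with img centred at interior pixel (r, c)."""
    return sum(mask[i][j] * img[r + i - 1][c + j - 1] for i in range(3) for j in range(3))


def detect_lines(img, T):
    """Mark interior pixels where R(x, y) = max_k |R_k(x, y)| exceeds T."""
    rows, cols = len(img), len(img[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            R = max(abs(response(img, m, r, c)) for m in LINE_MASKS.values())
            out[r][c] = 1 if R > T else 0
    return out


# A one-pixel-wide horizontal line through the middle of a 5x5 image.
img = [[0] * 5 for _ in range(5)]
img[2] = [1] * 5
for row in detect_lines(img, T=3):
    print(row)
```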

c. Define compression ratio. How are shift codes generated? 2

Ans:

Data compression ratio is defined as the ratio between the uncompressed size and the compressed size.

A shift code is generated by the following steps:

a. Arrange the source symbols so that their probabilities are monotonically decreasing.

b. Divide the total number of symbols into symbol blocks of equal size.

c. Code the individual elements within all blocks identically.

d. Add special shift-up and/or shift-down symbols to identify each block.

d. Discuss the model of the image degradation and restoration process.

e. Briefly discuss any four noise probability density functions with diagrams.

f. What is a notch filter? 1

Ans:

A notch filter is a very narrow bandwidth bandstop filter: it has a gain which drops and then rises very steeply with increasing frequency, so the magnitude response has the shape of a notch.

g. What do you mean by full-color and pseudo-color image processing? Derive the trichromatic coefficients from the tristimulus values. 3

h. Briefly describe the gray level to color transformation process with a block diagram. 3.75

Ans:

There are two methods to convert a color image to grayscale. Both have their own merits and demerits. The methods are:

Average method

Weighted method or luminosity method

Average method

The average method is the simplest one: you just take the average of the three colors. Since it is an RGB image, you add R, G and B and then divide by 3 to get the desired grayscale image.

It is done this way:

Grayscale = (R + G + B) / 3

For example:

If you have a color image like the image shown above and you want to convert it into grayscale using the average method, the following result would appear.

Explanation

One thing is certain: something happens to the original image, which means our average method works. But the result is not as expected. We wanted to convert the image into grayscale, but it turned out to be a rather dark image.

Problem

This problem arises because we take the plain average of the three colors. The three colors have different wavelengths and make different contributions to the formation of the image, so we have to weight them according to their contribution rather than averaging them equally. Right now we are taking

33% of Red, 33% of Green, 33% of Blue

That is, each channel is assumed to contribute equally to the image, but in reality that is not the case. The solution to this is given by the luminosity method.
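The text does not give the luminosity weights. This sketch assumes the ITU-R BT.601 luma weights (0.299, 0.587, 0.114), one common choice, to contrast the two methods:

```python
def to_gray_average(r, g, b):
    """Average method: (R + G + B) / 3."""
    return (r + g + b) / 3


def to_gray_luminosity(r, g, b):
    """Weighted (luminosity) method, here with ITU-R BT.601 luma weights."""
    return 0.299 * r + 0.587 * g + 0.114 * b


# Pure green looks much brighter to the eye than pure blue; only the weighted method reflects that.
for name, rgb in [("green", (0, 255, 0)), ("blue", (0, 0, 255)), ("white", (255, 255, 255))]:
    print(name, to_gray_average(*rgb), round(to_gray_luminosity(*rgb), 1))
```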

i. Explain CMY color model.

Question Set-07

(a) Define image segmentation with an example. Illustrate the process of detecting horizontal and vertical lines in an image with the appropriate masks.

Ans:

Segmentation attempts to partition the pixels of an image into groups that strongly correlate with the objects in an image.

It is typically the first step in many automated computer vision applications.
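One simple way to partition pixels into object and background is the iterative basic global thresholding algorithm. A sketch on a flat list of intensities; `delta_t` is an assumed stopping tolerance and the function names are illustrative:

```python
def basic_global_threshold(pixels, delta_t=0.5):
    """Iterative basic global thresholding.

    1. Start with T = mean intensity.
    2. Split pixels into G1 (> T) and G2 (<= T).
    3. Set T = (mean(G1) + mean(G2)) / 2.
    4. Repeat until T changes by less than delta_t.
    """
    T = sum(pixels) / len(pixels)
    while True:
        g1 = [p for p in pixels if p > T]
        g2 = [p for p in pixels if p <= T]
        if not g1 or not g2:          # all pixels on one side: nothing to separate
            return T
        new_T = (sum(g1) / len(g1) + sum(g2) / len(g2)) / 2
        if abs(new_T - T) < delta_t:
            return new_T
        T = new_T


def segment(pixels, T):
    """Label pixels above T as object (1) and the rest as background (0)."""
    return [1 if p > T else 0 for p in pixels]


pixels = [10, 10, 12, 200, 210, 205]
T = basic_global_threshold(pixels)
print(round(T, 2), segment(pixels, T))
```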

(b) Explain the method of thresholding for image segmentation.

(c) Explain how histogram is used in image thresholding.

a. Associate each histogram shown in Figure-7(d-f) with an image shown in Figure-7(a-c).

b. Write down the steps to get the image in Figure-7(c) from the image in Figure-7(a).

c. Can we reconstruct any image from its histogram? Justify your answer.

d. Explain the terms hue and saturation for a color image.

e. Describe the procedures to convert colors from RGB to HSI and from HSI to RGB.

f. Explain the color complements of a color image with figure.

g. Why is image segmentation necessary? Explain the process of detecting a -45° line in the image using the appropriate mask.

h. How can thresholding be applied in image segmentation? Illustrate the basic global thresholding algorithm for image segmentation.

i. Explain how histogram is used in image thresholding.

j. What do you mean by compression of an image? Why do we require

image compression?

k. Suppose there are six symbols a, b, c, d, e, f and their probabilities are 0.5, 0.3, 0.1, 0.06, 0.3 and 0.01 respectively. Encode and decode these symbols with Huffman coding.

l. Draw the block diagram of lossless predictive coding model.
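A sketch of Huffman encoding and decoding for the symbols in question (k). The probabilities as printed sum to 1.27, so one of them is probably a typo; Huffman's algorithm only compares weights, so the sketch uses them as given. Ties (b and e both have 0.3) can be broken either way, which changes individual codewords but not the average code length. Function names are illustrative:

```python
import heapq
from itertools import count


def huffman_code(weights):
    """Build a Huffman code {symbol: bitstring} from {symbol: weight}."""
    tiebreak = count()                          # keeps heap comparisons away from the dicts
    heap = [(w, next(tiebreak), {s: ""}) for s, w in weights.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        w1, _, codes1 = heapq.heappop(heap)     # the two least probable subtrees
        w2, _, codes2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in codes1.items()}
        merged.update({s: "1" + c for s, c in codes2.items()})
        heapq.heappush(heap, (w1 + w2, next(tiebreak), merged))
    return heap[0][2]


def encode(message, code):
    return "".join(code[s] for s in message)


def decode(bits, code):
    """Read bits until they match a codeword (valid because the code is prefix-free)."""
    reverse = {c: s for s, c in code.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in reverse:
            out.append(reverse[current])
            current = ""
    return "".join(out)


probabilities = {"a": 0.5, "b": 0.3, "c": 0.1, "d": 0.06, "e": 0.3, "f": 0.01}
code = huffman_code(probabilities)
bits = encode("abcdef", code)
print(code)
print(bits, "->", decode(bits, code))
```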

Question Set-08

a. Illustrate the applications of morphological operations in image processing with examples.

b. Explain opening operation with examples.

c. Define hit, fit and structuring element. Explain the effects of the structuring element in erosion.

d. Define compression ratio. How are the shift codes generated?

e. State the principle of Huffman coding.

f. Mention the limitations of Huffman coding.

g. Define image segmentation and mention its necessity. Explain the basic global thresholding method.

h. Differentiate between single and multi-value thresholding with examples.

i. Write morphological algorithms for (i) convex hull, (ii) thinning and (iii) thickening.

j. Write the basic formulation of region-based segmentation.

k. Briefly discuss hit-or-miss transformation.

l. Discuss boundary extraction algorithm using morphological processing.

m. What are the uses of the opening and closing morphological operations?

n. How can we segment an image based on finding the regions directly?

o. What do you mean by lossy compression and lossless compression?
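Several of the questions above (erosion with a structuring element, opening, closing, boundary extraction) can be illustrated with a small binary-morphology sketch. It uses a 3×3 square structuring element and treats pixels outside the image as background; both are assumptions, and the function names are illustrative:

```python
SE = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]   # 3x3 square structuring element


def erode(A, se=SE):
    """A ⊖ B: keep a pixel only if B, centred there, fits entirely inside A."""
    rows, cols = len(A), len(A[0])

    def fits(r, c):
        return all(0 <= r + dr < rows and 0 <= c + dc < cols and A[r + dr][c + dc]
                   for dr, dc in se)

    return [[1 if fits(r, c) else 0 for c in range(cols)] for r in range(rows)]


def dilate(A, se=SE):
    """A ⊕ B: set a pixel if the reflected B, centred there, hits at least one pixel of A."""
    rows, cols = len(A), len(A[0])

    def hits(r, c):
        return any(0 <= r - dr < rows and 0 <= c - dc < cols and A[r - dr][c - dc]
                   for dr, dc in se)

    return [[1 if hits(r, c) else 0 for c in range(cols)] for r in range(rows)]


def opening(A, se=SE):
    """A ∘ B = (A ⊖ B) ⊕ B: removes objects smaller than B, keeps larger shapes."""
    return dilate(erode(A, se), se)


def closing(A, se=SE):
    """A • B = (A ⊕ B) ⊖ B: fills gaps and holes smaller than B."""
    return erode(dilate(A, se), se)


def boundary(A, se=SE):
    """β(A) = A − (A ⊖ B): pixels of A removed by one erosion."""
    E = erode(A, se)
    return [[a & (1 - e) for a, e in zip(ra, re)] for ra, re in zip(A, E)]


# A 5x5 square in a 9x9 image, plus a one-pixel speck of noise.
A = [[1 if 1 <= r <= 5 and 1 <= c <= 5 else 0 for c in range(9)] for r in range(9)]
B = [row[:] for row in A]
B[7][7] = 1
for row in boundary(A):
    print("".join("#" if p else "." for p in row))
```

Opening B removes the speck while leaving the square intact, which is the usual reason opening is used for noise removal.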