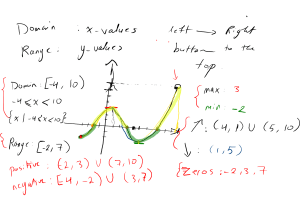

Sign Language Translation using Vision Transformers: A Cross-language Approach

This research explores automated sign language translation by integrating advanced artificial intelligence techniques. It focuses on the development and assessment of a novel cross-language training approach, which involves training AI models on one sign language and then fine-tuning them on another. This method aims to leverage shared features and patterns across different sign languages, thereby enhancing the model's ability to accurately translate sign language into text. The study uses Vision Transformers (ViT) and the RoBERTa language model to interpret and translate sign language from videos. Two datasets, LSA64 for Argentinian Sign Language and RWTH-PHOENIX-Weather 2014 T for German Sign Language, are used to train and fine-tune the model. The effectiveness of the model is evaluated through a series of metrics, including ROUGE-2 scores. The study demonstrates noticeable improvements in translation accuracy. Data scarcity is the biggest problem in sign language translation, and this approach could help address it.

CHAPTER 1 INTRODUCTION

Sign Language

Sign language is a mode of communication used by the deaf and hard-of-hearing community. Unlike spoken languages, which rely on sound, sign language uses hand gestures, facial expressions, and body movements to convey messages. Each country typically has its own sign language, much as people in different countries speak different languages. For instance, American Sign Language (ASL) is used in the United States, while British Sign Language (BSL) is used in the United Kingdom, and the two differ substantially. Sign language goes beyond hand signals; it is a rich and detailed language that can express anything spoken language can, from casual conversations to deep emotions and complex discussions.
Sign Language Translation

Sign language translation serves as a link between two distinct realms of communication. Just as we translate Spanish or Chinese into English or French, sign language translation converts the gestures and expressions of sign language into written or spoken words. This plays an important role in facilitating communication between individuals who are deaf or hard of hearing and those who do not understand sign language. Traditionally, human interpreters have fulfilled this role, but their availability is not always guaranteed. With advancements in technology, we are now witnessing the development of computer systems that can "observe" someone using sign language and translate it into text or speech. Although still in its early stages, this technology aims to make conversations more convenient and inclusive for everyone, regardless of their preferred mode of communication.

Challenges in Sign Language Translation

A key challenge in translating sign language is the scarcity of data. Unlike spoken languages, which have vast amounts of written text and recorded speech available for training translation systems, sign language resources are limited. This is because sign languages have traditionally relied on visual and gestural communication rather than written or audio records. Finding enough sign language video data that covers varied expressions, gestures, and contexts is difficult. This scarcity makes it challenging to train computer systems to accurately recognize and translate sign languages. Each sign language, being unique to its region, faces this data shortage, which hinders the development of effective and versatile translation tools.

A Cross-language Approach

Given the lack of sufficient data for each sign language, this research introduces an innovative approach: cross-language methods. This strategy is relatively unexplored in the field.
The idea is to leverage the knowledge from one sign language to aid in the translation of another, capitalizing on shared patterns and characteristics found across different sign languages. This approach could change how we tackle the data scarcity problem, turning the challenge into an opportunity for innovation. By exploring this territory, this research seeks to advance the capabilities of sign language translation technology in a field where such cross-language methods have not been thoroughly researched before.

Problem Statement

The main challenge in translating sign language using computers is that there is not enough video data of people using sign language. This makes it hard to create computer programs that can understand and translate sign languages accurately. In addition, since each country has its own sign language, such as American Sign Language (ASL) in the U.S. and British Sign Language (BSL) in the U.K., it is difficult to build a translation tool that works for all of them. This research tackles the problem by trying out a new idea: using what is learned from one sign language to help translate others. This method has not been thoroughly explored before. The aim is to build a system that can understand different sign languages better, even when little data is available for each one. This could help create better translation tools, making it easier for people who are deaf or hard of hearing to communicate with everyone else.

CHAPTER 2 LITERATURE REVIEW

In this chapter, we take a closer look at the important research and developments in the field of sign language translation and AI technology. We start by looking at how sign language has traditionally been translated, noting what has worked well and what has not.
Then, we examine how artificial intelligence, with a focus on Vision Transformers, has begun to change our approach to understanding and interpreting visual communication. We also review studies that have used insights from one language to improve comprehension in another, a strategy known as the cross-language approach. This is important for understanding how the idea can be applied to sign language. Finally, this chapter covers the latest advancements in using AI for translating sign language, pointing out the progress made as well as the challenges that still need to be solved.

Traditional Methods of Sign Language Translation

Traditional methods of translating sign language have played a role in facilitating communication for the deaf and hard-of-hearing community. The most common approach involves skilled human interpreters who translate sign language into spoken or written language in real time. While this method is effective, it faces challenges such as the limited number of qualified interpreters and variations in interpretation quality [1]. To overcome these limitations, different manual transcription systems have been developed, including Stokoe Notation, HamNoSys, and SignWriting. These systems aim to capture the complexities of sign language, such as handshapes, movements, and facial expressions [2]. However, due to their complexity and limited usage outside research settings, they are not widely practical. Furthermore, early forms of computer-assisted interpretation, including video relay services (VRS) and video remote interpreting (VRI), have been employed. These technologies use video conferencing tools to facilitate interpretation but are limited by the quality of the technology and the interpreter's skill, and they fall short of a fully automated solution [3].
Additionally, sign language dictionaries and learning resources have been invaluable in education and self-study. These resources provide visual references for signs but are not designed for real-time dynamic translation [4]. Despite their foundational role, traditional methods reveal significant limitations, particularly in real-time applicability and capturing the full spectrum of sign language nuances. This highlights the need for more advanced solutions, such as AI-driven methods and Vision Transformers, to improve communication accessibility.

Advances in AI for Sign Language Translation

The field of sign language translation has witnessed significant advancements through the integration of artificial intelligence (AI). Initially, the focus was on traditional machine learning techniques. The advent of deep learning, particularly Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), marked a significant milestone. CNNs, known for their ability to process visual data, have been instrumental in interpreting the visual components of sign language, such as hand gestures and movements [5]. RNNs, on the other hand, excel in understanding sequential data, making them suitable for comprehending the temporal dynamics of sign language [6]. However, the recent emergence of Vision Transformers has brought a new dimension to AI's capability in this domain. Unlike their predecessors, Vision Transformers process image inputs by understanding the spatial-temporal patterns, offering nuanced and sophisticated interpretations of sign language [7]. This innovation presents a leap forward from the earlier CNN and RNN models, enabling more accurate and context-aware translations [8]. Alongside these technological advancements, there has been a concerted effort to create comprehensive datasets for sign language.
Such datasets are crucial for training AI models and include diverse expressions and contexts of sign languages, contributing to the overall accuracy and effectiveness of translation systems [9].

Vision Transformers: Concepts and Applications

Vision Transformers (ViTs) represent a significant evolution in the field of artificial intelligence, particularly in the realm of computer vision. Originating from the transformer models that revolutionized natural language processing, ViTs adapt the transformer architecture for visual data analysis. Unlike traditional Convolutional Neural Networks (CNNs), which process images through filters and pooling layers, ViTs decompose an image into a sequence of patches and use self-attention mechanisms to model the relationships between these patches. This approach allows them to capture both local and global features of an image, leading to a more comprehensive understanding of the visual content [10].

The application of ViTs extends beyond standard image classification tasks. In the context of human action recognition, ViTs have shown remarkable potential. They are particularly adept at processing sequential and spatial-temporal data, making them well-suited for interpreting the dynamic and complex nature of human actions. By analyzing video data as frames, ViTs can pick up subtle nuances in human actions [11].

Beyond human action recognition, ViTs have found applications in various fields. In medical imaging, for example, they assist in diagnosing diseases by analyzing X-rays, MRI scans, and other medical images with high accuracy. In autonomous vehicles, ViTs contribute to a better understanding of the vehicle's surroundings, improving navigation and safety features. Additionally, in the realm of surveillance and security, ViTs enhance the capability of systems to detect and recognize objects and activities, thereby bolstering security measures [12].
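The patch decomposition described above for ViTs can be illustrated with a minimal sketch. It covers only the step of turning an image into a sequence of flattened patches; the linear projection, position embeddings, and self-attention layers of an actual ViT are omitted. The 224 x 224 image size, the 16 x 16 patch size, and the function name `split_into_patches` are illustrative assumptions rather than settings taken from this study.

```python
from typing import List

Image = List[List[float]]  # grayscale image stored as rows of pixel values


def split_into_patches(image: Image, patch_size: int) -> List[List[float]]:
    """Split an H x W image into flattened, non-overlapping patch vectors."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch_size x patch_size block row by row.
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# Assumed sizes: a 224 x 224 image with 16 x 16 patches gives a 14 x 14 grid.
toy_image = [[float(r * 224 + c) for c in range(224)] for r in range(224)]
tokens = split_into_patches(toy_image, 16)
print(len(tokens), len(tokens[0]))  # number of patches, values per patch
```

With these assumed sizes the image becomes a sequence of 196 patches of 256 values each, and it is this sequence that the self-attention layers operate on.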
As research continues, the potential applications of Vision Transformers are expanding, offering promising advancements in various sectors. Their ability to handle complex visual data with remarkable accuracy and efficiency marks them as a groundbreaking technology in the AI landscape.

Research Gap

In the field of sign language translation using AI models, a major challenge is the scarcity of data. Most AI models for sign language translation are constrained by the limited availability of extensive datasets for each specific sign language. This scarcity significantly impacts the effectiveness and accuracy of these models. To tackle this issue, this research introduces a cross-language approach as a potential solution. The proposed method involves initially training AI models on one sign language and subsequently fine-tuning them on another. This technique is designed to leverage the features and patterns learned from the first language to enhance the translation capabilities for the second language, thereby mitigating the inherent data limitations.

The implementation of a cross-language approach could mark a significant improvement in the performance of AI translation models for sign language. It presents a strategic solution to the critical problem of data scarcity. By addressing this research gap, the study aims to make strides toward more accurate and efficient translation tools in the realm of AI and sign language.

CHAPTER 3 METHODOLOGY

This chapter outlines the methodology adopted for the research, focusing on a cross-language approach to AI-driven sign language translation. It details the journey from data collection to model evaluation metrics, emphasizing the application of Vision Transformers.
The methodology is designed to tackle data scarcity in sign language translation models, illustrating the integration of advanced AI techniques with a novel cross-language strategy. This approach is intended to enhance both the accuracy and adaptability of sign language translation, addressing the key challenges identified in the field.

Data Collection

In this research, two distinct, publicly available datasets are used: LSA64, a dataset for Argentinian Sign Language, and RWTH-PHOENIX-Weather 2014 T, which focuses on German Sign Language in the context of weather forecasting.

1. LSA64: A Dataset for Argentinian Sign Language

The LSA64 dataset is designed for Argentinian Sign Language (LSA) and consists of 3200 videos in which 10 subjects perform 64 different common signs, including verbs and nouns. It was recorded in two phases: the first with 23 one-handed signs outdoors under natural light, and the second with 41 signs (22 two-handed and 19 one-handed) indoors under artificial light. Subjects wore black clothes and fluorescent-colored gloves against a white background, which simplifies hand segmentation. The signs were performed with minimal constraints to ensure diversity, and all subjects were non-signers and right-handed. The recordings used a Sony HDR-CX240 camera, producing videos at a resolution of 1920x1080 at 60 frames per second.

2. RWTH-PHOENIX-Weather 2014 T

The RWTH-PHOENIX-Weather 2014 T dataset is a benchmark for continuous sign language recognition and translation, featuring recordings from the German TV station PHOENIX. Over three years (2009-2011), daily news and weather forecasts with sign language interpretation were recorded. A subset of 386 editions was transcribed using gloss notation, with German speech transcribed through automatic speech recognition and manual cleaning. The videos, recorded at 25 frames per second with a frame size of 210x260 pixels, feature interpreters in dark clothes against a gray background.
Model Architecture

The model architecture uses a specialized Video Vision Transformer (ViViT) as the encoder, specifically designed to handle the dynamic nature of video data. Unlike traditional ViTs, which process static images by dividing them into patches, the Video Vision Transformer introduces an additional step to capture the temporal dimension present in videos. This is achieved through a process known as Tubelet Embedding.

Figure: Vision Transformer - dividing images into patches

In this process, instead of treating each frame in isolation, the Video Vision Transformer extracts volumes from the video clip. These volumes are akin to three-dimensional patches that include both spatial and temporal data: essentially, a sequence of patches across consecutive frames. By doing so, it creates a series of tokens that encapsulate the movement and changes over time, which are crucial for understanding the nuances of sign language in video form.

Figure: Video Vision Transformer - Tubelet Embedding

Once these video tokens, which now contain rich temporal information, are obtained, they are flattened and fed into the transformer encoder. This allows the encoder to perform self-attention not just over the spatial dimensions of the signing, but also across the sequence of frames, capturing the flow and transitions of the signing actions.

For decoding, the model employs a transformer architecture similar to RoBERTa, chosen for its effectiveness in language comprehension and generation. This decoder is built with multiple transformer blocks, including a cross-attention layer that is pivotal for integrating the encoder's visual output. The cross-attention allows the decoder to reference the encoded visual information and generate relevant text output. During the training phase, the model learns to predict subsequent tokens based on the visual input and the preceding textual context.
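The training objective just described, predicting each subsequent token from the preceding text, is commonly set up by shifting the target sentence by one position. The sketch below illustrates that shift under stated assumptions: the token ids, the start and end ids, and the function name `shift_for_teacher_forcing` are hypothetical, and the visual input that the decoder also conditions on through cross-attention is left out.

```python
from typing import List, Tuple


def shift_for_teacher_forcing(token_ids: List[int]) -> Tuple[List[int], List[int]]:
    """Return (decoder_inputs, labels) for next-token prediction."""
    if len(token_ids) < 2:
        raise ValueError("need at least a start token and an end token")
    decoder_inputs = token_ids[:-1]  # everything except the final token
    labels = token_ids[1:]           # the same sequence shifted left by one
    return decoder_inputs, labels


# Hypothetical ids: 0 = start token, 2 = end token, the rest are words.
sentence = [0, 17, 42, 8, 2]
inputs, labels = shift_for_teacher_forcing(sentence)
for step, target in enumerate(labels):
    # At each step the decoder sees inputs[: step + 1] and must predict target.
    print(step, inputs[: step + 1], "->", target)
```

At every position the decoder is given the true preceding tokens rather than its own earlier predictions, which is why this setup is usually called teacher forcing.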
For inference, the model generates text sequentially, using the decoder's output from one step as the input for the next, and continues this process until it produces an [EOS] token, denoting the end of the sentence. By combining the visual processing of Vision Transformers with the language fluency of language models like RoBERTa, the architecture provides a tool for video-to-text translation that is intended to render complex visual scenes into accurate and meaningful text.

Training Process

1. Fine-tune the encoder

The training process begins with a pre-trained Video Vision Transformer model, specifically "google/vivit-b-16x2-kinetics400". This model was initially trained on the Kinetics-400 dataset, which includes a wide variety of human activities, and has learned a rich representation of human movements and actions. The choice of this pre-trained model is strategic, as it provides a solid foundation of visual features that are relevant for understanding the complexities of sign language.

Upon integrating the pre-trained Video Vision Transformer as the encoder, the entire model is initially frozen. This means that the weights of the model are not updated during training at this stage. To further optimize the model for the target dataset, a series of experiments is conducted by selectively unfreezing higher-level layers of the model. These layers, being closer to the output, are more specialized in detecting patterns relevant to the task at hand, in this case sign language translation. Once unfrozen, these layers become trainable, and the model can update their weights based on its performance on the LSA64 dataset. This allows the model to refine its feature extraction abilities. The training process is iterative, involving cycles of training, evaluation, and adjustment.
At each iteration, the model is assessed for its accuracy and its ability to generalize across the dataset. Adjustments are made based on the model's performance, which may include further unfreezing of layers or tweaking other training parameters such as the learning rate or batch size. Throughout the training process, various metrics are monitored to gauge the model's performance. These include the loss, accuracy, precision, and F1 score, which provide insight into how well the model is interpreting the sign language videos. The best-performing model configuration, determined through these metrics, is selected as the final encoder. This training process ensures that the pre-trained "google/vivit-b-16x2-kinetics400" Video Vision Transformer model is tuned to extract specific characteristics from sign language videos, enabling cross-language sign language translation.

2. Fine-tune the decoder

In this research, the RoBERTa architecture is employed as the decoder, functioning as a language model tailored for the task of sign language translation. The decoder's fine-tuning process is conducted using a specific subset of the RWTH-PHOENIX-Weather 2014 T dataset, which includes 7096 German translation sentences corresponding to sign language videos. The vocabulary for this task comprises 2887 words, providing a rich language foundation for the model to learn from.

An important advantage of this focused training approach is the efficiency it brings to the overall training process. As the self-attention layers are refined to better represent sentence structure, the complexity and time required for training the model are significantly reduced. Consequently, when the model progresses to the task of translating sign language, the optimizer can dedicate more resources to fine-tuning the cross-attention layers.
These layers are crucial for integrating the visual input from the encoder with the language information in the decoder, leading to a more seamless translation process.

3. Training the Vision Encoder Decoder

After fine-tuning the encoder and decoder, the Vision Encoder Decoder module of the Hugging Face Transformers library is used to combine them. The two parts are connected by a cross-attention layer, which helps the model attend to the relevant parts of the video while it translates what it sees into words. Training this combined model improves its ability to understand sign language videos and turn them into coherent written sentences.

Evaluation Metrics

For the encoder, which processes video input, the metrics of accuracy, precision, recall, and F1 score are used, alongside tracking the training and validation loss. These metrics provide a multi-dimensional view of the encoder's performance, helping to verify that it accurately and reliably interprets the visual data from sign language videos.

When fine-tuning the decoder, which is responsible for generating text, the focus shifts to monitoring the training and validation loss. This helps in understanding how well the decoder is learning the structure of the target language. Additionally, random fill-mask tests are conducted on the decoder. These tests involve randomly masking words in sentences and having the decoder predict them, which further evaluates its understanding and generation of language.

For the final sign language translation model, which combines the encoder and decoder, the ROUGE-2 (Recall-Oriented Understudy for Gisting Evaluation) metric is applied. ROUGE-2 is suited to evaluating translation and text generation models, as it measures the overlap of bigrams between the generated text and a reference text, providing insight into the model's translation accuracy and fluency.
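The bigram overlap behind ROUGE-2 can be made concrete with a short sketch. It is a simplified illustration, not the evaluation code used in this study: it assumes lowercasing and whitespace tokenization, and the function names `bigrams` and `rouge_2` are hypothetical. Standard ROUGE packages apply their own tokenization and optional stemming, so their scores may differ. The sentence pair is one of the reference and generated pairs reported in the results chapter.

```python
from collections import Counter
from typing import Dict


def bigrams(text: str) -> Counter:
    """Count adjacent word pairs after lowercasing and whitespace splitting."""
    words = text.lower().split()
    return Counter(zip(words, words[1:]))


def rouge_2(reference: str, candidate: str) -> Dict[str, float]:
    """Return ROUGE-2 precision, recall and F-measure for one sentence pair."""
    ref_counts, cand_counts = bigrams(reference), bigrams(candidate)
    # Clipped overlap: a bigram counts at most as often as it occurs in both.
    overlap = sum((ref_counts & cand_counts).values())
    precision = overlap / max(sum(cand_counts.values()), 1)
    recall = overlap / max(sum(ref_counts.values()), 1)
    f_measure = 0.0
    if precision + recall > 0:
        f_measure = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f": f_measure}


reference = "am tag scheint verbreitet die sonne hier und da ein paar quellwolken"
candidate = "Während der Sonne scheinen hier und da Wolken"
scores = rouge_2(reference, candidate)
print(scores)
```

Under these assumptions the only shared bigrams are "hier und" and "und da". The generated sentence has 7 bigrams and the reference has 11, so precision is 2/7, recall is 2/11, and the F-measure is 2/9 (about 0.22).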
CHAPTER 4 RESULTS AND DISCUSSION

Analysis of Model Performance

Fine-tune the encoder

After fine-tuning the ViViT encoder on the LSA64 dataset, which contains 3200 video clips of Argentinian Sign Language across 64 sign categories, the model achieved promising results. The process, spanning 30 epochs with a batch size of 8, was tailored to accommodate the large dataset size, hence the lower number of epochs.

The accuracy graph shows a steady increase before plateauing, indicating that the model quickly learned to classify the signs correctly and then maintained a high level of performance. This suggests that the model was able to distinguish between different signs with a high degree of reliability.

Similarly, the precision graph shows a consistent ascent followed by leveling off, which signifies that when the model predicted a particular sign category, it was correct most of the time. This is important because it means the model was not just guessing signs; it was making precise predictions.

By experimenting with unfreezing multiple layers during training, the model learned general sign language features and adapted to the nuances of the LSA64 dataset. The final model achieved:

1. Accuracy: 0.906250
2. Precision: 0.941570
3. Recall: 0.906250 (measures how well the model identifies all relevant instances)
4. F1 score: 0.910188 (harmonic mean of precision and recall)

These figures collectively indicate a well-performing model that is both accurate and reliable in recognizing and categorizing Argentinian Sign Language signs.

In simpler terms, the model learned effectively from the videos. It could tell the signs apart well, made few mistakes, and was consistent in its predictions. The careful training approach, in which layers of the model were gradually unfrozen, appears to have helped the model generalize rather than simply memorize the signs.
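Two features of the reported encoder figures are worth noting: recall equals accuracy (both 0.906250), and the F1 score (0.910188) differs from the harmonic mean of the reported precision and recall, which would be roughly 0.924. Both are what one would expect if per-class scores were averaged with weights proportional to class frequency, although the averaging mode is not stated in this study and is assumed here. The sketch below uses made-up labels and a hypothetical `weighted_metrics` helper to show the effect.

```python
from collections import Counter
from typing import Dict, List


def weighted_metrics(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Accuracy plus support-weighted precision, recall and F1."""
    total = len(y_true)
    support = Counter(y_true)      # true samples per class
    predicted = Counter(y_pred)    # predictions per class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    precision = recall = f1 = 0.0
    for label, n_true in support.items():
        p = correct[label] / predicted[label] if predicted[label] else 0.0
        r = correct[label] / n_true
        f = 2 * p * r / (p + r) if p + r else 0.0
        weight = n_true / total    # class share of the evaluation set
        precision += weight * p
        recall += weight * r
        f1 += weight * f
    return {
        "accuracy": sum(correct.values()) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Made-up labels for three classes, for illustration only.
y_true = [0, 0, 0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 1, 2, 2, 0]
metrics = weighted_metrics(y_true, y_pred)
harmonic = 2 * metrics["precision"] * metrics["recall"] / (
    metrics["precision"] + metrics["recall"]
)
print(metrics)
print(harmonic)
```

On this toy data the weighted recall and the accuracy are both 0.8, while the weighted F1 (about 0.797) is lower than the harmonic mean of the aggregate precision and recall (about 0.812). The F1 score is a harmonic mean within each class, but that relation need not hold after averaging across classes.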
Training the Vision Encoder Decoder

The graph of training and validation losses was obtained from training the vision encoder-decoder model on 1000 video samples for 24 epochs. Due to high RAM demands, a batch size of 2 was used, and an adaptive learning rate was introduced after the fifth epoch to address overfitting. Although overfitting was mitigated, the graph indicates that it is still present, as the validation loss plateaus above the training loss.

To reduce overfitting further, several strategies can be considered:

Regularization Techniques: Regularization methods such as dropout or L1/L2 regularization may be applied. These methods discourage complexity in the model by adding a penalty for large weights to the loss function or by randomly omitting neurons during training.

Early Stopping: Incorporating early stopping in the training regimen is advised. This technique halts training when an increase in validation loss is observed, signaling the onset of overfitting.

Cross-Validation: Using cross-validation could give a more reliable estimate of how well the model generalizes across different data subsets.

Model Simplification: A reduction in the model's complexity might be warranted. This could be achieved by decreasing the number of layers or the number of units within each layer.

The results show that an initial decrease in loss was achieved, suggesting successful learning. However, the failure of the validation loss to continue decreasing alongside the training loss after epoch 5, despite the adaptive learning rate, signals residual overfitting. Implementing the strategies above could lead to a further reduction in validation loss, indicative of a model with better generalization.

Impact of Cross-language Training

This section discusses how training the encoder on the LSA64 Argentinian Sign Language dataset influences the model's performance.
For this evaluation, 100 randomly selected, unseen videos from the unused samples of the RWTH-PHOENIX-Weather 2014 T dataset served as a test set. Two versions of the model were compared: one with an encoder fine-tuned on the LSA64 dataset, representing the proposed method, and another without this fine-tuning.

The results, as depicted in the provided graph, show the ROUGE measures for both models. Although the ROUGE scores for precision, recall, and F-measure are relatively low for both models, the model with cross-language training exhibits a noticeable improvement across all three metrics. This suggests that even though the overall scores are modest, the cross-language approach has a positive effect on the model's ability to translate sign language into text.

Specifically, the higher precision and recall suggest that the fine-tuned model captures more of the content of the signed sentences. Precision reflects the model's ability to produce relevant translations, while recall captures its capacity to identify all pertinent information. The F-measure, which combines precision and recall, indicates that the fine-tuning process contributes to a more balanced and effective translation model.

In short, the model whose encoder learned from the Argentinian Sign Language videos did a better job of understanding and translating previously unseen German Sign Language videos. Even though there is still considerable room for improvement, fine-tuning the encoder with videos from a different sign language improved translation performance.

The following table shows a comparison between reference sentences and those generated by the model.
| Video ID from RWTH-PHOENIX-Weather 2014 T Dataset | Reference Sentence (German) | Reference Sentence (English Translation) | Generated Sentence (German) | Generated Sentence (English Translation) |
| --- | --- | --- | --- | --- |
| 13July_2010_Tuesday_tagesschau-4309 | am tag scheint verbreitet die sonne hier und da ein paar quellwolken | During the day, the sun shines, a few clouds here and there | Während der Sonne scheinen hier und da Wolken | During the sun shines clouds here and there |
| 05August_2010_Thursday_tagesschau-4257 | auch gewitter sind dabei | Thunderstorms are also present | Gewitter werden | Thunderstorms will be |
| 05December_2011_Monday_tagesschau-4179 | dort regnet oder schneit es auch am tag | It rains or snows there even during the day | Tagsüber regnet es dort | rains there during the day |
| 05December_2011_Monday_tagesschau-4174 | tiefer luftdruck bestimmt unser wetter | Low air pressure determines our weather | Luft hier ist das Wetter | air here is the weather |
| 10April_2010_Saturday_tagesschau-4142 | auch schnee bleibt im bergland immer noch ein thema | Snow also still remains an issue in the mountains | Schnee hier in den Bergen | Snow present here in the mountains |

The comparison shows that while the model demonstrates a capacity for sign language translation, there are notable discrepancies between the reference and generated sentences. These inaccuracies highlight the model's current limitations in fully capturing the complexity of sign language communication. However, the potential for improvement is significant.

CHAPTER 5 CONCLUSION

This research found that cross-language training, in which the model learns from one sign language and is then fine-tuned on another, improved how well the model could translate sign language. Video datasets for Argentinian Sign Language and German Sign Language were used, and this method was found to improve the model's translations, even though they were not perfect.
The test results, including the ROUGE measures, showed that the model produced more accurate translations after being trained this way. This indicates that the idea works and could be used to build better sign language translation tools in the future.

The significance of this research lies in its contribution to the advancement of sign language translation technologies. By demonstrating the potential of cross-language training, it shows that models can be adapted to improve their translation accuracy across different sign languages. This approach holds promise for enhancing communication tools for the deaf and hard-of-hearing community, suggesting that with further refinement, such technologies could become more accessible and widely used.

5.1 Future Work

Several avenues for future work follow from the findings of this study. A critical goal will be to increase the prediction speed of the model to enable real-time translation, a feature essential for live conversations and broadcasts. Efforts could focus on optimizing the model architecture for faster processing without compromising accuracy. Implementing more efficient algorithms and taking advantage of hardware acceleration are potential strategies. Additionally, research into lightweight models that maintain high performance at lower computational cost could make real-time translation more accessible on a range of devices.

Expanding the datasets to include more sign languages and dialects could further test the robustness of the cross-language approach. Incorporating real-time feedback mechanisms that let users interact with and train the model could improve its practical application. Exploring the integration of other sensory data may also enhance translation accuracy for nuanced signs.
Advancements in deep learning architectures, such as transformer models tailored specifically for sign language, hold the potential for even greater accuracy and fluency. Lastly, developing user-friendly interfaces and conducting usability studies with deaf and hard-of-hearing people will be essential to ensure that the technologies developed are aligned with user needs and preferences.

REFERENCES

[1] H. Haualand, "Sign Language Interpreting: A Human Rights Issue," International Journal of Interpreter Education, vol. 1, no. 1, Nov. 2009. Available: https://tigerprints.clemson.edu/ijie/vol1/iss1/7/

[2] S. Kelsall, "Movement and Location Notation for American Sign Language," scholarship.tricolib.brynmawr.edu, 2006. Accessed: Jan. 19, 2024. [Online]. Available: https://scholarship.tricolib.brynmawr.edu/items/fc6fc685-bc03-4f46-8be7-a41a8e4440f2

[3] J. Napier, R. Skinner, and G. Turner, "'It's good for them but not so for me': Inside the sign language interpreting call center," The International Journal of Translation and Interpreting Research, vol. 9, no. 2, Jul. 2017, doi: https://doi.org/10.12807/ti.109202.2017.a01.

[4] D. Bragg, K. Rector, and R. E. Ladner, "A User-Powered American Sign Language Dictionary," Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing, Feb. 2015, doi: https://doi.org/10.1145/2675133.2675226.

[5] R. Rastgoo, K. Kiani, and S. Escalera, "Sign Language Recognition: A Deep Survey," Expert Systems with Applications, vol. 164, p. 113794, Feb. 2021, doi: https://doi.org/10.1016/j.eswa.2020.113794.

[6] I. Papastratis, C. Chatzikonstantinou, D. Konstantinidis, K. Dimitropoulos, and P. Daras, "Artificial Intelligence Technologies for Sign Language," Sensors (Basel, Switzerland), vol. 21, no. 17, p. 5843, Aug. 2021, doi: https://doi.org/10.3390/s21175843.

[7] B.-K. Ruan, H.-H. Shuai, and W.-H. Cheng, "Vision Transformers: State of the Art and Research Challenges," arXiv (Cornell University), Jul. 2022, doi: https://doi.org/10.48550/arxiv.2207.03041.

[8] A. Ulhaq, N. Akhtar, G. Pogrebna, and A. Mian, "Vision Transformers for Action Recognition: A Survey," arXiv.org, Sep. 12, 2022. https://arxiv.org/abs/2209.05700 (accessed Jan. 19, 2024).

[9] D. M. Madhiarasan and P. P. P. Roy, "A Comprehensive Review of Sign Language Recognition: Different Types, Modalities, and Datasets," arXiv:2204.03328 [cs], Apr. 2022. Available: https://arxiv.org/abs/2204.03328

[10] A. Dosovitskiy et al., "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale," arXiv:2010.11929 [cs], Oct. 2020.

[11] A. Ulhaq, N. Akhtar, G. Pogrebna, and A. Mian, "Vision Transformers for Action Recognition: A Survey," arXiv.org, Sep. 12, 2022. https://arxiv.org/abs/2209.05700 (accessed Jan. 19, 2024).

[12] K. Han et al., "A Survey on Vision Transformer," IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 1–1, 2022, doi: https://doi.org/10.1109/tpami.2022.3152247.