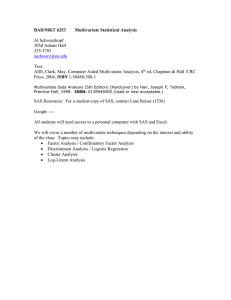

Statistics 6545

Multivariate Statistical Methods

2 Matrix Algebra and Random Vectors

2.1 Introduction

The study of multivariate methods, and of statistical methods in general, is greatly facilitated by the use of matrix algebra.


Robert Paige (Missouri S &T)

2.2 Some Basics of Matrix Algebra and Random Vectors

Data in a multivariate analysis can be represented as a matrix

\[
X = \begin{bmatrix}
x_{11} & x_{12} & \cdots & x_{1k} & \cdots & x_{1p} \\
x_{21} & x_{22} & \cdots & x_{2k} & \cdots & x_{2p} \\
\vdots & \vdots & & \vdots & & \vdots \\
x_{j1} & x_{j2} & \cdots & x_{jk} & \cdots & x_{jp} \\
\vdots & \vdots & & \vdots & & \vdots \\
x_{n1} & x_{n2} & \cdots & x_{nk} & \cdots & x_{np}
\end{bmatrix}
\]

Many calculations in multivariate analysis are best performed with matrices and vectors.

2.2.1 Vectors

An array $x$ of $n$ real numbers $x_1, \ldots, x_n$ is called a vector and is written as

\[
x = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}
\quad\text{or}\quad
x' = [x_1, x_2, \ldots, x_n]
\]

A vector can be represented geometrically as a directed line in $n$ dimensions.

A vector can be expanded or contracted by multiplying it by a constant $c$:

\[
cx = \begin{bmatrix} cx_1 \\ cx_2 \\ \vdots \\ cx_n \end{bmatrix}
\]

Example: Let $x = [0, 1]'$; then $4x = [4(0), 4(1)]' = [0, 4]'$.

Two vectors may be added:

\[
x + y = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} + \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix}
= \begin{bmatrix} x_1 + y_1 \\ x_2 + y_2 \\ \vdots \\ x_n + y_n \end{bmatrix}
\]

Example: If $x = [0, 1]'$ and $y = [1, 1]'$ then $x + y = [1, 2]'$.

A vector has both direction and length.

The length of a vector is defined as

\[
L_x = \sqrt{x_1^2 + x_2^2 + \cdots + x_n^2}
\]

For $n = 2$ the length of $x$ can be viewed as the hypotenuse of a right triangle.

Note that

\[
L_{cx} = \sqrt{c^2x_1^2 + c^2x_2^2 + \cdots + c^2x_n^2}
= \sqrt{c^2}\,\sqrt{x_1^2 + x_2^2 + \cdots + x_n^2}
= |c|\,L_x
\]

Example: If $x = [0, 1]'$ then $L_x = \sqrt{0^2 + 1^2} = 1$.

Another important concept is that of the angle between two vectors $x$ and $y$.

Suppose that $n = 2$ and that the angles associated with $x = [x_1, x_2]'$ and $y = [y_1, y_2]'$ are $\theta_1$ and $\theta_2$ respectively, with $\theta_1 < \theta_2$, so that the angle between $x$ and $y$ is $\theta = \theta_2 - \theta_1$.

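We have that

\[
\cos(\theta_1) = \frac{x_1}{L_x}, \quad \sin(\theta_1) = \frac{x_2}{L_x}, \quad
\cos(\theta_2) = \frac{y_1}{L_y}, \quad \sin(\theta_2) = \frac{y_2}{L_y}
\]

\[
\begin{aligned}
\cos(\theta) &= \cos(\theta_2 - \theta_1) \\
&= \cos(\theta_2)\cos(\theta_1) + \sin(\theta_2)\sin(\theta_1) \\
&= \frac{y_1}{L_y}\,\frac{x_1}{L_x} + \frac{y_2}{L_y}\,\frac{x_2}{L_x} \\
&= \frac{y_1x_1 + y_2x_2}{L_xL_y}
\end{aligned}
\]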

Here

\[
x'y = y_1x_1 + y_2x_2
\]

is the inner product of $x$ and $y$.

Note that

\[
L_x = \sqrt{x'x}
\]

so we have

\[
\cos(\theta) = \frac{y_1x_1 + y_2x_2}{L_xL_y} = \frac{x'y}{\sqrt{x'x}\,\sqrt{y'y}}
\]

Example: For $x = [0, 1]'$ and $y = [1, 1]'$,

\[
\cos(\theta) = \frac{(1)(0) + (1)(1)}{\sqrt{1}\,\sqrt{2}} = \frac{1}{\sqrt{2}}
\]
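The length, inner product, and angle formulas above can be checked numerically. The following is a minimal sketch in plain Python; the helper names `inner`, `length`, and `cos_angle` are illustrative choices and are not part of the course notes.

```python
import math

def inner(x, y):
    """Inner product x'y of two vectors with the same number of components."""
    return sum(a * b for a, b in zip(x, y))

def length(x):
    """Length L_x = sqrt(x'x)."""
    return math.sqrt(inner(x, x))

def cos_angle(x, y):
    """cos(theta) = x'y / (L_x L_y); both vectors must be nonzero."""
    return inner(x, y) / (length(x) * length(y))

# the vectors used in the example above
x = [0.0, 1.0]
y = [1.0, 1.0]
cos_theta = cos_angle(x, y)
theta_degrees = math.degrees(math.acos(cos_theta))
print(length(x), length(y), cos_theta, theta_degrees)
```

For these two vectors the cosine is $1/\sqrt{2}$, so the angle is 45 degrees up to floating-point rounding.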

Vectors $x$ and $y$ are perpendicular (orthogonal) when $x'y = 0$.

Example: If $x = [0, 1]'$ and $y = [1, 0]'$ then $x'y = 0$.

All of this holds for vectors with $n$ components.

A set of vectors $x_1, x_2, \ldots, x_k$ is linearly dependent if there exist constants $c_1, c_2, \ldots, c_k$, at least one of which is nonzero, such that

\[
c_1x_1 + \cdots + c_kx_k = 0
\]

where $0$ represents the vector of all zeroes.

When this is the case, at least one of the vectors can be written as a linear combination of the others.

If all the $c_i$ must be zero for

\[
c_1x_1 + \cdots + c_kx_k = 0
\]

then the set of vectors is linearly independent.

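Example: Are the vectors

\[
x_1 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}, \quad
x_2 = \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix}, \quad
x_3 = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}
\]

linearly dependent?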
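One way to work the question, offered here as a sketch rather than as the intended classroom answer: set $c_1x_1 + c_2x_2 + c_3x_3 = 0$ and read off the three components.

\[
\begin{aligned}
c_1 + c_2 + c_3 &= 0 \\
c_1 + c_2 &= 0 \\
c_1 &= 0
\end{aligned}
\]

The third equation gives $c_1 = 0$, the second then gives $c_2 = 0$, and the first gives $c_3 = 0$. Since all the $c_i$ must be zero, the three vectors are linearly independent.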

The space of all real $m$-tuples, with scalar multiplication and vector addition, is called a vector space.

Note that this is simply $m$-dimensional Euclidean space $\mathbb{R}^m$.

$\mathbb{R}^m$ is simply the linear span of its basis vectors (the set of all linear combinations of the basis vectors).

Example:

\[
\mathbb{R}^3 = \left\{ x : x = c_1\begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} + c_2\begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} + c_3\begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix} \right\}
\]

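Any set of $m$ linearly independent vectors is called a basis for the vector space of all $m$-tuples of real numbers.

Example: A basis for $\mathbb{R}^3$ is

\[
\begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \quad
\begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \quad
\begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}
\]

Every vector in $\mathbb{R}^m$ can be represented as a linear combination of a fixed basis.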

The orthogonal projection ("shadow") of a vector $x$ on $y$ is

\[
\text{Projection of } x \text{ on } y = \frac{x'y}{y'y}\,y = \frac{x'y}{L_y}\,\frac{y}{L_y}
\]

where $y/L_y$ is a unit vector that describes the direction of the projection, and

\[
\frac{x'y}{L_y} = L_x\,\frac{x'y}{L_yL_x} = L_x\cos(\theta)
\]

The length of the projection is

\[
|L_x\cos(\theta)| = L_x\,|\cos(\theta)|
\]

Example: For $x = [0, 1]'$ and $y = [1, 1]'$,

\[
\text{Projection of } x \text{ on } y = \tfrac{1}{2}\,y = [1/2, 1/2]'
\]

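Note that we can also define a projection matrix:

\[
\text{Projection of } x \text{ on } y = \frac{x'y}{y'y}\,y = y\,\frac{y'x}{y'y} = y\,(y'y)^{-1}y'x
\]

So then, for $x = [0, 1]'$ and $y = [1, 1]'$,

\[
y\,(y'y)^{-1}y' = \begin{bmatrix} 1 \\ 1 \end{bmatrix}\frac{1}{2}\,[1, 1]
= \begin{bmatrix} \tfrac12 & \tfrac12 \\ \tfrac12 & \tfrac12 \end{bmatrix}
\]

and

\[
\text{Projection of } x \text{ on } y =
\begin{bmatrix} \tfrac12 & \tfrac12 \\ \tfrac12 & \tfrac12 \end{bmatrix}
\begin{bmatrix} 0 \\ 1 \end{bmatrix}
= \begin{bmatrix} \tfrac12 \\ \tfrac12 \end{bmatrix}
\]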
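The projection formula and the projection matrix $y\,(y'y)^{-1}y'$ can be reproduced with exact rational arithmetic. This is a minimal sketch assuming integer input vectors; the function names `project`, `projection_matrix`, and `matvec` are hypothetical helpers introduced only for illustration.

```python
from fractions import Fraction

def inner(x, y):
    """Inner product x'y."""
    return sum(a * b for a, b in zip(x, y))

def project(x, y):
    """Orthogonal projection of x on y: (x'y / y'y) y, for nonzero integer y."""
    scale = Fraction(inner(x, y), inner(y, y))
    return [scale * yi for yi in y]

def projection_matrix(y):
    """The matrix y (y'y)^{-1} y'; multiplying it by x gives the projection."""
    yy = inner(y, y)
    return [[Fraction(yi * yj, yy) for yj in y] for yi in y]

def matvec(M, x):
    """Matrix-vector product M x."""
    return [inner(row, x) for row in M]

x = [0, 1]
y = [1, 1]
proj = project(x, y)
P = projection_matrix(y)
print(proj)
print(P)
print(matvec(P, x))
```

Both routes give the vector $[1/2, 1/2]'$ found in the example.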

If $y_1, y_2, \ldots, y_r$ are mutually orthogonal then the projection of vector $x$ on the linear span of $y_1, y_2, \ldots, y_r$ is given as

\[
\frac{x'y_1}{y_1'y_1}\,y_1 + \frac{x'y_2}{y_2'y_2}\,y_2 + \cdots + \frac{x'y_r}{y_r'y_r}\,y_r
\]

Gram-Schmidt Process: Given linearly independent vectors

\[
x_1, x_2, \ldots, x_k
\]

there exist mutually orthogonal vectors

\[
u_1, u_2, \ldots, u_k
\]

with the same linear span:

\[
\begin{aligned}
u_1 &= x_1 \\
u_2 &= x_2 - \frac{x_2'u_1}{u_1'u_1}\,u_1 \\
&\;\;\vdots \\
u_k &= x_k - \frac{x_k'u_1}{u_1'u_1}\,u_1 - \cdots - \frac{x_k'u_{k-1}}{u_{k-1}'u_{k-1}}\,u_{k-1}
\end{aligned}
\]

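Example:

\[
x_1 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}, \quad
x_2 = \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix}, \quad
x_3 = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}
\]

Then

\[
u_1 = x_1 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}
\]

\[
u_2 = x_2 - \frac{x_2'u_1}{u_1'u_1}\,u_1
= \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix} - \frac{2}{3}\begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}
= \begin{bmatrix} \tfrac13 \\ \tfrac13 \\ -\tfrac23 \end{bmatrix}
\]

\[
u_3 = x_3 - \frac{x_3'u_1}{u_1'u_1}\,u_1 - \frac{x_3'u_2}{u_2'u_2}\,u_2
= \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} - \frac{1}{3}\begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} - \frac{1/3}{6/9}\begin{bmatrix} \tfrac13 \\ \tfrac13 \\ -\tfrac23 \end{bmatrix}
= \begin{bmatrix} \tfrac12 \\ -\tfrac12 \\ 0 \end{bmatrix}
\]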
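The Gram-Schmidt recursion can be sketched in a few lines. The version below follows the formulas as stated (each $u_k$ subtracts the projections of $x_k$ on the earlier $u_j$) and uses exact fractions so the output can be compared with the hand calculation; `gram_schmidt` is an illustrative name, and the input vectors are assumed to be linearly independent.

```python
from fractions import Fraction

def inner(x, y):
    """Inner product x'y."""
    return sum(a * b for a, b in zip(x, y))

def gram_schmidt(vectors):
    """Return mutually orthogonal vectors spanning the same space as the
    (assumed linearly independent) input vectors."""
    us = []
    for x in vectors:
        u = [Fraction(v) for v in x]
        for prev in us:
            # coefficient x_k'u_j / u_j'u_j of the projection on an earlier u_j
            coef = inner(x, prev) / inner(prev, prev)
            u = [ui - coef * pi for ui, pi in zip(u, prev)]
        us.append(u)
    return us

x1, x2, x3 = [1, 1, 1], [1, 1, 0], [1, 0, 0]
u1, u2, u3 = gram_schmidt([x1, x2, x3])
print(u1, u2, u3)
print(inner(u1, u2), inner(u1, u3), inner(u2, u3))
```

All three pairwise inner products of the resulting vectors are zero, as the process requires.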

2.2.2 Matrices

A matrix is a rectangular array of numbers with $n$ rows and $p$ columns, for instance,

\[
\underset{(n\times p)}{A} = \begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1p} \\
a_{21} & a_{22} & \cdots & a_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
a_{n1} & a_{n2} & \cdots & a_{np}
\end{bmatrix}
\]

The transpose of a matrix $A$ changes its columns into rows and is denoted by $A'$.

The product of a constant $c$ and matrix $A$ is

\[
\underset{(n\times p)}{cA} = \begin{bmatrix}
ca_{11} & ca_{12} & \cdots & ca_{1p} \\
ca_{21} & ca_{22} & \cdots & ca_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
ca_{n1} & ca_{n2} & \cdots & ca_{np}
\end{bmatrix}
\]

If matrices $A$ and $B$ have the same dimensions then $A + B$ is defined and has $(i, j)$th entry $a_{ij} + b_{ij}$.

When $A$ is $(n \times k)$ and $B$ is $(k \times p)$ then the product $AB$ is defined, with $(i, j)$th entry equal to the inner product of the $i$th row of $A$ and the $j$th column of $B$:

\[
a_{i1}b_{1j} + a_{i2}b_{2j} + \cdots + a_{ik}b_{kj} = \sum_{l=1}^{k} a_{il}b_{lj}
\]

A square matrix ($n = p$) is symmetric if

\[
A = A'
\]

A square matrix $A$ has inverse $B$ if

\[
AB = I = BA
\]

where

\[
I = \begin{bmatrix}
1 & 0 & \cdots & 0 \\
0 & 1 & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & 1
\end{bmatrix}
\]

is the identity matrix.

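Example: Let

\[
A = \begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \end{bmatrix}, \qquad
B = A^{-1} = \begin{bmatrix} 0 & 0 & 1 \\ 0 & 1 & -1 \\ 1 & -1 & 0 \end{bmatrix}
\]

\[
AB = \begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \end{bmatrix}
\begin{bmatrix} 0 & 0 & 1 \\ 0 & 1 & -1 \\ 1 & -1 & 0 \end{bmatrix}
= \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}
\]

\[
BA = \begin{bmatrix} 0 & 0 & 1 \\ 0 & 1 & -1 \\ 1 & -1 & 0 \end{bmatrix}
\begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \end{bmatrix}
= \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}
\]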

The determinant of the square $k \times k$ matrix $A$ is the scalar given as

\[
|A| = a_{11} \quad \text{if } k = 1
\]

\[
|A| = \begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} = a_{11}a_{22} - a_{12}a_{21} \quad \text{if } k = 2
\]

and more generally

\[
|A| = \sum_{j=1}^{k} a_{ij}\,|A_{ij}|\,(-1)^{i+j}
\]

where $A_{ij}$ is the $(k - 1) \times (k - 1)$ matrix obtained by deleting the $i$th row and the $j$th column of $A$. The expansion may be taken along any fixed row $i$.

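Example:

\[
\begin{vmatrix} 1 & 1 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \end{vmatrix}
= 1\begin{vmatrix} 1 & 0 \\ 0 & 0 \end{vmatrix}
- 1\begin{vmatrix} 1 & 0 \\ 1 & 0 \end{vmatrix}
+ 1\begin{vmatrix} 1 & 1 \\ 1 & 0 \end{vmatrix}
= 0 - 0 - 1 = -1
\]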

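The inverse of any $2 \times 2$ invertible matrix

\[
A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}
\]

is

\[
A^{-1} = \frac{1}{|A|}\begin{bmatrix} a_{22} & -a_{12} \\ -a_{21} & a_{11} \end{bmatrix}
\]

The inverse of any $3 \times 3$ invertible matrix

\[
A = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}
\]

is

\[
A^{-1} = \frac{1}{|A|}\begin{bmatrix}
+\begin{vmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{vmatrix} &
-\begin{vmatrix} a_{12} & a_{13} \\ a_{32} & a_{33} \end{vmatrix} &
+\begin{vmatrix} a_{12} & a_{13} \\ a_{22} & a_{23} \end{vmatrix} \\[2ex]
-\begin{vmatrix} a_{21} & a_{23} \\ a_{31} & a_{33} \end{vmatrix} &
+\begin{vmatrix} a_{11} & a_{13} \\ a_{31} & a_{33} \end{vmatrix} &
-\begin{vmatrix} a_{11} & a_{13} \\ a_{21} & a_{23} \end{vmatrix} \\[2ex]
+\begin{vmatrix} a_{21} & a_{22} \\ a_{31} & a_{32} \end{vmatrix} &
-\begin{vmatrix} a_{11} & a_{12} \\ a_{31} & a_{32} \end{vmatrix} &
+\begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix}
\end{bmatrix}
\]

In general the $(j, i)$th entry of $A^{-1}$ is

\[
(-1)^{i+j}\,\frac{|A_{ij}|}{|A|}
\]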

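Example:

\[
A = \begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 0 \\ 1 & 0 & 0 \end{bmatrix}
\]

\[
A^{-1} = \frac{1}{-1}\begin{bmatrix}
+\begin{vmatrix} 1 & 0 \\ 0 & 0 \end{vmatrix} &
-\begin{vmatrix} 1 & 1 \\ 0 & 0 \end{vmatrix} &
+\begin{vmatrix} 1 & 1 \\ 1 & 0 \end{vmatrix} \\[2ex]
-\begin{vmatrix} 1 & 0 \\ 1 & 0 \end{vmatrix} &
+\begin{vmatrix} 1 & 1 \\ 1 & 0 \end{vmatrix} &
-\begin{vmatrix} 1 & 1 \\ 1 & 0 \end{vmatrix} \\[2ex]
+\begin{vmatrix} 1 & 1 \\ 1 & 0 \end{vmatrix} &
-\begin{vmatrix} 1 & 1 \\ 1 & 0 \end{vmatrix} &
+\begin{vmatrix} 1 & 1 \\ 1 & 1 \end{vmatrix}
\end{bmatrix}
= \begin{bmatrix} 0 & 0 & 1 \\ 0 & 1 & -1 \\ 1 & -1 & 0 \end{bmatrix}
\]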
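The cofactor formulas for the determinant and the inverse translate directly into code. The sketch below expands along the first row and builds the inverse entry by entry from $(-1)^{i+j}|A_{ij}|/|A|$; it assumes integer entries and is meant for small matrices only, since cofactor expansion becomes very slow as $k$ grows. The names `minor`, `det`, and `inverse` are illustrative.

```python
from fractions import Fraction

def minor(A, i, j):
    """The matrix A with row i and column j deleted (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]

def det(A):
    """Determinant by cofactor expansion along the first row."""
    if len(A) == 0:
        return 1          # convention for the empty minor of a 1 x 1 matrix
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))

def inverse(A):
    """Inverse whose (j, i) entry is (-1)^(i+j) |A_ij| / |A|."""
    d = det(A)
    if d == 0:
        raise ValueError("matrix is singular")
    k = len(A)
    return [[Fraction((-1) ** (i + j) * det(minor(A, i, j)), d) for i in range(k)]
            for j in range(k)]

A = [[1, 1, 1], [1, 1, 0], [1, 0, 0]]
print(det(A))
print(inverse(A))
```

For the example matrix this reproduces $|A| = -1$ and the inverse shown above.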

The inverse matrix $B$ exists if $a_1, \ldots, a_k$, the $k$ columns of $A = [a_1 \cdots a_k]$, are linearly independent.

It is very easy to find the inverse of a diagonal matrix with nonzero diagonal entries, for instance

\[
\begin{bmatrix}
a_{11} & 0 & 0 & 0 \\
0 & a_{22} & 0 & 0 \\
0 & 0 & a_{33} & 0 \\
0 & 0 & 0 & a_{44}
\end{bmatrix}^{-1}
= \begin{bmatrix}
1/a_{11} & 0 & 0 & 0 \\
0 & 1/a_{22} & 0 & 0 \\
0 & 0 & 1/a_{33} & 0 \\
0 & 0 & 0 & 1/a_{44}
\end{bmatrix}
\]

Orthogonal matrices are a class of matrices for which it is also easy to find an inverse. They satisfy

\[
QQ' = Q'Q = I
\]

so that

\[
Q^{-1} = Q'
\]

Note that

\[
Q'Q = I
\]

implies that for

\[
Q = [q_1 \cdots q_k]
\]

\[
q_i'q_j = \begin{cases} 0 & \text{if } i \ne j \\ 1 & \text{if } i = j \end{cases}
\]

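The columns of $Q$ are mutually orthogonal and have unit length.

Since

\[
QQ' = I
\]

the rows of $Q$ have the same property.

Example: Consider the counterclockwise rotation matrix seen in Chapter 1,

\[
A = \begin{bmatrix} \cos(\theta) & \sin(\theta) \\ -\sin(\theta) & \cos(\theta) \end{bmatrix}
\]

\[
A^{-1} = A' = \begin{bmatrix} \cos(\theta) & -\sin(\theta) \\ \sin(\theta) & \cos(\theta) \end{bmatrix}
\]

This is the clockwise rotation matrix.

\[
AA^{-1} = \begin{bmatrix} \cos(\theta) & \sin(\theta) \\ -\sin(\theta) & \cos(\theta) \end{bmatrix}
\begin{bmatrix} \cos(\theta) & -\sin(\theta) \\ \sin(\theta) & \cos(\theta) \end{bmatrix}
= \begin{bmatrix} \cos^2\theta + \sin^2\theta & 0 \\ 0 & \cos^2\theta + \sin^2\theta \end{bmatrix}
= \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}
= A^{-1}A
\]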

For a square matrix $A$ of dimension $k \times k$ the following are equivalent:

1. $Ax = 0$ implies that $x = 0$ (meaning that $A$ is nonsingular)
2. $|A| \ne 0$
3. There exists a matrix $A^{-1}$ such that $AA^{-1} = A^{-1}A = I$

Let $A$ and $B$ be $k \times k$ matrices and assume that their inverses exist; then

1. $(A^{-1})' = (A')^{-1}$
2. $(AB)^{-1} = B^{-1}A^{-1}$
3. $|A| = |A'|$
4. If each element of a row (column) of $A$ is zero then $|A| = 0$
5. If any two rows (columns) of $A$ are identical then $|A| = 0$
6. If $A$ is nonsingular then $|A| = 1/|A^{-1}|$ so that $|A|\,|A^{-1}| = 1$
7. $|AB| = |A|\,|B|$
8. $|cA| = c^k|A|$ for scalar $c$

Let $A = \{a_{ij}\}$ be a $k \times k$ matrix; then the trace of $A$ is

\[
\operatorname{tr}(A) = \sum_{i=1}^{k} a_{ii}
\]

Let $A$ and $B$ be $k \times k$ matrices and $c$ be a scalar. Then

1. $\operatorname{tr}(cA) = c\operatorname{tr}(A)$
2. $\operatorname{tr}(A \pm B) = \operatorname{tr}(A) \pm \operatorname{tr}(B)$
3. $\operatorname{tr}(AB) = \operatorname{tr}(BA)$
4. $\operatorname{tr}(B^{-1}AB) = \operatorname{tr}(A)$
5. $\operatorname{tr}(AA') = \sum_{i=1}^{k}\sum_{j=1}^{k} a_{ij}^2$

A square matrix $A$ is said to have an eigenvalue $\lambda$, with corresponding eigenvector $x \ne 0$, if

\[
Ax = \lambda x
\]

Usually the eigenvector $x$ is normalized so that $x'x = 1$, in which case we denote $x$ as $e$.

The $k$ eigenvalues of a $k \times k$ matrix $A$ satisfy the polynomial equation

\[
|A - \lambda I| = 0
\]

and as such are sometimes referred to as the characteristic roots of $A$.

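Example:

\[
A = \begin{bmatrix} 3 & -2 \\ 4 & -1 \end{bmatrix}
\]

\[
|A - \lambda I| = \begin{vmatrix} 3 - \lambda & -2 \\ 4 & -1 - \lambda \end{vmatrix} = \lambda^2 - 2\lambda + 5 = 0
\]

has solutions

\[
\lambda = 1 \pm 2i
\]

The associated eigenvectors are

\[
\begin{bmatrix} \tfrac12 \pm \tfrac12 i \\ 1 \end{bmatrix}
\]

Check:

\[
\begin{bmatrix} 3 & -2 \\ 4 & -1 \end{bmatrix}
\begin{bmatrix} \tfrac12 - \tfrac12 i \\ 1 \end{bmatrix}
= \begin{bmatrix} -\tfrac12 - \tfrac32 i \\ 1 - 2i \end{bmatrix}
= (1 - 2i)\begin{bmatrix} \tfrac12 - \tfrac12 i \\ 1 \end{bmatrix}
\]

\[
\begin{bmatrix} 3 & -2 \\ 4 & -1 \end{bmatrix}
\begin{bmatrix} \tfrac12 + \tfrac12 i \\ 1 \end{bmatrix}
= \begin{bmatrix} -\tfrac12 + \tfrac32 i \\ 1 + 2i \end{bmatrix}
= (1 + 2i)\begin{bmatrix} \tfrac12 + \tfrac12 i \\ 1 \end{bmatrix}
\]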

Result: Let $A$ be a $k \times k$ square symmetric matrix. Then $A$ has $k$ pairs of real eigenvalues and eigenvectors

\[
\lambda_1, e_1,\; \lambda_2, e_2,\; \ldots,\; \lambda_k, e_k
\]

where the eigenvectors have unit length, are mutually orthogonal, and are unique unless two or more of the eigenvalues are equal.

Example: Changing the previous matrix to make it symmetric,

\[
A = \begin{bmatrix} 3 & 4 \\ 4 & -1 \end{bmatrix}
\]

The eigenvalues are

\[
\lambda = 1 \pm 2\sqrt{5}
\]

The associated eigenvectors are

\[
\begin{bmatrix} \tfrac12 \pm \tfrac12\sqrt{5} \\ 1 \end{bmatrix}
\]

Note that for non-symmetric matrices the eigenvectors need not be orthogonal, but eigenvectors associated with distinct eigenvalues are always linearly independent.
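Both eigenvalue examples can be checked from the characteristic polynomial of a $2 \times 2$ matrix, $\lambda^2 - \operatorname{tr}(A)\lambda + |A| = 0$. The sketch below is limited to the $2 \times 2$ case and assumes $a_{12} \ne 0$ when forming eigenvectors; `eigenvalues_2x2` and `eigenvector_2x2` are illustrative names.

```python
import cmath

def eigenvalues_2x2(A):
    """Roots of |A - lambda I| = lambda^2 - tr(A) lambda + |A| = 0."""
    (a, b), (c, d) = A
    trace = a + d
    determinant = a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * determinant)
    return (trace + root) / 2, (trace - root) / 2

def eigenvector_2x2(A, lam):
    """A non-normalized solution of (A - lam I) v = 0, assuming a12 != 0."""
    (a, b), _ = A
    return (b, lam - a)

nonsymmetric = [[3, -2], [4, -1]]
symmetric = [[3, 4], [4, -1]]

complex_pair = eigenvalues_2x2(nonsymmetric)   # 1 + 2i and 1 - 2i
real_pair = eigenvalues_2x2(symmetric)         # 1 + 2*sqrt(5) and 1 - 2*sqrt(5)

# for the symmetric matrix the two eigenvectors are orthogonal
v1 = eigenvector_2x2(symmetric, real_pair[0])
v2 = eigenvector_2x2(symmetric, real_pair[1])
dot = (v1[0] * v2[0] + v1[1] * v2[1]).real
print(complex_pair, real_pair, dot)
```

The eigenvectors returned here are scalar multiples of the ones listed in the examples; rescaling so that the second component equals 1 recovers them.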

Singular Value Decomposition (SVD): Let $A$ be an $m \times k$ matrix of real numbers; then there exist an $m \times m$ orthogonal matrix $U$ and a $k \times k$ orthogonal matrix $V$ such that

\[
A = U\Lambda V'
\]

where the $m \times k$ matrix $\Lambda$ has $(i, i)$th entry $\lambda_i \ge 0$ for $i = 1, 2, \ldots, \min(m, k)$ and the other entries are zero. The $\lambda_i$ are called the singular values of $A$.

When $A$ is of rank $r$ (the number of linearly independent columns in $A$ is $r$) then one may write $A$ as

\[
A = \sum_{i=1}^{r} \lambda_iu_iv_i' = U_r\Lambda_rV_r'
\]

where

\[
U_r = [u_1, u_2, \ldots, u_r], \qquad V_r = [v_1, v_2, \ldots, v_r]
\]

both have orthogonal columns and $\Lambda_r$ is the diagonal matrix with diagonal entries $\lambda_i$.

It can be shown that (homework)

\[
AA'u_i = \lambda_i^2u_i, \qquad A'Av_i = \lambda_i^2v_i
\]

where

\[
\lambda_1^2, \lambda_2^2, \ldots, \lambda_r^2 > 0
\]

and

\[
\lambda_{r+1}^2 = \lambda_{r+2}^2 = \cdots = \lambda_m^2 = 0
\]

It also follows that, for $i = 1, \ldots, r$,

\[
v_i = \lambda_i^{-1}A'u_i, \qquad u_i = \lambda_i^{-1}Av_i
\]

Example:

\[
A = \begin{bmatrix} 1 & 1 & 1 & 1 \\ 1 & 1 & 0 & 2 \\ 1 & 0 & 0 & 3 \end{bmatrix}
\]

\[
A = \begin{bmatrix}
0.381 & 0.812 & 0.440 \\
0.570 & 0.167 & -0.803 \\
0.727 & -0.558 & 0.399
\end{bmatrix}
\begin{bmatrix}
4.194 & 0 & 0 & 0 \\
0 & 1.441 & 0 & 0 \\
0 & 0 & 0.573 & 0
\end{bmatrix}
\begin{bmatrix}
0.400 & 0.227 & 9.102 \times 10^{-2} & 0.883 \\
0.292 & 0.680 & 0.563 & -0.365 \\
6.308 \times 10^{-2} & -0.634 & 0.768 & 5.533 \times 10^{-2} \\
0.866 & -0.288 & -0.288 & -0.288
\end{bmatrix}
\]

Let $A$ be an $m \times k$ matrix of real numbers with $m \ge k$ and SVD

\[
A = U\Lambda V'
\]

and let $s < k = \operatorname{rank}(A)$; then

\[
B = \sum_{i=1}^{s} \lambda_iu_iv_i'
\]

is the rank-$s$ least squares approximation in the sense that it minimizes

\[
\operatorname{tr}\left[(A - B)(A - B)'\right] = \sum_{i=1}^{m}\sum_{j=1}^{k} (a_{ij} - b_{ij})^2
\]

over all $m \times k$ matrices $B$ having rank no greater than $s$.

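Example: For

\[
A = \begin{bmatrix} 1 & 1 \\ 1 & 2 \\ 1 & 3 \end{bmatrix}
\]

the SVD is

\[
\begin{bmatrix}
0.32311 & 0.85378 & 0.40825 \\
0.54751 & 0.18322 & -0.81650 \\
0.7719 & -0.48734 & 0.40825
\end{bmatrix}
\begin{bmatrix}
4.0791 & 0 \\
0 & 0.60049 \\
0 & 0
\end{bmatrix}
\begin{bmatrix}
0.40266 & 0.91535 \\
0.91535 & -0.40266
\end{bmatrix}
\]

The rank-2 approximation is

\[
\begin{bmatrix}
0.32311 & 0.85378 \\
0.54751 & 0.18322 \\
0.7719 & -0.48734
\end{bmatrix}
\begin{bmatrix}
4.0791 & 0 \\
0 & 0.60049
\end{bmatrix}
\begin{bmatrix}
0.40266 & 0.91535 \\
0.91535 & -0.40266
\end{bmatrix}
= \begin{bmatrix}
0.99999 & 0.99999 \\
0.99999 & 2.0000 \\
0.99997 & 3.0000
\end{bmatrix}
\simeq \begin{bmatrix} 1 & 1 \\ 1 & 2 \\ 1 & 3 \end{bmatrix}
\]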
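The singular values in this example can be recovered from the relation $A'Av_i = \lambda_i^2v_i$: they are the square roots of the eigenvalues of $A'A$. The sketch below does this for the $3 \times 2$ matrix above using only the $2 \times 2$ characteristic polynomial; it is an illustration of that relation, not a general SVD routine, and the helper names are hypothetical.

```python
import math

def transpose(M):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    """Matrix product AB."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

A = [[1, 1], [1, 2], [1, 3]]
AtA = matmul(transpose(A), A)          # the 2 x 2 symmetric matrix A'A

# eigenvalues of A'A from lambda^2 - tr(A'A) lambda + |A'A| = 0
trace = AtA[0][0] + AtA[1][1]
determinant = AtA[0][0] * AtA[1][1] - AtA[0][1] * AtA[1][0]
root = math.sqrt(trace ** 2 - 4 * determinant)
singular_values = [math.sqrt((trace + root) / 2), math.sqrt((trace - root) / 2)]
print(AtA)
print(singular_values)
```

The two values agree with the diagonal entries 4.0791 and 0.60049 of $\Lambda$ to the precision shown. Because this $A$ has rank 2, the rank-2 reconstruction recovers $A$ up to rounding.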

Homework 2.1

2.3 Positive Definite Matrices

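The spectral decomposition of a $k \times k$ symmetric matrix $A$ is given by

\[
A = \lambda_1e_1e_1' + \lambda_2e_2e_2' + \cdots + \lambda_ke_ke_k'
\]

Example:

\[
A = \begin{bmatrix} \tfrac12 & \tfrac12 \\ \tfrac12 & \tfrac12 \end{bmatrix}
\]

\[
|A - \lambda I| = \begin{vmatrix} \tfrac12 - \lambda & \tfrac12 \\ \tfrac12 & \tfrac12 - \lambda \end{vmatrix} = \lambda^2 - \lambda = 0
\]

The eigenvalues are $\lambda = 0, 1$.

Let’s find the eigenvectors by hand.

First the one associated with $\lambda = 0$:

\[
\begin{bmatrix} \tfrac12 & \tfrac12 \\ \tfrac12 & \tfrac12 \end{bmatrix}
\begin{bmatrix} e_{11} \\ e_{12} \end{bmatrix}
= 0\begin{bmatrix} e_{11} \\ e_{12} \end{bmatrix}
\]

We see that $e_{11} = -e_{12}$ so we have

\[
e_1 = \begin{bmatrix} -\tfrac{1}{\sqrt2} \\ \tfrac{1}{\sqrt2} \end{bmatrix}
\]

Now the eigenvector associated with $\lambda = 1$:

\[
\begin{bmatrix} \tfrac12 & \tfrac12 \\ \tfrac12 & \tfrac12 \end{bmatrix}
\begin{bmatrix} e_{21} \\ e_{22} \end{bmatrix}
= 1\begin{bmatrix} e_{21} \\ e_{22} \end{bmatrix}
\]

\[
\begin{aligned}
e_{21} + e_{22} &= 2e_{21} \\
e_{21} + e_{22} &= 2e_{22} \\
e_{22} &= e_{21}
\end{aligned}
\]

\[
e_2 = \begin{bmatrix} \tfrac{1}{\sqrt2} \\ \tfrac{1}{\sqrt2} \end{bmatrix}
\]

Check:

\[
\lambda_1e_1e_1' + \lambda_2e_2e_2'
= 0\begin{bmatrix} -\tfrac{1}{\sqrt2} \\ \tfrac{1}{\sqrt2} \end{bmatrix}\begin{bmatrix} -\tfrac{1}{\sqrt2} & \tfrac{1}{\sqrt2} \end{bmatrix}
+ 1\begin{bmatrix} \tfrac{1}{\sqrt2} \\ \tfrac{1}{\sqrt2} \end{bmatrix}\begin{bmatrix} \tfrac{1}{\sqrt2} & \tfrac{1}{\sqrt2} \end{bmatrix}
= \begin{bmatrix} \tfrac12 & \tfrac12 \\ \tfrac12 & \tfrac12 \end{bmatrix}
\]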

The spectral decomposition is a very useful tool for studying quadratic forms.

A quadratic form associated with a symmetric matrix $A$ is

\[
x'Ax = \sum_{i=1}^{k}\sum_{j=1}^{k} a_{ij}x_ix_j
\]

When

\[
x'Ax \ge 0
\]

for all $x$ then $A$ is said to be nonnegative definite.

If

\[
x'Ax > 0
\]

for all $x \ne 0$ then $A$ is said to be positive definite.

The spectral decomposition can be used to show that a $k \times k$ symmetric matrix $A$ is positive definite if and only if every eigenvalue of $A$ is positive (homework).

A $k \times k$ symmetric matrix $A$ is nonnegative definite if and only if every eigenvalue of $A$ is greater than or equal to zero.

Note that

\[
\begin{aligned}
x'Ax &= x'\left(\lambda_1e_1e_1' + \lambda_2e_2e_2' + \cdots + \lambda_ke_ke_k'\right)x \\
&= \lambda_1x'e_1e_1'x + \lambda_2x'e_2e_2'x + \cdots + \lambda_kx'e_ke_k'x \\
&= \lambda_1y_1^2 + \lambda_2y_2^2 + \cdots + \lambda_ky_k^2
\end{aligned}
\]

where the scalar $y_i$ is given as

\[
y_i = e_i'x
\]

for $i = 1, 2, \ldots, k$.

Note that

\[
y = \begin{bmatrix} y_1 \\ \vdots \\ y_k \end{bmatrix} = Ex = \begin{bmatrix} e_1' \\ \vdots \\ e_k' \end{bmatrix}x
\]

so that if

\[
x = E'y \ne 0
\]

then

\[
y = Ex \ne 0
\]

Recall from Chapter 1 that the formula for the statistical distance from $P = (x_1, x_2, \ldots, x_p)$ to $O = (0, 0, \ldots, 0)$ is

\[
d(O, P) = \sqrt{a_{11}x_1^2 + a_{22}x_2^2 + \cdots + a_{pp}x_p^2 + 2a_{12}x_1x_2 + 2a_{13}x_1x_3 + \cdots + 2a_{p-1,p}x_{p-1}x_p}
\]

Now

\[
d^2(O, P) = a_{11}x_1^2 + a_{22}x_2^2 + \cdots + a_{pp}x_p^2 + 2a_{12}x_1x_2 + 2a_{13}x_1x_3 + \cdots + 2a_{p-1,p}x_{p-1}x_p
\]

It turns out that

\[
a_{ij} = a_{ji}
\]

for all $i$ and $j$ so that

\[
d^2(O, P) = [x_1, x_2, \ldots, x_p]
\begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1p} \\
a_{21} & a_{22} & \cdots & a_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
a_{p1} & a_{p2} & \cdots & a_{pp}
\end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_p \end{bmatrix}
\]

We know that, since $d^2(O, P)$ is a squared distance function, for $x \ne 0$

\[
d^2(O, P) = x'Ax > 0
\]

Note that a positive definite quadratic form can be interpreted as a squared distance.

Note that for $p = 2$ the points of constant distance $c$ from the origin satisfy

\[
x'Ax = a_{11}x_1^2 + 2a_{12}x_1x_2 + a_{22}x_2^2 = c^2
\]

and by the spectral decomposition

\[
x'Ax = \lambda_1y_1^2 + \lambda_2y_2^2
\]

so that

\[
\lambda_1y_1^2 + \lambda_2y_2^2 = \lambda_1(x'e_1)^2 + \lambda_2(x'e_2)^2 = c^2
\]

Since $\lambda_1, \lambda_2 > 0$,

\[
\lambda_1y_1^2 + \lambda_2y_2^2 = c^2
\]

is an ellipse in

\[
y_1 = x'e_1, \qquad y_2 = x'e_2
\]

Note that at $x = c\lambda_1^{-1/2}e_1$

\[
x'Ax = \lambda_1\left(c\lambda_1^{-1/2}e_1'e_1\right)^2 = c^2
\]

and at $x = c\lambda_2^{-1/2}e_2$

\[
x'Ax = \lambda_2\left(c\lambda_2^{-1/2}e_2'e_2\right)^2 = c^2
\]

2.4 A Square-Root Matrix

We know that the spectral decomposition of a $k \times k$ symmetric positive definite matrix $A$ is given by

\[
A = \lambda_1e_1e_1' + \lambda_2e_2e_2' + \cdots + \lambda_ke_ke_k'
\]

which may be rewritten as

\[
\underset{(k\times k)}{A} = \underset{(k\times k)}{P}\;\underset{(k\times k)}{\Lambda}\;\underset{(k\times k)}{P'}
\]

where

\[
P = [e_1, e_2, \ldots, e_k], \qquad PP' = P'P = I, \qquad
\underset{(k\times k)}{\Lambda} = \begin{bmatrix}
\lambda_1 & 0 & \cdots & 0 \\
0 & \lambda_2 & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & \lambda_k
\end{bmatrix}
\]

and $\lambda_i > 0$ for all $i$.

Therefore

\[
A^{-1} = P\Lambda^{-1}P' = \frac{1}{\lambda_1}e_1e_1' + \frac{1}{\lambda_2}e_2e_2' + \cdots + \frac{1}{\lambda_k}e_ke_k'
\]

since

\[
\left(P\Lambda^{-1}P'\right)P\Lambda P' = \left(P\Lambda P'\right)P\Lambda^{-1}P' = PP' = I
\]

The square root of a positive definite matrix $A$ is given as

\[
A^{1/2} = \sqrt{\lambda_1}\,e_1e_1' + \sqrt{\lambda_2}\,e_2e_2' + \cdots + \sqrt{\lambda_k}\,e_ke_k' = P\Lambda^{1/2}P'
\]

Note that $A^{1/2}$ can similarly be defined for nonnegative definite matrices.

This matrix has the following properties:

1. $\left(A^{1/2}\right)' = A^{1/2}$
2. $A^{1/2}A^{1/2} = A$
3. $\left(A^{1/2}\right)^{-1} = \dfrac{1}{\sqrt{\lambda_1}}e_1e_1' + \dfrac{1}{\sqrt{\lambda_2}}e_2e_2' + \cdots + \dfrac{1}{\sqrt{\lambda_k}}e_ke_k' = P\Lambda^{-1/2}P'$
4. $A^{1/2}A^{-1/2} = A^{-1/2}A^{1/2} = I$ and $A^{-1/2}A^{-1/2} = A^{-1}$, where $A^{-1/2} = \left(A^{1/2}\right)^{-1}$

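Example: For

\[
A = \begin{bmatrix} \tfrac12 & \tfrac12 \\ \tfrac12 & \tfrac12 \end{bmatrix}
\]

we have $\lambda = 0, 1$ and

\[
e_1 = \begin{bmatrix} -\tfrac{1}{\sqrt2} \\ \tfrac{1}{\sqrt2} \end{bmatrix}, \qquad
e_2 = \begin{bmatrix} \tfrac{1}{\sqrt2} \\ \tfrac{1}{\sqrt2} \end{bmatrix}
\]

Now

\[
A^{1/2} = \sqrt{\lambda_1}\,e_1e_1' + \sqrt{\lambda_2}\,e_2e_2'
= \sqrt{0}\begin{bmatrix} -\tfrac{1}{\sqrt2} \\ \tfrac{1}{\sqrt2} \end{bmatrix}\begin{bmatrix} -\tfrac{1}{\sqrt2} & \tfrac{1}{\sqrt2} \end{bmatrix}
+ \sqrt{1}\begin{bmatrix} \tfrac{1}{\sqrt2} \\ \tfrac{1}{\sqrt2} \end{bmatrix}\begin{bmatrix} \tfrac{1}{\sqrt2} & \tfrac{1}{\sqrt2} \end{bmatrix}
= \begin{bmatrix} \tfrac12 & \tfrac12 \\ \tfrac12 & \tfrac12 \end{bmatrix}
= A
\]

Why?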
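The construction $A^{1/2} = \sum_i \sqrt{\lambda_i}\,e_ie_i'$ can be sketched for symmetric $2 \times 2$ matrices as follows. The code assumes a nonzero off-diagonal entry, and the positive definite matrix `B` is a hypothetical example that does not appear in the notes. For the matrix $A$ of the example, the eigenvalues 0 and 1 are equal to their own square roots, which is one way to see why $A^{1/2} = A$ there.

```python
import math

def spectral_2x2(A):
    """Eigenvalues and unit eigenvectors of a symmetric 2 x 2 matrix
    whose off-diagonal entry is nonzero."""
    (a, b), (_, d) = A
    mean, half_gap = (a + d) / 2, math.hypot((a - d) / 2, b)
    pairs = []
    for lam in (mean + half_gap, mean - half_gap):
        v = (b, lam - a)                      # solves (A - lam I) v = 0
        norm = math.hypot(*v)
        pairs.append((lam, (v[0] / norm, v[1] / norm)))
    return pairs

def matrix_power(A, power):
    """Sum of lam**power * e e' over the spectral decomposition of A."""
    out = [[0.0, 0.0], [0.0, 0.0]]
    for lam, e in spectral_2x2(A):
        for i in range(2):
            for j in range(2):
                out[i][j] += lam ** power * e[i] * e[j]
    return out

def matmul(A, B):
    """Product of two 2 x 2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

A = [[0.5, 0.5], [0.5, 0.5]]          # the example above, eigenvalues 1 and 0
root_A = matrix_power(A, 0.5)

B = [[2.0, 1.0], [1.0, 2.0]]          # hypothetical positive definite matrix
root_B = matrix_power(B, 0.5)
inv_root_B = matrix_power(B, -0.5)
print(root_A)
print(matmul(root_B, root_B))         # approximately B
print(matmul(root_B, inv_root_B))     # approximately the identity
```

The negative power is only taken for `B`, since $A$ has a zero eigenvalue and therefore no inverse square root.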

2.5 Random Vectors and Matrices

A random matrix (vector) is a matrix (vector) whose elements consist of random variables.

The expectation of a random matrix (vector) is performed in a componentwise fashion, i.e. if

\[
X = \begin{bmatrix}
X_{11} & X_{12} & \cdots & X_{1p} \\
X_{21} & X_{22} & \cdots & X_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
X_{n1} & X_{n2} & \cdots & X_{np}
\end{bmatrix}
\]

then

\[
E[X] = \begin{bmatrix}
E[X_{11}] & E[X_{12}] & \cdots & E[X_{1p}] \\
E[X_{21}] & E[X_{22}] & \cdots & E[X_{2p}] \\
\vdots & \vdots & \ddots & \vdots \\
E[X_{n1}] & E[X_{n2}] & \cdots & E[X_{np}]
\end{bmatrix}
\]

where

\[
E[X_{ij}] = \begin{cases}
\displaystyle\int_{-\infty}^{\infty} x_{ij}f(x_{ij})\,dx_{ij} & \text{if } X_{ij} \text{ is continuous} \\[2ex]
\displaystyle\sum_{x_{ij}} x_{ij}f(x_{ij}) & \text{if } X_{ij} \text{ is discrete}
\end{cases}
\]

Expectation is linear:

\[
E[X_{ij} + Y_{ij}] = E[X_{ij}] + E[Y_{ij}], \qquad E[cX_{ij}] = cE[X_{ij}]
\]

Then it can be shown for random matrices $X$ and $Y$ and constant matrices $A$ and $B$ that (homework)

\[
E[X + Y] = E[X] + E[Y], \qquad E[AXB] = AE[X]B
\]

Example: The joint and marginal distributions of $X_1$ and $X_2$ are given below

x1\x2      0     1    p1(x1)
0         .2    .4     .6
1         .1    .3     .4
p2(x2)    .3    .7      1

\[
E(X_1) = \sum_{x_1} x_1p_1(x_1) = (0)(.6) + 1(.4) = .4
\]

\[
E(X_2) = \sum_{x_2} x_2p_2(x_2) = (0)(.3) + 1(.7) = .7
\]

\[
E(X) = E\begin{bmatrix} X_1 \\ X_2 \end{bmatrix} = \begin{bmatrix} E(X_1) \\ E(X_2) \end{bmatrix} = \begin{bmatrix} .4 \\ .7 \end{bmatrix}
\]

Homework 2.2

2.6 Mean Vectors and Covariance Matrices

Suppose that $X = [X_1, \ldots, X_p]'$ is a random vector.

The marginal means $\mu_i$ and variances $\sigma_i^2$ are defined as

\[
\mu_i = E[X_i], \qquad \sigma_i^2 = E\left[(X_i - \mu_i)^2\right]
\]

The behavior of $(X_i, X_j)$ is described by their joint probability distribution.

A measure of linear association between them is their covariance

\[
\sigma_{ij} = E\left[(X_i - \mu_i)(X_j - \mu_j)\right]
\]

where

\[
\sigma_{ij} = \begin{cases}
\displaystyle\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} (x_i - \mu_i)(x_j - \mu_j)f_{ij}(x_i, x_j)\,dx_i\,dx_j & \text{if } (X_i, X_j) \text{ are jointly continuous} \\[2ex]
\displaystyle\sum_{x_i}\sum_{x_j} (x_i - \mu_i)(x_j - \mu_j)f_{ij}(x_i, x_j) & \text{if } (X_i, X_j) \text{ are jointly discrete}
\end{cases}
\]

Note that

\[
\sigma_{ii} = \sigma_i^2
\]

$\sigma_{ij}$ is also denoted as $\operatorname{Cov}(X_i, X_j)$.

Continuous random variables $X_i$ and $X_j$ are (statistically) independent if

\[
f_{ij}(x_i, x_j) = f_i(x_i)f_j(x_j)
\]

Continuous random variables $X_1, \ldots, X_p$ are mutually (statistically) independent if

\[
f_{12\cdots p}(x_1, x_2, \ldots, x_p) = f_1(x_1)f_2(x_2)\cdots f_p(x_p)
\]

Note that

\[
\operatorname{Cov}(X_i, X_j) = 0
\]

if $X_i$ and $X_j$ are independent.

The means and covariances of $X = [X_1, X_2, \ldots, X_p]'$ can be represented in matrix-vector form:

\[
E(X) = \begin{bmatrix} E(X_1) \\ E(X_2) \\ \vdots \\ E(X_p) \end{bmatrix} = \mu
\]

\[
\operatorname{Cov}(X) = \begin{bmatrix}
\sigma_{11} & \sigma_{12} & \cdots & \sigma_{1p} \\
\sigma_{21} & \sigma_{22} & \cdots & \sigma_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
\sigma_{p1} & \sigma_{p2} & \cdots & \sigma_{pp}
\end{bmatrix} = \Sigma
\]

Example: The joint and marginal distributions of $X_1$ and $X_2$ are

x1\x2      0     1    p1(x1)
0         .2    .4     .6
1         .1    .3     .4
p2(x2)    .3    .7      1

Let’s find $\Sigma$:

\[
\sigma_{11} = E(X_1 - \mu_1)^2 = \sum_{x_1} (x_1 - \mu_1)^2p_1(x_1)
= (0 - .4)^2(.6) + (1 - .4)^2(.4) = 0.24
\]

\[
\sigma_{22} = E(X_2 - \mu_2)^2 = \sum_{x_2} (x_2 - \mu_2)^2p_2(x_2)
= (0 - .7)^2(.3) + (1 - .7)^2(.7) = 0.21
\]

\[
\begin{aligned}
\sigma_{12} &= E(X_1 - \mu_1)(X_2 - \mu_2) = \sum_{x_1, x_2} (x_1 - \mu_1)(x_2 - \mu_2)p(x_1, x_2) \\
&= (0 - .4)(0 - .7)(.2) + (0 - .4)(1 - .7)(.4) + (1 - .4)(0 - .7)(.1) + (1 - .4)(1 - .7)(.3) \\
&= 0.02
\end{aligned}
\]

\[
\Sigma = \begin{bmatrix} 0.24 & 0.02 \\ 0.02 & 0.21 \end{bmatrix}
\]
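The mean vector and covariance matrix of this example can be computed directly from the joint probability table. The following is a minimal sketch; the dictionary `joint` simply encodes the four cell probabilities of the table, and `expectation` is an illustrative helper name.

```python
# joint probabilities p(x1, x2) from the table in the example
joint = {(0, 0): 0.2, (0, 1): 0.4, (1, 0): 0.1, (1, 1): 0.3}

def expectation(g):
    """E[g(X1, X2)] under the joint probability table."""
    return sum(g(x1, x2) * p for (x1, x2), p in joint.items())

mu1 = expectation(lambda x1, x2: x1)
mu2 = expectation(lambda x1, x2: x2)
sigma11 = expectation(lambda x1, x2: (x1 - mu1) ** 2)
sigma22 = expectation(lambda x1, x2: (x2 - mu2) ** 2)
sigma12 = expectation(lambda x1, x2: (x1 - mu1) * (x2 - mu2))
Sigma = [[sigma11, sigma12], [sigma12, sigma22]]
print(mu1, mu2)
print(Sigma)
```

Up to floating-point rounding this gives the means .4 and .7 and the entries 0.24, 0.21, and 0.02 found by hand.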

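Note that

\[
\Sigma = E\left[(X - \mu)(X - \mu)'\right]
= E\left(\begin{bmatrix} X_1 - \mu_1 \\ \vdots \\ X_p - \mu_p \end{bmatrix}[X_1 - \mu_1, \ldots, X_p - \mu_p]\right)
\]

This simplifies to

\[
E\begin{bmatrix}
(X_1 - \mu_1)^2 & \cdots & (X_1 - \mu_1)(X_p - \mu_p) \\
\vdots & \ddots & \vdots \\
(X_p - \mu_p)(X_1 - \mu_1) & \cdots & (X_p - \mu_p)^2
\end{bmatrix}
\]

and

\[
\begin{bmatrix}
E(X_1 - \mu_1)^2 & \cdots & E(X_1 - \mu_1)(X_p - \mu_p) \\
\vdots & \ddots & \vdots \\
E(X_p - \mu_p)(X_1 - \mu_1) & \cdots & E(X_p - \mu_p)^2
\end{bmatrix}
\]

and then

\[
\begin{bmatrix}
\sigma_{11} & \sigma_{12} & \cdots & \sigma_{1p} \\
\sigma_{21} & \sigma_{22} & \cdots & \sigma_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
\sigma_{p1} & \sigma_{p2} & \cdots & \sigma_{pp}
\end{bmatrix}
\]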

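The population correlation coefficient $\rho_{ij}$ is

\[
\rho_{ij} = \frac{\sigma_{ij}}{\sqrt{\sigma_{ii}}\,\sqrt{\sigma_{jj}}}
\]

It is a measure of linear association between $X_i$ and $X_j$.

Note that

\[
\rho_{ii} = \frac{\sigma_{ii}}{\sqrt{\sigma_{ii}}\,\sqrt{\sigma_{ii}}} = 1
\]

The population correlation matrix is given as

\[
\rho = \begin{bmatrix}
1 & \rho_{12} & \cdots & \rho_{1p} \\
\rho_{21} & 1 & \cdots & \rho_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
\rho_{p1} & \rho_{p2} & \cdots & 1
\end{bmatrix}
\]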

Example: The joint and marginal distributions of $X_1$ and $X_2$ are

x1\x2      0     1    p1(x1)
0         .2    .4     .6
1         .1    .3     .4
p2(x2)    .3    .7      1

with

\[
\Sigma = \begin{bmatrix} 0.24 & 0.02 \\ 0.02 & 0.21 \end{bmatrix}
\]

Let’s find $\rho$:

\[
\rho_{12} = \frac{\sigma_{12}}{\sqrt{\sigma_{11}}\,\sqrt{\sigma_{22}}} = \frac{0.02}{\sqrt{0.24}\,\sqrt{0.21}} = 0.089
\]

\[
\rho = \begin{bmatrix} 1 & 0.089 \\ 0.089 & 1 \end{bmatrix}
\]

The standard deviation matrix is given as

\[
V^{1/2} = \begin{bmatrix}
\sqrt{\sigma_{11}} & 0 & \cdots & 0 \\
0 & \sqrt{\sigma_{22}} & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & \sqrt{\sigma_{pp}}
\end{bmatrix}
\]

It turns out that

\[
V^{1/2}\rho V^{1/2} = \Sigma
\]

and

\[
\rho = \left(V^{1/2}\right)^{-1}\Sigma\left(V^{1/2}\right)^{-1}
\]
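Because $V^{1/2}$ is diagonal, the relation $\rho = (V^{1/2})^{-1}\Sigma(V^{1/2})^{-1}$ reduces to dividing each $\sigma_{ij}$ by $\sqrt{\sigma_{ii}}\sqrt{\sigma_{jj}}$. A minimal sketch, using the covariance matrix of the running example as input (the function names are illustrative):

```python
import math

def correlation_from_covariance(Sigma):
    """rho = (V^{1/2})^{-1} Sigma (V^{1/2})^{-1}, computed entrywise as
    rho_ij = sigma_ij / (sqrt(sigma_ii) sqrt(sigma_jj))."""
    p = len(Sigma)
    sd = [math.sqrt(Sigma[i][i]) for i in range(p)]
    return [[Sigma[i][j] / (sd[i] * sd[j]) for j in range(p)] for i in range(p)]

def covariance_from_correlation(rho, sd):
    """Sigma = V^{1/2} rho V^{1/2} for standard deviations sd."""
    p = len(rho)
    return [[sd[i] * rho[i][j] * sd[j] for j in range(p)] for i in range(p)]

Sigma = [[0.24, 0.02], [0.02, 0.21]]
rho = correlation_from_covariance(Sigma)
sd = [math.sqrt(Sigma[i][i]) for i in range(2)]
Sigma_back = covariance_from_correlation(rho, sd)
print(rho)
print(Sigma_back)
```

The off-diagonal entry of `rho` is about 0.089, and converting back with the standard deviations recovers $\Sigma$ up to rounding.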

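Example: Consider covariance and correlation matrices

\[
\Sigma = \begin{bmatrix} 0.24 & 0.02 \\ 0.02 & 0.21 \end{bmatrix}, \qquad
\rho = \begin{bmatrix} 1 & 0.089 \\ 0.089 & 1 \end{bmatrix}
\]

Now

\[
V^{1/2} = \begin{bmatrix} \sqrt{\sigma_{11}} & 0 \\ 0 & \sqrt{\sigma_{22}} \end{bmatrix}
= \begin{bmatrix} \sqrt{0.24} & 0 \\ 0 & \sqrt{0.21} \end{bmatrix}
\]

and

\[
\left(V^{1/2}\right)^{-1}\Sigma\left(V^{1/2}\right)^{-1}
= \begin{bmatrix} \sqrt{0.24} & 0 \\ 0 & \sqrt{0.21} \end{bmatrix}^{-1}
\begin{bmatrix} 0.24 & 0.02 \\ 0.02 & 0.21 \end{bmatrix}
\begin{bmatrix} \sqrt{0.24} & 0 \\ 0 & \sqrt{0.21} \end{bmatrix}^{-1}
= \begin{bmatrix} 1 & 0.089 \\ 0.089 & 1 \end{bmatrix} = \rho
\]

2.6.1 Partitioning the Covariance Matrix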

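We can partition the $p$ characteristics in $X$ into two groups:

\[
X = \begin{bmatrix} X_1 \\ \vdots \\ X_q \\ X_{q+1} \\ \vdots \\ X_p \end{bmatrix}
= \begin{bmatrix} X^{(1)} \\ X^{(2)} \end{bmatrix}, \qquad
\mu = E(X) = \begin{bmatrix} \mu_1 \\ \vdots \\ \mu_q \\ \mu_{q+1} \\ \vdots \\ \mu_p \end{bmatrix}
= \begin{bmatrix} \mu^{(1)} \\ \mu^{(2)} \end{bmatrix}
\]

We determine the matrix of covariances between $X^{(1)}$ and $X^{(2)}$ as

\[
\Sigma_{12} = E\left[\left(X^{(1)} - \mu^{(1)}\right)\left(X^{(2)} - \mu^{(2)}\right)'\right]
= E\left(\begin{bmatrix} X_1 - \mu_1 \\ \vdots \\ X_q - \mu_q \end{bmatrix}[X_{q+1} - \mu_{q+1}, \ldots, X_p - \mu_p]\right)
\]

This simplifies to

\[
\begin{bmatrix}
E(X_1 - \mu_1)(X_{q+1} - \mu_{q+1}) & \cdots & E(X_1 - \mu_1)(X_p - \mu_p) \\
\vdots & \ddots & \vdots \\
E(X_q - \mu_q)(X_{q+1} - \mu_{q+1}) & \cdots & E(X_q - \mu_q)(X_p - \mu_p)
\end{bmatrix}
\]

and

\[
\begin{bmatrix}
\sigma_{1,q+1} & \sigma_{1,q+2} & \cdots & \sigma_{1p} \\
\sigma_{2,q+1} & \sigma_{2,q+2} & \cdots & \sigma_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
\sigma_{q,q+1} & \sigma_{q,q+2} & \cdots & \sigma_{qp}
\end{bmatrix} = \Sigma_{12}
\]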

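Note that

\[
(X - \mu)(X - \mu)' = \begin{bmatrix}
\left(X^{(1)} - \mu^{(1)}\right)\left(X^{(1)} - \mu^{(1)}\right)' & \left(X^{(1)} - \mu^{(1)}\right)\left(X^{(2)} - \mu^{(2)}\right)' \\
\left(X^{(2)} - \mu^{(2)}\right)\left(X^{(1)} - \mu^{(1)}\right)' & \left(X^{(2)} - \mu^{(2)}\right)\left(X^{(2)} - \mu^{(2)}\right)'
\end{bmatrix}
\]

Therefore

\[
\Sigma = E\left[(X - \mu)(X - \mu)'\right] = \begin{bmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{bmatrix}
\]

where $\Sigma_{11}$ is $q \times q$, $\Sigma_{12}$ is $q \times (p - q)$, $\Sigma_{21}$ is $(p - q) \times q$, and $\Sigma_{22}$ is $(p - q) \times (p - q)$.

Note that

\[
\Sigma_{11} = \begin{bmatrix}
\sigma_{11} & \sigma_{12} & \cdots & \sigma_{1q} \\
\sigma_{21} & \sigma_{22} & \cdots & \sigma_{2q} \\
\vdots & \vdots & \ddots & \vdots \\
\sigma_{q1} & \sigma_{q2} & \cdots & \sigma_{qq}
\end{bmatrix}, \qquad
\Sigma_{22} = \begin{bmatrix}
\sigma_{q+1,q+1} & \sigma_{q+1,q+2} & \cdots & \sigma_{q+1,p} \\
\sigma_{q+2,q+1} & \sigma_{q+2,q+2} & \cdots & \sigma_{q+2,p} \\
\vdots & \vdots & \ddots & \vdots \\
\sigma_{p,q+1} & \sigma_{p,q+2} & \cdots & \sigma_{pp}
\end{bmatrix}
\]

\[
\Sigma_{12} = \begin{bmatrix}
\sigma_{1,q+1} & \sigma_{1,q+2} & \cdots & \sigma_{1p} \\
\sigma_{2,q+1} & \sigma_{2,q+2} & \cdots & \sigma_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
\sigma_{q,q+1} & \sigma_{q,q+2} & \cdots & \sigma_{qp}
\end{bmatrix}, \qquad
\Sigma_{21} = \begin{bmatrix}
\sigma_{q+1,1} & \sigma_{q+1,2} & \cdots & \sigma_{q+1,q} \\
\sigma_{q+2,1} & \sigma_{q+2,2} & \cdots & \sigma_{q+2,q} \\
\vdots & \vdots & \ddots & \vdots \\
\sigma_{p1} & \sigma_{p2} & \cdots & \sigma_{pq}
\end{bmatrix}
\]

\[
\Sigma_{12}' = \Sigma_{21}
\]

Note that we sometimes use the notation

\[
\operatorname{Cov}\left(X^{(1)}, X^{(2)}\right) = \Sigma_{12}
\]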

2.6.2 The Mean Vector and Covariance Matrix for Linear Combinations of Random Variables

Note that for scalar random variables $X_1$ and $X_2$, and constants $a$, $b$, and $c$, we have that

\[
E(cX_1) = cE(X_1)
\]

\[
\operatorname{Var}(cX_1) = E\left[(cX_1 - c\mu_1)^2\right] = c^2E\left[(X_1 - \mu_1)^2\right] = c^2\operatorname{Var}(X_1) = c^2\sigma_{11}
\]

\[
\operatorname{Cov}(aX_1, bX_2) = E[(aX_1 - a\mu_1)(bX_2 - b\mu_2)] = abE[(X_1 - \mu_1)(X_2 - \mu_2)] = ab\operatorname{Cov}(X_1, X_2) = ab\sigma_{12}
\]

\[
E[aX_1 + bX_2] = aE[X_1] + bE[X_2] = a\mu_1 + b\mu_2
\]

\[
\begin{aligned}
\operatorname{Var}[aX_1 + bX_2] &= E[(aX_1 + bX_2) - (a\mu_1 + b\mu_2)]^2 \\
&= E[(aX_1 - a\mu_1) + (bX_2 - b\mu_2)]^2 \\
&= E\left[a^2(X_1 - \mu_1)^2 + b^2(X_2 - \mu_2)^2 + 2ab(X_1 - \mu_1)(X_2 - \mu_2)\right] \\
&= a^2\operatorname{Var}(X_1) + b^2\operatorname{Var}(X_2) + 2ab\operatorname{Cov}(X_1, X_2) \\
&= a^2\sigma_{11} + b^2\sigma_{22} + 2ab\sigma_{12}
\end{aligned}
\]

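Note that, with $c = [a, b]'$,

\[
aX_1 + bX_2 = \begin{bmatrix} a & b \end{bmatrix}\begin{bmatrix} X_1 \\ X_2 \end{bmatrix} = c'X
\]

and

\[
E[aX_1 + bX_2] = \begin{bmatrix} a & b \end{bmatrix}\begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix} = c'\mu
\]

\[
\operatorname{Var}[aX_1 + bX_2] = \begin{bmatrix} a & b \end{bmatrix}\begin{bmatrix} \sigma_{11} & \sigma_{12} \\ \sigma_{21} & \sigma_{22} \end{bmatrix}\begin{bmatrix} a \\ b \end{bmatrix} = c'\Sigma c
\]

In general, for the linear combination

\[
c'X = c_1X_1 + \cdots + c_pX_p
\]

we have

\[
E[c'X] = c'\mu, \qquad \operatorname{Var}[c'X] = c'\Sigma c
\]

where

\[
E[X] = \mu, \qquad \operatorname{Cov}[X] = \Sigma
\]

More generally, for a constant matrix $C$,

\[
E[CX] = C\mu, \qquad \operatorname{Cov}[CX] = C\Sigma C'
\]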

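Example: Let

\[
X = \begin{bmatrix} X_1 \\ X_2 \end{bmatrix}
\]

be a random vector with mean vector

\[
\mu_X = \begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix}
\]

and covariance matrix

\[
\Sigma_X = \begin{bmatrix} \sigma_{11} & \sigma_{12} \\ \sigma_{21} & \sigma_{22} \end{bmatrix}
\]

Let’s find the mean vector and covariance matrix of

\[
Z_1 = X_1 - X_2, \qquad Z_2 = X_1 + X_2
\]

\[
Z = \begin{bmatrix} Z_1 \\ Z_2 \end{bmatrix} = \begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}\begin{bmatrix} X_1 \\ X_2 \end{bmatrix} = CX
\]

\[
\mu_Z = C\mu_X = \begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}\begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix} = \begin{bmatrix} \mu_1 - \mu_2 \\ \mu_1 + \mu_2 \end{bmatrix}
\]

\[
\Sigma_Z = C\Sigma_XC' = \begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}
\begin{bmatrix} \sigma_{11} & \sigma_{12} \\ \sigma_{21} & \sigma_{22} \end{bmatrix}
\begin{bmatrix} 1 & 1 \\ -1 & 1 \end{bmatrix}
= \begin{bmatrix} \sigma_{11} - 2\sigma_{12} + \sigma_{22} & \sigma_{11} - \sigma_{22} \\ \sigma_{11} - \sigma_{22} & \sigma_{11} + 2\sigma_{12} + \sigma_{22} \end{bmatrix}
\]

If $\sigma_{11} = \sigma_{22}$ then $X_1 - X_2$ and $X_1 + X_2$ are uncorrelated.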
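The rules $E[CX] = C\mu$ and $\operatorname{Cov}[CX] = C\Sigma C'$ can be tried with numbers. The sketch below plugs in, purely as an assumption for illustration, the mean vector and covariance matrix from the earlier discrete example ($\mu = [.4, .7]'$, $\sigma_{11} = 0.24$, $\sigma_{22} = 0.21$, $\sigma_{12} = 0.02$); the slides themselves keep this example symbolic.

```python
def transpose(M):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    """Matrix product AB."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

C = [[1, -1], [1, 1]]                       # Z1 = X1 - X2, Z2 = X1 + X2
mu_X = [[0.4], [0.7]]                       # assumed numeric mean vector
Sigma_X = [[0.24, 0.02], [0.02, 0.21]]      # assumed numeric covariance matrix

mu_Z = matmul(C, mu_X)
Sigma_Z = matmul(matmul(C, Sigma_X), transpose(C))
print(mu_Z)
print(Sigma_Z)
```

With these inputs the off-diagonal entry of `Sigma_Z` equals $\sigma_{11} - \sigma_{22} = 0.03$, matching the symbolic result.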

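2.6.3 Partitioning the Sample Mean Vector and Covariance Matrix

Our data in matrix form is

\[
X = \begin{bmatrix}
x_{11} & x_{12} & \cdots & x_{1k} & \cdots & x_{1p} \\
x_{21} & x_{22} & \cdots & x_{2k} & \cdots & x_{2p} \\
\vdots & \vdots & & \vdots & & \vdots \\
x_{j1} & x_{j2} & \cdots & x_{jk} & \cdots & x_{jp} \\
\vdots & \vdots & & \vdots & & \vdots \\
x_{n1} & x_{n2} & \cdots & x_{nk} & \cdots & x_{np}
\end{bmatrix}
\]

The sample mean vector is

\[
\bar{x} = \begin{bmatrix} \bar{x}_1 \\ \bar{x}_2 \\ \vdots \\ \bar{x}_p \end{bmatrix}
\quad\text{where}\quad
\bar{x}_k = \frac{1}{n}\sum_{j=1}^{n} x_{jk}
\]

and the sample covariance matrix is

\[
S_n = \begin{bmatrix}
s_{11} & s_{12} & \cdots & s_{1p} \\
s_{21} & s_{22} & \cdots & s_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
s_{p1} & s_{p2} & \cdots & s_{pp}
\end{bmatrix}
\quad\text{where}\quad
s_{ik} = \frac{1}{n}\sum_{j=1}^{n} (x_{ji} - \bar{x}_i)(x_{jk} - \bar{x}_k)
\]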
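The definitions of $\bar{x}$ and $S_n$ can be sketched as follows. Note the divisor $n$, as in the formula above, rather than $n - 1$. The data matrix `X` is a made-up illustration with $n = 3$ observations on $p = 2$ variables and does not come from the notes.

```python
from fractions import Fraction

def sample_mean(X):
    """Vector of column means: xbar_k = (1/n) sum_j x_jk."""
    n = len(X)
    return [Fraction(sum(col), n) for col in zip(*X)]

def sample_covariance(X):
    """S_n with divisor n: s_ik = (1/n) sum_j (x_ji - xbar_i)(x_jk - xbar_k)."""
    n, xbar = len(X), sample_mean(X)
    p = len(xbar)
    return [[sum((row[i] - xbar[i]) * (row[k] - xbar[k]) for row in X) / n
             for k in range(p)] for i in range(p)]

# hypothetical data: rows are observations, columns are variables
X = [[1, 2], [3, 4], [5, 9]]
xbar = sample_mean(X)
S_n = sample_covariance(X)
print(xbar)
print(S_n)
```

Partitioning then amounts to slicing: with $q = 1$, for instance, $S_{11}$ is the single entry `S_n[0][0]` and $S_{12}$ is the single entry `S_n[0][1]`.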

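We can of course also partition the sample mean vector

\[
\bar{x} = \begin{bmatrix} \bar{x}_1 \\ \vdots \\ \bar{x}_q \\ \bar{x}_{q+1} \\ \vdots \\ \bar{x}_p \end{bmatrix}
= \begin{bmatrix} \bar{x}^{(1)} \\ \bar{x}^{(2)} \end{bmatrix}
\]

and the sample covariance matrix

\[
S_n = \begin{bmatrix} S_{11} & S_{12} \\ S_{21} & S_{22} \end{bmatrix}
\]

where $S_{11}$ is $q \times q$, $S_{12}$ is $q \times (p - q)$, $S_{21}$ is $(p - q) \times q$, $S_{22}$ is $(p - q) \times (p - q)$, and

\[
S_{11} = \begin{bmatrix}
s_{11} & s_{12} & \cdots & s_{1q} \\
s_{21} & s_{22} & \cdots & s_{2q} \\
\vdots & \vdots & \ddots & \vdots \\
s_{q1} & s_{q2} & \cdots & s_{qq}
\end{bmatrix}, \qquad
S_{22} = \begin{bmatrix}
s_{q+1,q+1} & s_{q+1,q+2} & \cdots & s_{q+1,p} \\
s_{q+2,q+1} & s_{q+2,q+2} & \cdots & s_{q+2,p} \\
\vdots & \vdots & \ddots & \vdots \\
s_{p,q+1} & s_{p,q+2} & \cdots & s_{pp}
\end{bmatrix}
\]

\[
S_{12} = \begin{bmatrix}
s_{1,q+1} & s_{1,q+2} & \cdots & s_{1p} \\
s_{2,q+1} & s_{2,q+2} & \cdots & s_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
s_{q,q+1} & s_{q,q+2} & \cdots & s_{qp}
\end{bmatrix}, \qquad
S_{21} = S_{12}'
\]

2.7 Matrix Inequalities and Maximization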

Cauchy-Schwarz Inequality: Let $b$ and $d$ be any two $p \times 1$ vectors; then

\[
(b'd)^2 \le (b'b)(d'd)
\]

with equality holding if and only if $b = cd$ for some scalar constant $c$.

Review (completing the square):

The idea is to write

\[
a_1x^2 + a_2x + a_3
\]

in the form

\[
a_1(x - h)^2 + k
\]

Equating coefficients yields

\[
h = \frac{-a_2}{2a_1}, \qquad k = a_3 - \frac{a_2^2}{4a_1}
\]

Proof:

Trivially true if $b = 0$ or $d = 0$.

Assume that $b \ne 0$ and $d \ne 0$, and consider

\[
b - xd
\]

where $x$ is an arbitrary scalar constant.

Now, if $b - xd \ne 0$ for every $x$,

\[
0 < (b - xd)'(b - xd) = b'b - 2xb'd + x^2d'd
\]

We can complete the square on the right with

\[
a_1 = d'd, \qquad a_2 = -2b'd, \qquad a_3 = b'b
\]

\[
h = \frac{-a_2}{2a_1} = \frac{2b'd}{2d'd} = \frac{b'd}{d'd}
\]

\[
k = a_3 - \frac{a_2^2}{4a_1} = b'b - \frac{(-2b'd)^2}{4d'd} = b'b - \frac{(b'd)^2}{d'd}
\]

to obtain

\[
0 < b'b - \frac{(b'd)^2}{d'd} + (d'd)\left(x - \frac{b'd}{d'd}\right)^2
\]

If we let

\[
x = \frac{b'd}{d'd}
\]

we obtain

\[
0 < b'b - \frac{(b'd)^2}{d'd}
\]

and

\[
0 < (b'b)(d'd) - (b'd)^2
\]

If

\[
b = cd
\]

then

\[
(b - cd)'(b - cd) = 0
\]

and we can retrace the previous steps to show that

\[
0 = (b'b)(d'd) - (b'd)^2
\]

Extended Cauchy-Schwarz Inequality: Let $b$ and $d$ be any two $p \times 1$ vectors and $B$ be a $p \times p$ positive definite matrix; then

\[
(b'd)^2 \le (b'Bb)\left(d'B^{-1}d\right)
\]

with equality holding if and only if $b = cB^{-1}d$ for some scalar constant $c$.

Proof:

Trivially true if $b = 0$ or $d = 0$.

Assume that $b \ne 0$ and $d \ne 0$.

$B^{1/2}$ may be written as

\[
B^{1/2} = \sqrt{\lambda_1}\,e_1e_1' + \sqrt{\lambda_2}\,e_2e_2' + \cdots + \sqrt{\lambda_p}\,e_pe_p'
\]

and $B^{-1/2}$ is

\[
B^{-1/2} = \frac{1}{\sqrt{\lambda_1}}e_1e_1' + \frac{1}{\sqrt{\lambda_2}}e_2e_2' + \cdots + \frac{1}{\sqrt{\lambda_p}}e_pe_p'
\]

Now

\[
b'd = b'B^{1/2}B^{-1/2}d = \left(B^{1/2}b\right)'\left(B^{-1/2}d\right)
\]

Finally we apply the Cauchy-Schwarz Inequality to $B^{1/2}b$ and $B^{-1/2}d$, which gives

\[
(b'd)^2 \le \left(B^{1/2}b\right)'\left(B^{1/2}b\right)\left(B^{-1/2}d\right)'\left(B^{-1/2}d\right) = (b'Bb)\left(d'B^{-1}d\right)
\]

Maximization Lemma: Let $B$ be a $p \times p$ positive definite matrix and $d$ a given $p \times 1$ vector; then

\[
\max_{x \ne 0} \frac{(x'd)^2}{x'Bx} = d'B^{-1}d
\]

where the maximum is attained for

\[
x = cB^{-1}d
\]

for any scalar $c \ne 0$.

Proof:

By the extended Cauchy-Schwarz inequality

\[
(x'd)^2 \le (x'Bx)\left(d'B^{-1}d\right)
\]

Dividing by $x'Bx > 0$ yields

\[
\frac{(x'd)^2}{x'Bx} \le d'B^{-1}d
\]

so that the maximum must occur for

\[
x = cB^{-1}d
\]

where $c \ne 0$.
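The Maximization Lemma can be spot-checked numerically. In the sketch below the positive definite matrix `B` and the vector `d` are hypothetical values chosen for illustration; the ratio $(x'd)^2 / x'Bx$ is evaluated at $x = B^{-1}d$ and at a few other nonzero vectors, using exact fractions.

```python
from fractions import Fraction

def inverse_2x2(B):
    """Inverse of an invertible 2 x 2 integer matrix via (1/|B|) [[b22, -b12], [-b21, b11]]."""
    (a, b), (c, d) = B
    det = a * d - b * c
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]

def inner(x, y):
    """Inner product x'y."""
    return sum(a * b for a, b in zip(x, y))

def matvec(M, x):
    """Matrix-vector product M x."""
    return [inner(row, x) for row in M]

def ratio(x, d, B):
    """(x'd)^2 / (x'Bx) for nonzero x."""
    return Fraction(inner(x, d)) ** 2 / inner(x, matvec(B, x))

B = [[2, 1], [1, 2]]       # hypothetical positive definite matrix
d = [1, 0]                 # hypothetical vector
B_inv = inverse_2x2(B)

bound = inner(d, matvec(B_inv, d))       # d'B^{-1}d
x_star = matvec(B_inv, d)                # maximizer with c = 1
others = [[1, 0], [0, 1], [1, 1], [1, -1], [3, -2]]
print(bound, ratio(x_star, d, B))
print([ratio(x, d, B) for x in others])
```

The ratio at `x_star` equals the bound $d'B^{-1}d$, and none of the other trial vectors exceeds it. A finite set of trial vectors illustrates the lemma but does not prove it.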

Maximization of Quadratic Forms for Points on the Unit Sphere: Let $B$ be a $p \times p$ positive definite matrix with eigenvalues

\[
\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_p > 0
\]

and associated normalized eigenvectors $e_1, e_2, \ldots, e_p$. Then

\[
\max_{x \ne 0} \frac{x'Bx}{x'x} = \lambda_1
\]

attained when $x = e_1$;

\[
\min_{x \ne 0} \frac{x'Bx}{x'x} = \lambda_p
\]

attained when $x = e_p$. Also

\[
\max_{x \perp e_1, e_2, \ldots, e_k} \frac{x'Bx}{x'x} = \lambda_{k+1}
\]

attained when $x = e_{k+1}$, for $k = 1, 2, \ldots, p - 1$.

Proof:

Consider the spectral decomposition of $B$,

\[
B = P\Lambda P'
\]

and

\[
B^{1/2} = P\Lambda^{1/2}P'
\]

Now let $y = P'x$. Then

\[
\begin{aligned}
\frac{x'Bx}{x'x} &= \frac{x'B^{1/2}B^{1/2}x}{x'PP'x} \\
&= \frac{x'B^{1/2}B^{1/2}x}{y'y} \\
&= \frac{x'P\Lambda^{1/2}P'P\Lambda^{1/2}P'x}{y'y} \\
&= \frac{y'\Lambda y}{y'y} \\
&= \frac{\sum_{i=1}^{p} \lambda_iy_i^2}{\sum_{i=1}^{p} y_i^2}
\end{aligned}
\]

and

\[
\frac{\sum_{i=1}^{p} \lambda_iy_i^2}{\sum_{i=1}^{p} y_i^2} \le \lambda_1\frac{\sum_{i=1}^{p} y_i^2}{\sum_{i=1}^{p} y_i^2} = \lambda_1
\]

When $x = e_1$ we have

\[
y = P'e_1 = \begin{bmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{bmatrix}
\]

and

\[
\frac{x'Bx}{x'x} = \frac{\sum_{i=1}^{p} \lambda_iy_i^2}{\sum_{i=1}^{p} y_i^2} = \lambda_1
\]

Now note that

\[
\frac{\sum_{i=1}^{p} \lambda_iy_i^2}{\sum_{i=1}^{p} y_i^2} \ge \lambda_p\frac{\sum_{i=1}^{p} y_i^2}{\sum_{i=1}^{p} y_i^2} = \lambda_p
\]

When $x = e_p$ we have

\[
y = P'e_p = \begin{bmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{bmatrix}
\]

and

\[
\frac{x'Bx}{x'x} = \frac{\sum_{i=1}^{p} \lambda_iy_i^2}{\sum_{i=1}^{p} y_i^2} = \lambda_p
\]

Now

\[
y = P'x
\]

so

\[
x = Py = y_1e_1 + y_2e_2 + \cdots + y_pe_p
\]

Since $x \perp e_1, e_2, \ldots, e_k$ we have that for $1 \le i \le k$

\[
0 = e_i'x = y_1e_i'e_1 + y_2e_i'e_2 + \cdots + y_pe_i'e_p = y_i
\]

and

\[
\frac{x'Bx}{x'x} = \frac{\sum_{i=k+1}^{p} \lambda_iy_i^2}{\sum_{i=k+1}^{p} y_i^2} \le \lambda_{k+1}
\]

The maximum is attained for $y_{k+1} = 1$, $y_{k+2} = \cdots = y_p = 0$.

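Example: Let

\[
B = \begin{bmatrix} 1 & 0 \\ 0 & 2 \end{bmatrix}
\]

Here $\lambda_1 = 2$, $\lambda_2 = 1$ and

\[
e_1 = \begin{bmatrix} 0 \\ 1 \end{bmatrix}, \qquad e_2 = \begin{bmatrix} 1 \\ 0 \end{bmatrix}
\]

\[
x'Bx = \begin{bmatrix} x_1 & x_2 \end{bmatrix}\begin{bmatrix} 1 & 0 \\ 0 & 2 \end{bmatrix}\begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = x_1^2 + 2x_2^2
\]

We have that

\[
\max_{x \ne 0} \frac{x_1^2 + 2x_2^2}{x_1^2 + x_2^2} = 2
\]

is attained for

\[
e_1 = \begin{bmatrix} 0 \\ 1 \end{bmatrix}
\]

and

\[
\min_{x \ne 0} \frac{x_1^2 + 2x_2^2}{x_1^2 + x_2^2} = 1
\]

is attained at

\[
e_2 = \begin{bmatrix} 1 \\ 0 \end{bmatrix}
\]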
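The maximum and minimum in this example can be approximated by evaluating the ratio on a grid of unit vectors $z = (\cos t, \sin t)$. This is a rough numerical sketch for the specific matrix $B = \operatorname{diag}(1, 2)$; the one-degree grid spacing is an arbitrary choice.

```python
import math

def rayleigh(x1, x2):
    """x'Bx / x'x for B = diag(1, 2) and nonzero x = (x1, x2)."""
    return (x1 ** 2 + 2 * x2 ** 2) / (x1 ** 2 + x2 ** 2)

# evaluate the ratio on unit vectors z = (cos t, sin t), one per degree
angles = [2 * math.pi * k / 360 for k in range(360)]
values = [rayleigh(math.cos(t), math.sin(t)) for t in angles]

largest, smallest = max(values), min(values)
print(largest, smallest)
print(rayleigh(0, 1), rayleigh(1, 0))    # the ratio at e1 and at e2
```

The grid maximum and minimum match $\lambda_1 = 2$ and $\lambda_2 = 1$ up to rounding, and the ratio takes exactly those values at $e_1$ and $e_2$.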

Note that

\[
\frac{x'Bx}{x'x} = z'Bz
\]

where

\[
z = x/\sqrt{x'x}
\]

Vector $z$ lies on the surface of the $p$-dimensional unit sphere centered at $0$ since

\[
z'z = 1
\]

Therefore the results of this section actually have to do with the maximization and minimization of $x'Bx$ on the unit sphere.

Homework 2.3