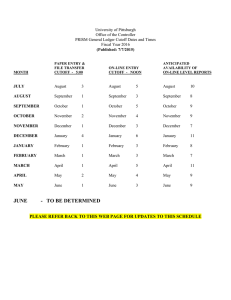

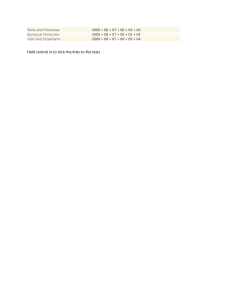

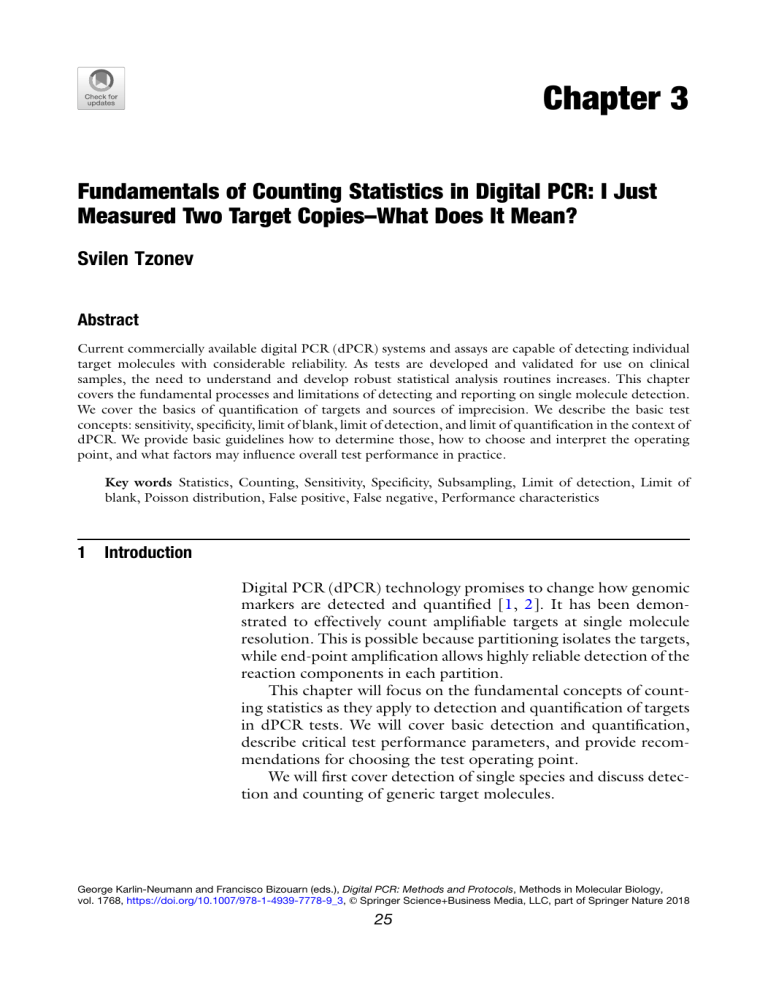

Chapter 3

Fundamentals of Counting Statistics in Digital PCR: I Just Measured Two Target Copies–What Does It Mean?

Svilen Tzonev

Abstract

Current commercially available digital PCR (dPCR) systems and assays are capable of detecting individual target molecules with considerable reliability. As tests are developed and validated for use on clinical samples, the need to understand and develop robust statistical analysis routines increases. This chapter covers the fundamental processes and limitations of detecting single molecules and reporting on them. We cover the basics of quantification of targets and sources of imprecision. We describe the basic test concepts: sensitivity, specificity, limit of blank, limit of detection, and limit of quantification in the context of dPCR. We provide basic guidelines on how to determine them, how to choose and interpret the operating point, and what factors may influence overall test performance in practice.

Key words Statistics, Counting, Sensitivity, Specificity, Subsampling, Limit of detection, Limit of blank, Poisson distribution, False positive, False negative, Performance characteristics

1 Introduction

Digital PCR (dPCR) technology promises to change how genomic markers are detected and quantified [1, 2]. It has been demonstrated to effectively count amplifiable targets at single molecule resolution. This is possible because partitioning isolates the targets, while end-point amplification allows highly reliable detection of the reaction components in each partition. This chapter will focus on the fundamental concepts of counting statistics as they apply to detection and quantification of targets in dPCR tests. We will cover basic detection and quantification, describe critical test performance parameters, and provide recommendations for choosing the test operating point. We will first cover detection of a single species and discuss detection and counting of generic target molecules.
George Karlin-Neumann and Francisco Bizouarn (eds.), Digital PCR: Methods and Protocols, Methods in Molecular Biology, vol. 1768, https://doi.org/10.1007/978-1-4939-7778-9_3, © Springer Science+Business Media, LLC, part of Springer Nature 2018

2 Counting Molecules

In digital PCR the PCR reaction is first partitioned into independent, usually equal size, volumes (droplets or chambers on a device) [3]. During a standard thermocycling protocol, amplifiable genomic target molecules will be replicated and their number will increase exponentially. In a common format, hydrolysis probes matching specific amplicon counterparts are used. The probes carry quencher and dye molecule attachments. When the polymerase enzyme cleaves the hybridized probes, it releases unquenched dye molecules into the reaction. Thus, the concentration of free dye in the partition will increase exponentially with the PCR cycles. At end-point, any partition that initially contained an amplifiable target will contain a detectably high concentration of fluorescent dye and can be called “positive.” Any partition that did not contain a target will remain “negative.”

In practice, we have to deal with damaged or otherwise poorly PCR-accessible target molecules that may not amplify from cycle 1, nonspecific probe hybridization that may lead to false positives, polymerase errors that may lead to false positives or false negatives, and other complications. We will cover such cases later. First we will focus on an “ideal dPCR counting machine” that can detect with 100% certainty and no confusion the presence or absence of a target molecule in a partition.

3 Subsampling

Let us first consider a test that detects a single species of target molecules. Even a perfect counting machine, which makes no mistakes of any kind, has to deal with the stochastic nature of many molecular phenomena.
In a typical experiment or test, we want to measure the concentration of a particular biomolecule as it is in vivo. In order to do this, we have to use a subsample of the whole, such as a draw of blood or a tissue biopsy. We measure the subsample in vitro to estimate the state of the whole. In the following we will use the liquid biopsy paradigm to illustrate the basic concepts.

A hypothetical patient has 5 L of blood. If we draw 10 ml of blood at a time, we are subsampling 0.2% of the whole blood volume. Let us say that there are 5000 copies of the target molecule of interest in the 5 L of blood. This corresponds to a concentration of 1 target per ml of blood. In any 10 ml draw, we expect to collect on average 10 target molecules. Assuming perfect sample processing with no losses of any kind, we would expect to load on average 10 targets in our machine, which would detect all of them. However, any individual 10 ml draw may contain 8 or 11 or 10 actual target molecules. Our ability to make confident statements about the whole is still limited by the subsampling problem (see Fig. 1).

Fig. 1 Subsampling process. A patient has 5 L of blood in which 5000 molecules of interest are present. Any 10 ml draw of blood represents 0.2% of the total blood volume. We would expect to see on average 10 target molecules. However, in any actual 10 ml draw, we may see different numbers of target molecules (for example 8, 11, or 10 copies in three different draws) due to the stochastic nature of molecule distribution in the 5 L of blood

How variable is the number of actual targets per draw?
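The blood-draw example can be explored numerically. The following is a minimal sketch, assuming that each of the 5000 circulating copies ends up in the 10 ml draw independently with probability 0.002 (a binomial model); the function name `draw_probability` is illustrative and not part of the chapter.

```python
from math import comb

def draw_probability(k, total_copies=5000, draw_fraction=0.002):
    """Probability that one draw contains exactly k of the circulating copies.

    Assumes every copy is captured independently with probability draw_fraction.
    """
    p = draw_fraction
    return comb(total_copies, k) * p**k * (1 - p) ** (total_copies - k)

# Expected copies per draw: 5000 copies x 0.2% of the blood volume
expected_copies = 5000 * 0.002

# Chance of seeing exactly 8, 10, or 11 copies in a single draw
for k in (8, 10, 11):
    print(f"P({k} copies in the draw) = {draw_probability(k):.3f}")

# Chance that a draw lands within one copy of the expected value
p_within_one = sum(draw_probability(k) for k in (9, 10, 11))
print(f"P(9 to 11 copies) = {p_within_one:.3f}")
```

Under this assumption, even the single most likely outcome (10 copies) occurs in only a minority of draws, which is the variability the subsampling discussion describes.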
When we are drawing a tiny fraction of the whole and, as long as we can assume that the targets are all independent and equally likely to be sampled, we can describe the probability to draw exactly k actual targets with the Poisson distribution:

$$P(\text{draw } k \text{ molecules} \mid \text{expected number of molecules is } \mu) = \frac{\mu^{k} e^{-\mu}}{k!}$$

where μ is the expected or average number drawn; in our case it is 10. Note that k must be an integer (we may draw 0, 1, 2, etc. molecules), while μ can be a noninteger number (the average may be 1.5 molecules).

Figure 2 illustrates the Poisson distribution for μ = 3, 10, and 100. As we can see, when μ is small, there is significant variability of how many targets will be subsampled in a draw relative to the expected number. Note how in the case of three expected molecules, there is about 5% probability that the draw would contain no targets at all! This observation will form the basis of the fundamental bound on the limit of detection, discussed later. Note also that for large μ the relative uncertainty shrinks as the distribution becomes more concentrated around the average. It also becomes more like the familiar normal or Gaussian distribution.

Fig. 2 Poisson distribution (horizontal axis: number of molecules drawn; vertical axis: probability). Poisson distribution for three different values of the expected number of molecules drawn, μ = 3, 10, or 100, with $P(k \mid \mu) = \mu^{k} e^{-\mu} / k!$. The height of each bar for a particular integer k represents the probability to draw k molecules. Note how for small μ, the distribution shape is skewed and becomes increasingly more symmetrical and concentrated around the maximum for larger values of μ.
For each case, the probability to draw exactly zero molecules is also shown

4 Multiple Occupancy and Partitioning

The original concept of dPCR assumed a limiting dilution regime, i.e., each partition would not contain more than one target with any significant probability. Most current commercial systems [4–7] allow robust measurement of target copies even when multiple molecules can occupy the same partition with a significant probability. Quantification is possible under the assumption that the targets are allocated to a partition independently and with equal probability. (Such assumptions would be violated if the targets are in some way “entangled” or if the partitions are of different volumes.) It turns out that the appropriate statistical framework is also based on the Poisson distribution, since each partition is similar to a blood draw from the previous example: each partition is a small subsample of a larger whole. For specific details see [8, 9]; here we will only focus on how the target concentration is estimated.

A positive partition may have contained one or more targets. There is no way to be certain. A negative partition, on the other hand, must have contained exactly 0 targets, otherwise it would be positive. Once we have counted all partitions (positive and negative), N_tot, and all negative partitions, N_neg, we can use this formula to estimate the average target occupancy per partition, λ:

$$\lambda = \ln(N_{tot}) - \ln(N_{neg}) = -\ln\left(\frac{N_{neg}}{N_{tot}}\right)$$

The total number of targets, T, present at the beginning of the reaction is then simply

$$T = \lambda \cdot N_{tot}$$

The value of λ calculated in this way is the “most likely value.” We cannot be sure of the exact number in reality since we do not know the precise distribution of targets into partitions. To arrive at the target concentration, we divide λ by the partition volume:

$$[T] = \frac{\lambda}{V_{partition}}$$

Consider a given reaction mix with a fixed number of targets in it.
If we perform multiple partitioning thought experiments we can understand the variability of the results around the most likely values. For each partitioning, depending on the exact (stochastic) pattern of target distribution into the partitions, we may see a different number of negative partitions. This would lead us to estimate slightly different values of the target copies. We are again subject to the inevitable stochastic nature of how molecules “choose” their partitions.

Figure 3 illustrates the concept of partitioning uncertainty. We have partitioned the same reaction volume with the same number of molecules, T, three times into the same number of partitions of equal volume. Since the molecules are distributed stochastically into the partitions, the patterns in each realization will be different. The observed number of partitions with no molecules will also vary between realizations. In fact, the number of actual negative partitions is a variable that also follows a Poisson distribution, given the expected number of negative partitions. We can then use similar math as above to describe how uncertain the estimate of λ is going to be even when we know the exact number of molecules, T, in the total volume.

Fig. 3 Partitioning uncertainty. A thought experiment where a reaction volume with a fixed number of molecules is partitioned three times (yielding 20, 17, and 19 negative partitions). The exact location of each molecule in the resulting partitions is stochastic. This leads to a different number of partitions with no molecules at all; negative partitions are highlighted. This will result in a somewhat different “most likely estimate” of the number of molecules in the reaction. The number of negative partitions is itself a variable that follows a Poisson distribution
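The partitioning thought experiment can be imitated with a short simulation. This is only a sketch under stated assumptions: the partition and molecule counts (20,000 and 10,000), the random seed, and the helper names `partition_once` and `estimate_from_negatives` are illustrative choices, and every molecule is assumed to pick one of the equal-volume partitions uniformly at random.

```python
import math
import random

def partition_once(n_molecules, n_partitions, rng):
    """Place each molecule in a uniformly random partition; return the count of empty partitions."""
    occupied = {rng.randrange(n_partitions) for _ in range(n_molecules)}
    return n_partitions - len(occupied)

def estimate_from_negatives(n_neg, n_tot):
    """Return (lambda, T) using lambda = -ln(N_neg / N_tot) and T = lambda * N_tot."""
    lam = -math.log(n_neg / n_tot)
    return lam, lam * n_tot

rng = random.Random(1)            # fixed seed so the run is repeatable
true_T, n_tot = 10_000, 20_000    # hypothetical reaction: true occupancy of 0.5 per partition

estimates = []
for _ in range(3):                # three realizations of the same reaction, as in Fig. 3
    n_neg = partition_once(true_T, n_tot, rng)
    lam, T_hat = estimate_from_negatives(n_neg, n_tot)
    estimates.append(T_hat)
    print(f"negatives={n_neg}  lambda={lam:.4f}  estimated T={T_hat:.0f}")
```

Each realization yields a slightly different number of negative partitions and therefore a slightly different estimate of T, even though the true number of molecules never changes.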
5 Total Poisson Uncertainty

So far, we saw how subsampling and partitioning variability limit our ability to estimate precisely the number of target molecules in vivo even with a perfect counting machine. When we combine the two sources of variability, we arrive at the frequently described dPCR uncertainty curve, also shown in Chap. 2. When only a small number of targets is drawn (provided we have enough partitions), the total uncertainty is dominated by the subsampling process. In other words, it does not matter if we put five actual targets in 20,000 or 20,000,000 partitions: we will know with high confidence that there were five targets in the reaction we measured and approximately five targets per blood draw, if the draws were repeated multiple times. When the number of targets increases relative to the number of partitions (average occupancy λ is larger), at some point there will be too few negative partitions. Since the actual number of negative partitions is a stochastic variable, the uncertainty of λ increases and, thus, the uncertainty of T.

Figure 4 illustrates the contributions from subsampling and partitioning errors to the total Poisson statistical error. For low occupancy numbers, λ (number of targets much less than the number of partitions), total uncertainty is dominated by subsampling. For large occupancy numbers (number of targets much larger than the number of partitions), total uncertainty is dominated by partitioning. The lowest overall uncertainty is reached at λ_opt ≈ 1.6. For 10,000 partitions, even when λ = 6, the coefficient of variation of λ and T is ~3%, i.e., sufficiently low to allow accurate and precise quantification in most cases.

Fig. 4 Total Poisson error = subsampling + partitioning errors, expressed as % CV, as a function of average targets per partition, λ. The black curve shows the subsampling error. The blue curve shows the total Poisson error. The distance between the curves is due to the partitioning error. For small λ, the subsampling error dominates, while the partitioning error is small. For large λ, the subsampling error is small, while the partitioning error dominates. The total Poisson error is minimized at λ_opt ≈ 1.6. Values for %CV are calculated for 10,000 partitions. Increasing the number of partitions brings all curves lower (smaller %CV) but does not change the general shapes. The optimum stays at 1.6 targets per partition

A few words about the concept of confidence intervals. When we estimate experimentally some unknown variable we talk about a point estimate and a confidence interval around it. The point estimate is the most likely value of the variable; this is what is usually reported. A 95% confidence interval around the point estimate describes the range between a minimum and a maximum value that is expected to contain the true value of the variable 95% of the time. In other words, if we knew the exact value of the variable and repeated the estimation experiment many times, 95% of the time the true value would indeed lie in the stated confidence interval. Most commercial dPCR systems report both point estimates and 95% confidence intervals for target copies and concentrations.

6 False Positives and False Negatives

So far, we have discussed an idealized case where our perfect system makes no mistakes of any kind. In practice, we have to deal with false positive and false negative reporting. In the simplest case, we can define a false positive or negative at the level of the partitions: a partition that should have been detected as a negative is reported as a positive and vice versa. The rates of such false reporting per partition define a false positive rate, FPR, and a false negative rate, FNR.
Which of these is more important depends on the test and how much we care about each kind of error. For example, when false (positive and negative) rates are small and there is a small number of targets, the FNR is usually not important in absolute terms, as we only have a few true positive partitions that may be converted into a false negative. In this case, the FPR is more significant as there is a large number of true negative partitions, each of which may turn into a false positive. In the opposite case—a large number of targets—it is the positive partitions that are numerous and thus FNR matters more, in absolute terms, than the FPR per partition. In both cases, the prevalence of the state or condition measured plays a role in determining which false rate is more important. This is true at the patient or sample level as well, in the sense of the prevalence of the condition we are trying to measure with our test. There are cases where the danger of misdiagnosis at the clinical level influences greatly the relative importance of FPR and FNR. For example, in a prenatal screening test for Down syndrome, most patients are expected to have normal pregnancies. Due to the large number of individuals screened, even a small test FPR will produce a large number of false positive results that would require additional procedures. On the other hand, a false negative result is also very damaging as the overall cost to the patient is significant, since the fetus would be incorrectly classified as normal. We can think of false positive and false negative rates at the level of a well, test (multiple wells) or sample (if multiple tests are performed). It is important to keep in mind which of these concepts is being used. The combination of the sample preparation protocol, detection assay and dPCR measurement system will be subject to the limitations imposed by both stochastic molecular processes and the FP/FN detection rates. 
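The argument about which false rate dominates can be made concrete with a little arithmetic. The sketch below uses hypothetical per-partition rates (both set to 1e-4) and a hypothetical 20,000-partition test; the function name `expected_false_partitions` is illustrative only.

```python
def expected_false_partitions(n_partitions, n_true_positive, fpr, fnr):
    """Expected number of misreported partitions of each kind in one test.

    fpr and fnr are per-partition rates; every partition is assumed to be
    misreported independently of the others.
    """
    n_true_negative = n_partitions - n_true_positive
    expected_false_pos = fpr * n_true_negative   # true negatives read as positive
    expected_false_neg = fnr * n_true_positive   # true positives read as negative
    return expected_false_pos, expected_false_neg

# Hypothetical rates, chosen equal so only the prevalence of positives differs
FPR = FNR = 1e-4

few_targets = expected_false_partitions(20_000, 5, FPR, FNR)
many_targets = expected_false_partitions(20_000, 15_000, FPR, FNR)

print("few targets   (FP, FN):", few_targets)
print("many targets  (FP, FN):", many_targets)
```

With only five positive partitions, nearly all expected errors are false positives; with 15,000 positive partitions, false negatives outnumber false positives even though the two rates are identical.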
A few common examples of processes that can lead to false results include:

- Nonspecific primer and/or probe hybridization
- Polymerase errors
- Fluorescent dust particles
- Contamination and cross-contamination
- Inappropriate thresholding algorithms

In practice, these should be recognized and controlled through assay design, periodic equipment maintenance, robust laboratory practices, and frequent specific process monitoring.

7 Basic Test Concepts and Their Interpretation in dPCR

We distinguish two major types of tests: qualitative and quantitative. A qualitative test can only categorize an unknown sample as positive or negative (in some cases there is also a third, uncalled category). A quantitative test will produce a value for the measurand of interest in an unknown sample when possible or perhaps produce an out-of-reportable-range result. For dPCR, quantification is most often reported as target counts or copies in the test or as concentration of target copies in the test. There may be other derivative measures that are based on combinations of such measurements, for example a ratio of two concentrations or similar.

dPCR essentially always produces a direct estimate of the target copies in the reaction. Thus, it is by nature quantitative. To call a sample negative or positive, an analytical call cutoff value is applied: any sample with detected copies higher than or equal to the cutoff is declared as positive; any sample with fewer copies than the cutoff is declared as negative. In some cases, there may be a “grey zone” where no call is produced. We will focus on the simpler binary version of sample positive/negative determination.

Three fundamental parameters determine the performance of a given dPCR test: FPR, FNR, and the choice of analytical cutoff value (if needed for a qualitative test).
Next, we will define additional important test descriptors and discuss how they are influenced by these fundamental parameters in the context of counting assays in dPCR. Looking ahead at Fig. 5, discussed below, will help the reader visualize the concepts.

8 Sensitivity

For a qualitative test the sensitivity is defined as the probability that a truly positive (as determined by a prior test or clinical criterion) sample is measured as positive by the test. 100% sensitivity means that all truly positive samples do come out as positive by the test. Sensitivity may be less than 100% when the FNR per test is higher than zero or if the call cutoff value is set too high and truly positive samples are called as negatives. Intuitively, if we challenge the test with strongly positive or strongly negative samples, we can achieve better performance (see below on the concept of limit of detection).

9 Specificity

For a qualitative test, specificity is defined as the probability that a truly negative sample is measured as negative by the test. Again, 100% specificity means that the test is never wrong when negative samples are measured. Any value higher than zero for the FPR per test will lead to specificity that is less than 100%. Alternatively, a cutoff that is set too low, causing truly negative samples to be called as positives, will also cause specificity of less than 100%.

Figure 5 illustrates the inherent trade-off between sensitivity and specificity. Note how by moving the cutoff value, one changes both the specificity and sensitivity of a given test, increasing one while decreasing the other. For tests that produce integer values (molecules detected in test) cutoff values can only be discrete, which leads to a set of possible combinations of sensitivity/specificity values.

Fig. 5 Relationship between sensitivity, specificity, and call cutoff. (a) Classic description and definition of these test concepts. Horizontal axis: signal measured by the test (in dPCR this is detected targets/events per test). Vertical axis: probability of a particular level to be measured. Left curve: the test response to a negative/blank control. Right curves: the test response to two positive samples at different levels. Depending on where we place the call cutoff, the areas under the curves to the right and to the left of the cutoff determine the values of specificity (β level) and sensitivity (α level), respectively. Sensitivity = 1 − α. Specificity = 1 − β. Moving the cutoff to the right will decrease β and increase α, thus increasing specificity but decreasing sensitivity. The opposite is true when moving the cutoff to the left. The value of the measurand for which α0 = 5% determines the 95% confidence level limit of detection of the test (indicated as the peak of the corresponding positive control curve, or most likely value). For increased sensitivity, α1 < 5%, the corresponding LOD will be greater than the LOD at 95%. When we use blank samples for the negative control, the cutoff value corresponds to the limit of blank at the 1 − β level. (b) Same concepts as they apply in dPCR. Continuous curves are replaced by histograms as dPCR reports integer values for the targets detected. Blue: histogram of possible copies for a negative sample. Red: histogram of possible copies for a positive sample. Moving the cutoff value is only possible in discrete steps, which results in jumps of possible values of sensitivity and specificity (α and β levels not shown)

10 Limit of Blank (LOB)

The limit of blank, LOB, at a particular confidence level, usually 95%, is a critical concept.
The formal definition, according to CLSI guidelines [10], is the maximum value of the analyte that may be reported 95% of the time when we measure a true blank sample. In dPCR we report counts of target molecules, thus the LOB must be an integer: 0, 1, 2, etc. counts per test. When we say that the LOB for a test is 1 copy, we mean that 95% of the time when we measure a negative/blank sample we will report at most one copy. This is equivalent to stating that we will report 2, 3, or more copies at most 5% of the time (see Fig. 5).

The LOB is closely related to the specificity of the test and the underlying FPR per partition or per test. When we measure a blank sample, each partition should be reported as negative. However, when the FPR per partition is not 0, some truly negative partitions may be reported as false positives. In most tests we will still report 0 positive partitions and, thus, 0 target copies. But for some samples, we may report 1, 2 or more copies. These will all be false positive counts.

The robust way to experimentally measure the LOB of a test is to run multiple blank samples, record the number of targets reported, and rank order the results. When we draw a line at the 95th percentile, the value where this happens is the 95% LOB. It is good practice to verify the assumed Poisson distribution of the counts by fitting a model. Theory predicts that these counts will also follow a Poisson distribution, as long as each negative partition may be independently misreported as positive. The average number of false positive target counts per test will equal the FPR per partition times the number of partitions per test. If the counts per test do not appear to follow a Poisson distribution, we may still use the LOB number, but need to be aware that effects other than unreliable detection of negative partitions are playing a role. These may point to additional assay variability or inadequate lab practices.
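Both routes to the LOB described above, the rank-ordered blank runs and the Poisson model, can be sketched in a few lines. The blank counts, the per-partition FPR values, and the function names below are hypothetical, and the percentile rule used here (the smallest count that covers at least 95% of the ranked blank runs) is one simple convention assumed for illustration rather than the formal CLSI procedure.

```python
import math

def poisson_cdf(n, mean):
    """P(X <= n) for a Poisson-distributed count with the given mean."""
    return sum(math.exp(-mean) * mean**k / math.factorial(k) for k in range(n + 1))

def lob_from_model(fpr_per_partition, n_partitions, confidence=0.95):
    """Smallest integer count that a blank stays at or below with the given confidence."""
    mean_fp = fpr_per_partition * n_partitions   # average false positive counts per test
    n = 0
    while poisson_cdf(n, mean_fp) < confidence:
        n += 1
    return n

def lob_from_blanks(blank_counts, confidence=0.95):
    """Empirical LOB: rank-order blank results and read off the count at the confidence level."""
    ranked = sorted(blank_counts)
    index = math.ceil(confidence * len(ranked)) - 1
    return ranked[index]

# Hypothetical validation data: 40 blank runs reporting 0, 1, or 2 copies
blank_runs = [0] * 30 + [1] * 8 + [2] * 2
print("empirical LOB:", lob_from_blanks(blank_runs))

# Hypothetical per-partition false positive rates on a 20,000-partition test
print("model LOB, mean 0.02 FP per test:", lob_from_model(1e-6, 20_000))
print("model LOB, mean 1.0 FP per test:", lob_from_model(5e-5, 20_000))
```

Comparing the empirical value with the model value for the measured mean false positive count is one way to check whether the blank counts behave as the Poisson assumption predicts.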
11 Limit of Detection (LOD)

The limit of detection, LOD, at a particular confidence level, frequently 95%, is another critical concept. The formal definition, according to the CLSI guidelines, is the minimum level of the analyte in a sample that will be reported as detected with this same 95% probability.

The LOD level depends on the analytical call cutoff threshold and the FPR and FNR values. An increase of the FPR per partition will lead to an increase of the false positives and will cause the system to report on average higher counts than truth, pushing the apparent LOD lower if the cutoff is held constant (see below). An increase of the FNR has the opposite effect: it may cause positive partitions to appear as negative, effectively reporting a lower value for the analyte than truth. This will increase the LOD, if we hold the cutoff threshold constant.

The lowest possible value for the call cutoff is 1 copy per test, i.e., if we see a single copy of the target we will call the sample positive. Even if FPR and FNR are exactly zero, we already saw how subsampling puts a fundamental limit on what target levels we can detect confidently. Recall that when we expect to draw on average three target copies, there is a 5% probability that any individual draw will contain no copies at all, so there would be nothing to detect! This observation sets a critical level of three copies as the natural LOD of molecular counting assays such as dPCR when subsampling is a factor (see exceptions in the “Finite Subsampling” section below). This limitation applies to any other counting-based method, like versions of next generation sequencing, that detects individual target molecules. Choosing a higher level for the call cutoff will produce a higher LOD value. Table 1 illustrates the LOD 95% values for different cutoff values.

A word of caution: the reader should not confuse the concepts of sensitivity and positive predictive value (PPV) of a test.
The sensitivity, again, relates to the probability that the test will call a positive sample as positive, while PPV inverts the logic: it defines the probability that a sample that is called positive by the test is indeed positive in reality.

Table 1 LOD 95% values for different test cutoff values (copies per test). FPR = 0, FNR = 0

| Call cutoff (positive sample), copies per test | LOD 95%, copies per test |
|---|---|
| 1 | 3.00 |
| 2 | 4.74 |
| 3 | 6.30 |
| 4 | 7.75 |
| 5 | 9.15 |

12 Limit of Quantification (LOQ)

For quantitative tests the limit of quantification (LOQ) of a test is defined as the minimum level of an analyte that can be measured within a predefined level of uncertainty. The uncertainty is typically expressed by the standard deviation or the coefficient of variation based on multiple measurements of a given sample at the LOQ level. The preferred imprecision level depends on the situation; typical values include 20%, 35%, or higher, depending on the requirements of the application.

By definition, the values of the three parameters must be ordered

$$LOB < LOD \le LOQ$$

For any test the LOD must be higher than the LOB, and the LOQ cannot be lower than the LOD. At the lowest LOD achievable, three copies, the minimal possible coefficient of variation is

$$CV_{min} = \frac{1}{\sqrt{3}} \approx 58\%$$

In practice, the observed CV at this level will usually be higher as other sources of variability will inevitably add to this theoretical minimum. There are dPCR tests with remarkably low levels of LOB, LOD, and LOQ, which reach very close to the theoretical limits of single molecule detection.

13 Why and How Do These Performance Characteristics Matter

The concepts discussed above describe the overall performance of a test of interest. This is expressed in the context of “absolute truth” on the analytical side or a prior method of measurement of what matters on the clinical side.
For example, if a patient has ten viral particles per 10 ml of blood (for a total of 5000 in the circulation), is this clinically significant? Should this be viewed as a positive or a negative patient? Typically, one chooses the clinical cutoff level on a population basis based on criteria relevant to patient health or prognosis. The relationships between the test performance characteristics and the clinical cutoff level determine the ultimate utility of the test.

The meaning and the context of selected concepts are summarized in Table 2.

Table 2 Clinical and test concepts: meaning and context

| Concept | Applies to | Determines | How known or chosen | When known relative to test |
|---|---|---|---|---|
| Clinical cutoff | Patient population | Is a patient considered clinically positive or negative | Based on external clinical knowledge | Before the test is performed |
| Test/call cutoff | Test | When the test reports a positive or negative result | Chosen to satisfy test requirements | Before the test is performed |
| LOB | Test | Maximum value (95% probability) that may be reported on a blank sample | Determined during test development and validation | Before the test is performed |
| LOD | Test | Level of analyte that can be reliably (95% probability) detected by the test | Determined during test development and validation | Before the test is performed |
| Test result | Sample + Test | What the test measured for a given sample | By performing a test on a sample | After the test is performed |

In general, a test is suitable for determining patient status when its LOD matches the clinical cutoff and it does not produce too many false positive results. But there may be cases where higher specificity is preferred and the LOD differs from the clinical cutoff. Table 2 also illustrates why it may be acceptable to report below a stated LOD: the LOD is what we know about the test before we have measured any particular sample.
The test result is what we know about the combination of test and sample, after we have performed a measurement (see “Reporting below LOD” below).

14 Effects of False Positives, False Negatives, and Call Cutoffs

When a test has finite (nonzero) FPR or FNR values, the values of LOB, LOD, and LOQ will be different from those obtained when the FPR and FNR are zero. In general, higher rates of false positive partitions will lead to higher values of LOB for a fixed specificity. Since the cutoff values must be integers, in practice there is a quantization effect in this relationship.

As an illustration, let us consider first a test with zero FPR and FNR. In this case the LOB will be zero copies per test at the 100% specificity level, and the LOD will be three copies per test at the 95% sensitivity level for a call cutoff of one copy per test. (The LOB will of course also be zero at the 95% specificity level.) The LOQ could be 11 copies per test at 30% CV, or perhaps five copies per test at 45% CV. These are the best possible performance characteristics of any test based on counting single molecules, no matter what technology is used.

Now let us increase the FPR to 0.01 copies per test. The LOB is still zero copies, but at the 99% specificity level, and the LOD will be 2.99 copies at 95% sensitivity. As we further increase the FPR per test, we can keep the LOB at zero but will lose specificity; at the same time the LOD will keep going lower for a fixed sensitivity level. We are still keeping the cutoff value at one copy per test. Refer to Fig. 5b. At some value of the FPR per test, the specificity may become unacceptably low and we may be forced to choose a different cutoff, the next integer up. When we do this, we regain higher specificity, but have to jump to a different zone for the LOD value; as a result, there will be a significant change in the LOD value.
This jump in the LOD happens because the specificity, sensitivity, and cutoff values are interrelated, and in digital we must use an integer value for the cutoff. This “integerness” requirement leads to specific zones of operation for any digital test. Table 3 illustrates the critical values of various parameters for selected specificity and sensitivity levels and the zones defined by the choice of cutoff level.

Here is how to read it: The first column contains the value of the call cutoff. Column 2 contains the maximum value of the FP counts per test allowed so we can operate at 95% specificity. Column 3 contains the corresponding value for the LOD at 95% sensitivity (given the cutoff value and the max FPR). All units are copies per test. The last column repeats the numbers from Table 1 and illustrates the LOD level if we were operating at exactly zero FPR. Let us look at the row for a cutoff of 1. If we need to have specificity of at least 95% and use this cutoff, our test cannot have an FPR higher than 0.05 copies per test. At this FPR level, the LOD at 95% sensitivity will be 2.94 copies per test.

Table 3 Critical levels of cutoff value, FPR, and LOD for selected specificity and sensitivity values (specificity 95%, sensitivity 95%; all values in copies per test)

| Call cutoff (positive sample) | Max FPR | LOD 95% | LOD 95% at 0 FPR |
| --- | --- | --- | --- |
| 1 | 0.05 | 2.94 | 3.00 |
| 2 | 0.36 | 4.39 | 4.74 |
| 3 | 0.82 | 5.48 | 6.30 |
| 4 | 1.37 | 6.38 | 7.75 |
| 5 | 1.97 | 7.19 | 9.15 |

For a test with an FPR of 0.1 copies per test, in order to keep specificity above 95%, we must choose a cutoff of 2. For this cutoff, the maximum FPR is 0.36 copies per test and the LOD at this FPR will be 4.39 at 95% sensitivity (row 2). Actual specificity for a test with 0.1 FPR and a cutoff of 2 copies will be higher than 95%, and we can estimate the LOD 95% by subtracting 0.1 from 4.74 to get 4.64. The careful reader will notice that the values of columns 2 and 3 add up to the value of column 4 (the last column) for any given cutoff value (subject to rounding effects).
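The entries of Table 3 can be reproduced from the Poisson distribution. The following is a minimal sketch, assuming that the blank counts and the total counts per test are both Poisson-distributed and that the LOD at a given FPR equals the zero-FPR LOD minus that FPR (the additivity of columns 2 and 3 noted above). The function names and the bisection solver are illustrative choices, not part of the chapter.

```python
import math

def poisson_cdf(k, mean):
    """P(X <= k) for X ~ Poisson(mean)."""
    return sum(math.exp(-mean) * mean**i / math.factorial(i)
               for i in range(k + 1))

def mean_for_cdf(k, target, upper=100.0):
    """Poisson mean at which P(X <= k) equals `target`, by bisection.
    The CDF decreases as the mean grows, so the root is bracketed."""
    lo, hi = 0.0, upper
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if poisson_cdf(k, mid) > target:
            lo = mid  # CDF still too high: the mean must be larger
        else:
            hi = mid
    return 0.5 * (lo + hi)

def table3_row(cutoff, specificity=0.95, sensitivity=0.95):
    """Return (max FPR, LOD at max FPR, LOD at zero FPR) in copies per test.
    A sample is called positive when at least `cutoff` copies are counted."""
    # Blank must stay below the cutoff with probability `specificity`.
    max_fpr = mean_for_cdf(cutoff - 1, specificity)
    # Positive sample must reach the cutoff with probability `sensitivity`.
    lod_zero_fpr = mean_for_cdf(cutoff - 1, 1.0 - sensitivity)
    # Assumption: false positives add to true counts, so they lower the LOD.
    lod_at_max_fpr = lod_zero_fpr - max_fpr
    return max_fpr, lod_at_max_fpr, lod_zero_fpr

for cutoff in range(1, 6):
    max_fpr, lod, lod0 = table3_row(cutoff)
    print(f"{cutoff}  {max_fpr:.2f}  {lod:.2f}  {lod0:.2f}")
```

Under these assumptions the computed values agree with Table 3 to within a unit in the last printed digit, which is consistent with the rounding effects mentioned in the text. For an FPR below the maximum, such as the 0.1 copies per test example, the same subtraction applies: 4.74 minus 0.1 gives 4.64.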
Intuitively, this additivity roughly translates to the statement that “all positive calls are either a true or a false positive for any given cutoff level.”

Our last example will be a test with an FPR of one copy per test and negligible FNR. At this FPR a cutoff of three gives less than 95% specificity (Table 3 allows at most 0.82 copies per test for that cutoff), and since we cannot choose a cutoff between three and four, we have to go to four. A sample is positive when we see at least four copies. In this case the LOB is three copies per test (at a specificity level higher than 95%, but we have no choice). The 95% LOD is approximately 6.75 copies per test (7.75 − 1.00). In this framework, we call a sample positive when we measure at least four copies, and we will correctly call a sample positive 95% of the time when the expected number of copies in our test, as drawn from the larger whole, is 6.75.

We need to emphasize once more that these relationships between the critical values are governed by fundamental counting statistics considerations. As long as we are trying to ascertain something about the sample in vivo based on a small representative subsample drawn from it, they will always apply. Subsampling at the molecular level is governed by Poisson statistics and no technology or approach can do better. dPCR technology and its commercial implementations do allow us to come very close to these physical limits.

15 Additional Considerations

15.1 Selecting the Operating Point

We saw that the values of call cutoff, sensitivity, and specificity are interrelated. How one chooses where to operate depends on the requirements of the test: Is it more acceptable to call false positives or false negatives? A commonly used scheme is to first select the specificity level required. Together with the measured FPR (and related LOB), this will determine the choice for the analytical cutoff value. Then we evaluate the sensitivity required, which will finally determine the LOD level for the test. Referring to Fig. 5a, we first measure the distribution of results on blank samples (left curve).
We choose the desired specificity, (1 − β), which sets the value of the call cutoff (the area under the blank curve to the right of the cutoff is β). We next select the desired sensitivity, (1 − α). To do so, measure multiple replicates of samples at different levels of positivity to generate a family of response curves (see Fig. 5a). The curve (i.e., level of positivity) for which a fraction α of the curve area lies to the left of the cutoff value determines the LOD at sensitivity (1 − α). Again, for quantized measurements, we may be forced to settle on particular values for specificity and sensitivity, since the cutoff must be an integer.

15.2 Finite Subsampling

There are circumstances where we assay most of the whole rather than a very small fraction of it. For example, experiments may be performed at the single-cell level, where most of the biological material is actually assayed. In such cases we will not suffer the subsampling penalties to the same level. We need to deal with hypergeometric distributions instead of Poisson, but such details go beyond the scope of this chapter. In the extreme case where we do measure the whole, the “what we see is what was there” principle applies and the only uncertainty would come from partitioning variability and any dead volume in the system.

15.3 Nonstandard Variability

We described above the cases where pure molecular stochasticity is involved, that is, the factors we know will always be present and that we cannot control. In practice, there may be other sources of variability that could increase uncertainty. Issues like inadequate lab practices, contamination, reagent quality, and intermittent system performance may invalidate the rules described here. Regular monitoring of predicted versus measured behavior is important. This is accomplished by running controls with any unknown samples and periodic evaluation of performance on such controls.
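As one illustration of comparing predicted with measured behavior on controls, the sketch below checks whether false positive counts from a series of negative controls look consistent with a Poisson model. It computes the index of dispersion (sample variance divided by mean), which is close to 1 for Poisson counts, and the frequencies a Poisson model would predict. The counts are invented purely for illustration and the function names are hypothetical; a formal statistical test and acceptance limits would have to be chosen during test validation.

```python
import math
import statistics

def dispersion_index(counts):
    """Sample variance divided by mean; near 1 for Poisson counts.
    Returns NaN when all counts are zero (index undefined)."""
    mean = statistics.mean(counts)
    if mean == 0:
        return float("nan")
    return statistics.variance(counts) / mean

def expected_poisson_frequencies(counts):
    """Number of runs expected to show k counts, for k up to the
    largest observed value, under a Poisson model with the sample mean."""
    n = len(counts)
    mean = statistics.mean(counts)
    return {k: n * math.exp(-mean) * mean**k / math.factorial(k)
            for k in range(max(counts) + 1)}

# Hypothetical false positive counts from ten negative-control runs.
negative_control_counts = [0, 0, 1, 0, 0, 0, 2, 0, 0, 1]

print("mean FP per test:", statistics.mean(negative_control_counts))
print("dispersion index:", round(dispersion_index(negative_control_counts), 2))
for k, expected in expected_poisson_frequencies(negative_control_counts).items():
    observed = negative_control_counts.count(k)
    print(f"k={k}: observed {observed}, expected {expected:.1f}")
```

A dispersion index well above 1 over many control runs would suggest extra variability, such as sporadic contamination, beyond pure counting statistics. With only a handful of runs the index is itself noisy, so it is best tracked over time.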
Monitoring the LOB on negative controls and verification of a Poisson distribution of the false positives is helpful as well.

15.4 Multitarget and Related-Target Considerations

A very common type of diagnostic test measures a reference target together with the biomarker of interest. For example, the number of wild-type genome copies detected is used as normalization for the number of mutant target copies. In this case a natural quantity of interest is the Minor Allele Frequency (MAF), the ratio of (mutant)/(mutant + wild type) copies. Clearly, samples with a higher number of wild-type copies may show a higher number of false mutant copies. The FPR per wild-type copy, then, becomes the natural metric to watch. Careful estimation and monitoring of this rate is important to develop and apply appropriate cutoff criteria.

The concepts of LOB, LOD, and LOQ, as described above, are applicable to the MAF value. In many cases the LOD, when expressed in MAF space, will still be determined by the availability of actual mutant copies. As we saw, the critical value is three mutant copies for 95% confidence, which, when divided by the total copies present (essentially the wild-type copies when the mutant is rare), will establish the critical level for the MAF at 95% confidence.

When a large number of wild-type copies are present, the cluster of partitions with both wild-type and mutant copies, i.e., double-positives, becomes more diffuse and it may be harder to select appropriate amplitude threshold values. One technique that can minimize the false calls is to use only pure mutant partitions as a reliable source of mutant copies and compare against the double-negative partitions’ count, although this will somewhat diminish the sensitivity of the test.

15.5 Reporting Below LOD

Should we report a result which falls below the LOD? The nature of dPCR allows us to do this with confidence, provided that certain criteria are met. Let us first consider what the LOD means.
The LOD is what we know about the detection capabilities of our test before we have performed an experiment on an unknown sample. At this stage we usually know nothing about the sample; this is why it is called unknown. After we have performed the measurement, we know more about the sample. The LOD applies to the test, while the actual reported level applies to the sample. Because dPCR essentially counts individual molecules, when we know that the FPR is sufficiently low, we may reliably call sample levels below the formal LOD. Consider a test with an FPR of less than 0.05 per test and, thus, with a 95% LOB of zero target copies. If we detect one or two targets, we are 95% confident that these are real and can be reported with such confidence, even though this is below the 95% LOD of three copies per test. Operating at very low FPR is always desirable from a performance guarantee perspective and allows one to report confidently below formal test LOD levels.

15.6 Increasing the Measured Volume

Many of the limitations described above arise due to low levels of target molecule counts. If we subsample a small volume of the whole, we will always be limited by the molecular stochasticity processes. One way to counteract that is to increase the subsampling volume so we do not operate so close to the fundamental limit of three copies. In other words, increasing the expected number of molecules to be detected will always help to improve test performance. However, if preamplification is used for this purpose, one has to watch for potential biases and nonuniformity and accept the likely loss of absolute quantification, a major benefit of dPCR.

16 Conclusions

Digital PCR technology and available commercial systems and assays have reached the point where they are becoming mainstream and preferred approaches when the most precise and sensitive detection of genomic targets is required.
With increased availability of such solutions, the field needs to understand the issues that are specific to counting statistics so better tests can be developed, validated, and put into practice for the benefit of science and patients alike. While we cannot beat the fundamental limits due to molecular stochasticity, dPCR technology allows us to operate at the best possible mode.

Acknowledgments

Many thanks to my Bio-Rad colleagues George Karlin-Neumann, Dianna Maar, Xitong Li, Lucas Frenz, and Francisco Bizouarn for multiple suggestions on content and clarity of this chapter.

References

1. Sykes PJ, Neoh SH, Brisco MJ et al (1992) Quantitation of targets for PCR by use of limiting dilution. BioTechniques 13(3):444–449
2. Vogelstein B, Kinzler KW (1999) Digital PCR. Proc Natl Acad Sci U S A 96(16):9236–9241
3. Baker M (2012) Digital PCR hits its stride. Nat Meth 9:541–544
4. Bhat S, Herrmann J, Armishaw P et al (2009) Single molecule detection in nanofluidic digital array enables accurate measurement of DNA copy number. Anal Bioanal Chem 394(2):457–467
5. Hindson BJ, Ness KD, Masquelier DA et al (2011) High-throughput droplet digital PCR system for absolute quantitation of DNA copy number. Anal Chem 83(22):8604–8610. https://doi.org/10.1021/ac202028g
6. Taly V, Pekin D, Benhaim L, Kotsopoulos SK et al (2013) Multiplex picodroplet digital PCR to detect KRAS mutations in circulating DNA from the plasma of colorectal cancer patients. Clin Chem 59:1722–1731
7. Madic J, Zocevic A, Senlis V et al (2016) Three-color crystal digital PCR. Biomolecular Detection and Quantification 10:34–46. https://doi.org/10.1016/j.bdq.2016.10.002
8. Dube S, Qin J, Ramakrishnan R (2008) Mathematical analysis of copy number variation in a DNA sample using digital PCR on a nanofluidic device. PLoS One 3(8):e2876
9. Whale AS, Huggett JF, Tzonev S (2016) Fundamentals of multiplexing with digital PCR. Biomolecular Detection and Quantification. https://doi.org/10.1016/j.bdq.2016.05.002
10. CLSI (2012) Evaluation of detection capability for clinical laboratory measurement procedures; approved guideline - second edition. CLSI document EP17-A2. Clinical and Laboratory Standards Institute, Wayne, PA