A friendly course on NUMERICAL METHODS

Maria-Magdalena Boureanu and Laurenţiu Temereancă


Contents

1 Introduction
2 Solving linear systems - direct methods
   2.1 Gaussian elimination method (Gauss pivoting method)
      2.1.1 Gauss method with partial pivoting at every step
      2.1.2 Gauss method with total pivoting at every step
   2.2 L − R Decomposition Method
      2.2.1 The L − R Doolittle factorization method
      2.2.2 The L − R Croût factorization method
      2.2.3 The L − R factorization method for tridiagonal matrices
   2.3 Chio pivotal condensation method
3 Solving linear systems and nonlinear equations
   3.1 Jacobi’s method for solving linear systems
   3.2 Seidel-Gauss method for solving linear system
   3.3 Solving nonlinear equations by the successive approximations method
4 Eigenvalues and eigenvectors
   4.1 Krylov’s method to determine the characteristic polynomial
5 Polynomial interpolation
   5.1 Lagrange form for the interpolation polynomials

Chapter 1

Introduction

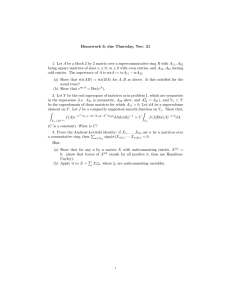

The purpose of this course is to introduce students to the concept of “Numerical Methods” and to some basic techniques. We start by answering a set of questions that naturally arise in one’s mind.

• Q1: What do we understand by “Numerical Methods”?

They usually represent the methods that are used when we need to obtain the solution of a numerical problem by means of a computer.

More exactly, this implies having a complete, clearly stated set of procedures for obtaining the solution of a mathematical problem, together with estimates of its error.

• Q2: Why do error estimates appear?

Sometimes we do not obtain the exact solution of a problem. Instead, for reasons that we are going to discuss later, we prefer to construct an approximation of it.

Some errors simply appear in the computations when we deal with numbers whose decimal expansion is infinite and periodic. For example, if in a problem we arrive at the value 1/3, this means 0.(3) = 0.333..., but a computer cannot store an infinitely long sequence of decimals. This is another reason for errors to appear and, in this case, also to accumulate.

• Q3: Why do we use the “Numerical Methods”?

While the analytical methods studied in school help us understand the mechanism of a problem, the role of numerical methods is to solve very heavy problems, which would take too much time and energy to solve without the help of software. For example, in high school we all solved linear systems of 3 or 4 equations. But how much time would it take us to solve a linear system of hundreds of equations by hand?

This is a question better left unanswered.

• Q4: Where do we apply these “Numerical Methods”?

You should always keep in mind that mathematics appeared due to real-life necessities. We do not solve a linear system of hundreds of equations because it is fun, but because it is necessary in some specific situations, for instance in space technology.

Generally speaking, numerical methods are quite useful in engineering, natural sciences, social sciences, entrepreneurship, medicine, etc.

We will try to answer, during classes, the other questions that will probably occur to students.
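The round-off phenomenon described in Q2 can be observed directly in any language that uses binary floating-point numbers. The following Python sketch is our own illustration and not part of the course material. It shows that the number stored for 1/3 is not exactly 1/3, and that small errors accumulate when an operation is repeated.

```python
from decimal import Decimal
from fractions import Fraction

# 1/3 = 0.(3) has infinitely many digits; a double-precision float keeps only finitely many.
x = 1 / 3

# Decimal(x) shows the exact value that is actually stored in x.
print(Decimal(x))

# The exact difference between the true 1/3 and the stored value: tiny, but not zero.
error = Fraction(1, 3) - Fraction(x)
print(float(error))

# Errors also accumulate: 0.1 has a periodic expansion in base 2,
# so adding the stored value of 0.1 ten times does not give exactly 1.
total = 0.0
for _ in range(10):
    total += 0.1
print(total, total == 1.0)
```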

Chapter 2

Solving linear systems - direct methods

Definition 1. By a linear system of m linear equations and n unknown variables, with real coefficients, we understand the following set of equalities

$$
\begin{cases}
a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = b_1\\
a_{21}x_1 + a_{22}x_2 + \dots + a_{2n}x_n = b_2\\
\qquad\vdots\\
a_{m1}x_1 + a_{m2}x_2 + \dots + a_{mn}x_n = b_m,
\end{cases}
\tag{2.1}
$$

where $a_{ij}, b_i \in \mathbb{R}$, for all $i \in \{1, 2, \dots, m\}$ and all $j \in \{1, 2, \dots, n\}$.

In addition, if $b_1 = b_2 = \dots = b_m = 0$, then the above system is called a homogeneous system of linear equations (or a homogeneous linear system).

Remark 1. An equation is called linear because the polynomial function involved in it is of

first degree.

Definition 2. By a solution of system (2.1) we understand a vector

$$
\tilde{x} = \begin{pmatrix} \tilde{x}_1\\ \tilde{x}_2\\ \vdots\\ \tilde{x}_n \end{pmatrix} \in \mathbb{R}^n
$$

such that, when we replace each $x_i$ by $\tilde{x}_i$, for all $i \in \{1, 2, \dots, n\}$, all the equalities in the system hold simultaneously.

Depending on the existence of at least one solution for system (2.1), we distinguish between incompatible systems, that is, systems with no solution, and compatible systems, that is, systems which admit at least one solution. If the solution of a system is unique, the system is called determined compatible. If the system admits infinitely many solutions, it is called undetermined compatible.


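To every system (2.1) we can associate the following matrix:

$$
A = \begin{pmatrix}
a_{11} & a_{12} & \dots & a_{1n}\\
a_{21} & a_{22} & \dots & a_{2n}\\
\vdots & \vdots & \ddots & \vdots\\
a_{m1} & a_{m2} & \dots & a_{mn}
\end{pmatrix}.
$$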

The free terms on the right-hand side of the linear equations can be kept in a vector

$$
b = \begin{pmatrix} b_1\\ b_2\\ \vdots\\ b_m \end{pmatrix}.
$$

The above system (2.1) can be written in the compact form

$$
\sum_{j=1}^{n} a_{ij} x_j = b_i, \quad i = 1, 2, \dots, m. \tag{2.2}
$$

Moreover, the matrix form of the system (2.1) is the following

$$
Ax = b, \tag{2.3}
$$

where

$$
x = \begin{pmatrix} x_1\\ x_2\\ \vdots\\ x_n \end{pmatrix}.
$$

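Notice that we denote by

$$
\bar{A} = \left(\begin{array}{cccc|c}
a_{11} & a_{12} & \dots & a_{1n} & b_1\\
a_{21} & a_{22} & \dots & a_{2n} & b_2\\
\vdots & \vdots & \ddots & \vdots & \vdots\\
a_{m1} & a_{m2} & \dots & a_{mn} & b_m
\end{array}\right)
$$

the extended matrix associated to system (2.1).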

There are two types of numerical methods that we can use to solve a system of linear equations:

1. direct methods

• they are usually used for systems with fewer than 100 unknown variables and equations;

• these allow us to arrive at the solution of the system after a finite number of steps.

2. iterative methods

• they are usually used for systems with more than 100 unknown variables and equations;

• with these methods we obtain an approximation of the solution.

In this chapter we only focus on the direct methods for solving linear systems and on a direct method for calculating the determinant of a matrix, since determinants often appear when we solve linear systems. The iterative methods are discussed in the next chapter of this book.

2.1

Gaussian elimination method (Gauss pivoting method)

Gauss’s method is a general method introduced by Gauss in 1823. This method is based on the successive elimination of the unknowns of the system, performed through elementary transformations of the system’s matrix: interchanging two rows (that is, horizontal lines) or two columns (that is, vertical lines), multiplying a row by a nonzero scalar, adding to a row another row multiplied by a scalar, etc. (see [7, Ch.2]).

Let us start by discussing the standard way of solving a linear system of two equations with two unknown variables. We will go through a simple example

$$
\begin{cases}
x_1 - 3x_2 = -8\\
3x_1 + 2x_2 = 9.
\end{cases}
$$

We multiply the first line by $-3$ and we get

$$
\begin{cases}
-3x_1 + 9x_2 = 24\\
3x_1 + 2x_2 = 9.
\end{cases}
$$

We add the two lines of the system and we deduce that $11x_2 = 33$, hence $x_2 = 3$, and then, from the first equation, $x_1 = -8 + 3x_2 = 1$.

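Obviously, this is a trivial exercise, but it will help us better understand how the Gaussian elimination method works. To this end, let us write the above system in the matrix form

$$
Ax = b, \quad \text{where } A = \begin{pmatrix} 1 & -3\\ 3 & 2 \end{pmatrix} \text{ and } b = \begin{pmatrix} -8\\ 9 \end{pmatrix}.
$$

Our action consists in adding to the second line the first line multiplied by $-3$. This can be written as follows:

$$
\bar{A} = \left(\begin{array}{cc|c} 1 & -3 & -8\\ 3 & 2 & 9 \end{array}\right)
\overset{L_2 - 3L_1}{\sim}
\left(\begin{array}{cc|c} 1 & -3 & -8\\ 0 & 11 & 33 \end{array}\right),
\tag{2.4}
$$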

where the vertical bar in the extended matrix only appears because it helps us separate the column of free terms more easily, and $\sim$ denotes the fact that the two extended matrices correspond to equivalent systems. We recall that by equivalent systems we understand systems that have the same solution. Thus, even though we have worked with the lines of the system, the solution did not change. This occurs naturally, as we have seen from the detailed solving of the system. Notice that in (2.4) the second extended matrix has kept the first row as it was, while the second row was modified to correspond to the equation $11x_2 = 33$.

Passing to a more complicated example, let us solve the system

$$
\begin{cases}
x_1 + x_2 - 2x_3 = -8\\
-4x_1 + x_2 - x_3 = 1\\
3x_1 - 3x_2 + 2x_3 = 2.
\end{cases}
$$

We intend to eliminate $x_1$ from the second and the third lines of the system and to preserve the first line exactly as it is. How do we proceed? To the second line we add the first line multiplied by 4, while to the third line we add the first line multiplied by $(-3)$. We arrive at

$$
\begin{cases}
x_1 + x_2 - 2x_3 = -8\\
5x_2 - 9x_3 = -31\\
-6x_2 + 8x_3 = 26.
\end{cases}
$$

What do we observe? The second and the third lines form a system of two equations with two unknowns. Next, we eliminate $x_2$ from the last equation. More precisely, we preserve the second line as it is, and to the third line we add the second line multiplied by $\frac{6}{5}$ (up to a factor of 5, this is the same as multiplying the second line by 6, the third line by 5, and adding them). We obtain

$$
\begin{cases}
x_1 + x_2 - 2x_3 = -8\\
5x_2 - 9x_3 = -31\\
-\frac{14}{5}x_3 = -\frac{56}{5},
\end{cases}
\tag{2.5}
$$

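consequently $x_3 = 4$. We substitute the value of $x_3$ in the second equation and we deduce that $x_2 = 1$. Finally, we substitute the values of $x_2$ and $x_3$ in the first equation and we deduce that $x_1 = -1$. The above method is actually the Gaussian elimination method, except that we will work with the corresponding matrix form instead:

$$
\bar{A} = \left(\begin{array}{ccc|c} 1 & 1 & -2 & -8\\ -4 & 1 & -1 & 1\\ 3 & -3 & 2 & 2 \end{array}\right)
\sim
\left(\begin{array}{ccc|c} 1 & 1 & -2 & -8\\ 0 & 5 & -9 & -31\\ 0 & -6 & 8 & 26 \end{array}\right)
\sim
\left(\begin{array}{ccc|c} 1 & 1 & -2 & -8\\ 0 & 5 & -9 & -31\\ 0 & 0 & -\frac{14}{5} & -\frac{56}{5} \end{array}\right).
$$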

We write the system corresponding to the last matrix, which is exactly system (2.5), and then we solve it by the back substitution method, as we already saw. A question remains, though: how can we arrive at system (2.5) by only using the matrix form? Can we formulate a rule that allows us to jump from matrix $\bar{A}$ to an equivalent matrix and then to another equivalent matrix?

Indeed, Gauss gave us the so-called “rectangle rule”, which allows us to arrive at a matrix associated to an equivalent system without writing out the previous calculations (although, in some sense, we are performing exactly the same calculations, only in a different way). Hence let us try to write the general rules of this method by observing the transformations in the above example.


First of all, it is clear that the goal is to obtain an upper-triangular matrix, so that we can solve the corresponding system by back substitution. Here we should clarify the meaning of some notions.

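• What is an upper-triangular matrix?

Definition 3. An upper-triangular matrix is a matrix that has only zeros under the main diagonal, that is, a matrix of the following form

$$
C = \begin{pmatrix}
c_{11} & c_{12} & c_{13} & \dots & c_{1,n-1} & c_{1n}\\
0 & c_{22} & c_{23} & \dots & c_{2,n-1} & c_{2n}\\
0 & 0 & c_{33} & \dots & c_{3,n-1} & c_{3n}\\
\vdots & \vdots & \vdots & \ddots & \vdots & \vdots\\
0 & 0 & 0 & \dots & 0 & c_{nn}
\end{pmatrix}.
$$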

Note that this notion only applies to square matrices, that is, matrices in which the number of rows coincides with the number of columns. This is fine with us because in applications we are mostly interested in the situation when a system is determined compatible (that is, the system admits a unique solution). So we will focus on establishing whether this is the case or not and, as a consequence, we will treat systems in which the number of unknown variables is the same as the number of equations. Correspondingly, the number of columns is the same as the number of rows, and the matrix associated to the system is a square matrix.

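Remark 2. Similarly, we can define a lower-triangular matrix. More specifically, a lower-triangular matrix is a square matrix that has only zeros above the main diagonal, that is, a matrix of the form

$$
C = \begin{pmatrix}
c_{11} & 0 & 0 & \dots & 0 & 0\\
c_{21} & c_{22} & 0 & \dots & 0 & 0\\
c_{31} & c_{32} & c_{33} & \dots & 0 & 0\\
\vdots & \vdots & \vdots & \ddots & \vdots & \vdots\\
c_{n-1,1} & c_{n-1,2} & c_{n-1,3} & \dots & c_{n-1,n-1} & 0\\
c_{n1} & c_{n2} & c_{n3} & \dots & c_{n,n-1} & c_{nn}
\end{pmatrix}.
$$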

Returning to our discussion, we identify another question that might “pop” into one’s head:

• What do we mean by solving a system by back substitution?

Well, it means that we first find the value of $x_n$ from the last equation, then we go back and substitute the value of $x_n$ in the previous equation, which gives us the value of $x_{n-1}$. Then we go back again and substitute the values of $x_n$ and $x_{n-1}$ in the previous equation, which gives us the value of $x_{n-2}$, and so on, until we reach the first equation of the system and find the value of $x_1$. At this point we have obtained the solution of the system

$$
S = \begin{pmatrix} x_1\\ x_2\\ \vdots\\ x_{n-1}\\ x_n \end{pmatrix},
$$

but be careful about the order in which the values of the unknown variables are written in $S$ (it is not the order in which we found them!). Obviously, the back substitution method works “hand in hand” with an upper-triangular matrix associated to the system.
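Back substitution translates almost literally into code. Below is a minimal Python sketch, assuming the upper-triangular matrix is given as a list of rows with nonzero diagonal entries; the function name `back_substitution` is our own choice. Exact fractions (`fractions.Fraction`) are used so that the results match hand computations.

```python
from fractions import Fraction

def back_substitution(U, c):
    """Solve U x = c, where U is an n x n upper-triangular matrix with nonzero diagonal."""
    n = len(U)
    x = [Fraction(0)] * n
    # Go from the last equation up to the first: x_n, x_{n-1}, ..., x_1.
    for i in range(n - 1, -1, -1):
        # Contribution of the unknowns already found (x_{i+1}, ..., x_n).
        s = sum(U[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (Fraction(c[i]) - s) / U[i][i]
    # The list is filled from the end, but it is stored in the natural order x_1, ..., x_n.
    return x

# System (2.5): x1 + x2 - 2x3 = -8, 5x2 - 9x3 = -31, -(14/5)x3 = -56/5.
U = [[1, 1, -2],
     [0, 5, -9],
     [0, 0, Fraction(-14, 5)]]
c = [-8, -31, Fraction(-56, 5)]
print(back_substitution(U, c))
```

For system (2.5) this gives $x_1 = -1$, $x_2 = 1$, $x_3 = 4$, as obtained by hand.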

Remark 3. Similarly, when solving a system by direct substitution, we first find the value of

x1 from the first equation, then we substitute the value of x1 in the equation below and we obtain

x2 . Then we substitute the values of x1 and x2 in the equation below and we obtain the value of

x3 . We continue this procedure until we obtain the value of xn . This direct substitution method

is appropriate for the situation when the matrix associated to our system is a lower-triangular

matrix.
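Direct (forward) substitution is the mirror image of back substitution. Here is a minimal Python sketch, assuming a lower-triangular matrix with nonzero diagonal entries. The function name and the small test system are hypothetical illustrations.

```python
from fractions import Fraction

def forward_substitution(L, c):
    """Solve L x = c, where L is an n x n lower-triangular matrix with nonzero diagonal."""
    n = len(L)
    x = [Fraction(0)] * n
    # Go from the first equation down to the last: x_1, x_2, ..., x_n.
    for i in range(n):
        # Contribution of the unknowns already found (x_1, ..., x_{i-1}).
        s = sum(L[i][j] * x[j] for j in range(i))
        x[i] = (Fraction(c[i]) - s) / L[i][i]
    return x

# Illustrative system: 2x1 = 4, x1 + 3x2 = 5, -x1 + x2 + x3 = 0.
L = [[2, 0, 0],
     [1, 3, 0],
     [-1, 1, 1]]
c = [4, 5, 0]
print(forward_substitution(L, c))
```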

Now that we have clarified these notions, let us see how we can attain our goal, that is, arrive at an upper-triangular matrix. We notice that we always perform the same actions:

1. We keep unchanged the row we are at in that moment (at the first step, the first row) and, implicitly, the rows above it, if they exist.

2. Below the pivot, we change all the entries in its column into zeros.

3. We apply the “rectangle rule” to find the rest of the elements of the extended matrix.

These are the three instructions that we must apply at every step of the algorithm until we obtain an upper-triangular matrix. The immediate questions are the following: what is a pivot, and what is the “rectangle rule”?

A pivot is an element of the matrix $A$ which is situated on the main diagonal (hence it is of the form $a_{kk}$), and it must always be nonzero. If $a_{kk} = 0$, we interchange row $k$ with a row situated below it whose entry in column $k$ is nonzero. Why below? Because we have already applied the three instructions to the rows above, and their elements are already the ones we need. Can we interchange two rows of matrix $A$ without any consequences? Yes, of course, because this is equivalent to interchanging the order of two equations in our system, and this has no effect on the solution. Be careful though: when interchanging the rows of matrix $A$ we necessarily have to interchange the corresponding elements of the column of free terms (actually, we are interchanging two rows of the extended matrix $\bar{A}$).

Remark 4. The method that we are explaining now represents the “basic Gauss” method. Later we are going to study the “partial pivoting Gauss method” and the “total pivoting Gauss method”, and these more advanced methods help us avoid the situation in which a pivot is zero.


Finally, let us see what this “rectangle rule” is. At every step of the algorithm we (usually) replace the elements of the extended matrix $\bar{A}$ with new ones.

In fact, we have seen that the elements on the row $k$ at which we are at some point (we start our discussion with $a_{11}$ on row 1, then we move to $a_{22}$, which is on row 2, etc.) remain as they were at the previous step. In addition, under $a_{kk}$ we put zeros everywhere on the column. Without returning to and modifying any of the elements that we already calculated (these are the elements above row $k$ and to the left of column $k$), we apply the “rectangle rule” to all the other elements $a_{ij}$. To apply the “rectangle rule” we imagine a rectangle whose first diagonal starts at $a_{kk}$ and ends at $a_{ij}$. We can identify the other corners of this rectangle as being $a_{ik}$ and $a_{kj}$, and we can visualize the other diagonal. The rule is the following:

$$
a_{ij} \longmapsto \frac{a_{kk}\,a_{ij} - a_{ik}\,a_{kj}}{a_{kk}},
$$

and now it is clear why the pivot can never be 0.

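We try to present an image of this rule in the matrix below:

$$
\left(\begin{array}{cccccccc|c}
a_{11} & a_{12} & \dots & a_{1k} & \dots & a_{1j} & \dots & a_{1n} & b_1\\
\vdots & \vdots & & \vdots & & \vdots & & \vdots & \vdots\\
a_{k1} & a_{k2} & \dots & a_{kk} & \longleftrightarrow & a_{kj} & \dots & a_{kn} & b_k\\
\vdots & \vdots & & \updownarrow & & \updownarrow & & \vdots & \vdots\\
a_{i1} & a_{i2} & \dots & a_{ik} & \longleftrightarrow & a_{ij} & \dots & a_{in} & b_i\\
\vdots & \vdots & & \vdots & & \vdots & & \vdots & \vdots\\
a_{n1} & a_{n2} & \dots & a_{nk} & \dots & a_{nj} & \dots & a_{nn} & b_n
\end{array}\right)
$$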
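The rectangle rule can be checked numerically. The sketch below is in Python and uses a hypothetical helper name, `rectangle_rule`. It computes the new value of $a_{ij}$ from the four corners of the rectangle. Since $\frac{a_{kk}a_{ij} - a_{ik}a_{kj}}{a_{kk}} = a_{ij} - \frac{a_{ik}}{a_{kk}}a_{kj}$, it performs exactly the row operation “row $i$ minus $\frac{a_{ik}}{a_{kk}}$ times row $k$” used in the worked examples above.

```python
from fractions import Fraction

def rectangle_rule(a_kk, a_ij, a_ik, a_kj):
    """New value of a_ij, given the pivot a_kk and the other two corners a_ik and a_kj."""
    if a_kk == 0:
        raise ZeroDivisionError("the pivot a_kk must be nonzero")
    return Fraction(a_kk * a_ij - a_ik * a_kj, a_kk)

# Matrix (2.4), pivot a_11 = 1: the entries of row 2 after the step.
print(rectangle_rule(1, 2, 3, -3))   # new a_22: 11
print(rectangle_rule(1, 9, 3, -8))   # new free term of row 2: 33

# The same value written as "a_ij - (a_ik / a_kk) * a_kj".
a_kk, a_ij, a_ik, a_kj = 1, 2, 3, -3
print(a_ij - Fraction(a_ik, a_kk) * a_kj)   # 11
```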

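We consider the linear system

$$
Ax = b, \tag{2.6}
$$

where $A = (a_{ij})_{1\le i,j\le n} \in \mathcal{M}_{n\times n}(\mathbb{R})$ is a matrix with $n$ rows and $n$ columns, $b = (b_i)_{1\le i\le n} \in \mathcal{M}_{n\times 1}(\mathbb{R})$ is a column vector and $x = (x_i)_{1\le i\le n} \in \mathbb{R}^n$ is the unknown vector.

First, we consider the extended matrix

$$
\bar{A} = (a_{ij})_{\substack{1\le i\le n\\ 1\le j\le n+1}},
$$

where we denote $a_{i,n+1} = b_i$, $1 \le i \le n$.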

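The Gaussian elimination method consists of processing the extended matrix $\bar{A}$ by elementary transformations such that, in $n - 1$ steps, it becomes upper-triangular:

$$
\left(\begin{array}{ccccc|c}
a_{11}^{(n)} & a_{12}^{(n)} & \dots & a_{1,n-1}^{(n)} & a_{1,n}^{(n)} & a_{1,n+1}^{(n)}\\
0 & a_{22}^{(n)} & \dots & a_{2,n-1}^{(n)} & a_{2,n}^{(n)} & a_{2,n+1}^{(n)}\\
\vdots & \vdots & \ddots & \vdots & \vdots & \vdots\\
0 & 0 & \dots & a_{n-1,n-1}^{(n)} & a_{n-1,n}^{(n)} & a_{n-1,n+1}^{(n)}\\
0 & 0 & \dots & 0 & a_{n,n}^{(n)} & a_{n,n+1}^{(n)}
\end{array}\right)
\overset{\text{not.}}{=} \bar{A}^{(n)}, \quad \text{where } \bar{A}^{(1)} = \bar{A}.
\tag{2.7}
$$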


Remark 5. The extended matrix A(n) has n rows and n + 1 columns.

In what follows, $a_{ij}^{(k)}$, $1 \le i, k \le n$, $1 \le j \le n + 1$, represents the element of the extended matrix $\bar{A}^{(k)}$ at step $k$, located on row $i$ and column $j$.

We apply the following algorithm to obtain the matrix (2.7), assuming that $a_{kk}^{(k)} \neq 0$, $1 \le k \le n - 1$, where the element $a_{kk}^{(k)}$ is called the pivot:

• we copy the first $k$ rows;

• on column $k$, under the pivot, the elements will be zero;

• the remaining elements, below row $k$ and to the right of column $k$, will be calculated with the “rectangle rule”:

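$$
\begin{array}{r|ccc}
 & \text{column } k & & \text{column } j\\ \hline
\text{row } k & a_{kk}^{(k)} & \cdots\cdots & a_{kj}^{(k)}\\
 & \vdots & & \vdots\\
\text{row } i & a_{ik}^{(k)} & \cdots\cdots & a_{ij}^{(k)}
\end{array}
\qquad\Longrightarrow\qquad
a_{ij}^{(k+1)} = \frac{a_{kk}^{(k)}\, a_{ij}^{(k)} - a_{ik}^{(k)}\, a_{kj}^{(k)}}{a_{kk}^{(k)}}.
\tag{2.8}
$$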

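Therefore, for $1 \le k \le n - 1$, we obtain the following formulae:

$$
a_{ij}^{(k+1)} =
\begin{cases}
a_{ij}^{(k)}, & 1 \le i \le k,\ i \le j \le n+1,\\[4pt]
0, & 1 \le j \le k,\ j+1 \le i \le n,\\[4pt]
a_{ij}^{(k)} - \dfrac{a_{ik}^{(k)}}{a_{kk}^{(k)}} \cdot a_{kj}^{(k)}, & k+1 \le i \le n,\ k+1 \le j \le n+1.
\end{cases}
\tag{2.9}
$$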
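Formulae (2.9) describe one full step of the method. A possible Python transcription is sketched below. It assumes that the extended matrix is stored as a list of rows and uses 0-based indices, so the pivot of step k is `M[k][k]`. The name `elimination_step` is hypothetical. Applied twice to the 3 × 3 system solved at the beginning of this section, it should reproduce the last matrix obtained there by hand.

```python
from fractions import Fraction

def elimination_step(M, k):
    """Return the extended matrix of step k+1 from that of step k, following (2.9).

    M is a list of n rows with n + 1 entries each; k is the 0-based pivot index.
    """
    n = len(M)
    pivot = M[k][k]
    if pivot == 0:
        raise ValueError("zero pivot: interchange rows first (Remark 6)")
    new = [[Fraction(v) for v in row] for row in M]   # rows 0..k are copied unchanged
    for i in range(k + 1, n):
        new[i][k] = Fraction(0)                       # zeros under the pivot
        factor = Fraction(M[i][k]) / pivot            # a_ik / a_kk
        for j in range(k + 1, n + 1):                 # right of column k, free terms included
            new[i][j] = Fraction(M[i][j]) - factor * M[k][j]
    return new

# The 3 x 3 system solved at the beginning of this section.
A1 = [[1, 1, -2, -8],
      [-4, 1, -1, 1],
      [3, -3, 2, 2]]
A2 = elimination_step(A1, 0)
A3 = elimination_step(A2, 1)
for row in A3:
    print([str(v) for v in row])
```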

At the last step, $k = n - 1$, we obtain the upper-triangular system

$$
\begin{cases}
a_{11}^{(n)} x_1 + a_{12}^{(n)} x_2 + \dots + a_{1n}^{(n)} x_n = a_{1,n+1}^{(n)}\\
a_{22}^{(n)} x_2 + \dots + a_{2n}^{(n)} x_n = a_{2,n+1}^{(n)}\\
\qquad\vdots\\
a_{ii}^{(n)} x_i + \dots + a_{in}^{(n)} x_n = a_{i,n+1}^{(n)}\\
\qquad\vdots\\
a_{nn}^{(n)} x_n = a_{n,n+1}^{(n)}.
\end{cases}
\tag{2.10}
$$

The above system (2.10) has the same solution as the initial one (2.6), but it has a triangular form. The solution components of system (2.10) are directly obtained by back substitution.

From the last equation of system (2.10) we have the following cases:

• If $a_{nn}^{(n)} \neq 0$, then

$$
x_n = \frac{a_{n,n+1}^{(n)}}{a_{nn}^{(n)}}. \tag{2.11}
$$

• If $a_{nn}^{(n)} = 0$, then system (2.6) does not have a unique solution (it is either incompatible or undetermined compatible).

Moreover, from back substitution in (2.10) we obtain, for every $i = n - 1, n - 2, \dots, 1$,

$$
x_i = \left(a_{i,n+1}^{(n)} - \sum_{j=i+1}^{n} a_{ij}^{(n)} \cdot x_j\right) \Big/ a_{ii}^{(n)}. \tag{2.12}
$$

Remark 6. In this algorithm we suppose that the pivot $a_{kk}^{(k)} \neq 0$, for every $1 \le k \le n$. If at a certain step we have $a_{kk}^{(k)} = 0$, we search on column $k$ of the matrix $\bar{A}^{(k)}$ for an element $a_{i_k k}^{(k)} \neq 0$, $k < i_k \le n$, we switch rows $i_k$ and $k$ in the matrix $\bar{A}^{(k)}$, and then we apply the above formulae (2.9).
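Putting (2.9), (2.11), (2.12) and Remark 6 together gives the complete basic method. The following Python sketch is one possible implementation under our own conventions: 0-based indices, exact `Fraction` arithmetic, and a hypothetical function name `gauss_solve`. When no unique solution exists, it raises an error instead of analysing that case further.

```python
from fractions import Fraction

def gauss_solve(A, b):
    """Basic Gauss method: elimination with formulae (2.9), then back substitution (2.11)-(2.12).

    A is an n x n matrix (list of rows) and b a list of n free terms.
    """
    n = len(A)
    # Extended matrix, with a_{i,n+1} = b_i, stored as exact fractions.
    M = [[Fraction(v) for v in A[i]] + [Fraction(b[i])] for i in range(n)]
    for k in range(n - 1):
        if M[k][k] == 0:
            # Remark 6: search below the pivot for a nonzero entry and switch the rows.
            r = next((i for i in range(k + 1, n) if M[i][k] != 0), None)
            if r is None:
                raise ValueError(f"no nonzero pivot in column {k + 1}: no unique solution")
            M[k], M[r] = M[r], M[k]
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            for j in range(k, n + 1):     # j = k makes the entry under the pivot zero
                M[i][j] -= factor * M[k][j]
    if M[n - 1][n - 1] == 0:
        raise ValueError("a_nn^(n) = 0: the system has no unique solution")
    # Back substitution: (2.11) for i = n and (2.12) for i = n-1, ..., 1.
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

# The 3 x 3 system solved at the beginning of this section.
print(gauss_solve([[1, 1, -2], [-4, 1, -1], [3, -3, 2]], [-8, 1, 2]))
```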

Example 1. Solve the following system using the Gaussian elimination method

$$
\begin{cases}
-x_1 + 3x_2 + x_3 = -2\\
2x_1 + 4x_2 - x_3 = -4\\
-5x_1 - 2x_2 + 3x_3 = 3.
\end{cases}
$$

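Proof. We have $n = 3$, and the corresponding matrix and the vector of free terms of the above system are

$$
A = \begin{pmatrix} -1 & 3 & 1\\ 2 & 4 & -1\\ -5 & -2 & 3 \end{pmatrix}, \quad b = \begin{pmatrix} -2\\ -4\\ 3 \end{pmatrix}.
$$

The extended matrix corresponding to the system is

$$
\bar{A}^{(1)} = \bar{A} = \left(\begin{array}{ccc|c} -1 & 3 & 1 & -2\\ 2 & 4 & -1 & -4\\ -5 & -2 & 3 & 3 \end{array}\right).
$$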

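Step 1. To obtain the matrix $\bar{A}^{(2)}$ we choose the pivot $a_{11}^{(1)} = -1 \neq 0$. We keep row 1 from $\bar{A}^{(1)}$. In the first column, under the pivot $a_{11}^{(1)} = -1$, the elements will be zero, and the other elements are calculated using the “rectangle rule” (2.8):

$$
\begin{aligned}
a_{22}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{22}^{(1)} - a_{21}^{(1)} \cdot a_{12}^{(1)}}{a_{11}^{(1)}} = \frac{-1 \cdot 4 - 2 \cdot 3}{-1} = 10,\\
a_{23}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{23}^{(1)} - a_{21}^{(1)} \cdot a_{13}^{(1)}}{a_{11}^{(1)}} = \frac{-1 \cdot (-1) - 2 \cdot 1}{-1} = 1,\\
a_{24}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{24}^{(1)} - a_{21}^{(1)} \cdot a_{14}^{(1)}}{a_{11}^{(1)}} = \frac{-1 \cdot (-4) - 2 \cdot (-2)}{-1} = -8,\\
a_{32}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{32}^{(1)} - a_{31}^{(1)} \cdot a_{12}^{(1)}}{a_{11}^{(1)}} = \frac{-1 \cdot (-2) - (-5) \cdot 3}{-1} = -17,\\
a_{33}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{33}^{(1)} - a_{31}^{(1)} \cdot a_{13}^{(1)}}{a_{11}^{(1)}} = \frac{-1 \cdot 3 - (-5) \cdot 1}{-1} = -2,\\
a_{34}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{34}^{(1)} - a_{31}^{(1)} \cdot a_{14}^{(1)}}{a_{11}^{(1)}} = \frac{-1 \cdot 3 - (-5) \cdot (-2)}{-1} = 13.
\end{aligned}
$$

We obtain the matrix

$$
\bar{A}^{(2)} = \left(\begin{array}{ccc|c} -1 & 3 & 1 & -2\\ 0 & 10 & 1 & -8\\ 0 & -17 & -2 & 13 \end{array}\right).
$$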

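Step 2. We choose the pivot $a_{22}^{(2)} = 10 \neq 0$ and we keep rows 1 and 2 from $\bar{A}^{(2)}$. In the second column, under the pivot $a_{22}^{(2)} = 10$, the elements will be zero, and the other elements are calculated using the “rectangle rule”:

$$
\begin{aligned}
a_{33}^{(3)} &= \frac{a_{22}^{(2)} \cdot a_{33}^{(2)} - a_{32}^{(2)} \cdot a_{23}^{(2)}}{a_{22}^{(2)}} = \frac{10 \cdot (-2) - (-17) \cdot 1}{10} = -\frac{3}{10},\\
a_{34}^{(3)} &= \frac{a_{22}^{(2)} \cdot a_{34}^{(2)} - a_{32}^{(2)} \cdot a_{24}^{(2)}}{a_{22}^{(2)}} = \frac{10 \cdot 13 - (-17) \cdot (-8)}{10} = -\frac{6}{10}.
\end{aligned}
$$

We get the matrix

$$
\bar{A}^{(3)} = \left(\begin{array}{ccc|c} -1 & 3 & 1 & -2\\ 0 & 10 & 1 & -8\\ 0 & 0 & -\frac{3}{10} & -\frac{6}{10} \end{array}\right).
$$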

The system corresponding to the matrix $\bar{A}^{(3)}$ is

$$
\begin{cases}
-x_1 + 3x_2 + x_3 = -2\\
10x_2 + x_3 = -8\\
-\frac{3}{10}x_3 = -\frac{6}{10}.
\end{cases}
$$

The above system has the same solution as the initial one, but it has a triangular form. The solution components are directly obtained by back substitution:

$$
\begin{aligned}
x_3 &= -\tfrac{6}{10} \Big/ \left(-\tfrac{3}{10}\right) = 2,\\
x_2 &= (-8 - x_3)/10 = (-8 - 2)/10 = -1,\\
x_1 &= (-2 - x_3 - 3x_2)/(-1) = (-2 - 2 - 3 \cdot (-1))/(-1) = 1,
\end{aligned}
$$

and therefore the solution is

$$
\begin{pmatrix} x_1\\ x_2\\ x_3 \end{pmatrix} = \begin{pmatrix} 1\\ -1\\ 2 \end{pmatrix}.
$$
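A solution obtained by hand can always be checked by substituting it back into the system. Here is a small Python sketch of this check applied to Example 1. The helper name `residual` is our own.

```python
from fractions import Fraction

def residual(A, b, x):
    """Return the vector b - A x, computed exactly; it is zero exactly when x solves A x = b."""
    return [Fraction(b[i]) - sum(Fraction(A[i][j]) * x[j] for j in range(len(x)))
            for i in range(len(A))]

A = [[-1, 3, 1],
     [2, 4, -1],
     [-5, -2, 3]]
b = [-2, -4, 3]
x = [1, -1, 2]          # the solution found above by back substitution
print(residual(A, b, x))
```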

Example 2. Solve the following system by using the Gaussian elimination method

$$
\begin{cases}
x_1 + 3x_2 - 2x_3 - 4x_4 = -2\\
2x_1 + 6x_2 - 7x_3 - 10x_4 = -6\\
-x_1 - x_2 + 5x_3 + 9x_4 = 9\\
-3x_1 - 5x_2 + 15x_4 = 13.
\end{cases}
\tag{2.13}
$$


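Proof. We have $n = 4$, and the corresponding matrix and the vector of free terms of the above system are

$$
A = \begin{pmatrix} 1 & 3 & -2 & -4\\ 2 & 6 & -7 & -10\\ -1 & -1 & 5 & 9\\ -3 & -5 & 0 & 15 \end{pmatrix}, \quad b = \begin{pmatrix} -2\\ -6\\ 9\\ 13 \end{pmatrix}.
$$

The extended matrix corresponding to the system is

$$
\bar{A}^{(1)} = \bar{A} = \left(\begin{array}{cccc|c} 1 & 3 & -2 & -4 & -2\\ 2 & 6 & -7 & -10 & -6\\ -1 & -1 & 5 & 9 & 9\\ -3 & -5 & 0 & 15 & 13 \end{array}\right).
$$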

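Step 1. To obtain the matrix $\bar{A}^{(2)}$ we choose the pivot $a_{11}^{(1)} = 1 \neq 0$. We keep row 1 from $\bar{A}^{(1)}$. In the first column, under the pivot $a_{11}^{(1)} = 1$, the elements will be zero, and the other elements are calculated using the “rectangle rule” (2.8):

$$
\begin{aligned}
a_{22}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{22}^{(1)} - a_{21}^{(1)} \cdot a_{12}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 6 - 2 \cdot 3}{1} = 0,\\
a_{23}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{23}^{(1)} - a_{21}^{(1)} \cdot a_{13}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot (-7) - 2 \cdot (-2)}{1} = -3,\\
a_{24}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{24}^{(1)} - a_{21}^{(1)} \cdot a_{14}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot (-10) - 2 \cdot (-4)}{1} = -2,\\
a_{25}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{25}^{(1)} - a_{21}^{(1)} \cdot a_{15}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot (-6) - 2 \cdot (-2)}{1} = -2,\\
a_{32}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{32}^{(1)} - a_{31}^{(1)} \cdot a_{12}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot (-1) - (-1) \cdot 3}{1} = 2,\\
a_{33}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{33}^{(1)} - a_{31}^{(1)} \cdot a_{13}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 5 - (-1) \cdot (-2)}{1} = 3,\\
a_{34}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{34}^{(1)} - a_{31}^{(1)} \cdot a_{14}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 9 - (-1) \cdot (-4)}{1} = 5,\\
a_{35}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{35}^{(1)} - a_{31}^{(1)} \cdot a_{15}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 9 - (-1) \cdot (-2)}{1} = 7,\\
a_{42}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{42}^{(1)} - a_{41}^{(1)} \cdot a_{12}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot (-5) - (-3) \cdot 3}{1} = 4,\\
a_{43}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{43}^{(1)} - a_{41}^{(1)} \cdot a_{13}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 0 - (-3) \cdot (-2)}{1} = -6,\\
a_{44}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{44}^{(1)} - a_{41}^{(1)} \cdot a_{14}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 15 - (-3) \cdot (-4)}{1} = 3,\\
a_{45}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{45}^{(1)} - a_{41}^{(1)} \cdot a_{15}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 13 - (-3) \cdot (-2)}{1} = 7.
\end{aligned}
$$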

Therefore, we obtain the matrix

$$
\bar{A}^{(2)} = \left(\begin{array}{cccc|c} 1 & 3 & -2 & -4 & -2\\ 0 & 0 & -3 & -2 & -2\\ 0 & 2 & 3 & 5 & 7\\ 0 & 4 & -6 & 3 & 7 \end{array}\right).
$$

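Step 2. Since $a_{22}^{(2)} = 0$, we cannot apply the rectangle rule, so we interchange rows 2 and 3 in the matrix $\bar{A}^{(2)}$ and we obtain

$$
\bar{A}^{(2)} \overset{L_2 \leftrightarrow L_3}{=} \left(\begin{array}{cccc|c} 1 & 3 & -2 & -4 & -2\\ 0 & 2 & 3 & 5 & 7\\ 0 & 0 & -3 & -2 & -2\\ 0 & 4 & -6 & 3 & 7 \end{array}\right).
$$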

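We choose the pivot $a_{22}^{(2)} = 2 \neq 0$ and we keep rows 1 and 2 from $\bar{A}^{(2)}$. In the second column, under the pivot $a_{22}^{(2)} = 2$, the elements will be zero, and the other elements are calculated using the “rectangle rule” (2.8):

$$
\begin{aligned}
a_{33}^{(3)} &= \frac{a_{22}^{(2)} \cdot a_{33}^{(2)} - a_{32}^{(2)} \cdot a_{23}^{(2)}}{a_{22}^{(2)}} = \frac{2 \cdot (-3) - 0 \cdot 3}{2} = -3,\\
a_{34}^{(3)} &= \frac{a_{22}^{(2)} \cdot a_{34}^{(2)} - a_{32}^{(2)} \cdot a_{24}^{(2)}}{a_{22}^{(2)}} = \frac{2 \cdot (-2) - 0 \cdot 5}{2} = -2,\\
a_{35}^{(3)} &= \frac{a_{22}^{(2)} \cdot a_{35}^{(2)} - a_{32}^{(2)} \cdot a_{25}^{(2)}}{a_{22}^{(2)}} = \frac{2 \cdot (-2) - 0 \cdot 7}{2} = -2,\\
a_{43}^{(3)} &= \frac{a_{22}^{(2)} \cdot a_{43}^{(2)} - a_{42}^{(2)} \cdot a_{23}^{(2)}}{a_{22}^{(2)}} = \frac{2 \cdot (-6) - 4 \cdot 3}{2} = -12,\\
a_{44}^{(3)} &= \frac{a_{22}^{(2)} \cdot a_{44}^{(2)} - a_{42}^{(2)} \cdot a_{24}^{(2)}}{a_{22}^{(2)}} = \frac{2 \cdot 3 - 4 \cdot 5}{2} = -7,\\
a_{45}^{(3)} &= \frac{a_{22}^{(2)} \cdot a_{45}^{(2)} - a_{42}^{(2)} \cdot a_{25}^{(2)}}{a_{22}^{(2)}} = \frac{2 \cdot 7 - 4 \cdot 7}{2} = -7.
\end{aligned}
$$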

We get the matrix

$$
\bar{A}^{(3)} = \left(\begin{array}{cccc|c} 1 & 3 & -2 & -4 & -2\\ 0 & 2 & 3 & 5 & 7\\ 0 & 0 & -3 & -2 & -2\\ 0 & 0 & -12 & -7 & -7 \end{array}\right).
$$

Step 3. We choose the pivot $a_{33}^{(3)} = -3 \neq 0$ and we keep rows 1, 2 and 3 from $\bar{A}^{(3)}$. In column 3, under the pivot $a_{33}^{(3)} = -3$, the elements will be zero, and the other elements are calculated using the “rectangle rule” (2.8):

$$
\begin{aligned}
a_{44}^{(4)} &= \frac{a_{33}^{(3)} \cdot a_{44}^{(3)} - a_{43}^{(3)} \cdot a_{34}^{(3)}}{a_{33}^{(3)}} = \frac{-3 \cdot (-7) - (-12) \cdot (-2)}{-3} = \frac{-3}{-3} = 1,\\
a_{45}^{(4)} &= \frac{a_{33}^{(3)} \cdot a_{45}^{(3)} - a_{43}^{(3)} \cdot a_{35}^{(3)}}{a_{33}^{(3)}} = \frac{-3 \cdot (-7) - (-12) \cdot (-2)}{-3} = \frac{-3}{-3} = 1.
\end{aligned}
$$

We obtain

$$
\bar{A}^{(4)} = \left(\begin{array}{cccc|c} 1 & 3 & -2 & -4 & -2\\ 0 & 2 & 3 & 5 & 7\\ 0 & 0 & -3 & -2 & -2\\ 0 & 0 & 0 & 1 & 1 \end{array}\right).
$$

The system corresponding to the matrix $\bar{A}^{(4)}$ is

$$
\begin{cases}
x_1 + 3x_2 - 2x_3 - 4x_4 = -2\\
2x_2 + 3x_3 + 5x_4 = 7\\
-3x_3 - 2x_4 = -2\\
x_4 = 1.
\end{cases}
$$

The above system has the same solution as the initial one, but it has a triangular form. The solution components are directly obtained by back substitution:

$$
\begin{aligned}
x_4 &= 1/1 = 1,\\
x_3 &= (-2 + 2x_4)/(-3) = (-2 + 2 \cdot 1)/(-3) = 0,\\
x_2 &= (7 - 5x_4 - 3x_3)/2 = (7 - 5 \cdot 1 - 3 \cdot 0)/2 = 1,\\
x_1 &= (-2 + 4x_4 + 2x_3 - 3x_2)/1 = (-2 + 4 \cdot 1 + 2 \cdot 0 - 3 \cdot 1)/1 = -1,
\end{aligned}
$$

and therefore the solution is

$$
\begin{pmatrix} x_1\\ x_2\\ x_3\\ x_4 \end{pmatrix} = \begin{pmatrix} -1\\ 1\\ 0\\ 1 \end{pmatrix}.
$$
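The steps of Example 2, including the interchange of rows 2 and 3, can be replayed mechanically. The Python sketch below applies formulae (2.9) three times. It uses a hypothetical helper `step`, 0-based indices and exact fractions. The fourth row of $\bar{A}^{(4)}$ it produces is $(0, 0, 0, 1 \mid 1)$, which again gives $x_4 = 1$.

```python
from fractions import Fraction

def step(M, k):
    """One step of formulae (2.9) with 0-based pivot index k; returns a new matrix."""
    new = [row[:] for row in M]               # rows 0..k stay as they are
    for i in range(k + 1, len(M)):
        factor = Fraction(M[i][k]) / M[k][k]  # a_ik / a_kk
        for j in range(k, len(M[0])):         # j = k gives the zero under the pivot
            new[i][j] = M[i][j] - factor * M[k][j]
    return new

A1 = [[1, 3, -2, -4, -2],
      [2, 6, -7, -10, -6],
      [-1, -1, 5, 9, 9],
      [-3, -5, 0, 15, 13]]
A2 = step(A1, 0)
A2[1], A2[2] = A2[2], A2[1]   # a_22^(2) = 0, so rows 2 and 3 are interchanged
A3 = step(A2, 1)
A4 = step(A3, 2)
for row in A4:
    print([str(v) for v in row])
```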

Example 3. Solve the following system by using the Gaussian elimination method

$$
\begin{cases}
x_1 - x_2 - 3x_3 = 8\\
3x_1 - x_2 + x_3 = 4\\
2x_1 + 3x_2 + 19x_3 = 10.
\end{cases}
$$

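Proof. The extended matrix corresponding to the system is

$$
\bar{A}^{(1)} = \bar{A} = \left(\begin{array}{ccc|c} 1 & -1 & -3 & 8\\ 3 & -1 & 1 & 4\\ 2 & 3 & 19 & 10 \end{array}\right).
$$

Step 1. We choose the pivot $a_{11}^{(1)} = 1 \neq 0$, and we keep row 1 from $\bar{A}^{(1)}$. In the first column, under the pivot $a_{11}^{(1)} = 1$, the elements will be zero, and the other elements are calculated using the “rectangle rule”:

$$
\begin{aligned}
a_{22}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{22}^{(1)} - a_{21}^{(1)} \cdot a_{12}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot (-1) - 3 \cdot (-1)}{1} = 2,\\
a_{23}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{23}^{(1)} - a_{21}^{(1)} \cdot a_{13}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 1 - 3 \cdot (-3)}{1} = 10,\\
a_{24}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{24}^{(1)} - a_{21}^{(1)} \cdot a_{14}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 4 - 3 \cdot 8}{1} = -20,\\
a_{32}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{32}^{(1)} - a_{31}^{(1)} \cdot a_{12}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 3 - 2 \cdot (-1)}{1} = 5,\\
a_{33}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{33}^{(1)} - a_{31}^{(1)} \cdot a_{13}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 19 - 2 \cdot (-3)}{1} = 25,\\
a_{34}^{(2)} &= \frac{a_{11}^{(1)} \cdot a_{34}^{(1)} - a_{31}^{(1)} \cdot a_{14}^{(1)}}{a_{11}^{(1)}} = \frac{1 \cdot 10 - 2 \cdot 8}{1} = -6.
\end{aligned}
$$

We obtain the matrix

$$
\bar{A}^{(2)} = \left(\begin{array}{ccc|c} 1 & -1 & -3 & 8\\ 0 & 2 & 10 & -20\\ 0 & 5 & 25 & -6 \end{array}\right).
$$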

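Step 2. We choose the pivot $a_{22}^{(2)} = 2 \neq 0$ and we keep rows 1 and 2 from $\bar{A}^{(2)}$. In the second column, under the pivot $a_{22}^{(2)} = 2$, the elements will be zero, and the other elements are calculated using the “rectangle rule”:

$$
\begin{aligned}
a_{33}^{(3)} &= \frac{a_{22}^{(2)} \cdot a_{33}^{(2)} - a_{32}^{(2)} \cdot a_{23}^{(2)}}{a_{22}^{(2)}} = \frac{2 \cdot 25 - 5 \cdot 10}{2} = 0,\\
a_{34}^{(3)} &= \frac{a_{22}^{(2)} \cdot a_{34}^{(2)} - a_{32}^{(2)} \cdot a_{24}^{(2)}}{a_{22}^{(2)}} = \frac{2 \cdot (-6) - 5 \cdot (-20)}{2} = 44.
\end{aligned}
$$

We obtain the matrix

$$
\bar{A}^{(3)} = \left(\begin{array}{ccc|c} 1 & -1 & -3 & 8\\ 0 & 2 & 10 & -20\\ 0 & 0 & 0 & 44 \end{array}\right).
$$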

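The system corresponding to the matrix $\bar{A}^{(3)}$ is

$$
\begin{cases}
x_1 - x_2 - 3x_3 = 8\\
2x_2 + 10x_3 = -20\\
0 \cdot x_3 = 44,
\end{cases}
$$

and therefore the system is incompatible (no value of $x_3$ satisfies the last equation).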
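Reading the type of the system off the last row of $\bar{A}^{(n)}$ can be automated. The sketch below is a hypothetical Python helper. It assumes that the pivots $a_{11}^{(n)}, \dots, a_{n-1,n-1}^{(n)}$ are nonzero, possibly after the row interchanges of Remark 6, which is exactly the situation of Example 3. Under that assumption, $a_{nn}^{(n)} = 0$ together with $a_{n,n+1}^{(n)} \neq 0$ means that the system is incompatible. If instead $a_{nn}^{(n)} = a_{n,n+1}^{(n)} = 0$, then $x_n$ can be chosen freely.

```python
from fractions import Fraction

def classify(A, b):
    """Basic Gauss elimination, then read the type of the system off the last row.

    Assumes that nonzero pivots can be found (Remark 6) for k = 1, ..., n-1.
    """
    n = len(A)
    M = [[Fraction(v) for v in A[i]] + [Fraction(b[i])] for i in range(n)]
    for k in range(n - 1):
        if M[k][k] == 0:
            r = next((i for i in range(k + 1, n) if M[i][k] != 0), None)
            if r is None:
                raise ValueError(f"column {k + 1} has no nonzero pivot; this sketch stops here")
            M[k], M[r] = M[r], M[k]
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= factor * M[k][j]
    a_nn, a_free = M[n - 1][n - 1], M[n - 1][n]
    if a_nn != 0:
        return "determined compatible"      # unique solution, by back substitution
    if a_free != 0:
        return "incompatible"               # last equation: 0 * x_n = a_free, a_free != 0
    return "undetermined compatible"        # last equation: 0 * x_n = 0, x_n is free

# Example 3.
print(classify([[1, -1, -3], [3, -1, 1], [2, 3, 19]], [8, 4, 10]))
```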

Example 4. Solve the following systems by using the Gaussian elimination method

$$
\text{a) }
\begin{cases}
x_1 + 2x_2 + 3x_3 + x_4 = 7\\
2x_1 + x_2 + 2x_3 + 3x_4 = 8\\
2x_1 - x_2 - 4x_3 + 4x_4 = 1\\
2x_1 + x_3 - 3x_4 = 0
\end{cases}
\qquad
\text{b) }
\begin{cases}
x_1 + 3x_2 - 2x_3 - 4x_4 = -2\\
2x_1 + 6x_2 - 7x_3 - 10x_4 = -6\\
-x_1 - x_2 + 5x_3 + 9x_4 = 9\\
-3x_1 - 5x_2 + 15x_4 = 13.
\end{cases}
$$

Reviewing briefly what we did, we notice that, in order to continue with our method, whenever a pivot was zero we interchanged the row of the pivot with a row below it (since the rows above can be considered as already arranged in a suitable manner for our method to work). Obviously, the new pivot must be nonzero. But other than that, are there additional criteria for deciding with which row to interchange the row $k$ of the pivot?


The answer is yes, if we pursue better numerical stability. Hence we distinguish between two variants of the Gaussian elimination method:

1. Gauss method with partial pivoting at every step

2. Gauss method with total pivoting at every step

Let us address the first one.

2.1.1

Gauss method with partial pivoting at every step

Here, to choose the pivot, we search for the element with the greatest absolute value among all the elements of the matrix which are situated underneath or at the position of the pivot, on the same column. More precisely, at every step $k$, for $1 \le k \le n - 1$, the pivot is the element $a_{i_k k}^{(k)}$, $k \le i_k \le n$, with the property

$$
\left|a_{i_k k}^{(k)}\right| = \max_{k \le i \le n} \left|a_{ik}^{(k)}\right|,
$$

after which rows $i_k$ and $k$ are interchanged (if $i_k \neq k$).
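A minimal Python sketch of the Gauss method with partial pivoting at every step is given below. It keeps the conventions of the earlier sketches: exact `Fraction` arithmetic, 0-based indices, and a hypothetical function name `gauss_partial_pivoting`. Python's `max` returns the first row in case of ties; this is one possible tie-breaking choice, since the definition above does not prescribe one. With exact fractions the stability benefit is not visible; it appears when the entries are floating-point numbers. For the system of Example 5 below, the first pivot it selects lies in row 4, as in the hand computation.

```python
from fractions import Fraction

def gauss_partial_pivoting(A, b):
    """Gauss method with partial pivoting at every step, followed by back substitution.

    Returns the solution and the list of rows i_k (1-based) chosen as pivot rows.
    """
    n = len(A)
    M = [[Fraction(v) for v in A[i]] + [Fraction(b[i])] for i in range(n)]
    pivot_rows = []
    for k in range(n - 1):
        # i_k: the row k <= i_k <= n whose entry in column k has the largest absolute value.
        p = max(range(k, n), key=lambda i: abs(M[i][k]))
        if M[p][k] == 0:
            raise ValueError(f"column {k + 1} is zero from row {k + 1} down: no unique solution")
        pivot_rows.append(p + 1)
        M[k], M[p] = M[p], M[k]          # interchange rows k and i_k (no effect if p == k)
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= factor * M[k][j]
    if M[n - 1][n - 1] == 0:
        raise ValueError("a_nn^(n) = 0: no unique solution")
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x, pivot_rows

# The system of Example 5 below.
A = [[1, 3, -2, -4], [-1, -1, 5, 9], [2, 6, -7, -10], [-3, -5, 0, 15]]
b = [3, 14, -2, -6]
x, rows = gauss_partial_pivoting(A, b)
print(x, rows)
```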

Why use this method for solving systems? On the one hand, partial pivoting ensures better numerical stability, because when we choose pivots that are far away from zero we avoid dangerous numerical situations, like dividing by 0 or by a number close to zero. On the other hand, to justify the choice of a Gauss method, we have to say that the cost of the algorithm is quite low, of order $\frac{2}{3}n^3$. Let us say what we mean by the cost of an algorithm.

Definition 4. The total number of elementary mathematical operations (+, −, ·, :) of an algorithm is called the cost of the algorithm.

The cost of the algorithm for the Gaussian elimination method with partial pivoting is

$$
\frac{4n^3 + 9n^2 - 7n}{6}.
$$

Therefore, we can say that this method is of order $\frac{2}{3}n^3$, and we write $O\left(\frac{2n^3}{3}\right)$. To make a comparison, the cost of the algorithm for Cramer’s method, which was the common method for solving systems in high school, is $n(n+1)!$. We denote by $c_G$ the cost of the Gaussian method and by $c_C$ the cost of Cramer’s method. For a better understanding of the cost differences, let us give some values to $n$.

• For $n = 2$, $c_G = 9$, $c_C = 12$,

• For $n = 3$, $c_G = 28$, $c_C = 72$,

• For $n = 4$, $c_G = 62$, $c_C = 480$,

• For $n = 5$, $c_G = 115$, $c_C = 3600$.


It is clear now that the larger $n$ becomes, the larger the difference between the two costs. And nowadays, when “time is money” and we want everything to go faster, of course we want our system to be solved as quickly as possible, especially when we think of how big this difference between costs would be when $n = 70$, for example.
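The two cost formulae quoted above are easy to evaluate. The Python sketch below reproduces the values listed for $n = 2, \dots, 5$ and also evaluates both formulae for $n = 70$. The function names are our own. For $n = 70$ the Gaussian method needs $c_G = 235\,935$ operations, while $c_C = 70 \cdot 71!$ is a number with more than 100 digits.

```python
from math import factorial

def cost_gauss(n):
    """Cost (4n^3 + 9n^2 - 7n)/6 of Gauss elimination with partial pivoting, as quoted above."""
    # 4n^3 + 9n^2 - 7n is divisible by 6 for every n, so // is exact here.
    return (4 * n**3 + 9 * n**2 - 7 * n) // 6

def cost_cramer(n):
    """Cost n(n+1)! of Cramer's method, as quoted above."""
    return n * factorial(n + 1)

for n in (2, 3, 4, 5, 70):
    cg, cc = cost_gauss(n), cost_cramer(n)
    print(f"n = {n}: c_G = {cg}, c_C has {len(str(cc))} digits")
```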

With the hope that we have convinced the reader of the utility of the Gaussian elimination

method with partial pivoting, we introduce an example of solving a system.

Example 5. Solve the following system by using the Gauss method with partial pivoting at every step

$$\begin{cases} x_1 + 3x_2 - 2x_3 - 4x_4 = 3\\ -x_1 - x_2 + 5x_3 + 9x_4 = 14\\ 2x_1 + 6x_2 - 7x_3 - 10x_4 = -2\\ -3x_1 - 5x_2 + 15x_4 = -6. \end{cases}$$

Proof. The extended matrix corresponding to the system is

$$A^{(1)} = A = \left(\begin{array}{rrrr|r} 1 & 3 & -2 & -4 & 3\\ -1 & -1 & 5 & 9 & 14\\ 2 & 6 & -7 & -10 & -2\\ -3 & -5 & 0 & 15 & -6 \end{array}\right).$$

We search for the pivot to be placed in the position of $a_{11}$, on the first column. More precisely, we search for the element with the largest absolute value in the first column of the matrix $A^{(1)}$, i.e.

$$\left|piv^{(1)}\right| = \max_{1\le i\le 4}\left|a_{i1}^{(1)}\right| = \max\left\{\left|a_{11}^{(1)}\right|, \left|a_{21}^{(1)}\right|, \left|a_{31}^{(1)}\right|, \left|a_{41}^{(1)}\right|\right\} = \max\{|1|, |-1|, |2|, |-3|\} \Rightarrow piv^{(1)} = a_{41}^{(1)} = -3.$$

Be careful: although we search for the largest absolute value, the pivot is not the absolute value itself; it is the element having this absolute value.

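Since we found that $a_{41}^{(1)}$ should be the pivot, we interchange row 1 with row 4 in $A^{(1)}$ and we obtain

$$A^{(1)} \xrightarrow{L_1 \leftrightarrow L_4} \left(\begin{array}{rrrr|r} -3 & -5 & 0 & 15 & -6\\ -1 & -1 & 5 & 9 & 14\\ 2 & 6 & -7 & -10 & -2\\ 1 & 3 & -2 & -4 & 3 \end{array}\right).$$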

We now proceed with the Gaussian elimination method as usual (see the solving of system (2.13)). We choose $a_{11}^{(1)} = -3 \neq 0$ as pivot and we keep row 1 of $A^{(1)}$. In the first column, the elements under the pivot become zero, and the other elements are calculated using the "rectangle rule":

$$a_{ij}^{(2)} = \frac{a_{11}^{(1)}\cdot a_{ij}^{(1)} - a_{i1}^{(1)}\cdot a_{1j}^{(1)}}{a_{11}^{(1)}}, \qquad 2 \le i \le 4,\ 2 \le j \le 5,$$

which gives

$$\begin{aligned}
a_{22}^{(2)} &= \frac{-3\cdot(-1) - (-1)\cdot(-5)}{-3} = \frac{2}{3}, & a_{23}^{(2)} &= \frac{-3\cdot 5 - (-1)\cdot 0}{-3} = 5,\\
a_{24}^{(2)} &= \frac{-3\cdot 9 - (-1)\cdot 15}{-3} = 4, & a_{25}^{(2)} &= \frac{-3\cdot 14 - (-1)\cdot(-6)}{-3} = 16,\\
a_{32}^{(2)} &= \frac{-3\cdot 6 - 2\cdot(-5)}{-3} = \frac{8}{3}, & a_{33}^{(2)} &= \frac{-3\cdot(-7) - 2\cdot 0}{-3} = -7,\\
a_{34}^{(2)} &= \frac{-3\cdot(-10) - 2\cdot 15}{-3} = 0, & a_{35}^{(2)} &= \frac{-3\cdot(-2) - 2\cdot(-6)}{-3} = -6,\\
a_{42}^{(2)} &= \frac{-3\cdot 3 - 1\cdot(-5)}{-3} = \frac{4}{3}, & a_{43}^{(2)} &= \frac{-3\cdot(-2) - 1\cdot 0}{-3} = -2,\\
a_{44}^{(2)} &= \frac{-3\cdot(-4) - 1\cdot 15}{-3} = 1, & a_{45}^{(2)} &= \frac{-3\cdot 3 - 1\cdot(-6)}{-3} = 1.
\end{aligned}$$

Therefore, we obtain the matrix

$$A^{(2)} = \left(\begin{array}{rrrr|r} -3 & -5 & 0 & 15 & -6\\ 0 & \frac{2}{3} & 5 & 4 & 16\\ 0 & \frac{8}{3} & -7 & 0 & -6\\ 0 & \frac{4}{3} & -2 & 1 & 1 \end{array}\right).$$

We search

$$\left|piv^{(2)}\right| = \max_{2\le i\le 4}\left|a_{i2}^{(2)}\right| = \max\left\{\left|a_{22}^{(2)}\right|, \left|a_{32}^{(2)}\right|, \left|a_{42}^{(2)}\right|\right\} = \max\left\{\frac{2}{3}, \frac{8}{3}, \frac{4}{3}\right\} = \frac{8}{3} \Rightarrow piv^{(2)} = a_{32}^{(2)} = \frac{8}{3}.$$

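We interchange row 2 with row 3 in $A^{(2)}$ and we get

$$A^{(2)} \xrightarrow{L_2 \leftrightarrow L_3} \left(\begin{array}{rrrr|r} -3 & -5 & 0 & 15 & -6\\ 0 & \frac{8}{3} & -7 & 0 & -6\\ 0 & \frac{2}{3} & 5 & 4 & 16\\ 0 & \frac{4}{3} & -2 & 1 & 1 \end{array}\right).$$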

We choose $a_{22}^{(2)} = 8/3$ as pivot and we keep rows 1 and 2 of $A^{(2)}$. In the second column, the elements under the pivot become zero, and the other elements are calculated using the "rectangle rule":

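$$a_{ij}^{(3)} = \frac{a_{22}^{(2)}\cdot a_{ij}^{(2)} - a_{i2}^{(2)}\cdot a_{2j}^{(2)}}{a_{22}^{(2)}}, \qquad 3 \le i \le 4,\ 3 \le j \le 5,$$

which gives

$$\begin{aligned}
a_{33}^{(3)} &= \frac{\frac{8}{3}\cdot 5 - \frac{2}{3}\cdot(-7)}{\frac{8}{3}} = \frac{27}{4}, & a_{43}^{(3)} &= \frac{\frac{8}{3}\cdot(-2) - \frac{4}{3}\cdot(-7)}{\frac{8}{3}} = \frac{3}{2},\\
a_{34}^{(3)} &= \frac{\frac{8}{3}\cdot 4 - \frac{2}{3}\cdot 0}{\frac{8}{3}} = 4, & a_{44}^{(3)} &= \frac{\frac{8}{3}\cdot 1 - \frac{4}{3}\cdot 0}{\frac{8}{3}} = 1,\\
a_{35}^{(3)} &= \frac{\frac{8}{3}\cdot 16 - \frac{2}{3}\cdot(-6)}{\frac{8}{3}} = \frac{35}{2}, & a_{45}^{(3)} &= \frac{\frac{8}{3}\cdot 1 - \frac{4}{3}\cdot(-6)}{\frac{8}{3}} = 4.
\end{aligned}$$

Therefore, we obtain the matrix

$$A^{(3)} = \left(\begin{array}{rrrr|r} -3 & -5 & 0 & 15 & -6\\ 0 & \frac{8}{3} & -7 & 0 & -6\\ 0 & 0 & \frac{27}{4} & 4 & \frac{35}{2}\\ 0 & 0 & \frac{3}{2} & 1 & 4 \end{array}\right).$$

We search

$$\left|piv^{(3)}\right| = \max_{3\le i\le 4}\left|a_{i3}^{(3)}\right| = \max\left\{\left|a_{33}^{(3)}\right|, \left|a_{43}^{(3)}\right|\right\} = \max\left\{\frac{27}{4}, \frac{3}{2}\right\} = \frac{27}{4} \Rightarrow piv^{(3)} = a_{33}^{(3)} = \frac{27}{4}.$$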

At this step we do not need to interchange any rows.

We choose the pivot $a_{33}^{(3)} = \frac{27}{4}$ and we keep rows 1, 2 and 3 of $A^{(3)}$. In column 3, the elements under the pivot become zero, and the other elements are calculated using the "rectangle rule":

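$$\begin{aligned}
a_{44}^{(4)} &= \frac{a_{33}^{(3)}\cdot a_{44}^{(3)} - a_{43}^{(3)}\cdot a_{34}^{(3)}}{a_{33}^{(3)}} = \frac{\frac{27}{4}\cdot 1 - \frac{3}{2}\cdot 4}{\frac{27}{4}} = \frac{1}{9},\\
a_{45}^{(4)} &= \frac{a_{33}^{(3)}\cdot a_{45}^{(3)} - a_{43}^{(3)}\cdot a_{35}^{(3)}}{a_{33}^{(3)}} = \frac{\frac{27}{4}\cdot 4 - \frac{3}{2}\cdot \frac{35}{2}}{\frac{27}{4}} = \frac{1}{9}.
\end{aligned}$$

We obtain the matrix

$$A^{(4)} = \left(\begin{array}{rrrr|r} -3 & -5 & 0 & 15 & -6\\ 0 & \frac{8}{3} & -7 & 0 & -6\\ 0 & 0 & \frac{27}{4} & 4 & \frac{35}{2}\\ 0 & 0 & 0 & \frac{1}{9} & \frac{1}{9} \end{array}\right).$$

The system corresponding to the matrix $A^{(4)}$ is

$$\begin{cases} -3x_1 - 5x_2 + 15x_4 = -6\\ \frac{8}{3}x_2 - 7x_3 = -6\\ \frac{27}{4}x_3 + 4x_4 = \frac{35}{2}\\ \frac{1}{9}x_4 = \frac{1}{9}. \end{cases}$$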


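The solution components of the system are directly obtained by back substitution:

$$\begin{aligned}
x_4 &= \frac{1}{9} \Big/ \frac{1}{9} = 1,\\
x_3 &= \left(\frac{35}{2} - 4x_4\right) \Big/ \frac{27}{4} = \left(\frac{35}{2} - 4\cdot 1\right) \Big/ \frac{27}{4} = 2,\\
x_2 &= (-6 + 7x_3) \Big/ \frac{8}{3} = (-6 + 7\cdot 2) \Big/ \frac{8}{3} = 3,\\
x_1 &= (-6 - 15x_4 + 5x_2)/(-3) = (-6 - 15\cdot 1 + 5\cdot 3)/(-3) = 2,
\end{aligned}$$

and therefore, the solution is

$$\begin{pmatrix} x_1\\ x_2\\ x_3\\ x_4 \end{pmatrix} = \begin{pmatrix} 2\\ 3\\ 2\\ 1 \end{pmatrix}.$$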
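The steps of Example 5 can be reproduced with a short Python sketch of the Gauss method with partial pivoting. Assumptions: the name `gauss_partial_pivoting` is ours, the input is the extended matrix [A | b] given as a list of rows, and exact `fractions.Fraction` arithmetic is used so that the intermediate matrices coincide with the hand computations (a practical code would work in floating point). The update inside the loop is the "rectangle rule"; a zero pivot is reported as in Remark 7, and the determinant is formed as in Remark 8.

```python
from fractions import Fraction


def gauss_partial_pivoting(aug):
    """Solve A x = b from the extended matrix [A | b]; return (x, det A)."""
    a = [[Fraction(v) for v in row] for row in aug]
    n = len(a)
    swaps = 0  # number of row interchanges, N_p in Remark 8
    for k in range(n - 1):
        # Partial pivoting: the largest |a_ik| with k <= i < n, on column k.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0:
            raise ValueError("zero pivot: the system has no unique solution")
        if p != k:
            a[k], a[p] = a[p], a[k]
            swaps += 1
        # Rectangle rule: a_ij <- (a_kk * a_ij - a_ik * a_kj) / a_kk for i, j > k.
        for i in range(k + 1, n):
            for j in range(k + 1, n + 1):
                a[i][j] = (a[k][k] * a[i][j] - a[i][k] * a[k][j]) / a[k][k]
            a[i][k] = Fraction(0)  # elements under the pivot become zero
    if a[n - 1][n - 1] == 0:
        raise ValueError("zero pivot: the system has no unique solution")
    # Back substitution on the upper-triangular matrix A^(n).
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (a[i][n] - s) / a[i][i]
    det = Fraction((-1) ** swaps)
    for i in range(n):
        det *= a[i][i]
    return x, det


if __name__ == "__main__":
    extended = [
        [1, 3, -2, -4, 3],
        [-1, -1, 5, 9, 14],
        [2, 6, -7, -10, -2],
        [-3, -5, 0, 15, -6],
    ]
    x, det_a = gauss_partial_pivoting(extended)
    print([str(v) for v in x], det_a)
```

On Example 5 the pivots chosen are -3, 8/3, 27/4 and 1/9, as above, so the sketch returns the solution (2, 3, 2, 1) and, after two row interchanges, det(A) = -6.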

Remark 7. If, at some step k of the algorithm, we notice that

$$\left|piv^{(k)}\right| = \max_{k \le i \le n}\left|a_{ik}^{(k)}\right| = 0,$$

then we deduce that the system does not admit a unique solution (either it is incompatible, or it has infinitely many solutions).

Remark 8. The Gaussian elimination method can also provide the value of the determinant of the matrix A:

$$\det(A) = (-1)^{N_p} \prod_{i=1}^{n} a_{ii}^{(n)},$$

where $N_p$ represents the total number of permutations (of rows or columns, as we will see below when presenting the Gauss method with total pivoting at every step).

2.1.2 Gauss method with total pivoting at every step

The major difference between the Gauss elimination method with partial pivoting at every step and the Gauss elimination method with total pivoting is that, in the second variant, the role of the pivot at step k is played by the element having the greatest absolute value among the elements of the square submatrix whose upper-left element is $a_{kk}^{(k)}$. More exactly, the pivot at step k should verify

$$\left|piv^{(k)}\right| = \max_{k \le i,j \le n}\left|a_{ij}^{(k)}\right|.$$

As a consequence, we will sometimes interchange columns too, and we should be very careful: every time we interchange column k with column j, we also change the order of the variables $x_k$ and $x_j$. Thus, in order to obtain the right solution in the end, we should memorize the interchanges of the columns and then perform the corresponding interchanges of the values found for the unknowns. So, from the beginning we notice a disadvantage of this method: it needs more memory space to retain all these permutations. What is the advantage then? It gives the algorithm the best numerical stability among the Gauss variants presented here. As for the cost of the algorithm (counted in arithmetic operations), it remains the same. Also, the formula for the value of the determinant of A still holds.

Example 6. a) Solve the following system by using the Gauss method with total pivoting at every step

−2x1 + x3 = 1

x1 + 4x2 + x4 = −3

2x1 − 3x4 = −3

−2x1 + x3 + x4 = 2.

b) Find the value of the determinant of the matrix A, corresponding to the above system.

Proof. a) The extended matrix corresponding to the system is

$$A^{(1)} = A = \left(\begin{array}{rrrr|r} -2 & 0 & 1 & 0 & 1\\ 1 & 4 & 0 & 1 & -3\\ 2 & 0 & 0 & -3 & -3\\ -2 & 0 & 1 & 1 & 2 \end{array}\right).$$

We search for the element with the property

$$\left|piv^{(1)}\right| = \max_{1 \le i,j \le 4}\left|a_{ij}^{(1)}\right| = \left|a_{22}^{(1)}\right| = 4.$$

The pivot $piv^{(1)}$ should be $a_{22}^{(1)} = 4$.

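First we interchange row 1 with row 2 (we have seen that this has no impact on the solution of the system), and secondly we interchange column 1 with column 2 in $A^{(1)}$; but this also means that we interchange $x_1$ with $x_2$, which must be remembered at the end! We obtain the matrix

$$A^{(1)} \xrightarrow{L_1 \leftrightarrow L_2} \left(\begin{array}{rrrr|r} 1 & 4 & 0 & 1 & -3\\ -2 & 0 & 1 & 0 & 1\\ 2 & 0 & 0 & -3 & -3\\ -2 & 0 & 1 & 1 & 2 \end{array}\right) \xrightarrow{C_1 \leftrightarrow C_2} \left(\begin{array}{rrrr|r} 4 & 1 & 0 & 1 & -3\\ 0 & -2 & 1 & 0 & 1\\ 0 & 2 & 0 & -3 & -3\\ 0 & -2 & 1 & 1 & 2 \end{array}\right).$$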

We choose $a_{11}^{(1)} = 4 \neq 0$ as pivot and we remark that $A^{(2)} = A^{(1)}$, since the elements under the pivot are already zero.

We search for the element with the property

$$\left|piv^{(2)}\right| = \max_{2 \le i,j \le 4}\left|a_{ij}^{(2)}\right| = \left|a_{34}^{(2)}\right| = |-3|.$$

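We interchange row 2 with row 3, and we interchange column 2 with column 4 in $A^{(2)}$, and we obtain

$$A^{(2)} \xrightarrow{L_2 \leftrightarrow L_3} \left(\begin{array}{rrrr|r} 4 & 1 & 0 & 1 & -3\\ 0 & 2 & 0 & -3 & -3\\ 0 & -2 & 1 & 0 & 1\\ 0 & -2 & 1 & 1 & 2 \end{array}\right) \xrightarrow{C_2 \leftrightarrow C_4} \left(\begin{array}{rrrr|r} 4 & 1 & 0 & 1 & -3\\ 0 & -3 & 0 & 2 & -3\\ 0 & 0 & 1 & -2 & 1\\ 0 & 1 & 1 & -2 & 2 \end{array}\right).$$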

We choose $a_{22}^{(2)} = -3 \neq 0$ as pivot and we keep rows 1 and 2 of $A^{(2)}$. In the second column, the elements under the pivot become zero and the other elements are calculated using the "rectangle rule"; we obtain

$$A^{(3)} = \left(\begin{array}{rrrr|r} 4 & 1 & 0 & 1 & -3\\ 0 & -3 & 0 & 2 & -3\\ 0 & 0 & 1 & -2 & 1\\ 0 & 0 & 1 & -\frac{4}{3} & 1 \end{array}\right).$$

We search for the element with the property

$$\left|piv^{(3)}\right| = \max_{3 \le i,j \le 4}\left|a_{ij}^{(3)}\right| = \left|a_{34}^{(3)}\right| = |-2|.$$

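We interchange column 3 with column 4 in $A^{(3)}$ and we obtain

$$A^{(3)} \xrightarrow{C_3 \leftrightarrow C_4} \left(\begin{array}{rrrr|r} 4 & 1 & 1 & 0 & -3\\ 0 & -3 & 2 & 0 & -3\\ 0 & 0 & -2 & 1 & 1\\ 0 & 0 & -\frac{4}{3} & 1 & 1 \end{array}\right).$$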

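We choose $a_{33}^{(3)} = -2 \neq 0$ as pivot and we get

$$A^{(4)} = \left(\begin{array}{rrrr|r} 4 & 1 & 1 & 0 & -3\\ 0 & -3 & 2 & 0 & -3\\ 0 & 0 & -2 & 1 & 1\\ 0 & 0 & 0 & \frac{1}{3} & \frac{1}{3} \end{array}\right).$$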

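We deduce, by the backward substitution method, the intermediate solution

$$x_4 = 1, \quad x_3 = 0, \quad x_2 = 1, \quad x_1 = -1,$$

and we interchange its components in the following order (the reverse order of the column interchanges):

component 3 ↔ component 4 (since C3 ↔ C4),
component 2 ↔ component 4 (since C2 ↔ C4),
component 1 ↔ component 2 (since C1 ↔ C2).

More precisely, we have

$$\begin{pmatrix} x_1\\ x_2\\ x_3\\ x_4 \end{pmatrix} = \begin{pmatrix} -1\\ 1\\ 0\\ 1 \end{pmatrix} \xrightarrow{C_3 \leftrightarrow C_4} \begin{pmatrix} -1\\ 1\\ 1\\ 0 \end{pmatrix} \xrightarrow{C_2 \leftrightarrow C_4} \begin{pmatrix} -1\\ 0\\ 1\\ 1 \end{pmatrix} \xrightarrow{C_1 \leftrightarrow C_2} \begin{pmatrix} 0\\ -1\\ 1\\ 1 \end{pmatrix}.$$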

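So the solution of the system is

$$x_1 = 0, \quad x_2 = -1, \quad x_3 = 1, \quad x_4 = 1.$$

b) We performed $N_p = 2 + 3 = 5$ interchanges (two of rows and three of columns), so, by Remark 8,

$$\det(A) = (-1)^{2+3}\, a_{11}^{(4)}\, a_{22}^{(4)}\, a_{33}^{(4)}\, a_{44}^{(4)} = -4\cdot(-3)\cdot(-2)\cdot\frac{1}{3} = -8.$$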
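The bookkeeping of Example 6 (row and column interchanges, then restoring the order of the unknowns) can be sketched in Python as follows. This is an illustration under our own assumptions: the name `gauss_total_pivoting` is hypothetical, ties between equal absolute values are broken by taking the first one in row-major order, and exact `Fraction` arithmetic replaces floating point. Instead of undoing the column interchanges one by one, the sketch records in `order` which unknown each column currently holds, which amounts to the same thing.

```python
from fractions import Fraction


def gauss_total_pivoting(aug):
    """Gauss elimination with total pivoting on [A | b]; return (x, det A)."""
    a = [[Fraction(v) for v in row] for row in aug]
    n = len(a)
    order = list(range(n))  # order[j] = index of the unknown stored in column j
    swaps = 0               # N_p: row interchanges plus column interchanges
    for k in range(n):
        # Total pivoting: largest |a_ij| in the square block k <= i, j < n.
        p, q = max(((i, j) for i in range(k, n) for j in range(k, n)),
                   key=lambda ij: abs(a[ij[0]][ij[1]]))
        if a[p][q] == 0:
            raise ValueError("zero pivot: the system has no unique solution")
        if p != k:  # row interchange L_k <-> L_p
            a[k], a[p] = a[p], a[k]
            swaps += 1
        if q != k:  # column interchange C_k <-> C_q (the free-term column is untouched)
            for row in a:
                row[k], row[q] = row[q], row[k]
            order[k], order[q] = order[q], order[k]
            swaps += 1
        for i in range(k + 1, n):  # rectangle rule under the pivot
            for j in range(k + 1, n + 1):
                a[i][j] = (a[k][k] * a[i][j] - a[i][k] * a[k][j]) / a[k][k]
            a[i][k] = Fraction(0)
    # Back substitution gives the unknowns in the permuted (column) order.
    y = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * y[j] for j in range(i + 1, n))
        y[i] = (a[i][n] - s) / a[i][i]
    # Undo the column interchanges: column j holds the unknown number order[j].
    x = [Fraction(0)] * n
    for j in range(n):
        x[order[j]] = y[j]
    det = Fraction((-1) ** swaps)
    for i in range(n):
        det *= a[i][i]
    return x, det


if __name__ == "__main__":
    extended = [
        [-2, 0, 1, 0, 1],
        [1, 4, 0, 1, -3],
        [2, 0, 0, -3, -3],
        [-2, 0, 1, 1, 2],
    ]
    x, det_a = gauss_total_pivoting(extended)
    print([str(v) for v in x], det_a)
```

On the system of Example 6 the pivots are chosen exactly as in the hand computation, and the sketch returns x = (0, -1, 1, 1) and det(A) = -8.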


We present next another direct method for solving linear systems.

2.2 L − R Decomposition Method

This method is also called the L − U decomposition method, and instead of "decomposition method" we sometimes say "factorization method". The general idea is to solve a linear system of the form

$$A \cdot x = b, \tag{2.14}$$

where $A \in \mathcal{M}_{n\times n}(\mathbb{R})$ and $b \in \mathcal{M}_{n\times 1}(\mathbb{R})$, by finding two matrices $L, R \in \mathcal{M}_{n\times n}(\mathbb{R})$ such that

$$A = L \cdot R,$$

where L is a lower-triangular matrix, which means that all its nonzero elements are situated on the main diagonal or to the left of it (hence the "L" in the notation comes from "left"), and R is an upper-triangular matrix, which means that all its nonzero elements are situated on the main diagonal or to the right of it (hence the "R" in the notation comes from "right"). On the other hand, some prefer to denote these matrices by L from "lower-triangular", respectively by U from "upper-triangular"; thus, as we said, this method is also called the "L − U Decomposition Method".

Note that there is an additional property to be fulfilled: one of the matrices L or R should have only 1 on the main diagonal. Depending on which matrix has this property, we distinguish two variants of this method. If

$$L = \begin{pmatrix} 1 & 0 & \cdots & 0\\ l_{21} & 1 & \cdots & 0\\ \vdots & \vdots & \ddots & \vdots\\ l_{n1} & l_{n2} & \cdots & 1 \end{pmatrix} \quad\text{and}\quad R = \begin{pmatrix} r_{11} & r_{12} & \cdots & r_{1n}\\ 0 & r_{22} & \cdots & r_{2n}\\ \vdots & \vdots & \ddots & \vdots\\ 0 & 0 & \cdots & r_{nn} \end{pmatrix}, \tag{2.15}$$

then the method bears the name Doolittle factorization method.

Remark 9. A matrix L taken as above is called unit lower triangular matrix.

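On the other hand, if

$$L = \begin{pmatrix} l_{11} & 0 & \cdots & 0\\ l_{21} & l_{22} & \cdots & 0\\ \vdots & \vdots & \ddots & \vdots\\ l_{n1} & l_{n2} & \cdots & l_{nn} \end{pmatrix} \quad\text{and}\quad R = \begin{pmatrix} 1 & r_{12} & \cdots & r_{1n}\\ 0 & 1 & \cdots & r_{2n}\\ \vdots & \vdots & \ddots & \vdots\\ 0 & 0 & \cdots & 1 \end{pmatrix}, \tag{2.16}$$

then this method is called the Croût factorization method.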

Remark 10. A matrix R taken as above is called unit upper triangular matrix.


Why is it better to work with a unit lower triangular matrix or a unit upper triangular matrix instead of a general lower triangular matrix and a general upper triangular matrix? (This question is addressed to the students, after they complete the laboratory implementation of the L − R factorization method.)

In what follows, we would like to clarify two aspects:

1. how do we use the decomposition A = L · R to solve the system (2.14).

2. how do we find L and R.

For the first aspect we notice that

A · x = b ⇔ (L · R)x = b ⇔ L · (Rx) = b.

We denote Rx = y and we solve the system Ly = b by using the direct substitution method,

due to the fact that L is a lower triangular matrix. After finding the solution y in this way, we

solve the system Rx = y by using the backward substitution method.

For the clarification of the second aspect, the answer depends on which method we use.

2.2.1 The L − R Doolittle factorization method

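Let us multiply L by R, where L and R are given by (2.15). Then, by identifying the elements so that L · R = A, we obtain

$$\sum_{h=1}^{\min\{i,j\}} l_{ih}\, r_{hj} = a_{ij}, \qquad \text{for all } 1 \le i, j \le n,$$

and we find the following formulae

$$\begin{aligned}
r_{1j} &= a_{1j}, && \text{for all } 1 \le j \le n,\\
l_{i1} &= a_{i1}/r_{11}, && \text{for all } 2 \le i \le n,\\
r_{kj} &= a_{kj} - \sum_{h=1}^{k-1} l_{kh}\, r_{hj}, && \text{for all } 2 \le k \le n,\ k \le j \le n,\\
l_{ik} &= \left(a_{ik} - \sum_{h=1}^{k-1} l_{ih}\, r_{hk}\right) \Big/ r_{kk}, && \text{for all } 2 \le k \le n,\ k+1 \le i \le n.
\end{aligned} \tag{2.17}$$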

We observe that, by these formulae, we first calculate a row of R, then a column of L, then another row of R, then another column of L, and so on, until we find all the elements of the matrices L and R. More specifically, the first row of R is in fact the first row of A. The first column of L, starting with its second element, is obtained by dividing the first column of A by r11. For the second row of R we compute (written here for n = 4):

r22 = a22 − l21 · r12,

r23 = a23 − l21 · r13,

r24 = a24 − l21 · r14.

For the second column of L, we have to divide by r22; for instance,

l32 = (a32 − l31 · r12)/r22.

We do not continue in this manner because we will solve an example in detail below. But first we want to make a very important remark.
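Formulae (2.17) translate almost line by line into code. The sketch below is ours (the name `doolittle` and the use of exact `Fraction` arithmetic are assumptions, not part of the course): it alternates between computing row k of R and column k of L, in the order described above, and stops with an error as soon as it meets $r_{kk} = 0$.

```python
from fractions import Fraction


def doolittle(A):
    """Doolittle factorization A = L R (L unit lower, R upper) by formulae (2.17)."""
    n = len(A)
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    R = [[Fraction(0)] * n for _ in range(n)]
    for k in range(n):
        # Row k of R: r_kj = a_kj - sum_{h<k} l_kh r_hj, for j >= k.
        for j in range(k, n):
            R[k][j] = Fraction(A[k][j]) - sum(L[k][h] * R[h][j] for h in range(k))
        if R[k][k] == 0:
            raise ZeroDivisionError(f"r_{k + 1}{k + 1} = 0: a principal minor of A vanishes")
        # Column k of L below the diagonal: l_ik = (a_ik - sum_{h<k} l_ih r_hk) / r_kk.
        for i in range(k + 1, n):
            L[i][k] = (Fraction(A[i][k]) - sum(L[i][h] * R[h][k] for h in range(k))) / R[k][k]
    return L, R


if __name__ == "__main__":
    L, R = doolittle([[1, 2, -1], [2, -4, 10], [-1, -2, 5]])
    for row in L:
        print([str(v) for v in row])
    for row in R:
        print([str(v) for v in row])
```

For k = 0 the sums are empty, so the first two lines of (2.17) are covered by the general case. Applied to the coefficient matrix of Example 7 below, it yields the same L and R as the hand computation.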

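Remark 11. It is obvious that, in order to be able to apply this method, it is necessary to have $r_{kk} \neq 0$ at every step k. This actually means that, before applying the L − R factorization method (be it Doolittle or Croût, since in the Croût method we have to divide by $l_{kk} \neq 0$ at every step k), we have to verify that all the principal minors of the matrix A are nonzero. Indeed, since L is lower triangular and R is upper triangular, the principal minor of order k of A = L · R is the product of the first k diagonal elements of L and R; for the Doolittle method, $\Delta_k = r_{11} r_{22} \cdots r_{kk}$.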

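Definition 5. Let $C \in \mathcal{M}_{n\times n}(\mathbb{R})$. By the principal minors of C (also called diagonal minors of C) we understand the following determinants:

$\Delta_1 = c_{11}$ is the principal minor of first order,

$$\Delta_2 = \begin{vmatrix} c_{11} & c_{12}\\ c_{21} & c_{22} \end{vmatrix} \text{ is the principal minor of second order},$$

$$\Delta_3 = \begin{vmatrix} c_{11} & c_{12} & c_{13}\\ c_{21} & c_{22} & c_{23}\\ c_{31} & c_{32} & c_{33} \end{vmatrix} \text{ is the principal minor of third order},$$

$$\vdots$$

$$\Delta_{n-1} = \begin{vmatrix} c_{11} & c_{12} & c_{13} & \cdots & c_{1,n-1}\\ c_{21} & c_{22} & c_{23} & \cdots & c_{2,n-1}\\ c_{31} & c_{32} & c_{33} & \cdots & c_{3,n-1}\\ \vdots & \vdots & \vdots & \ddots & \vdots\\ c_{n-1,1} & c_{n-1,2} & c_{n-1,3} & \cdots & c_{n-1,n-1} \end{vmatrix} \text{ is the principal minor of order } n-1,$$

$\Delta_n = \det(C)$ is the principal minor of order n.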

Now that we have clarified what we understand by a principal (or diagonal) minor of a matrix, let us ask ourselves: what happens if one of the principal minors of A is zero? Does it mean that we cannot apply the L − R factorization method? Not at all; it only means that we have to interchange two rows of the extended matrix: row k (when at step k) and a row below row k. Then we verify again whether all the principal minors of the new A are non-zero and, if so, we apply the L − R decomposition method.
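The check described above is easy to automate. The following Python sketch (the names `det_exact` and `principal_minors` are hypothetical) computes $\Delta_1, \dots, \Delta_n$ of Definition 5 as determinants of the upper-left k × k blocks, each obtained by exact Gaussian elimination; it then shows on the coefficient matrix of the example solved later in this section how a vanishing $\Delta_2$ is repaired by interchanging rows 2 and 3.

```python
from fractions import Fraction


def det_exact(M):
    """Determinant of a square matrix by Gaussian elimination in exact arithmetic."""
    a = [[Fraction(v) for v in row] for row in M]
    n = len(a)
    sign = 1
    for k in range(n):
        # Any nonzero entry of column k can serve as pivot when computing a determinant.
        p = next((i for i in range(k, n) if a[i][k] != 0), None)
        if p is None:
            return Fraction(0)
        if p != k:
            a[k], a[p] = a[p], a[k]
            sign = -sign  # a row interchange changes the sign of the determinant
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
    result = Fraction(sign)
    for k in range(n):
        result *= a[k][k]
    return result


def principal_minors(A):
    """[Delta_1, ..., Delta_n]: determinants of the upper-left k x k blocks of A."""
    return [det_exact([row[:k] for row in A[:k]]) for k in range(1, len(A) + 1)]


if __name__ == "__main__":
    A = [[-1, 2, 3], [1, -2, -1], [-2, 6, 6]]
    print([str(d) for d in principal_minors(A)])  # Delta_2 vanishes here
    A[1], A[2] = A[2], A[1]                       # interchange rows 2 and 3
    print([str(d) for d in principal_minors(A)])
```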

We give an explicit example of solving a linear system by applying the L − R Doolittle factorization method.

Example 7. Solve the following system by using the L − R Doolittle factorization method

$$\begin{cases} x_1 + 2x_2 - x_3 = 7\\ 2x_1 - 4x_2 + 10x_3 = 18\\ -x_1 - 2x_2 + 5x_3 = -7. \end{cases}$$


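The extended matrix corresponding to the above system is

$$A = \left(\begin{array}{rrr|r} 1 & 2 & -1 & 7\\ 2 & -4 & 10 & 18\\ -1 & -2 & 5 & -7 \end{array}\right).$$

Before applying the method, we make sure that it is going to work by calculating the diagonal minors of the matrix A:

$$\Delta_1 = 1 \neq 0, \qquad \Delta_2 = \begin{vmatrix} 1 & 2\\ 2 & -4 \end{vmatrix} = -8 \neq 0, \qquad \Delta_3 = \det(A) = \begin{vmatrix} 1 & 2 & -1\\ 2 & -4 & 10\\ -1 & -2 & 5 \end{vmatrix} = -32 \neq 0.$$

Since $\Delta_1, \Delta_2, \Delta_3 \neq 0$, the matrix A admits an L − R factorization. More precisely, we search for two matrices

$$L = \begin{pmatrix} 1 & 0 & 0\\ l_{21} & 1 & 0\\ l_{31} & l_{32} & 1 \end{pmatrix} \quad\text{and}\quad R = \begin{pmatrix} r_{11} & r_{12} & r_{13}\\ 0 & r_{22} & r_{23}\\ 0 & 0 & r_{33} \end{pmatrix}$$

such that L · R = A.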

At the first step, we compute the elements of the first row of the matrix R using the first formula from (2.17):

r11 = a11 = 1.

r12 = a12 = 2.

r13 = a13 = −1.

Next, we determine the elements of the first column of the matrix L using the second formula from (2.17):

$$l_{21} = \frac{a_{21}}{r_{11}} = \frac{2}{1} = 2, \qquad l_{31} = \frac{a_{31}}{r_{11}} = \frac{-1}{1} = -1.$$

At the second step, we calculate the elements of the second row of the matrix R:

r22 = a22 − l21 r12 = −4 − 2 · 2 = −8.

r23 = a23 − l21 r13 = 10 − 2 · (−1) = 12.

Then we calculate the unknown element of the second column of L, that is l32:

l32 = (a32 − l31 r12)/r22 = (−2 − (−1) · 2)/(−8) = 0.

Finally, we determine the unknown element of row 3 of the matrix R:

r33 = a33 − l31 r13 − l32 r23 = 5 − (−1) · (−1) − 0 · 12 = 4.

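So, we get

$$L = \begin{pmatrix} 1 & 0 & 0\\ 2 & 1 & 0\\ -1 & 0 & 1 \end{pmatrix}, \qquad R = \begin{pmatrix} 1 & 2 & -1\\ 0 & -8 & 12\\ 0 & 0 & 4 \end{pmatrix}.$$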

Remark 12. While writing the algorithm of this method in C++ (or another programming language), we notice that the matrix A goes through the following transformations:

$$A = \begin{pmatrix} 1 & 2 & -1\\ 2 & -4 & 10\\ -1 & -2 & 5 \end{pmatrix} \mapsto \begin{pmatrix} 1 & 2 & -1\\ 2 & -4 & 10\\ -1 & -2 & 5 \end{pmatrix} \mapsto \begin{pmatrix} 1 & 2 & -1\\ 2 & -8 & 12\\ -1 & 0 & 5 \end{pmatrix} \mapsto \begin{pmatrix} 1 & 2 & -1\\ 2 & -8 & 12\\ -1 & 0 & 4 \end{pmatrix}.$$

Exercise for students: Please explain the previous Remark.

Moving further with the solving of the system, we denote Rx = y, since A = L · R and Ax = b is equivalent to L(Rx) = b. We solve the system Ly = b, that is,

$$\begin{pmatrix} 1 & 0 & 0\\ 2 & 1 & 0\\ -1 & 0 & 1 \end{pmatrix} \begin{pmatrix} y_1\\ y_2\\ y_3 \end{pmatrix} = \begin{pmatrix} 7\\ 18\\ -7 \end{pmatrix}, \tag{2.18}$$

by the direct substitution method, because (2.18) is the matrix form of the system

$$\begin{cases} y_1 = 7\\ 2y_1 + y_2 = 18\\ -y_1 + y_3 = -7. \end{cases}$$

We substitute y1 = 7 in the second equation and we get y2 = 18 − 2y1 = 4. We substitute y1 in the third equation and we get y3 = −7 + y1 = 0.

Remark 13. Normally, we would substitute y1 and y2 in the third equation, but in this particular situation y2 is missing from the third equation of the system.

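We have obtained that $y = \begin{pmatrix} 7\\ 4\\ 0 \end{pmatrix}$.

Now we solve the system Rx = y, that is,

$$\begin{pmatrix} 1 & 2 & -1\\ 0 & -8 & 12\\ 0 & 0 & 4 \end{pmatrix} \begin{pmatrix} x_1\\ x_2\\ x_3 \end{pmatrix} = \begin{pmatrix} 7\\ 4\\ 0 \end{pmatrix},$$

which is equivalent to

$$\begin{cases} x_1 + 2x_2 - x_3 = 7\\ -8x_2 + 12x_3 = 4\\ 4x_3 = 0. \end{cases}$$

From the last equation we get that x3 = 0, and we solve the system by the backward substitution method. So, we substitute x3 = 0 in the second equation and we get $x_2 = -\frac{1}{2}$. Then we substitute the values of x3 and x2 into the first equation and we get x1 = 8. Hence, we have determined the solution of the system,

$$\begin{pmatrix} x_1\\ x_2\\ x_3 \end{pmatrix} = \begin{pmatrix} 8\\ -\frac{1}{2}\\ 0 \end{pmatrix}.$$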


By looking closely at the above calculations, one can see that we have applied the following formulae to determine the intermediate solution of the system L · y = b:

$$y_1 = b_1, \qquad y_i = b_i - \sum_{k=1}^{i-1} l_{ik}\, y_k, \quad i = 2, 3, \dots, n. \tag{2.19}$$

Moreover, the system R · x = y has the following solution

$$x_n = y_n / r_{nn}, \qquad x_i = \left(y_i - \sum_{k=i+1}^{n} r_{ik}\, x_k\right) \Big/ r_{ii}, \quad i = n-1, n-2, \dots, 1. \tag{2.20}$$
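Formulae (2.19) and (2.20) can be written as two small Python functions. This is a sketch with hypothetical names (`forward_substitution_unit`, `backward_substitution`); the first one assumes, as in the Doolittle method, that L has only 1 on its main diagonal, which is why it never divides.

```python
from fractions import Fraction


def forward_substitution_unit(L, b):
    """Solve L y = b for a unit lower-triangular L, formula (2.19)."""
    y = []
    for i in range(len(b)):
        # y_i = b_i - sum_{k<i} l_ik y_k (no division, since l_ii = 1)
        y.append(Fraction(b[i]) - sum(L[i][k] * y[k] for k in range(i)))
    return y


def backward_substitution(R, y):
    """Solve R x = y for an upper-triangular R with nonzero diagonal, formula (2.20)."""
    n = len(y)
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        s = sum(R[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (Fraction(y[i]) - s) / R[i][i]
    return x


if __name__ == "__main__":
    # L, R and b from Example 7.
    L = [[1, 0, 0], [2, 1, 0], [-1, 0, 1]]
    R = [[1, 2, -1], [0, -8, 12], [0, 0, 4]]
    y = forward_substitution_unit(L, [7, 18, -7])
    print([str(v) for v in y], [str(v) for v in backward_substitution(R, y)])
```

With the data of Example 7 it reproduces y = (7, 4, 0) and x = (8, -1/2, 0).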

Example: Solve the following system with LR factorization

$$\begin{cases} -x_1 + 2x_2 + 3x_3 = -8\\ x_1 - 2x_2 - x_3 = 4\\ -2x_1 + 6x_2 + 6x_3 = -14. \end{cases}$$

Proof. The extended matrix is

$$A = \left(\begin{array}{rrr|r} -1 & 2 & 3 & -8\\ 1 & -2 & -1 & 4\\ -2 & 6 & 6 & -14 \end{array}\right).$$

We check whether the corner determinants (the principal minors) of the matrix A are nonzero:

$$\Delta_1 = -1 \neq 0, \qquad \Delta_2 = \begin{vmatrix} -1 & 2\\ 1 & -2 \end{vmatrix} = 0.$$

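Since the determinant $\Delta_2 = 0$, we interchange row 2 with row 3 in the extended matrix A, and we obtain

$$A \xrightarrow{L_2 \leftrightarrow L_3} \left(\begin{array}{rrr|r} -1 & 2 & 3 & -8\\ -2 & 6 & 6 & -14\\ 1 & -2 & -1 & 4 \end{array}\right).$$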

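We have

$$\Delta_1 = -1 \neq 0, \qquad \Delta_2 = \begin{vmatrix} -1 & 2\\ -2 & 6 \end{vmatrix} = -2 \neq 0, \qquad \Delta_3 = \det(A) = \begin{vmatrix} -1 & 2 & 3\\ -2 & 6 & 6\\ 1 & -2 & -1 \end{vmatrix} = -4 \neq 0.$$

Since $\Delta_1, \Delta_2, \Delta_3 \neq 0$, the matrix $A = \begin{pmatrix} -1 & 2 & 3\\ -2 & 6 & 6\\ 1 & -2 & -1 \end{pmatrix}$ admits an L − R factorization. More precisely, we search for two matrices

$$L = \begin{pmatrix} 1 & 0 & 0\\ l_{21} & 1 & 0\\ l_{31} & l_{32} & 1 \end{pmatrix} \quad\text{and}\quad R = \begin{pmatrix} r_{11} & r_{12} & r_{13}\\ 0 & r_{22} & r_{23}\\ 0 & 0 & r_{33} \end{pmatrix}$$

such that L · R = A.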

In what follows, when we multiply row i of the matrix L by column j of the matrix R, we denote this operation by Li(L) × Cj(R).

We determine the elements of first row of the matrix R:

L1 (L) × C1 (R) ⇒ r11 = a11 = −1.

L1 (L) × C2 (R) ⇒ r12 = a12 = 2.

L1 (L) × C3 (R) ⇒ r13 = a13 = 3.

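We determine the elements of the first column of the matrix L:

$$L_2(L) \times C_1(R) \Rightarrow l_{21} r_{11} = a_{21} \Rightarrow l_{21} = \frac{a_{21}}{r_{11}} = \frac{-2}{-1} = 2.$$

$$L_3(L) \times C_1(R) \Rightarrow l_{31} r_{11} = a_{31} \Rightarrow l_{31} = \frac{a_{31}}{r_{11}} = \frac{1}{-1} = -1.$$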

We determine the elements of second row of the matrix R:

L2 (L) × C2 (R) ⇒ l21 r12 + r22 = a22 ⇒ r22 = a22 − l21 r12 = 6 − 2 · 2 = 2.

L2 (L) × C3 (R) ⇒ l21 r13 + r23 = a23 ⇒ r23 = a23 − l21 r13 = 6 − 2 · 3 = 0.

We determine the element of the second column of the matrix L:

L3 (L) × C2 (R) ⇒ l31 r12 + l32 r22 = a32 ⇒ l32 = (a32 − l31 r12 ) /r22 = (−2 − (−1) · 2) /2 = 0.

We determine the elements of row 3 of the matrix R:

L3 (L)×C3 (R) ⇒ l31 r13 +l32 r23 +r33 = a33 ⇒ r33 = a33 −l31 r13 −l32 r23 = −1−(−1)·3−0·0 = 2.

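So, we get

$$L = \begin{pmatrix} 1 & 0 & 0\\ 2 & 1 & 0\\ -1 & 0 & 1 \end{pmatrix}, \qquad R = \begin{pmatrix} -1 & 2 & 3\\ 0 & 2 & 0\\ 0 & 0 & 2 \end{pmatrix}.$$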

Then our system Ax = b is equivalent to

L · R · x = b.

If we denote R · x = y, where y ∈ R^3, then, in order to obtain the solution x ∈ R^3, we need to solve the following two triangular systems:

(S1) L · y = b,

(S2) R · x = y.

The lower-triangular system (S1) is equivalent to

$$\begin{pmatrix} 1 & 0 & 0\\ 2 & 1 & 0\\ -1 & 0 & 1 \end{pmatrix} \begin{pmatrix} y_1\\ y_2\\ y_3 \end{pmatrix} = \begin{pmatrix} -8\\ -14\\ 4 \end{pmatrix}.$$


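We remark that the free term b is taken from the matrix A in which we interchanged the rows. The solution y is obtained by direct substitution:

$$y_1 = -8, \qquad y_2 = -14 - 2y_1 = 2, \qquad y_3 = 4 + y_1 - 0\cdot y_2 = -4.$$

The upper-triangular system (S2) is equivalent to

$$\begin{pmatrix} -1 & 2 & 3\\ 0 & 2 & 0\\ 0 & 0 & 2 \end{pmatrix} \begin{pmatrix} x_1\\ x_2\\ x_3 \end{pmatrix} = \begin{pmatrix} -8\\ 2\\ -4 \end{pmatrix}.$$

The solution x is obtained by back substitution:

$$x_3 = -4/2 = -2, \qquad x_2 = (2 - 0\cdot x_3)/2 = 1, \qquad x_1 = (-8 - 3x_3 - 2x_2)/(-1) = 4.$$

So, the solution of the system is

$$\begin{pmatrix} x_1\\ x_2\\ x_3 \end{pmatrix} = \begin{pmatrix} 4\\ 1\\ -2 \end{pmatrix}.$$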
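The row interchange used in this example can also be built directly into the Doolittle formulae: since $\Delta_k = r_{11} r_{22} \cdots r_{kk}$, a vanishing $\Delta_k$ shows up as $r_{kk} = 0$ while row k of R is being computed. The Python sketch below (the name `solve_doolittle_with_row_swaps` is hypothetical) then swaps row k with the first row below it that gives a nonzero $r_{kk}$, together with its free term and the multipliers already computed for those rows. This is a variant of what we did by hand (there we recomputed the minors after the interchange), not the course's own algorithm.

```python
from fractions import Fraction


def solve_doolittle_with_row_swaps(A, b):
    """Doolittle solve that interchanges rows when a pivot r_kk would be zero.

    Returns (x, perm), where perm[i] is the original index of the row now in position i.
    """
    n = len(A)
    A = [[Fraction(v) for v in row] for row in A]  # work on copies
    b = [Fraction(v) for v in b]
    perm = list(range(n))
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    R = [[Fraction(0)] * n for _ in range(n)]

    def candidate(i, k):
        # Value a_ik - sum_{h<k} l_ih r_hk: equals r_kk if row i were placed in position k.
        return A[i][k] - sum(L[i][h] * R[h][k] for h in range(k))

    for k in range(n):
        if candidate(k, k) == 0:
            swap_row = next((i for i in range(k + 1, n) if candidate(i, k) != 0), None)
            if swap_row is None:
                raise ValueError("singular matrix: no row interchange helps")
            A[k], A[swap_row] = A[swap_row], A[k]
            b[k], b[swap_row] = b[swap_row], b[k]
            perm[k], perm[swap_row] = perm[swap_row], perm[k]
            # The multipliers already computed for these two rows move with them.
            L[k][:k], L[swap_row][:k] = L[swap_row][:k], L[k][:k]
        for j in range(k, n):  # row k of R, formula (2.17)
            R[k][j] = A[k][j] - sum(L[k][h] * R[h][j] for h in range(k))
        for i in range(k + 1, n):  # column k of L, formula (2.17)
            L[i][k] = candidate(i, k) / R[k][k]
    y = []
    for i in range(n):  # L y = b, formula (2.19)
        y.append(b[i] - sum(L[i][k] * y[k] for k in range(i)))
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):  # R x = y, formula (2.20)
        x[i] = (y[i] - sum(R[i][k] * x[k] for k in range(i + 1, n))) / R[i][i]
    return x, perm


if __name__ == "__main__":
    x, perm = solve_doolittle_with_row_swaps([[-1, 2, 3], [1, -2, -1], [-2, 6, 6]], [-8, 4, -14])
    print([str(v) for v in x], perm)
```

On the system above the sketch detects $r_{22} = 0$, interchanges rows 2 and 3, obtains the same L and R as the hand computation, and returns x = (4, 1, -2).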

2.2.2 The L − R Croût factorization method

As the reader has probably already understood, the L − R Croût factorization method is quite similar to the L − R Doolittle method. Thus, we multiply L by R, where L and R are given by (2.16). Then, by identifying the corresponding elements of the two equal matrices L · R and A, we arrive at the following formulae:

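$$\begin{aligned}
l_{i1} &= a_{i1}, && \text{for all } 1 \le i \le n,\\
r_{1j} &= a_{1j}/l_{11}, && \text{for all } 2 \le j \le n,\\
l_{ik} &= a_{ik} - \sum_{h=1}^{k-1} l_{ih}\, r_{hk}, && \text{for all } 2 \le k \le n,\ k \le i \le n,\\
r_{kj} &= \left(a_{kj} - \sum_{h=1}^{k-1} l_{kh}\, r_{hj}\right) \Big/ l_{kk}, && \text{for all } 2 \le k \le n,\ k+1 \le j \le n.
\end{aligned} \tag{2.21}$$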

Once we have found the matrices L and R, we replace, as usual, A by L · R in the matrix form of the system, so we have

L(Rx) = b,

and, by denoting Rx = y, we solve the system Ly = b by the direct substitution method, applying the formulae

$$y_1 = b_1 / l_{11}, \qquad y_i = \left(b_i - \sum_{k=1}^{i-1} l_{ik}\, y_k\right) \Big/ l_{ii}, \quad i = 2, 3, \dots, n.$$

After finding y, we use the backward substitution method to solve the system R · x = y by applying the formulae

$$x_n = y_n, \qquad x_i = y_i - \sum_{k=i+1}^{n} r_{ik}\, x_k, \quad i = n-1, n-2, \dots, 1.$$
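Formulae (2.21) and the two substitution formulae above can be sketched in Python as follows (the names `crout` and `crout_solve` are hypothetical). Compared with Doolittle, column k of L is computed before row k of R, and the division by the diagonal moves from the backward substitution to the forward one.

```python
from fractions import Fraction


def crout(A):
    """Croût factorization A = L R (L lower, R unit upper) by formulae (2.21)."""
    n = len(A)
    L = [[Fraction(0)] * n for _ in range(n)]
    R = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(n):
        for i in range(k, n):  # column k of L: l_ik = a_ik - sum_{h<k} l_ih r_hk
            L[i][k] = Fraction(A[i][k]) - sum(L[i][h] * R[h][k] for h in range(k))
        if L[k][k] == 0:
            raise ZeroDivisionError(f"l_{k + 1}{k + 1} = 0: a principal minor of A vanishes")
        for j in range(k + 1, n):  # row k of R, divided by l_kk
            R[k][j] = (Fraction(A[k][j]) - sum(L[k][h] * R[h][j] for h in range(k))) / L[k][k]
    return L, R


def crout_solve(A, b):
    """Solve A x = b: L y = b (dividing by l_ii), then R x = y (r_ii = 1)."""
    L, R = crout(A)
    n = len(b)
    y = []
    for i in range(n):
        y.append((Fraction(b[i]) - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        x[i] = y[i] - sum(R[i][k] * x[k] for k in range(i + 1, n))
    return x


if __name__ == "__main__":
    A = [[1, 2, -1], [2, -4, 10], [-1, -2, 5]]
    print([str(v) for v in crout_solve(A, [7, 18, -7])])
```

On the matrix of Example 7 the sketch gives a lower-triangular L with diagonal 1, -8, 4 and a unit upper-triangular R with $r_{23} = -3/2$, and the same solution (8, -1/2, 0) as the Doolittle method.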

Remark 14. Before starting to solve a linear system by the L − R Croût factorization method

we test the principal minors to see if they are non-zero, as explained before.

Exercise 1. Solve the previous system by applying the L − R Croût factorization method.

In addition to solving the linear system Ax = b, there are some other applications to the L − R

factorization method.

Further applications:

1. We can find the value of the determinant of the matrix A. Indeed, since A = LR, we have that det A = det L · det R, thus

$$\det A = \prod_{i=1}^{n} (l_{ii} \cdot r_{ii}).$$

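2. We can find the inverse of the matrix A, that is, $A^{-1}$. More exactly, we denote

$$A^{-1} = X = \begin{pmatrix} x_{11} & x_{12} & \cdots & x_{1n}\\ x_{21} & x_{22} & \cdots & x_{2n}\\ \vdots & \vdots & \ddots & \vdots\\ x_{n1} & x_{n2} & \cdots & x_{nn} \end{pmatrix},$$

and, by taking into consideration that

$$A \cdot A^{-1} = I_n = \begin{pmatrix} 1 & 0 & \cdots & 0\\ 0 & 1 & \cdots & 0\\ \vdots & \vdots & \ddots & \vdots\\ 0 & 0 & \cdots & 1 \end{pmatrix},$$

solving $A \cdot X = I_n$ reduces to solving n systems:

$$A \cdot \begin{pmatrix} x_{11}\\ x_{21}\\ \vdots\\ x_{n1} \end{pmatrix} = \begin{pmatrix} 1\\ 0\\ \vdots\\ 0 \end{pmatrix}, \quad A \cdot \begin{pmatrix} x_{12}\\ x_{22}\\ \vdots\\ x_{n2} \end{pmatrix} = \begin{pmatrix} 0\\ 1\\ \vdots\\ 0 \end{pmatrix}, \quad \dots, \quad A \cdot \begin{pmatrix} x_{1n}\\ x_{2n}\\ \vdots\\ x_{nn} \end{pmatrix} = \begin{pmatrix} 0\\ 0\\ \vdots\\ 1 \end{pmatrix}.$$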
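Both applications can be sketched in Python on top of a Doolittle factorization. The names `doolittle` and `lr_det_and_inverse` are hypothetical; the point of the sketch is that L and R are computed once and then reused for all n right-hand sides $e_1, \dots, e_n$ (the columns of $I_n$).

```python
from fractions import Fraction


def doolittle(A):
    """Doolittle factorization A = L R by formulae (2.17), exact arithmetic."""
    n = len(A)
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    R = [[Fraction(0)] * n for _ in range(n)]
    for k in range(n):
        for j in range(k, n):
            R[k][j] = Fraction(A[k][j]) - sum(L[k][h] * R[h][j] for h in range(k))
        if R[k][k] == 0:
            raise ZeroDivisionError("a principal minor of A vanishes")
        for i in range(k + 1, n):
            L[i][k] = (Fraction(A[i][k]) - sum(L[i][h] * R[h][k] for h in range(k))) / R[k][k]
    return L, R


def lr_det_and_inverse(A):
    """det A = prod(l_ii * r_ii); column j of A^{-1} solves A x = e_j."""
    L, R = doolittle(A)  # factor once ...
    n = len(A)
    det = Fraction(1)
    for i in range(n):
        det *= L[i][i] * R[i][i]
    columns = []
    for j in range(n):  # ... then solve n systems with the same L and R
        e = [Fraction(int(i == j)) for i in range(n)]
        y = []
        for i in range(n):  # L y = e_j, formula (2.19)
            y.append(e[i] - sum(L[i][k] * y[k] for k in range(i)))
        x = [Fraction(0)] * n
        for i in range(n - 1, -1, -1):  # R x = y, formula (2.20)
            x[i] = (y[i] - sum(R[i][k] * x[k] for k in range(i + 1, n))) / R[i][i]
        columns.append(x)
    inverse = [[columns[j][i] for j in range(n)] for i in range(n)]
    return det, inverse


if __name__ == "__main__":
    det_a, inv = lr_det_and_inverse([[1, 2, -1], [2, -4, 10], [-1, -2, 5]])
    print(det_a)
    for row in inv:
        print([str(v) for v in row])
```

For the matrix of Example 7 the determinant comes out as $1 \cdot (-8) \cdot 4 = -32$, in agreement with $\Delta_3$ computed there.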

To make a comparison between the L − R factorization method and the Gauss elimination method, we first notice that both have an algorithm cost of order $\frac{2}{3}n^3$, and we have to say that, among the factorization methods for general matrices, the L − R method has the "cheapest" cost. The advantage of the L − R factorization method is that it allows us to solve more easily multiple systems of the form Ax = b, where the matrix A stays the same and only b changes (this was the case of the calculation of the inverse matrix $A^{-1}$ presented above): the factorization is computed only once, and each new b requires only two triangular solves. On the other hand, the Gauss elimination method with total pivoting is numerically very stable, while nothing guarantees the stability of the L − R factorization method unless we use partial or total pivoting for this method too.

Exercise 2. Solve the following linear system using first the L − R Doolittle factorization

method and then the L − R Croût factorization method:

x1 + 2x2 − x3 + x4 = 6

2x1 − 2x3 − x4 = 0

−3x1 − 6x2 + 3x3 + 2x4 = 2

3x1 + 6x2 − x3 − 3x4 = −8.

We discuss next a particular case of the L − R factorization method.

2.2.3 The L − R factorization method for tridiagonal matrices

Definition 6. By a tridiagonal matrix we understand a matrix that has non-zero elements only on the main diagonal, on the diagonal above the main diagonal, and on the diagonal below the main diagonal. More exactly, a matrix $A \in \mathcal{M}_{n\times n}(\mathbb{R})$ is called tridiagonal if it has the form

$$A = \begin{pmatrix} a_1 & b_1 & 0 & 0 & \cdots & 0 & 0\\ c_1 & a_2 & b_2 & 0 & \cdots & 0 & 0\\ 0 & c_2 & a_3 & b_3 & \cdots & 0 & 0\\ \vdots & \vdots & \ddots & \ddots & \ddots & \vdots & \vdots\\ 0 & 0 & 0 & \cdots & c_{n-2} & a_{n-1} & b_{n-1}\\ 0 & 0 & 0 & \cdots & 0 & c_{n-1} & a_n \end{pmatrix}.$$


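Obviously, a linear system of the form Ax = b, where A is a tridiagonal matrix, can be solved by the usual L − R factorization method described in the previous subsections. However, since a matrix of this particular form has so many zeros, we search for matrices L and R of a particular form too:

$$L = \begin{pmatrix} 1 & 0 & 0 & \cdots & 0 & 0\\ l_1 & 1 & 0 & \cdots & 0 & 0\\ 0 & l_2 & 1 & \cdots & 0 & 0\\ \vdots & \vdots & \ddots & \ddots & \vdots & \vdots\\ 0 & 0 & 0 & \cdots & 1 & 0\\ 0 & 0 & 0 & \cdots & l_{n-1} & 1 \end{pmatrix} \quad\text{and}\quad R = \begin{pmatrix} r_1 & s_1 & 0 & \cdots & 0 & 0\\ 0 & r_2 & s_2 & \cdots & 0 & 0\\ 0 & 0 & r_3 & \cdots & 0 & 0\\ \vdots & \vdots & \vdots & \ddots & \vdots & \vdots\\ 0 & 0 & 0 & \cdots & r_{n-1} & s_{n-1}\\ 0 & 0 & 0 & \cdots & 0 & r_n \end{pmatrix}.$$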

The form of the matrices makes the calculation much simpler, since we only have to find the values of the elements $r_i$, where $i \in \{1, \dots, n\}$, and $l_i, s_i$, where $i \in \{1, \dots, n-1\}$. By computing L · R and taking into consideration the fact that L · R = A, we arrive at the following formulae:

$$\begin{aligned}
r_1 &= a_1,\\
s_i &= b_i, && 1 \le i \le n-1,\\
l_i &= \frac{c_i}{r_i}, && 1 \le i \le n-1,\\
r_{i+1} &= a_{i+1} - l_i s_i, && 1 \le i \le n-1.
\end{aligned} \tag{2.22}$$

As usual, after we determine the matrices L and R, instead of the system LRx = b we can

solve:

Ly = b

Rx = y.

We solve the system Ly = b by the direct substitution method, that is, by using the formulae:

$$y_1 = b_1, \qquad y_{i+1} = b_{i+1} - l_i\, y_i, \quad 1 \le i \le n-1.$$

We solve the system Rx = y by the backward substitution method, that is, by using the formulae:

$$x_n = \frac{y_n}{r_n}, \qquad x_i = (y_i - s_i\, x_{i+1}) / r_i, \quad i \in \{n-1, n-2, \dots, 1\}.$$

Remark 15. Again, in order to make sure that the elements that play the role of pivots, $r_i$, $i \in \{1, \dots, n\}$, are non-zero, we test the principal minors of the matrix A to see whether they are non-zero before applying this method. But be careful: when interchanging two rows of a tridiagonal matrix, most probably the new matrix will no longer be tridiagonal, so we will have to rely on the L − R factorization methods for general matrices.


Remark 16. There is no point in occupying the memory of the computer with an n × n matrix when we only care about the three diagonals of the matrix A. Therefore, when we write the pseudocode of this numerical algorithm, we keep the three diagonals containing non-zero elements in three vectors:

a = (a1, a2, . . . , an),

b = (b1, b2, . . . , bn−1),

c = (c1, c2, . . . , cn−1).

The same goes for the matrices L and R.
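Following Remark 16, the whole method can be written using only vectors. The Python sketch below is an illustration with a hypothetical name, `solve_tridiagonal`; it applies formulae (2.22) and then the direct and backward substitutions above. One assumption of ours: the right-hand side is called d, so that it does not clash with the vector b of the upper diagonal.

```python
from fractions import Fraction


def solve_tridiagonal(a, b, c, d):
    """Solve A x = d for a tridiagonal A stored as three vectors.

    a: main diagonal a_1..a_n, b: diagonal above (b_1..b_{n-1}),
    c: diagonal below (c_1..c_{n-1}), d: right-hand side (renamed to avoid
    a clash with the vector b).
    """
    n = len(a)
    s = [Fraction(v) for v in b]  # s_i = b_i
    r = [Fraction(a[0])]          # r_1 = a_1
    l = []
    for i in range(n - 1):
        if r[i] == 0:
            raise ZeroDivisionError("r_i = 0: a principal minor of A vanishes")
        l.append(Fraction(c[i]) / r[i])             # l_i = c_i / r_i
        r.append(Fraction(a[i + 1]) - l[i] * s[i])  # r_{i+1} = a_{i+1} - l_i s_i
    y = [Fraction(d[0])]          # L y = d, direct substitution
    for i in range(n - 1):
        y.append(Fraction(d[i + 1]) - l[i] * y[i])
    x = [Fraction(0)] * n         # R x = y, backward substitution
    x[n - 1] = y[n - 1] / r[n - 1]
    for i in range(n - 2, -1, -1):
        x[i] = (y[i] - s[i] * x[i + 1]) / r[i]
    return x


if __name__ == "__main__":
    # The system of Example 8 below.
    x = solve_tridiagonal([-1, 4, -1, 5], [2, 1, -1], [-1, 2, 1], [-3, -5, 4, -3])
    print([str(v) for v in x])
```

The memory used is O(n) instead of O(n²), and the number of operations grows only linearly with n.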

Example 8. Solve the following linear system:

$$\begin{cases} -x_1 + 2x_2 = -3\\ -x_1 + 4x_2 + x_3 = -5\\ 2x_2 - x_3 - x_4 = 4\\ x_3 + 5x_4 = -3. \end{cases}$$

Proof. The matrix associated to this system is

$$A = \begin{pmatrix} -1 & 2 & 0 & 0\\ -1 & 4 & 1 & 0\\ 0 & 2 & -1 & -1\\ 0 & 0 & 1 & 5 \end{pmatrix}.$$

We notice that A is a tridiagonal matrix. Before starting to apply the L − R factorization method for tridiagonal matrices, we check whether all the principal minors are non-zero. If one of the principal minors proves to be 0, we will interchange the row corresponding to the order of the minor with a row below it. Be careful though: after we perform an interchange of rows, most probably the matrix that we obtain will no longer be tridiagonal, and in this case we have to apply an L − R factorization method for arbitrary matrices (Doolittle or Croût) instead of the L − R factorization method for tridiagonal matrices.

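$$\Delta_1 = -1 \neq 0; \qquad \Delta_2 = \begin{vmatrix} -1 & 2\\ -1 & 4 \end{vmatrix} = -2 \neq 0;$$

$$\Delta_3 = \begin{vmatrix} -1 & 2 & 0\\ -1 & 4 & 1\\ 0 & 2 & -1 \end{vmatrix} = 4 + 2 - 2 = 4 \neq 0;$$

$$\Delta_4 = \begin{vmatrix} -1 & 2 & 0 & 0\\ -1 & 4 & 1 & 0\\ 0 & 2 & -1 & -1\\ 0 & 0 & 1 & 5 \end{vmatrix} \overset{C_2 + 2C_1}{=} \begin{vmatrix} -1 & 0 & 0 & 0\\ -1 & 2 & 1 & 0\\ 0 & 2 & -1 & -1\\ 0 & 0 & 1 & 5 \end{vmatrix} = (-1)\cdot(-1)^{1+1} \begin{vmatrix} 2 & 1 & 0\\ 2 & -1 & -1\\ 0 & 1 & 5 \end{vmatrix} = (-1)\cdot(-18) = 18 \neq 0.$$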
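For tridiagonal matrices, the principal minors can also be checked without expanding any determinant. Expanding $\Delta_k$ along its last row gives the classical three-term recurrence $\Delta_k = a_k \Delta_{k-1} - b_{k-1} c_{k-1} \Delta_{k-2}$, with the convention $\Delta_0 = 1$ (this recurrence is standard but is not part of the course text). The Python sketch below, with the hypothetical name `tridiagonal_leading_minors`, uses the three-vector storage of Remark 16 and can serve to double-check the values above.

```python
def tridiagonal_leading_minors(a, b, c):
    """Principal minors Delta_1..Delta_n of a tridiagonal matrix.

    a: main diagonal, b: diagonal above, c: diagonal below (as in Remark 16).
    Uses Delta_k = a_k Delta_{k-1} - b_{k-1} c_{k-1} Delta_{k-2}, Delta_0 = 1.
    """
    minors = [a[0]]              # Delta_1 = a_1
    prev2, prev1 = 1, a[0]       # Delta_0 = 1 by convention, Delta_1
    for k in range(1, len(a)):
        cur = a[k] * prev1 - b[k - 1] * c[k - 1] * prev2
        minors.append(cur)
        prev2, prev1 = prev1, cur
    return minors


if __name__ == "__main__":
    # Diagonals of the matrix A of Example 8.
    print(tridiagonal_leading_minors([-1, 4, -1, 5], [2, 1, -1], [-1, 2, 1]))
```

Since $\Delta_k = r_1 r_2 \cdots r_k$ for the factorization (2.22), these minors are nonzero exactly when all the pivots $r_i$ are nonzero.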

Since all the principal minors prove to be non-zero, we proceed further with the L − R factorization method for tridiagonal matrices. So our aim is to find two matrices L and R such that

A = LR, where L and R are of the specific form

r1 s1 0 0

1 0 0 0