INFORMATION STORAGE AND MANAGEMENT (ISM) V4

Revision [1.0]

PARTICIPANT GUIDE

Dell Confidential and Proprietary

Copyright © 2019 Dell Inc. or its subsidiaries. All Rights Reserved. Dell Technologies,

Dell, EMC, Dell EMC and other trademarks are trademarks of Dell Inc. or its

subsidiaries. Other trademarks may be trademarks of their respective owners.

Information Storage and Management (ISM) v4

© Copyright 2019 Dell Inc.

Page i

Table of Contents

Course Introduction.................................................................................. 1

Information Storage and Management (ISM) v4 ...................................................... 2

Prerequisite Skills ................................................................................................................ 3

Course Agenda .................................................................................................................... 4

Introduction to Information Storage ........................................................ 5

Introduction to Information Storage ......................................................................... 6

Introduction to Information Storage ...................................................................................... 7

Assessment ....................................................................................................................... 24

Summary................................................................................................................... 25

Modern Technologies Driving Digital Transformation ......................... 26

Cloud Computing Lesson ....................................................................................... 27

Cloud Computing ............................................................................................................... 28

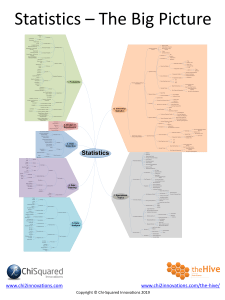

Big Data Analytics Lesson ...................................................................................... 51

Big Data Analytics .............................................................................................................. 52

Internet of Things Lesson ....................................................................................... 68

Internet of Things ............................................................................................................... 69

Machine Learning Lesson ....................................................................................... 75

Machine Learning .............................................................................................................. 76

Concepts in Practice Lesson .................................................................................. 82

Concepts in Practice .......................................................................................................... 83

Assessment ....................................................................................................................... 85

Summary................................................................................................................... 86

Information Storage and Management (ISM) v4

Page ii

© Copyright 2019 Dell Inc.

Modern Data Center Environment ......................................................... 87

Compute System Lesson ........................................................................................ 88

Compute System ............................................................................................................... 89

Compute and Desktop Virtualization Lesson ...................................................... 105

Compute and Desktop Virtualization ................................................................................ 106

Storage and Network Lesson ................................................................................ 122

Storage and Network ....................................................................................................... 123

Applications Lesson .............................................................................................. 135

Applications ..................................................................................................................... 136

Software-Defined Data Center (SDDC) Lesson ................................................... 146

Software-Defined Data Center (SDDC) ............................................................................ 147

Modern Data Center Infrastructure Lesson ......................................................... 152

Modern Data Center Infrastructure ................................................................................... 153

Concepts in Practice Lesson ................................................................................ 170

Concepts in Practice ........................................................................................................ 171

Assessment ..................................................................................................................... 177

Summary................................................................................................................. 178

Intelligent Storage Systems ................................................................. 179

Components of Intelligent Storage Systems Lesson ......................................... 180

ISMv4 Source - Intelligent Storage Systems - Components ............................................. 181

RAID Techniques Lesson ...................................................................................... 211

RAID Techniques ............................................................................................................. 212

Types of Intelligent Storage Systems Lesson ..................................................... 233

Information Storage and Management (ISM) v4

© Copyright 2019 Dell Inc.

Page iii

Types of Intelligent Storage Systems ............................................................................... 234

Assessment ..................................................................................................................... 238

Summary................................................................................................................. 239

Block-Based Storage System .............................................................. 240

Components of a Block-Based Storage System Lesson.................................... 241

Components of a Block-Based Storage System ............................................................... 242

Storage Provisioning Lesson ............................................................................... 256

Storage Provisioning ........................................................................................................ 257

Storage Tiering Lesson ......................................................................................... 269

Storage Tiering ................................................................................................................ 270

Concepts in Practice Lesson ................................................................................ 278

Concepts in Practice ........................................................................................................ 279

Assessment ..................................................................................................................... 282

Summary................................................................................................................. 283

Fibre Channel SAN ............................................................................... 284

Introduction to SAN Lesson .................................................................................. 285

Introduction to SAN .......................................................................................................... 286

FC SAN Overview Lesson ..................................................................................... 289

FC SAN Overview ............................................................................................................ 290

FC Architecture Lesson......................................................................................... 302

FC SAN Architecture........................................................................................................ 303

Topologies, Link Aggregation and Zoning Lesson ............................................. 314

Topologies, Link Aggregation and Zoning ........................................................................ 315

SAN Virtualization Lesson .................................................................................... 328

Information Storage and Management (ISM) v4

Page iv

© Copyright 2019 Dell Inc.

SAN Virtualization ............................................................................................................ 329

Concepts in Practice Lesson ................................................................................ 339

Concepts In Practice ........................................................................................................ 340

Assessment ..................................................................................................................... 343

Summary................................................................................................................. 344

IP and FCoE SAN .................................................................................. 345

Overview of TCP/IP Lesson................................................................................... 346

Overview of TCP/IP ......................................................................................................... 347

Overview of IP SAN Lesson .................................................................................. 356

Overview of IP SAN ......................................................................................................... 357

iSCSI Lesson .......................................................................................................... 363

iSCSI ............................................................................................................................... 364

FCIP Lesson ........................................................................................................... 386

FCIP ................................................................................................................................ 387

FCoE Lesson .......................................................................................................... 395

FCoE ............................................................................................................................... 396

Concepts in Practice Lesson ................................................................................ 404

Concepts In Practice ........................................................................................................ 405

Assessment ..................................................................................................................... 408

Summary................................................................................................................. 409

File-Based and Object-Based Storage System ................................... 410

NAS Components and Architecture Lesson ........................................................ 411

NAS Components and Architecture ................................................................................. 412

Information Storage and Management (ISM) v4

© Copyright 2019 Dell Inc.

Page v

File-Level Virtualization and Tiering Lesson ....................................................... 432

File-Level Virtualization and Tiering ................................................................................. 433

Object-Based and Unified Storage Lesson .......................................................... 440

Object-Based and Unified Storage Overview ................................................................... 441

Concepts in Practice Lesson ................................................................................ 462

Concepts in Practice ........................................................................................................ 463

Assessment ..................................................................................................................... 465

Summary................................................................................................................. 466

Software-Defined Storage and Networking ......................................... 467

Software-Defined Storage (SDS) Lesson ............................................................. 468

Software-Defined Storage (SDS) ..................................................................................... 469

Software-Defined Networking (SDN) Lesson ....................................................... 493

Software-Defined Networking (SDN) ................................................................................ 494

Concepts in Practice Lesson ................................................................................ 502

Concepts in Practice ........................................................................................................ 503

Assessment ..................................................................................................................... 505

Summary................................................................................................................. 506

Introduction to Business Continuity ................................................... 507

Business Continuity Overview Lesson ................................................................ 508

Business Continuity Overview .......................................................................................... 509

Business Continuity Fault Tolerance Lesson ..................................................... 529

Fault Tolerance IT Infrastructure ...................................................................................... 530

Concepts in Practice Lesson ................................................................................ 556

Concepts In Practice ........................................................................................................ 557

Information Storage and Management (ISM) v4

Page vi

© Copyright 2019 Dell Inc.

Assessment ..................................................................................................................... 560

Summary................................................................................................................. 561

Data Protection Solutions .................................................................... 562

Replication Lesson ................................................................................................ 563

Replication ....................................................................................................................... 564

Backup and Recovery Lesson .............................................................................. 594

Backup and Recovery Overview ...................................................................................... 595

Data Deduplication Lesson ................................................................................... 622

Data Deduplication........................................................................................................... 623

Data Archiving Lesson .......................................................................................... 634

ISMv4 Source - Data Protection Solutions - Data Archiving ............................................. 635

Migration Lesson ................................................................................................... 647

Migration .......................................................................................................................... 648

Concepts in Practice Lesson ................................................................................ 660

Concepts In Practice ........................................................................................................ 661

Assessment ..................................................................................................................... 672

Summary................................................................................................................. 674

Storage Infrastructure Security ........................................................... 675

Introduction to Information Security Lesson ...................................................... 676

Introduction to Information Security .................................................................................. 677

Storage Security Domains and Threats Lesson .................................................. 691

Storage Security Domains and Threats............................................................................ 692

Security Controls Lesson...................................................................................... 703

Information Storage and Management (ISM) v4

© Copyright 2019 Dell Inc.

Page vii

Security Controls.............................................................................................................. 704

Concepts in Practice Lesson ................................................................................ 730

Concepts in Practice ........................................................................................................ 731

Assessment ..................................................................................................................... 735

Summary................................................................................................................. 736

Storage Infrastructure Management.................................................... 737

Introduction to Storage Infrastructure Management Lesson ............................. 738

Introduction to Storage Infrastructure Management ......................................................... 739

Operations Management ....................................................................................... 751

Operations Management.................................................................................................. 752

Concepts in Practice Lesson ................................................................................ 790

Concepts In Practice ........................................................................................................ 791

Assessment ..................................................................................................................... 793

Summary................................................................................................................. 794

Course Conclusion ............................................................................... 795

Information Storage and Management (ISM) v4 .................................................. 796

Summary ......................................................................................................................... 797

Course Introduction

Information Storage and Management (ISM) v4

Introduction

Information Storage and Management (ISM) is a unique course that provides a

comprehensive understanding of the various storage infrastructure components in

a modern data center environment. Participants will learn the architectures,

features, and benefits of intelligent storage systems including block-based, file-based, object-based, and unified storage; software-defined storage; storage

networking technologies such as FC SAN, IP SAN, and FCoE SAN; business

continuity solutions such as backup and replication; the highly critical area of

information security; and storage infrastructure management. This course takes an

open approach to describing all the concepts and technologies, which are further

illustrated and reinforced with Dell products and real-world use cases.

This course aligns to the Associate level proven professional certification which

serves as a baseline for a number of additional product specializations.

Prerequisite Skills

The following skills are prerequisites:

To understand the content and successfully complete this course, a participant

must have a basic understanding of computer architecture, operating systems,

networking, and databases

Participants with experience in specific segments of storage infrastructure

would also be able to assimilate the course material

Course Agenda

Introductions

Introduction to Information Storage

Introduction

This module presents digital data, types of digital data, and information. This

module also focuses on data center characteristics and technologies driving digital

transformation.

Upon completing this module, you will be able to:

Describe digital data, types of digital data, and information

Describe a data center and its key characteristics

Describe the technologies driving digital transformation

Growth of the Digital Universe

The digital universe is created and defined by software

Digital data is continuously generated, collected, stored, and analyzed through software

An IDC report predicts that worldwide data creation will grow to 163 zettabytes (ZB) by 2025

Technologies driving digital transformation add to data growth

Notes

We live in a digital universe: a world that software creates and defines. A massive

amount of digital data is continuously generated, collected, stored, and analyzed

through software in the digital universe. An IDC report predicts that worldwide data

creation will grow to an enormous 163 zettabytes (ZB) by 2025.
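To put the 163 ZB projection in perspective, the minimal Python sketch below converts zettabytes to bytes and counts how many drives would be needed to hold that much data. It assumes decimal (SI) units, where 1 ZB = 10^21 bytes and 1 TB = 10^12 bytes. The function names and the 1 TB drive size are illustrative choices, not part of the course material.

```python
# Decimal (SI) unit sizes in bytes; binary units (ZiB, TiB) would differ.
ZETTABYTE = 10**21
TERABYTE = 10**12


def zettabytes_to_bytes(zettabytes: int) -> int:
    """Convert a whole number of zettabytes to bytes."""
    return zettabytes * ZETTABYTE


def drives_needed(total_bytes: int, drive_bytes: int) -> int:
    """Return how many drives of drive_bytes capacity hold total_bytes (rounded up)."""
    return -(-total_bytes // drive_bytes)  # ceiling division on integers


projected_bytes = zettabytes_to_bytes(163)
drives = drives_needed(projected_bytes, TERABYTE)
print(f"{drives:,} one-terabyte drives")  # prints "163,000,000,000 one-terabyte drives"
```

Integer arithmetic is used throughout so that values of this size stay exact rather than being rounded as floating-point numbers.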

The data in the digital universe comes from diverse sources, including both

individuals and organizations. Individuals constantly generate and consume

information through numerous activities, such as web searches, emails, uploading

and downloading content and sharing media files. In organizations, the volume and

importance of information for business operations continue to grow at astounding

rates. Technologies driving digital transformation including Internet of Things (IoT)

have significantly contributed to the growth of the digital universe.

In the past, individuals created most of the data in the world. Now IDC predicts that

organizations will create 60 percent of the world’s data through applications relying on

machine learning, automation, machine-to-machine technologies, and embedded

devices.

Why Information Storage and Management

Organizations are dependent on continuous and reliable access to information

Organizations seek to store, protect, process, manage, and use information

Organizations are increasingly implementing intelligent storage solutions:

To efficiently store and manage information

To gain competitive advantage

To derive new business opportunities

Notes

Organizations have become increasingly information-dependent in the 21st century,

and information must be available whenever and wherever it is required. It is critical

for users and applications to have continuous,

fast, reliable, and secure access to information for business operations to run as

required. Some examples of such organizations and processes include banking

and financial institutions, online retailers, airline reservations, social networks, stock

trading, scientific research, and healthcare.

Data is the lifeblood of a rapidly growing digital existence, opening up new

opportunities for businesses to gain a competitive edge. For example, an online

retailer may need to identify the preferred product types and brands of customers

by analyzing their search, browsing, and purchase patterns. This information helps

the retailer to maintain a sufficient inventory of popular products, and also advertise

relevant products to the existing and potential customers. It is essential for

organizations to store, protect, process, and manage information in an efficient and

cost-effective manner. Legal, regulatory, and contractual obligations regarding the

availability, retention, and protection of data further add to the challenges of storing

and managing information.

To meet all these requirements and more, organizations are increasingly

undertaking digital transformation initiatives to implement intelligent storage

solutions. These solutions enable efficient and optimized storage and management

of information. They also enable extraction of value from information to derive new

business opportunities, gain a competitive advantage, and create sources of

revenue.

Digital Data

Definition: Digital Data

A collection of facts that is transmitted and stored in electronic form,

and processed through software.

[Figure: digital data such as text, photos, and video, generated on laptop, desktop, tablet, and mobile devices and kept on internal or external storage]

Notes

A generic definition of data is that it is a collection of facts, typically collected for

analysis or reference. Data can exist in various forms such as facts stored in a

person's mind, photographs and drawings, a bank ledger, and tabled results of a

scientific survey. Digital data is a collection of facts that is transmitted and stored in

electronic form, and processed through software. Devices such as desktops,

laptops, tablets, mobile phones, and electronic sensors generate digital data.

Digital data is stored as strings of binary values on a storage medium. This storage

medium is either internal or external to the devices generating or accessing the

data. The storage devices may be of different types, such as magnetic, optical, or

SSD. Examples of digital data are electronic documents, text files, emails, ebooks,

digital images, digital audio, and digital video.
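The statement that digital data is stored as strings of binary values can be illustrated with a short Python sketch. It assumes UTF-8 text encoding and uses two hypothetical helper names, `to_bits` and `from_bits`. Real storage media add further layers (file systems, error correction, device-level encoding) that are not shown here.

```python
def to_bits(data: bytes) -> str:
    """Render each byte as an 8-digit binary string, separated by spaces."""
    return " ".join(f"{byte:08b}" for byte in data)


def from_bits(bits: str) -> bytes:
    """Rebuild the original bytes from the space-separated binary strings."""
    return bytes(int(chunk, 2) for chunk in bits.split())


text = "ISM"
encoded = text.encode("utf-8")   # text becomes a sequence of bytes
bits = to_bits(encoded)          # each byte shown as binary values
print(bits)                      # prints "01001001 01010011 01001101"
print(from_bits(bits).decode("utf-8"))  # prints "ISM"
```

The same round trip applies to any digital content: an image or video file is also a sequence of bytes, only interpreted by different software.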

Types of Digital Data

[Figure: the four types of digital data: unstructured, quasi-structured, semi-structured, and structured]

Unstructured data has no inherent structure and is usually stored as different types of files

Examples: text documents, PDFs, images, and videos

Quasi-structured data consists of textual data with erratic formats that can be formatted with effort and software tools

Example: clickstream data

Semi-structured data consists of textual data files with an apparent pattern, enabling analysis

Examples: spreadsheets and XML files

Structured data has a defined data model, format, and structure

Example: a database

Notes

Based on how it is stored and managed, digital data can be broadly categorized

into structured, semi-structured, quasi-structured, and unstructured.

Structured data is organized in fixed fields within a record or file. To structure

the data, you require a data model. A data model specifies the format for

organizing data, and also specifies how different data elements are related to

each other. For example, in a relational database, data is organized in rows and

columns within named tables.

Semi-structured data does not have a formal data model but has an apparent,

self-describing pattern and structure that enable its analysis. Examples of semi-structured data include spreadsheets that have a row and column structure, and

XML files that are defined by an XML schema.

Quasi-structured data consists of textual data with erratic data formats, and

can be formatted with effort, software tools, and time. An example of quasi-structured data is a “clickstream” that includes data about which webpages a

user visited and in what order – which is the result of the successive mouse

clicks the user made. A clickstream shows when a user entered a website, the

pages viewed, the time that is spent on each page, and when the user exited.

Unstructured data does not have a data model and is not organized in any

particular format. Some examples of unstructured data include text documents,

PDF files, emails, presentations, images, and videos.

More than 90 percent of the data generated in the digital universe today is

non-structured data (semi-structured, quasi-structured, and unstructured).

Although the illustration shows four different and separate types of data, in reality a

mixture of these data is typically generated.
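The four categories described above can be contrasted with a small Python sketch that uses only the standard library. The sample records, the table layout, the XML element names, and the clickstream line format are all invented for illustration. The point is only how much work each category needs before it can be queried.

```python
import re
import sqlite3
import xml.etree.ElementTree as ET


def structured_total(rows):
    """Structured: fixed fields in a relational table, queried directly with SQL."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (product TEXT, units INTEGER)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    (total,) = conn.execute("SELECT SUM(units) FROM sales").fetchone()
    conn.close()
    return total


def semi_structured_products(xml_text):
    """Semi-structured: self-describing tags reveal the pattern without a table."""
    root = ET.fromstring(xml_text)
    return [sale.get("product") for sale in root.findall("sale")]


# Quasi-structured: erratic text that needs effort (here, a regular expression)
# before the visit time and page can be extracted.
CLICK = re.compile(r"(?P<time>\d{2}:\d{2}:\d{2}).*?GET\s+(?P<page>/\S*)")


def quasi_structured_pages(log_lines):
    """Return (time, page) pairs for the lines that match; skip the rest."""
    pages = []
    for line in log_lines:
        match = CLICK.search(line)
        if match:
            pages.append((match.group("time"), match.group("page")))
    return pages


def unstructured_word_count(text):
    """Unstructured: no data model, so only generic operations apply."""
    return len(text.split())


print(structured_total([("disk", 3), ("tape", 2)]))
print(semi_structured_products(
    '<sales><sale product="disk" units="3"/><sale product="tape" units="2"/></sales>'
))
print(quasi_structured_pages([
    "10:01:07 user=42 GET /home ref=-",
    "click @10:01:30 -> GET /products?id=7 (mobile)",
    "garbled entry",
]))
print(unstructured_word_count("Customer called about a late delivery."))
```

In practice a single application often produces a mixture of these categories, for example database rows alongside web logs and free-text notes.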

What is Information?

Definition: Information

Processed data that is presented in a specific context to enable useful

interpretation and decision-making.

Example: Annual sales data processed into a sales report

Enables calculation of the average sales for a product and the comparison

of actual sales to projected sales

Emerging architectures and technologies enable extracting information from

non-structured data

Notes

The terms “data” and “information” are closely related and are often used

interchangeably. However, it is important to understand the difference

between the two. Data, by itself, is simply a collection of facts that requires

processing for it to be useful. For example, the annual sales figures of an organization

are data. When data is processed and presented in a specific context, it can be interpreted in a

useful manner. This processed and organized data is called information.

For example, when you process the annual sales data into a sales report, it

provides useful information, such as the average sales for a product (indicating

product demand and popularity), and a comparison of the actual sales to the

projected sales.
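The sales report example can be sketched in a few lines of Python. The quarterly unit figures and the projected total are made-up numbers, and `sales_report` is a hypothetical helper name. The sketch only shows how raw figures (data) become an average and an actual-versus-projected comparison (information).

```python
from statistics import mean


def sales_report(period_sales, projected_total):
    """Turn raw sales figures into summary values that support a decision."""
    actual_total = sum(period_sales)
    return {
        "average_sales": mean(period_sales),
        "actual_total": actual_total,
        "projected_total": projected_total,
        # Positive means sales beat the projection; negative means a shortfall.
        "difference": actual_total - projected_total,
    }


quarterly_units = [120, 150, 90, 140]   # assumed raw data for one product
report = sales_report(quarterly_units, projected_total=450)
for label, value in report.items():
    print(f"{label}: {value}")
```

With these assumed figures the product sold 500 units against a projection of 450, a difference of 50 units that the raw list of numbers does not make obvious on its own.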

Information thus creates knowledge and enables decision-making. Processing and

analyzing data is vital to any organization. It enables organizations to derive value

from data, and create intelligence to enable decision-making and organizational

effectiveness. It is easier to process structured data due to its organized form. On

the other hand, processing non-structured data and extracting information from it

using traditional applications is difficult, time-consuming, and requires considerable

resources. Emerging architectures, technologies, and techniques enable storing,

managing, analyzing, and deriving value from unstructured data coming from

numerous sources.


Information Storage

Information is stored on storage devices on non-volatile media

Magnetic storage devices: Hard disk drive and magnetic tape drive

Optical storage devices: Blu-ray, DVD, and CD

Flash-based storage devices: Solid-state drive (SSD), memory card, and

USB thumb drive

Storage devices are assembled within a storage system or “array”

Provides high capacity, scalability, performance, reliability, and security

Storage systems along with other IT infrastructure are housed in a data center

Notes

In a computing environment, storage devices (or storage) are devices consisting of

nonvolatile recording media on which digital data or information can be persistently

stored. Storage may be internal or external to a compute system. Based on the

nature of the storage media used, storage devices are classified as: magnetic

storage devices, optical storage devices, or flash-based storage devices.

Storage is a core component in an organization’s IT infrastructure. Various factors

such as the media, architecture, capacity, addressing, reliability, and performance

influence the choice and use of storage devices in an enterprise environment. For

example, disk drives and SSDs are used for storing business-critical information

that needs to be continuously accessible to applications. Magnetic tapes and

optical storage are typically used for backing up and archiving data.

In enterprise environments, information is typically stored on storage

systems/storage arrays. A storage system is a hardware component that contains a

group of homogeneous/heterogeneous storage devices that are assembled within

a cabinet. These enterprise-class storage systems are designed for high capacity,

scalability, performance, reliability, and security to meet business requirements.

Storage systems provide storage capacity to the compute systems that run business applications. Storage systems are covered in the module ‘Intelligent Storage Systems (ISS)’. Organizations typically house their IT infrastructure, including compute systems, storage systems, and network equipment, within a data center.


Data Center

A data center typically comprises:

Facility: The building and floor space where

the data center is constructed

IT equipment: Compute system, storage, and

connectivity elements

Support infrastructure: Power supply, fire

detection, HVAC, and security systems

Organizations are moving towards a modern data

center to overcome business and IT

challenges

Helps them to be successful in their digital

transformation journey

Notes

A data center is a dedicated facility where an organization houses, operates, and

maintains its IT infrastructure along with other supporting infrastructures. It

centralizes an organization’s IT equipment and data-processing operations. A data

center may be constructed in-house and located in an organization’s own facility.

The data center may also be outsourced, with equipment being at a third-party site.

A data center typically consists of the following:

Facility: It is the building and floor space where organizations construct the data

center. It typically has a raised floor with ducts underneath holding power and

network cables.

IT equipment: It includes components such as compute systems, storage, and

connectivity elements along with cabinets for housing the IT equipment.

Support infrastructure: It includes power supply, fire, heating, ventilation, and air

conditioning (HVAC) systems. It also includes security systems such as

biometrics, badge readers, and video surveillance systems.

Digital transformation is disrupting every industry, and as modern technologies evolve, organizations face numerous business challenges.


Organizations must operate in real time, develop smarter products, and deliver a

great user experience. They must be agile, operate efficiently, and make decisions

quickly to be successful. However, these disruptive technologies and agile methodologies are less resilient when run on traditional IT infrastructure and services. An organization’s IT department also faces several challenges in supporting the business. So, organizations are moving towards a modern data center to overcome these challenges and be successful in their digital transformation journey.


Key Characteristics of a Data Center

Data centers are designed and built to fulfill the key characteristics as shown in the

figure. Although the characteristics are applicable to almost all data center

components, the details here primarily focus on storage systems.

Availability

Data Integrity

Security

Manageability

Performance

Capacity

Scalability

Notes

Data center characteristics are:

Availability: Availability of information as and when required should be

ensured. Unavailability of information can severely affect business operations,

lead to substantial financial losses, and damage the reputation of an

organization.

Security: Policies and procedures should be established, and control measures

should be implemented to prevent unauthorized access to and alteration of

information.


Capacity: Data center operations require adequate resources to efficiently store

and process large and increasing amounts of data. When capacity requirements

increase, additional capacity should be provided either without interrupting the

availability or with minimal disruption. Capacity may be managed by adding new

resources or by reallocating existing resources.

Scalability: Organizations may need to deploy additional resources such as

compute systems, new applications, and databases to meet the growing

requirements. Data center resources should scale to meet the changing

requirements, without interrupting business operations.

Performance: Data center components should provide optimal performance

based on the required service levels.

Data integrity: Data integrity refers to mechanisms, such as error correction

codes or parity bits, which ensure that data is stored and retrieved exactly as it

was received.

Manageability: A data center should provide easy, flexible, and integrated

management of all its components. Efficient manageability can be achieved

through automation for reducing manual intervention in common, repeatable

tasks.
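The parity bit mentioned under data integrity can be illustrated with a minimal sketch. It assumes even parity computed over a whole block of bytes; the function names are hypothetical. A single parity bit only detects an odd number of flipped bits and cannot correct them, which is why real storage systems typically rely on stronger error correction codes.

```python
def even_parity_bit(data: bytes) -> int:
    """Return the bit (0 or 1) that makes the total count of 1 bits even."""
    ones = sum(bin(byte).count("1") for byte in data)
    return ones % 2


def verify(data: bytes, stored_parity: int) -> bool:
    """Check that the data read back still matches the parity stored with it."""
    return even_parity_bit(data) == stored_parity


# Write path: compute the parity bit and keep it alongside the block.
block = b"ISM"
parity = even_parity_bit(block)

# Simulate a single-bit error in the first byte while the block is at rest.
corrupted = bytes([block[0] ^ 0b00000100]) + block[1:]

print(verify(block, parity))      # intact block passes the check
print(verify(corrupted, parity))  # single-bit error is detected
```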


Digital Transformation

Digital transformation puts technology at the heart of an organization’s products,

services, and operations.

Notes

Digital transformation is imperative for all businesses. Businesses of all shapes and

sizes are changing to a more digital mindset. This digital mindset is being driven by

the need to innovate more quickly. Digital transformation puts technology at the

heart of an organization’s products, services, and operations.

In general terms, digital transformation is defined as the integration of digital

technology into all areas of a business. This results in fundamental changes to how

businesses operate and how they deliver value to customers, improve efficiency,

reduce business risks, and uncover new opportunities.

With people, customers, businesses, and things communicating, transacting, and

negotiating with each other, a new world comes into being. It is the world of the

digital business that uses data as a way to create value. According to Gartner, by

2020, more than seven billion people and businesses, and at least 30 billion

devices, will be connected to the Internet. Organizations need to accelerate their

digital transformation journeys to avoid being left behind in an increasingly digital

world.


Key Technologies Driving Digital Transformation

In this digital world, organizations need to develop new applications using agile processes and new tools to ensure rapid time-to-market. At the same time, organizations still expect IT to operate and manage the traditional applications that generate considerable revenue.

To survive, the organization has to transform and adopt modern technologies to

support the digital transformation. Some of the key technologies that drive digital

transformation are listed in the figure.

Cloud

Big Data Analytics

Internet of Things

Machine Learning


Assessment

1. Which data asset is an example of unstructured data?

A. XML data file

B. News article text

C. Database table

D. Webserver log

2. Why are businesses undergoing the digital transformation?

A. To innovate more quickly

B. To avoid security risks

C. To avoid compliance penalty

D. To eliminate management costs


Summary


Modern Technologies Driving Digital Transformation

Introduction

This module presents an overview of the modern technologies that are driving digital transformation in today’s world. The technologies covered in this module include cloud computing, big data analytics, Internet of Things (IoT), and machine learning.

Upon completing this module, you will be able to:

Describe cloud computing

Describe Big Data analytics

Describe Internet of Things

Describe machine learning


Cloud Computing Lesson

Introduction

This lesson presents an overview of cloud computing along with its essential characteristics, various cloud deployment and service models, and use cases.

This lesson covers the following topics:

Cloud computing and its essential characteristics

Cloud service models

Cloud deployment models

Use cases of cloud computing


Cloud Computing

Cloud Computing: An Overview

Definition: Cloud Computing

A model for enabling convenient, on-demand network access to a

shared pool of configurable computing resources (for example,

networks, servers, storage, applications, and services) that can be

rapidly provisioned and released with minimal management effort or

service provider interaction.

Source: The National Institute of Standards and Technology (NIST)—

a part of the U.S. Department of Commerce—in its Special

Publication 800-145

Figure: Cloud infrastructure (applications, platform software, VMs running on a hypervisor, compute, network, and storage) accessed over LAN/WAN from desktop, laptop, tablet, and mobile clients


Notes

The term “cloud” originates from the cloud-like bubble that is commonly used in

technical architecture diagrams to represent a system. This system may be the

Internet, a network, or a compute cluster. In cloud computing, a cloud is a collection

of IT resources, including hardware and software resources. You can deploy these

resources either in a single data center, or across multiple geographically

dispersed data centers that are connected over a network.

A cloud service provider is responsible for building, operating, and managing cloud

infrastructure. The cloud computing model enables consumers to hire IT resources

as a service from a provider. A cloud service is a combination of hardware and

software resources that are offered for consumption by a provider. The cloud

infrastructure contains IT resource pools, from which you can provision resources

to consumers as services over a network, such as the Internet or an intranet.

Resources are returned to the pool when the consumer releases them.

Example: The cloud model is similar to utility services such as electricity, water,

and telephone. When consumers use these utilities, they are typically unaware of

how the utilities are generated or distributed. The consumers periodically pay for

the utilities based on usage. Similarly, in cloud computing, the cloud is an

abstraction of an IT infrastructure. Consumers hire IT resources as services from

the cloud without the risks and costs that are associated with owning the resources.

Cloud services are accessed from different types of client devices over wired and

wireless network connections. Consumers pay only for the services that they use,

either based on a subscription or based on resource consumption.
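The two payment options, subscription and resource consumption, can be compared with a small sketch. The rates below are invented for illustration and are expressed in integer cents to avoid rounding issues; they do not reflect any real provider's pricing.

```python
# Hypothetical prices, in cents (assumptions for this sketch only).
RATE_PER_VM_HOUR_CENTS = 12
MONTHLY_SUBSCRIPTION_CENTS = 6000


def pay_per_use_cost(hours_used: int) -> int:
    """Cost when the consumer is billed only for the hours actually consumed."""
    return hours_used * RATE_PER_VM_HOUR_CENTS


def cheaper_plan(hours_used: int) -> str:
    """Pick the lower-cost billing option for a given monthly usage."""
    if pay_per_use_cost(hours_used) < MONTHLY_SUBSCRIPTION_CENTS:
        return "pay-per-use"
    return "subscription"


print(cheaper_plan(200))  # light usage: 2400 cents on demand vs 6000 flat
print(cheaper_plan(720))  # a VM running all month: 8640 cents on demand
```

In this sketch, occasional workloads favor consumption-based billing, while a resource that runs continuously is cheaper on the flat subscription.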


Essential Cloud Characteristics

In SP 800-145, NIST specifies that a cloud infrastructure should have the five

essential characteristics.

Figure: Cloud Characteristics (Measured Service, Resource Pooling, Rapid Elasticity, On-demand Self-service, Broad Network Access)

Notes

The five characteristics are:

Measured Service: “Cloud systems automatically control and optimize

resource use by leveraging a metering capability at some level of abstraction

appropriate to the type of service (for example, storage, processing, bandwidth,

and active user accounts). Resource usage can be monitored, controlled, and

reported, providing transparency for both the provider and consumer of the

utilized service.” – NIST

Resource Pooling: “The provider’s computing resources are pooled to serve

multiple consumers using a multitenant model, with different physical and virtual

resources that are dynamically assigned and reassigned according to consumer

demand. There is a sense of location independence in that the customer

generally has no control or knowledge over the exact location of the provided


resources but may be able to specify location at a higher level of abstraction (for

example, country, state, or datacenter). Examples of resources include storage,

processing, memory, and network bandwidth.” – NIST

Rapid Elasticity: “Capabilities can be rapidly and elastically provisioned, in

some cases automatically, to scale rapidly outward and inward commensurate

with demand. To the consumer, the capabilities available for provisioning often

appear to be unlimited and can be appropriated in any quantity at any time.” –

NIST

On-demand Self-service: “A consumer can unilaterally provision computing

capabilities, such as server time or networked storage, as needed automatically

without requiring human interaction with each service provider.” – NIST

Broad Network Access: “Capabilities are available over the network and

accessed through standard mechanisms that promote use by heterogeneous

thin or thick client platforms (for example, mobile phones, tablets, laptops, and

workstations).” – NIST
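As a rough illustration of rapid elasticity, the sketch below scales a pool of instances outward and inward in proportion to demand. The capacity per instance and the minimum and maximum pool sizes are assumptions chosen for the example; an actual cloud platform would apply its own scaling policies.

```python
import math


def desired_instances(requests_per_second: int,
                      capacity_per_instance: int = 100,
                      minimum: int = 1,
                      maximum: int = 20) -> int:
    """Return how many instances are needed for the current demand.

    The result grows when demand rises (scale out) and shrinks when demand
    falls (scale in), bounded by the hypothetical pool limits.
    """
    needed = math.ceil(requests_per_second / capacity_per_instance)
    return max(minimum, min(maximum, needed))


# Demand samples over a day: quiet period, peak, then quiet again.
for demand in (40, 950, 5000, 300):
    print(demand, "req/s ->", desired_instances(demand), "instance(s)")
```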


Cloud Service Models

Figure: Cloud Service Models (Infrastructure as a Service, Platform as a Service, Software as a Service)

A cloud service model specifies the services and the capabilities that are

provided to consumers

In SP 800-145, NIST classifies cloud service offerings into the three primary

models:

Infrastructure as a Service (IaaS)

Platform as a Service (PaaS)

Software as a Service (SaaS)

Notes

Cloud administrators or architects assess and identify potential cloud service

offerings. The assessment includes evaluating what services to create and

upgrade, and the necessary feature set for each service. It also includes the

service level objectives (SLOs) of each service aligning to consumer needs and

market conditions. SLOs are specific measurable characteristics such as

availability, throughput, frequency, and response time. They provide a

measurement of performance of the service provider. SLOs are key elements of a


service level agreement (SLA). An SLA is a legal document that describes items such as what service level will be provided, how it will be supported, the service location, and the responsibilities of the consumer and the provider.
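Because SLOs are measurable, a check against one can be expressed directly. The sketch below assumes an availability SLO of 99.9 percent measured over a 30-day month; both the target and the downtime figures are hypothetical values chosen for illustration.

```python
MINUTES_IN_30_DAYS = 30 * 24 * 60  # 43,200 minutes


def availability_percent(total_minutes: int, downtime_minutes: int) -> float:
    """Measured availability as a percentage of the reporting period."""
    return 100.0 * (total_minutes - downtime_minutes) / total_minutes


def slo_met(measured_percent: float, target_percent: float) -> bool:
    """An availability SLO is met when the measured value reaches the target."""
    return measured_percent >= target_percent


TARGET = 99.9  # hypothetical availability SLO

for downtime in (20, 60):
    measured = availability_percent(MINUTES_IN_30_DAYS, downtime)
    print(f"{downtime} min down: {measured:.3f}% -> met: {slo_met(measured, TARGET)}")
```

With these numbers, a 99.9 percent target allows roughly 43 minutes of downtime in the month, so 20 minutes meets the SLO and 60 minutes does not.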

Many alternate cloud service models based on IaaS, PaaS, and SaaS are defined

in various publications and by different industry groups. These service models are

specific to the cloud services and capabilities that are provided. Examples of such

service models include Network as a Service (NaaS), Database as a Service

(DBaaS), Big Data as a Service (BDaaS), Security as a Service (SECaaS), and

Disaster Recovery as a Service (DRaaS). However, each of these models ultimately falls under one of the three primary cloud service models.


Infrastructure as a Service (IaaS)

Definition: Infrastructure as a Service

“The capability provided to the consumer is to provision processing,

storage, networks, and other fundamental computing resources where

the consumer is able to deploy and run arbitrary software, which can

include operating systems and applications. The consumer does not

manage or control the underlying cloud infrastructure but has control

over operating systems, storage, and deployed applications; and

possibly limited control of select networking components (for example,

host firewalls).” – NIST

IaaS pricing may be subscription-based or based on resource usage

Provider pools the underlying IT resources and multiple consumers share

these resources through a multitenant model

Organizations can even implement IaaS internally, where internal IT manages

the resources and services

Examples:

- Amazon EC2, S3

- Virtustream

- Google Compute Engine

Figure: IaaS stack (Application, Database, Programming Framework, Operating System, and Cloud Infrastructure comprising Compute, Storage, and Network), divided into consumer's resources and provider's resources


Platform as a Service (PaaS)

Definition: Platform as a Service

In the PaaS model, a cloud service includes compute, storage, and network

resources along with platform software

Platform software includes software such as:

Operating system, database, programming frameworks, middleware

Tools to develop, test, deploy, and manage applications

Most PaaS offerings support multiple operating systems and programming

frameworks for application development and deployment

PaaS usage fees are typically calculated based on the following factors:

Number of consumers

Types of consumers (developer, tester, and so on)

The time for which the platform is in use

The compute, storage, or network resources that the platform consumes
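The factors listed above can be combined into a simple fee calculation. The sketch below is only illustrative: the per-seat rates, hourly platform rate, and storage rate are invented values (in integer cents), and real PaaS providers publish their own pricing models.

```python
# Hypothetical monthly rates in cents (assumptions for this sketch only).
SEAT_RATE_CENTS = {"developer": 5000, "tester": 3000}  # by type of consumer
PLATFORM_HOUR_RATE_CENTS = 10                          # time the platform is in use
STORAGE_GB_RATE_CENTS = 2                              # resources the platform consumes


def paas_monthly_fee_cents(seats: dict, platform_hours: int, storage_gb: int) -> int:
    """Combine consumer count and type, usage time, and resource consumption."""
    seat_fee = sum(SEAT_RATE_CENTS[role] * count for role, count in seats.items())
    usage_fee = platform_hours * PLATFORM_HOUR_RATE_CENTS
    resource_fee = storage_gb * STORAGE_GB_RATE_CENTS
    return seat_fee + usage_fee + resource_fee


fee = paas_monthly_fee_cents({"developer": 3, "tester": 2},
                             platform_hours=400, storage_gb=250)
print(f"Monthly fee: {fee / 100:.2f}")
```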


Figure: PaaS stack (Application, Database, Programming Framework, Operating System, and Cloud Infrastructure comprising Compute, Storage, and Network), divided into consumer's resources and provider's resources

Examples:

- Pivotal Cloud Foundry

- Google App Engine

- AWS Elastic Beanstalk

- Microsoft Azure


Software as a Service (SaaS)

Definition: Software as a Service

“The capability provided to the consumer is to use the provider’s

applications running on a cloud infrastructure. The applications are

accessible from various client devices through either a thin client

interface, such as a web browser (for example, web-based email), or

a program interface. The consumer does not manage or control the

underlying cloud infrastructure including network, servers, operating

systems, storage, or even individual application capabilities, except

limited user-specific application configuration settings.” – NIST

In the SaaS model, a provider offers a cloud-hosted application to multiple

consumers as a service

The consumers do not own or manage any aspect of the cloud infrastructure

Some SaaS applications may require installing a client interface locally on an

end-point device

Examples of applications that are delivered through SaaS:

Customer Relationship Management (CRM)

Enterprise Resource Planning (ERP)

Email and Office Suites


Figure: SaaS stack (Application, Database, Programming Framework, Operating System, and Cloud Infrastructure comprising Compute, Storage, and Network), shown as provider's resources

Examples:

- Salesforce

- Google Apps

- Microsoft Office 365

- Oracle


Cloud Deployment Models

A cloud deployment model provides a basis for how cloud infrastructure is built,

managed, and accessed

In SP 800-145, NIST specifies the four primary cloud deployment models

listed in the figure

Each cloud deployment model may be used for any of the cloud service models:

IaaS, PaaS, and SaaS

The different deployment models present several tradeoffs in terms of control,

scale, cost, and availability of resources

Figure: Cloud Deployment Models (Public Cloud, Private Cloud, Hybrid Cloud, Community Cloud)


Public Cloud

Definition: Public Cloud

“The cloud infrastructure is provisioned for open use by the general

public. It may be owned, managed, and operated by a business,

academic, or government organization, or some combination of them.

It exists on the premises of the cloud provider.” – NIST

Public cloud services may be free, subscription-based, or provided on a pay-per-use model

A public cloud provides the benefits of low upfront expenditure on IT

resources and enormous scalability

Some concerns for the consumers include:

Network availability

Risks associated with multitenancy

Visibility

Control over the cloud resources and data

Restrictive default service levels.

Figure: Public cloud, in which Enterprise P, Enterprise Q, and Individual R use the resources of the cloud provider (applications and VMs running on a hypervisor)


Private Cloud

Definition: Private Cloud

“The cloud infrastructure is provisioned for exclusive use by a single

organization comprising multiple consumers (for example, business

units). It may be owned, managed, and operated by the organization,

a third party, or some combination of them, and it may exist on or off

premises.” – NIST

Many organizations may not want to adopt public clouds due to concerns

related to privacy, external threats, and lack of control over the IT resources and

data

When compared to a public cloud, a private cloud offers organizations a

greater degree of privacy and control over the cloud infrastructure,

applications, and data

There are two variants of private cloud: on-premise and externally hosted

An organization deploys on-premise private cloud in its data center within its

own premises

Figure: 1. On-premise Private Cloud (resources of Enterprise P); 2. Externally Hosted Private Cloud (resources of the cloud provider dedicated for Enterprise P)


Notes

In the externally hosted private cloud (or off-premise private cloud) model:

An organization outsources the implementation of the private cloud to an

external cloud service provider

The cloud infrastructure is hosted on the premises of the provider and may be

shared by multiple tenants

However, the organization’s private cloud resources are securely separated

from other cloud tenants by access policies implemented by the provider


Community Cloud

Definition: Community Cloud

“The cloud infrastructure is provisioned for exclusive use by a specific

community of consumers from organizations that have shared

concerns (for example, mission, security requirements, policy, and

compliance considerations). It may be owned, managed, and

operated by one or more of the organizations in the community, a

third party, or some combination of them, and it may exist on or off

premises.” – NIST

The organizations participating in the community cloud typically share the cost

of deploying the cloud and offering cloud services

This enables them to lower their individual investments

Because the costs are shared by fewer consumers than in a public cloud, this

option may be more expensive

However, a community cloud may offer a higher level of control and protection

than a public cloud

There are two variants of a community cloud: on-premise and externally hosted

Figure: Community cloud, in which community users from Enterprise P, Enterprise Q, and Enterprise R use resources of the cloud provider that are dedicated for the community


Hybrid Cloud

Definition: Hybrid Cloud

“The cloud infrastructure is a composition of two or more distinct cloud

infrastructures (private, community, or public) that remain unique

entities, but are bound by standardized or proprietary technology that

enables data and application portability (for example, cloud bursting

for load balancing between clouds.)” – NIST

Figure: Hybrid cloud (a public cloud and a private cloud, with Enterprise P, Enterprise Q, and Individual R as consumers)


Evolution of Hybrid Cloud: Multicloud

To create the best possible solution for their businesses, today organizations

want to choose different public cloud service providers

To achieve this goal, some organizations have started adopting a multicloud

approach

Figure: Multicloud (a private cloud and multiple public clouds)

Notes

The drivers for adopting this approach include avoiding vendor lock-in, data control, cost savings, and performance optimization. This approach helps to meet business demands because sometimes no single cloud model can suit the varied requirements and workloads across an organization. Some application workloads run better on one cloud platform, while other workloads achieve higher performance and lower cost on another platform.

Also, certain compliance, regulation, and governance policies require an

organization’s data to reside in particular locations. A multicloud strategy can help

organizations meet those requirements because different cloud models from


various cloud service providers can be selected. Each cloud vendor offers different

service options at different prices.

Organizations can also analyze the performance of their various application

workloads and compare them to what is available from other vendors. This method

helps to analyze both workload performance and cost for various services in each

cloud. Options can then be identified that meet the workload performance and cost

requirements of the organization.
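The comparison described above, weighing workload performance against cost across providers, can be sketched as a simple filter-and-select step. The provider names, latency figures, and prices are entirely hypothetical, and latency stands in here for whatever performance measure an organization actually uses.

```python
# Hypothetical measurements for one workload on three providers.
offers = [
    {"provider": "Cloud A", "latency_ms": 40, "monthly_cost": 900},
    {"provider": "Cloud B", "latency_ms": 25, "monthly_cost": 1200},
    {"provider": "Cloud C", "latency_ms": 70, "monthly_cost": 600},
]


def select_offer(candidates, max_latency_ms, max_monthly_cost):
    """Return the cheapest offer meeting both requirements, or None."""
    eligible = [
        offer for offer in candidates
        if offer["latency_ms"] <= max_latency_ms
        and offer["monthly_cost"] <= max_monthly_cost
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda offer: offer["monthly_cost"])


# A latency-tolerant workload and a latency-sensitive one land on different clouds.
print(select_offer(offers, max_latency_ms=50, max_monthly_cost=1500))
print(select_offer(offers, max_latency_ms=30, max_monthly_cost=1500))
```

Running the selection per workload is one way to see why different workloads may end up on different platforms in a multicloud approach.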


Cloud Computing Use Cases

Use Case: Description

Cloud bursting: Provisioning resources for a limited time from a public cloud to handle peak workloads

Web application hosting: Hosting less critical applications on the public cloud

Migrating packaged applications: Migrating standard packaged applications such as e-mail to the public cloud

Application development and testing: Developing and testing applications in the public cloud before launching them

Big Data Analytics: Using cloud to analyze the voluminous data to gain insights and for deriving business value

Disaster Recovery: Adopting cloud for a DR solution can provide cost benefit, scalability, and faster recovery of data

Internet of Things: IoT in cloud provides infrastructure for enhancing the network connectivity, storage space, and tools for data analysis


Big Data Analytics Lesson

Introduction

This lesson presents an overview of Big Data along with its characteristics, data repositories, components of a Big Data analytics solution, and use cases.

This lesson covers the following topics:

Big Data and its key characteristics

Data repositories

Components of Big Data analytics solution

Use cases of Big Data


Big Data Analytics

Big Data: An Overview

Definition: Big Data

Information assets whose high volume, high velocity, and high variety

require the use of new technical architectures and analytical methods

to gain insights and for deriving business value.

Figure: Big Data (Characteristics of Data, Data Processing Needs, Business Value)


Many organizations such as government departments, retail,

telecommunications, healthcare, social networks, banks, and insurance

companies employ data science techniques to benefit from Big Data analytics.

The definition of Big Data has three principal aspects, which are:


Characteristics of Data

Big Data includes data sets of considerable sizes containing both structured and

non-structured digital data. Apart from its size, the data gets generated and

changes rapidly, and also comes from diverse sources. These and other

characteristics are covered next.

Data Processing Needs

Big Data also exceeds the storage and processing capability of conventional IT

infrastructure and software systems. It not only needs a highly-scalable architecture

for efficient storage, but also requires new and innovative technologies and

methods for processing.

These technologies typically make use of platforms such as distributed processing,

massively-parallel processing, and machine learning. The emerging discipline of

Data Science represents the synthesis of several existing disciplines, such as

statistics, mathematics, data visualization, and computer science for Big Data

analytics.

Business Value

Big Data analytics has tremendous business importance to organizations.

Searching, aggregating, and cross-referencing large data sets in real time or near-real time yields valuable insights from the data, which in turn enables better data-driven decision making.


Characteristics of Big Data

Apart from the characteristics of volume, velocity, and variety, popularly known as

“the 3Vs”, three other characteristics of Big Data are variability, veracity,

and value.

Volume
• Massive volumes of data
• Challenges in storage and analysis

Velocity
• Rapidly changing data
• Challenges in real-time analysis

Variety
• Diverse data from numerous sources
• Challenges in integration and analysis

Variability
• Constantly changing meaning of data
• Challenges in gathering and interpretation

Veracity
• Varying quality and reliability of data
• Challenges in transforming and trusting data

Value
• Cost-effectiveness and business value

Notes

Volume: The word “Big” in Big Data refers to the massive volumes of data.

Organizations are witnessing an ever-increasing growth in data of all types.

These types include transaction-based data that is stored over the years,

sensor data, and unstructured data streaming in from social media. The volume

of data has already reached petabyte and exabyte scales, and it is still growing

every day. The excessive volume not only requires substantial cost-effective

storage, but also raises challenges in data analysis.

Velocity: Velocity refers to the rate at which data is produced and changes, and

also how fast the data must be processed to meet business requirements.

Today, data is generated at an exceptional speed, and real-time or near-real

time analysis of the data is a challenge for many organizations. It is essential to

process and analyze the data, and to deliver the results in a timely manner. An

example of such a requirement is real-time face recognition for screening

passengers at airports.

Variety: Variety refers to the diversity in the formats and types of data. There

are numerous sources that generate data in various structured and unstructured

forms. Organizations face the challenge of managing, merging, and analyzing


the different varieties of data in a cost-effective manner. The combination of

data from a variety of data sources and in a variety of formats is a key

requirement in Big Data analytics. An example of such a requirement could be

an autonomous vehicle dealing with various data formats and sources to

operate safely.

Variability: Variability refers to the constantly changing meaning of data. It

highlights the importance of deriving the right information in all possible

contexts. For example, analysis of natural language search and social media

posts requires interpretation of complex and highly variable grammar. The

inconsistency in the meaning of data creates challenges in

gathering the data and interpreting its context.

Veracity: Veracity refers to the reliability and verifiability of the data. The quality

of the data being gathered can differ greatly, and the accuracy of analysis

depends on the veracity of the source data. Establishing trust in Big Data

presents a major challenge because, as the variety and number of sources

grow, the likelihood of noise and errors in the data increases. Therefore,

significant effort may go into cleaning data to remove noise and errors, and to

produce accurate datasets before analysis can begin. For example, a retail

organization may have gathered customer behavior data from across systems

to analyze product purchase patterns and to predict purchase intent. The

organization would have to clean and transform the data to make it consistent

and reliable.

Value: Value refers to the cost-effectiveness of the Big Data analytics

technology that is used and the business value that is derived from it. Many

large, enterprise-scale organizations have maintained large data repositories,

such as data warehouses, managed unstructured data, and carried out real-time data analytics for many years. With hardware and software becoming more

affordable and the emergence of more providers, Big Data analytics

technologies are now available to a broader market. Organizations are also

gaining the benefits of business process enhancements, increased revenues,

and better decision making.
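
The cleaning and transformation step described under Veracity above can be sketched in Python. This is only an illustrative sketch: the record fields (`customer_id`, `product`, `amount`), the sample values, and the function names are assumptions made for the example and are not part of the course material. It shows two common steps, normalizing inconsistent formats and dropping records that are noisy or duplicated.

```python
from typing import Optional

# Hypothetical raw records gathered from different systems (illustrative only).
RAW_RECORDS = [
    {"customer_id": " C001 ", "product": "Laptop", "amount": "1200.50"},
    {"customer_id": "c001", "product": "laptop ", "amount": "1200.50"},  # duplicate
    {"customer_id": "C002", "product": "Phone", "amount": "n/a"},        # noisy value
    {"customer_id": "", "product": "Tablet", "amount": "300"},           # missing key
    {"customer_id": "C003", "product": "Tablet", "amount": "300"},
]


def clean_record(record: dict) -> Optional[dict]:
    """Normalize one record; return None if it cannot be made reliable."""
    customer_id = record.get("customer_id", "").strip().upper()
    product = record.get("product", "").strip().lower()
    if not customer_id or not product:
        return None
    try:
        amount = float(record.get("amount", ""))
    except ValueError:
        return None
    return {"customer_id": customer_id, "product": product, "amount": amount}


def clean_dataset(records: list) -> list:
    """Drop unusable records and duplicates that appear after normalization."""
    seen = set()
    cleaned = []
    for record in records:
        normalized = clean_record(record)
        if normalized is None:
            continue
        key = (normalized["customer_id"], normalized["product"], normalized["amount"])
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


CLEANED = clean_dataset(RAW_RECORDS)
```

Of the five raw records, only two survive cleaning in this sketch, which illustrates why significant effort may go into preparing data before analysis can begin.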


Data Repositories

Data Warehouse
• Central repository of data gathered from different sources
• Stores current and historical data in a structured format
• Designed for query and analysis

Data Lake
• Collection of structured and unstructured data assets
• Uses 'store everything' approach to big data
• Presents unrefined view of data

Notes

Data for analytics typically comes from repositories such as enterprise data

warehouses and data lakes.

A data warehouse is a central repository of integrated data that is gathered from

multiple different sources. It stores current and historical data in a structured

format. It is designed for query and analysis to support the decision-making

process of an organization. For example, a data warehouse may contain current

and historical sales data that is used for generating trend reports for sales

comparisons.

A data lake is a collection of structured and unstructured data assets that are

stored as exact or near-exact copies of the source formats. The data lake

architecture is a “store-everything” approach to Big Data. Unlike conventional data

warehouses, you do not classify the data when it is stored in the repository, as the

value of the data may not be clear at the outset. The data is also not arranged as

per a specific schema and is stored using an object-based storage architecture. As

a result, data preparation is eliminated and a data lake is less structured compared

to a data warehouse. Data is classified, organized, or analyzed only when it is

accessed. When a business need arises, the data lake is queried, and the resultant

subset of data is then analyzed to provide a solution. The purpose of a data lake is

to present an unrefined view of data to highly skilled analysts and to enable them

to implement their own data refinement and analysis techniques.
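
The idea that data in a data lake is classified and organized only when it is accessed can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the sample objects, the field names (`type`, `region`, `amount`), and the helper functions are hypothetical, and a plain list stands in for the object store. The raw objects are kept as they arrived, and a schema is applied only by the reader that needs it.

```python
import json

# Hypothetical raw objects kept as near-exact copies of their source formats.
DATA_LAKE = [
    '{"type": "sale", "region": "EMEA", "amount": 120.0}',
    '{"type": "sale", "region": "APJ", "amount": 80.0}',
    'sensor-17,2019-03-01T10:00:00,21.5',          # CSV line from a sensor feed
    '{"type": "post", "text": "Great product!"}',  # social media post
]


def read_sales(raw_objects):
    """Apply a 'sales' schema at read time; skip objects that do not fit it."""
    for raw in raw_objects:
        try:
            obj = json.loads(raw)
        except json.JSONDecodeError:
            continue  # not JSON, so it cannot be a sales record in this sketch
        if isinstance(obj, dict) and obj.get("type") == "sale":
            yield {"region": str(obj["region"]), "amount": float(obj["amount"])}


def total_by_region(sales):
    """Aggregate the queried subset, as an analyst might for a business need."""
    totals = {}
    for sale in sales:
        totals[sale["region"]] = totals.get(sale["region"], 0.0) + sale["amount"]
    return totals


SALES_TOTALS = total_by_region(read_sales(DATA_LAKE))
```

A data warehouse would instead validate and structure each record before storing it, so objects such as the sensor line or the social media post would have to be modeled up front or left out.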


Components of a Big Data Analytics Solution

The technology layers in a Big Data analytics solution include storage plus

MapReduce and query technologies

These components are collectively called the ‘SMAQ stack’

SMAQ solutions may be implemented as a combination of multi-component

systems

May also be offered as a product with a self-contained system comprising

storage, MapReduce, and query – all in one

Storage
• Foundational layer of the stack
• Distributed architecture

MapReduce
• Enables distribution of computation
• Uses multiple compute systems for parallel processing

Query
• Implements NoSQL database
• Provides platform for analytics and reporting

Notes

The technology layers in a Big Data analytics solution include storage, MapReduce

technologies, and query technologies. These components are collectively called

the ‘SMAQ stack’.

Storage: It is the foundational layer of the stack. It is characterized by a

distributed architecture that holds primarily unstructured content in

non-relational form.


MapReduce: It is an intermediate layer in the stack. It enables the distribution

of computation across multiple generic compute systems for parallel processing

to gain speed and cost advantage. It also supports a batch-oriented processing

model of data retrieval and computation as opposed to the record-set

orientation of most SQL-based databases.

Query: This layer typically implements a NoSQL database for storing,

retrieving, and processing data. It also provides a user-friendly platform for

analytics and reporting.
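
The batch-oriented model of the MapReduce layer described above can be illustrated with a small word-count sketch in Python. It is not a distributed implementation: the input splits and function names are assumptions made for the example, and the map calls run one after another in a single process, whereas a real cluster would run them in parallel on separate compute systems.

```python
from collections import defaultdict

# Hypothetical input splits; in a cluster, each split would sit on a different node.
SPLITS = [
    "big data needs new architectures",
    "big data needs parallel processing",
]


def map_phase(split):
    """Map: emit a (key, value) pair for every word in one input split."""
    return [(word, 1) for word in split.split()]


def shuffle(mapped_outputs):
    """Shuffle: group all emitted values by key across the map outputs."""
    groups = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine the values of each key into a single result."""
    return {key: sum(values) for key, values in groups.items()}


WORD_COUNTS = reduce_phase(shuffle(map_phase(split) for split in SPLITS))
```

Because each call to `map_phase` depends only on its own split, the map work can be spread across many generic compute systems, which is the source of the speed and cost advantage mentioned in the notes.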


Storage

Storage systems consist of multiple nodes that are collectively called a “cluster”

Based on distributed file systems

Each node has processing capability and storage capacity

Highly scalable architecture

You may implement a NoSQL database on top of the distributed file system

Notes