Accelerate: Lean Software & DevOps for High Performance

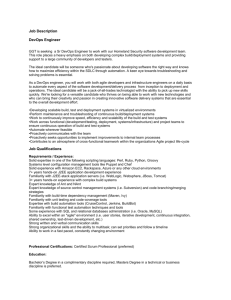
