MODULE 5 - UI/UX

Information Search and Data Visualization:

Information visualization is the interactive visual representation of abstract data to strengthen human understanding. It has emerged from research in human-computer interaction and is applied as a critical component in varied fields. It allows users to see, discover, and understand huge amounts of information at once. Information visualization is also a hypothesis-generation scheme, which is typically followed by more formal examination such as statistical hypothesis testing.

Advanced Filtering

The following are advanced filtering procedures:

Filtering with complex Boolean queries

Automatic filtering

Dynamic queries

Faceted metadata search

Query by example

Implicit search

Collaborative filtering

Multilingual searches

Visual field specification

Hypertext and Hypermedia

Hypertext can be defined as text that contains references (hyperlinks) to other text that the reader can access immediately. Any text that provides a reference to another text can be understood as two nodes of information, with the reference forming the link. In hypertext, all the links are active, and when clicked, each one opens something new.

Hypermedia, on the other hand, is an information medium that holds different types of media, such as video, CD, and so forth, as well as hyperlinks.

Hence, both hypertext and hypermedia refer to a system of linked information. A text may refer to links, which may also have visuals or media. So hypertext can be used as a generic term to denote a document, which may in fact be distributed across several media.
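The node-and-link view above can be sketched as a small directed graph in Python. This is a minimal illustration, not a standard API: the names Node, Hyperdocument, media_type, and follow are hypothetical, and a hypermedia node is modeled simply as a node whose media type is not text.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One node of information: a passage of text or, in hypermedia, a media item."""
    node_id: str
    content: str
    media_type: str = "text"                     # hypothetical field, e.g. "text", "video"
    links: list = field(default_factory=list)    # ids of the nodes this node refers to


class Hyperdocument:
    """A hypothetical container of nodes joined by links."""

    def __init__(self):
        self.nodes = {}

    def add_node(self, node):
        self.nodes[node.node_id] = node

    def link(self, source_id, target_id):
        # A reference from one node to another forms a link between two nodes.
        if target_id not in self.nodes:
            raise KeyError(f"unknown target node: {target_id}")
        self.nodes[source_id].links.append(target_id)

    def follow(self, source_id, index=0):
        # Activating ("clicking") a link opens the node it points to.
        target_id = self.nodes[source_id].links[index]
        return self.nodes[target_id]


doc = Hyperdocument()
doc.add_node(Node("intro", "Watch the demo for details."))
doc.add_node(Node("demo", "demo.mp4", media_type="video"))
doc.link("intro", "demo")
opened = doc.follow("intro")
```

Following the link from the text node opens a video node, which is exactly the point where hypertext becomes hypermedia.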

Object Action Interface Model for Website Design

Object Action Interface (OAI) can be considered the next step beyond the Graphical User Interface (GUI). This model focuses on the priority of the object over the actions.

OAI Model

The OAI model allows the user to perform actions on objects. First the object is selected, and then the action is performed on that object. Finally, the outcome is shown to the user. In this model, the user does not have to worry about the complexity of any command syntax.

The object–action model gives users an advantage: they gain a sense of control through direct involvement in the design process. The computer serves as a medium to signify different tools.

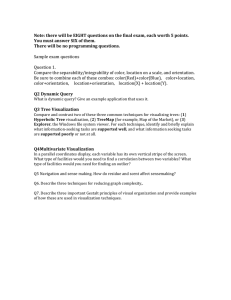
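To make the object-then-action order concrete, here is a toy Python model. The object types, action names, and the ObjectActionUI class are hypothetical; the point is only that the interface asks for the object first and then offers the actions that make sense for it, so the user never has to compose command syntax.

```python
from typing import Optional

# Hypothetical object types and the actions each one supports.
ACTIONS_BY_TYPE = {
    "document": {"open", "rename", "delete"},
    "image": {"open", "rotate", "delete"},
}


class ObjectActionUI:
    """Object first, action second: a toy model of the OAI interaction order."""

    def __init__(self, objects):
        self.objects = objects                  # object name -> object type
        self.selected: Optional[str] = None

    def select(self, name):
        # Step 1: the user selects an object; only actions valid for it are offered.
        self.selected = name
        return ACTIONS_BY_TYPE[self.objects[name]]

    def perform(self, action):
        # Step 2: the user applies an action to the selected object.
        if self.selected is None:
            raise RuntimeError("select an object first")
        if action not in ACTIONS_BY_TYPE[self.objects[self.selected]]:
            raise ValueError(f"{action!r} is not available for {self.selected!r}")
        # Step 3: the outcome is reported back to the user.
        return f"{action} applied to {self.selected}"


ui = ObjectActionUI({"report.txt": "document", "photo.png": "image"})
offered = ui.select("photo.png")
outcome = ui.perform("rotate")
```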

FIVE STAGE SEARCH FRAMEWORK:

The following five-phase framework clarifies user interfaces for textual search:

Formulation − expressing the search

Initiation of action − launching the search

Review of results − reading messages and outcomes

Refinement − formulating the next step

Use − compiling or disseminating insight.

FORMULATION:-

What is the formulation stage of the information search process?

The fourth stage in the information search process (ISP), formulation, is the turning point of the ISP, when feelings of uncertainty diminish and confidence increases. The task is to form a focus from the information encountered.

Formulation is the stage at which a focused perspective is formed and uncertainty diminishes as confidence begins to increase.

Initiation of Action:-

Consumer behaviour is the study of individuals, groups, or organisations and all the activities associated with the purchase, use and disposal of goods and services. Consumer behaviour consists of how the consumer's emotions, attitudes, and preferences affect buying behaviour. Consumer behaviour emerged in the 1940s and 1950s as a distinct sub-discipline of marketing, but has become an interdisciplinary social science that blends elements from psychology, sociology, social anthropology, anthropology, ethnography, ethnology, marketing, and economics (especially behavioural economics).

The study of consumer behaviour formally investigates individual qualities such as demographics, personality, lifestyles, and behavioural variables (such as usage rates, usage occasion, loyalty, brand advocacy, and willingness to provide referrals), in an attempt to understand people's wants and consumption patterns. Consumer behaviour also investigates the influences on the consumer, from social groups such as family, friends, sports, and reference groups, to society in general (brand influencers, opinion leaders).

Research has shown that consumer behaviour is difficult to predict, even for experts in the field; however, new research methods, such as ethnography, consumer neuroscience, and machine learning[1], are shedding new light on how consumers make decisions. In addition, customer relationship management (CRM) databases have become an asset for the analysis of customer behaviour. The extensive data produced by these databases enables detailed examination of behavioural factors that contribute to customer re-purchase intentions, consumer retention, loyalty, and other behavioural intentions such as the willingness to provide positive referrals, become brand advocates, or engage in customer citizenship activities. Databases also assist in market segmentation, especially behavioural segmentation such as developing loyalty segments, which can be used to develop tightly targeted customised marketing strategies on a one-to-one basis.

Review of Results:-

Data visualization is the practice of translating information into a visual context, such as a map or graph, to make data easier for the human brain to understand and pull insights from. The main goal of data visualization is to make it easier to identify patterns, trends and outliers in large data sets. The term is often used interchangeably with others, including information graphics, information visualization and statistical graphics.

Data visualization is one of the steps of the data science process, which states that after data has been collected, processed and modeled, it must be visualized for conclusions to be made. Data visualization is also an element of the broader data presentation architecture (DPA) discipline, which aims to identify, locate, manipulate, format and deliver data in the most efficient way possible.

Data visualization is important for almost every career. It can be used by teachers to display student test results, by computer scientists exploring advancements in artificial intelligence (AI) or by executives looking to share information with stakeholders. It also plays an important role in big data projects. As businesses accumulated massive collections of data during the early years of the big data trend, they needed a way to get an overview of their data quickly and easily. Visualization tools were a natural fit.

Visualization is central to advanced analytics for similar reasons. When a data scientist is writing advanced predictive analytics or machine learning (ML) algorithms, it becomes important to visualize the outputs to monitor results and ensure that models are performing as intended. This is because visualizations of complex algorithms are generally easier to interpret than numerical outputs.

Refining data consists of cleansing and shaping it. When you cleanse data, you fix or remove data that is incorrect, incomplete, improperly formatted, or duplicated. And when you shape data, you customize it by filtering, sorting, combining or removing columns, and performing operations.
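Data Refinery itself is driven through its UI and operations; the following is only a library-free Python sketch of what "cleansing" and "shaping" mean, not Data Refinery's own code. The table of people, its column names, and the rules (drop rows whose age is missing or not an integer, title-case names) are illustrative assumptions.

```python
def cleanse(rows):
    """Fix or remove rows that are incorrect, incomplete, badly formatted, or duplicated."""
    seen = set()
    cleaned = []
    for row in rows:
        name = (row.get("name") or "").strip().title()   # fix formatting
        try:
            age = int(row.get("age"))                     # e.g. "36" -> 36
        except (TypeError, ValueError):
            continue                                      # missing or unparseable -> remove
        if not name or age < 0:
            continue                                      # incorrect -> remove
        if (name, age) in seen:
            continue                                      # duplicate -> remove
        seen.add((name, age))
        cleaned.append({"name": name, "age": age, "city": row.get("city")})
    return cleaned


def shape(rows, min_age=18, drop_columns=("city",)):
    """Filter, sort, and remove columns."""
    kept = [r for r in rows if r["age"] >= min_age]       # filter
    kept.sort(key=lambda r: r["age"])                     # sort
    return [{k: v for k, v in r.items() if k not in drop_columns} for r in kept]


raw = [
    {"name": " ada ", "age": "36", "city": "London"},
    {"name": "Ada", "age": 36, "city": "London"},         # duplicate once cleaned
    {"name": "bob", "age": None},                         # incomplete
    {"name": "cy", "age": "12", "city": "Paris"},
]
refined = shape(cleanse(raw))
```

Both functions build new lists instead of editing raw in place, mirroring the idea that the source data stays untouched by refinement.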

As you manipulate your data, you build a customized Data Refinery flow that you can modify in real time and save for future re-use. When you save the refined data set, you typically load it to a different location than where you read it from. In this way, your source data can remain untouched by the refinement process.

Prerequisites

Before you can refine data, you’ll need a project.

If you have data in cloud or on-premises data sources, you’ll need connections to those sources and you’ll need to add data assets from each connection. If you want to be able to save refined data to cloud or on-premises data sources, create connections for this purpose as well. Source connections can only be used to read data; target connections can only be used to load (save) data. When you create a target connection, be sure to use credentials that have Write permission or you won’t be able to save your Data Refinery flow output to the target.

REFINEMENT:-

Refine your data

Access Data Refinery from within a project. Click Add to project, and then choose DATA REFINERY FLOW. Then select the data you want to work with.

Alternatively, from the Assets tab of a project page, you can perform one of the following actions:

Select Refine from the menu of an Avro, CSV, JSON, Parquet, or text data asset

Click an Avro, CSV, JSON, Parquet, or text data asset to preview it first and then click the Refine link

If you already have a Data Refinery flow, click New Data Refinery flow from the Data Refinery flows section and then select the data that you want to work with.

Tip: If your data doesn’t display in tabular form, specify the format of your data source. Go to the Data tab. Scroll down to the SOURCE FILE information at the bottom of the page. Click the Specify data format icon.

On the Data tab, apply operations to cleanse, shape, and enrich your data. You can enter R code in the command line and let autocomplete assist you in getting the correct syntax.

Validate your data throughout the data refinement process.

Use visualizations to gain insights into your data and to uncover patterns, trends, and correlations within your data.

When you’ve refined the sample data set to suit your needs, click the Run Data Refinery flow icon in the toolbar to run the Data Refinery flow on the entire data set.

By default, Data Refinery uses the name of the data source to name the Data Refinery flow and the target data set. You can change these names, but you can’t change the project that these data assets belong to.

In the Data Refinery flow details pane, optionally edit the name of the Data Refinery flow and enter a description for it. You can also add a one-time or repeating schedule for the Data Refinery flow.

In the Data Refinery flow output pane, optionally edit the name of the target data set and enter a description for it. You can save the target data set to the project, to a connection, or to a connected data asset. If you save it to the project, you can save it as a new data asset (by default) or you can replace an existing data asset. To save the target data set to a connection or as a connected data asset, or to replace an existing data asset, use Change Location.

If you select an existing relational database table or view, or a connected relational data asset, as the target for your Data Refinery flow output, you have several options for how the existing data set is affected:

Overwrite - Overwrite the rows in the existing data set with those in the Data Refinery flow output

Recreate - Delete the rows in the existing data set and replace them with the rows in the Data Refinery flow output

Insert - Append all rows of the Data Refinery flow output to the existing data set

Update - Update rows in the existing data set with the Data Refinery flow output; don’t insert any new rows

Upsert - Update rows in the existing data set and append the rest of the Data Refinery flow output to it

For the Update and Upsert options, you’ll need to select the columns in the output data set to compare to columns in the existing data set. The output and target data sets must have the same number of columns, and the columns must have the same names and data types in both data sets.
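The five target options differ in how output rows are combined with rows already in the target. The Python sketch below models that on lists of dictionaries. It is an illustration under stated assumptions, not how Data Refinery is implemented: the function name write_output and the key-matching rule are hypothetical, it only checks column names (not data types), and it does not model how the underlying table object is handled by Overwrite versus Recreate.

```python
def write_output(existing, output, mode, key_columns=()):
    """Return the target table after writing `output` with one of the five options."""
    mode = mode.lower()
    if mode in ("overwrite", "recreate"):
        # Row-level view only: either way the target ends up holding exactly the output rows.
        return [dict(row) for row in output]
    if mode == "insert":
        # Append every output row without comparing anything.
        return [dict(row) for row in existing] + [dict(row) for row in output]
    if mode not in ("update", "upsert"):
        raise ValueError(f"unknown mode: {mode}")
    if not key_columns:
        raise ValueError("update and upsert need key columns to compare")
    if existing and output and set(existing[0]) != set(output[0]):
        raise ValueError("output and target must have the same column names")

    def key(row):
        return tuple(row[c] for c in key_columns)

    incoming = {key(row): row for row in output}
    # Update: matching existing rows take the output's values; nothing new is added.
    result = [dict(incoming.get(key(row), row)) for row in existing]
    if mode == "upsert":
        # Upsert: additionally append output rows that matched no existing row.
        existing_keys = {key(row) for row in existing}
        result.extend(dict(row) for row in output if key(row) not in existing_keys)
    return result


existing = [{"id": 1, "qty": 5}, {"id": 2, "qty": 7}]
output = [{"id": 2, "qty": 9}, {"id": 3, "qty": 1}]
```

With "id" as the compared column, Update changes row 2 only, while Upsert also appends row 3.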

Use - Dynamic Queries and Faceted Search:

The query cycle

1. Send the user's query to the search engine.

2. Execute the search and retrieve the records that match the query. In this step, you'll derive the facets from the retrieved records.

3. Send back the results and facets.

4. Render the results and facets on screen.

Overview of dynamic faceting

Dynamic faceting displays a different set of facets to the user depending on the user’s intent. To understand this, consider an ecommerce music store that sells two categories of items: CDs and audio equipment. This business wants to display a set of relevant facets. What good is it to propose “brand” when the user’s intent is to find their favorite music? Likewise, what good is it to propose “musical genre” when the user’s intent is to find audio equipment? Dynamic faceting ensures that only the most appropriate facets show up.

Ecommerce businesses with a diversity of products benefit from displaying different facets depending on the items the user is searching for:

Pharmaceutical companies display different facets for their medical vs. cosmetic products.

Newspapers display different facets in their Entertainment and Political sections.

Online marketplaces, like the Amazon example below, change facet lists as people navigate through their vast diversity of offerings.

Example use case: ecommerce marketplaces & dynamic faceting

Amazon uses dynamic faceting for many of its categories. In the image below, you see two queries: “music” on the left, “movies” on the right. As you can see, both sides include the “price” facet, but the musical query includes “customer reviews”, “artist”, and “musical format”, while the movie query includes “director”, “video format” and “movie genre”.

Amazon uses dynamic faceting to create an enhanced search experience by guiding the user in a smart and curated way depending on the products they are searching for.

Let’s see how this is done.

The query cycle and the logic behind facet search

First, some terminology:

Facet keys are attributes like “color”, “price”, “shoe_category”, and “sleeves”.

Facet values are the key’s values. For example, “color” contains “red” and “green”; “sleeves” contains “short” and “long”.

The dataset

We’ll use a dataset with two kinds of products: shirts and shoes. The example below contains two typical items. Both items include the “price”, “color”, and “clothing_type” facets. However, shirts contain a “sleeves” facet and shoes a “shoe_category” facet.

The query cycle

A search query follows a 4-part cycle. Here’s an overview. We’ll give more details and code examples in the section that follows.

1. Send the user’s query to the search engine.

2. Execute the search and retrieve the records that match the query. In this step, you’ll derive the facets from the retrieved records.

3. Send back the results and facets.

4. Render the results and facets onscreen.

As you’ll see, instead of using a pre-defined list of facets, the logic consists of dynamically generating a new list with each query. This is possible by doing the following:

On the back end, you’ll extract facets from every set of query results.

On the front end, you’ll use undefined container placeholders instead of pre-defined containers.

The query request: sending the query with or without a filter

The starting point of the cycle is to send a query and any facet value the user has selected to filter their results.

Filtering results creates a cohesive result set, which in turn generates a list of facets relevant to all of the items that appear in the results. On the other hand, if the user does not select a facet, the items will be more diverse, and therefore the facets might not apply to all products. However, this is perfectly fine. As you’ll see in the next step, presenting the top 5 most common facets ensures that most items will contain these facets.

Now for the code. Here’s how Algolia’s API implements the query “Get all short-sleeved summer t-shirts”. (Since all search tools allow filtering, the following code is only one among many ways to do this.)
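The Algolia code mentioned above is not reproduced in these notes. As a library-agnostic stand-in (this is not Algolia's API), the Python sketch below sends a text query plus optional facet filters against an in-memory list. The product records, the season attribute, and the simple substring match on the product name are hypothetical simplifications.

```python
# Hypothetical catalog; attribute names such as "season" are assumptions for the example.
PRODUCTS = [
    {"objectID": "1", "name": "Classic t-shirt", "clothing_type": "shirt",
     "sleeves": "short", "season": "summer", "color": "red", "price": 15},
    {"objectID": "2", "name": "Thermal t-shirt", "clothing_type": "shirt",
     "sleeves": "long", "season": "winter", "color": "green", "price": 25},
    {"objectID": "3", "name": "Trail shoe", "clothing_type": "shoe",
     "shoe_category": "running", "color": "red", "price": 80},
]


def search(records, query, facet_filters=None):
    """Return records whose name contains every query word and every selected facet value."""
    words = query.lower().split()
    facet_filters = facet_filters or {}
    hits = []
    for record in records:
        name = record.get("name", "").lower()
        if not all(word in name for word in words):
            continue                                   # text query does not match
        if all(record.get(k) == v for k, v in facet_filters.items()):
            hits.append(record)                        # every selected facet value matches
    return hits


# "Get all short-sleeved summer t-shirts": query text plus two facet filters.
summer_tees = search(PRODUCTS, "t-shirt", {"sleeves": "short", "season": "summer"})
# Without a filter the result set is more diverse.
all_tees = search(PRODUCTS, "t-shirt")
```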

The query execution: creating the list of top 5 facets

The dataset we use in this article contains two kinds of products, each with a set of unique facet attributes. After executing the query, the search engine extracts every facet key that shows up in the retrieved records, then selects the 5 facets that appear most often.

Why top 5? Because a screen with 5 facets is usually enough. Ten is an outer limit; any more would be overkill and create unused clutter.

There are two methods to create a list of facets:

1. A pre-defined list: save a list of facet keys either directly in the code or in a separate dataset or local storage.

2. A dynamically generated list: extract a distinct list of facet keys from the results of the query and put them in the query response as a separate record.

We’ve chosen to use method 2, but some implementations use method 1.

Method 1 – Hardcoding the list of facet keys

For this method, you create a fixed set of facets based on what you already know about your products. This list can be either hardcoded, or placed in a file or database table that can be manually updated whenever new products are added or modified.

Whatever the manner of storing the fixed list, the list must contain entries like the following:

For every “clothing_type=shirt”, send back the following facets: “color, price, clothing_type, sleeves, gender”.

For every “clothing_type=shoe”, send back the following facets: “color, price, clothing_type, shoe_category, brand”.
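Method 1 can be sketched in Python as a lookup table. The rule for choosing which entry applies (use the clothing_type that most results share) is an assumption borrowed from the shirts-versus-shoes discussion later in this section, and the names FACETS_BY_TYPE and facets_for are hypothetical.

```python
from collections import Counter

# Method 1: a hand-maintained mapping from clothing_type to the facets to return.
FACETS_BY_TYPE = {
    "shirt": ["color", "price", "clothing_type", "sleeves", "gender"],
    "shoe": ["color", "price", "clothing_type", "shoe_category", "brand"],
}


def facets_for(results):
    """Pick the fixed facet list of the most common clothing_type in the results."""
    counts = Counter(r["clothing_type"] for r in results)
    if not counts:
        return []
    dominant, _ = counts.most_common(1)[0]
    # A new product type gets no facets until someone edits FACETS_BY_TYPE by hand.
    return FACETS_BY_TYPE.get(dominant, [])
```

The last comment is the scalability problem described next: every new relation means another manual edit.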

This is not the preferred method because, as with all hardcoding or semi-hardcoding, it has limited scalability. If you want to add more relations, for example “shoe_styles” = “high-top, leisure, and cross-fit”, you’ll need to manually add a new line to the list. Manual maintenance is extra work and prone to error and delay.

The approach we present in the rest of this article (method 2) removes manual maintenance from the process, making the process deductive and therefore entirely dynamic.

Method 2 – Dynamically generate the list of facets

In this method, we’ll extract the list of facets from the products themselves.

In a sense, the only difference between the manual and dynamic approaches is that the generated list of top 5 facets is dynamic. The resulting list itself will be formatted in the same way.

Here are the steps:

1. Get the query results:

   o Execute the query and find X number of products.

   o Save those records, to be sent back as the search results.

2. Get the top 5 facet keys and all their values:

   o From the results, collect all facet attributes (we’ll show below how to identify an attribute as a facet).

   o Create a record that contains the full list of extracted facet keys.

   o Determine which facets appear most often. Sort the list by the highest number of records.

   o Take only the top 5 from this list. These are the most common facets.

   o Add the products’ values to their respective keys.

Done.
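A minimal Python sketch of these steps, assuming the query has already returned its records. Identifying facets here relies on a hypothetical exclusion list (NON_FACETS) rather than the per-record facet metadata described later, and the records are made up. Because three of the four results are shirts, “sleeves” and “gender” make the top 5 while “shoe_category” and “brand” do not.

```python
from collections import Counter, defaultdict

# Attributes that describe a product but are not facets (an assumption for this sketch).
NON_FACETS = {"objectID", "name", "description", "image_url"}


def top_facets(results, limit=5):
    """Return {facet_key: {value: record_count}} for the `limit` most common facet keys."""
    # Collect every facet key and count how many records carry it.
    key_counts = Counter()
    for record in results:
        key_counts.update(k for k in record if k not in NON_FACETS)
    # Keep the most common keys (ties keep first-seen order).
    top_keys = [k for k, _ in key_counts.most_common(limit)]
    # Add each product's value to its key, counting records per value.
    facets = {k: defaultdict(int) for k in top_keys}
    for record in results:
        for k in top_keys:
            if k in record:
                facets[k][record[k]] += 1
    return {k: dict(values) for k, values in facets.items()}


SAMPLE_RESULTS = [
    {"objectID": "1", "clothing_type": "shirt", "color": "red", "price": 15, "sleeves": "short", "gender": "women"},
    {"objectID": "2", "clothing_type": "shirt", "color": "green", "price": 20, "sleeves": "short", "gender": "men"},
    {"objectID": "3", "clothing_type": "shirt", "color": "red", "price": 25, "sleeves": "long", "gender": "men"},
    {"objectID": "4", "clothing_type": "shoe", "color": "black", "price": 80, "shoe_category": "running", "brand": "Acme"},
]
facets = top_facets(SAMPLE_RESULTS)
```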

The generated list would be the same as with method 1:

For every “clothing_type=shirt”, send back the following facets: “color, price, clothing_type, sleeves, gender”.

For every “clothing_type=shoe”, send back the following facets: “color, price, clothing_type, shoe_category, brand”.

Thus, if most products are shirts, we would display “sleeves” and “gender” as the 4th and 5th facets. If they were shoes, then the 4th and 5th facets would be “shoe_category” and “brand”.

The query response: sending back the response

Here we’ll simply send back the search results and the generated list of 5 facet keys with their respective values.

The response should include whatever the front end needs to build its search results page. In all facet search query cycles, the front end needs:

A list of products with “name”, “description”, “price”, and “image_url”. (Don’t send image files, as their size will slow down the overall response time of the search.)

The list of facet keys and their values.

A response will also contain additional information needed for display purposes or business logic. We do not show those.

Results:

Here is how we would return the set of shirts. All attributes will be used as information in the search results, except “objectID”, which will be used to identify a product for technical reasons (click analytics, detailed page view, or other reasons).

Facets:

The facet response is a combination of facet keys with their values. Here’s a small extract. The example does not include all of the facets:

Two things to note:

Only “sleeves” and “gender” show up, not “shoe_category” or “brand”. As mentioned, this is because there are more shirts than shoes.

The number after each facet indicates the number of records that have that value. We discuss this a bit more in the section on adding number of facets.

The front-end display: dynamically displaying the list of facets

The job here is to render the results and facets on the screen. In terms of UX design, the industry standard is to have the results in the middle and the facets on the left.

There is one container (“facet-lists”) for the 5 facets. The rendering code generates an unordered list to display the facets in that container.

The results go in the “results-container”.

Next, render the data. As this can take many forms, and this article is not strictly a tutorial, take a look at our dynamic faceting GitHub repo for a complete front-end implementation.

Making the solution more robust

Adding number of facets

You’ll want to let your users know how many records have a given facet value. Showing these counts is useful because they inform users about the search results. For example, it is useful to know that there are more short-sleeved shirts than long-sleeved shirts. These facet counts are normally calculated in the back end during query execution.
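Computing these counts during query execution amounts to tallying values over the result set. A short Python sketch with hypothetical records; the "value (count)" label format is an assumption about how a front end might display it.

```python
from collections import Counter


def facet_value_counts(results, key):
    """Count how many result records carry each value of one facet key."""
    return Counter(r[key] for r in results if key in r)


def facet_labels(results, key):
    """Format values most-frequent first, e.g. 'short (2)', as a facet list often shows them."""
    return [f"{value} ({n})" for value, n in facet_value_counts(results, key).most_common()]


shirts = [{"sleeves": "short"}, {"sleeves": "long"}, {"sleeves": "short"}]
labels = facet_labels(shirts, "sleeves")
```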

Adding facet metadata

Every record needs to contain information that tells the process which attributes are facets. To do this, you need to add facet metadata to each record by using an additional attribute that defines the record’s facets:

Going one step further, it’s also useful to include the type of attribute:

With this information, the front-end code can apply a range slider for price and a dropdown for the sleeves.
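The metadata examples referred to above are not included in these notes. A hedged Python sketch of the idea: each record carries a hypothetical facets attribute listing its facet keys and their types, and the front end picks a widget from the type. The attribute name, type names, and widget names are assumptions.

```python
# Hypothetical record: "facets" is the extra metadata attribute naming the record's
# facets and their types; neither the attribute name nor the type names are standard.
RECORD = {
    "objectID": "1",
    "name": "Classic t-shirt",
    "price": 15,
    "sleeves": "short",
    "facets": {"price": "numeric", "sleeves": "categorical"},
}

# Assumed mapping from facet type to a front-end widget.
WIDGET_BY_TYPE = {"numeric": "range_slider", "categorical": "dropdown"}


def facet_keys(record):
    """Only attributes declared in the metadata count as facets."""
    return list(record.get("facets", {}))


def widgets_for(record):
    """Choose a widget per facet from its declared type (checkbox list as a fallback)."""
    return {key: WIDGET_BY_TYPE.get(kind, "checkbox_list")
            for key, kind in record.get("facets", {}).items()}
```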

Grouping items using facets:

You’ll want to treat color differently from the other facet attributes. This is because different colors appear on the same shirt. For this, the question is: do you need 1 record per shirt that includes an attribute with all available colors, or 1 record per color, which requires multiple records for each shirt? Typical database thinking would say, of course, only 1 record. However, as discussed at length in our first article, faceted search is different.

We put all searchable items in separate records. This allows people to find “red shirts” using the search bar without needing to click on a color facet.
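One way to produce the one-record-per-color layout is to expand each product before indexing. The Python sketch below assumes a hypothetical input with a colors list; the objectID suffix, the group_id field used to group variants back together, and prefixing the color to the name are illustrative choices, not a prescribed schema.

```python
def split_by_color(product):
    """Turn one product with several colors into one searchable record per color."""
    records = []
    for color in product["colors"]:
        record = {k: v for k, v in product.items() if k != "colors"}
        record["color"] = color
        record["objectID"] = f"{product['objectID']}-{color}"   # unique id per variant
        record["group_id"] = product["objectID"]                 # lets results be grouped back
        record["name"] = f"{color} {product['name']}"            # "red shirt" matches free text
        records.append(record)
    return records


variants = split_by_color({"objectID": "42", "name": "shirt", "colors": ["red", "green"], "price": 15})
```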

Our main goal in this article was to add process to data in our series on facets & data. We described a particular way to execute a search and display a set of facets, following a query cycle of request, execution, response, and display. To stretch your understanding of facets, we did this within the context of an advanced use case: we made our facet search dynamic. Dynamic faceting creates a more intuitive and useful facet search experience, particularly for businesses that offer a diverse collection of products and services.

Command languages

We typically think of OS command languages such as those of DOS or Unix.

Interaction paradigm: the user enters a command, the computer carries it out (with feedback if required), then requests the next command.

Cognitive requirements: relate to written languages

Not much new activity in command language development.

Command Language Design

Important design considerations:

Provide only sufficient functionality for users to carry out tasks

Macro or scripting capability

Consistency in command language design

Command abbreviations

Help feature

Actions become commands

Objects become arguments

Consistency is very important in command language design (it is very taxing on memory, so minimize the number of chunks)

   o Use of metaphor yields consistent terminology (command names), which is easier to learn, retain, and recall (e.g. up/down, not forward/down)

   o Consistent argument ordering (e.g. the first argument is the source and the second is the destination)

   o Consistent naming of command options (e.g. v for verbose output regardless of command)

Command abbreviations are useful for expert users

   o Important because the expert benefits the most from a CUI

   o Novices will require or prefer full names

   o Easier to learn if only one abbreviation strategy is used
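Several of these guidelines (a single abbreviation strategy, consistent argument order, consistent option names) can be shown in a small Python parser. The command set is hypothetical, and unique-prefix truncation is just one possible abbreviation strategy: experts can type cop while novices can still type copy.

```python
# Hypothetical command set; "copy" and "compare" share a prefix on purpose.
COMMANDS = ("copy", "compare", "move", "delete", "list")


def expand(abbrev, commands=COMMANDS):
    """One abbreviation strategy for all commands: any unique prefix of the full name."""
    if abbrev in commands:
        return abbrev
    matches = [c for c in commands if c.startswith(abbrev)]
    if len(matches) != 1:
        raise ValueError(f"{abbrev!r} is ambiguous or unknown: {matches}")
    return matches[0]


def parse(line):
    """Parse 'command [-v] source destination' with the same argument order for every command."""
    tokens = line.split()
    command = expand(tokens[0])
    verbose = "-v" in tokens[1:]                 # -v means verbose for every command
    args = [t for t in tokens[1:] if t != "-v"]
    return command, verbose, args
```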

Multimedia Document Search and Specialized Search:

Multimedia search enables information search using queries in multiple data types, including text and other multimedia formats. Multimedia search can be implemented through multimodal search interfaces, i.e., interfaces that allow users to submit search queries not only as textual requests, but also through other media. We can distinguish two methodologies in multimedia search:

Metadata search: the search is made on the layers of metadata.

Query by example: the interaction consists of submitting a piece of information (e.g., a video, an image, or a piece of audio) for the purpose of finding similar multimedia items.

Metadata search

Search is made using the layers of metadata, which contain information about the content of a multimedia file. Metadata search is easier, faster, and more effective because, instead of working with complex material such as audio, video, or an image, it searches using text.

This method involves three processes:

Summarization of media content (feature extraction). The result of feature extraction is a description.

Filtering of media descriptions (for example, elimination of redundancy)

Categorization of media descriptions into classes.

Query by example

In query by example, the element used to search is multimedia content (image, audio, video). In other words, the query is itself a media item. Audiovisual indexing is often used. It will be necessary to choose the criteria we are going to use for creating metadata. The search process can be divided into three parts:

Generate descriptors for the media that we are going to use as the query and the descriptors for the media in our database.

Compare the descriptors of the query and our database’s media.

List the media sorted by maximum coincidence (closest match first).
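The three steps can be sketched in Python with deliberately simple choices: a color-share descriptor for step 1, cosine similarity for step 2, and a sort for step 3. Real systems use far richer descriptors; the pixel data and function names are hypothetical.

```python
import math


def color_descriptor(pixels):
    """Step 1 (toy feature extraction): share of red, green and blue over all pixels."""
    totals = [sum(p[i] for p in pixels) for i in range(3)]
    s = sum(totals)
    return [t / s for t in totals] if s else [0.0, 0.0, 0.0]


def cosine(a, b):
    """Step 2: compare two descriptors (1.0 means they point the same way)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def query_by_example(query_pixels, database):
    """Step 3: list database media sorted from closest to least close match."""
    q = color_descriptor(query_pixels)
    scored = [(name, cosine(q, color_descriptor(px))) for name, px in database.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical "images" given as a few RGB pixels each.
DATABASE = {
    "sunset.jpg": [(220, 60, 20), (200, 80, 40)],
    "forest.jpg": [(30, 180, 40), (20, 200, 30)],
    "sea.jpg": [(20, 60, 200), (30, 70, 220)],
}
ranking = query_by_example([(210, 90, 30)], DATABASE)
```

A mostly red query image ranks the reddish sunset first.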

Multimedia search engine

There are two big families of search engines, depending on the content:

Visual search engine

Audio search engine

Visual search engine

Inside this family we can distinguish two topics: image search and video search.

Image search: Although simple metadata search is usually used, indexing methods are increasingly being used to make the results of users' queries more accurate through query by example. For example, QR codes.

Video search: Videos can be searched by simple metadata or by complex metadata generated by indexing. The audio contained in the videos is usually scanned by audio search engines.

Audio search engine

There are different methods of audio searching:

Voice search engine: Allows the user to search using speech instead of text. It uses speech-recognition algorithms. An example of this technology is Google Voice Search.

Music search engine: Most applications that search for music work on simple metadata (artist, name of track, album…). There are also some music-recognition programs, for example Shazam or SoundHound.

Image Search – Video Search – Audio Search:

Searching

To search for media with the Shutterstock API, you need:

An account at https://www.shutterstock.com

An application at https://www.shutterstock.com/account/developers/apps

One of these types of authentication:

   o Basic authentication: You provide the client ID and client secret with each API request.

   o OAuth authentication: You use the client ID and client secret to get an authentication token and use this token in each request. In the examples on this page, the access token is in the SHUTTERSTOCK_API_TOKEN environment variable. For more information about authentication, see Authentication.

Some API subscriptions return a limited set of results. See Subscriptions in the API reference.

Keyword searches

To search for media with a keyword, pass the search keywords to the appropriate endpoint:

Images: GET https://api.shutterstock.com/v2/images/search

Video: GET https://api.shutterstock.com/v2/videos/search

Audio: GET https://api.shutterstock.com/v2/audio/search

The search keywords must be URL encoded and passed in the query parameter named query. The API searches for the keywords in all textual fields, including but not limited to the description, keywords, and title. For examples of search results, see Results.

Searches do not support wildcards such as *.
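For readers working outside the shell, the same keyword request can be assembled with Python's standard library. This sketch only builds the URL and the request object (it does not contact the API); the helper names search_url and build_request are hypothetical, and the token handling mirrors the SHUTTERSTOCK_API_TOKEN convention used in the cURL examples.

```python
import os
from urllib.parse import quote, urlencode
from urllib.request import Request

BASE_URL = "https://api.shutterstock.com/v2"
SEARCH_PATHS = {"images": "/images/search", "video": "/videos/search", "audio": "/audio/search"}


def search_url(media, keywords):
    """Build the GET URL with the keywords URL-encoded in the query parameter."""
    if "*" in keywords:
        raise ValueError("wildcards such as * are not supported")
    # quote_via=quote encodes spaces as %20 rather than +.
    return BASE_URL + SEARCH_PATHS[media] + "?" + urlencode({"query": keywords}, quote_via=quote)


def build_request(url, token=None):
    """Attach the bearer token (read from SHUTTERSTOCK_API_TOKEN if not given)."""
    if token is None:
        token = os.environ.get("SHUTTERSTOCK_API_TOKEN", "")
    return Request(url, headers={"Authorization": f"Bearer {token}"})


url = search_url("video", "hot air balloon")
req = build_request(url, token="example-token")
# urllib.request.urlopen(req) would send the request; it is not called here.
```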

Here are some examples of simple image, video, and audio search requests:

Simple image keyword search

curl -X GET "https://api.shutterstock.com/v2/images/search" \
  -H "Authorization: Bearer $SHUTTERSTOCK_API_TOKEN" \
  -G \
  --data-urlencode "query=kites"

Simple video keyword search

curl -X GET "https://api.shutterstock.com/v2/videos/search" \
  -H "Authorization: Bearer $SHUTTERSTOCK_API_TOKEN" \
  -G \
  --data-urlencode "query=hot air balloon"

Simple audio keyword search

curl -X GET "https://api.shutterstock.com/v2/audio/search" \
  -H "Authorization: Bearer $SHUTTERSTOCK_API_TOKEN" \
  -G \
  --data-urlencode "query=bluegrass"

Conditional searches

Searches for images and video can use AND, OR, and NOT conditions, but searches for audio do not support these keywords.

To use AND, OR, or NOT in searches, include these operators in the query parameter named query. The operators must be in upper case and they must be in English, regardless of the language the keywords are in.

AND is added implicitly between each search keyword. Therefore, searching for dog AND cat is equivalent to searching for dog cat.

OR searches for results that include any of the specified keywords, such as dog OR cat OR mouse.

NOT searches exclude keywords from search results, such as dog NOT hot dog. You can also use NOT in the contributor search field.

You can group conditional search terms with parentheses. For example, to search for images with either dogs or cats, but not both, use (dog NOT cat) OR (cat NOT dog).
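The API evaluates these operators on its side. To make the semantics concrete, in particular that the grouped query above behaves like an exclusive OR, here is a local Python sketch over hypothetical items tagged with keywords; it is not how Shutterstock implements search.

```python
# Each predicate mirrors one query string; a set of tags stands in for an item's keywords.
QUERIES = {
    "dog cat": lambda t: "dog" in t and "cat" in t,                      # implicit AND
    "dog OR cat OR mouse": lambda t: bool({"dog", "cat", "mouse"} & t),  # any keyword
    "(dog NOT cat) OR (cat NOT dog)":                                     # one but not both
        lambda t: ("dog" in t and "cat" not in t) or ("cat" in t and "dog" not in t),
}

ITEMS = {
    "a": {"dog"},
    "b": {"cat"},
    "c": {"dog", "cat"},
    "d": {"mouse"},
}


def run(query):
    """Return the names of the items whose keyword set satisfies the query."""
    return sorted(name for name, tags in ITEMS.items() if QUERIES[query](tags))
```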

Here are some examples of searching with conditions:

curl -X GET "https://api.shutterstock.com/v2/images/search" \
  -H "Authorization: Bearer $SHUTTERSTOCK_API_TOKEN" \
  -G \
  --data-urlencode "query=dog AND cat"

curl -X GET "https://api.shutterstock.com/v2/videos/search" \
  -H "Authorization: Bearer $SHUTTERSTOCK_API_TOKEN" \
  -G \
  --data-urlencode "query=mountain NOT camping"

Bulk searches

You can run up to 5 image searches in a single request with the POST /v2/images/bulk_search endpoint. The API returns a maximum of 20 results for each search. To use this endpoint, pass multiple searches in the queries array in the request body. Each search in the queries array has the same parameters as an individual image search.

You can also pass query parameters in the request. These parameters become defaults for each search, but the parameters in the individual searches override them.

Here is an example of sending 2 searches in a single request:

DATA='[
  {
    "query": "cat",
    "license": ["editorial"],
    "sort": "popular"
  },
  {
    "query": "dog",
    "orientation": "horizontal"
  }
]'

curl -X POST "https://api.shutterstock.com/v2/images/bulk_search" \
  -H "Authorization: Bearer $SHUTTERSTOCK_API_TOKEN" \
  -H "Content-Type: application/json" \
  -d "$DATA"
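The override rule for bulk searches can be illustrated with a short Python sketch. The server applies the rule, not the client; effective_searches is a hypothetical helper that only shows which value each search ends up with, and the default sort value used here is just an example.

```python
import json

MAX_SEARCHES = 5   # the bulk endpoint accepts up to 5 image searches per request


def effective_searches(searches, defaults):
    """Show the documented precedence: request-level defaults, overridden per search."""
    if len(searches) > MAX_SEARCHES:
        raise ValueError(f"at most {MAX_SEARCHES} searches per request")
    return [{**defaults, **search} for search in searches]


searches = [
    {"query": "cat", "license": ["editorial"], "sort": "popular"},
    {"query": "dog", "orientation": "horizontal"},
]
merged = effective_searches(searches, {"sort": "newest"})
body = json.dumps(searches)   # the same array the DATA variable above carries
```

The cat search keeps its own sort value, while the dog search inherits the request-level default.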

GEOGRAPHIC INFORMATION SYSTEMS (GIS) SEARCH:

What We Do

Geographic Information Systems (GIS) is used to enhance decision making and provide maps and information to the public and County agencies, including:

Assessors Office

Community Development Resource Agency

County Executive Office

Elections

Facility Services

Health and Human Services

Public Works

Requests

For zoning information, please email our Public Counter Technicians.

For GIS/mapping requests (not zoning), please email the GIS Division.

Services & Information

Placer County Open GIS Data: Web mapping applications and GIS data downloads.

This is the place to find Placer County GIS data that you can download or incorporate into existing ArcGIS projects. Here you can also find web apps that include active building permits, code compliance cases, and planning projects, among others.

Interactive GIS/Parcel Data Search: Search by Assessor's Parcel Number or address for zoning, parcel-related information, aerial photography, etc.

Assessment Inquiry Website: Data from the County Assessment Roll (assessed values, assessment maps, etc.).

Current Permit Information: Check permit status, permit activity, project descriptions, and other permit-related information.

Permit Research Tools: Various tools for conducting land-use-related permit research.

Multilingual Searches:

Multilingual meta-search engines: These search engines aggregate results from multiple search engines, each specialized in a particular language or region. They give users a unified interface for searching across different engines and retrieving multilingual results from various sources.
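The aggregation step of a meta-search engine can be sketched as follows. The "engines" are stand-in functions that return canned results, one per language, and the round-robin interleaving and URL de-duplication are assumed merge rules chosen for illustration. A real system would call each engine's own API and would usually translate the query first.

```python
# Hypothetical sketch of a multilingual meta-search: the "engines" below are
# stand-in functions returning canned (url, title) hits, one per language.
from itertools import zip_longest

def search_fr(query):
    return [("https://example.fr/a", f"{query} (fr) A"),
            ("https://example.org/shared", "shared")]

def search_de(query):
    return [("https://example.de/a", f"{query} (de) A"),
            ("https://example.org/shared", "shared")]

def meta_search(query, engines):
    """Interleave results from each engine round-robin, dropping duplicate URLs."""
    per_engine = [engine(query) for engine in engines]
    seen, merged = set(), []
    for row in zip_longest(*per_engine):  # one result from each engine per round
        for hit in row:
            if hit is not None and hit[0] not in seen:
                seen.add(hit[0])
                merged.append(hit)
    return merged

for url, title in meta_search("cat", [search_fr, search_de]):
    print(url, title)
```

Interleaving keeps any single language from dominating the top of the unified list. The shared URL appears only once.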

A Moroccan immigrant who speaks some French and German in addition to his Arabic dialect is multilingual, as is the conference interpreter who confidently uses her three native or first languages, English, German and French, as working languages.

The Main Benefits of Multilingualism

Cognitive Benefits. Learning and knowing several languages sharpens the mind and improves memory. ...

Improvement of Ability to Multitask. Being able to speak in multiple languages allows you to perform better in many ways. ...

Improvement of Communication Skills.

DATA VISUALIZATION:

Data visualization is the graphical representation of information and data. By using visual elements like charts, graphs, and maps, data visualization tools provide an accessible way to see and understand trends, outliers, and patterns in data. Additionally, it provides an excellent way for employees or business owners to present data to non-technical audiences without confusion.

In the world of Big Data, data visualization tools and technologies are essential to analyze massive amounts of information and make data-driven decisions.

What are the advantages and disadvantages of data visualization?

Something as simple as presenting data in graphic format may seem to have no downsides. But sometimes data can be misrepresented or misinterpreted when placed in the wrong style of data visualization. When choosing to create a data visualization, it’s best to keep both the advantages and disadvantages in mind.

Advantages

Our eyes are drawn to colors and patterns. We can quickly tell red from blue, and squares from circles. Our culture is visual, including everything from art and advertisements to TV and movies. Data visualization is another form of visual art that grabs our interest and keeps our eyes on the message. When we see a chart, we quickly see trends and outliers. If we can see something, we internalize it quickly. It’s storytelling with a purpose. If you’ve ever stared at a massive spreadsheet of data and couldn’t see a trend, you know how much more effective a visualization can be.

Some other advantages of data visualization include:

Easily sharing information.

Interactively exploring opportunities.

Visualizing patterns and relationships.

Disadvantages

While there are many advantages, some of the disadvantages may be less obvious. For example, when viewing a visualization with many different data points, it’s easy to make an inaccurate assumption. Or sometimes the visualization is simply designed poorly, so that it’s biased or confusing.

Some other disadvantages include:

Biased or inaccurate information.

Correlation doesn’t always mean causation.

Core messages can get lost in translation.

Why data visualization is important

The importance of data visualization is simple: it helps people see, interact with, and better understand data. Whether simple or complex, the right visualization can get everyone on the same page, regardless of their level of expertise.

It’s hard to think of a professional industry that doesn’t benefit from making data more understandable. Every STEM field benefits from understanding data, and so do fields in government, finance, marketing, history, consumer goods, service industries, education, sports, and so on.

While Tableau, whose website this passage comes from, will always wax poetic about data visualization, there are practical, real-life applications that are undeniable. And since visualization is so pervasive, it’s also one of the most useful professional skills to develop. The better you can convey your points visually, whether in a dashboard or a slide deck, the better you can leverage that information. The concept of the citizen data scientist is on the rise. Skill sets are changing to accommodate a data-driven world. It is increasingly valuable for professionals to be able to use data to make decisions and to use visuals to tell stories about how data informs the who, what, when, where, and how.

While traditional education typically draws a distinct line between creative storytelling and technical analysis, the modern professional world also values those who can cross between the two: data visualization sits right in the middle of analysis and visual storytelling.

TASKS IN DATA VISUALIZATION:

Common roles for data visualization include:

showing change over time.

showing a part-to-whole composition.

looking at how data is distributed.

comparing values between groups.

observing relationships between variables.

looking at geographical data.
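As a quick reference, the roles above can be paired with the chart types that later sections of these notes recommend for them: line graphs for trends over time, pie or doughnut charts for part-to-whole, bar charts as histograms for distributions, bar charts for comparing groups, scatter plots for relationships, and choropleth maps for geospatial data. This is an illustrative lookup only. The task labels and fallback message are assumptions, and the right choice still depends on the data.

```python
# Illustrative lookup from each role above to a chart type recommended
# elsewhere in these notes. A starting point, not a rule.
CHART_FOR_TASK = {
    "change over time": "line chart",
    "part-to-whole composition": "pie or doughnut chart",
    "distribution": "histogram (bar chart with bucketed X-axis)",
    "comparing groups": "bar chart",
    "relationships between variables": "scatter plot",
    "geographical data": "choropleth map",
}

def suggest_chart(task):
    """Return a default chart type for a task, or a prompt to look at the data."""
    return CHART_FOR_TASK.get(task, "no default; inspect the data first")

print(suggest_chart("comparing groups"))  # bar chart
```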

Task-based effectiveness of basic visualizations

This is a summary of a recent paper on an age-old topic: what visualisation should I use? No prizes for guessing “it depends!” Is this the paper to finally settle the age-old debate surrounding pie charts?

Saket et al., “Task-based effectiveness of basic visualizations,” IEEE Transactions on Visualization and Computer Graphics, 2019.


…the effectiveness of a visualization depends on several factors including task at the hand, and data attributes and datasets visualized.

Saket et al. look at five of the most basic visualisations (bar charts, line charts, pie charts, scatterplots, and tables) and study their effectiveness when presenting modest amounts of data (fewer than 50 visual marks) across 10 different tasks. The task taxonomy comes from the work of Amar et al., which describes a set of ten low-level analysis tasks that capture users’ activities while using visualization tools:

1. Finding anomalies

2. Finding clusters (counting the number of groups with similar data attribute values)

3. Finding correlations (determining whether or not there is a correlation between two data attributes)

4. Computing derived values (for example, computing an aggregate value)

5. Characterising distributions (for example, figuring out what percentage of data points have a value over a certain threshold)

6. Finding extremes (i.e., min and max)

7. Filtering (finding data points that satisfy a condition)

8. Ordering (ranking data points according to some metric)

9. Determining a range (finding the span of values, which is straightforward once you can find the extremes in task 6)

10. Retrieving a value (identifying the values of attributes for given points)
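Several of these low-level tasks reduce to one-line computations when the data is small. This is a minimal sketch on a made-up dataset of daily sales. The values are illustrative and are not taken from Saket et al. It shows what a chart reader is effectively computing for tasks 4 through 10.

```python
# Minimal sketch of several low-level analysis tasks on a small, made-up
# dataset (illustrative values, not from Saket et al.).
from statistics import mean

sales = {"Mon": 12, "Tue": 30, "Wed": 7, "Thu": 30, "Fri": 21}

# 4. Computing a derived value: an aggregate (the mean)
average = mean(list(sales.values()))

# 5. Characterising a distribution: share of points above a threshold
share_over_20 = sum(v > 20 for v in sales.values()) / len(sales)

# 6. Finding extremes
lowest = min(sales, key=sales.get)
highest_value = max(sales.values())

# 7. Filtering: points that satisfy a condition
busy_days = [day for day, v in sales.items() if v >= 20]

# 8. Ordering: rank points by value, largest first (ties keep input order)
ranking = sorted(sales, key=sales.get, reverse=True)

# 9. Determining a range: the span between the extremes
value_range = highest_value - min(sales.values())

# 10. Retrieving a value for a given point
wednesday = sales["Wed"]

print(average, share_over_20, lowest, busy_days, ranking, value_range, wednesday)
```

Tasks 1 to 3 (anomalies, clusters, correlations) need a statistical model or a visual judgment, so they are left out of this sketch.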

DATA & VIEW SPECIFICATION:

These controls enable users to selectively visualize the data, to filter out unrelated information to focus on relevant items, and to sort information to expose patterns.

Data and View Specification involves determining which data is to be shown and visualized with programs such as Microsoft Excel. It then involves filtering the data, which shifts the focus among different data subsets to isolate specific categories of values. Sorting the data can surface trends and clusters and organize data according to a unit of analysis. The following image shows a more complex form of a matrix-based visualization of a social network.

The first matrix plot shows a social network when people are sorted alphabetically. The second plot shows a reordering by node degree, resulting in more structure, and the third plot is permuted by network connectivity, showing underlying clusters of communities. The final step is to derive new attributes from existing values when input data is insufficient.
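The reordering in the matrix example can be sketched in a few lines. The sketch uses a made-up friendship network and builds the same adjacency matrix twice, once with people in alphabetical order and once sorted by node degree. It assumes an undirected network and does not attempt the connectivity-based permutation from the third plot, which needs a clustering step.

```python
# Minimal sketch of reordering a matrix view of a social network: the same
# adjacency matrix with rows/columns sorted alphabetically vs. by node degree.
# Names and friendships are made up; the network is treated as undirected.
edges = [("Ann", "Dan"), ("Bob", "Dan"), ("Cat", "Dan"), ("Ann", "Bob")]
people = sorted({p for e in edges for p in e})  # alphabetical order

degree = {p: 0 for p in people}
for a, b in edges:
    degree[a] += 1
    degree[b] += 1

# Highest-degree nodes first; ties keep alphabetical order (sort is stable)
by_degree = sorted(people, key=lambda p: degree[p], reverse=True)

def adjacency(order):
    """Return the 0/1 adjacency matrix with rows and columns in `order`."""
    linked = {frozenset(e) for e in edges}
    return [[int(frozenset((r, c)) in linked) for c in order] for r in order]

print(by_degree)
for row in adjacency(by_degree):
    print(row)
```

After sorting by degree, the best-connected person (Dan) fills the first row and column, which is the "more structure" the second plot shows.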

View Manipulation:

The second dynamic is View Manipulation, which consists of highlighting patterns, investigating hypotheses, and revealing additional details. Selection allows for pointing to an item of interest, for example, dragging along axes to "create interactive selections that highlight automobiles with low weight and high mileage." Navigating is determined by where the analyst begins, such as in a crime map that depicts crime activity by time and region. Coordinating allows the analyst to see multiple coordinated views at once, which can facilitate comparison. This can be done with histograms, maps, or network diagrams. The following image shows a complex patchwork of interlinked tables, plots, and maps used to analyze outcomes of elections in Michigan.

The image shows a combination of tables, plots, and maps. The final step, organization, involves arranging visualization views, legends, and controls to simplify viewing.
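Selection and coordination work together in the automobile example. A brush over the weight and mileage axes produces one selection, and every coordinated view highlights the same items. This is a minimal sketch with made-up car data. The text-based `render` function is a hypothetical stand-in for a real chart.

```python
# Minimal sketch of selection with coordinated views ("brushing and linking"):
# one brush over weight and mileage produces a selection set, and every view
# highlights the same cars. Car data and view names are made up.
cars = {
    "A": {"weight": 1100, "mpg": 42},
    "B": {"weight": 1900, "mpg": 24},
    "C": {"weight": 1250, "mpg": 38},
    "D": {"weight": 1500, "mpg": 30},
}

def brush(data, max_weight, min_mpg):
    """Select cars with low weight and high mileage (an axis-range brush)."""
    return {name for name, c in data.items()
            if c["weight"] <= max_weight and c["mpg"] >= min_mpg}

def render(view_name, data, selected):
    """Stand-in for a chart: mark selected items with '*'."""
    return [f"{view_name}:{name}{'*' if name in selected else ''}" for name in sorted(data)]

selection = brush(cars, max_weight=1300, min_mpg=35)
# Both coordinated views read the same selection, so they stay in sync
print(render("scatter", cars, selection))
print(render("table", cars, selection))
```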

Process and Provenance:

The final dynamic is Process and Provenance, which involves the actual interpretation of data. Recording involves chronicling and visualizing analysts' interaction histories in both chronological and sequential fashion. Annotation includes recording, organizing, and communicating insights gained during analysis. Sharing involves accumulating multiple analyses and interpretations from several people and disseminating the results. Guiding is the final step and includes developing new strategies to guide newcomers.
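Recording and annotation can be sketched as an ordered log of interactions with insights attached to individual steps. This is a minimal sketch. The `History` and `Step` classes, the action names, and the sample note are all hypothetical, and real visual-analysis tools keep far richer state, such as view parameters and undo/redo branches.

```python
# Minimal sketch of recording and annotating an analyst's interaction history.
# Class names, actions, and the sample note are hypothetical.
from dataclasses import dataclass, field

@dataclass
class Step:
    action: str                                # e.g. "filter", "sort"
    detail: str
    notes: list = field(default_factory=list)  # annotations (insights)

@dataclass
class History:
    steps: list = field(default_factory=list)

    def record(self, action, detail):
        """Append an interaction in the order it happened; return its index."""
        self.steps.append(Step(action, detail))
        return len(self.steps) - 1

    def annotate(self, index, note):
        """Attach an insight to a recorded step so it can be shared later."""
        self.steps[index].notes.append(note)

    def summary(self):
        """Chronological, shareable list of steps with their notes."""
        lines = []
        for i, s in enumerate(self.steps):
            suffix = f" [{'; '.join(s.notes)}]" if s.notes else ""
            lines.append(f"{i + 1}. {s.action}: {s.detail}{suffix}")
        return lines

history = History()
history.record("filter", "region == 'North'")
i = history.record("sort", "by revenue, descending")
history.annotate(i, "top 3 stores drive most revenue")
print("\n".join(history.summary()))
```

The summary doubles as a sharing format. A newcomer can replay the steps in order, which touches on the guiding role described above.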

Visualization by Data Type:

Visualizing data is crucial to ensure that all the data you collect translates into decisions that amplify your business growth. Here are five data visualizations that are commonly used by companies across the world.

Common Types of Data Visualizations

1. Bar Chart

2. Doughnut Chart or Pie Chart

3. Line Graph or Line Chart

4. Pivot Table

5. Scatter Plot

1. Bar Chart

Bar charts or column charts have rectangular bars arranged along the X- or Y-axis. Bar charts, which compare objects by aligning them against the same parameters, are the most popular visualization out there. Bar charts can be used to track changes over time. However, bar graphs used for time series work well only when the changes are considerably large. There are different categories of bar graphs, like stacked bar graphs, 100% stacked bar graphs, grouped bar graphs, box plots, and waterfall charts (advanced bar graphs).

Bar charts are most suitable for:

Comparing a numerical value across categories

Identifying the order within a category

Representing a histogram (where the values on the X-axis are grouped into buckets)

Use Case: You can use a bar chart for a visual representation of your overall business revenue compared with your peers'. If you want to compare the individual split of product-wise revenue, your best choice would be a stacked or grouped bar chart.
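The histogram case works by first grouping raw values into buckets and then drawing one bar per bucket. This is a minimal sketch, assuming equal-width buckets and made-up order sizes, with each bar drawn as text instead of a real chart.

```python
# Minimal sketch of the histogram case: group raw values into equal-width
# buckets on the X-axis, then draw each bucket's count as a text bar.
# Bucket width and sample values are illustrative assumptions.
from collections import Counter

def histogram(values, width):
    """Count values per bucket; bucket k covers [k*width, (k+1)*width)."""
    counts = Counter(v // width for v in values)
    # Include empty buckets between the min and max so the axis has no gaps
    return {k * width: counts[k] for k in range(min(counts), max(counts) + 1)}

WIDTH = 10
order_sizes = [3, 7, 12, 14, 15, 21, 22, 38]
for start, count in histogram(order_sizes, WIDTH).items():
    print(f"{start:>3}-{start + WIDTH - 1:<3} {'#' * count}")
```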

2. Doughnut Chart or Pie Chart

A doughnut chart slices a doughnut into multiple parts based on the field value. Doughnut charts and pie charts are suitable for depicting part-to-whole relationships, where all units together represent 100%. Drill-down doughnut charts are interactive and help users decipher complex data to get to the source of the issue or the solution.

Use Case: You can analyze your budget using a doughnut chart. Splitting the total budget across your expenses, investments, loan, savings, etc. would give you an instant understanding of your budget plan.
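The part-to-whole arithmetic behind this use case is simple: each category's share is its amount divided by the total, which gives both its percentage and its slice angle out of 360 degrees. This is a minimal sketch with made-up budget figures.

```python
# Minimal sketch of the arithmetic behind a doughnut/pie chart: each
# category's share of the total, as a percentage and as a slice angle.
# The budget figures are made up for illustration.
budget = {"expenses": 2000, "investments": 1000, "loan": 500, "savings": 500}

total = sum(budget.values())
slices = {
    name: (round(100 * amount / total, 1), 360 * amount / total)
    for name, amount in budget.items()
}
for name, (percent, angle) in slices.items():
    print(f"{name:<12}{percent:>6}%  {angle:6.1f} deg")
```

The slices always sum to 100% and 360 degrees. For this reason, a pie or doughnut chart only fits data whose parts really do make up a single whole.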

3. Line Graph or Line Chart

Line graphs have values plotted as lines across the X- and Y-axes. They are used to track changes over a short or long time frame. Line graphs are better than bar graphs particularly when the changes are minor. You also have the multi-line graph option when you need to compare changes over the same time period for more than one attribute.

Line graphs are best used to:

Display trend over a time series

Pinpoint outliers

Visualize forecasted data

Use Case: Say you want to analyze your business's month-wise expenditure. A line graph will give you the best rendition by plotting the months on the X-axis and expenditure on the Y-axis.
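Preparing this series takes two steps: order the months along the X-axis, then flag the points that would stand out as outliers on the line. This is a minimal sketch with made-up figures. It uses the common 1.5 x IQR rule (Tukey's fences), which is an assumption on my part because the notes do not name a specific outlier rule.

```python
# Minimal sketch of preparing month-wise expenditure for a line graph and
# pinpointing outliers with the 1.5 x IQR rule (an assumed convention).
# The figures are made up for illustration.
from statistics import quantiles

expenditure = {"Jan": 410, "Feb": 395, "Mar": 420, "Apr": 980, "May": 405, "Jun": 415}

# X-axis: months in calendar order (dict insertion order); Y-axis: expenditure
xs = list(expenditure)
ys = [expenditure[m] for m in xs]

q1, _, q3 = quantiles(ys, n=4)  # quartile cut points
iqr = q3 - q1
outliers = [m for m, y in zip(xs, ys) if y < q1 - 1.5 * iqr or y > q3 + 1.5 * iqr]
print(outliers)
```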

4. Pivot Table

A pivot table, as the name indicates, has columns and rows with aggregated values populated in the cells. The pivot table is the most straightforward visualization that can be used to convey a huge amount of data at a single glance. It is easy to build and flexible to modify. However, unlike the other infographic visualizations discussed here, tables are not graphical and hence should be used only in specific cases:

When you want to compare different, unrelated metrics

When there are relatively few rows (display of data at the top level as opposed to the granular level)

Use Case: Financial reports are generally presented as tables. Putting the years on the rows and operating cash flow, investing cash flow, cash from financing, and other metrics on the columns will help you understand your business’s cash flow over the years.
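At its core, a pivot table groups flat records by a row key and a column key and aggregates the values in each cell. This is a minimal sketch of the cash-flow use case with made-up figures. It uses a sum as the aggregate, which is an assumption because pivot tables can use other aggregates.

```python
# Minimal sketch of what a pivot table does: aggregate flat records into a
# grid with one row per year and one column per metric. Figures are made up.
from collections import defaultdict

records = [
    (2022, "operating", 120), (2022, "investing", -40), (2022, "financing", -10),
    (2023, "operating", 150), (2023, "investing", -60), (2023, "operating", 5),
]

def pivot(rows):
    """Sum values into {year: {metric: total}} (the cells of the table)."""
    table = defaultdict(lambda: defaultdict(int))
    for year, metric, value in rows:
        table[year][metric] += value
    return table

table = pivot(records)
metrics = ["operating", "investing", "financing"]
print("year", *metrics, sep="\t")
for year in sorted(table):
    # Missing cells (e.g. 2023 financing) read as 0 via the defaultdict
    print(year, *(table[year][m] for m in metrics), sep="\t")
```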

5. Scatter Plot

A scatter plot shows the relationship between two numerical variables, with each item plotted as a point along both the X- and Y-axes. If you are a data scientist working with different sets of data, the scatter plot is something you commonly work with, but for a novice user it can be a little unfamiliar. Scatter plots are best suited to comparing two numerical values simultaneously. Segmentation charts and bubble charts are advanced versions of the scatter plot. The segmentation chart divides the scatter plot into four quadrants, making it easier for users to choose. The bubble chart adds a dimension to the chart by displaying bubbles of varied sizes over the scatter plot.

Use Case: You can present data for product price revision using a scatter plot by placing the number of units sold on the X-axis and the current price on the Y-axis, with each product plotted as a point. It will give you a clear view of the products that have a low price yet have sold a good deal, which can be considered for a price increase. Alternatively, you can consider a price drop for products that have a high price but are in low demand.
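The segmentation (four-quadrant) version of this use case can be sketched by splitting products at a cut-off on each axis. This is a minimal sketch, assuming made-up product data and median cut-offs. Any business-defined threshold could replace the medians.

```python
# Minimal sketch of a segmentation (four-quadrant) scatter plot for the
# pricing use case: split products at the median units sold and median price.
# Product data and the median split are assumptions for illustration.
from statistics import median

products = {  # name: (units_sold, price)
    "P1": (900, 5.0), "P2": (850, 20.0), "P3": (100, 25.0),
    "P4": (120, 4.0), "P5": (500, 10.0),
}

units_cut = median(u for u, _ in products.values())
price_cut = median(p for _, p in products.values())

def quadrant(units, price):
    """Label a point by which side of each cut-off it falls on."""
    demand = "high demand" if units > units_cut else "low demand"
    cost = "high price" if price > price_cut else "low price"
    return f"{demand}, {cost}"

raise_price = [n for n, (u, p) in products.items() if quadrant(u, p) == "high demand, low price"]
drop_price = [n for n, (u, p) in products.items() if quadrant(u, p) == "low demand, high price"]
print("consider a price increase:", raise_price)
print("consider a price drop:", drop_price)
```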

These types of charts, along with area charts, heat maps, and treemaps, are visualization techniques widely used by data analysts, marketers, and financial analysts across the world. However, there are specific visualizations that can be used to tackle the reporting needs of unique data sets. For instance, it would be ideal to use a choropleth (map chart), a tree diagram, and a radar chart for geospatial, conditional, and multivariable data points, respectively.

Choosing the perfect visualization from different types of data visualizations can be challenging, but with a basic understanding of these fundamental charts, your choice will be easier.

Challenges for Data Visualization:

Data visualization is a quick and simple technique for depicting complicated ideas graphically for improved comprehension and intuition. It must reveal diverse relationships and patterns concealed within massive amounts of data. We can use traditional graphical representations to organize and represent data. Still, it can be challenging to display, in real time, huge amounts of data that are very diverse, uncertain, and semi-structured or unstructured. Massive parallelization is required to handle and visualize such dynamic data. This section covers the major challenges faced by big data visualization and their solutions.

Content

Data Quality

Accuracy

Completeness

Consistency

Format

Integrity

Timeliness

Not Choosing the Right Data Visualization Tools

Confusing Color Palette

Analytical & Technical Challenges

Data Quality

Data quality plays a crucial role in determining the effectiveness of data. Not all data is created in the same way. Each dataset has a different origin, hence its heterogeneity.

No matter how powerful and comprehensive the big data tools at the organization’s disposal are, insufficient or incomplete data can often lead data scientists to conclusions that may not be entirely correct and, therefore, could negatively impact business.

The effectiveness of big data in analysis depends on the accuracy, consistency, relevance, completeness, and timeliness of the data used. Without these factors, the data analysis ceases to be reliable.

Accuracy

The most important questions are: How accurate is the data? How much can you trust it? Is there certainty about the collection of relevant data? The values in each field of the database must be correct and accurately represent “real world” values.

Example: A registered address must be a real address. Names must be spelled correctly.

Completeness

The data must contain all the necessary information and be easily understandable by the user.

Example: If the first and last name are required in a form, but the middle name is optional, the form can be considered complete even if the middle name is not entered.
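The completeness rule from the form example can be sketched as a required-fields check: first and last name must be present and non-blank, while the middle name may be empty. The field names are hypothetical.

```python
# Minimal sketch of a completeness check for the form example: first and last
# name are required, middle name is optional. Field names are hypothetical.
REQUIRED = ("first_name", "last_name")

def missing_fields(record):
    """Return the required fields that are absent or blank."""
    return [f for f in REQUIRED if not str(record.get(f, "")).strip()]

people = [
    {"first_name": "Ana", "middle_name": "", "last_name": "Silva"},
    {"first_name": "Raj", "last_name": " "},
]
for person in people:
    gaps = missing_fields(person)
    print("complete" if not gaps else f"incomplete: missing {gaps}")
```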

Consistency

The data must be the same throughout the organization and in all systems.

Example: The data of a sale registered in the CRM of a company must match the data registered in the accounting dashboard that you manage.
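The CRM-versus-accounting example can be checked by joining the two systems on a shared key and reporting any disagreement. This is a minimal sketch, assuming both systems share a sale ID and store a single amount per sale. The IDs and amounts are made up.

```python
# Minimal sketch of a consistency check: compare sales recorded in a CRM with
# the same sales in accounting, keyed by a shared (hypothetical) sale ID.
crm = {"S1": 250.0, "S2": 99.0, "S3": 40.0}
accounting = {"S1": 250.0, "S2": 90.0, "S4": 15.0}

def inconsistencies(a, b):
    """Report IDs missing from either system and IDs whose amounts differ."""
    report = {}
    for sale_id in sorted(a.keys() | b.keys()):
        if sale_id not in a or sale_id not in b:
            report[sale_id] = "missing in one system"
        elif a[sale_id] != b[sale_id]:
            report[sale_id] = f"amount mismatch: {a[sale_id]} vs {b[sale_id]}"
    return report

for sale_id, problem in inconsistencies(crm, accounting).items():
    print(sale_id, problem)
```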

Format

The data must meet certain standards of type, format, size, etc.

Example: All dates must follow the format DD/MM/YY, or whichever format you prefer. Names should contain only letters, with no numbers or symbols.
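Both example format rules can be enforced in code: dates written exactly as DD/MM/YY that are also real calendar dates, and names made only of letters. This is a minimal sketch that mirrors the rules as stated. Real names often contain spaces, hyphens, or apostrophes, so a production rule would likely be looser.

```python
# Minimal sketch of format checks for the example rules: dates in DD/MM/YY
# and names made only of letters (rules mirrored from the text).
import re
from datetime import datetime

DATE_PATTERN = re.compile(r"\d{2}/\d{2}/\d{2}")  # exactly two digits per part

def valid_date(text):
    """True if text is written as DD/MM/YY and is a real calendar date."""
    if not DATE_PATTERN.fullmatch(text):
        return False
    try:
        datetime.strptime(text, "%d/%m/%y")  # rejects e.g. month 31 or 30 Feb
        return True
    except ValueError:
        return False

def valid_name(text):
    """True if the name is non-empty and contains letters only."""
    return text.isalpha()

print(valid_date("31/12/23"), valid_date("12/31/23"), valid_date("1/2/23"))
print(valid_name("Maria"), valid_name("M4ria"))
```

The regular expression enforces the two-digit layout. `strptime` alone would also accept single-digit days and months.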

Integrity

The data must be valid, which means that there are registered relationships connecting all the data. Keep in mind that unlinked records could introduce duplicate entries into your system.

Example: If you have an address registered in your database, but it is not linked to any individual, business, or other entity, it is invalid.
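The address example corresponds to an orphan-record check: collect every address ID that some entity references, then report the addresses that nobody references. The table layouts and IDs are hypothetical.

```python
# Minimal sketch of an integrity check: find addresses that are not linked to
# any individual or business. Table layouts and IDs are hypothetical.
addresses = {"A1": "12 Oak St", "A2": "5 Elm Rd", "A3": "9 Pine Ave"}
people = [{"id": "P1", "address_id": "A1"}]
businesses = [{"id": "B1", "address_id": "A3"}]

def orphan_addresses(addresses, *entity_tables):
    """Return address IDs that no entity references (invalid under this rule)."""
    linked = {row["address_id"] for table in entity_tables for row in table}
    return sorted(set(addresses) - linked)

print(orphan_addresses(addresses, people, businesses))
```

In a relational database, a foreign-key constraint enforces the reverse direction (no entity can point at a missing address). An orphan check like this one covers records that nothing points to.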

Timeliness

Data must be available when the user expects and needs it.

Example: A hospital must track the time of patient care events in the ICU or emergency room to assist doctors and nurses.

Not Choosing the Right Data Visualization Tools

The selection of big data tools often focuses on the technical plane, leaving aside everything that is not directly related to the analysis. Acting this way means ending up with solutions whose visualization potential is narrower.

When this happens, the consequences do not take long to appear:

Causing difficulties in understanding the data.

Reducing agility in the process of extracting and sharing knowledge within the organization.

Increasing latency in taking action.

Possibly even misdirecting decision-making, which then loses effectiveness.

Many data visualization tools, such as Tableau, Microsoft Power BI, Looker, Sisense, and Qlik, offer data visualization integration capabilities. If your organization already uses one of these tools, start there. If not, try one. Once you select a tool, you’ll need to build a series of prototypes to validate capabilities, ease of use, and operational considerations.

Here is a detailed list of considerations:

Do the chart types meet the business needs?

How easy is it to integrate?

What are the flexibilities in device design and compatibility?

Is the security configurable for the required end user/consumer rights?

Is it performing fast enough to integrate into an application?

Are the platform’s costs and pricing models aligned?