Notes on Algorithms

Kevin Sun

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

1 Array Algorithms

1.1 Max in Array .

1.2 Two Sum . . .

1.3 Binary Search .

1.4 Selection Sort .

1.5 Merge Sort . . .

1.6 Exercises . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

2 Basic Graph Algorithms

2.1 Breadth-First Search . .

2.2 Depth-First Search . . .

2.3 Minimum Spanning Tree

2.4 Exercises . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

3 Greedy Algorithms

3.1 Selecting Compatible Intervals

3.2 Fractional Knapsack . . . . .

3.3 Maximum Spacing Clusterings

3.4 Stable Matching . . . . . . . .

3.5 Exercises . . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

3

.

.

.

.

.

.

4

4

5

6

7

8

11

.

.

.

.

13

13

14

15

18

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

21

21

22

23

23

26

4 Dynamic Programming

4.1 Sum of Array . . . . . . . . . .

4.2 Longest Increasing Subsequence

4.3 Knapsack . . . . . . . . . . . .

4.4 Edit Distance . . . . . . . . . .

4.5 Independent Set in Trees . . . .

4.6 Exercises . . . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

28

28

28

29

30

31

33

.

.

.

.

.

35

35

36

36

37

39

5 Shortest Paths

5.1 Paths in a DAG . . .

5.2 Dijkstra’s algorithm .

5.3 Bellman-Ford . . . .

5.4 Floyd-Warshall . . .

5.5 Exercises . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

1

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

6 Flows and Cuts

6.1 Ford-Fulkerson . . . . .

6.2 Bipartite Matching . . .

6.3 Vertex Cover of Bipartite

6.4 Exercises . . . . . . . . .

7 Linear Programming

7.1 Basics of LPs . . .

7.2 Vertex Cover . . .

7.3 Zero-Sum Games .

7.4 Duality . . . . . . .

7.5 Exercises . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

. . . . .

. . . . .

Graphs

. . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

8 NP-Hardness

8.1 Basic Computational Complexity

8.2 3-SAT to Independent Set . . . .

8.3 Vertex Cover to Dominating Set .

8.4 Subset Sum to Scheduling . . . .

8.5 More Hard Problems . . . . . . .

8.6 Exercises . . . . . . . . . . . . . .

9 Approximation Algorithms

9.1 Vertex Cover . . . . . . .

9.2 Load Balancing . . . . . .

9.3 Metric k -Center . . . . . .

9.4 Maximum Cut . . . . . . .

9.5 Exercises . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

2

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

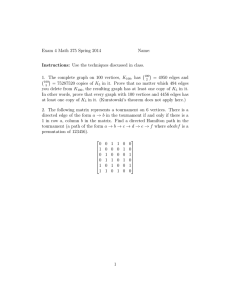

40

41

42

43

45

.

.

.

.

.

46

46

47

48

49

51

.

.

.

.

.

.

53

53

54

55

56

57

58

.

.

.

.

.

60

60

60

61

62

63

Preface

These notes are primarily written for those who are studying algorithms at a standard

undergraduate level. They assume familiarity with basic programming concepts (e.g., arrays,

loops), discrete math (e.g., graphs, proofs), and asymptotic notation. For a more thorough

treatment of the material, I recommend the following textbooks. I think they are all very

well written, and these notes draw heavily from them.

• Algorithms by Dasgupta, Papadimitriou, and Vazirani

• Algorithms by Erickson

• Algorithm Design by Kleinberg and Tardos

• Introduction to Algorithms by Cormen, Leiserson, Rivest, and Stein

Of course, there are many other wonderful textbooks and resources not listed above, and I

encourage you to seek them out. Here, each chapter starts with the main content and ends

with a list of exercises in roughly increasing order of difficulty.

I hope these notes teach you something, jog your memory about something you’ve learned

before, and/or inspire you to learn something new.

— Kevin Sun

June 2021

Last updated: August 2023

1 Array Algorithms

The problems in this chapter might look familiar, but we’ll examine them through the lens

of designing and analyzing algorithms (rather than writing executable code). In particular,

through these problems, we’ll explore two fundamental concepts used throughout theoretical computer science (TCS): correctness proofs and running time analysis. Note that we

generally index arrays starting at 1 (not 0).

1.1 Max in Array

Problem statement. Let A be an array of n integers. Our goal is to find the largest

integer in A.

Algorithm. We scan the elements of A, and whenever we encounter a value A[i] larger

than m (initially −∞), we set m to A[i]. After the scan, we return m.

Algorithm 1 Max in Array
Input: Array A of n integers
1: m ← −∞
2: for i ← 1, . . . , n do
3:     if A[i] > m then
4:         m ← A[i]
5: return m

Theorem 1.1. Algorithm 1 finds the largest integer in A.

Proof. Let i∗ denote the smallest index containing the largest integer in A. In Algorithm 1,
when i = i∗, m is either −∞ or A[j] for some j < i∗. Either way, A[i∗] > m, so m becomes
A[i∗]. In the rest of the scan, A[k] ≤ A[i∗] for all k from i∗ + 1 through n, so m remains
equal to A[i∗] and gets returned at the end.
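To see Algorithm 1 in executable form, here is a minimal Python sketch. It assumes a 0-indexed Python list in place of the 1-indexed array A and uses -math.inf for −∞; the function name max_in_array is an illustrative choice, not something defined in these notes.

```python
import math


def max_in_array(A):
    """Return the largest value in A by a single left-to-right scan."""
    m = -math.inf          # plays the role of m <- -infinity
    for x in A:            # visits A[1], ..., A[n] in order
        if x > m:          # found a value larger than the current m
            m = x
    return m


print(max_in_array([3, 1, 4, 1, 5, 9, 2, 6]))  # prints 9
```

On an empty list this sketch returns -math.inf, which mirrors the pseudocode rather than raising an error.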

The running time of an algorithm is the number of primitive operations that it makes

on a worst-case input (i.e., one that maximizes the number of such operations), in terms of

the input length. (An array containing n integers has length n.) Each of the following is a

primitive operation:

• Assigning a value to a variable (e.g., x ← 0)

• Performing a comparison (e.g., x > y)

• Performing an arithmetic operation (e.g., x + y)

• Indexing into an array (e.g., accessing A[3])

• Calling or returning from a method

Since asymptotic notation is ubiquitous in the field of algorithms, the exact number of

primitive operations does not matter, so it is not important to count extremely rigorously.

(For example, it does not matter exactly which operations are involved in a for-loop.) In

this chapter, we’ll count primitive operations somewhat more meticulously than we will in

later chapters.

Theorem 1.2. Algorithm 1 runs in O(n) time.

Proof. Line 1 is an assignment, which is 1 (primitive) operation. Line 2 assigns 1 to the

variable i and then increments it n − 1 times, so Line 2 contributes n operations over the course

of the algorithm. In the body of the loop, we do at most 4 primitive operations: index into

A, perform a comparison against m, index into A again, and set m to A[i]. Thus, Lines 3–4

contribute a total of at most 4n operations. Finally, returning m is a primitive operation.

Adding everything together, we see that the total number of primitive operations is at most

1 + n + 4n + 1 = 5n + 2 = O(n).

1.2 Two Sum

Problem statement. Let A be an array of n distinct integers sorted in increasing order,

and let t be an integer. Our goal is to find indices i, j such that i < j and A[i] + A[j] = t,

or report that no solution exists.

Algorithm. The algorithm repeats the following, starting with i = 1 and j = n: if

A[i] + A[j] = t, return (i, j). If A[i] + A[j] < t then increment i (which increases A[i]), and

if A[i] + A[j] > t then decrement j (which decreases A[j]). If we ever hit i = j, then return

0 to indicate that no solution exists.

Algorithm 2 Two Sum
Input: Sorted array A of n distinct integers, t
1: i, j ← 1, n
2: while i ≠ j do
3:     if A[i] + A[j] = t then
4:         return (i, j)
5:     if A[i] + A[j] < t then
6:         i ← i + 1
7:     else
8:         j ← j − 1
9: return 0

Theorem 1.3. Algorithm 2 correctly solves the Two Sum problem.

Proof. We need to show two things: (1) if the algorithm returns a solution (i, j), then

A[i] + A[j] = t, and (2) if the algorithm returns 0, then the input has no solution. Part (1) is

relatively simple: if the algorithm returns (i, j), then A[i] + A[j] = t because the algorithm

checks this equality immediately before returning (i, j).

Now let’s show (2). We’ll actually show the contrapositive: if the input has a solution,
then the algorithm returns a solution (i, j). Let (i∗, j∗) denote the solution with the smallest
value of i∗. If i∗ = 1 and j∗ = n, then the algorithm immediately returns (1, n). If not, then
i and j iteratively get closer to each other until one of the following cases occurs:

• i reaches i∗ before j reaches j∗: When i = i∗, A[i] + A[j] > A[i] + A[j∗] = t for all
j > j∗ since the integers in A are sorted and distinct. Thus, the algorithm decreases j
until j = j∗ while keeping i = i∗.

• j reaches j∗ before i reaches i∗ (similar to the previous case): When j = j∗, A[i] + A[j] <
t for all i < i∗, so the algorithm increases i until i = i∗ while keeping j = j∗.

In either case, the algorithm eventually tests if A[i∗] + A[j∗] = t, at which point it correctly
returns (i∗, j∗).
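The two-pointer scan of Algorithm 2 can be sketched in Python as follows. This is an illustration under a few assumptions: the list is 0-indexed internally but the result is reported with 1-indexed positions to match the notes, None stands in for the return value 0, and the loop condition is i < j (rather than i ≠ j) so that an empty list is handled. The name two_sum is hypothetical.

```python
def two_sum(A, t):
    """Two-pointer search on a sorted list of distinct integers.

    Returns a 1-indexed pair (i, j) with i < j and A[i] + A[j] = t,
    or None if no such pair exists.
    """
    i, j = 0, len(A) - 1               # 0-indexed versions of i = 1 and j = n
    while i < j:
        s = A[i] + A[j]
        if s == t:
            return (i + 1, j + 1)      # convert back to 1-indexed positions
        if s < t:
            i += 1                     # sum too small: increasing i increases A[i]
        else:
            j -= 1                     # sum too large: decreasing j decreases A[j]
    return None


print(two_sum([1, 2, 4, 7, 11], 9))    # prints (2, 4), since 2 + 7 = 9
```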

Theorem 1.4. Algorithm 2 runs in O(n) time.

Proof. Line 1 makes 2 primitive operations. In each iteration of the while loop, we perform

a comparison (i ≠ j); at most 2 more comparisons and 4 instances of indexing into A

(Lines 3 and 5); and at most one return, increment, or decrement (Lines 4, 6, 8). Since

the difference j − i is initially n − 1, and each iteration either decreases j − i by

1 or terminates the algorithm, the number of iterations is at most n − 1. Thus, Lines 2–8

contribute at most (1 + 6 + 1) · (n − 1) = 8n − 8 operations. Finally, Line 9 contributes at

most 1 operation. Adding everything together, we see that the total number of operations

is at most 2 + 8n − 8 + 1 = 8n − 5 = O(n).

1.3 Binary Search

Problem statement. The Search problem is the following: Let A be an array of n integers

sorted in non-decreasing order, and let t be an integer. Our goal is to find an index x such

that A[x] = t, or report that no such index exists.

Intuition. We start by checking if the middle element of A is t. If it’s too small, then

t must be in the right half of A (if it’s in A at all), so we recurse on that subarray; otherwise,

we recurse on the left half of A. We continue halving the size of the subarray until we find

t or determine it’s not in A. In the worst case, t ∉ A and the number of rounds is roughly

log₂ n, because that’s how many times we can halve n until we reach 1. Since each round

takes constant time, the overall running time is O(log n).

Algorithm. Starting with i = 1 (representing the left endpoint of the subarray under

consideration) and j = n (the right endpoint), we repeatedly calculate m = ⌊(i + j)/2⌋

and check if A[m] = t. If so, we return m; if A[m] < t, we set i to m + 1; else, we set j

to m − 1. If i > j at any point, we return 0, which signifies that t is not in A.

Theorem 1.5. Algorithm 3 correctly solves the Search problem.

Algorithm 3 Binary Search
Input: Sorted array A of n integers, t
1: i ← 1, j ← n
2: while i ≤ j do
3:     m ← ⌊(i + j)/2⌋
4:     if A[m] = t then
5:         return m
6:     if A[m] < t then
7:         i ← m + 1
8:     else
9:         j ← m − 1
10: return 0

Proof. We again prove two parts, where the first part is much simpler: (1) If the algorithm

returns some m, then it must satisfy A[m] = t. For part (2), we want to show that if t is in

A, then Algorithm 3 finds it.

The key idea is that in every iteration, the algorithm shrinks A[i : j] (the subarray of A

from index i through index j) while ensuring that t is still in the shrunken subarray. To see

this, notice that if A[m] < t, then t is in A[m + 1 : j] because A is sorted, and in this case,

the algorithm increases i to m + 1. (This is indeed an increase since the starting value of i is

⌊2i/2⌋ ≤ ⌊(i + j)/2⌋ = m.) Similarly, if A[m] > t, the algorithm decreases j to m − 1 while

maintaining that t is in A[i : j]. So in each iteration, the algorithm either finds t or shrinks

the size of A[i : j]. This continues until it returns some m or i = j, at which point m = i = j

so A[i : j] = A[m] = t.
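As an illustration of Algorithm 3, the following Python sketch keeps the notes' 1-indexed endpoints i and j and shifts by one only when reading from the 0-indexed Python list. Returning None instead of 0 and the name binary_search are assumptions made for this example.

```python
def binary_search(A, t):
    """Return a 1-indexed position x with A[x] = t, or None if t is not in A.

    A must be sorted in non-decreasing order.
    """
    i, j = 1, len(A)               # endpoints of the subarray A[i : j]
    while i <= j:
        m = (i + j) // 2           # floor of the midpoint
        if A[m - 1] == t:          # shift by one for Python's 0-indexed lists
            return m
        if A[m - 1] < t:
            i = m + 1              # t can only be to the right of m
        else:
            j = m - 1              # t can only be to the left of m
    return None


print(binary_search([2, 3, 5, 7, 11, 13], 7))  # prints 4
```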

Theorem 1.6. Algorithm 3 runs in O(log n) time.

Proof. At any point in the algorithm, the size of the subarray under consideration is j − i + 1

(initially n), and in the worst case, t is not in A so we iterate until i > j. Let d = j − i + 1

at the beginning of some iteration, and let d′ = j − i + 1 at the end of the iteration. If i

becomes m + 1, then we have

\[ d' = j - (m + 1) + 1 = j - \left\lfloor \frac{i + j}{2} \right\rfloor \le j - \frac{i + j}{2} + \frac{1}{2} = \frac{j - i + 1}{2} = \frac{d}{2}. \]

Similarly, if j becomes m − 1, then we have d′ = (m − 1) − i + 1 ≤ d/2. Either way, d′ ≤ d/2,
i.e., in each round, A[i : j] shrinks to at most half of its size at the beginning of the round.
Initially, A[i : j] = A has n elements, so after k rounds, A[i : j] has at most n/2^k elements.
This means if k > log₂ n, then A[i : j] has no elements (i.e., i > j), so the algorithm must
terminate at this point. In each round, the algorithm performs a constant number of operations
(e.g., 3 comparisons, at most 1 return, etc.), so the total number of operations is
O(log n).
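The halving argument can be checked numerically with a small sketch. The helper below (a hypothetical name, not from the notes) simulates the loop of Algorithm 3 when t is larger than every element, so that i always moves to m + 1, and counts the rounds; under that assumption the count should never exceed ⌊log₂ n⌋ + 1, which Python exposes as n.bit_length() for n ≥ 1.

```python
def binary_search_rounds(n):
    """Count loop iterations of binary search on n elements when t exceeds them all."""
    i, j, rounds = 1, n, 0
    while i <= j:
        m = (i + j) // 2
        i = m + 1                  # A[m] < t in every round, so only i moves
        rounds += 1
    return rounds


for n in (1, 2, 3, 4, 1000):
    # For n >= 1, n.bit_length() equals floor(log2(n)) + 1.
    print(n, binary_search_rounds(n), n.bit_length())
```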

1.4 Selection Sort

Problem statement. Let A be an array of n integers. Our goal is to sort the elements of

A (i.e., rearrange them such that A[1] ≤ A[2] ≤ · · · ≤ A[n]).

Algorithm. The algorithm proceeds in rounds, starting with i = 1. In each round of the

algorithm, we scan A[i] through A[n] to find the index j of the smallest element in this

subarray A[i : n]. Then we swap the values of A[i] and A[j], increment i, and repeat the

process until i reaches n.

Algorithm 4 Selection Sort
Input: Array A of n integers
1: for i ← 1, . . . , n do
2:     m ← i
3:     for j ← i + 1, . . . , n do
4:         if A[j] < A[m] then
5:             m ← j
6:     Swap A[i], A[m]
7: return A

Theorem 1.7. Algorithm 4 sorts the input.

Proof. Sorting A amounts to setting A[1] as the smallest element of A, A[2] the second

smallest element of A, and so on; this is precisely what the algorithm does.

We can proceed more formally by induction. Our claim is that at the end of round i,

A[1 : i] contains the i smallest elements of A in sorted order. The theorem follows from this

claim when i = n. Our base case is when i = 1, in which case the algorithm indeed sets A[1]

to be the smallest element of A. More generally, in Lines 2–5 of round i + 1, the algorithm

scans A[i + 1 : n] to find the smallest element of A[i + 1 : n], which is the (i + 1)-th smallest

element of A (since A[1 : i] contains i smaller elements). In Line 6, it places this element at

index i + 1. The result is that at the end of round i + 1, A[1 : i + 1] contains the i + 1 smallest

elements of A in sorted order, as desired.
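A direct Python rendering of Algorithm 4 might look like the sketch below. It assumes a 0-indexed list, sorts it in place, and returns it; the name selection_sort is illustrative.

```python
def selection_sort(A):
    """Sort the list A in place (and return it) by repeated minimum selection."""
    n = len(A)
    for i in range(n):                 # round i fixes position i (0-indexed)
        m = i                          # index of the smallest element seen in A[i:]
        for j in range(i + 1, n):
            if A[j] < A[m]:
                m = j
        A[i], A[m] = A[m], A[i]        # swap the minimum of A[i:] into position i
    return A


print(selection_sort([5, 2, 8, 1, 9, 3]))  # prints [1, 2, 3, 5, 8, 9]
```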

Theorem 1.8. Algorithm 4 runs in O(n²) time.

Proof. In round i, j iterates from i + 1 through n. In each of these n − i iterations, the

algorithm makes a constant number of operations (increment j, access A twice, perform a

comparison, etc.). Besides those, in round i, the algorithm performs a constant number of

operations (set m to i, access A twice, etc.). So the total number of operations in round i

is at most c(n − i) for some constant c. Summing over all i = 1, . . . , n, we see that the total

number of operations is at most

\[ c \sum_{i=1}^{n} (n - i) = c \cdot \left( n^2 - \sum_{i=1}^{n} i \right) = c \cdot \left( n^2 - \frac{n(n+1)}{2} \right) = c \cdot \frac{n^2 - n}{2} = O(n^2). \]

In fact, regardless of the values in A, Algorithm 4 makes at least c′(n − i) operations in round
i for some constant c′, so the running time is Θ(n²).

1.5 Merge Sort

Problem statement. Let A be an array of n integers. Our goal is to sort the elements of

A (i.e., rearrange them such that A[1] ≤ A[2] ≤ · · · ≤ A[n]).

Algorithm. The algorithm is recursive: if A only has 1 element, then we can simply return

A. Otherwise, we split A into its left and right halves, and recursively sort each half.

Then we perform the defining step of this algorithm: in linear time, we merge the two sorted

halves into a single sorted array.

Algorithm 5 Merge Sort
Input: Array A of n integers
1: if n = 1 then
2:     return A
3: k ← ⌊n/2⌋
4: AL, AR ← MergeSort(A[1 : k]), MergeSort(A[k + 1 : n])
5: Create empty list B
6: i, j ← 1, 1
7: while i ≤ k and j ≤ n − k do          ▷ Extend B by merging AL and AR
8:     if AL[i] ≤ AR[j] then
9:         Append AL[i] to B, i ← i + 1
10:     else
11:         Append AR[j] to B, j ← j + 1
12: if i > k then                          ▷ Append remaining elements
13:     Append each element of AR[j : ] to B
14: else
15:     Append each element of AL[i : ] to B
16: return B (as an array)

Theorem 1.9. Algorithm 5 sorts the input.

Proof. Since the algorithm is recursive, it is natural for us to proceed by induction. If n = 1,

then the algorithm correctly returns A as it is. Otherwise, suppose the algorithm sorts all

arrays of size less than n, so AL and AR are each sorted.

Consider the first iteration of Line 7: if AL[1] ≤ AR[1], then because AL and AR are
sorted, AL[1] must be the smallest element of A. Therefore, appending AL[1] as the first
element of B is correct. In general, if AL[i] ≤ AR[j], then among the elements of A that
haven’t been appended to B, AL[i] must be the smallest, so appending it to B (in Line 9)
is correct. (The same reasoning holds if AR[j] < AL[i].) If i exceeds k, then for the value
of j at that point, we must have AL[k] ≤ AR[j]. Thus, the subarray AR[j : ] contains all
remaining elements of A in sorted order, so appending them to B (in Line 13) is correct.
(The same reasoning holds if j exceeds n − k.)
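The recursion and the merge step of Algorithm 5 can be sketched in Python as below. The sketch assumes 0-indexed lists, returns a new list rather than modifying its input, and also accepts the empty list (a case the pseudocode does not address); the name merge_sort is illustrative. Slicing copies each half, which costs O(n) per call and so should not change the O(n log n) bound.

```python
def merge_sort(A):
    """Return a new sorted list containing the elements of A."""
    n = len(A)
    if n <= 1:                              # base case (also covers the empty list)
        return list(A)
    k = n // 2
    AL, AR = merge_sort(A[:k]), merge_sort(A[k:])
    B = []
    i = j = 0
    while i < len(AL) and j < len(AR):      # extend B by merging AL and AR
        if AL[i] <= AR[j]:
            B.append(AL[i])
            i += 1
        else:
            B.append(AR[j])
            j += 1
    B.extend(AL[i:])                        # at most one of these two
    B.extend(AR[j:])                        # slices is non-empty
    return B


print(merge_sort([5, 2, 8, 1, 9, 3, 2]))    # prints [1, 2, 2, 3, 5, 8, 9]
```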

Theorem 1.10. Algorithm 5 runs in O(n log n) time.

Proof. Let T(n) denote the running time of Algorithm 5 on an input of size n. In Line 4,
we call the algorithm itself on two inputs of size roughly n/2 each. (For simplicity, we are
assuming n/2 is always an integer, but the full analysis isn’t very different.) In each iteration of
Line 7, i + j increases by 1, and since i + j ≤ n, Lines 7–11 take O(n) time. Appending the
remaining elements of AR or AL also takes O(n) time. Putting this all together, we have

\[ T(n) = 2 \cdot T\!\left(\frac{n}{2}\right) + cn \]

for some constant c, and we can assume T(1) = k for some constant k ≥ c. We shall prove
T(n) ≤ kn(log n + 1) by induction, where the logarithm is of base 2. The base case is true
since T(1) = k ≤ k · 1 · (0 + 1). Now we proceed inductively:

\begin{align*}
T(n) &= 2 \cdot T\!\left(\frac{n}{2}\right) + cn \\
     &\le 2 \cdot \frac{kn}{2}\left(\log\frac{n}{2} + 1\right) + cn \\
     &\le kn \log n + kn \\
     &= kn(\log n + 1).
\end{align*}

If n ≥ 2, then 1 ≤ log n, so T(n) ≤ kn · (2 log n) = O(n log n), as desired.
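As a complementary sanity check (not a replacement for the induction), one can unroll the recurrence directly. The sketch below assumes n is a power of 2 and uses the same constants c and k as the proof, with T(1) = k and c ≤ k.

```latex
\begin{align*}
T(n) &= 2\,T(n/2) + cn \\
     &= 4\,T(n/4) + 2cn \\
     &= 2^{j}\,T(n/2^{j}) + j\,cn && \text{after } j \text{ levels of unrolling} \\
     &= n\,T(1) + cn\log_2 n     && \text{taking } j = \log_2 n \\
     &= kn + cn\log_2 n \;\le\; kn(\log_2 n + 1) && \text{since } c \le k.
\end{align*}
```

Each level of the recursion contributes cn in total, and there are log₂ n levels above the base cases, which is where the n log n term comes from.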

1.6 Exercises

Throughout these notes, whenever an exercise asks for an algorithm that solves some problem

in some amount of time, it is implicitly also asking for a proof of correctness and a running

time analysis.

1.1. Suppose we want to maximize the number of operations performed by Algorithm 1

(finding the max in an array). What kind of input would we give it?

1.2. What is a lower bound on the number of operations made by Algorithm 1? Your

answer should be in terms of n and as large as possible.

1.3. Run Algorithm 2 (Two Sum) on the input A = [1, 3, 4, 5, 6, 9] and t = 8, keeping track

of exactly how i and j change.

1.4. Run Algorithm 4 (Selection Sort) on the input A = [4, 1, 3, 5, 9, 6], printing the contents

of A at the end of each round.

1.5. Our solution to the Two Sum problem is considered a “two-pointer” approach rather

than “brute force.” Give an O(n²)-time, brute-force algorithm for the same problem.

What are the advantages and disadvantages of each approach?

1.6. Consider the Two Sum problem, but suppose the constraint is i ≤ j (rather than

i < j). How do the two-pointer algorithm and its analysis change, if at all?

1.7. What is the running time of Selection Sort if the input is already sorted in increasing

order? And if the input is sorted in decreasing order?

1.8. Let A be an array of n distinct, positive integers, and suppose we want to find the

sum of the two largest elements in A. Give an O(n)-time algorithm for this problem.

Bonus: Your algorithm should loop through the array exactly once.

1.9. Let A be an array of n distinct integers, and let t be an integer. We want to find

distinct indices i, j, k such that A[i] + A[j] + A[k] = t. Give an O(n²)-time algorithm

for this problem.

1.10. Let A be an (unsorted) array containing n + 1 integers; every integer in {1, 2, . . . , n} is

in A, and one of them appears in A twice. Give an O(n)-time algorithm that returns

the integer that appears twice. Bonus: Do not construct a separate array.

1.11. Let A be an array of n integers. We want to find indices i, j such that i ≤ j and
the sum from A[i] through A[j] is maximized. Give an O(n²)-time algorithm for this
problem. Bonus: O(n) instead of O(n²).

1.12. Let A be a sorted array of n integers. We want to return a sorted array that contains
the squares of the elements of A. Give an O(n²)-time algorithm for this problem.
Bonus: O(n) instead of O(n²).

1.13. Let A be an array of n integers. We want to find indices i, j such that i < j and
A[j] − A[i] is maximized. Give an O(n²)-time algorithm for this problem. Bonus:
O(n) instead of O(n²).

1.14. Let A be an array of n positive integers. We want to find indices i, j such that i < j and
(j − i) · min(A[i], A[j]) is maximized. Give an O(n²)-time algorithm for this problem.
Bonus: O(n) instead of O(n²).

1.15. Let A be an array of n integers. For any pair of indices (i, j), we call (i, j) an inversion

if i < j and A[i] > A[j]. Give an O(n log n)-time algorithm that returns the number

of inversions in A.

2 Basic Graph Algorithms

A graph G = (V, E) consists of a set V of vertices and a set E of edges; throughout, n = |V| and m = |E|. If the graph is directed, then

each edge is of the form (u, v); otherwise, an edge between u and v is technically {u, v}, but

people sometimes still use (u, v), maybe because it looks a bit nicer.

2.1 Breadth-First Search

Problem statement. Let G = (V, E) be a directed graph and s be a vertex of G. Our

goal is to find the shortest path from s to all other vertices in G.

Remark. We’ll cheat a bit: instead of finding the shortest path from s to all other vertices,

we’ll find the length of the shortest path (i.e., distance) from s to all other vertices. Once we

understand how to find distances, recovering the paths themselves is fairly straightforward.

This concept appears throughout these notes.

Algorithm. We’ll populate an array d, where d[u] will eventually contain the distance from

s to u, starting with d[s] = 0. Then, for each out-neighbor u of s, set d[u] = 1. Then, for

each out-neighbor v of those out-neighbors (that aren’t out-neighbors of s), set d[v] = 2. In

general, the algorithm proceeds out from s in these layers using a queue to properly order the

vertices. Since it finishes each layer before moving onto the next, it is known as breadth-first

search (BFS).

Algorithm 6 Breadth-First Search (BFS)
Input: Graph G = (V, E), s ∈ V
1: d[s] ← 0, d[u] ← ∞ for each u ∈ V \ {s}
2: Create a queue Q = (s)
3: while Q is not empty do
4:     Dequeue a vertex u from Q
5:     for each out-neighbor v of u do
6:         if d[v] = ∞ then
7:             d[v] ← d[u] + 1, add v to Q
8: return d

Theorem 2.1. At the end of BFS, for every vertex v, d[v] is the distance from the starting

vertex s to v.

Proof. Let k denote the distance from s to v; we proceed by induction on k. If k = 0,

then s = v and d[s] = 0, so we are done. Otherwise, by induction, we can assume d[u] = k − 1

for every vertex u that has distance k − 1 from s. At some point during the algorithm, these

vertices are exactly the ones in Q. At least one such vertex has v as an out-neighbor; let u

denote the first such vertex removed from Q. When u gets removed from Q, v still has not

been added, so the algorithm sets d[v] = d[u] + 1 = k − 1 + 1 = k, as desired.

Theorem 2.2. BFS runs in O(m + n) (i.e., linear) time.

Proof. The initialization of d and Q takes O(n) time. Every vertex gets added to Q at most
once, so we execute the main loop at most n times. It might seem like processing a vertex u
involves O(n) operations (since u could have roughly n neighbors), making the total running
time O(n²). This is true, but we can analyze this more carefully: processing u only requires
O(deg(u)) time, and ∑_{u∈V} deg(u) = 2m, so the overall running time is O(n + m). This is O(m)
if the graph is connected, since in that case, n ≤ m + 1.

Also, we generally assume that the graph is given in adjacency list format, so for a graph

with n vertices and m edges, the size of the input is roughly m + n. Any algorithm whose

running time is proportional to the length of the input is a linear-time algorithm.

To find the shortest path from s to all other vertices, we can maintain a “parent array”

p as we run BFS. In particular, whenever we set d[v] to be d[u] + 1, we also set p[v] = u.

This indicates that on the shortest path from s to v, u is the vertex before v. By tracing

these pointers p backwards, we can recover the shortest paths.
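Here is a minimal Python sketch of BFS together with the parent array and path recovery just described. It assumes the graph is given as a dictionary adj mapping every vertex (including those with no out-neighbors) to a list of its out-neighbors; the names bfs and shortest_path, and the choice of None as the parent of s, are illustrative assumptions.

```python
from collections import deque
import math


def bfs(adj, s):
    """Return (d, p): distances from s and the parent of each reached vertex."""
    d = {u: math.inf for u in adj}
    d[s] = 0
    p = {s: None}                      # s has no predecessor
    Q = deque([s])
    while Q:
        u = Q.popleft()
        for v in adj[u]:
            if d[v] == math.inf:       # v has not been discovered yet
                d[v] = d[u] + 1
                p[v] = u               # u precedes v on a shortest path from s
                Q.append(v)
    return d, p


def shortest_path(p, v):
    """Trace parent pointers back from v; return None if v was never reached."""
    if v not in p:
        return None
    path = []
    while v is not None:
        path.append(v)
        v = p[v]
    return path[::-1]                  # reverse so the path runs from s to v


adj = {"s": ["a", "b"], "a": ["c"], "b": ["c"], "c": ["d"], "d": [], "e": ["s"]}
d, p = bfs(adj, "s")
print(d["d"], shortest_path(p, "d"))   # prints 3 ['s', 'a', 'c', 'd']
```

Vertices that are unreachable from s (such as "e" above) keep distance ∞ and have no entry in p.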

2.2 Depth-First Search

Problem statement. Let G = (V, E) be a directed graph. Our goal is to return a directed

cycle in G or report that none exists.

Remark. Let’s start by looking at how Depth-First Search (DFS) labels every vertex u with

two positive integers: pre[u] and post[u]. These values allow us to solve multiple problems,

including the cycle detection problem stated above.

DFS uses an “explore” procedure (Algorithm 7), which recursively traverses some path

until it gets “stuck” because it has already visited all neighbors of the current vertex. It

then traverses another path until it gets stuck again. This process repeats until all vertices

have been visited (by possibly restarting the process from another vertex). The algorithm

gets its name because it goes as “deep” as possible (along some path) before turning around.

Algorithm 7 Explore
Input: Graph G = (V, E), u ∈ V
1: Mark u as visited, pre[u] ← t, t ← t + 1
2: for each out-neighbor v of u do
3:     if v has not been marked as visited then
4:         p[v] ← u, Explore(G, v)
5: post[u] ← t, t ← t + 1

DFS also maintains a global “clock” variable t, which is incremented whenever a vertex

receives its pre or post value. Every vertex u receives its pre value when the exploration of u

begins, and it receives its post value when the exploration ends. Also, when the exploration

traverses an edge (u, v) we set p[v] = u, indicating that vertex v “came from” u, so u is the

“parent” of v. If s is the first vertex explored then pre[s] = 1, and if v is the last vertex to

finish exploring then post[v] = 2n. Every vertex gets explored at most once, and every edge

participates in Line 2 at most once, so the running time of DFS is O(m + n).

Algorithm 8 Depth-First Search (DFS)
Input: A graph G = (V, E)
1: Initialize all vertices as unmarked, t ← 1
2: for each vertex u ∈ V do
3:     if u has not been marked as visited then
4:         p[u] ← u, Explore(G, u)
5: return pre, post, p

Definition 2.3. An edge (u, v) in G is a back edge if pre[v] < pre[u] < post[u] < post[v].

Theorem 2.4. A directed graph has a cycle if and only if DFS creates a back edge.

Proof. If (u, v) is a back edge, then while v was being explored, the algorithm discovered u.

Since the algorithm only follows edges in the graph, this means there is a path from v to u

in the graph. This path, combined with the edge (u, v), is a cycle.

Conversely, suppose the graph has a cycle C. Let v denote the first vertex of C explored

by DFS, and let u denote the vertex preceding v in C. Then while v is being explored, u

will be discovered, which will lead to (u, v) being a back edge.

The proof of Theorem 2.4 gives our O(m + n)-time algorithm for the cycle detection

problem. If DFS does not create any back edges, then the graph is acyclic. Otherwise, if

(u, v) is a back edge, then there must be a path from v to u traversed by DFS, which together

with (u, v) forms a cycle.
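The cycle detection procedure can be sketched in Python as follows, under some assumptions: the graph is a dictionary adj mapping every vertex to its list of out-neighbors, the function names dfs and find_cycle are illustrative, and the back edge test uses ≤ instead of the strict inequalities of Definition 2.3 so that a self-loop (u, u) is also reported as a cycle. The recursion mirrors Algorithm 7, so very deep graphs may exceed Python's default recursion limit.

```python
def dfs(adj):
    """Run DFS from every unvisited vertex; return (pre, post, p)."""
    pre, post, p = {}, {}, {}
    t = 1                                  # global clock

    def explore(u):
        nonlocal t
        pre[u] = t                         # having a pre value marks u as visited
        t += 1
        for v in adj[u]:
            if v not in pre:
                p[v] = u
                explore(v)
        post[u] = t
        t += 1

    for u in adj:
        if u not in pre:
            p[u] = u                       # roots are their own parent, as in Algorithm 8
            explore(u)
    return pre, post, p


def find_cycle(adj):
    """Return the vertices of a directed cycle in order, or None if there is none."""
    pre, post, p = dfs(adj)
    for u in adj:
        for v in adj[u]:
            # (u, v) is a back edge when u lies in the subtree explored from v.
            if pre[v] <= pre[u] and post[u] <= post[v]:
                cycle = [u]
                while cycle[-1] != v:      # follow parent pointers from u up to v
                    cycle.append(p[cycle[-1]])
                return cycle[::-1]         # v, ..., u; the edge (u, v) closes the cycle
    return None


print(find_cycle({1: [2], 2: [3], 3: [1, 4], 4: []}))   # prints [1, 2, 3]
print(find_cycle({1: [2, 3], 2: [3], 3: []}))           # prints None
```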

Classifying edges. After running DFS on a directed graph G, we can naturally partition

the edges as follows:

• An edge (u, v) is a tree edge if it was directly traversed by DFS (i.e., p[v] = u). Let T

denote the subgraph of G containing its vertices and tree edges.

• An edge (u, v) is a forward edge if it is not a tree edge and there is a path from u to v in T.

• An edge (u, v) is a back edge if there is a path from v to u in T. (This is

equivalent to the definition given above.)

• An edge (u, v) is a cross edge if there is no (directed) path from u to v or from v to u

in T (i.e., u and v do not have an ancestor/descendant relationship).
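The four edge types can be told apart from the pre, post, and p values alone, using the standard fact that u is an ancestor of v in T exactly when the interval [pre[v], post[v]] is nested inside [pre[u], post[u]]. The sketch below assumes a graph without parallel edges; the function name classify_edge is hypothetical, and the pre/post/p values in the usage example are written out by hand for a small graph explored in the order a, b, c, d.

```python
def classify_edge(u, v, pre, post, p):
    """Classify the edge (u, v) as 'tree', 'forward', 'back', or 'cross'."""
    if u == v or (pre[v] < pre[u] and post[u] < post[v]):
        return "back"                      # v is an ancestor of u (or a self-loop)
    if p[v] == u:
        return "tree"                      # DFS traversed (u, v) directly
    if pre[u] < pre[v] and post[v] < post[u]:
        return "forward"                   # v is a non-child descendant of u
    return "cross"                         # no ancestor/descendant relationship


# Values produced by DFS on edges a->b, b->c, c->a, a->c, d->c (assumed order a, b, c, d).
pre = {"a": 1, "b": 2, "c": 3, "d": 7}
post = {"c": 4, "b": 5, "a": 6, "d": 8}
p = {"a": "a", "b": "a", "c": "b", "d": "d"}

for u, v in [("a", "b"), ("b", "c"), ("c", "a"), ("a", "c"), ("d", "c")]:
    print((u, v), classify_edge(u, v, pre, post, p))
```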

2.3 Minimum Spanning Tree

Problem statement. Let G = (V, E) be an undirected graph where each edge e has

weight w(e) > 0. Our goal is to compute F ⊆ E such that (V, F) is connected and the total

weight of edges in F is minimized. Any subset of E satisfying these two properties is a

minimum spanning tree (MST) of G.

Remark. For simplicity, unless stated otherwise, we assume that all of the edge weights

in G are distinct. It follows (by an exchange argument similar to the proofs in this section)

that G has exactly one MST. Thus, we can refer to the (rather than an) MST of G.

Algorithm. Instead of giving a single algorithm, we first prove two facts about MSTs

known as the cut property and the cycle property. Using these two facts, we can prove the

correctness of three natural greedy algorithms for the MST problem.

Lemma 2.5 (Cut Property). For any nonempty proper subset S of V (S is called a cut), the lightest edge crossing S (i.e., having exactly one endpoint in S) is in the MST of G.

Proof. Let T denote the MST of G, and for contradiction, suppose there exists a cut S such

that the lightest edge e that crosses S is not in T . Notice that T ∪ {e} must contain a

cycle C that includes e. Since e crosses S, some other edge f ∈ C must cross S as well,

and since e is the lightest edge crossing S, we must have w(e) < w(f ). Thus, adding e and

removing f from T yields a spanning tree with less total weight than T , and this contradicts

our assumption that T is the MST.

Lemma 2.6 (Cycle Property). For any cycle C in G, the heaviest edge in C is not in the

MST of G.

Proof. The proof is similar to that of the cut property. Let T denote the MST of G, and for

contradiction, suppose there exists a cycle C whose heaviest edge f belongs to T . Removing

f from T disconnects T into two trees, and also defines a cut of G. Some other edge e of C

must cross this cut, and since f is the heaviest in C, we have w(e) < w(f ). Thus, adding

e and removing f from T yields a spanning tree with less total weight than T , and this

contradicts our assumption that T is the MST.

We are now ready to state three algorithms for the MST problem:

1. Kruskal’s algorithm. Sort the edges in order of increasing weight. Then iterate

through the edges in this order, adding each edge e to F (initially empty) if (V, F ∪{e})

remains acyclic.

2. Prim’s algorithm. Let s be an arbitrary vertex and initialize a set S = {s}. Repeat

the following n − 1 times: add the lightest edge e crossing S to the solution (initially

empty), and add the endpoint of e not in S to S.

3. Reverse-Delete. Sort the edges in order of decreasing weight. Then iterate through

the edges in this order, removing each edge e from F (initially all of E) if (V, F \ {e})

remains connected. (This is a “backward” version of Kruskal’s algorithm.)

Given the cut and cycle properties, the proofs of correctness for all three algorithms are

fairly short and straightforward. Below, we prove the correctness of Kruskal’s algorithm.

Theorem 2.7. At the end of Kruskal’s algorithm, F is a minimum spanning tree.


Proof. Consider any edge e = {u, v} added to F , and let S denote the set of vertices

connected to u via F immediately before e was added. Notice that u ∈ S and v ∈ V \ S.

Furthermore, no edge crossing S has been considered by the algorithm yet, because it would

have been added, which would have caused both of its endpoints to be in S. Thus, e is the

lightest edge crossing S, so by the cut property, e is in the MST.

Now we know F is a subset of the MST; we still need to show that F is spanning. For

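contradiction, suppose that there exists a cut S ⊂ V such that no edge crossing S is in F. Since G is connected, at least one edge crosses S; let e be the lightest one. When the algorithm considered e, no edge crossing S was in F, so adding e could not have created a cycle, and the algorithm would have added e. This is a contradiction, so we are done.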

For each of the three algorithms, there is a relatively straightforward implementation

that runs in O(m^2) time; we leave the details as an exercise.
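For reference, here is a minimal Python sketch of Kruskal’s algorithm. It is not the straightforward implementation mentioned above: instead of re-checking acyclicity with a graph search for every edge, it uses a union-find (disjoint-set) structure, which these notes do not introduce. The input format (vertices numbered 0 to n − 1, edges as (weight, u, v) triples) and the names `kruskal` and `find` are our own assumptions.

```python
def kruskal(n, edges):
    """Return the edges chosen by Kruskal's algorithm.

    n: number of vertices, labeled 0..n-1 (assumed format).
    edges: list of (weight, u, v) triples with distinct weights.
    """
    parent = list(range(n))  # union-find forest: parent[x] == x marks a root

    def find(x):
        # Follow parent pointers to the root, shortening the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    forest = []
    for w, u, v in sorted(edges):      # increasing weight
        ru, rv = find(u), find(v)
        if ru != rv:                   # u, v in different components: no cycle
            parent[ru] = rv            # merge the two components
            forest.append((w, u, v))
    return forest
```

If G is connected, the returned list contains n − 1 edges and forms the MST.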


2.4 Exercises

2.1. Let G be an undirected graph on n vertices, where n ≥ 2. Is it possible, depending on

the starting vertex and tiebreaking rule, that at some point during BFS, all n vertices

are simultaneously in the queue?

2.2. Recall that the running time of BFS is O(m+n), where m and n are the number of edges

and vertices, respectively, in the input graph. In a complete graph, m = n(n − 1)/2,

which makes the running time Ω(n^2). In light of this case, why do we still say that BFS always runs in linear time?

2.3. Let G be a directed path graph on n vertices. If we run BFS on G starting at the first

vertex, what is the d value of each vertex? After we run DFS on G starting at the first

vertex, what are the pre and post values of each vertex?

2.4. Let T be an MST of a graph G. Suppose we increase the weight of every edge in G by

1. Prove that T is still an MST of G, or give a counterexample.

2.5. Suppose every edge in an undirected graph G has the same weight w. Give a linear-time

algorithm that returns an MST of G.

2.6. Give an O(n)-time algorithm that finds a cycle in an undirected graph G, or reports

that none exists.

2.7. Show that Kruskal’s, Prim’s, and the Reverse-Delete algorithm can be implemented to

run in O(m^2) time.

2.8. Give the analogous versions of Definition 2.3 for tree, forward, and cross edges. Note

that the definitions might overlap.

2.9. Suppose we run DFS on an undirected graph. How does the edge classification system

(tree/forward/back/cross) change, if at all?

2.10. Give an undirected graph G with distinct edge weights such that Kruskal’s and Prim’s

algorithms, when run on G, return their solutions in different orders.

2.11. Let G = (V, E) be an undirected graph with n vertices. Show that if G satisfies any

two of the following properties, then it also satisfies the third: (1) G is connected, (2)

G is acyclic, (3) G has n − 1 edges.

2.12. Prove that an undirected graph is a tree if and only if there exists a unique path

between each pair of vertices.

2.13. Prove that a graph has exactly one minimum spanning tree if all edge weights are

unique, and show that the converse is not true.

2.14. Consider the MST problem on a graph containing n vertices.

(a) Prove the correctness of Prim’s algorithm, and show that it can be implemented

to run in O(n^2) time.


(b) Prove the correctness of the Reverse-Delete algorithm.

2.15. Let G be a directed graph and s be a starting vertex. Give a linear-time algorithm

that returns the number of shortest paths between s and all other vertices.

2.16. An undirected graph G = (V, E) is bipartite if there exists a partition of V into two nonempty groups such that no edge of E has both endpoints in the same group.

(a) Prove that G is bipartite if and only if G contains no odd cycle.

(b) Give a linear-time algorithm to determine if an input graph G is bipartite. If so,

the algorithm should return a valid partition of V (i.e., the algorithm should label

each vertex using one of two possible labels). If not, the algorithm should return

an odd cycle in G.

2.17. Let G be a directed acyclic graph (DAG). Use DFS to give a linear-time algorithm that

orders the vertices such that for every edge (u, v), u appears before v in the ordering.

(Such an ordering is known as a topological sort of G.)

2.18. Let G be a connected undirected graph. An edge e is a bridge if the graph (V, E \ {e})

is disconnected.

(a) Give an O(m^2)-time algorithm to determine if G has a bridge, and if so, the

algorithm should return one.

(b) Assume G is bridgeless. Give a linear-time algorithm that orients every edge (i.e.,

turns it into a directed edge) in a way such that the resulting (directed) graph is

strongly connected.

2.19. Let G be a graph containing a cycle. Our goal is to return the shortest cycle in G.

Give an O(m^2)-time algorithm assuming G is undirected, and give an O(mn)-time

algorithm assuming G is directed.

2.20. Let G be a directed graph. We say that vertices u and v are in the same strongly

connected component (SCC) if they are strongly connected, i.e., there exists a path

from u to v and a path from v to u. Consider the graph G_S whose vertices are the SCCs of G, and in which there exists an edge from A to B (for A ≠ B) if and only if there exists at least one edge (u, v) in G with u ∈ A and v ∈ B.

(a) Show that G_S is a DAG.

(b) Give a linear-time algorithm to construct G_S using G.

2.21. Recall that the distance between two vertices is the length of the shortest path between

them. The diameter of a graph is the maximum distance between any two vertices.

(a) Give an O(mn)-time algorithm that computes the diameter of a graph.

(b) Give a linear-time algorithm that computes the diameter of a tree.


2.22. Consider the following variant of the MST problem: our goal is to find a spanning tree

such that the weight of the heaviest edge in the tree is minimized. In other words,

instead of minimizing the sum, we are minimizing the maximum. Prove that a standard

MST is also optimal for this new problem.

2.23. Let T be a spanning tree of a graph G, which has distinct edge weights. Prove that

T is the MST of G if and only if T satisfies the following property: for every edge

e ∈ E \ T , e is the heaviest edge in the cycle in T ∪ {e}.


3 Greedy Algorithms

In this chapter, we consider optimization problems whose optimal solution can be built

iteratively as follows: in each step, there is a natural “greedy” choice, and the algorithm is

to repeatedly make that greedy choice. Note that the three algorithms for the MST problem

can all be considered greedy.

Sometimes, there are multiple interpretations for what it means to be greedy, and we will

have to figure out which choice is the correct one for the problem. And for many problems,

there is no optimal greedy solution. But in this chapter, we will only be concerned with

problems for which some greedy algorithm works. The fact that a greedy algorithm works

at all is the main focus of this chapter; we will not focus on running times.

3.1 Selecting Compatible Intervals

Problem statement. Suppose there is a set of n events. Each event i is represented by

a time interval [s(i), t(i)], where s(i) and t(i) are positive integers and s(i) < t(i). A subset

of events is compatible if no two events in the subset overlap at any point in time. Our goal

is to select a largest subset of compatible events.

Algorithm. Repeatedly select the event that finishes earliest. More formally: sort the

events by their end time so that t(1) ≤ t(2) ≤ · · · ≤ t(n). Then add the first event to the

solution and traverse the list until we reach a non-conflicting event. Add this event as well,

and repeat this process until we’ve traversed the entire list.
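The greedy rule above can be sketched in a few lines of Python. This is a minimal sketch, assuming events are given as (start, end) pairs; the name `select_intervals` is ours. Since the intervals are closed, two events that share an endpoint overlap, so the next event must start strictly after the last selected event ends.

```python
def select_intervals(events):
    """Greedily pick a largest set of pairwise compatible closed intervals.

    events: list of (start, end) pairs with start < end (assumed format).
    """
    chosen = []
    last_end = None
    for s, t in sorted(events, key=lambda e: e[1]):  # earliest end time first
        # [s, t] is compatible with everything chosen so far exactly when
        # it starts strictly after the most recently chosen event ends.
        if last_end is None or s > last_end:
            chosen.append((s, t))
            last_end = t
    return chosen
```

The sort dominates the running time, so the sketch takes O(n log n) time overall.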

Theorem 3.1. The algorithm optimally solves the problem of selecting a largest subset of

compatible intervals.

We’ll give two proofs of this theorem; each one is acceptable for our purposes.

Proof 1 (“ALG stays ahead”). Suppose our solution returns m events, which we denote by ALG = {i_1, . . . , i_m}. Let OPT = {j_1, . . . , j_{m∗}} denote an optimal solution containing m∗ events. Assume that ALG and OPT are both sorted in increasing finishing time, so i_1 is the event that finishes first among all n events. This means t(i_1) ≤ t(j_1), so if we only consider i_1 and j_1, ALG is “ahead” of OPT because it finishes at least as early.

In general, we can use induction to show that ALG “stays ahead” of OPT at every step, that is, t(i_k) ≤ t(j_k) for every k ≤ m. We’ve shown the statement is true for k = 1. Assuming it is true for k − 1, we have t(i_{k−1}) ≤ t(j_{k−1}). Since j_k starts after j_{k−1} ends, it also starts after i_{k−1} ends. This means j_k is a candidate after the algorithm picks i_{k−1}, so by the algorithm’s greedy choice when picking i_k, we must have t(i_k) ≤ t(j_k).

In particular, this means t(i_m) ≤ t(j_m). Now, for contradiction, suppose m∗ ≥ m + 1. The event j_{m+1} starts after j_m ends, so it also starts after i_m ends. This means the algorithm could have picked j_{m+1} after picking i_m, but it actually terminated. This contradicts the fact that the algorithm processes the entire list of events.

Proof 2 (An “exchange” argument). Sort the events such that t(1) ≤ t(2) ≤ · · · ≤ t(n). Let ALG be the algorithm’s solution, and let OPT be an optimal solution. Also, let ALG(i) and OPT(i) denote the i-th event in ALG and OPT, respectively. Finally, let m = |ALG| and m∗ = |OPT| denote the number of events in ALG and OPT, and let [n] = {1, . . . , n} for any positive integer n. We know m ≤ m∗; our goal is to show that m∗ > m is not possible.

Suppose ALG(i) = OPT(i) for all i ∈ [m]. Then m∗ ≤ m because otherwise, the algorithm could have added OPT(m + 1) instead of stopping after ALG(m), but this contradicts the definition of ALG(m) as the last event in ALG.

On the other hand, suppose ALG ≠ OPT, and let i ∈ [m] denote the smallest index such that ALG(i) ≠ OPT(i). We will construct another optimal solution OPT′ such that |OPT′| = |OPT| and ALG(j) = OPT′(j) for all j ∈ [i]. Notice that this suffices because, by repeating the “exchange” procedure described below, we can keep modifying the optimal solution under consideration until it agrees with ALG for all i ∈ [m]; as shown above, this implies m∗ ≤ m.

To construct OPT′ from OPT, we start with OPT and replace OPT(i) with ALG(i). This doesn’t affect the number of events, so |OPT′| = |OPT| = m∗, and now we have ALG(j) = OPT′(j) for all j ∈ [i]. All that remains is to show that OPT′ is feasible; in particular, we need to show that OPT′(i) is compatible with all other events in OPT′. Since ALG is feasible, ALG(i) = OPT′(i) is compatible with ALG(j) = OPT(j) = OPT′(j) for all j < i. Furthermore, OPT(i) is compatible with OPT(j) = ALG(j) for all j < i, so it was a candidate when the algorithm picked ALG(i); since ALG always picks the candidate that ends earliest, ALG(i) = OPT′(i) ends no later than OPT(i) does. Thus, ALG(i) = OPT′(i) is also compatible with OPT(j) for all j > i, so we are done.

3.2 Fractional Knapsack

Problem statement. Suppose there is a set of n items labeled 1 through n, where the i-th item has weight w_i > 0 and value v_i > 0. We have a knapsack with capacity B. Our goal is to determine x_i ∈ [0, 1] for each item i to maximize the total value Σ_{i=1}^{n} x_i v_i, subject to the constraint that the total weight Σ_{i=1}^{n} x_i w_i is at most B.

Remark. In the standard (i.e., not fractional) Knapsack problem, we cannot take a fraction

of an item, i.e., we must set x_i ∈ {0, 1} for every item i. In this setting, there is no natural

greedy algorithm that always returns an optimal solution. However, it can be solved using

a technique known as dynamic programming, as we’ll see in the next chapter.

Algorithm. Relabel the items in decreasing order of “value per weight,” so that

v_1/w_1 ≥ v_2/w_2 ≥ · · · ≥ v_n/w_n.

In this order, take as much of each item as possible (i.e., set x_i = 1 as long as item i fits entirely) until the total weight of selected items reaches the capacity B of the knapsack. The last item taken may be included only fractionally, and every later item gets x_i = 0.
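As an illustration, the greedy rule might be implemented as follows. This is a minimal sketch under our own assumptions: items are 0-indexed, `weights` and `values` are parallel lists of positive numbers, and the function name `fractional_knapsack` is ours. Floating-point arithmetic is used for the fractions, so results are approximate in general.

```python
def fractional_knapsack(weights, values, capacity):
    """Return (x, total_value), where x[i] in [0, 1] is the fraction of item i taken."""
    n = len(weights)
    # Consider items in decreasing order of value per unit weight.
    order = sorted(range(n), key=lambda i: values[i] / weights[i], reverse=True)
    x = [0.0] * n
    remaining = capacity
    total = 0.0
    for i in order:
        if remaining <= 0:
            break
        take = min(1.0, remaining / weights[i])  # whole item, or whatever still fits
        x[i] = take
        total += take * values[i]
        remaining -= take * weights[i]
    return x, total
```

For instance, with weights 10, 20, 30, values 60, 100, 120, and capacity 50, the sketch takes the first two items entirely and two thirds of the third.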

Theorem 3.2. The greedy algorithm above returns an optimal solution to the fractional

knapsack problem.

Proof. Let ALG = (x_1, . . . , x_n) denote the solution returned by the greedy algorithm, and let OPT = (x∗_1, . . . , x∗_n) denote an optimal solution. For contradiction, assume OPT > ALG; let i denote the smallest index such that x∗_i ≠ x_i. By design of the algorithm, we must have x∗_i < x_i ≤ 1. Furthermore, since OPT > ALG, there must exist some j > i such that x∗_j > 0. We now modify OPT as follows: increase x∗_i by ε > 0 and decrease x∗_j by ε · w_i/w_j. Note that ε can be chosen small enough such that x∗_i and x∗_j remain in [0, 1] after the modification. Let OPT′ denote this modified solution; notice that OPT and OPT′ have the same total weight, so OPT′ is feasible. Now consider the value of OPT′:

OPT′ = OPT + ε · v_i − ε · (w_i/w_j) · v_j = OPT + ε · (v_i − (w_i/w_j) · v_j) ≥ OPT,

where the inequality follows from ε > 0 and v_i/w_i ≥ v_j/w_j. Thus, OPT′ is also an optimal solution but it takes more of item i than OPT does. By repeating this process, we can arrive at an optimal solution identical to ALG, which contradicts the assumption that OPT > ALG.

3.3 Maximum Spacing Clusterings

Problem statement. Let X denote a set of n points. For any two points u, v, we are given

their distance d(u, v) ≥ 0. (Distances are symmetric, and the distance between any point

and itself is 0.) The spacing of a clustering (i.e., partition) of X is the minimum distance

between any pair of points lying in different clusters. We are also given k ≥ 1, and our goal

is to find a clustering of X into k groups with maximum spacing.

Algorithm. Our algorithm is essentially Kruskal’s algorithm for Minimum Spanning Tree

(Sec. 2.3). In the beginning, each point belongs to its own cluster. We repeatedly add an

edge between the closest pair of points in different clusters until we have k clusters. (This is

equivalent to computing the MST and removing the k − 1 longest edges.)
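A minimal Python sketch of this clustering procedure is given below. As in the earlier Kruskal sketch, it tracks clusters with a union-find structure, which is our own implementation choice and not something the notes prescribe. The names `max_spacing_clusters` and `dist` are assumptions, and we assume 1 ≤ k ≤ n.

```python
from itertools import combinations


def max_spacing_clusters(points, dist, k):
    """Return (clusters, spacing) for a k-clustering found by the greedy rule.

    points: list of hashable points; dist: symmetric distance function.
    spacing is None when k == 1 (no pair lies in different clusters).
    """
    parent = {p: p for p in points}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    pairs = sorted(combinations(points, 2), key=lambda e: dist(*e))
    clusters = len(points)
    spacing = None
    for u, v in pairs:
        ru, rv = find(u), find(v)
        if ru == rv:
            continue                 # already in the same cluster
        if clusters == k:
            spacing = dist(u, v)     # the edge that would have been added next
            break
        parent[ru] = rv              # merge the two closest clusters
        clusters -= 1

    groups = {}
    for p in points:
        groups.setdefault(find(p), []).append(p)
    return list(groups.values()), spacing
```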

Theorem 3.3. The algorithm above returns a clustering with maximum spacing.

Proof. Let α denote the spacing of the clustering ALG produced by the algorithm, so α is

the length of the edge that the algorithm would have added next, but did not. Now consider

the optimal clustering OPT. It suffices to show that the spacing of OPT is at most α. If

ALG ≠ OPT, then there must exist two points u, v ∈ X such that in ALG, u and v are in the

same cluster, but in OPT, u and v are in different clusters.

Now consider the path P between u and v along the edges in ALG. Since the algorithm

adds edges in non-decreasing order of length, and the edge corresponding to α was not

added, all of the edges in P have length at most α. Furthermore, since u and v lie in

different clusters of OPT, there must be some edge on P whose endpoints lie in different

clusters of OPT. Thus, the distance between these two clusters is at most α.

3.4 Stable Matching

Problem statement. Let G = (X ∪ Y, E) be a bipartite graph where |X| = |Y | = n.

The vertices in X represent students, and the vertices in Y represent companies. Each

student has a preference list (i.e., an ordering) of the n companies, and each company has a

preference list of the n students.


Our goal is to construct a matching function M : Y → X such that each company is

matched with exactly one student, and there are no instabilities. An instability is defined as

a (student, company) pair (x, y) such that y prefers x over M(y), and x prefers y over the company y′ such that M(y′) = x.¹

Remark. It is not obvious that a stable matching even exists for every possible set of

preference lists. In this section, we not only prove this holds, but we do this by presenting an

algorithm (known as the Gale-Shapley algorithm) that efficiently returns a stable matching.

Algorithm. If we focus our attention on a particular student x, then the greedy choice to

make would be to match that student to their favorite company. So in fact, our algorithm

does this in the first round: it assigns every student to their favorite company. If every

company receives exactly one applicant, then it’s not difficult to show that we’d be done.

But if a company receives more than one applicant, then it tentatively gets matched with its favorite among them, and the other applicants return to the pool.

In each subsequent round, an unmatched student applies to their favorite company that

hasn’t rejected them yet, that company gets tentatively matched with their favorite, and the

rejected student (if one exists) returns to the pool. Note that a company never rejects an

applicant unless a better one (from the company’s perspective) applies.

Algorithm 9 The Gale-Shapley algorithm
Input: A bipartite graph G = (X ∪ Y, E) where |X| = |Y|, as well as a preference list for every vertex (i.e., an ordering of the vertices on the opposite side).
S ← X, M(y) ← ∅ for all y ∈ Y
while S is not empty do
    Remove some student x from S
    Let y denote x’s favorite company that hasn’t rejected them yet
    if M(y) = ∅ or y prefers x to M(y) then
        Add M(y) to S (if M(y) ≠ ∅) and set M(y) ← x    ▷ x and y are tentatively matched
    else
        Return x to S    ▷ x is rejected by y
return the matching function M
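For concreteness, Algorithm 9 might be realized in Python roughly as follows. This is a sketch under our own assumptions: preference lists are dictionaries of lists (most preferred first), and the names `gale_shapley`, `rank`, and `next_choice` are ours. The `rank` table lets a company compare two students in O(1) time, and `next_choice[x]` records how far down their list student x has already applied.

```python
from collections import deque


def gale_shapley(student_prefs, company_prefs):
    """Return a dict mapping each company to its matched student.

    student_prefs[x]: list of all companies, most preferred first.
    company_prefs[y]: list of all students, most preferred first.
    """
    # rank[y][x] = position of student x on y's list (smaller is better).
    rank = {y: {x: r for r, x in enumerate(prefs)}
            for y, prefs in company_prefs.items()}
    next_choice = {x: 0 for x in student_prefs}  # next company x will apply to
    match = {y: None for y in company_prefs}
    pool = deque(student_prefs)                  # unmatched students

    while pool:
        x = pool.popleft()
        y = student_prefs[x][next_choice[x]]     # favorite not yet applied to
        next_choice[x] += 1
        current = match[y]
        if current is None:
            match[y] = x                         # y was free: tentative match
        elif rank[y][x] < rank[y][current]:
            match[y] = x                         # y prefers x; current is rejected
            pool.append(current)
        else:
            pool.append(x)                       # x is rejected by y
    return match
```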

Theorem 3.4. Algorithm 9 returns a stable matching.

Proof. We first show that the algorithm terminates and the output is a matching. Notice

that no student applies to the same company more than once, so the algorithm terminates

in at most n^2 iterations. Now suppose the algorithm ended with an unmatched student x.

Whenever a company y receives an applicant, y is (tentatively) matched for the rest of the

algorithm. Since x must have applied to every company, in the end, every company must

be matched. But |X| = |Y |, so it cannot be the case that every company is matched while

x remains unmatched.

¹ If we view X as men and Y as women, then an instability consists of a man and a woman who both wish they could cheat on their respective partners.

Now we show that M is stable. For contradiction, suppose (x, y) is an instability, where x is matched to some y′ but prefers y, and y is matched to M(y) but prefers x. Since x prefers y to y′, x must have applied to y at some point. But, since y ends up matched with M(y), y

must have rejected x at some point in favor of someone y prefers more. The tentative match

for y only improves over time (according to y’s preference list), so y must prefer M (y) to x.

This contradicts our assumption that y prefers x to M (y), so we are done.


3.5 Exercises

3.1. Suppose we are given the following input for Selecting Compatible Intervals: [1, 10],

[2, 4], [3, 6], [5, 7], [8, 11], [9, 10]. What does the optimal greedy algorithm return?

3.2. Consider the Knapsack problem, where for each item, we must either pick it entirely

or not at all (i.e., x_i ∈ {0, 1} for all i). Show that the greedy algorithm (picking in non-increasing order of v_i/w_i) is no longer optimal.

3.3. Write the pseudocode for the greedy algorithm for Selecting Compatible Intervals. The

algorithm should run in O(n log n) time.

3.4. Consider the Selecting Compatible Intervals problem. Instead of sorting by end time,

suppose we sort by non-decreasing start time, duration (i.e., length of the interval),

or number of conflicts with other events. For each of these three algorithms, give an

instance where the algorithm does not return an optimal solution.

3.5. For the Fractional Knapsack problem, prove that the assumption that all v_i/w_i values

are unique can be made without loss of generality.

3.6. Let {1, . . . , n} denote a set of n customers, where each customer i has a distinct processing time t_i. In any ordering of the customers, the waiting time of a customer i is

the sum of processing times of customers that appear before i in the order. Give an

O(n log n)-time algorithm that returns an ordering such that the sum (or average) of

waiting times is minimized.

3.7. Suppose we are given n intervals (events). Our goal is to partition the events into

groups such that all events in each group are compatible, and the number of groups is

minimized. Give an O(n^2)-time algorithm for this problem.

3.8. Suppose we are given n intervals. We say a point x stabs an interval [s, t] if s ≤ x ≤ t.

Give an O(n log n)-time algorithm that returns a minimum set of points that stabs all

intervals.

3.9. Suppose there are an unlimited number of coins in each of the following denominations:

50, 20, 1. The problem input is an integer n, and our goal is to select the smallest

number of coins such that their total value is n. The greedy algorithm is to repeatedly

select the denomination that is as large as possible.

(a) Give an instance where the greedy algorithm does not return an optimal solution.

(b) Now suppose the denominations are 10, 5, 1. Show that the greedy algorithm

always returns an optimal solution.

3.10. Consider the Stable Matching problem. For any student x and company y, we say that

(x, y) is valid if there exists a stable matching that matches x and y. For each student

x, let f(x) denote the highest company on x’s preference list such that (x, f(x)) is valid. Similarly, for any company y, let g(y) denote the lowest student on y’s preference list such that (g(y), y) is valid.


(a) Prove that the Gale-Shapley algorithm always matches x with f (x).

(b) Prove that the Gale-Shapley algorithm always matches y with g(y).

3.11. In an undirected graph, a subset of vertices is an independent set if no edge of the

graph has both endpoints in the subset. Let T = (V, E) be a tree. Give an O(n)-time

algorithm that returns a maximum independent set (i.e., one with the most vertices)

of T .

3.12. A subset of edges in a graph is a matching if no two edges in the subset share an

endpoint. Let T = (V, E) be a tree. Give an O(n)-time algorithm that returns a

matching of maximum size in T .


4 Dynamic Programming

In this chapter, we study an algorithmic technique known as dynamic programming (DP)

that often works when greedy doesn’t. The idea is to solve a sequence of increasingly

larger subproblems by using solutions to smaller, overlapping subproblems. Technically,

the algorithms in this chapter only compute the value of an optimal solution (rather than

the optimal solution itself) but as we’ll see, recovering the optimal solution itself is usually

a straightforward extension.¹

Furthermore, instead of the usual “theorem, proof” analysis, we’ll start by analyzing a

recurrence that drives the entire algorithm. If we can convince ourselves that the recurrence

is correct, then it’s usually relatively straightforward to see that the algorithm is correct as

well. Analyzing the running time of a DP algorithm is usually not super difficult either.

4.1 Sum of Array

Problem statement. Let A be an array of n integers. Our goal is to find the sum of

elements in A.

Analysis. For any i ∈ {1, . . . , n}, consider the subproblem of finding the sum of elements

in A[1 : i]. We’ll store the solution to this subproblem in an array OPT[i]; notice that

OPT[1] = A[1] and for i ≥ 2, OPT[i] satisfies the following recurrence:

OPT[i] = OPT[i − 1] + A[i].

Our algorithm will use this recurrence to compute OPT[1] through OPT[n] and return OPT[n]

at the end.

Algorithm. Populate an array d[1, . . . , n], according to the recurrence for OPT above.

That is, initialize d[1] = A[1] and for i ≥ 2, set d[i] = d[i − 1] + A[i]. At the end, return

d[n]. Computing each d[i] takes O(1) time, and since d has length n, the total running time

is O(n).

Algorithm 10 Sum of Array
Input: Array A of n integers.
d ← A
for i ← 2, . . . , n do
    d[i] ← d[i − 1] + A[i]
return d[n]
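In Python, Algorithm 10 might look like the short sketch below. Note that Python lists are 0-indexed, so d[i] here corresponds to d[i + 1] in the notes; the name `array_sum` is ours, and we assume A is nonempty.

```python
def array_sum(A):
    """Return the sum of a nonempty list A via the recurrence d[i] = d[i-1] + A[i]."""
    d = list(A)                   # d[0] = A[0] is the base case
    for i in range(1, len(A)):
        d[i] = d[i - 1] + A[i]    # sum of A[0..i]
    return d[-1]
```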

4.2 Longest Increasing Subsequence

Problem statement. Let A be an array of n integers. Our goal is to find the longest

increasing subsequence (LIS) in A. For example, the LIS of [4, 1, 5, 2, 3, 0] is [1, 2, 3], and the

LIS of [3, 2, 1] is [3] (or [2], or [1]).

¹ It’s the same process as using BFS to find shortest paths rather than just distances.

Analysis. For any index i, let OPT[i] denote the length of the longest increasing subsequence of A that must end with A[i], so OPT[1] = 1. Intuitively, to calculate OPT[i] for

i ≥ 2, we should find the “best” entry in A to “come from” before jumping to A[i]. We’re

allowed to jump from A[j] to A[i] if j < i and A[j] < A[i], and we want the subsequence

ending with A[j] to be as long as possible. So more formally, for any i ≥ 2, OPT[i] satisfies

the following recurrence:

OPT[i] = 1 + max { OPT[j] : 1 ≤ j < i, A[j] < A[i] }.    (1)

(If the max is taken over the empty set, that means there are no numbers in A[1 : i − 1]

smaller than A[i], so OPT[i] = 1.) We don’t know where the optimal solution OPT ends,

but it must end somewhere, and the recurrence allows us to calculate the best way to end

at every position. So OPT = max_i OPT[i].

Algorithm. We maintain an array d[1, . . . , n], and compute d[i] according to Eq. (1).

When computing d[i] we scan A[1 : i − 1], so the overall running time is O(n^2). Once we have

d[i] = OPT[i] for all i, we return max(d).

Algorithm 11 Longest Increasing Subsequence
Input: Array A of n integers.
d[1, . . . , n] ← [1, . . . , 1]
for i ← 2, . . . , n do
    for j ← 1, . . . , i − 1 do
        if A[j] < A[i] and d[i] < 1 + d[j] then
            d[i] ← 1 + d[j]    ▷ append A[i] to the LIS ending at j
return max(d)

To obtain the actual longest subsequence, and not just its length, we can maintain an

array p that tracks pointers. In particular, if d[i] is set to 1 + d[j], then we set p[i] = j,

indicating that the LIS ending at i includes j immediately before i. By following these

pointers, we can recover the subsequence itself in O(n) time.
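The pointer-tracking idea can be sketched in Python as follows. Indices are 0-based here, and the name `longest_increasing_subsequence` is ours; when several subsequences have maximum length, the sketch returns whichever one the pointers happen to encode.

```python
def longest_increasing_subsequence(A):
    """Return one longest strictly increasing subsequence of the list A."""
    n = len(A)
    if n == 0:
        return []
    d = [1] * n        # d[i]: length of the LIS that ends with A[i]
    p = [None] * n     # p[i]: index immediately before i in that LIS
    for i in range(1, n):
        for j in range(i):
            if A[j] < A[i] and d[i] < 1 + d[j]:
                d[i] = 1 + d[j]   # append A[i] to the LIS ending at j
                p[i] = j
    # Start from a position where the LIS ends and follow pointers backward.
    i = max(range(n), key=lambda t: d[t])
    out = []
    while i is not None:
        out.append(A[i])
        i = p[i]
    out.reverse()
    return out
```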

4.3 Knapsack

Problem statement. Suppose there are n items {1, 2, . . . , n}, where each item i has

integer weight w_i > 0 and value v_i > 0. We have a knapsack of capacity B. Our goal is to select a subset of items with maximum total value, subject to the constraint that the total weight of selected items is at most B.²

² Recall that we solved the fractional version of this problem in Sec. 3.2.

Analysis. For any i ∈ {1, . . . , n} and j ∈ {0, 1, . . . , B}, let OPT[i][j] denote the optimal value for the subproblem in which we can only select from items {1, . . . , i} and the knapsack only has capacity j. Notice that the subset of items S_ij corresponding to OPT[i][j] either contains item i, or it doesn’t (depending on which decision leads to a more profitable outcome). If w_i > j, then S_ij must ignore i, so OPT[i][j] = OPT[i − 1][j]. Otherwise, OPT[i][j] is either v_i + OPT[i − 1][j − w_i] (meaning S_ij includes i) or OPT[i − 1][j] (meaning S_ij ignores i). This yields the following recurrence, where we take OPT[0][j] = 0 for all j:

OPT[i][j] = OPT[i − 1][j]    if w_i > j,
OPT[i][j] = max(v_i + OPT[i − 1][j − w_i], OPT[i − 1][j])    otherwise.    (2)

The value of the optimal solution is OPT[n][B].

Algorithm. The algorithm fills out a 2-dimensional array d[1, . . . , n][0, . . . , B] according

to Eq. (2). Notice that the values in each row depend on those in the previous row. We

return d[n][B] as the value of an optimal solution. The array has n(B + 1) entries, and

computing each one takes O(1) time, so the total running time is O(nB).

Algorithm 12 Knapsack
Input: n items with weights w and values v, capacity B
d[1, . . . , n][0, . . . , B] ← [0, . . . , 0][0, . . . , 0]
for j ← 1, . . . , B do    ▷ populate the first row
    if w_1 ≤ j then d[1][j] ← v_1
for i ← 2, . . . , n do
    for j ← 1, . . . , B do
        if w_i > j then
            d[i][j] ← d[i − 1][j]    ▷ we must ignore i
        else
            d[i][j] ← max(v_i + d[i − 1][j − w_i], d[i − 1][j])    ▷ we can include i
return d[n][B]

By tracing back through the array d starting at d[n][B], we can construct the optimal subset of items. At any point, if d[i][j] = d[i − 1][j], then we can exclude item i from the solution and continue from d[i − 1][j]. Otherwise, we must have d[i][j] = v_i + d[i − 1][j − w_i], and this means we include item i in the solution and continue from d[i − 1][j − w_i].
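The table and the traceback can be sketched together in Python. This sketch deviates slightly from Algorithm 12: it adds a row 0 of zeros (representing "no items") so that the first item needs no special case. The function name `knapsack` and the choice to return 1-indexed item labels are our own assumptions.

```python
def knapsack(weights, values, B):
    """Return (best_value, chosen), where chosen lists 1-indexed item labels.

    weights: positive integers; values: positive numbers; B: integer capacity.
    """
    n = len(weights)
    # d[i][j]: best value using items 1..i with capacity j (row 0: no items).
    d = [[0] * (B + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        w, v = weights[i - 1], values[i - 1]
        for j in range(B + 1):
            d[i][j] = d[i - 1][j]                        # ignore item i
            if w <= j:
                d[i][j] = max(d[i][j], v + d[i - 1][j - w])  # or include it

    # Trace back from d[n][B] to recover one optimal subset.
    chosen, j = [], B
    for i in range(n, 0, -1):
        if d[i][j] != d[i - 1][j]:    # item i must have been included
            chosen.append(i)
            j -= weights[i - 1]
    chosen.reverse()
    return d[n][B], chosen
```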

Remark. Despite its polynomial-like running time of O(nB), Algorithm 12 does not run

in polynomial time. This is because the input length of the number B is not B, but rather

log B. For example, specifying the number 512 only requires 10 bits, not 512.

4.4 Edit Distance

Problem statement. Let A[1, . . . , m] and B[1, . . . , n] be two strings. There are three

valid operations we can perform on A: insert a character, delete a character, and replace one

character with another. (A replacement is equivalent to an insertion followed by a deletion,

but it only costs a single operation.) Our goal is to convert A into B using as few operations as possible; this minimum number is known as the edit distance.


Analysis. Let OPT[i][j] denote the edit distance between A[1, . . . , i] and B[1, . . . , j]. Notice that OPT[i][j] corresponds to a procedure that edits A[1, . . . , i] such that its last character is B[j], so it must make one of the following three choices:

1. Insert B[j] after A[1, . . . , i] for a cost of 1; then turn A[1, . . . , i] into B[1, . . . , j − 1].

2. Delete A[i] for a cost of 1; then turn A[1, . . . , i − 1] into B[1, . . . , j].

3. Replace A[i] with B[j] for a cost of 0 (if A[i] = B[j]) or 1 (otherwise); then turn

A[1, . . . , i − 1] into B[1, . . . , j − 1].

Thus, OPT[i][j] satisfies the following recurrence:

OPT[i][j] = min { 1 + OPT[i][j − 1],  1 + OPT[i − 1][j],  δ(i, j) + OPT[i − 1][j − 1] }    (3)

where δ(i, j) = 0 if A[i] = B[j] and 1 otherwise. For the base cases, we have OPT[i][0] = i (i.e., delete all i characters of A[1, . . . , i] to obtain the empty string) and OPT[0][j] = j (i.e., insert all j characters of B[1, . . . , j] into the empty string). The overall edit distance is OPT[m][n].
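Recurrence (3) and its base cases translate almost directly into a memoized recursion. The sketch below is one way to write it, not the algorithm of these notes: the name edit_distance_recursive is hypothetical, and because Python strings are 0-indexed, A[i] in the text is A[i - 1] in the code. The recursion depth can reach m + n, so this version is only suitable for short strings.

```python
from functools import lru_cache


def edit_distance_recursive(A, B):
    """Edit distance between strings A and B via recurrence (3), memoized."""

    @lru_cache(maxsize=None)
    def opt(i, j):
        if j == 0:
            return i                      # delete all i characters
        if i == 0:
            return j                      # insert all j characters
        delta = 0 if A[i - 1] == B[j - 1] else 1
        return min(
            1 + opt(i, j - 1),            # choice 1: insert B[j]
            1 + opt(i - 1, j),            # choice 2: delete A[i]
            delta + opt(i - 1, j - 1),    # choice 3: replace (or keep) A[i]
        )

    return opt(len(A), len(B))
```

Memoization ensures each pair (i, j) is evaluated once, which matches the O(mn) subproblems of the recurrence.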

Algorithm. The algorithm fills out an array d[0, . . . , m][0, . . . , n] according to (3) and

returns d[m][n]. Each of the O(mn) entries takes O(1) time to compute, so the total running

time is O(mn). To recover the optimal sequence of operations, we can use the same technique

of tracing pointers through the table, as we’ve already described multiple times.
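As a concrete illustration, here is a minimal bottom-up sketch that fills the table and then traces back from d[m][n] to list the operations. The function names and the tuple format for operations are hypothetical choices made for this example; when several choices attain the minimum, the traceback prefers the diagonal move, which is one valid tie-breaking rule among several.

```python
def edit_distance_table(A, B):
    """Fill d[0..m][0..n] according to recurrence (3) and return it."""
    m, n = len(A), len(B)
    d = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(m + 1):
        d[i][0] = i
    for j in range(n + 1):
        d[0][j] = j
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            delta = 0 if A[i - 1] == B[j - 1] else 1
            d[i][j] = min(1 + d[i][j - 1],
                          1 + d[i - 1][j],
                          delta + d[i - 1][j - 1])
    return d


def recover_operations(A, B, d):
    """Trace back through d and return one optimal list of operations."""
    ops = []
    i, j = len(A), len(B)
    while i > 0 or j > 0:
        if i > 0 and j > 0:
            delta = 0 if A[i - 1] == B[j - 1] else 1
            if d[i][j] == delta + d[i - 1][j - 1]:
                if delta == 1:
                    ops.append(("replace", A[i - 1], B[j - 1]))
                i, j = i - 1, j - 1       # matching characters cost nothing
                continue
        if i > 0 and d[i][j] == 1 + d[i - 1][j]:
            ops.append(("delete", A[i - 1]))
            i -= 1
        else:
            ops.append(("insert", B[j - 1]))
            j -= 1
    ops.reverse()                         # report operations left to right
    return ops
```

The number of operations returned always equals d[m][n], since every step of the traceback that is recorded costs exactly 1.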

4.5 Independent Set in Trees

Problem statement. Let T = (V, E) be a tree on n vertices, where each vertex u has

weight w(u) > 0. A subset of vertices S is an independent set if no two vertices in S share

an edge. Our goal is to find an independent set S that maximizes ∑_{u∈S} w(u); such a set is

known as a maximum independent set (MIS).

Analysis. Let’s first establish some notation. We can turn T into a rooted tree by arbitrarily selecting some vertex r as the root. For any vertex u, let T(u) denote the subtree rooted at u (so T = T(r)), and let C(u) denote the set of children of u. Also let OPT_in[u] and OPT_out[u] denote the maximum weight of an independent set of T(u) that includes u and excludes u, respectively.

If u is a leaf, then T(u) contains u and no edges, so OPT_in[u] = w(u) and OPT_out[u] = 0.

Now suppose u is not a leaf. The MIS corresponding to OPT_in[u] includes u, so it cannot

include any v ∈ C(u), but it can include descendants of these v. This means

\[
\mathrm{OPT}_{\mathrm{in}}[u] = w(u) + \sum_{v \in C(u)} \mathrm{OPT}_{\mathrm{out}}[v].
\tag{4}
\]

On the other hand, the MIS corresponding to OPT_out[u] excludes u, so it is free

to either include or exclude each v ∈ C(u). This implies

\[
\mathrm{OPT}_{\mathrm{out}}[u] = \sum_{v \in C(u)} \max\bigl(\mathrm{OPT}_{\mathrm{in}}[v], \mathrm{OPT}_{\mathrm{out}}[v]\bigr).
\tag{5}
\]


In the end, the maximum independent set of T = T(r) either contains r or it doesn’t, so we

return max(OPT_in[r], OPT_out[r]).
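Equations (4) and (5) can be evaluated with a single pass over the tree, as in the following minimal sketch. Several details are assumptions made for illustration: the function name is hypothetical, vertices are numbered 0, . . . , n − 1, the tree is given as an edge list with n ≥ 1, and vertices are processed in reverse DFS discovery order, which guarantees that every child is handled before its parent.

```python
def max_weight_independent_set(n, edges, w, r=0):
    """Return the maximum total weight of an independent set in a tree.

    Vertices are 0, ..., n-1 (an assumption), edges is a list of pairs
    (u, v), and w[u] > 0 is the weight of vertex u. The tree is rooted at r.
    """
    adj = [[] for _ in range(n)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)

    # Iterative DFS from r: record each vertex's parent and a discovery
    # order in which every vertex appears after its parent.
    parent = [-1] * n
    order = []
    stack = [r]
    while stack:
        u = stack.pop()
        order.append(u)
        for v in adj[u]:
            if v != parent[u]:
                parent[v] = u
                stack.append(v)

    opt_in = [0] * n
    opt_out = [0] * n
    # Reversed discovery order visits every child before its parent, so the
    # sums over C(u) are complete by the time u itself is processed.
    for u in reversed(order):
        opt_in[u] += w[u]                                # Eq. (4): add w(u)
        p = parent[u]
        if p != -1:
            opt_in[p] += opt_out[u]                      # Eq. (4): sum term
            opt_out[p] += max(opt_in[u], opt_out[u])     # Eq. (5)
    return max(opt_in[r], opt_out[r])
```

For example, on the path 0 – 1 – 2 with weights [1, 5, 1], the function returns 5, which corresponds to selecting only the middle vertex. The choice of root does not affect the result.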

Algorithm. The algorithm maintains two arrays d_in[1, . . . , n] and d_out[1, . . . , n], calculated

according to Eq. (4) and Eq. (5), respectively. When calculating d_in[u] and d_out[u], we need

to ensure that we have already calculated d_in[v] and d_out[v] for every v ∈ C(u). One way to

do this is to run a DFS (see Sec. 2.2) starting at the root r, and process V in increasing post