Prof. Dr. Oliver Wendt

Dr. habil. Mahdi Moeini, M.Sc. Manuel Hermes

Business Information Systems & Operations Research

Basics of Data Science

Summer Semester 2022

Technische Universität Kaiserslautern

www.bisor.de

Organizational Stuff


General

• Part 0: Data -> Information -> Knowledge: Concepts in Science and Engineering

• Part 1: Organizing the “Data Lake” (from data mining to data fishing)

• Part 2: Stochastic Models on structured attribute data

• Part 3: Getting Ready for the Digital Twin

• Lectures and exercises (no tutorials).

• OLAT PW:


https://www.billyloizou.com/blog/bridging-the-gap-between-data-creativity

Summary:

In this section, we will see:

• What is Data Science?

• What is/are Data/Data Set?

• Sources of Data

• Ecosystem of Data Science

• Legal, Ethical, and Social Issues

• Tasks of Data Science


What is Data Science?

• Explosion of data:

o Social networks,

o Internet of Things, ….

• Heterogeneity:

o big and unstructured data that might be noisy, ….

• Technologies:

o huge storage capacity, clouds,

o computing power,

o algorithms,

o statistics and computation techniques


What is Data Science?

trends.google.com (accessed March 29, 2022)


What is Data Science?

Data Science vs. Machine Learning vs. Big Data

• Common point: focus on improving decision making through

analyzing data

• Difference:

o Machine Learning: focus on algorithms

o Big Data: focus on structured data

o Data Science: focus on unstructured data


What is Data Science?

Data Science Venn Diagram

http://drewconway.com/zia/2013/3/26/the-data-science-venn-diagram

Jake VanderPlas. Python Data Science Handbook. O'Reilly Media, (2016)


What is Data Science?

A First Try to Define Data Science

“A data scientist is someone who is better at statistics than any

software engineer and better at software engineering than any

statistician.”

Josh Wills, Director of Data Science at Cloudera

“A data scientist is someone who is worse at statistics than any

statistician and worse at software engineering than any software

engineer.”

Will Cukierski, Data Scientist at Kaggle

“The field of people who decide to print ‘Data Scientist’ on their

business cards and get a salary bump.”

https://www.prooffreader.com/2016/09/battle-of-data-science-venn-diagrams.html


What is Data Science?

According to David Donoho, data science activities are classified as

follows:

• Data Gathering, Preparation, and Exploration

• Data Representation and Transformation

• Computing with Data

• Data Visualization and Presentation

• Data Modeling

• Science about Data Science

David Donoho. 50 Years of Data Science, Journal of Computational and Graphical Statistics, 26:4, 745-766, (2017).


What is Data Science?

Definition:

Data Science concerns the recognition, formalization, and

exploitation of data phenomenology emerging from digital

transformation of business, society and science itself.

Definition:

Data Science holds a set of principles, problem definitions,

algorithms, and processes with the objective of extracting

nontrivial and useful patterns from large data sets.

David Donoho. Data Science: The End of Theory?

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


What is Data Science?

Some Examples: Data Science in History

Mid-19th century:

● Devastating outbreaks of cholera plagued London.

● Dr. John Snow prepared a dot map for the Soho district in 1854.

● The clustering pattern on the map indicated that the Broad Street pump was the likely source of contamination.

● Using this evidence, the authorities disabled the pump, and the incidence of cholera in the neighborhood subsequently declined.


What is Data Science?

Some Examples: Data Science Today

• Precision medicine

• Data Science in Sales and Marketing

• Governments Using Data Science

• Data Science in Professional Sports

• Predicting elections

N. Silver. The Signal and the Noise. Penguin (2012).

E. A. Ashley. Towards Precision Medicine. Nature Reviews Genetics (2017).

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


What is Data Science?

The Hype Cycle

https://en.wikipedia.org/wiki/Hype_cycle

Jeremykemp at English Wikipedia, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=10547051


What is Data Science?

Some Myths about Data Science

• Data science is an autonomous process that finds the answers

to our problems

• Every data science project requires big data and should use

deep learning

• Data science is easy to do

• Data science pays for itself in a very short time

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


What is/are Data/Data Set?

• A datum (a piece of information) is an abstraction of a real-world entity (i.e., a person, an object, or an event).

• The terms variable, feature, and attribute are used interchangeably for an individual abstraction.

• Each entity is typically described by several attributes.

• A data set consists of the data about a collection of entities, where each entity is described in terms of a collection of attributes.

Hint: “data set” and “analytics record” are often used as equivalent terms.

https://www.merriam-webster.com/dictionary/datum

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


What is/are Data/Data Set?

• The standard attribute types: numeric, nominal, and ordinal.

• Numeric attributes are used to describe measurable quantities,

which are integer or real values.

• Nominal (categorical) attributes typically take values from a finite set.

• Ordinal attributes are similar to nominal attributes, but their values can be ranked (ordered).

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


What is/are Data/Data Set?

• Structured data: are data that can be stored in a table or

spreadsheet, such that each instance/row in the table has the

same structure and set of attributes.

• Unstructured data: are data where each instance in the data set

may have its own internal and specific structure, such that this

so-called structure can be different for each instance.

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


What is/are Data/Data Set?

• Raw data: when attributes are raw abstractions regarding an

object or an event

• Derived data: if we derive them from other pieces of data

• Types of raw data:

o captured data: when we perform a direct measurement or an

observation to collect the data.

o exhaust data: a by-product of a process whose primary objective is not capturing data.

Kitchin, Rob. The Data Revolution: Big Data, Open Data, Data Infrastructures, and Their Consequences. Sage, (2014).

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


What is/are Data/Data Set?

• Metadata: this is the data that describes other data

Example:

US National Security Agency’s (NSA) surveillance program PRISM

collected a large collection of metadata about people’s phone

conversations, i.e., the agency was not recording the content of

phone calls (i.e., there was no wiretapping) but actually the NSA

was collecting the data regarding the calls, e.g., who the recipient

was, the duration of the call, etc.

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)

Pomerantz, Jeffrey. Metadata. Cambridge, MA: MIT Press (2015).


Sources of Data

Typically, 80% of project time is spent on getting data ready.

Problems: unclear variable names, missing values, misspelled text, numbers as text (in spreadsheet), outliers, etc.

Example: Emoji

Unicode number: U+1F914

HTML-code: &#129300;

CSS-code: \1F914

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)

https://unicode-table.com/en/1F914/
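Two of the problems listed above (missing values and numbers stored as text) can be sketched in a few lines of Python. This is only an illustration: the records, the field names, and the `clean_row` helper are hypothetical and not part of the lecture material.

```python
# Hypothetical raw records, as they might come from a spreadsheet export.
raw_rows = [
    {"name": "Hans", "age": "34", "city": "Berlin"},
    {"name": "Joshua", "age": "", "city": "Paris"},       # missing value
    {"name": "Sarah", "age": " 29 ", "city": "london"},   # stray spaces, inconsistent case
]

def clean_row(row):
    """Return a cleaned copy: age as int (None if missing), city title-cased."""
    age_text = row["age"].strip()
    return {
        "name": row["name"].strip(),
        "age": int(age_text) if age_text else None,
        "city": row["city"].strip().title(),
    }

clean_rows = [clean_row(r) for r in raw_rows]
```

Keeping a missing age as `None` instead of guessing a value leaves the decision about how to handle it to a later step.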


Sources of Data

• In-house data: when the data is ready, e.g., your company

provides it.

o Advantages: it is fast and ready, and you can use the data in the frame

of your company and cooperate with people who collected and created

the data.

o Potential disappointments: the data might be poorly gathered, documented, or maintained; the person who gathered it may have left the company; or what you actually need may not be in the data.

Barton Poulson. Data Science Foundations: Fundamentals. (2019)


Sources of Data

Open data: is a kind of data that is (1) gratis and (2) free to use.

• Government, e.g.,

o The home of the U.S. Government’s open data (https://www.data.gov/)

o The global open data index (https://index.okfn.org)

• Science, e.g.,

o Nature: https://www.nature.com/sdata

o The Open Science Data Cloud (OSDC):

https://www.opensciencedatacloud.org

• Social Media, e.g., Google trends (https://trends.google.com/) and

Yahoo finance (https://finance.yahoo.com)


Sources of Data

Other sources of data (with some overlaps):

• Application Programming Interface (API)

• Data Scraping (be careful!)

• Creating Data (be careful!)

• Passive Collection of Data (be careful!)

• Generating Data

Barton Poulson. Data Science Foundations: Fundamentals. (2019)


Ecosystem of Data Science

Data Science Ecosystem: The term refers to the set of

programming languages, software packages and tools, methods

and algorithms, general infrastructure, etc. that an organization

uses to gather, store, analyze, and get maximum advantage from

data in the data science projects of the organization.

There are different sets of technologies used for the purpose of

data science:

● commercial products,

● open-source tools,

● a mixture of open-source tools and commercial products.

https://online.hbs.edu/blog/post/data-ecosystem

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


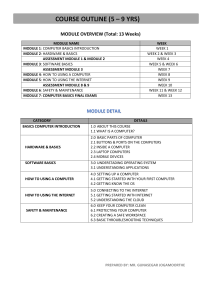

Ecosystem of Data Science

A typical data architecture for data science.

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


Ecosystem of Data Science

• The data sources layer: e.g., online transaction processing (OLTP) systems in banking, finance, call centers, etc.

• The data-storage layer: data sharing, data warehousing, and data analytics across an organization. It has two parts:

o Data-sharing software, which most organizations use.

o Storage and analytics of big data, e.g., with Hadoop.

• The applications layer: the prepared data is used to analyze the specific challenge of the data science project.

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


Legal, Ethical, and Social Issues

• Legal Issues: for example, privacy laws:

o The EU's General Data Protection Regulation (GDPR), California

Consumer Privacy Act (CCPA), etc.

If an organization seriously violates the GDPR, it can face very large fines (up to EUR 20 million or 4% of its worldwide annual turnover, whichever is higher).

• Ethical Issues: concerning authenticity, fairness (equality,

equity, and need), etc.

• Social Issues: public opinion should be respected and taken into

consideration.

David Martens. Data Science Ethics: Concepts, Techniques and Cautionary Tales. Oxford University Press (2022)

Barton Poulson. Data Science Foundations: Fundamentals. (2019)


Legal, Ethical, and Social Issues

https://unctad.org/page/data-protection-and-privacy-legislation-worldwide (accessed December 2021)


Legal, Ethical, and Social Issues

Data science in interaction with human and artificial intelligence:

● Recommendations: after processing the data, the algorithm makes recommendations, but a human decides whether to follow them.

● Human-in-the-Loop decisions: algorithms make and execute their own decisions, but humans are present to monitor and control them.

● Human-Accessible decisions: algorithms make and execute decisions automatically, but the process should be accessible and interpretable.

● Machine-Centric decisions: machines communicate with each other, e.g., in the Internet of Things (IoT).

David Martens. Data Science Ethics: Concepts, Techniques and Cautionary Tales. Oxford University Press (2022)

Barton Poulson. Data Science Foundations: Fundamentals. (2019)


Standard Tasks of Data Science

Most data science projects belong to one of the following classes

of tasks:

• Clustering

• Anomaly (outlier) detection

• Association-rule mining

• Prediction

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


Standard Tasks of Data Science

• Clustering:

Example: through identifying customers and their preferences

and needs, data science can support marketing and sales

campaign of companies via targeted marketing.

The standard data science approach: formulate the problem as a clustering task, where clustering sorts the instances of a data set into subgroups of similar instances. For this purpose, we need to know the number of subgroups (e.g., from domain knowledge) and a range of attributes that describe customers, e.g., demographic information (age, gender, etc.), location (ZIP code), and so on.

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)
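As a rough illustration of the clustering task described above, the following sketch runs a very small k-means procedure on a single numeric attribute. The customer ages, the two initial centers (standing in for "the number of subgroups is known"), and the function name `k_means_1d` are assumptions made for this example; real projects would typically use several attributes and a library implementation.

```python
def k_means_1d(values, centers, iterations=10):
    """Tiny k-means for one numeric attribute; `centers` are the initial guesses."""
    centers = list(centers)
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for v in values:
            # Assign each value to the nearest current center.
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            groups[nearest].append(v)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups

# Hypothetical customer ages; two subgroups are assumed from domain knowledge.
ages = [19, 21, 22, 24, 58, 61, 63, 65]
centers, groups = k_means_1d(ages, centers=[20, 60])
```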


Standard Tasks of Data Science

• Anomaly (outlier) detection:

In anomaly detection (or outlier analysis), we search for and

identify instances that do not match and conform to the typical

instance in a data set, e.g., fraudulent activities, fraudulent credit

card transactions.

A typical approach:

1. Using domain expertise, define some rules.

2. Then, use, e.g., SQL (or another language) to check business

databases or data warehouse.

Another approach: training a prediction model to classify

instances as anomalous versus normal.

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)
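The rule-based approach above (expert rules checked with SQL) might look as follows. This is a sketch using Python's built-in `sqlite3` module; the `transactions` table, its columns, and the thresholds in the rule are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (id INTEGER PRIMARY KEY, card TEXT, amount REAL, country TEXT)"
)
conn.executemany(
    "INSERT INTO transactions (card, amount, country) VALUES (?, ?, ?)",
    [("A", 25.0, "DE"), ("A", 40.0, "DE"), ("B", 9500.0, "XX"), ("C", 12.5, "FR")],
)

# Hypothetical expert rule: flag very large amounts or an unknown country code.
suspicious = conn.execute(
    "SELECT id, card, amount FROM transactions "
    "WHERE amount > 5000 OR country NOT IN ('DE', 'FR') ORDER BY id"
).fetchall()
```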


Standard Tasks of Data Science

• Association-rule mining:

For example, data science can be used in cross-selling, where the vendor asks customers who are currently buying some products whether they are also interested in buying other similar, related, or complementary products.

For the purpose of cross-selling, we need to identify associations between products. This can be done with unsupervised data-analysis techniques.

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)
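One common way to quantify an association between products is through the support and confidence of a rule such as "bread -> butter". The sketch below computes both on a handful of made-up shopping baskets; the data and the helper names are assumptions for illustration only.

```python
# Hypothetical shopping baskets (one set of products per purchase).
baskets = [
    {"bread", "butter"},
    {"bread", "butter", "jam"},
    {"bread", "milk"},
    {"milk", "jam"},
]

def support(items):
    """Fraction of baskets that contain all the given items."""
    return sum(items <= basket for basket in baskets) / len(baskets)

def confidence(antecedent, consequent):
    """Estimated P(consequent | antecedent) for the rule antecedent -> consequent."""
    return support(antecedent | consequent) / support(antecedent)

bread_butter_support = support({"bread", "butter"})
bread_to_butter_conf = confidence({"bread"}, {"butter"})
```

Here two of the four baskets contain both products, and two of the three baskets with bread also contain butter.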


Standard Tasks of Data Science

• Prediction (classification):

Example: In customer relationship management (CRM), a typical

task consists in performing propensity modeling, i.e., estimating

the likelihood that a customer will make a decision, e.g., leaving a

service.

Customer churn: when customers leave one service, e.g., cell

phone company, to join another one.

Using (trained) classification models, the data science task is to help detect (predict) churn, i.e., to classify a customer as a churn risk or not.

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)


Standard Tasks of Data Science

• Prediction (regression):

Example: Price prediction, which consists in estimating the price

of a product at some point in future.

• A typical approach: Using regression because price prediction

consists in estimating the value of a continuous attribute.

John D. Kelleher, Brendan Tierney. Data Science. The MIT Press, (2018)
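A minimal sketch of price prediction by regression, assuming a simple straight-line trend: ordinary least squares is fitted to a few hypothetical monthly prices and then extrapolated. The data and the function name `fit_line` are illustrative assumptions.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    return mean_y - slope * mean_x, slope

# Hypothetical price history: month number and observed price.
months = [1, 2, 3, 4]
prices = [10.0, 12.0, 14.0, 16.0]
intercept, slope = fit_line(months, prices)
forecast_month_6 = intercept + slope * 6
```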


Part 1, Section 2 → Relational Database Models: Modeling and

Querying Structured Attributes of Objects

Part 1: Organizing the “Data Lake” (from data mining to data fishing)

• Relational Database Models: Modeling and Querying Structured Attributes

of Objects

• Graph- and Network-based Data Models: Modeling and Querying

Structured Relations of Objects

• Information Retrieval: Document Mining and Querying of ill-structured Data

• Streaming Data and High Frequency Distributed Sensor Data

• The Semantic Web: Ontologist’s Dream (or nightmare?) of how to integrate

evolving heterogeneous data lakes


Relational Database Models: Modeling and Querying Structured Attributes of Objects

Summary:

In this section, we will see:

• Introduction

• The Relational Model

• Relational Database Management System (RDBMS)

• How to Design a Relational Database

• Database Normalization

• Other Types of Databases


Introduction

• Data Storage Problem

• One-dimensional array

https://www.weforum.org/agenda/2015/02/a-brief-history-of-big-data-everyone-should-read/


Using a List to Organize Data

Holidays Pictures:

Alexanderplatz.jpg

Brandenburg Gate.jpg

Eiffel Tower.jpg

London Eye.jpg

Louvre.jpg

Images from Internet.


Organize Data in Table:

| Country | City | Picture | Date | Person |
|---|---|---|---|---|
| Germany | Berlin | Brandenburg Gate.jpg | 1.7.2021 | Joshua |
| Germany | Berlin | Alexanderplatz.jpg | 1.7.2021 | Hans |
| England | London | London Eye.jpg | 1.9.2021 | Hans |
| France | Paris | Eiffel Tower.jpg | 1.8.2021 | Joshua |
| France | Paris | Louvre.jpg | 1.8.2021 | Hans |

Pros and Cons of a Table Structure:

• It is easy to add attributes with additional columns

• It is easy to add new records with additional rows

• We have to store repetitive information

• It is not easy to accommodate special circumstances

Adam Wilbert. Relational Databases: Essential Training. (2019)


Relational Databases:

Pictures

| Picture# | FileName | Location | Date |
|---|---|---|---|
| 001 | Brandenburg Gate.jpg | 1 | 1.7.2021 |
| 002 | Alexanderplatz.jpg | 1 | 1.7.2021 |
| 003 | Eiffel Tower.jpg | 2 | 1.8.2021 |
| 004 | Louvre.jpg | 2 | 1.8.2021 |
| 005 | London Eye.jpg | 3 | 1.9.2021 |

People

| Picture# | Person |
|---|---|
| 001 | Hans |
| 002 | Joshua |
| 003 | Joshua |
| 004 | Hans |
| 005 | Hans |
| 005 | Joshua |
| 005 | Sarah |

Locations

| Location | City | Country |
|---|---|---|
| 1 | Berlin | Germany |
| 2 | Paris | France |
| 3 | London | England |

The Relational Model


The Relational Model:

• It was originally developed by the computer scientist E. F. Codd in his paper "A Relational Model of Data for Large Shared Data Banks", published in 1970.

• Key points:

o The retrieval of information is separated from its storage.

o Following a set of rules, data is organized across multiple tables that are related to each other.

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)
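The two key points can be sketched with the holiday-pictures example, using Python's built-in `sqlite3` module as a stand-in for a full RDBMS. The column names `PictureNo` and `PictureDate` (instead of Picture# and Date), the ISO date strings, and the reduced set of rows are assumptions made to keep the example short.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Locations (Location INTEGER PRIMARY KEY, City TEXT, Country TEXT);
CREATE TABLE Pictures  (PictureNo TEXT PRIMARY KEY, FileName TEXT,
                        Location INTEGER, PictureDate TEXT);
CREATE TABLE People    (PictureNo TEXT, Person TEXT, PRIMARY KEY (PictureNo, Person));

INSERT INTO Locations VALUES (1, 'Berlin', 'Germany'), (2, 'Paris', 'France'),
                             (3, 'London', 'England');
INSERT INTO Pictures VALUES ('001', 'Brandenburg Gate.jpg', 1, '2021-07-01'),
                            ('005', 'London Eye.jpg', 3, '2021-09-01');
INSERT INTO People VALUES ('001', 'Hans'), ('005', 'Hans'),
                          ('005', 'Joshua'), ('005', 'Sarah');
""")

# Who appears on pictures taken in London? The query names tables and columns
# only; it says nothing about how or where the rows are stored.
london_people = [row[0] for row in conn.execute("""
    SELECT People.Person
    FROM People
    JOIN Pictures  ON Pictures.PictureNo = People.PictureNo
    JOIN Locations ON Locations.Location = Pictures.Location
    WHERE Locations.City = 'London'
    ORDER BY People.Person
""")]
```

City and country are stored once per location, and the join reassembles the combined view only when it is needed.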


The Pictures Database:

Pictures

| Picture# | FileName | Location | Date |
|---|---|---|---|
| 001 | Brandenburg Gate.jpg | 1 | 1.7.2021 |
| 002 | Alexanderplatz.jpg | 1 | 1.7.2021 |
| 003 | Eiffel Tower.jpg | 2 | 1.8.2021 |
| 004 | Louvre.jpg | 2 | 1.8.2021 |
| 005 | London Eye.jpg | 3 | 1.9.2021 |
| 006 | River Thames.jpg | 3 | 1.9.2021 |

People

| Picture# | Person |
|---|---|
| 001 | Hans |
| 002 | Joshua |
| 003 | Joshua |
| 004 | Hans |
| 005 | Hans |
| 005 | Joshua |
| 005 | Sarah |

Locations

| Location | City | Country |
|---|---|---|
| 1 | Berlin | Germany |
| 2 | Paris | France |
| 3 | London | England |


Relational Database Management System (RDBMS)

Some RDBMS systems and sellers

• Microsoft SQL Server (SQL: Structured Query Language)

• PostgreSQL

• Azure SQL Database

• IBM Db2

• Oracle

• MySQL

• SQLite

https://realpython.com/python-sql-libraries/

Adam Wilbert. Relational Databases: Essential Training. (2019)


RDBMS Tasks

• Creating and modifying the structure of the data

• Defining names for tables and columns

• Creating key-value columns and building relationships

• Manipulating records and performing CRUD operations

o Create new records of data

o Read data that exists

o Update values of data

o Delete records from the database

I. Robinson, J. Webber, and E. Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Adam Wilbert. Relational Databases: Essential Training. (2019)
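The four CRUD operations map directly onto the SQL statements INSERT, SELECT, UPDATE, and DELETE. The sketch below runs them through Python's built-in `sqlite3` module; the `People` table and its rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE People (PersonID INTEGER PRIMARY KEY, FirstName TEXT, FavoriteColor TEXT)"
)

# Create: add new records.
conn.execute("INSERT INTO People (FirstName, FavoriteColor) VALUES (?, ?)", ("Hans", "Green"))
conn.execute("INSERT INTO People (FirstName, FavoriteColor) VALUES (?, ?)", ("Joshua", "Green"))

# Read: query existing data.
after_create = conn.execute(
    "SELECT FirstName, FavoriteColor FROM People ORDER BY PersonID"
).fetchall()

# Update: change values of existing records.
conn.execute("UPDATE People SET FavoriteColor = ? WHERE FirstName = ?", ("Yellow", "Hans"))

# Delete: remove records.
conn.execute("DELETE FROM People WHERE FirstName = ?", ("Joshua",))

after_changes = conn.execute(
    "SELECT FirstName, FavoriteColor FROM People ORDER BY PersonID"
).fetchall()
```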


Further RDBMS Tasks

• Performing regular backups

• Maintaining copies of the database

• Controlling access permissions

• Creating reports including visualizations

• Creating forms

How to interact with an RDBMS?

• Part 1: Graphical interface

• Part 2: Coding with SQL (Structured Query Language)

Adam Wilbert. Relational Databases: Essential Training. (2019)


Database Components

• Relations

• Domains

• Tuples

Alternative terms for database components

• Tables

• Columns

• Records

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)


Data Table

[Figure: a table with the columns (fields, attributes) Person Name, Favorite Color, and Eye Color; each row is a record.]


How to Design a Relational Database

• Find out which information should be stored

• Pay attention to what you want to extract from the database

• Group related information into tables

• Hint: think of tables as "nouns" and columns as "adjectives" of the nouns

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)


Example: Entity Relationship Diagram

Customers: CustomerID, FirstName, LastName, StreetAddress, City, State, Zip

Orders: OrderID, CustomerID, ProductName, Quantity

Adam Wilbert. Relational Databases: Essential Training. (2019)

Example: Entity Relationship Diagram

One-to-Many Relationship

Customers (1): CustomerID, FirstName, LastName, StreetAddress, City, State, Zip

Orders (N): OrderID, CustomerID, ProductName, Quantity


Example: Entity Relationship Diagram

One-to-Many Relationship: Crow's Foot Notation

Customers: CustomerID, FirstName, LastName, StreetAddress, City, State, Zip

Orders: OrderID, CustomerID, ProductName, Quantity

Gordon C. Everest, "Basic Data Structure Models Explained With A Common Example" Computing Systems, Proceedings Fifth Texas

Conference on Computing Systems, Austin, TX, 1976 October 18-19, pages 39-46.


Example: Entity Relationship Diagram

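Products

| Column | Data type |
|---|---|
| ProductName | int |
| PartNumber | int |
| Size | int |
| Color | int |
| Price | int |
| Supplier | int |
| QuantityInStock | int |

Suppliers

| Column | Data type |
|---|---|
| SupplierName | int |
| PhoneNumber | int |
| StreetAddress | int |
| City | int |
| State | int |
| Zip | int |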

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)


Database Diagram: Data Types

| Order | Name | BirthDate | Salary |
|---|---|---|---|
| Number | Text | Date | Currency |
| Number | Text | Date | Currency |
| Number | Text | Date | Currency |

Benefits of Data Types

• Efficient storage

• Data consistency and improved quality

• Improved performance

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)

Data Type Categories

• Character or text: char(5), nchar(20), varchar(100)

• Numerical data: tinyint, int, decimal/float

• Currency, times, dates

• Other data types: geographic coordinates, binary files, etc.

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)


Example: Data Types

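Products

| Column | Data type |
|---|---|
| ProductName | varchar(100) |
| PartNumber | int |
| Size | varchar(20) |
| Color | varchar(20) |
| Price | decimal |
| Supplier | varchar(100) |
| QuantityInStock | int |

Suppliers

| Column | Data type |
|---|---|
| SupplierID | int |
| SupplierName | varchar(100) |
| PhoneNumber | char(15) |
| StreetAddress | varchar(100) |
| City | varchar(50) |
| State | char(3) |
| Zip | char(5) |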

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)


Primary Key

• Definition: A primary key is the column or columns that contain values

that are used to uniquely identify each row in a table.

• Guaranteed to be unique (forever)

Some ways to define primary keys:

o Natural keys: there may already be unique identifiers in your data

o Composite keys: concatenation of multiple columns

o Surrogate keys: created just for the database (product id, …).

https://www.ibm.com/docs/en/iodg/11.3?topic=reference-primary-keys

Adam Wilbert. Relational Databases: Essential Training. (2019)
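A surrogate key and a composite key can be sketched as follows, again with Python's built-in `sqlite3` module. The table layouts loosely follow the examples in these slides; the names `Persons` and `PictureNo` are assumptions for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Surrogate key: PersonalID exists only to identify rows; SQLite assigns the values.
conn.execute(
    "CREATE TABLE Persons (PersonalID INTEGER PRIMARY KEY, FirstName TEXT, LastName TEXT)"
)
conn.executemany(
    "INSERT INTO Persons (FirstName, LastName) VALUES (?, ?)",
    [("Hans", "Kurz"), ("Hans", "Long")],
)
ids = [row[0] for row in conn.execute("SELECT PersonalID FROM Persons ORDER BY PersonalID")]

# Composite key: the pair (PictureNo, Person) must be unique, not each column alone.
conn.execute(
    "CREATE TABLE People (PictureNo TEXT, Person TEXT, PRIMARY KEY (PictureNo, Person))"
)
conn.execute("INSERT INTO People VALUES ('005', 'Hans')")
conn.execute("INSERT INTO People VALUES ('005', 'Joshua')")  # same picture, other person: fine
try:
    conn.execute("INSERT INTO People VALUES ('005', 'Hans')")  # exact duplicate pair
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True
```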


Example: Determining a Primary Key

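Without a primary key:

| FirstName | LastName | FavoriteColor |
|---|---|---|
| Hans | Kurz | Green |
| Hans | Long | Yellow |
| Joshua | Müller | Green |

With a primary key:

| PersonalID (PK) | FirstName | LastName | FavoriteColor |
|---|---|---|---|
| 001 | Hans | Kurz | Green |
| 002 | Hans | Long | Yellow |
| 003 | Joshua | Müller | Green |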

Some Notes on Naming Tables and Columns:

• Consistency

• Capitalization

• Spaces

• Avoid reserved words: command keywords should not be used in the names you define

• Avoid acronyms: use full and legible terms

Adam Wilbert. Relational Databases: Essential Training. (2019)


Integrity and Validity

Data Integrity ensures that information is identical to its source, and the

information has not been, in an accidental or malicious way, altered, modified,

or destroyed.

Validation is about the evaluations used to determine compliance and

accordance with security requirements.

• Data Validation: Invalid data is not allowed to be in database and the user

receives error message in case of inappropriate inputs/values.

• Unique Values: When a value can appear only once, e.g., primary key.

• Business Rules: Take into account your organization’s constraints.

https://oa.mo.gov/sites/default/files/CC-DataIntegrityandValidation.pdf

Adam Wilbert. Relational Databases: Essential Training. (2019)


Example: Unique Constraint

Products

| Column | Data type |
|---|---|
| ProductName | varchar(100) UNIQUE |
| PartNumber | int |
| Size | varchar(20) |
| Color | varchar(20) |
| Price | decimal |
| Supplier | varchar(100) |
| QuantityInStock | int |

Suppliers

| Column | Data type |
|---|---|
| SupplierID | int |
| SupplierName | varchar(100) |
| PhoneNumber | char(15) |
| StreetAddress | varchar(100) |
| City | varchar(50) |
| State | char(3) |
| Zip | char(5) |

In creating the table [Products], you should add the following lines:

• In MS SQL Server: CONSTRAINT [UK_Products_ProductName] UNIQUE ([ProductName]),

Adam Wilbert. Relational Databases: Essential Training. (2019)
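The effect of a unique constraint can be tried out with Python's built-in `sqlite3` module. Note that this is SQLite rather than MS SQL Server, so the square-bracket quoting is dropped, and the reduced `Products` table and its rows are assumptions for this sketch.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE Products (
        ProductName TEXT NOT NULL,
        PartNumber  INTEGER,
        CONSTRAINT UK_Products_ProductName UNIQUE (ProductName)
    )
""")
conn.execute("INSERT INTO Products VALUES ('Widget', 1)")
try:
    conn.execute("INSERT INTO Products VALUES ('Widget', 2)")  # same name again
    second_insert_error = None
except sqlite3.IntegrityError as exc:
    second_insert_error = str(exc)

product_count = conn.execute("SELECT COUNT(*) FROM Products").fetchone()[0]
```

The second insert is rejected by the database itself, so the table still holds exactly one row.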


• NULL values: indicate that data is not known, is not specified, or is not

applicable.

• NOT NULL values: indicate a required column.

Example: Birthdate of customers or employees?

People

| FirstName | Birthdate |
|---|---|
| Joshua | April 25, 1990 |
| Albert | |
| Valentin | July 14, 2000 |

Adam Wilbert. Relational Databases: Essential Training. (2019)
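A small sketch of NULL and NOT NULL using Python's built-in `sqlite3` module. Making `FirstName` required and `Birthdate` optional is an assumption chosen for this illustration, as are the ISO date strings.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# FirstName is required; Birthdate may be unknown (NULL).
conn.execute("CREATE TABLE People (FirstName TEXT NOT NULL, Birthdate TEXT)")
conn.executemany(
    "INSERT INTO People VALUES (?, ?)",
    [("Joshua", "1990-04-25"), ("Albert", None), ("Valentin", "2000-07-14")],
)

# NULL is matched with IS NULL, not with "= NULL".
unknown_birthdates = [
    row[0] for row in conn.execute("SELECT FirstName FROM People WHERE Birthdate IS NULL")
]

try:
    conn.execute("INSERT INTO People VALUES (?, ?)", (None, "1985-01-01"))
    missing_name_rejected = False
except sqlite3.IntegrityError:
    missing_name_rejected = True
```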


Indexes

• Indexes are added to any column which is used in frequent searches.

o Clustered indexes: primary keys

o Non-clustered indexes: all other indexes

• Issues in adding too many indexes

o It will reduce speed in adding new records

o Note: you can still search on non-indexed fields

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)


Example: Creating Index

Products

| Column | Data type |
|---|---|
| ProductName | varchar(100) UNIQUE |
| PartNumber | int |
| Size | varchar(20) |
| Color | varchar(20) |
| Price | decimal |
| Supplier | varchar(100) |
| QuantityInStock | int |

Suppliers

| Column | Data type |
|---|---|
| SupplierID | int |
| SupplierName | varchar(100) |
| PhoneNumber | char(15) |
| StreetAddress | varchar(100) |
| City | varchar(50) |
| State | char(3) |
| Zip | char(5) |

• In MS SQL Server: CREATE INDEX [idx_Suppliers_SupplierName] ON [Suppliers] ([SupplierName])

Adam Wilbert. Relational Databases: Essential Training. (2019)
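The same index can be created in SQLite through Python's built-in `sqlite3` module. The reduced `Suppliers` table and the sample row are assumptions; `sqlite_master` is SQLite's own catalog table and is used here only to confirm that the index exists.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Suppliers (SupplierID INTEGER PRIMARY KEY, SupplierName TEXT, City TEXT)"
)
conn.execute("CREATE INDEX idx_Suppliers_SupplierName ON Suppliers (SupplierName)")

# List the indexes that SQLite has recorded for the table.
index_names = [
    row[0]
    for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'index' AND tbl_name = 'Suppliers'"
    )
]

# Searching on the non-indexed City column still works; it just cannot use the index.
conn.execute("INSERT INTO Suppliers (SupplierName, City) VALUES ('Acme', 'Kaiserslautern')")
by_city = conn.execute(
    "SELECT SupplierName FROM Suppliers WHERE City = 'Kaiserslautern'"
).fetchall()
```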


Check (Integrity) Constraints

These are built directly into the design of the table and then entry data is

checked and validated before being saved to the table.

• Numerical Checks: It can include a range of acceptable values.

• Character Checks: It can be used to limit possible cases.

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)


Example: Check Constraint

Products

| Column | Data type |
|---|---|
| ProductName | varchar(100) UNIQUE |
| PartNumber | int |
| Size | varchar(20) |
| Color | varchar(20) |
| Price | decimal |
| Supplier | varchar(100) |
| QuantityInStock | int |

Suppliers

| Column | Data type |
|---|---|
| SupplierID | int |
| SupplierName | varchar(100) |
| PhoneNumber | char(15) |
| StreetAddress | varchar(100) |
| City | varchar(50) |
| State | char(3) |
| Zip | char(5) |

• In MS SQL Server: [State] char(3) NOT NULL CONSTRAINT CHK_State CHECK (State = 'RLP' OR State = 'NRW'),

Adam Wilbert. Relational Databases: Essential Training. (2019)
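The check constraint on State can be tried out in SQLite through Python's built-in `sqlite3` module. This is a sketch on a reduced `Suppliers` table; the inserted values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE Suppliers (
        SupplierID INTEGER PRIMARY KEY,
        State      TEXT NOT NULL,
        CONSTRAINT CHK_State CHECK (State = 'RLP' OR State = 'NRW')
    )
""")
conn.execute("INSERT INTO Suppliers (State) VALUES ('RLP')")  # allowed value
try:
    conn.execute("INSERT INTO Suppliers (State) VALUES ('XYZ')")  # not in the allowed set
    invalid_state_rejected = False
except sqlite3.IntegrityError:
    invalid_state_rejected = True

supplier_count = conn.execute("SELECT COUNT(*) FROM Suppliers").fetchone()[0]
```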


Relationships


The Pictures Database:

Pictures

Picture#

FileName

Location

Date

001

Brandenburg Gate.jpg

1

1.7.2021

People

002

Alexanderplatz.jpg

1

1.7.2021

Picture#

Person

003

Eiffel Tower.jpg

2

1.8.2021

001

Hans

004

Louvre.jpg

2

1.8.2021

002

Joshua

005

London Eye.jpg

3

1.9.2021

003

Joshua

004

Hans

005

Hans

005

Joshua

005

Sarah

Locations

Location

City

Country

1

Berlin

Germany

2

Paris

France

3

London

England

Example: Creating Relationships and Primary Key (PK)

Pictures:   Picture# (PK), FileName, Location, Date
People:     Picture# (PK), Person (PK)
Locations:  Location (PK), City, Country

Adam Wilbert. Relational Databases: Essential Training. (2019)

Example: Creating Relationships and Links

Pictures:   Picture# (PK), FileName, Location, Date
People:     Picture# (PK), Person (PK)
Locations:  Location (PK), City, Country

[Diagram: lines link Pictures.Picture# to People.Picture# and Pictures.Location to Locations.Location]

Adam Wilbert. Relational Databases: Essential Training. (2019)

Example: Creating Relationships and Foreign Key (FK)

Pictures:   Picture# (PK), FileName, Location (FK), Date
People:     Picture# (PK) (FK), Person (PK)
Locations:  Location (PK), City, Country

Adam Wilbert. Relational Databases: Essential Training. (2019)

Some Notes:

• Generally, a relationship is created on the foreign key (FK) column.

• The FK and PK columns must have the same data type.

• The FK and PK columns may have the same name or different names.

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)
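As a sketch of how a foreign key protects a relationship, the example below rebuilds part of the Pictures database with Python's sqlite3 module (an assumption; the slides target MS SQL Server). The column name PictureNo stands in for Picture#, and the rejected row '099' is invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")

conn.execute(
    "CREATE TABLE Locations (Location INTEGER PRIMARY KEY, City TEXT, Country TEXT)"
)
conn.execute(
    "CREATE TABLE Pictures ("
    " PictureNo TEXT PRIMARY KEY,"          # stands in for Picture#
    " FileName TEXT,"
    " Location INTEGER REFERENCES Locations (Location),"  # FK, same type as the PK
    " Date TEXT)"
)
conn.execute("INSERT INTO Locations VALUES (1, 'Berlin', 'Germany')")
conn.execute("INSERT INTO Pictures VALUES ('001', 'Brandenburg Gate.jpg', 1, '1.7.2021')")

# Location 42 does not exist in Locations, so the FK rejects this row.
try:
    conn.execute("INSERT INTO Pictures VALUES ('099', 'Nowhere.jpg', 42, '1.1.2022')")
    fk_violation = False
except sqlite3.IntegrityError:
    fk_violation = True

picture_count = conn.execute("SELECT COUNT(*) FROM Pictures").fetchone()[0]
```

Here the FK and PK columns happen to share the name Location; as noted above, that is a convention and not a requirement.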

Exercise: Create a relationship between the following tables.

Hint: First, you need to change one of the columns in the Products table.

Products
  ProductName      varchar(100) UNIQUE
  PartNumber       int
  Size             varchar(20)
  Color            varchar(20)
  Price            decimal
  Supplier         varchar(100)
  QuantityInStock  int

Suppliers
  SupplierID       int
  SupplierName     varchar(100)
  PhoneNumber      char(15)
  StreetAddress    varchar(100)
  City             varchar(50)
  State            char(3)
  Zip              char(5)

Optionality

• The minimum number of related records
• Usually, 0 or 1

Example:
• If a course must have a responsible person, optionality = 1
• If a course might have a responsible person, optionality = 0

Cardinality

• The maximum number of related records
• Usually, 1 or many (N)

Example:
• If a course can have only one responsible person, cardinality = 1
• If a course can have several responsible persons, cardinality = N

1 .. N denotes the range from optionality = 1 (the "must" case) to cardinality = N (unspecified maximum).

Example: Database Diagram and Optionality-Cardinality

Pictures:   Picture# (PK), FileName, Location (FK), Date
People:     Picture# (PK) (FK), Person (PK)
Locations:  Location (PK), City, Country

Pictures (1 .. 1) ---- (0 .. N) People
Pictures (0 .. N) ---- (1 .. 1) Locations

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)


Optionality     | Cardinality
1 = NOT NULL    | 1 = UNIQUE constraint
0 = NULL        | N = No constraint

Optionality .. Cardinality
1 .. 1  | Not Null + Unique
0 .. N  | Null + Not Unique
1 .. N  | Not Null + Not Unique
0 .. 1  | Null + Unique

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)
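The mapping above can be checked directly. The sketch below declares a 1 .. 1 column (NOT NULL + UNIQUE) with Python's sqlite3 module; the Courses table, the Responsible column, and the names are hypothetical, chosen to match the course example used for optionality and cardinality.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Courses ("
    " CourseID INTEGER PRIMARY KEY,"
    " Responsible TEXT NOT NULL UNIQUE)"  # optionality 1, cardinality 1
)

def try_insert(course_id, responsible):
    """Return True if the row is accepted, False if a constraint rejects it."""
    try:
        conn.execute("INSERT INTO Courses VALUES (?, ?)", (course_id, responsible))
        return True
    except sqlite3.IntegrityError:
        return False

ok_first = try_insert(1, "Lecturer A")      # accepted
ok_null = try_insert(2, None)               # violates NOT NULL (optionality 1)
ok_duplicate = try_insert(3, "Lecturer A")  # violates UNIQUE (cardinality 1)
```

Dropping NOT NULL would give 0 .. 1, dropping UNIQUE would give 1 .. N, and dropping both would give 0 .. N.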

One-to-Many Relationships

Library Database

LibraryUsers
CardNumber | UserName
50001      | Valentin
50002      | Laura
50003      | Christian

BookLoans
CardNumber | BookName              | CheckoutDate
50001      | Operations Research   | 15.10.2021
50001      | SQL & NoSQL Databases | 01.11.2021
50001      | SQL For Dummies       | 01.12.2021

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)

One-to-One Relationships

Employee Database

Employees:       EmployeeID (PK), FirstName, LastName, Position, OfficeNumber
HumanResources:  EmployeeID (PK), Salary, JobRating

Employees (1 .. 1) ---- (1 .. 1) HumanResources

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)

Many-to-Many Relationships

Example: Class Schedule Database

Students:  StudentID (PK), StudentName
Courses:   CourseID (PK), CourseName, RoomName

Students (0 .. N) ---- (0 .. N) Courses

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)

Example: Class Schedule Database

Students:        StudentID (PK), StudentName
StudentCourses:  CourseID (PK), StudentID (PK), Grade
Courses:         CourseID (PK), CourseName, RoomName

Students (1 .. 1) ---- (0 .. N) StudentCourses (0 .. N) ---- (1 .. 1) Courses

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)
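The linking table can be sketched as follows, using Python's sqlite3 module (an assumption; any relational system would do). The student names, course names, rooms, and grades are invented sample data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE Students (StudentID INTEGER PRIMARY KEY, StudentName TEXT);
CREATE TABLE Courses (CourseID INTEGER PRIMARY KEY, CourseName TEXT, RoomName TEXT);
-- Linking table: one row per (course, student) pair, composite primary key.
CREATE TABLE StudentCourses (
    CourseID INTEGER NOT NULL REFERENCES Courses (CourseID),
    StudentID INTEGER NOT NULL REFERENCES Students (StudentID),
    Grade REAL,
    PRIMARY KEY (CourseID, StudentID)
);
INSERT INTO Students VALUES (1, 'Laura'), (2, 'Valentin');
INSERT INTO Courses VALUES (10, 'Basics of Data Science', 'Room A'),
                           (20, 'Operations Research', 'Room B');
INSERT INTO StudentCourses VALUES (10, 1, 1.7), (10, 2, 2.0), (20, 1, 1.3);
""")

# Resolve the many-to-many relationship with two joins through the linking table.
rows = conn.execute(
    "SELECT s.StudentName, c.CourseName FROM StudentCourses sc"
    " JOIN Students s ON s.StudentID = sc.StudentID"
    " JOIN Courses c ON c.CourseID = sc.CourseID"
    " ORDER BY s.StudentName, c.CourseName"
).fetchall()
```

The many-to-many relationship is thus replaced by two one-to-many relationships, and attributes of the pairing itself (here Grade) live in the linking table.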

Self Joins (Recursive Relationships)

• Other names: recursive relationship, self-referencing relationship

• Relationship rules and types: the same rules and types apply as between two tables

Employees Table
EmployeeID (PK) | Name   | SupervisorID
1008            | Maxim  |
1009            | Joshua | 1008
1020            | Sven   | 1008
1021            | Sarah  | 1020

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)

Employee Organizational Chart

Maxim
├── Joshua
└── Sven
    └── Sarah

Self Join Diagram

Employees:  EmployeeID (PK), Name, SupervisorID (FK)

Check constraint: SupervisorID ≠ EmployeeID

Adam Wilbert. Relational Databases: Essential Training. (2019)
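A self join can be written by giving the same table two aliases. The sketch below uses Python's sqlite3 module and the Employees data from the slides; storing NULL as Maxim's SupervisorID is an assumption, since the slide leaves that cell empty.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Employees (
    EmployeeID INTEGER PRIMARY KEY,
    Name TEXT,
    SupervisorID INTEGER REFERENCES Employees (EmployeeID),  -- FK to the same table
    CHECK (SupervisorID <> EmployeeID)                       -- nobody supervises themselves
);
INSERT INTO Employees VALUES
    (1008, 'Maxim', NULL),   -- top of the chart (NULL is an assumption)
    (1009, 'Joshua', 1008),
    (1020, 'Sven', 1008),
    (1021, 'Sarah', 1020);
""")

# Alias e is the employee, alias s is the same table read as "supervisor".
pairs = conn.execute(
    "SELECT e.Name, s.Name FROM Employees e"
    " JOIN Employees s ON e.SupervisorID = s.EmployeeID"
    " ORDER BY e.EmployeeID"
).fetchall()
```

Maxim does not appear as an employee in the result because an inner join drops rows without a matching supervisor.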

Cascade Updates and Deletes:

Example:

Pictures
Picture# | FileName             | Location | Date
001      | Brandenburg Gate.jpg | 1        | 1.7.2021
002      | Alexanderplatz.jpg   | 1        | 1.7.2021
003      | Eiffel Tower.jpg     | 2        | 1.8.2021
004      | Louvre.jpg           | 2        | 1.8.2021
005      | London Eye.jpg       | 3        | 1.9.2021

Locations
Location | City   | Country
1        | Berlin | Germany
2        | Paris  | France
3        | London | England

Cascade Updates and Deletes

Pictures
Picture# | FileName             | Location | Date
001      | Brandenburg Gate.jpg | 1        | 1.7.2021
002      | Alexanderplatz.jpg   | 1        | 1.7.2021
003      | Eiffel Tower.jpg     | 2 → 7    | 1.8.2021
004      | Louvre.jpg           | 2 → 7    | 1.8.2021
005      | London Eye.jpg       | 3        | 1.9.2021

Locations
Location | City   | Country
1        | Berlin | Germany
2 → 7    | Paris  | France
3        | London | England


How to implement cascade changes

• Note that cascade updates and deletes do not concern the insertion of new data.

• If you choose to switch off the cascade functionality, the relationship still protects data integrity, e.g., against accidental changes.

• How to activate: depends on the platform that you use.

• In SQL: ON UPDATE CASCADE and ON DELETE CASCADE

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)
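The two SQL options can be tried on the Pictures database. This is a sketch with Python's sqlite3 module (an assumption; activation details differ by platform, as noted above). PictureNo stands in for Picture#, and changing location 2 to 7 is an illustrative update.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # required in SQLite for FK actions
conn.executescript("""
CREATE TABLE Locations (Location INTEGER PRIMARY KEY, City TEXT, Country TEXT);
CREATE TABLE Pictures (
    PictureNo TEXT PRIMARY KEY,
    FileName TEXT,
    Location INTEGER REFERENCES Locations (Location)
        ON UPDATE CASCADE ON DELETE CASCADE,
    Date TEXT
);
INSERT INTO Locations VALUES (1, 'Berlin', 'Germany'), (2, 'Paris', 'France'),
                             (3, 'London', 'England');
INSERT INTO Pictures VALUES
    ('001', 'Brandenburg Gate.jpg', 1, '1.7.2021'),
    ('002', 'Alexanderplatz.jpg', 1, '1.7.2021'),
    ('003', 'Eiffel Tower.jpg', 2, '1.8.2021'),
    ('004', 'Louvre.jpg', 2, '1.8.2021'),
    ('005', 'London Eye.jpg', 3, '1.9.2021');
""")

# Cascade update: changing the key in Locations rewrites the FK values in Pictures.
conn.execute("UPDATE Locations SET Location = 7 WHERE Location = 2")
after_update = conn.execute(
    "SELECT PictureNo, Location FROM Pictures ORDER BY PictureNo"
).fetchall()

# Cascade delete: removing the location also removes the pictures that reference it.
conn.execute("DELETE FROM Locations WHERE Location = 7")
after_delete = [
    row[0] for row in conn.execute("SELECT PictureNo FROM Pictures ORDER BY PictureNo")
]
```

Cascade deletes remove dependent rows silently, so they are best enabled only where losing the child rows is really intended.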

Database Normalization

• Normalization consists of a set of rules that describe the proper design of a database.

• The rules for table structure are called "normal forms" (NFs).

• There are first, second, and third normal forms, which should be satisfied in that order.

• A database has a good design if it satisfies the "third normal form" (3NF).

First Normal Form (1NF)

• It requires that every field of a table contains a single piece of data.

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)

Example 1: 1NF not satisfied

Picture# | FileName             | Person
001      | Brandenburg Gate.jpg | Hans
002      | Alexanderplatz.jpg   | Joshua
003      | Eiffel Tower.jpg     | Joshua
004      | Louvre.jpg           | Hans
005      | London Eye.jpg       | Hans, Joshua, Sarah
006      | River Thames.jpg     |

A bad solution to the issue:

Picture# | FileName             | Person1 | Person2 | Person3
001      | Brandenburg Gate.jpg | Hans    |         |
002      | Alexanderplatz.jpg   | Joshua  |         |
003      | Eiffel Tower.jpg     | Joshua  |         |
004      | Louvre.jpg           | Hans    |         |
005      | London Eye.jpg       | Hans    | Joshua  | Sarah
006      | River Thames.jpg     |         |         |

Example 1: satisfying 1NF

Pictures
Picture# | FileName             | Location | Date
001      | Brandenburg Gate.jpg | 1        | 1.7.2021
002      | Alexanderplatz.jpg   | 1        | 1.7.2021
003      | Eiffel Tower.jpg     | 2        | 1.8.2021
004      | Louvre.jpg           | 2        | 1.8.2021
005      | London Eye.jpg       | 3        | 1.9.2021

People
Picture# | Person
001      | Hans
002      | Joshua
003      | Joshua
004      | Hans
005      | Hans
005      | Joshua
005      | Sarah

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)
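The step from the multi-valued Person field to a separate People table can be sketched in a few lines of plain Python. The function name to_first_normal_form is hypothetical; the rows are the ones from Example 1, with the comma-separated Person field as the 1NF violation.

```python
# Rows as in the table that violates 1NF: the Person field may hold several names.
wide_rows = [
    ("001", "Brandenburg Gate.jpg", "Hans"),
    ("002", "Alexanderplatz.jpg", "Joshua"),
    ("003", "Eiffel Tower.jpg", "Joshua"),
    ("004", "Louvre.jpg", "Hans"),
    ("005", "London Eye.jpg", "Hans, Joshua, Sarah"),
    ("006", "River Thames.jpg", ""),
]

def to_first_normal_form(rows):
    """Split the rows into a Pictures list and a People list with one person per row."""
    pictures = []
    people = []
    for picture_no, file_name, persons in rows:
        pictures.append((picture_no, file_name))
        for person in persons.split(","):
            person = person.strip()
            if person:  # pictures without people produce no People rows
                people.append((picture_no, person))
    return pictures, people

pictures, people = to_first_normal_form(wide_rows)
```

Unlike the Person1/Person2/Person3 layout, this structure needs no schema change when a picture shows a fourth person.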

Example 2: satisfying 1NF

Address
Gottlieb Daimler Str. 42, Kaiserslautern, RLP 67663

Street                | Building | City           | State | PostalCode
Gottlieb Daimler Str. | 42       | Kaiserslautern | RLP   | 67663

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)

Second Normal Form (2NF)

• A table fulfills the 2NF if all of the fields in the primary key are required to determine the other fields, i.e., the non-key fields.

People
Picture# (PK) | Person (PK)
001           | Hans
002           | Joshua
003           | Joshua
004           | Hans
005           | Hans
005           | Joshua
005           | Sarah

People (with a LastName column added)
Picture# (PK) | Person (PK) | LastName
001           | Hans        | Schmidt
002           | Joshua      | Schmidt
003           | Joshua      | Schmidt
004           | Hans        | Schmidt
005           | Hans        | Schmidt
005           | Joshua      | Schmidt
005           | Sarah       | Woods

In the second table, LastName is determined by Person alone and not by the whole key (Picture#, Person), so the table does not fulfill 2NF.

Example: satisfying 2NF

People
Picture# (PK) | Person (PK)
001           | 1
002           | 2
003           | 2
004           | 1
005           | 1
005           | 2
005           | 3

Person
Person (PK) | FirstName | LastName
1           | Hans      | Schmidt
2           | Joshua    | Schmidt
3           | Sarah     | Woods

Third Normal Form

• A table fulfills 3NF if all of the non-key fields are independent of any other non-key field.

Example: 3NF is violated

Person
Person (PK) | FirstName | LastName | StateAbbv | StateName
1           | Hans      | Schmidt  | RLP       | Rheinland-Pfalz
2           | Joshua    | Schmidt  | BWG       | Baden-Württemberg
3           | Sarah     | Woods    | NRW       | Nordrhein-Westfalen

Ramon A. Mata-Toledo and Pauline K. Cushman. Fundamentals of Relational Databases. McGraw-Hill (2000)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)

Example: satisfying 3NF

Person
Person (PK) | FirstName | LastName | StateAbbv
1           | Hans      | Schmidt  | RLP
2           | Joshua    | Schmidt  | BWG
3           | Sarah     | Woods    | NRW

State
StateAbbv (PK) | StateName
RLP            | Rheinland-Pfalz
BWG            | Baden-Württemberg
NRW            | Nordrhein-Westfalen

Denormalization

• The objective of the normalization process is to remove redundant information from the database and to make the database work properly.

• In contrast, the aim of denormalization is to introduce redundancy by deliberately violating one of the normal forms when the database design has a good reason to do so, e.g., to increase performance in some application contexts.

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)
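One way to picture denormalization is to materialize a join of two normalized tables into a single redundant table. The sketch below does this with Python's sqlite3 module; the table name PersonDenormalized and the key column name PersonID are assumptions made for the example, and the rows are the Person and State data from the normalization examples.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Normalized design (3NF): the state name is stored once, in State.
CREATE TABLE State (StateAbbv TEXT PRIMARY KEY, StateName TEXT);
CREATE TABLE Person (
    PersonID INTEGER PRIMARY KEY, FirstName TEXT, LastName TEXT,
    StateAbbv TEXT REFERENCES State (StateAbbv)
);
INSERT INTO State VALUES ('RLP', 'Rheinland-Pfalz'), ('BWG', 'Baden-Württemberg'),
                         ('NRW', 'Nordrhein-Westfalen');
INSERT INTO Person VALUES (1, 'Hans', 'Schmidt', 'RLP'), (2, 'Joshua', 'Schmidt', 'BWG'),
                          (3, 'Sarah', 'Woods', 'NRW');
-- Denormalized copy: StateName is stored redundantly next to StateAbbv,
-- so reads no longer need a join.
CREATE TABLE PersonDenormalized AS
    SELECT p.PersonID, p.FirstName, p.LastName, p.StateAbbv, s.StateName
    FROM Person p JOIN State s ON s.StateAbbv = p.StateAbbv;
""")

denormalized = conn.execute(
    "SELECT PersonID, FirstName, StateAbbv, StateName"
    " FROM PersonDenormalized ORDER BY PersonID"
).fetchall()
```

The price of the faster read is that a renamed state must now be updated in every affected row of PersonDenormalized, which is exactly the redundancy that 3NF avoids.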

Example: denormalizing a Table

Normalized tables:

Person
Person (PK) | FirstName | LastName | StateAbbv
1           | Hans      | Schmidt  | RLP
2           | Joshua    | Schmidt  | BWG
3           | Sarah     | Woods    | NRW

State
StateAbbv (PK) | StateName
RLP            | Rheinland-Pfalz
BWG            | Baden-Württemberg
NRW            | Nordrhein-Westfalen

Example: denormalizing a Table

Denormalized table:

Person (PK) | FirstName | LastName | StateAbbv | StateName
1           | Hans      | Schmidt  | RLP       | Rheinland-Pfalz
2           | Joshua    | Schmidt  | BWG       | Baden-Württemberg
3           | Sarah     | Woods    | NRW       | Nordrhein-Westfalen

Other Types of Databases

Graph Databases (GD)

• Nodes and edges are used to store information.

• In a GD, each node can have relationships with (i.e., be connected to) any other node.

• In a GD, nodes can be used to represent different kinds and types of information.

• Application area: typically used for modeling, representing, and studying social networks.

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)

Example:

[Figure: a graph whose edges are labeled "Married to", "Has played", "Friends with", and "Parent of"; the node properties shown are Birthplace, Age, Gender, Job title, and Salary]

Document Databases (DD)

• Document databases are used to store documents, where each document

represents a single object

• Document databases support files that are in different formats

• In document databases, a variety of operations can be performed on documents, e.g., reading, classifying, ….

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Allen G. Taylor. SQL For Dummies, 9th Edition (2019)

Adam Wilbert. Relational Databases: Essential Training. (2019)

Example: Document Databases

NoSQL Databases

(Not relational)


Part 1, Section 3 → Graph- and Network-based Data Models:

Modeling and Querying Structured Relations of Objects

Part 1: Organizing the “Data Lake” (from data mining to data fishing)

• Relational Database Models: Modeling and Querying Structured Attributes

of Objects

• Graph- and Network-based Data Models: Modeling and Querying

Structured Relations of Objects

• Information Retrieval: Document Mining and Querying of ill-structured Data

• Streaming Data and High Frequency Distributed Sensor Data

• The Semantic Web: Ontologist’s Dream (or nightmare?) of how to integrate

evolving heterogeneous data lakes

Summary:

In this section, we will see:

• A short introduction.

• What is a "graph database"?

• Why do we need a "graph database"?

• How can we use a "graph database"?

If you are interested in this topic and want deeper knowledge of graph databases, please refer to references and books on graph databases, e.g.,

● Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases: New Opportunities for Connected Data. O'Reilly (2015)

● Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

● Dave Bechberger, Josh Perryman. Graph Databases in Action. Manning (2020)

NoSQL Databases

https://hostingdata.co.uk/nosql-database/

Figure: Basic structure of a relational database management system.

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Figure: Variety of sources for Big Data.

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

NoSQL: Nonrelational SQL, Not Only SQL, No to SQL?

The term NoSQL is basically used for nonrelational data management systems that meet the following conditions:

(1) The data is not stored in tables.

(2) The database query language is not SQL.

NoSQL databases support various database models.

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Basic structure of a NoSQL database management system

• Mostly, NoSQL database management systems use a massively distributed storage architecture.

• Multiple consistency models, e.g., strong consistency, weak consistency, etc.

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

The definition of NoSQL databases based on the web-based NoSQL Archive:

A Web-based storage system is a NoSQL database system if the following requirements are met:

• Model: it does not use the relational database model.

• At least three Vs: volume, variety, and velocity.

• Schema: no fixed database schema.

• Architecture: it supports horizontal scaling and massively distributed web applications.

• Replication: data replication is supported.

• Consistency assurance: consistency is ensured.

NoSQL Archive: http://nosql-database.org/, retrieved February 17, 2015

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)


Three different NoSQL databases.

Andreas Meier and Michael Kaufmann. SQL & NoSQL Databases. Springer (2019)

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Adam Fowler. NoSQL For Dummies, 9th Edition (2015)

Graph Databases

A graph is a network composed of nodes (also called vertices) and connections, the so-called edges (or arcs, if directed).

[Figure: example graph with nodes a, b, c, d, e, f, g, h, i]

• Adjacent
• Path
• Degree of a node
• Connected graph
• Disconnected graph
• Directed graph
• Cycle
• Tree
• Forest

HMM: OR, 7.1 and 7.2

• Graph databases leverage relationships in highly connected data with the objective of generating insights.

• Indeed, when we have connected data of significant size or value, a graph database is the best choice for representing and querying it.

• Large companies realized the importance of graphs and graph databases a long time ago, but in recent years graph infrastructures have become more and more common and are now used by many organizations.

• Despite this renaissance of graph data and graph thinking in information management, it is interesting and important to note that graph theory itself is not new.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

What Is a Graph?

Formally, a graph is a collection (set) of vertices (nodes) and edges that connect the vertices (nodes).

Example: A small social graph

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Example: A simple graph model for publishing messages in a social network.

Relationships: CURRENT and PREVIOUS.

Question: How can you identify Ruth's timeline?

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Why Graph Databases?

(i) Relational Databases Lack Relationships

• Initially, relational databases were designed to codify tabular structures. Even though they do this task very well, relational databases struggle when they try to model the ad hoc relationships that we encounter in the real world.

• Relationships do exist in relational databases, but only at the modeling stage, as a means of joining tables. This becomes an issue in highly connected domains.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Why Graph Databases?

(ii) NoSQL Databases Also Lack Relationships

• Most NoSQL databases store collections of disconnected objects (whether documents, values, or columns).

• In NoSQL databases, we can use aggregate identifiers to add relationships, but this can quickly become excessively expensive.

• Moreover, NoSQL databases do not support operations that point backward.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Why Graph Databases?

(iii) Graph Databases Embrace Relationships

• In the previous models that we studied, if there is any implicit connection in the data, the data models and the databases are blind to it. In the graph world, however, connected data is truly stored as connected data.

• Graph models are flexible in the sense that they allow us to add new nodes and new relationships without any need to migrate data or to compromise the existing network, i.e., the original data and its intent remain unchanged.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)


Example:

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Graph Databases

A graph database management system (or, for short, a graph database) is an online database management system that can perform Create, Read, Update, and Delete (CRUD) operations on a graph data model.

https://www.avolutionsoftware.com/abacus/the-graph-database-advantage-for-enterprise-architects/

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Properties of Graph Databases

• (a) The underlying storage:

Some graph databases use native graph storage, which is designed and optimized for storing and managing graphs. Other graph databases do not use native graph storage.

• (b) The processing engine:

In some definitions, the connected nodes of a graph physically "point" to each other in the database. Such a graph database uses so-called index-free adjacency, or native graph processing.

In a broader definition, which we also use in this course, a graph database is any database that can perform CRUD operations on graph data.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)


Figure: An overview of some of the graph databases.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)


Graph Compute Engines

A graph compute engine is a technology that is used to run graph

computational algorithms (such as identifying clusters, …) on large datasets.

Some Graph Compute Engines: Cassovary, Pegasus, and Giraph.

https://github.com/twitter/cassovary

http://www.cs.cmu.edu/~pegasus/

https://giraph.apache.org/

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Data Modeling with Graphs

• Question: how do we model data as graphs?

Models and Goals

• What is "modeling"? An abstracting process that is motivated by a specific goal, purpose, or need.

• How to model? There is no unique, natural way!

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Labels and Paths in the Graph

• In a given network, the nodes might play one or more roles, e.g., some nodes might represent users, whereas others might represent orders or products. To assign roles to a node, we can use "labels". Since a node of a given graph can take on various roles simultaneously, we might need to associate more than one label with a given node.

• Using labels, we can ask the database to perform different tasks, e.g., find all the nodes labeled "product".

• In a graph model, relationships are naturally represented by "paths". Hence, querying (or traversing) a given graph model is done by following paths.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)


The Labeled Property Graph Model

• A labeled property graph is composed of nodes, relationships, labels, and

properties.

• Nodes hold properties.

• Nodes can have one or more labels to group nodes and indicate their role(s).

• Nodes are connected by relationships, which are named, have direction and

point from a start node to an end node.

• Similar to the case of nodes, relationships can have properties too.

In addition to a labeled property graph model, we need a query language to

create, manipulate, and query data in a graph database.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)
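To make the four ingredients of the labeled property graph model concrete, here is a minimal in-memory sketch in Python. The classes Node and Relationship and the helper nodes_with_label are hypothetical names for illustration only; they are not the API of any graph database. The three people and their KNOWS relationships are sample data.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    labels: set                                      # one or more labels (roles)
    properties: dict = field(default_factory=dict)   # key-value pairs

@dataclass
class Relationship:
    name: str                                        # relationships are named
    start: Node                                      # and directed: start -> end
    end: Node
    properties: dict = field(default_factory=dict)   # relationships can hold properties too

emil = Node({"Person"}, {"name": "Emil"})
jim = Node({"Person"}, {"name": "Jim"})
ian = Node({"Person"}, {"name": "Ian"})
nodes = [emil, jim, ian]

relationships = [
    Relationship("KNOWS", jim, emil),
    Relationship("KNOWS", jim, ian),
    Relationship("KNOWS", ian, emil),
]

def nodes_with_label(nodes, label):
    """Return all nodes that carry the given label."""
    return [n for n in nodes if label in n.labels]

people = nodes_with_label(nodes, "Person")

# Follow the outgoing KNOWS relationships of jim.
known_by_jim = sorted(
    r.end.properties["name"]
    for r in relationships
    if r.start is jim and r.name == "KNOWS"
)
```

A real graph database adds persistent storage, indexing, and a query language on top of this structure.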


Which Query Language?

• Which graph database query language? → Cypher

• Why Cypher? → Standard and widely deployed, easy to learn and

understand (if you have a background in SQL).

• There are other graph database query languages, e.g., SPARQL and Gremlin.

https://neo4j.com/developer/cypher/

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Querying Graphs: An Introduction to Cypher

• Using Cypher, we can ask the database to search for data that matches a given pattern.

Identifiers: ian, jim, and emil

Example: ASCII art representation of this diagram in Cypher:

(emil)<-[:KNOWS]-(jim)-[:KNOWS]->(ian)-[:KNOWS]->(emil)

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Some Notation:

• We draw nodes with parentheses.

• We draw relationships with --> and <-- (where the signs < and > indicate the direction of the relationship). Between the dashes, inside square brackets [], we put a colon followed by the name of the relationship.

• Similarly, we put a colon as a prefix to node labels.

• We use curly braces, i.e., {}, to specify node (and relationship) properties as key-value pairs.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)


(emil:Person {name:'Emil'})

<-[:KNOWS]-(jim:Person {name:'Jim'})

-[:KNOWS]->(ian:Person {name:'Ian'})

-[:KNOWS]->(emil)

• Identifiers: ian, jim, and emil

• Property: name

• Label: Person

Example: The identifier emil is assigned to a node in the dataset, where this node has the label Person and a name property with the value 'Emil'.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Cypher is made up of clauses (keywords), for example a MATCH clause that is followed by a RETURN clause.

Example 1: Find Person nodes in the graph that have a name of 'Tom Hanks'.

MATCH (tom:Person {name: 'Tom Hanks'})

RETURN tom

Example 2: Find which movies Tom Hanks has directed.

MATCH (:Person {name: 'Tom Hanks'})-[:DIRECTED]->(movie:Movie)

RETURN movie

https://neo4j.com/developer/cypher/querying/

https://gist.github.com/DaniSancas/1d5265fc159a95ff457b940fc5046887

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Example 3: In the following Cypher query, we use these clauses to find the mutual friends of a user whose name is Jim.

MATCH (a:Person {name:'Jim'})-[:KNOWS]->(b)-[:KNOWS]->(c),

(a)-[:KNOWS]->(c)

RETURN b, c

Alternatively:

MATCH (a:Person)-[:KNOWS]->(b)-[:KNOWS]->(c), (a)-[:KNOWS]->(c)

WHERE a.name = 'Jim'

RETURN b, c

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)
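To see what the pattern in Example 3 asks for, the following sketch evaluates the same triangle pattern by hand in plain Python. The dictionary knows encodes the KNOWS relationships of the small Emil/Jim/Ian graph, and the function triangle_matches is a hypothetical helper; a graph database would perform this matching internally.

```python
# Directed KNOWS relationships: (jim)->(ian), (jim)->(emil), (ian)->(emil).
knows = {
    "Jim": {"Ian", "Emil"},
    "Ian": {"Emil"},
    "Emil": set(),
}

def triangle_matches(knows, a):
    """Return all (b, c) with a-[:KNOWS]->b-[:KNOWS]->c and a-[:KNOWS]->c."""
    matches = []
    for b in sorted(knows.get(a, ())):          # a knows b
        for c in sorted(knows.get(b, ())):      # b knows c
            if c in knows.get(a, ()):           # a also knows c directly
                matches.append((b, c))
    return matches

result = triangle_matches(knows, "Jim")
```

For this graph the only match binds b to Ian and c to Emil: Jim knows Ian, Ian knows Emil, and Jim also knows Emil directly.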

The RETURN clause

Using this clause, we specify which nodes, properties, and relationships in the matched data must be returned to the user.

Some other Cypher clauses

• WHERE: filters the results that match a pattern.

• CREATE and CREATE UNIQUE: create nodes and relationships.

• DELETE: removes nodes, properties, and relationships.

• FOREACH: performs an update action for each element of a list.

• START: specifies one or more explicit starting points (i.e., nodes or relationships) in the given graph.

Ian Robinson, Jim Webber, and Emil Eifrem. Graph Databases New Opportunities for Connected Data. O'Reilly (2015)

Appendix:

• Some definitions from graph theory (for self study)

A graph G is a network composed of nodes (also called vertices) and connections, the so-called edges (or arcs, if directed).

We may denote such a graph by G = (V, E), where V is the set of vertices (nodes) and E is the set of edges.

Suppose that the graph G has n vertices and m edges.

Let V = {v1, …, vn} be the set of vertices and E = {e1, …, em} the set of edges.

Each edge is defined by two nodes, for example: e1 = (v1, v2).

Two nodes vi and vj are adjacent if they are connected by an edge.

[Figure: example graph with vertices v1, v2, v3, v4]

(*) The degree of a vertex is the number of edges incident to it. Example: the degree of v3 is 3.

(*) A path is a sequence of edges that connects a sequence of (adjacent) vertices.

Example: v2, v1 , v3, v4
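These definitions translate directly into code. The sketch below stores an undirected graph as an adjacency dictionary in Python. The edge list is an assumption: it was inferred from the examples in this appendix (degree of v3 is 3, the path v2, v1, v3, v4, and the cycle v1, v2, v3, v1), since the original figure is not available.

```python
# Assumed edge set E for the example graph on V = {v1, v2, v3, v4}.
edges = [("v1", "v2"), ("v1", "v3"), ("v2", "v3"), ("v3", "v4")]

def build_adjacency(edges):
    """Map every vertex to the set of vertices adjacent to it (undirected graph)."""
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    return adjacency

def degree(adjacency, vertex):
    """Number of edges incident to the vertex (simple graph, no self-loops)."""
    return len(adjacency[vertex])

def is_path(adjacency, vertices):
    """True if every pair of consecutive vertices in the sequence is adjacent."""
    return all(b in adjacency[a] for a, b in zip(vertices, vertices[1:]))

adjacency = build_adjacency(edges)
degree_v3 = degree(adjacency, "v3")
path_ok = is_path(adjacency, ["v2", "v1", "v3", "v4"])
not_a_path = is_path(adjacency, ["v1", "v4"])
```

With this edge set, v3 is adjacent to v1, v2, and v4, which gives the degree 3 stated above.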


(*) A cycle is a path that starts and ends at the same vertex. The length of a cycle is the number of edges in the cycle.

Example: {v1, v2, v3, v1} defines a cycle of length 3.

Some definitions:

(*) Connected graph: there is a path between any two vertices.

(*) Disconnected graph: there are at least two vertices with no path connecting them.

(*) Tree: Any connected graph that has no cycle.

Example:

(*) Forest: a set of trees.

Example:
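Connectivity and the tree property can be checked with a breadth-first search. The following Python sketch assumes a simple undirected graph (no self-loops, no duplicate edges); the function names and the sample edge lists are chosen for illustration. It relies on the standard fact that a connected graph with n vertices is a tree exactly when it has n - 1 edges.

```python
from collections import deque

def is_connected(vertices, edges):
    """True if there is a path between any two vertices (breadth-first search)."""
    if not vertices:
        return True
    adjacency = {v: set() for v in vertices}
    for u, v in edges:
        adjacency[u].add(v)
        adjacency[v].add(u)
    start = vertices[0]
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in adjacency[current]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(vertices)

def is_tree(vertices, edges):
    """A connected graph without cycles: connected and exactly n - 1 edges."""
    return is_connected(vertices, edges) and len(edges) == len(vertices) - 1

vertices = ["v1", "v2", "v3", "v4"]
with_cycle = [("v1", "v2"), ("v1", "v3"), ("v2", "v3"), ("v3", "v4")]
tree_edges = [("v1", "v2"), ("v1", "v3"), ("v3", "v4")]
forest_edges = [("v1", "v2"), ("v3", "v4")]  # two trees, not connected

connected_with_cycle = is_connected(vertices, with_cycle)
tree_with_cycle = is_tree(vertices, with_cycle)
tree_ok = is_tree(vertices, tree_edges)
forest_connected = is_connected(vertices, forest_edges)
```

The last edge list is a forest: each of its two components is a tree, but the graph as a whole is disconnected.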


(*) Complete graph (or a Clique): All vertices are adjacent to each other.

Example:

(*) Planar graph: the graph can be drawn in the plane so that its edges do not cross.

Example:

(*) Directed Graphs:

(*) Weighted graph: a graph whose vertices or edges have been assigned weights.

[Figure: example weighted graph with vertices v1, v2, v3, v4 and weights 5, 15, 20, and 50]


Part 1, Section 4 → Information Retrieval: Document Mining

and Querying of ill-structured Data

Part 1: Organizing the “Data Lake” (from data mining to data fishing)

• Relational Database Models: Modeling and Querying Structured Attributes

of Objects

• Graph- and Network-based Data Models: Modeling and Querying

Structured Relations of Objects

• Information Retrieval: Document Mining and Querying of ill-structured Data

• Streaming Data and High Frequency Distributed Sensor Data

• The Semantic Web: Ontologist’s Dream (or nightmare?) of how to integrate

evolving heterogeneous data lakes


Summary:

In this section, we will see:

• A short introduction

• What is a “document database”?

• Why do we need a “document database”?

• How can we use a “document database”?


Information Retrieval (IR): Information retrieval might be defined as follows:

• “Information retrieval (IR) is finding material (usually documents) of an

unstructured nature (usually text) that satisfies an information need from

within large collections (usually stored on computers).”

• The term “unstructured data” means the data that does not have a

structure which is clear, semantically apparent, and easy-to-understand for