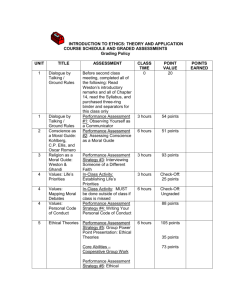

Ethics for Engineers - Martin Peterson - Oxford University Press (2019)
