ADVANCED ENGINEERING MATHEMATICS FOR ECE MODULE

Chapter 1

Complex Numbers and Complex Variables

Objectives

After completing this chapter, you will be able to:

▪ Differentiate a complex number from a complex variable.

▪ Learn the different operations and relations involving complex numbers.

▪ Learn what polar form is.

Introduction

Functions of (x, y) that depend only on the combination (x + iy) are called functions of

a complex variable and functions of this kind that can be expanded in power series in

this variable are of particular interest.

This combination (x + iy) is generally called z, and we can define such functions as $z^n$,

exp(z), sin z, and all the standard functions of z as well as of x.

They are defined in exactly the same way; the only difference is that they are complex-valued functions. That is, their values are vectors in the two-dimensional complex number space, each with a real and an imaginary part (or component).

Most of the standard functions we have previously discussed have the property that

their values are real when their arguments are real. The obvious exception is the square

root function, which becomes imaginary for negative arguments.

Since we can multiply z by itself and by any other complex number, we can form any

polynomial in z and any power series as well. We define the exponential and sine

functions of z by their power series expansions which converge everywhere in the

complex plane.

Since all the operations that produce standard functions can be applied to complex functions, we can produce all the standard functions of a complex variable by the same steps used to produce the standard functions of a real variable.


Complex Number

A complex number is a number that can be expressed in the form a + bi,

where a and b are real numbers, and i represents the imaginary unit, satisfying the

equation $i^2 = -1$. Because no real number satisfies this equation, i is called an imaginary

number. For the complex number a + bi, a is called the real part, and b is called

the imaginary part.

Complex numbers allow solutions to certain equations that have no solutions in real

numbers. For example, the equation

$$(x + 1)^2 = -9$$

has no real solution, since the square of a real number cannot be negative. Complex numbers, however, provide a solution to this problem. The idea is to extend the real numbers with an indeterminate i (sometimes called the imaginary unit) taken to satisfy the relation $i^2 = -1$, so that solutions to equations like the preceding one can be found. In this case, the solutions are −1 + 3i and −1 − 3i, as can be verified using the fact that $i^2 = -1$:

$$((-1 + 3i) + 1)^2 = (3i)^2 = 9i^2 = -9,$$

$$((-1 - 3i) + 1)^2 = (-3i)^2 = 9i^2 = -9.$$
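The verification can also be carried out numerically. The short Python sketch below assumes the equation in question is (x + 1)^2 = −9, which is an inference from the two stated solutions rather than something quoted from the text; the helper name `residual` is hypothetical.

```python
# Check that both quoted solutions satisfy (x + 1)^2 = -9.
# The equation is an assumption inferred from the stated solutions -1 + 3i and -1 - 3i.
solutions = [complex(-1, 3), complex(-1, -3)]


def residual(x: complex) -> complex:
    """Return (x + 1)^2 + 9, which is zero exactly when x solves the equation."""
    return (x + 1) ** 2 + 9


for x in solutions:
    print(x, residual(x))
```

A residual of zero for both values confirms that each one satisfies the equation.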

A complex number can be visually represented as a pair of numbers (a, b) forming a vector on a diagram called an Argand diagram, representing the complex plane. "Re" is the real axis, "Im" is the imaginary axis, and i satisfies $i^2 = -1$.

According to the fundamental theorem of algebra, every non-constant polynomial equation with real or complex coefficients in a single variable has a solution in complex numbers. In contrast, some polynomial equations with real coefficients have no solution in real numbers. The 16th-century Italian mathematician Gerolamo Cardano is credited with introducing complex numbers in his attempts to find solutions to cubic equations.


Formally, the complex number system can be defined as the algebraic extension of the

ordinary real numbers by an imaginary number i. This means that complex numbers can

be added, subtracted and multiplied as polynomials in the variable i, under the rule

that $i^2 = -1$. Furthermore, complex numbers can also be divided by nonzero complex

numbers.

Based on the concept of real numbers, a complex number is a number of the

form a + bi, where a and b are real numbers and i is an indeterminate satisfying $i^2 = -1$.

For example, 2 + 3i is a complex number.

This way, a complex number is defined as a polynomial with real coefficients in the single indeterminate i, for which the relation $i^2 + 1 = 0$ is imposed. Based on this definition, complex numbers can be added and multiplied, using the addition and multiplication for polynomials. The relation $i^2 + 1 = 0$ induces the equalities $i^{4k} = 1$, $i^{4k+1} = i$, $i^{4k+2} = -1$, and $i^{4k+3} = -i$, which hold for all integers k; these allow the reduction of any polynomial that results from the addition and multiplication of complex numbers to a linear polynomial in i, again of the form a + bi with real coefficients a, b.
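The four-step cycle of the powers of i, and the reduction of a polynomial in i to the form a + bi, can be sketched in Python as follows. The function names `power_of_i` and `reduce_poly_in_i` are hypothetical, and the sample polynomial 2 + 5i + 3i^2 is chosen only for illustration.

```python
def power_of_i(n: int) -> complex:
    """Return i**n using only the remainder of n modulo 4."""
    cycle = [1, 1j, -1, -1j]  # i^0, i^1, i^2, i^3
    return cycle[n % 4]       # Python's % is non-negative here, so negative n also works


def reduce_poly_in_i(coeffs):
    """Reduce c0 + c1*i + c2*i^2 + ... (real coefficients) to the pair (a, b) meaning a + bi."""
    total = sum(c * power_of_i(k) for k, c in enumerate(coeffs))
    return float(total.real), float(total.imag)


# 2 + 5i + 3i^2 = 2 + 5i - 3 = -1 + 5i
print(reduce_poly_in_i([2, 5, 3]))
```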

The real number a is called the real part of the complex number a + bi; the real

number b is called its imaginary part. To emphasize, the imaginary part does not

include a factor i; that is, the imaginary part is b, not bi.

Cartesian Complex Plane

A complex number z can thus be identified with

an ordered pair (Re(z), Im(z)) of real numbers, which in

turn may be interpreted as coordinates of a point in a

two-dimensional space. The most immediate space is the Euclidean plane with suitable coordinates, which is then called the complex plane or Argand diagram, named after Jean-Robert Argand. Another prominent space onto which the coordinates may be projected is the two-dimensional surface of a sphere, which is then called the Riemann sphere.

The definition of the complex numbers involving two arbitrary real values

immediately suggests the use of Cartesian coordinates in the complex plane. The

horizontal (real) axis is generally used to display the real part, with increasing values to

the right, and the imaginary part marks the vertical (imaginary) axis, with increasing

values upwards.


A charted number may be viewed either as the coordinatized point or as a position vector from the origin to this point. The coordinate values of a complex number z can hence be expressed in its Cartesian, rectangular, or algebraic form.

Notably, the operations of addition and multiplication take on a very natural geometric

character, when complex numbers are viewed as position vectors: addition corresponds

to vector addition, while multiplication corresponds to multiplying their magnitudes and

adding the angles they make with the real axis. Viewed in this way, the multiplication of a complex number by i corresponds to rotating the position vector counterclockwise by a quarter turn (90°) about the origin, a fact which can be expressed algebraically as follows:

$$i(a + bi) = ai + bi^2 = -b + ai.$$
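As a quick numerical check of the quarter-turn rule, the sketch below multiplies a sample number by i using Python's built-in complex type. The sample value 3 + 4i is an arbitrary choice for illustration.

```python
# Multiplying by i rotates the position vector a quarter turn counterclockwise.
z = complex(3, 4)                     # sample point (a, b) = (3, 4), chosen for illustration
rotated = z * 1j                      # i * (a + bi)
expected = complex(-z.imag, z.real)   # the point (-b, a)

print(rotated, expected)
print(abs(rotated) == abs(z))         # a rotation leaves the magnitude unchanged
```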

Polar Complex Plane

The polar coordinate system is a two-dimensional coordinate system in which

each point on a plane is determined by a distance from a

reference point and an angle from a reference direction.

The reference point (analogous to the origin of

a Cartesian coordinate system) is called the pole, and

the ray from the pole in the reference direction is the polar

axis. The distance from the pole is called the radial coordinate, radial distance or

simply radius, and the angle is called the angular coordinate, polar angle, or azimuth.

The radial coordinate is often denoted by r or ρ, and the angular coordinate by φ, θ, or t.

Angles in polar notation are generally expressed in either degrees or radians (2π rad

being equal to 360°).

Converting Between Polar And Cartesian Coordinates

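The polar coordinates r and φ can be converted to the Cartesian coordinates x and y by using the trigonometric functions sine and cosine:

$$x = r\cos\varphi, \qquad y = r\sin\varphi.$$

The Cartesian coordinates x and y can be converted to polar coordinates r and φ with r ≥ 0 and φ in the interval (−π, π] by:

$$r = \sqrt{x^2 + y^2}$$

(as in the Pythagorean theorem or the Euclidean norm), and

$$\varphi = \operatorname{atan2}(y, x),$$

where atan2 is a common variation on the arctangent function defined as

$$\operatorname{atan2}(y, x) = \begin{cases} \arctan(y/x) & \text{if } x > 0, \\ \arctan(y/x) + \pi & \text{if } x < 0 \text{ and } y \ge 0, \\ \arctan(y/x) - \pi & \text{if } x < 0 \text{ and } y < 0, \\ \pi/2 & \text{if } x = 0 \text{ and } y > 0, \\ -\pi/2 & \text{if } x = 0 \text{ and } y < 0, \\ \text{undefined} & \text{if } x = 0 \text{ and } y = 0. \end{cases}$$

If r is calculated first as above, then this formula for φ may be stated a little more simply using the standard arccosine function:

$$\varphi = \begin{cases} \arccos(x/r) & \text{if } y \ge 0 \text{ and } r \ne 0, \\ -\arccos(x/r) & \text{if } y < 0, \\ \text{undefined} & \text{if } r = 0. \end{cases}$$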

The value of φ above is the principal value of the complex number

function arg applied to x + iy. An angle in the range [0, 2π) may be obtained by adding

2π to the value in case it is negative (in other words when y is negative).
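The conversions described in this section can be sketched with Python's standard `math` module, whose `atan2` returns the principal value of the angle. The function names `to_cartesian`, `to_polar`, and `to_positive_angle` are hypothetical helpers introduced for illustration.

```python
import math


def to_cartesian(r, phi):
    """Polar (r, phi) to Cartesian (x, y)."""
    return r * math.cos(phi), r * math.sin(phi)


def to_polar(x, y):
    """Cartesian (x, y) to polar (r, phi), with phi the principal value from atan2."""
    r = math.hypot(x, y)      # sqrt(x^2 + y^2)
    phi = math.atan2(y, x)    # note the argument order: y first, then x
    return r, phi


def to_positive_angle(phi):
    """Shift a negative principal angle into the range [0, 2*pi) by adding 2*pi."""
    return phi + 2 * math.pi if phi < 0 else phi


r, phi = to_polar(-1.0, -1.0)          # a point in the third quadrant
print(r, phi, to_positive_angle(phi))  # phi is negative because y is negative
print(to_cartesian(r, phi))            # recovers approximately (-1, -1)
```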

Modulus And Argument

An alternative option for coordinates in the complex

plane is the polar coordinate system that uses the distance of

the point z from the origin (O), and the angle subtended

between the positive real axis and the line segment Oz in a

counterclockwise sense. This leads to the polar form of

complex numbers.

The absolute value (or modulus or magnitude) of a complex number z = x + yi is

$$r = |z| = \sqrt{x^2 + y^2}.$$

If z is a real number (that is, if y = 0), then r = |x|. That is, the absolute value of a real number equals its absolute value as a complex number.

By Pythagoras' theorem, the absolute value of a complex number is the distance

to the origin of the point representing the complex number in the complex plane.

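The argument of z (in many applications referred to as the "phase" φ) is the angle of the radius Oz with the positive real axis, and is written as arg(z). As with the modulus, the argument can be found from the rectangular form x + yi by applying the inverse tangent to the quotient of imaginary-by-real parts. By using a half-angle identity, a single branch of the arctan suffices to cover the range of the arg function, (−π, π], and avoids a more subtle case-by-case analysis:

$$\varphi = \arg(x + yi) = \begin{cases} 2\arctan\left(\dfrac{y}{\sqrt{x^2 + y^2} + x}\right) & \text{if } x > 0 \text{ or } y \ne 0, \\ \pi & \text{if } x < 0 \text{ and } y = 0, \\ \text{undefined} & \text{if } x = 0 \text{ and } y = 0. \end{cases}$$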

Normally, as given above, the principal value in the interval (−π, π] is chosen. Values in the range [0, 2π) are obtained by adding 2π if the value is negative. The value of φ is expressed in radians in this chapter. It can increase by any integer multiple of 2π and still give the same angle, viewed as subtended by the rays of the positive real axis and from the origin through z. Hence, the arg function is sometimes considered as multivalued. The polar angle for the complex number 0 is indeterminate, but an arbitrary choice of the polar angle 0 is common.

The value of φ equals the result of atan2:

$$\varphi = \operatorname{atan2}(\operatorname{Im}(z), \operatorname{Re}(z)).$$

Together, r and φ give another way of representing complex numbers, the polar form, as the combination of modulus and argument fully specifies the position of a point on the plane. Recovering the original rectangular coordinates from the polar form is done by the formula called the trigonometric form:

$$z = r(\cos\varphi + i\sin\varphi).$$

Using Euler's formula this can be written as

$$z = re^{i\varphi}.$$

Using the cis function, this is sometimes abbreviated to

$$z = r\operatorname{cis}\varphi.$$

In angle notation, often used in electronics to represent a phasor with amplitude r and phase φ, it is written as

$$z = r\angle\varphi.$$
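The equivalence of the trigonometric form and the Euler (exponential) form can be checked with Python's standard `cmath` module. This is a minimal sketch; the sample value 1 + i is an arbitrary choice.

```python
import cmath
import math

z = complex(1, 1)              # sample value chosen for illustration
r, phi = cmath.polar(z)        # modulus and principal argument

trig_form = r * complex(math.cos(phi), math.sin(phi))   # r (cos phi + i sin phi)
euler_form = r * cmath.exp(1j * phi)                    # r e^{i phi}
rect_form = cmath.rect(r, phi)                          # library conversion back to x + yi

print(r, phi)
print(trig_form, euler_form, rect_form)   # all three agree with z up to rounding
```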

Equality

Two complex numbers are equal if and only if both their real and imaginary parts are

equal. That is, complex numbers z1 and z2 are equal if and only if Re(z1) =

Re(z2) and Im(z1) = Im(z2). Nonzero complex numbers written in polar form are equal if

and only if they have the same magnitude and their arguments differ by an integer

multiple of 2π.

Ordering

Since complex numbers are naturally thought of as existing on a two-dimensional plane,

there is no natural linear ordering on the set of complex numbers. In fact, there is

no linear ordering on the complex numbers that is compatible with addition and

multiplication – the complex numbers cannot have the structure of an ordered field. This

is because any square in an ordered field is at least 0, but $i^2 = -1$.

Conjugate

The complex conjugate of the complex number z = x + yi is given by x − yi. It is denoted by either $\bar{z}$ or z*. This unary operation on complex numbers cannot be expressed by applying only their basic operations addition, subtraction, multiplication and division.

Geometrically, $\bar{z}$ is the "reflection" of z about the real axis. Conjugating twice gives the original complex number:

$$\bar{\bar{z}} = z.$$

The product of a complex number z = x + yi and its conjugate is known as the absolute square. It is always a non-negative real number and equals the square of the magnitude of each:

$$z \cdot \bar{z} = x^2 + y^2 = |z|^2 = |\bar{z}|^2.$$

This property can be used to convert a fraction with a complex denominator to an

equivalent fraction with a real denominator by expanding both numerator and

denominator of the fraction by the conjugate of the given denominator. This process is

sometimes called "rationalization" of the denominator (although the denominator in the

final expression might be an irrational real number), because it resembles the method to

remove roots from simple expressions in a denominator.

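The real and imaginary parts of a complex number z can be extracted using the conjugation:

$$\operatorname{Re}(z) = \frac{z + \bar{z}}{2}, \qquad \operatorname{Im}(z) = \frac{z - \bar{z}}{2i}.$$

Moreover, a complex number is real if and only if it equals its own conjugate.

Conjugation distributes over the basic complex arithmetic operations:

$$\overline{z \pm w} = \bar{z} \pm \bar{w}, \qquad \overline{z \cdot w} = \bar{z} \cdot \bar{w}, \qquad \overline{z / w} = \bar{z} / \bar{w}.$$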
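These conjugation properties are easy to check numerically with Python's built-in complex type. The sample values z = 3 + 4i and w = 1 − 2i are arbitrary choices for illustration.

```python
z = complex(3, 4)    # sample values chosen for illustration
w = complex(1, -2)
zc = z.conjugate()

abs_square = z * zc                  # x^2 + y^2 with zero imaginary part
real_part = (z + zc) / 2             # Re(z), returned as a complex number
imag_part = (z - zc) / (2j)          # Im(z), returned as a complex number

print(abs_square, abs(z) ** 2)
print(real_part, imag_part)
print((z * w).conjugate() == zc * w.conjugate())   # conjugation distributes over products
print((z + w).conjugate() == zc + w.conjugate())   # and over sums
```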

Conjugation is also employed in inversive geometry, a branch of geometry studying

reflections more general than ones about a line. In the network analysis of electrical

circuits, the complex conjugate is used in finding the equivalent impedance when the maximum power transfer theorem is applied.

Addition And Subtraction

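Two complex numbers a = x + yi and b = u + vi are most easily added by separately adding their real and imaginary parts. That is to say:

$$a + b = (x + yi) + (u + vi) = (x + u) + (y + v)i.$$

Similarly, subtraction can be performed as

$$a - b = (x + yi) - (u + vi) = (x - u) + (y - v)i.$$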

Multiplication

Since the real part, the imaginary part, and the indeterminate i in a complex number are all considered as numbers in themselves, two complex numbers, given as z = x + yi and w = u + vi, are multiplied under the rules of the distributive property, the commutative properties and the defining property $i^2 = -1$ in the following way:

$$z \cdot w = (x + yi)(u + vi) = xu + xvi + yui + yvi^2 = (xu - yv) + (xv + yu)i.$$

Reciprocal And Division
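The product rule for z = x + yi and w = u + vi, and the conjugate-based "rationalization" of a complex denominator described under Conjugate, can be sketched in Python as follows. The function names `multiply` and `divide` are hypothetical; Python's built-in `*` and `/` on complex values already do the same work, so this sketch only makes the intermediate steps visible.

```python
def multiply(z: complex, w: complex) -> complex:
    """(x + yi)(u + vi) = (xu - yv) + (xv + yu)i, expanded with i^2 = -1."""
    x, y, u, v = z.real, z.imag, w.real, w.imag
    return complex(x * u - y * v, x * v + y * u)


def divide(z: complex, w: complex) -> complex:
    """Compute z / w by multiplying numerator and denominator by the conjugate of w."""
    if w == 0:
        raise ZeroDivisionError("division by the complex number 0")
    denominator = w.real ** 2 + w.imag ** 2      # w * conj(w), a positive real number
    numerator = multiply(z, w.conjugate())
    return complex(numerator.real / denominator, numerator.imag / denominator)


print(multiply(complex(2, 1), complex(3, 1)))   # (2 + i)(3 + i)
print(divide(complex(5, 5), complex(3, 1)))     # (5 + 5i) / (3 + i)
```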

Multiplication And Division In Polar Form

Formulas for multiplication, division and exponentiation are simpler in polar form than the corresponding formulas in Cartesian coordinates. Given two complex numbers $z_1 = r_1(\cos\varphi_1 + i\sin\varphi_1)$ and $z_2 = r_2(\cos\varphi_2 + i\sin\varphi_2)$, because of the trigonometric identities

$$\cos a\cos b - \sin a\sin b = \cos(a + b),$$

$$\cos a\sin b + \sin a\cos b = \sin(a + b),$$

we may derive

$$z_1 z_2 = r_1 r_2\left(\cos(\varphi_1 + \varphi_2) + i\sin(\varphi_1 + \varphi_2)\right).$$

In other words, the absolute values are multiplied and the arguments are added to yield the polar form of the product. For example, multiplying by i corresponds to a quarter-turn counterclockwise, which gives back $i^2 = -1$. The picture at the right illustrates the multiplication of

$$(2 + i)(3 + i) = 5 + 5i.$$

Since the real and imaginary parts of 5 + 5i are equal, the argument of that number is 45 degrees, or π/4 (in radians). On the other hand, it is also the sum of the angles at the origin of the red and blue triangles, which are arctan(1/3) and arctan(1/2), respectively. Thus, the formula

$$\frac{\pi}{4} = \arctan\frac{1}{2} + \arctan\frac{1}{3}$$

holds. As the arctan function can be approximated highly efficiently, formulas like this, known as Machin-like formulas, are used for high-precision approximations of π.

Similarly, division is given by

$$\frac{z_1}{z_2} = \frac{r_1}{r_2}\left(\cos(\varphi_1 - \varphi_2) + i\sin(\varphi_1 - \varphi_2)\right).$$
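The polar rules for products and quotients, and the Machin-like identity for π/4, can be checked numerically. The sketch below uses Python's standard `cmath` and `math` modules with the numbers 2 + i and 3 + i from the example in this section.

```python
import cmath
import math

z1, z2 = complex(2, 1), complex(3, 1)
r1, phi1 = cmath.polar(z1)
r2, phi2 = cmath.polar(z2)

# Product: multiply the moduli, add the arguments.
product = cmath.rect(r1 * r2, phi1 + phi2)
# Quotient: divide the moduli, subtract the arguments.
quotient = cmath.rect(r1 / r2, phi1 - phi2)

# The argument of 5 + 5i is the sum of arctan(1/2) and arctan(1/3).
angle_sum = math.atan(1 / 2) + math.atan(1 / 3)

print(product, z1 * z2)        # both approximately 5 + 5i
print(quotient, z1 / z2)
print(angle_sum, math.pi / 4)
```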

Video Links

Introduction to complex numbers

• https://www.youtube.com/watch?v=SP-YJe7Vldo

Complex Variables and Functions

• https://www.youtube.com/watch?v=iUhwCfz18os&list=PLdgVBOaXkb9CNMqbsL9GTWwU542DiRrPB



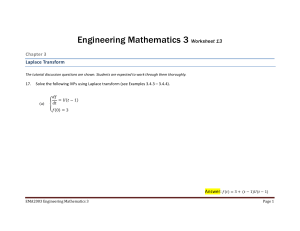

Chapter 2

Laplace and Inverse Laplace Transform

Objectives

After completing this chapter, you will be able to:

▪ Identify and understand the Laplace and inverse Laplace transforms.

▪ List and apply the properties and theorems of the Laplace transform.

▪ Recover the original function from its Laplace transform using the inverse Laplace transform.

Introduction

In mathematics, the Laplace transform, named after its inventor Pierre-Simon

Laplace (/ləˈplɑːs/), is an integral transform that converts a function of a real

variable t (often time) to a function of a complex variable s (complex frequency). The

transform has many applications in science and engineering because it is a tool for

solving differential equations. In particular, it transforms differential equations into

algebraic equations and convolution into multiplication.

The Laplace transform is named after mathematician and astronomer Pierre-Simon

Laplace, who used a similar transform in his work on probability theory. Laplace wrote

extensively about the use of generating functions in Essai philosophique sur les

probabilités (1814), and the integral form of the Laplace transform evolved naturally as a

result.

Laplace's use of generating functions was similar to what is now known as the z-transform, and he gave little attention to the continuous variable case which was

discussed by Niels Henrik Abel. The theory was further developed in the 19th and early

20th centuries by Mathias Lerch, Oliver Heaviside, and Thomas Bromwich.

The current widespread use of the transform (mainly in engineering) came about during

and soon after World War II, replacing the earlier Heaviside operational calculus. The

advantages of the Laplace transform had been emphasized by Gustav Doetsch to whom

the name Laplace Transform is apparently due.

Laplace Transform

The Laplace transform of a function f(t), defined for all real numbers t ≥ 0, is the function F(s), which is a unilateral transform defined by

$$F(s) = \int_0^\infty f(t)e^{-st}\,dt,$$

where s is a complex frequency parameter

$$s = \sigma + i\omega, \quad \text{with real numbers } \sigma \text{ and } \omega.$$

An alternate notation for the Laplace transform is $\mathcal{L}\{f\}$ instead of F.
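To make the defining integral concrete, the sketch below approximates it numerically with Simpson's rule on a truncated interval. The function name `laplace_numeric`, the truncation point `t_max`, and the step count `n` are assumptions chosen for illustration; the truncation is only reasonable when the integrand has decayed to a negligible size by `t_max`. The closed forms used for comparison, 1/(s + 2) for e^(−2t) and 1/s for the unit step, are standard results.

```python
import cmath
import math


def laplace_numeric(f, s, t_max=40.0, n=40000):
    """Approximate the integral of f(t) * exp(-s*t) over [0, t_max] by Simpson's rule.

    n must be even; s may be real or complex with a positive real part.
    """
    h = t_max / n

    def integrand(t):
        return f(t) * cmath.exp(-s * t)

    total = integrand(0.0) + integrand(t_max)
    for k in range(1, n):
        weight = 4 if k % 2 else 2      # Simpson weights 4, 2, 4, ..., 4
        total += weight * integrand(k * h)
    return total * h / 3


# f(t) = e^{-2t} has F(s) = 1/(s + 2); at s = 3 this is 0.2.
approx_exp = laplace_numeric(lambda t: math.exp(-2 * t), 3.0)
# The unit step f(t) = 1 has F(s) = 1/s; checked here at the complex point s = 1 + 2i.
s = complex(1, 2)
approx_step = laplace_numeric(lambda t: 1.0, s)

print(approx_exp, 1 / 5)
print(approx_step, 1 / s)
```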

The meaning of the integral depends on types of functions of interest. A necessary

condition for existence of the integral is that f must be locally integrable on [0, ∞). For

locally integrable functions that decay at infinity or are of exponential type, the integral

can be understood to be a (proper) Lebesgue integral. However, for many applications it

is necessary to regard it as a conditionally convergent improper integral at ∞. Still more

generally, the integral can be understood in a weak sense, and this is dealt with below.

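One can define the Laplace transform of a finite Borel measure μ by the Lebesgue integral

$$\mathcal{L}\{\mu\}(s) = \int_{[0,\infty)} e^{-st}\,d\mu(t).$$

An important special case is where μ is a probability measure, for example, the Dirac delta function. In operational calculus, the Laplace transform of a measure is often treated as though the measure came from a probability density function f. In that case, to avoid potential confusion, one often writes

$$\mathcal{L}\{f\}(s) = \int_{0^-}^\infty f(t)e^{-st}\,dt,$$

where the lower limit of $0^-$ is shorthand notation for

$$\lim_{\varepsilon \to 0^+} \int_{-\varepsilon}^\infty.$$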

This limit emphasizes that any point mass located at 0 is entirely captured by the Laplace

transform. Although with the Lebesgue integral, it is not necessary to take such a limit, it

does appear more naturally in connection with the Laplace–Stieltjes transform.


Bilateral Laplace transform

When one says "the Laplace transform" without qualification, the unilateral or one-sided

transform is usually intended. The Laplace transform can be alternatively defined as

the bilateral Laplace transform, or two-sided Laplace transform, by extending the limits of

integration to be the entire real axis. If that is done, the common unilateral transform

simply becomes a special case of the bilateral transform, where the definition of the

function being transformed is multiplied by the Heaviside step function.

The bilateral Laplace transform F(s) is defined as follows:

$$F(s) = \int_{-\infty}^{\infty} e^{-st} f(t)\,dt.$$

Probability theory

In pure and applied probability, the Laplace transform is defined as an expected

value. If X is a random variable with probability density function f, then the Laplace

transform of f is given by the expectation

$$\mathcal{L}\{f\}(s) = \operatorname{E}\left[e^{-sX}\right].$$

By convention, this is referred to as the Laplace transform of the random variable X itself. Here, replacing s by −t gives the moment generating function of X. The Laplace transform has applications throughout probability theory, including first passage times of stochastic processes such as Markov chains, and renewal theory.

Of particular use is the ability to recover the cumulative distribution function of a continuous random variable X, by means of the Laplace transform as follows:

$$F_X(x) = \mathcal{L}^{-1}\left\{\frac{1}{s}\operatorname{E}\left[e^{-sX}\right]\right\}(x) = \mathcal{L}^{-1}\left\{\frac{1}{s}\mathcal{L}\{f\}(s)\right\}(x).$$

Properties And Theorems

The Laplace transform has a number of properties that make it useful for analyzing linear dynamical systems. The most significant advantage is that differentiation becomes multiplication, and integration becomes division, by s (reminiscent of the way logarithms change multiplication to addition of logarithms).

Because of this property, the Laplace variable s is also known as the operator variable in the L domain: either the derivative operator or (for $s^{-1}$) the integration operator. The transform turns integral equations and differential equations into polynomial equations, which are much easier to solve. Once solved, use of the inverse Laplace transform reverts to the original domain.

Given the functions f(t) and g(t), and their respective Laplace transforms F(s) and G(s),

Computation Of The Laplace Transform Of A Function's Derivative

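It is often convenient to use the differentiation property of the Laplace transform to find the transform of a function's derivative. This can be derived from the basic expression for a Laplace transform as follows:

$$\begin{aligned} \mathcal{L}\{f(t)\} &= \int_{0^-}^\infty e^{-st} f(t)\,dt \\ &= \left[\frac{f(t)e^{-st}}{-s}\right]_{0^-}^\infty - \int_{0^-}^\infty \frac{e^{-st}}{-s} f'(t)\,dt \quad \text{(by parts)} \\ &= \frac{f(0^-)}{s} + \frac{1}{s}\mathcal{L}\{f'(t)\}, \end{aligned}$$

yielding

$$\mathcal{L}\{f'(t)\} = s\,\mathcal{L}\{f(t)\} - f(0^-).$$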
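The differentiation property, in its standard form L{f'}(s) = s F(s) − f(0) for a function continuous at 0, can be checked numerically. The sketch below is an illustration under stated assumptions: the helper `laplace_numeric` is a hypothetical Simpson's-rule integrator on a truncated interval, and f(t) = cos 2t is an arbitrary sample function, for which F(s) = s/(s^2 + 4).

```python
import math


def laplace_numeric(f, s, t_max=40.0, n=40000):
    """Simpson's-rule approximation of the integral of f(t) * exp(-s*t) on [0, t_max].

    Assumes real s > 0 and even n, so that the truncated tail is negligible.
    """
    h = t_max / n
    total = f(0.0) + f(t_max) * math.exp(-s * t_max)
    for k in range(1, n):
        t = k * h
        total += (4 if k % 2 else 2) * f(t) * math.exp(-s * t)
    return total * h / 3


def f(t):
    return math.cos(2 * t)          # sample function with f(0) = 1


def df(t):
    return -2 * math.sin(2 * t)     # its derivative


s = 1.0
lhs = laplace_numeric(df, s)                 # transform of the derivative
rhs = s * laplace_numeric(f, s) - f(0.0)     # s F(s) - f(0)

print(lhs, rhs)   # both are close to -4 / (s^2 + 4) = -0.8
```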


Table Of Selected Laplace Transforms

The following table provides Laplace transforms for many common functions of a single

variable. For definitions and explanations, see the Explanatory Notes at the end of the

table.

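Because the Laplace transform is a linear operator,

$$\mathcal{L}\{f(t) + g(t)\} = \mathcal{L}\{f(t)\} + \mathcal{L}\{g(t)\}, \qquad \mathcal{L}\{a f(t)\} = a\,\mathcal{L}\{f(t)\} \text{ for any constant } a.$$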

Using this linearity, and various trigonometric, hyperbolic, and complex number (etc.)

properties and/or identities, some Laplace transforms can be obtained from others more

quickly than by using the definition directly.

The unilateral Laplace transform takes as input a function whose time domain is the non-negative reals, which is why all of the time domain functions in the table below are

multiples of the Heaviside step function, u(t).

The entries of the table that involve a time delay τ are required to be causal (meaning

that τ > 0). A causal system is a system where the impulse response h(t) is zero for all

time t prior to t = 0. In general, the region of convergence for causal systems is not the

same as that of anticausal systems.


Inverse Laplace Transform

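In mathematics, the inverse Laplace transform of a function F(s) is the piecewise-continuous and exponentially-restricted real function f(t) which has the property:

$$\mathcal{L}\{f\}(s) = \mathcal{L}\{f(t)\}(s) = F(s),$$

where $\mathcal{L}$ denotes the Laplace transform.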

It can be proven that, if a function F(s) has the inverse Laplace transform f(t), then f(t) is

uniquely determined (considering functions which differ from each other only on a point

set having Lebesgue measure zero as the same). This result was first proven

by Mathias Lerch in 1903 and is known as Lerch's theorem.

The Laplace transform and the inverse Laplace transform together have a number of

properties that make them useful for analyzing linear dynamical systems.

Mellin's Inverse Formula

An integral formula for the inverse Laplace transform, called Mellin's inverse formula, the Bromwich integral, or the Fourier–Mellin integral, is given by the line integral:

$$f(t) = \mathcal{L}^{-1}\{F(s)\}(t) = \frac{1}{2\pi i}\lim_{T \to \infty}\int_{\gamma - iT}^{\gamma + iT} e^{st}F(s)\,ds,$$

where the integration is done along the vertical line Re(s) = γ in the complex plane such that γ is greater than the real part of all singularities of F(s) and F(s) is bounded on the line, for example if the contour path is in the region of convergence. If all singularities are in the left half-plane, or F(s) is an entire function, then γ can be set to zero and the above inverse integral formula becomes identical to the inverse Fourier transform.

In practice, computing the complex integral can be done by using the Cauchy residue

theorem.

Post's Inversion Formula

Post's inversion formula for Laplace transforms, named after Emil Post, is a simple-looking but usually impractical formula for evaluating an inverse Laplace transform.

The statement of the formula is as follows: Let f(t) be a continuous function on the interval [0, ∞) of exponential order, i.e.

$$\sup_{t > 0} \frac{f(t)}{e^{bt}} < \infty$$

for some real number b. Then for all s > b, the Laplace transform for f(t) exists and is infinitely differentiable with respect to s. Furthermore, if F(s) is the Laplace transform of f(t), then the inverse Laplace transform of F(s) is given by

$$f(t) = \mathcal{L}^{-1}\{F\}(t) = \lim_{k \to \infty}\frac{(-1)^k}{k!}\left(\frac{k}{t}\right)^{k+1}F^{(k)}\left(\frac{k}{t}\right)$$

for t > 0, where $F^{(k)}$ is the k-th derivative of F with respect to s.

As can be seen from the formula, the need to evaluate derivatives of arbitrarily high

orders renders this formula impractical for most purposes.

With the advent of powerful personal computers, the main efforts to use this formula have come from dealing with approximations or asymptotic analysis of the inverse Laplace transform, using the Grünwald–Letnikov differintegral to evaluate the derivatives.

Post's inversion has attracted interest due to the improvement in computational science and the fact that it is not necessary to know where the poles of F(s) lie, which makes it possible to calculate the asymptotic behavior for large x using inverse Mellin transforms for several arithmetical functions related to the Riemann hypothesis.

Video Links

Laplace transform

• https://www.youtube.com/watch?v=OiNh2DswFt4

Inverse Laplace Transform Example

• https://www.youtube.com/watch?v=c6YnYr8KsSo




Chapter 3

Power Series

Objectives

After completing this chapter, you will be able to:

• Illustrate the interval of convergence for a power series.

• Differentiate and integrate a power series to obtain other power series.

• Find Maclaurin series for a function.

• Find Taylor series for a function.

Introduction

In this module you will learn to represent power series algebraically

and graphically. The graphical representation of power series can be used to

illustrate the amazing concept that certain power series converge to well-known

functions on certain intervals. In the first lesson you will start with a power series

and determine the function represented by the series. In the last two lessons

you will begin with a function and find its power series representation.

Power Series

In this lesson you will study several power series and discover that on the

intervals where they converge, they are equal to certain well known functions.

Defining Power Series

A power series is a series in which each term is a constant times a power

of x or a power of (x - a) where a is a constant.

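Suppose each $c_k$ represents some constant. Then the infinite series

$$\sum_{k=0}^{\infty} c_k x^k = c_0 + c_1 x + c_2 x^2 + c_3 x^3 + \cdots$$

is a power series centered at x = 0 and the infinite series

$$\sum_{k=0}^{\infty} c_k (x - a)^k = c_0 + c_1 (x - a) + c_2 (x - a)^2 + c_3 (x - a)^3 + \cdots$$

is a power series centered at x = a.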

Finding Partial Sums Of A Power Series

Consider the power series

$$\sum_{k=0}^{\infty} x^k = 1 + x + x^2 + x^3 + \cdots$$

Although you cannot enter infinitely many terms of this series in the Y=

Editor, you can graph partial sums of the series because each partial sum is a

polynomial with a finite number of terms.

Defining An Infinite Geometric Series

Recall that an infinite geometric series can be written as $a + ar + ar^2 + ar^3 + \cdots + ar^k + \cdots$, where a represents the first term and r represents the common ratio of the series. If | r | < 1, the infinite geometric series converges to a/(1 − r).

The power series $1 + x + x^2 + x^3 + \cdots$ is a geometric series with first term 1 and common ratio x. This means that the power series converges when | x | < 1 and converges to 1/(1 − x) on the interval (−1, 1).
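The convergence of the partial sums to 1/(1 − x) inside (−1, 1), and their failure to converge outside it, can be seen numerically. This is a minimal sketch; the function name `partial_sum` and the sample points 0.5 and 2 are chosen for illustration.

```python
def partial_sum(x: float, n: int) -> float:
    """Return the nth partial sum 1 + x + x^2 + ... + x^n of the geometric power series."""
    return sum(x ** k for k in range(n + 1))


x = 0.5                                    # a point inside the interval (-1, 1)
for n in (2, 4, 8, 16, 32):
    print(n, partial_sum(x, n), 1 / (1 - x))   # the partial sums approach 2

# Outside the interval the partial sums grow without bound instead of approaching 1/(1 - x).
print(partial_sum(2.0, 10), 1 / (1 - 2.0))
```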

Visualizing Convergence

The graphs of several partial sums can illustrate the interval of

convergence for an infinite series.

• Graph the second-, third-, and fourth-degree polynomials that represent partial sums of $1 + x + x^2 + x^3 + \cdots$.

• Graph y = 1/(1 − x).

• Change the graphing style for y = 1/(1 − x) to "thick" to distinguish it from the partial sums.

On the interval (-1,1) the partial sums are close to y=1/(1-x). The interval

(-1,1) is called the interval of convergence for this power series because as the

number of terms in the partial sums increases, the partial sums converge to

y=1/(1-x) on that interval.

Maclaurin Series

In the previous lesson you explored several power series and their

relationships to the functions to which they converge. In this lesson you will start

with a function and find the power series that best converges to that function for

values of x near zero.

Suppose f is some function. A second-degree polynomial $p(x) = ax^2 + bx + c$ that satisfies p(0) = f(0), p'(0) = f'(0), and p''(0) = f''(0) gives a good approximation of f near x = 0. The procedure below illustrates the method by finding a quadratic polynomial that satisfies these conditions for the function $f(x) = e^x$.

Let $f(x) = e^x$ and $p(x) = ax^2 + bx + c$.

Use the fact that f and p are equal at x = 0 to find the value of c.

$f(x) = e^x$

$f(0) = e^0 = 1$

$p(x) = ax^2 + bx + c$

$p(0) = a(0)^2 + b(0) + c = c$

If c = 1, the function and the polynomial have the same value at x = 0, so $p(x) = ax^2 + bx + 1$.

Using a similar procedure, set the first derivatives equal when x = 0 and solve for b.

$f'(x) = e^x$

$f'(0) = e^0 = 1$

$p'(x) = 2ax + b$

$p'(0) = 2a(0) + b = b$

If b = 1, the function and the polynomial will have the same slope at x = 0, so $p(x) = ax^2 + x + 1$.

Set the second derivatives equal when x = 0 and solve for a.

$f''(x) = e^x$

$f''(0) = e^0 = 1$

$p''(x) = 2a$

$p''(0) = 2a$

If a = 1/2, the function and the polynomial will have the same concavity at x = 0. So $p(x) = \frac{1}{2}x^2 + x + 1$ approximates $y = e^x$ near x = 0.
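How good the quadratic approximation is, and how quickly it degrades away from 0, can be checked numerically. The sketch below compares p(x) = (1/2)x^2 + x + 1 with Python's `math.exp`; the sample points are arbitrary choices for illustration.

```python
import math


def p(x: float) -> float:
    """Second-degree Maclaurin polynomial of e^x: (1/2)x^2 + x + 1."""
    return 0.5 * x * x + x + 1


# Absolute error of the approximation at a few sample points.
errors = {x: abs(math.exp(x) - p(x)) for x in (0.0, 0.1, 0.5, 2.0)}
for x, err in errors.items():
    print(x, p(x), math.exp(x), err)   # the error grows as x moves away from 0
```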

Graphs Of The Function And The Approximating Quadratic Polynomial

• Graph the functions Y1 = e^X and Y2 = (1/2)X^2 + X + 1 in a [-5, 5, 1] x [-2, 10, 1] window.

The parabola has the same value, the same slope, and the same concavity as $y = e^x$ when x = 0, and the quadratic polynomial is a good approximation for $y = e^x$ when x is near 0.

Taylor Series

In the previous lesson, you found Maclaurin series that approximate

functions near x = 0. This lesson investigates how to find a series that

approximates a function near x = a, where a is any real number.

Given a function f that has all its higher order derivatives, the series

$$\sum_{k=0}^{\infty} c_k (x - a)^k, \quad \text{where } c_k = \frac{f^{(k)}(a)}{k!},$$

is called the Taylor series for f centered at a. The Taylor series is a power series that approximates the function f near x = a.

The partial sum $P_n(x) = \sum_{k=0}^{n} c_k (x - a)^k$ is called the nth-order Taylor polynomial for f centered at a.

Every Maclaurin series is a Taylor series centered at zero.

The Taylor Polynomial Of e^x Centered At 1

The second-order Taylor polynomial centered at 1 for the function $f(x) = e^x$ can be found by using a procedure similar to the procedure given.

The coefficient of the term $(x - 1)^k$ in the Taylor polynomial is given by

$$c_k = \frac{f^{(k)}(1)}{k!}.$$

This formula is very similar to the formula for finding the coefficient of $x^k$ in a Maclaurin polynomial where the derivative is evaluated at 0. In this Taylor polynomial, the derivative is evaluated at 1, the center of the series.

The coefficients of the second-order Taylor polynomial centered at 1 for $e^x$ are

$c_0 = f(1) = e$

$c_1 = f'(1) = e$

$c_2 = \frac{f''(1)}{2!} = \frac{e}{2}$

So the second-order Taylor polynomial for $e^x$ centered at 1 is

$$P_2(x) = e + e(x - 1) + \frac{e}{2}(x - 1)^2,$$

and near x = 1, $e^x \approx P_2(x)$.

The Taylor series for $e^x$ centered at 1 is similar to the Maclaurin series for $e^x$ found in the last topic. However, the terms in the Taylor series have powers of (x − 1) rather than powers of x, and the coefficients contain the values of the derivatives evaluated at x = 1 rather than evaluated at x = 0.

Graphing the function and the polynomial illustrates that the polynomial is a good approximation near x = 1.


• Graph $y = e^x$ and $P_2(x) = e + e(x-1) + \frac{e}{2}(x-1)^2$ in a [-2, 3, 1] x [-3, 10, 1] window.

The second-order Maclaurin polynomial you found in the last topic, $\frac{1}{2}x^2 + x + 1$, is tangent to $f(x) = e^x$ at x = 0 and has the same concavity as $f(x) = e^x$ at that point. The polynomial $P_2(x) = e + e(x-1) + \frac{e}{2}(x-1)^2$, which is centered at x = 1, is tangent to $f(x) = e^x$ at x = 1 and has the same concavity as $f(x) = e^x$ at that point.
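The effect of the center can be illustrated with a short Python sketch. It is an illustration under stated assumptions: the helper name `taylor_exp` and its parameters are hypothetical, and it relies on the fact that every derivative of $e^x$ is $e^x$, so the k-th coefficient is $e^a/k!$.

```python
import math

def taylor_exp(x, a=1.0, order=2):
    """Taylor polynomial of e^x of the given order, centered at a.

    Every derivative of e^x equals e^x, so the k-th coefficient is e^a / k!.
    """
    return sum(math.exp(a) / math.factorial(k) * (x - a) ** k
               for k in range(order + 1))

# Near x = 1 the polynomial centered at 1 beats the one centered at 0.
x = 1.2
print("e^x              :", math.exp(x))
print("centered at a = 1:", taylor_exp(x, a=1.0))
print("centered at a = 0:", taylor_exp(x, a=0.0))
```

Setting `a=0.0` reproduces the Maclaurin polynomial, which matches the remark that every Maclaurin series is a Taylor series centered at zero.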

Video Links

Power Series

• https://www.youtube.com/watch?v=EGni2-m5yxM
• https://www.youtube.com/watch?v=DlBQcj_zQk0

Maclaurin and Taylor Series

• https://www.youtube.com/watch?v=LDBnS4c7YbA
• https://www.youtube.com/watch?v=3d6DsjIBzJ4


Chapter 4

Fourier Series

Objectives

After completing this chapter, you will be able to:

▪ Define Fourier Series.
▪ Identify Complex-valued Function.
▪ Identify a superposition of an infinite number of sine and cosine functions.

INTRODUCTION

In mathematics, a Fourier series is a periodic function composed of harmonically related sinusoids, combined by a weighted summation. With appropriate weights, one cycle (or period) of the summation can be made to approximate an arbitrary function in that interval. As such, the summation is a synthesis of another function. The discrete-time Fourier transform is an example of a Fourier series. The process of deriving the weights that describe a given function is a form of Fourier analysis. For functions on unbounded intervals, the analysis and synthesis analogues are the Fourier transform and the inverse transform.

Definition of Fourier Series

Consider a real-valued function $s(x)$ that is integrable on an interval of length P, which will be the period of the Fourier series. Common examples of analysis intervals are $x \in [0, 1]$ with $P = 1$, and $x \in [-\pi, \pi]$ with $P = 2\pi$.

The analysis process determines the weights, indexed by integer n, which is also the number of cycles of the nth harmonic in the analysis interval. Therefore, the length of a cycle, in the units of x, is P/n, and the corresponding harmonic frequency is n/P. The nth harmonics are $\sin\left(2\pi x \tfrac{n}{P}\right)$ and $\cos\left(2\pi x \tfrac{n}{P}\right)$, and their amplitudes (weights) are found by integration over the interval of length P:

$$a_n = \frac{2}{P}\int_P s(x)\cos\left(2\pi x \tfrac{n}{P}\right)dx, \qquad b_n = \frac{2}{P}\int_P s(x)\sin\left(2\pi x \tfrac{n}{P}\right)dx.$$


• If $s(x)$ is P-periodic, then any interval of that length is sufficient.

• $a_0$ and $b_0$ can be reduced to $a_0 = \frac{2}{P}\int_P s(x)\,dx$ and $b_0 = 0$.

• Many texts choose $P = 2\pi$ to simplify the argument of the sinusoid functions.

The synthesis process (the actual Fourier series) is:

$$s_N(x) = \frac{a_0}{2} + \sum_{n=1}^{N}\left(a_n\cos\left(2\pi x \tfrac{n}{P}\right) + b_n\sin\left(2\pi x \tfrac{n}{P}\right)\right).$$

In general, integer N is theoretically infinite. Even so, the series might not converge or exactly equate to $s(x)$ at all values of x in the analysis interval (for example, at a jump discontinuity). For the "well-behaved" functions typical of physical processes, equality is customarily assumed.
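The analysis and synthesis steps can be sketched numerically. The Python example below is a minimal illustration, not the module's own method: it approximates the coefficient integrals by a midpoint Riemann sum, and the names `fourier_coefficients`, `partial_sum`, and the square-wave test signal with P = 1 are assumptions chosen for the demonstration.

```python
import math

def fourier_coefficients(s, P, n, samples=4000):
    """Approximate a_n and b_n by a midpoint Riemann sum over one period [0, P)."""
    dx = P / samples
    a_n = b_n = 0.0
    for k in range(samples):
        x = (k + 0.5) * dx
        a_n += s(x) * math.cos(2 * math.pi * n * x / P) * dx
        b_n += s(x) * math.sin(2 * math.pi * n * x / P) * dx
    return 2 * a_n / P, 2 * b_n / P

def partial_sum(s, P, N, x):
    """Synthesis: a_0/2 plus the first N harmonics, evaluated at x."""
    a_0, _ = fourier_coefficients(s, P, 0)
    total = a_0 / 2
    for n in range(1, N + 1):
        a_n, b_n = fourier_coefficients(s, P, n)
        total += (a_n * math.cos(2 * math.pi * n * x / P)
                  + b_n * math.sin(2 * math.pi * n * x / P))
    return total

def square_wave(x):
    """Assumed test signal: +1 on the first half of each period, -1 on the second."""
    return 1.0 if (x % 1.0) < 0.5 else -1.0

for n in range(1, 6):
    a_n, b_n = fourier_coefficients(square_wave, 1.0, n)
    print(f"n={n}: a_n={a_n:+.5f}, b_n={b_n:+.5f}")
print("s_15(0.25) =", partial_sum(square_wave, 1.0, 15, 0.25))
```

For this signal the cosine weights vanish and the odd sine weights are close to 4/(πn), so the partial sum approaches 1 at x = 0.25 as N grows.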


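Using the trigonometric identity

$$A_n\cos\left(2\pi x \tfrac{n}{P} - \varphi_n\right) = A_n\cos(\varphi_n)\cos\left(2\pi x \tfrac{n}{P}\right) + A_n\sin(\varphi_n)\sin\left(2\pi x \tfrac{n}{P}\right)$$

and the definitions

$$A_n = \sqrt{a_n^2 + b_n^2}, \qquad \varphi_n = \operatorname{arctan2}(b_n, a_n),$$

where $a_n$ and $b_n$ are the cosine and sine weights, the sine and cosine pairs can be expressed as a single sinusoid with a phase offset, analogous to the conversion between orthogonal (Cartesian) and polar coordinates:

$$s_N(x) = \frac{a_0}{2} + \sum_{n=1}^{N} A_n\cos\left(2\pi x \tfrac{n}{P} - \varphi_n\right).$$

The customary form for generalizing to complex-valued $s(x)$ (next section) is obtained using Euler's formula to split the cosine function into complex exponentials. Here, complex conjugation is denoted by an asterisk:

$$\cos\left(2\pi x \tfrac{n}{P} - \varphi_n\right) = \left(\tfrac{1}{2}e^{-i\varphi_n}\right)e^{i2\pi x (+n)/P} + \left(\tfrac{1}{2}e^{-i\varphi_n}\right)^{*}e^{i2\pi x (-n)/P}.$$

Therefore, with $c_0 = a_0/2$, $c_n = \tfrac{A_n}{2}e^{-i\varphi_n} = \tfrac{1}{2}(a_n - i b_n)$ for $n > 0$, and $c_{-n} = c_n^{*}$, the partial sum becomes

$$s_N(x) = \sum_{n=-N}^{N} c_n\, e^{i2\pi x n/P}.$$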


Complex-valued functions

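If $s(x)$ is a complex-valued function of a real variable x, both components (real and imaginary part) are real-valued functions that can be represented by a Fourier series. The two sets of coefficients and the partial sum are given by:

$$c_{Rn} = \frac{1}{P}\int_P \operatorname{Re}\{s(x)\}\, e^{-i2\pi x n/P}\,dx, \qquad c_{In} = \frac{1}{P}\int_P \operatorname{Im}\{s(x)\}\, e^{-i2\pi x n/P}\,dx,$$

$$s_N(x) = \sum_{n=-N}^{N} c_{Rn}\, e^{i2\pi x n/P} + i\sum_{n=-N}^{N} c_{In}\, e^{i2\pi x n/P} = \sum_{n=-N}^{N} \left(c_{Rn} + i\,c_{In}\right) e^{i2\pi x n/P}.$$

Defining $c_n = c_{Rn} + i\,c_{In}$ gives the single formula $c_n = \frac{1}{P}\int_P s(x)\, e^{-i2\pi x n/P}\,dx$.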
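The complex coefficients can be approximated the same way as the real ones. The sketch below is a hedged illustration: `complex_coefficient` is a hypothetical helper that uses a midpoint sum for $c_n = \frac{1}{P}\int_P s(x)e^{-i2\pi xn/P}dx$, and the test signal (one complex harmonic plus an imaginary constant, with P = 1) is an assumption picked so the expected coefficients are obvious.

```python
import cmath
import math

def complex_coefficient(s, P, n, samples=4000):
    """Midpoint-sum approximation of c_n = (1/P) * integral of s(x) e^{-i 2 pi n x / P}."""
    dx = P / samples
    total = 0j
    for k in range(samples):
        x = (k + 0.5) * dx
        total += s(x) * cmath.exp(-2j * math.pi * n * x / P) * dx
    return total / P

def signal(x):
    """Assumed complex-valued test signal with period 1."""
    return cmath.exp(2j * math.pi * 2 * x) + 0.5j

for n in (-2, 0, 2):
    print(n, complex_coefficient(signal, 1.0, n))
```

Only $c_2$ and $c_0$ are nonzero here. Note that $c_{-2} \ne c_2^{*}$, because the conjugate symmetry of the coefficients holds only for real-valued signals.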

Video Links

Fourier Series

• https://www.youtube.com/watch?v=vA9dfINW4Rg

Computing Fourier Series

• https://www.youtube.com/watch?v=KfRE744AFEE

Fourier Series Introduction

• https://www.khanacademy.org/science/electrical-engineering/ee-signals/ee-fourier-series/v/ee-fourier-series-intro


Chapter 5

Fourier Transform

Objectives

After completing this chapter, you will be able to:

▪ Identify the Fourier
▪ Understand the characteristic of a Fourier Transform
▪ Identify the branches of Fourier

Introduction

We’re about to make the transition from Fourier series to the Fourier transform.

“Transition” is the appropriate word, for in the approach we’ll take the Fourier transform

emerges as we pass from periodic to nonperiodic functions. To make the trip we’ll view

a nonperiodic function (which can be just about anything) as a limiting case of a periodic

function as the period becomes longer and longer. Actually, this process doesn’t

immediately produce the desired result. It takes a little extra tinkering to coax the

Fourier transform out of the Fourier series, but it’s an interesting approach.

Fourier was elected to the Académie des Sciences in 1817. During Fourier's eight remaining years in Paris, he resumed his mathematical researches, publishing a number of important articles. Fourier's work triggered later contributions on trigonometric series and the theory of functions of a real variable.

The Fourier transform is crucial to any discussion of time series analysis, and this

chapter discusses the definition of the transform and begins introducing some of the

ways it is useful.

As an aside, I don’t know if this is the best way of motivating the definition of the Fourier

transform, but I don’t know a better way and most sources you’re likely to check will just

present the formula as a done deal. It’s true that, in the end, it’s the formula and what

we can do with it that we want to get to, so if you don’t find the (brief) discussion to

follow to your tastes, I am not offended.

Consider the rect (“rectangle”) function, defined by

$$\Pi(t) = \begin{cases} 1, & |t| < 1/2 \\ 0, & |t| \ge 1/2. \end{cases}$$

Π is called, variously, the top hat function (because of its graph), the indicator function, or the characteristic function for the interval (−1/2, 1/2). While we have defined Π(±1/2) = 0, other common conventions are either to have Π(±1/2) = 1 or Π(±1/2) = 1/2. And some people don’t define Π at ±1/2 at all, leaving two holes in the domain. I don’t want to get dragged into this dispute. It almost never matters, though for some purposes the choice Π(±1/2) = 1/2 makes the most sense. We’ll deal with this on an exceptional basis if and when it comes up.


Π(t) is not periodic. It doesn’t have a Fourier series. In problems you experimented a

little with periodizations, and I want to do that with Π but for a specific purpose. As a

periodic version of Π(t) we repeat the nonzero part of the function at regular intervals,

separated by (long) intervals where the function is zero. We can think of such a function

arising when we flip a switch on for a second at a time, and do so repeatedly, and we

keep it off for a long time in between the times it’s on. (One often hears the term duty

cycle associated with this sort of thing.) Here’s a plot of Π(t) periodized to have period

15.

Here are some plots of the Fourier coefficients of periodized rectangle functions with

periods 2, 4, and 16, respectively. Because the function is real and even, in each case

the Fourier coefficients are real, so these are plots of the actual coefficients, not their

square magnitudes.

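We see that as the period increases the frequencies are getting closer and closer together and it looks as though the coefficients are tracking some definite curve. (But we’ll see that there’s an important issue here of vertical scaling.) We can analyze what’s going on in this particular example, and combine that with some general statements to lead us on.

Recall that for a general function f(t) of period T the Fourier series has the form

$$f(t) = \sum_{n=-\infty}^{\infty} c_n\, e^{2\pi i n t/T},$$

so that the frequencies are 0, ±1/T, ±2/T, .... Points in the spectrum are spaced 1/T apart and, indeed, in the pictures above the spectrum is getting more tightly packed as the period T increases. The n-th Fourier coefficient is given by

$$c_n = \frac{1}{T}\int_{-T/2}^{T/2} e^{-2\pi i n t/T} f(t)\,dt.$$

For the periodized rect function with T > 1 the integrand is nonzero only for |t| < 1/2, so

$$c_n = \frac{1}{T}\int_{-1/2}^{1/2} e^{-2\pi i n t/T}\,dt = \frac{1}{\pi n}\sin\left(\frac{\pi n}{T}\right) \quad (n \ne 0).$$

For fixed n these coefficients tend to 0 like 1/T as T → ∞. To compensate, we scale by T and regard the result as a function of the frequency s = n/T:

$$T c_n = \frac{\sin(\pi n/T)}{\pi n/T} = \frac{\sin \pi s}{\pi s}.$$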


Here’s a graph. You can now certainly see the continuous curve that the plots of the discrete, scaled Fourier coefficients are shadowing.

The function $\sin(\pi x)/(\pi x)$ (written now with a generic variable x) comes up so often in this subject that it’s given a name, sinc:

$$\operatorname{sinc} x = \frac{\sin \pi x}{\pi x},$$

with sinc 0 = 1 by continuity.
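The claim that the scaled coefficients of the periodized rect function sit on the sinc curve can be checked numerically. The Python sketch below is an illustration under stated assumptions: `rect_coefficient` is a hypothetical helper that integrates $e^{-2\pi i n t/T}$ over |t| < 1/2 with a midpoint sum, which assumes T > 1 so that only one copy of the rectangle lies in each period.

```python
import cmath
import math

def sinc(x):
    """sinc x = sin(pi x) / (pi x), with sinc 0 = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def rect_coefficient(n, T, samples=2000):
    """c_n of the rect function periodized with period T > 1 (midpoint sum)."""
    dt = 1.0 / samples
    total = 0j
    for k in range(samples):
        t = -0.5 + (k + 0.5) * dt
        total += cmath.exp(-2j * math.pi * n * t / T) * dt
    return total / T

# The scaled coefficients T * c_n should match sinc(n / T) for every period.
for T in (2, 4, 16):
    worst = max(abs(T * rect_coefficient(n, T) - sinc(n / T))
                for n in range(-5, 6))
    print(f"T={T:2d}: largest |T*c_n - sinc(n/T)| = {worst:.2e}")
```

As T grows, the sample points n/T fill in the frequency axis more densely while the scaled values stay on the same curve.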


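How general is this? We would be led to the same idea (scale the Fourier coefficients by T) if we had started off periodizing just about any function with the intention of letting T → ∞. Suppose f(t) is zero outside of |t| ≤ 1/2. (Any interval will do, we just want to suppose a function is zero outside some interval so we can periodize.) We periodize f(t) to have period T and compute the Fourier coefficients:

$$c_n = \frac{1}{T}\int_{-T/2}^{T/2} e^{-2\pi i n t/T} f(t)\,dt = \frac{1}{T}\int_{-1/2}^{1/2} e^{-2\pi i n t/T} f(t)\,dt.$$

How big is this? We can estimate

$$|c_n| \le \frac{1}{T}\int_{-1/2}^{1/2} \left|e^{-2\pi i n t/T}\right| |f(t)|\,dt = \frac{A}{T}, \quad \text{where} \quad A = \int_{-1/2}^{1/2} |f(t)|\,dt,$$

which is a fixed number independent of n and T. Again the coefficients tend to 0 like 1/T, so we scale by T and consider

$$T c_n = \int_{-1/2}^{1/2} e^{-2\pi i (n/T) t} f(t)\,dt.$$

Replacing the discrete frequency n/T by a continuous variable s and letting T → ∞ leads to the integral $\int_{-\infty}^{\infty} e^{-2\pi i s t} f(t)\,dt$.

Fourier transform defined. There you have it. We now define the Fourier transform of a function f(t) to be

$$\hat{f}(s) = \int_{-\infty}^{\infty} e^{-2\pi i s t} f(t)\,dt.$$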

For now, just take this as a formal definition; we’ll discuss later when such an integral exists. We assume that f(t) is defined for all real numbers t. For any s ∈ R, integrating f(t) against $e^{-2\pi i s t}$ with respect to t produces a complex-valued function of s, that is, the Fourier transform $\hat{f}(s)$ is a complex-valued function of s ∈ R. If t has dimension time then to make st dimensionless in the exponential $e^{-2\pi i s t}$, s must have dimension 1/time.
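The definition can be tried out directly on the rect function. The following Python sketch is a rough numerical illustration, not a production transform: `fourier_transform` is a hypothetical helper that applies a midpoint sum over a finite interval, and it assumes the signal is zero outside the chosen limits `lo` and `hi`.

```python
import cmath
import math

def fourier_transform(f, s, lo=-1.0, hi=1.0, samples=4000):
    """Midpoint-sum approximation of the integral of f(t) e^{-2 pi i s t} dt.

    Assumes f(t) is zero (or negligible) outside the interval [lo, hi].
    """
    dt = (hi - lo) / samples
    total = 0j
    for k in range(samples):
        t = lo + (k + 0.5) * dt
        total += f(t) * cmath.exp(-2j * math.pi * s * t) * dt
    return total

def rect(t):
    """Rect function with the convention rect(+-1/2) = 0."""
    return 1.0 if abs(t) < 0.5 else 0.0

def sinc(x):
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

for s in (0.0, 0.3, 1.0, 2.5):
    print(f"s={s}: numeric={fourier_transform(rect, s):.6f}, sinc={sinc(s):.6f}")
```

The numeric values agree with sinc s, and the imaginary part is essentially zero because the rect function is real and even.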

While the Fourier transform takes flight from the desire to find spectral

information on a nonperiodic function, the extra complications and extra richness of

what results will soon make it seem like we’re in a much different world. The definition

just given is a good one because of the richness and despite the complications. Periodic

functions are great, but there’s more bang than buzz in the world to analyze. The

spectrum of a periodic function is a discrete set of frequencies, possibly an infinite set

(when there’s a corner) but always a discrete set. By contrast, the Fourier transform of a

nonperiodic signal produces a continuous spectrum, or a continuum of frequencies. It

may be that $\hat{f}(s)$ is identically zero for |s| sufficiently large (an important class of signals called bandlimited), or it may be that the nonzero values of $\hat{f}(s)$ extend to ±∞, or it may be that $\hat{f}(s)$ is zero for just a few values of s.

The Fourier transform analyzes a signal into its frequency components. We haven’t yet considered how the corresponding synthesis goes. How can we recover f(t) in the time domain from $\hat{f}(s)$ in the frequency domain?

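Recovering f(t) from $\hat{f}(s)$. We can push the ideas on nonperiodic functions as limits of periodic functions a little further and discover how we might obtain f(t) from its transform $\hat{f}(s)$. Again suppose f(t) is zero outside some interval and periodize it to have (large) period T. For |t| < T/2 we expand f(t) in a Fourier series,

$$f(t) = \sum_{n=-\infty}^{\infty} c_n\, e^{2\pi i n t/T}.$$

The Fourier coefficients can be written via the Fourier transform of f evaluated at the points $s_n = n/T$:

$$c_n = \frac{1}{T}\int_{-T/2}^{T/2} e^{-2\pi i n t/T} f(t)\,dt = \frac{1}{T}\int_{-\infty}^{\infty} e^{-2\pi i s_n t} f(t)\,dt = \frac{1}{T}\hat{f}(s_n),$$

where the limits can be extended to ±∞ because f(t) is zero outside of [−T/2, T/2]. Since the points $s_n$ are spaced $\Delta s = 1/T$ apart,

$$f(t) = \sum_{n=-\infty}^{\infty} \hat{f}(s_n)\, e^{2\pi i s_n t}\,\Delta s \;\longrightarrow\; \int_{-\infty}^{\infty} \hat{f}(s)\, e^{2\pi i s t}\,ds \quad \text{as } T \to \infty.$$

The inverse Fourier transform defined, and Fourier inversion, too. The integral we’ve just come up with can stand on its own as a “transform”, and so we define the inverse Fourier transform of a function g(s) to be

$$\check{g}(t) = \int_{-\infty}^{\infty} e^{2\pi i s t} g(s)\,ds.$$

Again, we’re treating this formally for the moment, withholding a discussion of conditions under which the integral makes sense. In the same spirit, we’ve also produced the Fourier inversion theorem. That is,

$$f(t) = \int_{-\infty}^{\infty} e^{2\pi i s t} \hat{f}(s)\,ds.$$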

A quick summary Let’s summarize what we’ve done here, partly as a guide to

what we’d like to do next. There’s so much involved, all of importance, that it’s hard to

avoid saying everything at once. Realize that it will take some time before everything is

in place.

• The domain of the Fourier transform is the set of real numbers s. One says that $\hat{f}$ is defined on the frequency domain, and that the original signal f(t) is defined on the time domain (or the spatial domain, depending on the context). For a (nonperiodic) signal defined on the whole real line we generally do not have a discrete set of frequencies, as in the periodic case, but rather a continuum of frequencies. (We still do call them “frequencies”, however.) The set of all frequencies is the spectrum of f(t).

◦ Not all frequencies need occur, i.e., $\hat{f}(s)$ might be zero for some values of s. Furthermore, it might be that there aren’t any frequencies outside of a certain range, i.e., $\hat{f}(s) = 0$ for |s| sufficiently large.

• The inverse Fourier transform is defined by

$$f(t) = \int_{-\infty}^{\infty} e^{2\pi i s t} \hat{f}(s)\,ds.$$

Now remember that $\hat{f}(s)$ is a transformed, complex-valued function, and while it may be “equivalent” to f(t) it has very different properties. Is it really true that when $\hat{f}(s)$ exists we can just plug it into the formula for the inverse Fourier transform (which is also an improper integral that looks the same as the forward transform except for the minus sign) and really get back f(t)? Really? That’s worth wondering about.

• The square magnitude $|\hat{f}(s)|^2$ is called the power spectrum (especially in connection with its use in communications) or the spectral power density (especially in connection with its use in optics) or the energy spectrum (especially in every other connection). An important relation between the energy of the signal in the time domain and the energy spectrum in the frequency domain is given by Parseval’s identity for Fourier transforms:

$$\int_{-\infty}^{\infty} |f(t)|^2\,dt = \int_{-\infty}^{\infty} |\hat{f}(s)|^2\,ds.$$

A warning on notations: None is perfect, all are in use Depending on the

operation to be performed, or on the context, it’s often useful to have alternate notations

for the Fourier transform. But here’s a warning, which is the start of a complaint, which

is the prelude to a full blown rant. Diddling with notation seems to be an unavoidable

hassle in this subject. Flipping back and forth between a transform and its inverse,

naming the variables in the different domains (even writing or not writing the variables),

changing plus signs to minus signs, taking complex conjugates, these are all routine

day-to-day operations and they can cause endless muddles if you are not careful, and sometimes even if you are careful. You will believe me when we have some examples, and you will hear me complain about it frequently.

Here’s one example of a common convention:

If the function is called f then one often uses the corresponding capital letter, F,

to denote the Fourier transform. So one sees a and A, z and Z, and everything in

between. Note, however, that one typically uses different names for the variable for the

two functions, as in f(x) (or f(t)) and F(s). This ‘capital letter notation’ is very common in

engineering but often confuses people when ‘duality’ is invoked, to be explained below.

And then there’s this:

Since taking the Fourier transform is an operation that is applied to a function to produce a new function, it’s also sometimes convenient to indicate this by a kind of “operational” notation. For example, it’s common to write Ff(s) for $\hat{f}(s)$, and so, to repeat the full definition,

$$Ff(s) = \int_{-\infty}^{\infty} e^{-2\pi i s t} f(t)\,dt.$$

This is often the most unambiguous notation. Similarly, the operation of taking the inverse Fourier transform is then denoted by $F^{-1}$, and so

$$F^{-1}g(t) = \int_{-\infty}^{\infty} e^{2\pi i s t} g(s)\,ds.$$

Finally, a function and its Fourier transform are said to constitute a “Fourier pair”; this is the concept of ‘duality’ to be explained more precisely later. There have been various notations devised to indicate this sibling relationship. One is

$$f(t) \rightleftharpoons F(s).$$

A warning on definitions. Our definition of the Fourier transform is a standard one, but it’s not the only one. The question is where to put the 2π: in the exponential, as we have done; or perhaps as a factor out front; or perhaps left out completely. There’s also a question of which is the Fourier transform and which is the inverse, i.e., which gets the minus sign in the exponential. All of the various conventions are in day-to-day use in the professions, and I only mention this now because when you’re talking with a friend over drinks about the Fourier transform, be sure you both know which conventions are being followed. I’d hate to see that kind of misunderstanding get in the way of a beautiful friendship. Following the helpful summary provided by T. W. Körner in his book Fourier Analysis, I will summarize the many irritating variations. To be general, let’s write

$$Ff(s) = \frac{1}{A}\int_{-\infty}^{\infty} e^{iBst} f(t)\,dt.$$

The choices found in practice are $A = \sqrt{2\pi}$, $B = \pm 1$; $A = 1$, $B = \pm 2\pi$; and $A = 1$, $B = \pm 1$. The definition we’ve chosen has A = 1 and B = −2π.

Getting To Know Your Fourier Transform

In one way, at least, our study of the Fourier transform will run the same course as your

study of calculus. When you learned calculus it was necessary to learn the derivative

and integral formulas for specific functions and types of functions (powers, exponentials,

trig functions), and also to learn the general principles and rules of differentiation and

integration that allow you to work with combinations of functions (product rule, chain

rule, inverse functions). It will be the same thing for us now. We’ll need to have a

storehouse of specific functions and their transforms that we can call on, and we’ll need

to develop general principles and results on how the Fourier transform operates.

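The triangle function. Consider next the “triangle function”, defined by

$$\Lambda(x) = \begin{cases} 1 - |x|, & |x| \le 1 \\ 0, & \text{otherwise.} \end{cases}$$

For the Fourier transform we compute (using integration by parts, and the factoring trick for the sine function):

$$F\Lambda(s) = \int_{-1}^{1} (1 - |x|)\, e^{-2\pi i s x}\,dx = 2\int_{0}^{1} (1 - x)\cos(2\pi s x)\,dx = \frac{1 - \cos 2\pi s}{2\pi^2 s^2} = \left(\frac{\sin \pi s}{\pi s}\right)^2 = \operatorname{sinc}^2 s.$$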

It’s no accident that the Fourier transform of the triangle function turns out to be

the square of the Fourier transform of the rect function. It has to do with convolution, an

operation we have seen for Fourier series and will see anew for Fourier transforms in

the next chapter.

The exponential decay. Another commonly occurring function is the (one-sided) exponential decay, defined by

$$f(t) = \begin{cases} 0, & t \le 0 \\ e^{-at}, & t > 0, \end{cases}$$

where a is a positive constant. This function models a signal that is zero, switched on, and then decays exponentially. Here are graphs for a = 2, 1.5, 1.0, 0.5, 0.25.

Which is which? If you can’t say, see the discussion on scaling the independent variable at the end of this section. Back to the exponential decay, we can calculate its Fourier transform directly:

$$Ff(s) = \int_{0}^{\infty} e^{-2\pi i s t} e^{-at}\,dt = \int_{0}^{\infty} e^{-(2\pi i s + a)t}\,dt = \frac{1}{2\pi i s + a}.$$

In this case, unlike the results for the rect function and the triangle function, the Fourier transform is complex. Its power spectrum is

$$|Ff(s)|^2 = \frac{1}{a^2 + 4\pi^2 s^2}.$$

Here are graphs of this function for the same values of a as above. Which is which? You’ll soon learn to spot that immediately, relative to the pictures in the time domain, and it’s an important issue. Also note that $|Ff(s)|^2$ is an even function of s even though Ff(s) is not. We’ll see why later. The shape of $|Ff(s)|^2$ is that of a “bell curve”, though this is not Gaussian, a function we’ll discuss just below. The curve is known as a Lorentz profile and comes up in analyzing the transition probabilities and lifetime of the excited state in atoms.
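The closed form $1/(2\pi i s + a)$ for the transform of the one-sided exponential decay can be compared against a direct numerical integration. The Python sketch below is illustrative only: the function names are hypothetical, and the improper integral is truncated at t = 40/a on the assumption that the tail beyond that point (of size about $e^{-40}$) is negligible.

```python
import cmath
import math

def decay_transform_exact(a, s):
    """Closed form of the transform of the one-sided decay e^{-at}, t > 0."""
    return 1.0 / (a + 2j * math.pi * s)

def decay_transform_numeric(a, s, samples=50000):
    """Midpoint-sum approximation of the integral of e^{-at} e^{-2 pi i s t} over t > 0.

    The integral is truncated at t = 40 / a (an assumption of this sketch).
    """
    upper = 40.0 / a
    dt = upper / samples
    total = 0j
    for k in range(samples):
        t = (k + 0.5) * dt
        total += cmath.exp(-(a + 2j * math.pi * s) * t) * dt
    return total

a, s = 2.0, 0.5
print("numeric:", decay_transform_numeric(a, s))
print("exact  :", decay_transform_exact(a, s))
print("power spectrum at +s and -s:",
      abs(decay_transform_exact(a, s)) ** 2,
      abs(decay_transform_exact(a, -s)) ** 2)
```

The transform itself changes when s is replaced by −s (its imaginary part flips sign), while the power spectrum stays the same, in line with the remark that $|Ff(s)|^2$ is even although Ff(s) is not.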

How does the graph of f(ax) compare with the graph of f(x)? Let me remind you of some elementary lore on scaling the independent variable in a function and how scaling affects its graph. The question is how the graph of f(ax) compares with the graph of f(x) when 0 < a < 1 and when a > 1; I’m talking about any generic function f(x) here. This is

very simple, especially compared to what we’ve done and what we’re going to do, but

you’ll want it at your fingertips and everyone has to think about it for a few seconds.

Here’s how to spend those few seconds.

Consider, for example, the graph of f(2x). The graph of f(2x), compared with the graph

of f(x), is squeezed. Why? Think about what happens when you plot the graph of f(2x)

over, say, −1 ≤ x ≤ 1. When x goes from −1 to 1, 2x goes from −2 to 2, so while you’re

plotting f(2x) over the interval from −1 to 1 you have to compute the values of f(x) from

−2 to 2. That’s more of the function in less space, as it were, so the graph of f(2x) is a

squeezed version of the graph of f(x). Clear? Similar reasoning shows that the graph of

f(x/2) is stretched. If x goes from −1 to 1 then x/2 goes from −1/2 to 1/2, so while you’re

plotting f(x/2) over the interval −1 to 1 you have to compute the values of f(x) from −1/2

to 1/2. That’s less of the function in more space, so the graph of f(x/2) is a stretched

version of the graph of f(x).

For Whom The Bell Curve Tolls

Let’s next consider the Gaussian function and its Fourier transform. We’ll need this for many examples and problems. This function, the famous “bell shaped curve”, was used by Gauss for various statistical problems. It has some striking properties with respect to the Fourier transform which, on the one hand, give it a special role within Fourier analysis, and on the other hand allow Fourier methods to be applied to other areas where the function comes up. We’ll see an application to probability and statistics in this graph.


For various applications one throws in extra factors to modify particular properties of the function. We’ll do this too, and there’s not a complete agreement on what’s best. There is an agreement that before anything else happens, one has to know the amazing equation

$$\int_{-\infty}^{\infty} e^{-x^2}\,dx = \sqrt{\pi}.$$

Now, the function $f(x) = e^{-x^2}$ does not have an elementary antiderivative, so this integral cannot be found directly by an appeal to the Fundamental Theorem of Calculus. The fact that it can be evaluated exactly is one of the most famous tricks in mathematics. It’s due to Euler, and you shouldn’t go through life not having seen it. And even if you have seen it, it’s worth seeing again; see the discussion following this section.

Evaluation Of The Gaussian Integral

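We want to evaluate

$$I = \int_{-\infty}^{\infty} e^{-x^2}\,dx.$$

It doesn’t matter what we call the variable of integration, so we can also write $I = \int_{-\infty}^{\infty} e^{-y^2}\,dy$. Therefore

$$I^2 = \left(\int_{-\infty}^{\infty} e^{-x^2}\,dx\right)\left(\int_{-\infty}^{\infty} e^{-y^2}\,dy\right) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} e^{-(x^2+y^2)}\,dx\,dy.$$

Now we make a change of variables, introducing polar coordinates, (r, θ). First, what about the limits of integration? To let both x and y range from −∞ to ∞ is to describe the entire plane, and to describe the entire plane in polar coordinates is to let r go from 0 to ∞ and θ go from 0 to 2π. Next, $e^{-(x^2+y^2)}$ becomes $e^{-r^2}$ and the area element dx dy becomes r dr dθ. It’s the extra factor of r in the area element that makes all the difference. With the change to polar coordinates we have

$$I^2 = \int_{0}^{2\pi}\int_{0}^{\infty} e^{-r^2}\, r\,dr\,d\theta = \int_{0}^{2\pi} \left[-\tfrac{1}{2}e^{-r^2}\right]_{0}^{\infty} d\theta = \int_{0}^{2\pi} \tfrac{1}{2}\,d\theta = \pi,$$

so $I = \sqrt{\pi}$.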
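Both sides of the polar-coordinate argument can be checked numerically. This Python sketch is only a sanity check under stated assumptions: the infinite limits are truncated at 8 (where $e^{-64}$ is negligible), midpoint sums stand in for the integrals, and the function names are hypothetical.

```python
import math

def gaussian_integral(limit=8.0, samples=16000):
    """Midpoint-sum approximation of the integral of e^{-x^2} over [-limit, limit]."""
    dx = 2 * limit / samples
    return sum(math.exp(-((-limit + (k + 0.5) * dx) ** 2)) * dx
               for k in range(samples))

def radial_integral(limit=8.0, samples=16000):
    """Midpoint-sum approximation of the integral of e^{-r^2} r dr over [0, limit]."""
    dr = limit / samples
    return sum(math.exp(-(((k + 0.5) * dr) ** 2)) * (k + 0.5) * dr * dr
               for k in range(samples))

I = gaussian_integral()
print("I               =", I)
print("sqrt(pi)        =", math.sqrt(math.pi))
print("I^2             =", I * I)
print("2*pi * radial   =", 2 * math.pi * radial_integral())
```

The radial integral comes out close to 1/2, so the polar form gives 2π · 1/2 = π, matching the square of the directly computed integral.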

General Properties And Formulas

We’ve started to build a storehouse of specific transforms. Let’s now proceed along the

other path awhile and develop some general properties. For this discussion — and

indeed for much of our work over the next few lectures — we are going to abandon all

worries about transforms existing, integrals converging, and whatever other worries you

might be carrying. Relax and enjoy the ride.

Fourier Transform Pairs And Duality

One striking feature of the Fourier transform and the inverse Fourier transform is the symmetry between the two formulas, something you don’t see for Fourier series. For Fourier series the coefficients are given by an integral (a transform of f(t) into $\hat{f}(n)$), but the “inverse transform” is the series itself. The Fourier transforms F and $F^{-1}$ are the same except for the minus sign in the exponential. In words, we can say that if you replace s by −s in the formula for the Fourier transform then you’re taking the inverse Fourier transform. Likewise, if you replace t by −t in the formula for the inverse Fourier transform then you’re taking the Fourier transform. That is,

$$Ff(-s) = F^{-1}f(s), \qquad F^{-1}f(-t) = Ff(t).$$


This might be a little confusing because you generally want to think of the two variables,

s and t, as somehow associated with separate and different domains, one domain for

the forward transform and one for the inverse transform, one for time and one for

frequency, while in each of these formulas one variable is used in both domains. You

have to get over this kind of confusion, because it’s going to come up again. Think

purely in terms of the math: The transform is an operation on a function that produces a

new function. To write down the formula I have to evaluate the transform at a variable,

but it’s only a variable and it doesn’t matter what I call it as long as I keep its role in the

formula straight. Also be observant what the notation in the formula says and, just as

important, what it doesn’t say. The first formula, for example, says what happens when you first take the Fourier transform of f and then evaluate it at −s; it’s not a formula for F(f(−s)) as in “first change s to −s in the formula for f and then take the transform”. I could have written the first displayed equation as $(Ff)(-s) = F^{-1}f(s)$, with an extra set of parentheses around the Ff to emphasize this, but I thought that looked too clumsy. Just be careful, please.

The equations

$$Ff(-s) = F^{-1}f(s), \qquad F^{-1}f(-t) = Ff(t)$$

are sometimes referred to as the “duality” property of the transforms. One also says that “the Fourier transform pair f and Ff are related by duality”, meaning exactly these relations. They look like different statements but you can get from one to the other. We’ll set this up a little differently in the next section.

Duality and reversed signals. There’s a slightly different take on duality that I prefer because it suppresses the variables and so I find it easier to remember. Starting with a signal f(t) define the reversed signal $f^-$ by

$$f^-(t) = f(-t).$$

In this notation the duality relations read

$$(Ff)^- = F^{-1}f, \qquad Ff^- = F^{-1}f.$$

Replacing f by $f^-$ in the second relation gives $Ff = F^{-1}f^-$, and applying F to both sides gives

$$FFf = f^-.$$

This identity is somewhat interesting in itself, as a variant of Fourier inversion. You can check it directly from the integral definitions, or from our earlier duality results. Of course then also

$$FFf^- = f.$$


Even And Odd Symmetries And The Fourier Transform

We’ve already had a number of occasions to use even and odd symmetries of

functions. In the case of real-valued functions the conditions have obvious

interpretations in terms of the symmetries of the graphs; the graph of an even function is

symmetric about the y-axis and the graph of an odd function is symmetric through the

origin. The (algebraic) definitions of even and odd apply to complex-valued as well as to real-valued functions, however, though the geometric picture is lacking when the function is complex-valued because we can’t draw the graph. A function can be even, odd, or neither, but it can’t be both unless it’s identically zero. How are symmetries of a function reflected in properties of its Fourier transform? I won’t give a complete accounting, but here are a few important cases.

• If f is even or odd, respectively, then so is its Fourier transform.

• If f(t) is real valued, then $Ff(-s) = \overline{Ff(s)}$.

We can refine this if the function f(t) itself has symmetry. For example, combining the

last two results and remembering that a complex number is real if it’s equal to its

conjugate and is purely imaginary if it’s equal to minus its conjugate, we have:

• If f is real valued and even then its Fourier transform is even and real valued.

• If f is real valued and odd then its Fourier transform is odd and purely imaginary.

We saw this first point in action for the Fourier transform of the rect function Π(t) and for the triangle function Λ(t). Both functions are even and their Fourier transforms, sinc and $\operatorname{sinc}^2$, respectively, are even and real. Good thing it worked out that way.

Linearity

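One of the simplest and most frequently invoked properties of the Fourier transform is that it is linear (operating on functions). This means:

$$F(f + g)(s) = Ff(s) + Fg(s), \qquad F(\alpha f)(s) = \alpha\,Ff(s)$$

for any number α (real or complex).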

The Shift Theorem

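A shift of the variable t (a delay in time) has a simple effect on the Fourier transform. We would expect the magnitude of the Fourier transform |Ff(s)| to stay the same, since shifting the original signal in time should not change the energy at any point in the spectrum. Hence the only change should be a phase shift in Ff(s), and that’s exactly what happens. To compute the Fourier transform of f(t + b) for any constant b, substitute u = t + b:

$$\int_{-\infty}^{\infty} f(t + b)\, e^{-2\pi i s t}\,dt = \int_{-\infty}^{\infty} f(u)\, e^{-2\pi i s (u - b)}\,du = e^{2\pi i s b}\,Ff(s).$$

Thus, if $f(t) \rightleftharpoons F(s)$ then $f(t \pm b) \rightleftharpoons e^{\pm 2\pi i s b}F(s)$.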
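The shift theorem can be illustrated numerically: transforming a shifted signal should give the original transform multiplied by the phase factor $e^{2\pi i s b}$, with the magnitude unchanged. The Python sketch below makes several assumptions for the demonstration: `fourier_transform` is a hypothetical midpoint-sum helper, the integration limits are finite, and the test signal is a Gaussian pulse chosen only because it decays quickly.

```python
import cmath
import math

def fourier_transform(f, s, lo=-10.0, hi=10.0, samples=4000):
    """Midpoint-sum approximation of the integral of f(t) e^{-2 pi i s t} dt over [lo, hi]."""
    dt = (hi - lo) / samples
    total = 0j
    for k in range(samples):
        t = lo + (k + 0.5) * dt
        total += f(t) * cmath.exp(-2j * math.pi * s * t) * dt
    return total

def signal(t):
    """Assumed test signal: a Gaussian pulse that is negligible outside [-10, 10]."""
    return math.exp(-math.pi * t * t)

b, s = 1.5, 0.7
original = fourier_transform(signal, s)
shifted = fourier_transform(lambda t: signal(t + b), s)
predicted = cmath.exp(2j * math.pi * s * b) * original

print("transform of f(t + b):", shifted)
print("phase factor * Ff(s) :", predicted)
print("magnitudes           :", abs(shifted), abs(original))
```

Only the phase of the transform changes under the shift; the magnitude at each frequency is the same as before.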


The Stretch (Similarity) Theorem

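How does the Fourier transform change if we stretch or shrink the variable in the time domain? More precisely, if we scale t to at, we want to know what happens to the Fourier transform of f(at). First suppose a > 0. Then, substituting u = at,

$$\int_{-\infty}^{\infty} f(at)\, e^{-2\pi i s t}\,dt = \int_{-\infty}^{\infty} f(u)\, e^{-2\pi i s (u/a)}\,\frac{1}{a}\,du = \frac{1}{a}\,Ff\!\left(\frac{s}{a}\right).$$

If a < 0 the limits of integration are reversed when we make the substitution u = at, and so the resulting transform is (−1/a)Ff(s/a). Since −a is positive when a is negative, we can combine the two cases and present the Stretch Theorem in its full glory:

$$\text{If } f(t) \rightleftharpoons F(s) \text{ then } f(at) \rightleftharpoons \frac{1}{|a|}\,F\!\left(\frac{s}{a}\right).$$

This is also sometimes called the Similarity Theorem because changing the variable from x to ax is a change of scale, also known as a similarity.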

There’s an important observation that goes with the stretch theorem. Let’s take a to be

positive, just to be definite. If a is large (bigger than 1, at least) then the graph of f(at) is

squeezed horizontally compared to f(t). Something different is happening in the

frequency domain, in fact in two ways. The Fourier transform is (1/a)F(s/a). If a is large

then F(s/a) is stretched out compared to F(s), rather than squeezed in. Furthermore,

multiplying by 1/a, since the transform is (1/a)F(s/a), also squashes down the values of

the transform. The opposite happens if a is small (less than 1). In that case the graph of

f(at) is stretched out horizontally compared to f(t), while the Fourier transform is

compressed horizontally and stretched vertically. The phrase that’s often used to

describe this phenomenon is that a signal cannot be localized (meaning concentrated at

a point) in both the time domain and the frequency domain. We will see more precise

formulations of this principle.
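The stretch theorem, including the case of negative a, can be checked with a short numerical experiment. As before, this Python sketch rests on assumptions made for illustration: `fourier_transform` is a hypothetical midpoint-sum helper over a finite interval, and the Gaussian test signal is chosen only because all of its scaled versions used here are negligible outside the integration limits.

```python
import cmath
import math

def fourier_transform(f, s, lo=-20.0, hi=20.0, samples=8000):
    """Midpoint-sum approximation of the integral of f(t) e^{-2 pi i s t} dt over [lo, hi]."""
    dt = (hi - lo) / samples
    total = 0j
    for k in range(samples):
        t = lo + (k + 0.5) * dt
        total += f(t) * cmath.exp(-2j * math.pi * s * t) * dt
    return total

def signal(t):
    """Assumed test signal: a Gaussian pulse."""
    return math.exp(-math.pi * t * t)

def stretch_check(a, s):
    """Return (transform of f(at) at s, (1/|a|) * transform of f at s/a)."""
    lhs = fourier_transform(lambda t: signal(a * t), s)
    rhs = fourier_transform(signal, s / a) / abs(a)
    return lhs, rhs

for a in (3.0, 0.5, -2.0):
    lhs, rhs = stretch_check(a, 0.4)
    print(f"a={a:+.1f}: F[f(at)](s)={lhs:.6f}, (1/|a|)Ff(s/a)={rhs:.6f}")
```

For a = 3 the signal is squeezed in time and its transform value at s is read off from the wider, lower curve (1/3)F(s/3); for a = 0.5 the opposite happens.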

To sum up, a function stretched out in the time domain is squeezed in the frequency

domain, and vice versa. This is somewhat analogous to what happens to the spectrum

of a periodic function for long or short periods. Say the period is T, and recall that the

points in the spectrum are spaced 1/T apart, a fact we’ve used several times. If T is

large then it’s fair to think of the function as spread out in the time domain (it goes a long time before repeating). But then since 1/T is small, the spectrum is squeezed. On the other hand, if T is small then the function is squeezed in the time domain (it goes only a short time before repeating) while the spectrum is spread out, since 1/T is large.

Video Links

What is the Fourier Transform

• https://www.youtube.com/watch?v=spUNpyF58BY

Fourier series From heat flow to circle drawings

• https://www.youtube.com/watch?v=r6sGWTCMz2k

Fourier Transform

• https://www.youtube.com/watch?v=ykNtIbtCR-8

Chapter 6

Power Series Solution Of

Differential Equations

Objectives

After completing this chapter, you will be able to:

▪ Understand power series.

▪ Solve differential equations using power series.

In mathematics, the power series method is used to seek a power series solution to

certain differential equations. In general, such a solution assumes a power series with

unknown coefficients, then substitutes that solution into the differential equation to find

a recurrence relation for the coefficients.
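
The method can be sketched on a small example. The equation y'' + y = 0 is an illustrative choice (it does not appear in the text): substituting y = Σ a_n x^n and matching powers of x gives the recurrence (n + 2)(n + 1) a_{n+2} + a_n = 0. The function names below are hypothetical.

```python
import math

def series_coefficients(a0, a1, count):
    """Coefficients a_n of y = sum a_n x^n solving y'' + y = 0.

    Matching powers of x after substitution gives the recurrence
    a_{n+2} = -a_n / ((n + 2)(n + 1)); a0 = y(0) and a1 = y'(0).
    """
    coeffs = [a0, a1]
    for n in range(count - 2):
        coeffs.append(-coeffs[n] / ((n + 2) * (n + 1)))
    return coeffs[:count]

def evaluate(coeffs, x):
    """Evaluate the truncated power series with Horner's rule."""
    total = 0.0
    for c in reversed(coeffs):
        total = total * x + c
    return total

cos_like = series_coefficients(1.0, 0.0, 30)  # y(0) = 1, y'(0) = 0
sin_like = series_coefficients(0.0, 1.0, 30)  # y(0) = 0, y'(0) = 1

if __name__ == "__main__":
    x = 1.2
    print(evaluate(cos_like, x), math.cos(x))
    print(evaluate(sin_like, x), math.sin(x))
```

The two initial conditions fix a_0 and a_1, and the recurrence generates every later coefficient; for this equation the two resulting series are the familiar expansions of cos x and sin x.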

We want to continue the discussion of the previous lecture with the focus now on power

series, i.e., where the terms in the series contain powers of some parameter, typically a

dynamical variable, and we are expanding about the point where that parameter

vanishes. (A special case is the geometric series we discussed in Lecture 2.) The power

series then defines a function of that parameter. The standard examples are the Taylor

and Maclaurin series mentioned at the beginning of the previous lecture corresponding

to expanding in a series about x = x_0 or x = 0, respectively. The coefficients b_n are related to the

derivatives of the underlying function, at least when the series is convergent, i.e., when the

series actually defines the function. Some specific examples of power series are
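
As a hedged reconstruction, four series consistent with the convergence results quoted for S_1(x) through S_4(x) in the rest of this section are given below. These forms are an assumption inferred from that discussion (radius 2 with alternating signs at x = 2, the negative harmonic series at x = −1, the sine series, and a series centered at x = −2 with p = 1/2), not a verbatim copy of the original display.

```latex
\[
\begin{aligned}
S_1(x) &= 1 - \frac{x}{2} + \frac{x^2}{4} - \cdots + \frac{(-x)^n}{2^n} + \cdots, \\
S_2(x) &= x - \frac{x^2}{2} + \frac{x^3}{3} - \cdots + \frac{(-1)^{n+1} x^n}{n} + \cdots, \\
S_3(x) &= x - \frac{x^3}{3!} + \frac{x^5}{5!} - \cdots + \frac{(-1)^{n+1} x^{2n-1}}{(2n-1)!} + \cdots, \\
S_4(x) &= 1 + \frac{x+2}{\sqrt{2}} + \frac{(x+2)^2}{\sqrt{3}} + \cdots + \frac{(x+2)^n}{\sqrt{n+1}} + \cdots.
\end{aligned}
\]
```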

These series uniquely define the corresponding functions, whenever (i.e., at values of x

where) the series converges. When we apply the convergence tests of the previous

lecture to these expressions, we will define a range for the variable x within which the

series converges, i.e., a range in which the series expansion makes mathematical (and

physical) sense. This range for x is called the interval of convergence (for the power

series expansion). For values of x outside of this interval we will need to find different

expressions to define the underlying functions, i.e., the power series serves to define

the function only in the interval of convergence.

So the series converges absolutely for |x| < 2. At the endpoints x = ±2, where the ratio-test limit equals 1, the series

does not converge absolutely, since both the p and s parameters of Eq. (2.29) vanish.

For x = −2 all terms in the series are positive and the series diverges (S_1(−2) = |S_1(−2)| = ∞).

At the other endpoint, x = 2, we must be more careful because of the

alternating signs. We apply test 5) (from Lecture 2) to this series with a_n = b_n 2^n = (−1)^n.

Since lim_{n→∞} a_n ≠ 0, the series again diverges. We can see both of these

results explicitly by looking at the series

Hence the function S_1(x) is well defined (by the series, i.e., the series converges)

only on the open interval −2 < x < 2 (open means excluding the endpoints). For S_2(x)

we find from the ratio test that

So the ratio test says that the series converges absolutely for |x| < 1. At the endpoint

x = −1 the signs are all negative and the series diverges (it is just the negative of the harmonic

series). At the other endpoint, x = 1, the signs alternate, test 5) is satisfied, and the

series converges conditionally. Hence the function S_2(x) is well defined (by the power series)

only on the semi-open interval −1 < x ≤ 1. Note again that we must treat the endpoints carefully.
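
The ratio test and the endpoint behavior can be explored numerically. The sketch below assumes the coefficients b_n = (−1/2)^n for S_1 and b_n = (−1)^(n+1)/n for S_2, which match the convergence results quoted above but are not copied from the original display; the function names are hypothetical.

```python
def radius_estimate(coefficient, n):
    """Ratio-test estimate of the radius of convergence, |b_n / b_{n+1}|."""
    return abs(coefficient(n) / coefficient(n + 1))

def b_geometric(n):
    """b_n = (-1/2)^n, the coefficients assumed here for S_1."""
    return (-0.5) ** n

def b_log(n):
    """b_n = (-1)^(n+1) / n for n >= 1, the coefficients assumed here for S_2."""
    return (-1.0) ** (n + 1) / n

def partial_sum(coefficient, x, first, terms):
    """Sum coefficient(n) * x**n for n = first, ..., first + terms - 1."""
    return sum(coefficient(n) * x ** n for n in range(first, first + terms))

if __name__ == "__main__":
    print(radius_estimate(b_geometric, 50))     # estimate of the radius for S_1
    print(radius_estimate(b_log, 1000))         # estimate of the radius for S_2
    print(partial_sum(b_log, 1.0, 1, 10000))    # x = 1: settles down
    print(partial_sum(b_log, -1.0, 1, 10000))   # x = -1: keeps growing in size
```

The estimate |b_n / b_{n+1}| only locates the radius; the endpoints still have to be examined one at a time, as the partial sums at x = 1 and x = −1 illustrate.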

The third series may look like it is missing every other term (the even powers) and

therefore you may be confused about how to apply the ratio test. This is not an issue.

The idea of the ratio test is to always consider 2 contiguous terms in the series.

Alternatively we can (re)write the series as (note that now every value of n contributes

to the sum)

The series converges absolutely everywhere, −∞ < x < ∞ (S_3(x) is just the

power series expansion of the sine function, sin x).
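
A worked version of the ratio test for the sine series may help here. The reindexed form below (sum starting at n = 0) and the symbol ρ for the ratio-test limit are notational assumptions; the conclusion is the one stated above.

```latex
\[
S_3(x) = \sum_{n=0}^{\infty} \frac{(-1)^n x^{2n+1}}{(2n+1)!},
\qquad
\rho = \lim_{n\to\infty}
\left| \frac{x^{2n+3}}{(2n+3)!} \cdot \frac{(2n+1)!}{x^{2n+1}} \right|
= \lim_{n\to\infty} \frac{x^2}{(2n+3)(2n+2)} = 0 .
\]
```

Since ρ = 0 < 1 for every fixed x, consecutive nonzero terms always shrink fast enough, and the missing even powers play no role.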

Thus the series converges absolutely for |x + 2| < 1, i.e., on the open interval −3 < x < −1. Since p = 1/2 < 1,

the series of absolute values |S_4| diverges at the endpoints. For x = −1 there are no alternating signs, so S_4(−1) also

diverges. At x = −3 the signs alternate and the terms decrease to zero, which satisfies test 5), and the series converges

conditionally. Hence the interval of convergence for S_4(x) is −3 ≤ x < −1.

Now that we have verified that we know how to determine the interval of convergence,

we should restate what is important about the interval. For values of x within that

interval of convergence the following statements are all true (and equivalent).

To further develop this discussion we state the following theorems (without proof), which

are true in the interval of convergence where the (infinite) power series can be treated

like a polynomial.

1. We can integrate or differentiate the series term-by-term to find a definition of the

corresponding integral or derivative of the function defined by the original series,

i.e., S′(x) or ∫ S(x) dx. The resulting series has the same interval of

convergence as the original series, except perhaps for the behavior at the

endpoints (see below). [This is why series are so useful. Note that the effect of

integrating or taking a derivative is to introduce a factor of 1/(n+1) or n in the term

b_n x^(n±1), which does not change the interval of convergence (you should

convince yourself of this fact).]

2. If we have two series defining two functions with known intervals of convergence,

we can add, subtract or multiply the two series to define the corresponding

functions, S_1(x) ± S_2(x) or S_1(x) · S_2(x). The new series

converges in the overlap (or common) interval of the original 2 intervals of

convergence. We can also think about the function defined by dividing the two

series, which with some manipulation we can express as a corresponding power

series. This function is well defined in the overlap interval of the original series

except points where the series in the denominator vanishes. At these points the

function may still be defined if the series in the numerator also vanishes

(appropriately quickly). We are guaranteed that there is an interval where the

new function and its series expansion make sense, but we must explicitly

calculate it.

3. We can substitute one series into another series if the value of the first series is

within the interval of convergence of the second.

4. The power series expansion of a function is UNIQUE! No matter how you

determine the coefficients b_n in Σ b_n x^n, once you have found them you are

done. [This is a really important point for the Lazy but Smart!]
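
The first theorem can be illustrated on a list of coefficients. The sketch below uses the Maclaurin coefficients of sin x as a test case (an illustrative choice) and hypothetical helper names; differentiating term by term should reproduce the cosine series, and integrating term by term with constant −1 should reproduce −cos x.

```python
import math

def sine_coefficients(count):
    """Maclaurin coefficients b_n of sin x for n = 0, ..., count - 1."""
    coeffs = []
    for n in range(count):
        if n % 2 == 0:
            coeffs.append(0.0)
        else:
            coeffs.append((-1.0) ** ((n - 1) // 2) / math.factorial(n))
    return coeffs

def differentiate(coeffs):
    """Term-by-term derivative: b_n x^n -> n b_n x^(n-1)."""
    return [n * b for n, b in enumerate(coeffs)][1:]

def integrate(coeffs, constant=0.0):
    """Term-by-term integral: b_n x^n -> b_n x^(n+1) / (n + 1)."""
    return [constant] + [b / (n + 1) for n, b in enumerate(coeffs)]

def evaluate(coeffs, x):
    """Evaluate the truncated series with Horner's rule."""
    total = 0.0
    for c in reversed(coeffs):
        total = total * x + c
    return total

if __name__ == "__main__":
    sine = sine_coefficients(30)
    x = 0.7
    print(evaluate(differentiate(sine), x), math.cos(x))
    print(evaluate(integrate(sine, constant=-1.0), x), -math.cos(x))
```

The factors n and 1/(n + 1) are exactly the ones mentioned in item 1; they change each coefficient but not the radius of convergence.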

So let’s develop power series expansions for the functions we know and love.

We know the general form is given by Eq. (3.1). If we apply the Maclaurin

expansion definition to e^x, we find (recall the essential property of the

exponential, d(e^x)/dx = e^x)

For this power series the interval of convergence is all values of x, −∞ < x < ∞.
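
The Maclaurin expansion of the exponential referred to here can be sketched as follows. The coefficient formula b_n = f^(n)(0)/n! is the standard Maclaurin definition and is assumed to be what Eq. (3.1) provides; every derivative of e^x equals 1 at x = 0.

```latex
\[
b_n = \frac{1}{n!} \left. \frac{d^n e^x}{dx^n} \right|_{x=0} = \frac{1}{n!},
\qquad
e^x = \sum_{n=0}^{\infty} \frac{x^n}{n!} = 1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \cdots,
\qquad
\lim_{n\to\infty} \left| \frac{x^{n+1}/(n+1)!}{x^n/n!} \right|
= \lim_{n\to\infty} \frac{|x|}{n+1} = 0 .
\]
```

The vanishing ratio for every fixed x is what gives convergence for all values of x.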

In a similar fashion we can quickly demonstrate that

Again the interval of convergence is all values of x, −∞ < x < ∞. ASIDE: Note that with

the definition i^2 = −1, i = √(−1), it follows from Eqs. (3.9) and (3.11) that
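
A sketch of that step, assuming Eqs. (3.9) and (3.11) denote the Maclaurin series of e^x and of sin x and cos x respectively (the equation numbers are from the text; the displayed forms are the standard series):

```latex
\[
e^{ix} = \sum_{n=0}^{\infty} \frac{(ix)^n}{n!}
= \left( 1 - \frac{x^2}{2!} + \frac{x^4}{4!} - \cdots \right)
+ i \left( x - \frac{x^3}{3!} + \frac{x^5}{5!} - \cdots \right)
= \cos x + i \sin x .
\]
```

The even powers of ix are real and the odd powers carry one factor of i, which is how the two real series separate.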

This last result is called Euler’s formula (and is extremely useful!). We can obtain

more useful relations by using the four theorems above. Recall our discussion in the last

lecture of the geometric and Harmonic series. We had

Note that the integral changes the behavior at one endpoint from divergent in the

geometric case to conditionally convergent in the harmonic case, an example of the

change at the endpoints noted above in item 1.
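
The endpoint change can be seen in a short worked example, assuming the geometric series in question is the expansion of 1/(1 + x) and that it is integrated term by term from 0 to x:

```latex
\[
\frac{1}{1+x} = \sum_{n=0}^{\infty} (-x)^n, \quad |x| < 1,
\qquad
\ln(1+x) = \int_0^x \frac{dt}{1+t}
= \sum_{n=0}^{\infty} \frac{(-1)^n x^{n+1}}{n+1}, \quad -1 < x \le 1 .
\]
```

At x = 1 the geometric series becomes 1 − 1 + 1 − ⋯, which diverges, while the integrated series becomes 1 − 1/2 + 1/3 − ⋯ = ln 2, which converges conditionally. At x = −1 both series diverge.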

which we could have obtained directly by differentiation of (1 + x)^p. In this context the

reader is encouraged to think some about this use of the factorial function even when

the argument is not an integer, i.e., the expansion in Eq. (3.19) is useful even when n is

not an integer (but m is). Another tool for obtaining a series expansion arises from

switching from a Maclaurin series expansion to a Taylor series expansion, i.e., expand

about a point other than the origin. Consider the function ln x. It is poorly behaved at x

= 0, but is well behaved at x = 1. So we can use Eq. (3.15) in the form
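
A numerical sketch of that expansion, assuming Eq. (3.15) is the series ln(1 + x) = x − x²/2 + x³/3 − ⋯ with x replaced by x − 1, so that ln x = Σ (−1)^(n+1) (x − 1)^n / n for n ≥ 1. The function name is hypothetical.

```python
import math

def log_series(x, terms):
    """Partial sum of ln x = sum_{n>=1} (-1)^(n+1) (x - 1)^n / n."""
    u = x - 1.0
    return sum((-1.0) ** (n + 1) * u ** n / n for n in range(1, terms + 1))

if __name__ == "__main__":
    for x in (0.5, 1.5, 2.0, 3.0):
        # Compare the truncated Taylor series about x = 1 with math.log.
        print(x, log_series(x, 60), math.log(x))
```

Inside 0 < x < 2 the partial sums settle quickly on ln x, at x = 2 they approach ln 2 slowly, and at x = 3 they grow without bound even though ln 3 itself is perfectly well defined.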

The interval of convergence is then 0 < x ≤ 2, i.e., the series converges absolutely for 0 < x < 2

and converges conditionally at x = 2. To repeat, the function defined by a power

series expansion is well behaved within the interval of convergence. But clearly it is

important to consider what it means when the series expansion for a function diverges.

In general, the series will diverge where the original function is singular as is the case

for ln x at x = 0 in Eq. (3.20). However, the relationship between the series and the

function does not always work going the other way. A divergent series does not

necessarily mean a singular function. The various possibilities are the following.

1. The series diverges and the function is singular at the same point. This typically

occurs at the boundary of the interval of convergence as in the example of ln x at

x = 0.

2. The series may be divergent, but the function is well behaved. For example ln x

is well behaved for x > 2 but the (specific) power series expansion in Eq. (3.20)

diverges. Similarly the function 1/(1 + x) is well behaved everywhere except the

single point x = −1 while the power series expansion in Eq. (3.14) diverges for

|x| > 1. The mathematics behind this behavior is most easily understood in the

formalism of complex variables as we will discuss next (where we will develop