A data-driven fault detection and diagnosis scheme for air handling units in building HVAC systems considering undefined states


Journal of Building Engineering 35 (2021) 102111


Woo-Seung Yun, Won-Hwa Hong, Hyuncheol Seo *

School of Architectural, Civil, Environmental and Energy Engineering, Kyungpook National University, Daegu, Republic of Korea

Keywords: HVAC systems; Fault detection and diagnosis; Supervised auto-encoder; Air handling units; Data-driven model; Artificial neural network

Abstract

Fault detection in heating, ventilation, and air conditioning (HVAC) systems is essential because faults lead to energy wastage, shortened equipment lifespan, and uncomfortable indoor environments. In this study, we propose a data-driven fault detection and diagnosis (FDD) scheme for air handling units (AHUs) in building HVAC systems that enables reliable maintenance by considering undefined states. Using the supervised auto-encoder (SAE), we determine whether a neural-network-based FDD model can provide significant inferences for its input variables, and we evaluate the fitness of the FDD model based on the reconstruction error of the SAE. Fault diagnosis is performed by the FDD model only if it can provide significant inferences for the input variables; otherwise, feedback regarding the FDD model is provided. The experimental data of ASHRAE 1312-RP were used to evaluate the performance of the proposed scheme. Furthermore, we compared the performance of the proposed model with those of well-known data-driven approaches for fault diagnosis. Our results showed that the scheme can distinguish between undefined and defined data with high performance. Furthermore, the proposed scheme has a higher FDD performance for the defined states than the control models. Therefore, the proposed scheme can facilitate the maintenance of AHUs in building HVAC systems.

1. Introduction

The energy consumption in the building sector accounts for approximately 20–40% of the total energy consumption [1,2], and heating, ventilation, and air conditioning (HVAC) systems consume approximately 50–60% of the total energy consumed by the building [3,4]. The faults of HVAC systems cause wastage of energy, shortened lifespan of equipment, and uncomfortable indoor environments [5–7]. In a commercial building in Hong Kong, 20.9% of all variable air volume (VAV) terminals of the HVAC system worked abnormally [8]. In a survey in the U.S., 65% of residential cooling systems and 71% of commercial cooling systems had faults [9]. Furthermore, an abnormal operation of air conditioning systems can cause approximately 5–30% increase in energy consumption [10–12]. Therefore, early detection of faults in HVAC systems is crucial, not only for comfortable indoor environments, but also for prevention of energy waste.

In the last decades, a considerable number of fault detection and diagnosis (FDD) methods have been developed for building energy systems, such as economizers, chillers, air handling units (AHUs), VAV terminals, and the HVAC system level [13]. Conventional FDD models evaluate the system based on discriminating equations and models pre-established through data analysis. However, HVAC systems, the AHU in particular, are a result of onsite construction and operate with various mechanical parts integrated in and connected to the building. Thus, it is difficult to standardize such systems because the equipment and physical composition differ depending on the site. Defining the faults of non-standard products requires considerable time and cost. Moreover, even if the faults for various states are defined, undefined states (operational conditions or faults of new patterns) that have not been pre-trained may occur because of variations of complex environmental factors, such as aging equipment, ambient temperature, and occupant behaviors. Based on these characteristics, it is difficult to provide sufficient training data that cover all possible situations for the FDD of the AHU system. Although excellent diagnostic performance can be achieved for the states on which an FDD model has been trained, for the reasons discussed above, frequent misclassifications may occur unless the undefined states generated in the AHU are considered, thereby decreasing the reliability of the model. Additionally, malfunctions and deterioration that could be detected early and counteracted may not be recognized, leading to increased future maintenance costs and excessive energy consumption.

* Corresponding author.
E-mail address: charles@knu.ac.kr (H. Seo).
https://doi.org/10.1016/j.jobe.2020.102111
Received 6 July 2020; Received in revised form 5 November 2020; Accepted 18 December 2020
Available online 21 December 2020
2352-7102/© 2020 Elsevier Ltd. All rights reserved.

Abbreviations

| Abbreviation | Meaning |
|---|---|
| HVAC | Heating, ventilation, and air conditioning |
| VAV | Variable air volume |
| FDD | Fault detection and diagnosis |
| AHU | Air handling unit |
| DB | Database |
| SA | Supply air |
| SAE | Supervised auto-encoder |
| ANN | Artificial neural network |
| RMSE | Root-mean-square error |
| MSE | Mean squared error |
| CCE | Categorical cross-entropy |
| OA | Outdoor air |
| EA | Exhaust air |
| ReLU | Rectified linear unit |
| RMSProp | Root mean square propagation |
| TP | True positive |
| FP | False positive |
| FN | False negative |
| SVM | Support vector machine |
| RBF | Radial basis function |
| ELU | Exponential linear unit |

In the literature, various studies have attempted to address the limitation of training data. Keigo et al. [14] proposed a combination approach based on a semi-supervised method to identify building energy faults with limited labeled data by leveraging domain knowledge. Zhao et al. [15,16] proposed a diagnostic Bayesian networks-based method for AHU fault diagnosis. The proposed method simulates the FDD process of experts using physical laws, expert knowledge/experiences, operation and maintenance records, historical and real-time measurements, etc. Hence, the method does not require fault data. Yan et al. [17] proposed a semi-supervised learning FDD framework for AHUs to address the limitation of labeled data. The proposed framework was designed to secure the FDD performance of the model with a limited number of data by repeatedly inserting confidently labeled testing samples into the training pool. Yan et al. [18] proposed an AHU FDD framework using generative adversarial network (GAN) to handle insufficient amount of faulty training samples. The proposed framework re-balances the training dataset by enriching the faulty training samples using GAN. The re-balanced dataset is then used to train supervised classifiers.

Fig. 1. Flowchart of the proposed FDD scheme.

The aforementioned methods cannot structurally consider undefined states. Additionally, the reliability of the methods degrades when FDD is performed under the undefined state, and some methods have recently been developed to address this problem. Li et al. [19] proposed an expert knowledge-based unseen fault identification (EK-UFI) method to identify undefined faults by employing the similarities between known and unknown faults. Yan et al. [20] proposed an FDD framework for AHUs based on Hidden Markov models (HMMs) to handle undefined faults. To identify new fault types, a robust statistical method is developed to compare current HMM observations with those expected from existing states to obtain potential new types, and then confirm new types by checking whether the observations have a significant change. Although these methods can handle undefined states, they were designed to heavily rely on expert knowledge; hence, they require a deep understanding of the causal relationships between faults and symptoms of the building energy systems [13].

Therefore, in this study, we propose a data-driven FDD scheme to enable reliable maintenance in the AHU by considering undefined states. The proposed scheme determines whether the FDD model can make significant inferences for the input variables, and the FDD model performs fault diagnosis only when it can; otherwise, the model considers the state of the system as undefined, and feedback is provided by retraining the FDD model. Through this process, the model can achieve desirable reliability in undefined states.

2. Proposed scheme

The proposed FDD scheme has two objectives: 1) acquire search performance for the undefined state and 2) acquire the FDD performance for the defined state. The structure of the proposed scheme is illustrated in Fig. 1. The scheme consists of three steps: 1) offline model preparation, 2) FDD by the model, and 3) model feedback.

In the offline model preparation step, the FDD model is trained using the training database (DB), and the threshold is set to find the undefined state. In the FDD step, the state of the AHU system is determined by the FDD model. In the model feedback step, the training DB is updated by newly labeling the input data of the undefined state after a manual system check. Then, the model preparation step is performed again for model retraining. The fault diagnosis performance of the model can be enhanced by repeating the above steps.

2.1. FDD model

The model inputs are the real-time monitoring data of the system, and the output is the system state judgment result (“undefined state,” “fault-free,” or “fault”). “Undefined state” is the output when the FDD model cannot perform significant inferences about the system state. “Fault-free” and “fault” are the outputs in the defined state, in which the model can determine the system status. “Fault-free” is the output when the system has no fault. “Fault” means that the system has a fault, and the fault type is the output. The model is composed of three parts: preprocessing, operation, and post-processing units. Algorithm 1 details the pseudocode for the FDD step.

Algorithm 1. Pseudocode for the FDD step.

2.1.1. Preprocessing unit

The preprocessing unit processes the system monitoring data to a form appropriate for model input. Thus, data filtering through steady-state detection and standardization are performed. Steady-state detection is performed to filter the transient-state data that occur due to the operation, stopping, or change of the operation conditions of the air conditioning system. The transient-state data increase the complexity of the model and degrade FDD performance [21].

In this study, a steady-state filtering method [15,21–23] based on the change rate of the reference variables was used. Five reference variables were set as follows: cooling and heating coil control signals, SA duct static pressure, SA temperature, and supply fan speed [15]. The steady-state indicator is the sum of the change rates of the reference variables. When the indicator exceeds the threshold, which is set in advance by referring to data under the steady-state, the data are determined as transient-state with severe variation; when the indicator is lower than the threshold, the data are determined as steady-state.
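The steady-state filter described above can be sketched as follows. This is a minimal illustration and not the authors' implementation: it assumes the change rate of a reference variable is the absolute difference between consecutive samples, treats an indicator equal to the threshold as steady, and uses the hypothetical names `steady_state_mask`, `sa_temp`, and `fan_speed`.

```python
def steady_state_mask(reference_series, threshold):
    """Flag each sample (from the second one on) as steady (True) or transient (False).

    reference_series: dict mapping a reference-variable name to a list of
    equally spaced samples; all lists must have the same length.
    Assumption: change rate = absolute difference between consecutive samples.
    """
    names = list(reference_series)
    length = len(reference_series[names[0]])
    mask = []
    for t in range(1, length):
        # Steady-state indicator: sum of the change rates of all reference variables.
        indicator = sum(
            abs(reference_series[name][t] - reference_series[name][t - 1])
            for name in names
        )
        mask.append(indicator <= threshold)
    return mask


example = {
    "sa_temp": [55.0, 55.1, 58.0, 58.1],
    "fan_speed": [60.0, 60.0, 70.0, 70.0],
}
mask = steady_state_mask(example, threshold=1.0)
```

In practice the reference variables have different units, so some scaling before summation may be needed; the sketch leaves that choice open.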

Following data filtering, the data are standardized as

$$\hat{x}_{i,j} = \frac{x_{i,j} - \bar{X}_j}{\sigma_j} \tag{1}$$

$$\bar{X}_j = \frac{1}{N}\sum_{i=1}^{N} x_{i,j} \tag{2}$$

$$\sigma_j = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}\left(x_{i,j} - \bar{X}_j\right)^2} \tag{3}$$

where $\hat{x}_{i,j}$ is the ith standardized value of the feature j, $\bar{X}_j$ is the mean of the feature j, $\sigma_j$ is the standard deviation of the feature j, $x_{i,j}$ is the ith value of the feature j, and N is the number of samples.

2.1.2. Operation unit

The operation unit derives the basic information required for FDD. The input values of the operation unit are the data processed by the preprocessing unit. The operation unit outputs two values, which are required to determine the system state. The first output value is the “predicted label” (fault-free or fault type), which is used to determine the system state when FDD is possible. The second output value is the “reliability for predicted label,” which is used to determine whether the FDD model can perform significant inferences about the input variables.

For the operation unit, the supervised auto-encoder (SAE) was applied, which is a type of artificial neural network (ANN); SAE jointly predicts target labels and reconstructs inputs [24]. The reconstruction function of inputs is the same as that of an auto-encoder. A reconstruction error of the auto-encoder can be used to identify anomalies [25]. The reconstruction error represents the difference between the inputs reconstructed by the auto-encoder and the original inputs. During training, the auto-encoder learns the relationships among the input variables to reconstruct the inputs. During testing, the auto-encoder accurately reconstructs the inputs when the correlations among the input variables are similar to those of the training data (low reconstruction error); otherwise, the inputs cannot be reconstructed accurately (high reconstruction error) [25].

Considering these characteristics, the reconstruction error of SAE was used as the reliability indicator for the predicted label. A low reconstruction error is derived in the defined state, for which the SAE was trained, and a high reconstruction error is derived in the undefined state, for which the SAE was not trained. When the reconstruction error is high, the predicted label of the operation unit is not reliable; thus, the FDD model cannot make a meaningful judgment.

The structure of the operation unit is illustrated in Fig. 2. SAE derives the predicted label and the reconstructed inputs while the operation unit derives the reconstruction error by calculating the root-mean-square error (RMSE) of the reconstructed inputs and the original inputs.

The SAE is composed of an input layer, a hidden layer, and an output layer. The input layer receives input data from the preprocessing unit and delivers it to the hidden layer. Each node of the input layer represents the individual variable of the inputs. The hidden layer is the encoder, which extracts common features required for the reconstruction of inputs and the derivation of the predicted label and delivers them to the output layer. The output layer derives the predicted label using the information derived from the hidden layer and reconstructs the inputs. Thus, the output layer is composed of a classifier and decoder in parallel. The classifier receives the extracted features from the encoder, outputs the predicted label, and solves a multiclass classification problem. The decoder receives the extracted features from the encoder and reconstructs the inputs. Each node of the decoder represents individual variables of the reconstructed inputs.
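The two outputs of the operation unit can be illustrated with a small sketch. It assumes the SAE has already produced a reconstructed input vector and a vector of class probabilities for one sample; the function names `reconstruction_rmse` and `predicted_label` are hypothetical and only show how the reconstruction error (RMSE) and the predicted label could be derived from those vectors.

```python
import math


def reconstruction_rmse(original, reconstructed):
    """Root-mean-square error between the original and reconstructed inputs."""
    if len(original) != len(reconstructed):
        raise ValueError("input vectors must have the same length")
    squared = [(o - r) ** 2 for o, r in zip(original, reconstructed)]
    return math.sqrt(sum(squared) / len(squared))


def predicted_label(class_probabilities, labels):
    """Return the label whose classifier output is largest."""
    best = max(range(len(labels)), key=lambda k: class_probabilities[k])
    return labels[best]


error = reconstruction_rmse([0.0, 0.0], [3.0, 4.0])
label = predicted_label([0.1, 0.7, 0.2], ["fault-free", "fault 1", "fault 2"])
```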

2.1.3. Post-processing unit

The post-processing unit finally determines the system state. The inputs of the post-processing unit are the data (predicted label and reliability for the predicted label) derived by the operation unit, and the output is the result of the system state judgment (“undefined state,” “fault-free,” or “fault”). The post-processing unit determines that the predicted label of the operation unit is unreliable when the reliability for the predicted label (reconstruction error of SAE) exceeds the threshold (error tolerance limit); otherwise, it determines that the predicted label of the operation unit is reliable. The threshold is set based on the training data in the offline model preparation process. When the predicted label is unreliable, the post-processing unit determines that the model cannot diagnose the system state and finally derives the “undefined state.” Through this process, the model reduces the false alarm rate due to lack of training data. When the predicted label is reliable, the post-processing unit determines that the model can diagnose the system state and derives the predicted label as an FDD result.
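The decision rule of the post-processing unit is simple enough to state in a few lines. The sketch below is an illustration under the assumption that the predicted label and the reconstruction error are already available; `judge_state` is a hypothetical name.

```python
def judge_state(predicted, reconstruction_error, threshold):
    """Return the final system state judgment.

    The predicted label is trusted only when the reconstruction error
    does not exceed the threshold (error tolerance limit).
    """
    if reconstruction_error > threshold:
        return "undefined state"
    return predicted


reliable = judge_state("fault 3", reconstruction_error=0.2, threshold=0.5)
unreliable = judge_state("fault 3", reconstruction_error=0.9, threshold=0.5)
```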

Fig. 2. Structure of the operation unit.

2.2. Offline model preparation

The model is prepared for FDD in the offline model preparation step, which is performed in two stages: 1) training of the operation unit and 2) setting the threshold for searching the undefined state. The pseudocode for the offline model preparation step is shown in Algorithm 2.

Algorithm 2. Pseudocode for the offline model preparation step.

2.2.1. Training of the operation unit

In the training process of the operation unit, the SAE of the operation unit is trained using the training DB. Here, the DB is built, the dataset is prepared, and SAE training is performed. The initial DB can be built through the building commissioning process; the initial DB consists of AHU monitoring data in the fault-free and fault states and the corresponding labels. The fault data can be obtained by simulating the faults that frequently occur in the AHU.

Once the DB is built, the dataset for SAE training is prepared, for which data preprocessing and dataset division are performed. Data preprocessing is performed as in the preprocessing unit of the model. The pre-processed data are divided into training and validation datasets: the training set is used to optimize the parameters of SAE, and the validation set is used to prevent overfitting of SAE. During training, the parameters of the SAE are optimized for two tasks: reconstruction of inputs and derivation of predicted labels.

The SAE training process is illustrated in Fig. 3. $loss_a$ is the loss of the decoder, which is the mean squared error (MSE) of the original and reconstructed inputs; $loss_b$ is the loss of the classifier, which is the standard categorical cross-entropy (CCE) [26] for the target and predicted labels. These two losses are summed with the weights $w_a$ and $w_b$ to obtain the final loss. Training is performed to make the final loss close to 0. In this process, the weights and biases of the SAE are optimized to satisfy the two objectives of the predicted label derivation and input reconstruction. The epoch is set through early stopping to prevent overfitting [27].

2.2.2. Threshold setting

The threshold for finding the undefined state is set by the three-sigma rule [28] using Eq. (4), based on the reconstruction error of the training set. The threshold is adjusted appropriately whenever the training DB is updated.

$$\mathrm{threshold} = \bar{X} + 3\sigma \tag{4}$$

$$\bar{X} = \frac{1}{N}\sum_{i=1}^{N} x_i \tag{5}$$

$$\sigma = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}\left(x_i - \bar{X}\right)^2} \tag{6}$$

where threshold is the tolerance limit for the reconstruction error, $\bar{X}$ is the average reconstruction error of the training set, $\sigma$ is the standard deviation of the training set reconstruction error, and $x_i$ is the reconstruction error of the ith data.

The three-sigma rule states that “for normal distributions, 99.7% of observations will fall within three standard deviations of the mean” [28]. Therefore, the threshold set by the three-sigma rule is a value covering most reconstruction errors for data in the defined states under the assumption that the reconstruction error follows a normal distribution. This is considered to be suitable for searching for undefined states.

Fig. 3. SAE training process.
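As a worked illustration of Eqs. (4)–(6), the threshold can be computed from a list of training-set reconstruction errors with the Python standard library. The function name `three_sigma_threshold` and the sample values are hypothetical; `statistics.stdev` uses the N − 1 denominator, which matches Eq. (6).

```python
import statistics


def three_sigma_threshold(reconstruction_errors):
    """Mean plus three sample standard deviations of the reconstruction errors."""
    mean = statistics.mean(reconstruction_errors)
    sigma = statistics.stdev(reconstruction_errors)  # N - 1 denominator, as in Eq. (6)
    return mean + 3 * sigma


# Toy reconstruction errors: mean 2.0, standard deviation 1.0.
threshold = three_sigma_threshold([1.0, 2.0, 3.0])
```

With these toy values the threshold is 2.0 + 3 × 1.0 = 5.0.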

2.3. Model feedback

In the model feedback step, the training DB is updated for model retraining. If the FDD result of the model is “undefined state,” the state is defined through system check, and the data are newly labeled. Then, the labeled dataset is added to the training DB. When the FDD result of the model is “defined state,” the corresponding dataset is added to the training DB.

However, it is practically impossible to obtain a 0% error rate in a classification model in an actual application because some misclassified results based on outliers will inevitably occur. To combat this problem, the model feedback step is executed only when the same result persists for more than a certain number of iterations (n) in the FDD step. For example, if an output value in the FDD step is {“fault-free,” “fault-free,” “fault-free,” “fault A,” “fault-free,” “fault-free,” “fault-free”} in chronological order and n is set to three, then the data corresponding to “fault A” are judged to be outliers and only the data corresponding to “fault-free,” excluding those corresponding to “fault A,” are used in the model feedback process.

The objective of this feedback process is to secure model performance by continuously updating the training data. Once the training DB is updated, model retraining is performed. Model feedback is repeated with a specific cycle. The pseudocode for the model feedback step is shown in Algorithm 3.
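The persistence rule for the model feedback step can be sketched as below. This is an illustration and not the authors' Algorithm 3: it assumes a result counts as persistent when it occurs in a run of at least n consecutive outputs, which is consistent with the worked example for n = 3, and the name `persistent_indices` is hypothetical.

```python
from itertools import groupby


def persistent_indices(outputs, n):
    """Return the indices of outputs that belong to a run of at least n equal results."""
    kept = []
    start = 0
    for _label, run in groupby(outputs):
        length = len(list(run))
        if length >= n:
            kept.extend(range(start, start + length))
        start += length
    return kept


outputs = ["fault-free"] * 3 + ["fault A"] + ["fault-free"] * 3
kept = persistent_indices(outputs, n=3)
```

Here the single “fault A” output at index 3 is dropped as an outlier, and the six “fault-free” samples remain available for the feedback step.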

6

W.-S. Yun et al.

Journal of Building Engineering 35 (2021) 102111

to be occupied from 6:00 to 18:00, and the data were measured every

minute. The proposed scheme was evaluated using data in summer and

winter when the cooling/heating load is large. In summer, the supply air

temperature was set to 55 ◦ F, and the indoor temperature was main­

shown in Algorithm. 3.

Algorithm. 3.

Pseudocode for the model feedback step.

tained at 72 ◦ F [35]. Fault-free data obtained from AHU-B account for

approximately 56.1% (23 days) of the summer dataset. Additionally, 18

types of fault data obtained from AHU-B account for approximately

2.4% (daily) of the summer dataset. In winter, the supply air tempera­

ture was set to 65 ◦ F, and the indoor temperature was maintained at

70 ◦ F. The supply air static pressure set point was 1.4 psi for both

summer and winter, and the supply fan was controlled accordingly. The

return fan was operated with a speed tracking control sequence (80% of

the supply fan speed) [35,36]. In winter, fault-free data accounted for

approximately 62.5% (20 days) of the dataset, and 12 types of fault data

accounted for approximately 3.1% (daily) of the dataset.

The fault types and severities to be trained by the model were

selected considering the ease of fault mimicry and time constraints

during the building commissioning process. Seven major faults in each

season were classified as defined states, and the other faults were clas­

sified as undefined states. The defined states are listed in Table 1, which

were used to evaluate the FDD performance for data from the defined

3. Scheme implementation

The proposed FDD scheme was implemented in Python with Ten­

sorFlow. The scheme implementation process consists of FDD model

building, operation unit training, and threshold setting.

3.1. Experimental data

The proposed scheme was evaluated using the experimental data of

ASHRAE 1312-RP. In 1312-RP, the major faults of the VAV system,

which is a representative AHU system, were experimentally simulated,

and the fault-free and fault data acquired from the experiment were

provided [29]. Most studies on FDD for AHU have verified model per­

formance using this data [15–17,19–22,30–33].

The 1312-RP project acquired fault-free and fault data from a facility

comprising two AHUs and eight zones. Each AHU was in charge of four

zones. AHU-A was used to acquire the fault data, and AHU-B was used to

acquire the fault-free data for comparison. These two systems were

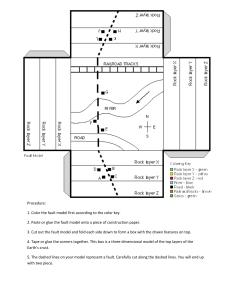

configured with the same structure, and the used AHU is shown in Fig. 4.

The AHU is largely composed of fans, a cooling coil, a heating coil, coil

control valves, various dampers, and sensors. The AHU is interconnected

through four zones and ducts, and a VAV unit is installed in individual

zones.

The experiment of 1312-RP was performed in spring, summer, and

winter. In each season, the fault states were simulated for 2–3 weeks.

The faults were simulated manually in AHU-A. AHU-B was operated in

the fault-free state for comparison. The system operation was scheduled

Table 1

Defined states.

Season

Label

Fault description

Summer

Fault 1

Fault 2

Fault 3

Fault 4

Fault 5

Fault 6

Fault 7

Fault 1

Fault 2

Fault 3

Fault 4

Fault 5

Fault 6

Fault 7

OA Damper Leak (45% Open)

OA Damper Leak (55% Open)

EA Damper Stuck (Fully Open)

Cooling Coil Valve Stuck (Fully Closed)

Cooling Coil Valve Control unstable

Return Fan at fixed speed

AHU Duct Leaking (after SF)

EA Damper Stuck (Fully Open)

EA Damper Stuck (Fully Closed)

Heating Coil Reduced Capacity (Stage 1)

Heating Coil Reduced Capacity (Stage 2)

Heating Coil Reduced Capacity (Stage 3)

OA Damper Leak (52% Open)

OA Damper Leak (62% Open)

Winter

Table 2

Divided datasets.

Classification

Training set

Validation set

Test set

a

b

Fig. 4. Configuration of the AHU for data acquisition [34].

7

Fault-free data

80% of the fault-free data for

traininga

20% of the fault-free data for

traininga

Fault-free data for testb

Fault data

Defined

states

Undefined

states

60%

–

20%

–

20%

100%

Summer: 2007/8/23–2007/8/25, Winter: 2008/1/30–2008/2/1.

Summer: 2007/8/26–2007/9/8, Winter: 2008/2/2–2008/2/15.

W.-S. Yun et al.

Journal of Building Engineering 35 (2021) 102111

Table 3

Evaluation scenarios.

Season

Scenario

Objects of evaluation

Summer

S1

S2-1

S2-2

S2-3

S2-4

S2-5

S2-6

S2-7

S2-8

S2-9

S2-10

S2-11

W1

W2-1

W2-2

W2-3

W2-4

W2-5

Defined state - Fault-free and defined faults (fault 1 to 7)

Undefined fault - OA Damper Stuck (Fully Closed)

Undefined fault - EA Damper Stuck (Fully Closed)

Undefined fault - Cooling Coil Valve Stuck (15% Open)

Undefined fault - Cooling Coil Valve Stuck (65% Open)

Undefined fault - Cooling Coil Valve Stuck (Fully Open)

Undefined fault - Cooling Coil Valve Reverse Action

Undefined fault - Heating Coil Valve Leaking (0.4 GPM)

Undefined fault - Heating Coil Valve Leaking (1.0 GPM)

Undefined fault - Heating Coil Valve Leaking (2.0 GPM)

Undefined fault - Return Fan Complete Failure

Undefined fault - AHU Duct Leaking (before SF)

Defined state - Fault-free and defined faults (fault 1 to 7)

Undefined fault - OA Damper Stuck (Fully Closed)

Undefined fault - Cooling Coil Valve Stuck (Fully Open)

Undefined fault - Heating Coil Valve Fouling (Stage 1)

Undefined fault - Heating Coil Valve Fouling (Stage 2)

Undefined fault - Cooling Coil Valve Stuck (20% Open)

Winter

Fig. 5. Loss of operation unit in the training process (summer).

Table 4

Input variables of the model.

No.

Input variables

1

2

3

4

5

6

7

8

9

10

11

12

13

Heating coil energy consumption (W)

Cooling coil energy consumption (W)

Supply fan energy consumption (W)

Return fan energy consumption (W)

Supply fan speed (%)

Return fan speed (%)

Supply air duct static pressure (psi)

Supply air flow rate (CFM)

Return air flow rate (CFM)

Outdoor air flow rate (CFM)

Exhaust air damper control signal (%)

Recirculated air damper control signal (%)

Outside air damper control signal (%)

Fig. 6. Loss of operation unit in the training process (winter).

days were used for training. The fault-free data for 14 days were clas­

sified as the test set. The training and validation sets were randomly

sampled with 80% and 20% of the fault-free data for training, respec­

tively. All the data of the undefined states were classified as test set. The

data of the defined states were randomly sampled with 60%, 20%, and

20% for the training, validation, and test sets, respectively.

state for which the model was trained. The other faults were undefined

states, which were used to evaluate the search performance of data from

the undefined state for which the model was not trained.

The data were used after preprocessing, which was performed as

described in Section 2.1.1. The threshold for the steady-state detection

was set by referring to the slope of the steady-state section. The selected

reference section for summer was 2008/9/4 14:00-18:00 [22] when the

system was operated in the steady-state, and the selected reference

section for winter was 2008/2/17 14:00-18:00. The threshold was set to

three times the standard deviation of the sum of the slopes under

steady-state conditions [22].

The filtered data were divided into three datasets after standardi­

zation. The first was the training set, which was used for model training.

The second was the validation set, which was used to check whether the

training was performed appropriately by determining the occurrence of

overfitting in the model training process. The third was the test set,

which was used to evaluate the performance of the trained model.

The data were classified as shown in Table 2. Considering the time

constraint in the commissioning process, the fault-free data for three
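The standardization applied before the data are divided (Eqs. (1)–(3) in Section 2.1.1) can be sketched for a single input variable as follows. This is a minimal illustration with a hypothetical function name, `standardize`, and toy values; it uses the sample standard deviation (N − 1 denominator) as in Eq. (3).

```python
import statistics


def standardize(column):
    """Standardize one feature column to zero mean and unit sample standard deviation."""
    mean = statistics.mean(column)
    sigma = statistics.stdev(column)  # N - 1 denominator, as in Eq. (3)
    return [(value - mean) / sigma for value in column]


# Toy supply fan speed readings in percent.
standardized = standardize([60.0, 70.0, 80.0])
```

When the model is deployed, the mean and standard deviation would presumably be taken from the training data and reused for new samples; the source does not state this explicitly, so treat it as an assumption.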

3.2. Model building

The first step in building the model is to select the input variables.

When many types of input variables are needed for the model, the

introduction cost and model complexity increase because of the

increased number of sensors to be installed. Therefore, the appropriate

input variables should be selected to minimize the number of required

input variables while securing the accuracy of the model. In this study,

13 input variables were selected as shown in Table 4 based on the usual

sensors installed in AHU [33] and potential sensors [22] that can be

installed for FDD.

Next, the hyperparameters required for implementing the SAE of the

operation unit were set heuristically. The input layer consisted of 13

nodes, which was the same as the number of types of input variables.

Table 5

Hyperparameters for training.

Hyperparameter

Setting

Optimizer

Batch size

Epoch

Weight (wa ) of the loss of the decoder

RMSProp [40]

128

Early stopping

1

Weight (wb ) of the loss of the classifier

Fig. 7. Reconstruction error distributions of the training set and validation

set (summer).

1

8

W.-S. Yun et al.

Journal of Building Engineering 35 (2021) 102111

summer and winter, respectively. S2 and W2 are the scenarios for

verifying the first evaluation factor (is the search performance for the

undefined state secured?). S2 comprises S2-1 to S2-11, which include

data for the 11 undefined states of summer. W2 comprises W2-1 to W25, which include data for five undefined states of winter.

4.1.2. Performance evaluation metrics

The performance of the proposed scheme was measured in terms of

the precision, sensitivity, and F1 score given as

Fig. 8. Reconstruction error distributions of the training set and validation

set (winter).

precision =

TP

TP + FP

(7)

The encoder consisted of 300 nodes, and the rectified linear unit (ReLu)

was used as the activation function of the encoder layer. In addition,

batch-normalization and dropout were applied for generalization per­

formance [37,38]. The classifier consisted of eight nodes, and each node

denoted the fault-free state and seven faults. The softmax function [39]

was applied as the activation function of the classifier. The decoder

comprised 13 nodes, which is the same number of the input variable

types, and each node represents the reconstructed input variable. The

linear unit was applied as the activation function of the decoder.

sensitivity =

TP

TP + FN

(8)

precision⋅sensitivity

F1score = 2⋅

precision + sensitivity

(9)

where true positive (TP) is the case in which the model correctly classifies actual positive data, false positive (FP) is the case in which actual negative data are misclassified as positive by the model, and false negative (FN) is the case in which actual positive data are misclassified as negative by the model. Positive denotes the data with the targeted label, while negative denotes the data with all other labels [21]. Precision is the ratio of data samples that are actually positive among the samples that the model determined to be positive. Sensitivity is the ratio of samples that the model determined to be positive among the actually positive data. The F1 score is the harmonic mean of precision and sensitivity; it summarizes model performance in a single number and remains informative when the number of samples per category is unbalanced.
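As an illustration of these definitions, the following minimal sketch computes one-vs-rest precision, sensitivity, and F1 score for each label and then averages them over the labels. The function names and the toy labels are hypothetical and are not taken from the study; only the Python standard library is assumed.

```python
from collections import Counter

def per_class_metrics(y_true, y_pred):
    """One-vs-rest precision, sensitivity, and F1 score for each label."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted as p, but the sample is not p
            fn[t] += 1  # the sample is t, but the model missed it
    metrics = {}
    for c in labels:
        precision = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        sensitivity = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        denom = precision + sensitivity
        f1 = 2 * precision * sensitivity / denom if denom else 0.0
        metrics[c] = (precision, sensitivity, f1)
    return metrics

def macro_mean(metrics):
    """Mean of each index over all labels."""
    n = len(metrics)
    return tuple(sum(m[i] for m in metrics.values()) / n for i in range(3))

# Toy data (illustrative only, not from ASHRAE RP-1312)
y_true = ["fault-free", "fault-free", "fault 1", "fault 1", "fault 2", "fault 2"]
y_pred = ["fault-free", "fault 1", "fault 1", "fault 1", "fault 2", "fault-free"]

metrics = per_class_metrics(y_true, y_pred)
macro = macro_mean(metrics)
for label, (p, s, f1) in metrics.items():
    print(f"{label}: precision={p:.3f} sensitivity={s:.3f} F1={f1:.3f}")
print("mean:", tuple(round(v, 3) for v in macro))
```

Note that the mean of the per-label F1 scores generally differs from the F1 score computed from the mean precision and mean sensitivity.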

In scenarios S1 and W1, the FDD performance of the model for the data of defined states was evaluated by precision, sensitivity, and F1 score. Higher values of these three indices indicate better model performance. The final performance of the model was evaluated using the mean of each index over all labels. In scenarios S2 and W2, sensitivity was used to evaluate whether the model can find undefined states. A higher sensitivity means that the model finds the undefined states more accurately (higher search performance).

3.3. Operation unit training

The operation unit was trained using the training process proposed in Section 2.2.1. Separate models were trained for the two operating modes of the AHU system: the cooling-mode model was trained using the summer data, and the heating-mode model was trained using the winter data. The hyperparameters required for training were set empirically using a validation set, as shown in Table 5.
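To make the role of the two loss weights in Table 5 concrete, the sketch below combines a decoder loss and a classifier loss into one training objective. It assumes a mean squared error for the decoder and a categorical cross-entropy for the classifier; the exact loss definitions belong to Section 2.2.1 and are not reproduced here, so these choices and all function names are illustrative assumptions.

```python
import math

def mse(x, x_hat):
    """Mean squared reconstruction error of the decoder (assumed form)."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

def cross_entropy(one_hot, probs, eps=1e-12):
    """Categorical cross-entropy of the classifier (assumed form)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))

def sae_loss(x, x_hat, one_hot, probs, w_a=1.0, w_b=1.0):
    """Weighted sum of the decoder and classifier losses (w_a = w_b = 1 in Table 5)."""
    return w_a * mse(x, x_hat) + w_b * cross_entropy(one_hot, probs)

# Toy sample with three input variables and three classes (illustrative only)
x = [0.2, 0.5, 0.9]
x_hat = [0.1, 0.5, 1.0]
label = [0, 1, 0]
probs = [0.1, 0.8, 0.1]

loss = sae_loss(x, x_hat, label, probs)
print(round(loss, 4))
```

With both weights equal to 1, the reconstruction and classification terms contribute on the same scale; changing either weight shifts the balance between reconstructing the inputs and classifying the fault.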

Figs. 5 and 6 illustrate the operation unit training results for summer and winter, respectively. They depict the losses of the training and validation sets at each epoch. As the training progressed, the losses of both sets approached zero, indicating that the operation unit was trained appropriately.

4.1.3. Control model

The FDD performance of the model for the data of defined states was compared with that of a model based on a support vector machine (SVM) and another based on an ANN. The SVM-based model performed FDD using an SVM with the radial basis function (RBF) kernel. Hyperparameter tuning for the SVM-based model was done through grid search. The ANN-based model used a multi-layer perceptron with one hidden layer comprising 300 nodes. An exponential linear unit (ELU) was used as the activation function of the hidden layer of the ANN. A softmax function was used as the activation function of the output layer to perform multiclass classification.

3.4. Threshold setting

The threshold was set after training of the operation unit by applying the three-sigma rule to the reconstruction error of the training set.

Figs. 7 and 8 show the reconstruction error distribution and threshold of each model. The solid line indicates the reconstruction error distribution of the training set, expressed as a kernel density estimate. The dashed line indicates the reconstruction error distribution of the validation set. The dash-dot line indicates the threshold. Most of the validation set reconstruction errors were lower than the threshold. This means that the validation set consisted of data of defined states, for which the FDD model can provide significant inferences.
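A minimal sketch of this threshold-setting step follows. It assumes that the three-sigma rule is applied as an upper bound, that is, the mean of the training-set reconstruction errors plus three population standard deviations; the source states only that the three-sigma rule was used, so this exact form, the function names, and the toy error values are assumptions.

```python
import statistics

def three_sigma_threshold(train_errors):
    """Upper threshold: mean + 3 * standard deviation of the training errors."""
    mu = statistics.fmean(train_errors)
    sigma = statistics.pstdev(train_errors)  # population std (an assumption)
    return mu + 3 * sigma

def is_defined(recon_error, threshold):
    """A sample is treated as a defined state if its error does not exceed the threshold."""
    return recon_error <= threshold

# Toy reconstruction errors of a training set (illustrative only)
train_errors = [0.010, 0.012, 0.011, 0.009, 0.013, 0.010, 0.012, 0.011]
threshold = three_sigma_threshold(train_errors)

print(round(threshold, 6))
print(is_defined(0.012, threshold), is_defined(0.050, threshold))
```

Validation samples whose reconstruction errors fall below such a threshold would be treated as data of defined states, which matches the reading of Figs. 7 and 8.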

4. Performance evaluation

The performance of the proposed FDD scheme was evaluated in this

section. The following two evaluation factors were set considering the

purpose of the model: 1) is the search performance for the undefined

state secured? 2) is the FDD performance for the defined state secured?

4.1. Evaluation method

4.1.1. Evaluation scenario

The scenario was established as shown in Table 3 to evaluate the

model. S1 and W1 are the scenarios for verifying the second evaluation

factor (is the FDD performance for the defined state secured?). S1 and

W1 include the data of the types of fault-free and defined states for

Fig. 9. Sensitivity for the undefined states in summer (scenario S2).


no fault, and “Fault 1” to “Fault 7” indicate the trained faults. The last row gives the mean of each index over all labels. Every result is the mean over 30 repeated tests. For every index, a value close to 1 indicates good performance, and a value close to 0 indicates poor performance.

In terms of precision, the proposed scheme generally performed better than the control models. In particular, it showed considerably higher precision than the control models for faults 5 and 7. The mean precision was 0.990, which means that 99% of the labels predicted by the proposed scheme were correct. The mean sensitivity was 0.969, which means that the proposed scheme diagnosed the correct fault type with a probability of approximately 97% when defined states occurred. The mean F1 score was 0.978, which is higher than those of the control models by approximately 0.1 because of the relatively high precision. This means that the proposed scheme showed better FDD performance than the control models for the defined states.

Table 7 shows the FDD results for scenario W1. The proposed scheme showed precision at least as high as that of the control models for every label except faults 3 and 5. The performance difference was particularly large for fault 6. The mean precision was 0.965, which means that 96.5% of the labels predicted by the proposed scheme were correct. The mean sensitivity was 0.971, which means that the proposed scheme correctly diagnosed the data of defined states with a probability of approximately 97% on average. The mean F1 score was 0.968, which is higher than those of the control models. The evaluation results for scenarios S1 and W1 showed that the proposed scheme had a higher FDD performance than the control models for defined states, confirming that the second objective was achieved.

Fig. 10. Sensitivity for the undefined states in winter (scenario W2).

4.2. Evaluation results

4.2.1. Search performance for undefined states

The first objective of the proposed scheme is to secure the search performance for the undefined states. Hence, scenarios S2 and W2, composed of undefined states, were established, and the evaluation results are shown below. Figs. 9 and 10 illustrate the sensitivity of the FDD model for undefined states in summer and winter, respectively. Here, sensitivity is the ratio of data samples that the FDD model determined to be in an undefined state among the data samples that actually came from undefined states. A higher sensitivity means that the model finds the undefined states more accurately (higher search performance).
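The sensitivity for undefined states can be sketched as follows, together with the gating step in which a fault label is returned only when the reconstruction error stays within the threshold. The function names, labels, threshold, and error values are hypothetical and serve only to illustrate the calculation.

```python
def gate_diagnosis(recon_error, class_probs, labels, threshold):
    """Return a fault label only when the input looks like a defined state."""
    if recon_error > threshold:
        return "undefined"  # passed on as feedback instead of being diagnosed
    best = max(range(len(class_probs)), key=class_probs.__getitem__)
    return labels[best]

def undefined_sensitivity(recon_errors, threshold):
    """Share of truly undefined samples that are flagged as undefined."""
    flagged = sum(1 for e in recon_errors if e > threshold)
    return flagged / len(recon_errors)

# Toy values (illustrative only)
labels = ["fault-free", "fault 1", "fault 2"]
threshold = 0.015
undefined_errors = [0.031, 0.045, 0.012, 0.052, 0.040]

sens = undefined_sensitivity(undefined_errors, threshold)
decision_a = gate_diagnosis(0.010, [0.1, 0.7, 0.2], labels, threshold)
decision_b = gate_diagnosis(0.040, [0.1, 0.7, 0.2], labels, threshold)
print(sens, decision_a, decision_b)
```

In this toy case, four of the five undefined samples exceed the threshold, so the sensitivity is 0.8; the one sample below the threshold would be passed to the classifier and diagnosed as if it were a defined state.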

For every scenario, the proposed scheme showed a sensitivity of 0.9 or higher. The mean sensitivities for summer and winter were 0.987 and 0.956, respectively. This means that the proposed scheme identified the data of undefined states in summer and winter with probabilities of approximately 98.7% and 95.6%, respectively. This confirms that the proposed scheme achieved the first objective.

5. Conclusion

In this paper, a data-driven FDD scheme that considers undefined states was proposed to enable reliable maintenance of AHUs. The proposed scheme was designed to determine, using the SAE, whether the NN-based FDD model can make significant inferences for the input variables. In the proposed scheme, the FDD model performs fault

4.2.2. FDD performance for defined states

The second objective of the proposed scheme is to achieve FDD

performance for the defined states. Thus, scenarios S1 and W1 were

established, and the evaluation results are as follows. Table 6 presents

the FDD result for scenario S1. “Fault-free” indicates a normal state with

Table 6
Diagnosis result for defined states in summer (scenario S1).

Categories    Precision                Sensitivity              F1 score
              SVM     ANN     SAE^a    SVM     ANN     SAE      SVM     ANN     SAE
Fault-free    1.000   1.000   1.000    0.956   0.965   0.935    0.977   0.982   0.966
Fault 1       0.988   0.991   0.991    0.999   0.997   0.994    0.993   0.994   0.993
Fault 2       0.982   0.888   1.000    1.000   1.000   0.993    0.991   0.933   0.996
Fault 3       1.000   1.000   1.000    1.000   1.000   0.996    1.000   1.000   0.998
Fault 4       1.000   0.999   1.000    1.000   1.000   0.973    1.000   0.999   0.986
Fault 5       0.426   0.178   0.996    0.945   0.956   0.891    0.470   0.299   0.939
Fault 6       1.000   1.000   1.000    1.000   1.000   0.976    1.000   1.000   0.988
Fault 7       0.387   0.446   0.933    0.995   0.996   0.995    0.517   0.586   0.961
Mean          0.848   0.813   0.990    0.987   0.989   0.969    0.868   0.849   0.978

^a SAE: FDD model of the proposed scheme.

Table 7
Diagnosis result for the defined states in winter (scenario W1).

Categories    Precision                Sensitivity              F1 score
              SVM     ANN     SAE      SVM     ANN     SAE      SVM     ANN     SAE
Fault-free    1.000   1.000   1.000    0.989   0.944   0.981    0.994   0.971   0.990
Fault 1       1.000   1.000   1.000    0.997   1.000   0.997    0.999   1.000   0.998
Fault 2       1.000   1.000   1.000    0.999   1.000   0.992    1.000   1.000   0.996
Fault 3       0.961   0.863   0.917    0.949   0.948   0.912    0.955   0.897   0.914
Fault 4       0.942   0.775   0.957    1.000   1.000   0.999    0.965   0.870   0.978
Fault 5       0.932   0.764   0.885    0.941   0.935   0.902    0.936   0.814   0.893
Fault 6       0.611   0.378   0.974    0.987   0.989   0.987    0.751   0.527   0.980
Fault 7       0.964   0.584   0.988    0.998   0.999   0.998    0.977   0.711   0.993
Mean          0.926   0.796   0.965    0.982   0.977   0.971    0.947   0.849   0.968


diagnosis only when the reconstruction error of the SAE is within the error tolerance limit. Otherwise, feedback is provided to the FDD model through retraining on those inputs.

Scenario-based performance evaluation was performed using the experimental data of ASHRAE RP-1312. The proposed scheme was compared with well-known data-driven approaches to test its fault diagnosis capabilities. The FDD model correctly identified the fault data of undefined states in summer and winter with probabilities of 98.7% and 95.6%, respectively. This means that the proposed scheme can distinguish between the data of undefined and defined states with high performance. For the fault-free and fault data of the defined states, the proposed model showed F1 scores of 0.978 and 0.968 in summer and winter, respectively. This indicates that the proposed scheme has a higher FDD performance for the defined states than the control models. Therefore, the proposed scheme can perform reliable FDD on the AHU system by taking undefined situations into account, and it is expected to facilitate the maintenance of AHU systems.

Authorship statement

Manuscript title: A data-driven fault detection and diagnosis scheme for air handling units in building HVAC systems considering undefined states

All persons who meet authorship criteria are listed as authors, and all authors certify that they have participated sufficiently in the work to take public responsibility for the content, including participation in the concept, design, analysis, writing, or revision of the manuscript. Furthermore, each author certifies that this material or similar material has not been and will not be submitted to or published in any other publication before its appearance in the Hong Kong Journal of Occupational Therapy.

Authorship contributions

Please indicate the specific contributions made by each author. The name of each author must appear at least once in each of the three categories below.

Category 1
Conception and design of study: Hyuncheol Seo, Won-Hwa Hong
Acquisition of data: Hyuncheol Seo, Woo-Seung Yun
Analysis and/or interpretation of data: Woo-Seung Yun

Category 2
Drafting the manuscript: Woo-Seung Yun, Hyuncheol Seo
Revising the manuscript critically for important intellectual content: Hyuncheol Seo, Won-Hwa Hong

Category 3
Approval of the version of the manuscript to be published (the names of all authors must be listed): Hyuncheol Seo, Woo-Seung Yun, Won-Hwa Hong

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (No. 2020R1C1C1007127). This work was supported by the Human Resources Program in Energy Technology of the Korea Institute of Energy Technology Evaluation and Planning (KETEP) granted financial resource from the Ministry of Trade, Industry & Energy, Republic of Korea (No. 20204010600060).

References

[1] P. Beiter, M. Elchinger, T. Tian, 2016 Renewable Energy Data Book (No. DOE/GO-102016-4904), National Renewable Energy Lab. (NREL), Golden, CO (United States), 2017, https://doi.org/10.2172/1466900.
[2] S. Friedrich, Energy efficiency in buildings in EU countries, CESifo DICE Rep. 11 (2) (2013) 57–59.
[3] L. Pérez-Lombard, J. Ortiz, C. Pout, A review on buildings energy consumption information, Energy Build. 40 (3) (2008) 394–398, https://doi.org/10.1016/j.enbuild.2007.03.007.
[4] Korea Energy Economics Institute, 2017 energy consumption survey. http://www.keei.re.kr/keei/download/ECS2017.pdf, 2018.
[5] A. Beghi, R. Brignoli, L. Cecchinato, G. Menegazzo, M. Rampazzo, A data-driven approach for fault diagnosis in HVAC chiller systems, in: 2015 IEEE Conference on Control Applications (CCA), IEEE, 2015, pp. 966–971, https://doi.org/10.1109/CCA.2015.7320737.
[6] D. Chakraborty, H. Elzarka, Early detection of faults in HVAC systems using an XGBoost model with a dynamic threshold, Energy Build. 185 (2019) 326–344, https://doi.org/10.1016/j.enbuild.2018.12.032.
[7] A. Beghi, R. Brignoli, L. Cecchinato, G. Menegazzo, M. Rampazzo, F. Simmini, Data-driven fault detection and diagnosis for HVAC water chillers, Contr. Eng. Pract. 53 (2016) 79–91, https://doi.org/10.1016/j.conengprac.2016.04.018.
[8] J. Qin, S. Wang, A fault detection and diagnosis strategy of VAV air-conditioning systems for improved energy and control performances, Energy Build. 37 (10) (2005) 1035–1048, https://doi.org/10.1016/j.enbuild.2004.12.011.
[9] T. Downey, J. Proctor, What can 13,000 air conditioners tell us, Proc. 2002 ACEEE Summer Study on Energy Efficiency Build. 1 (2002) 53–67.
[10] K.W. Roth, D. Westphalen, M.Y. Feng, P. Llana, L. Quartararo, Energy Impact of Commercial Building Controls and Performance Diagnostics: Market Characterization, Energy Impact of Building Faults and Energy Savings Potential, Prepared by TAIX LLC for the US Department of Energy, 2005.
[11] N.E. Fernandez, S. Katipamula, W. Wang, Y. Xie, M. Zhao, C.D. Corbin, Impacts of Commercial Building Controls on Energy Savings and Peak Load Reduction, PNNL, Richland, WA, 2017, https://doi.org/10.2172/1400347.
[12] S. Katipamula, M.R. Brambley, Methods for fault detection, diagnostics, and prognostics for building systems—a review, part I, HVAC&R Res. 11 (1) (2005) 3–25, https://doi.org/10.1080/10789669.2005.10391123.
[13] Y. Zhao, T. Li, X. Zhang, C. Zhang, Artificial intelligence-based fault detection and diagnosis methods for building energy systems: advantages, challenges and the future, Renew. Sustain. Energy Rev. 109 (2019) 85–101, https://doi.org/10.1016/j.rser.2019.04.021.
[14] K. Yoshida, M. Inui, T. Yairi, K. Machida, M. Shioya, Y. Masukawa, Identification of causal variables for building energy fault detection by semi-supervised LDA and decision boundary analysis, in: 2008 IEEE International Conference on Data Mining Workshops, IEEE, 2008, pp. 164–173, https://doi.org/10.1109/ICDMW.2008.44.
[15] Y. Zhao, J. Wen, F. Xiao, X. Yang, S. Wang, Diagnostic Bayesian networks for diagnosing air handling units faults–part I: faults in dampers, fans, filters and sensors, Appl. Therm. Eng. 111 (2017) 1272–1286, https://doi.org/10.1016/j.applthermaleng.2015.09.121.
[16] Y. Zhao, J. Wen, S. Wang, Diagnostic Bayesian networks for diagnosing air handling units faults–Part II: faults in coils and sensors, Appl. Therm. Eng. 90 (2015) 145–157, https://doi.org/10.1016/j.applthermaleng.2015.07.001.
[17] K. Yan, C. Zhong, Z. Ji, J. Huang, Semi-supervised learning for early detection and diagnosis of various air handling unit faults, Energy Build. 181 (2018) 75–83, https://doi.org/10.1016/j.enbuild.2018.10.016.
[18] K. Yan, J. Huang, W. Shen, Z. Ji, Unsupervised learning for fault detection and diagnosis of air handling units, Energy Build. 210 (2020), 109689, https://doi.org/10.1016/j.enbuild.2019.109689.
[19] D. Li, Y. Zhou, G. Hu, C.J. Spanos, Identifying unseen faults for smart buildings by incorporating expert knowledge with data, IEEE Trans. Autom. Sci. Eng. 16 (3) (2018) 1412–1425, https://doi.org/10.1109/TASE.2018.2876611.
[20] Y. Yan, P.B. Luh, K.R. Pattipati, Fault diagnosis of components and sensors in HVAC air handling systems with new types of faults, IEEE Access 6 (2018) 21682–21696, https://doi.org/10.1109/ACCESS.2018.2806373.
[21] R. Yan, Z. Ma, Y. Zhao, G. Kokogiannakis, A decision tree based data-driven diagnostic strategy for air handling units, Energy Build. 133 (2016) 37–45, https://doi.org/10.1016/j.enbuild.2016.09.039.
[22] L. Shun, A Model-Based Fault Detection and Diagnostic Methodology for Secondary HVAC Systems, Doctoral dissertation, Drexel University, 2009, http://hdl.handle.net/1860/3136.
[23] W.Y. Lee, J.M. House, N.H. Kyong, Subsystem level fault diagnosis of a building’s air-handling unit using general regression neural networks, Appl. Energy 77 (2) (2004) 153–170, https://doi.org/10.1016/S0306-2619(03)00107-7.
[24] L. Le, A. Patterson, M. White, Supervised autoencoders: improving generalization performance with unsupervised regularizer. Advances in Neural Information Processing Systems, 2018, pp. 107–117.
[25] A. Borghesi, A. Bartolini, M. Lombardi, M. Milano, L. Benini, A semisupervised autoencoder-based approach for anomaly detection in high performance computing systems, Eng. Appl. Artif. Intell. 85 (2019) 634–644, https://doi.org/10.1016/j.engappai.2019.07.008.
[26] Y. Ho, S. Wookey, The real-world-weight cross-entropy loss function: modeling the costs of mislabeling, IEEE Access 8 (2019) 4806–4813, https://doi.org/10.1109/ACCESS.2019.2962617.
[27] L. Prechelt, Early stopping-but when?, in: Neural Networks: Tricks of the Trade, Springer, Berlin, Heidelberg, 1998, pp. 55–69, https://doi.org/10.1007/3-540-49430-8_3.
[28] J. Duignan, A Dictionary of Business Research Methods, Oxford University Press, 2016.
[29] J. Wen, S. Li, Tools for Evaluating Fault Detection and Diagnostic Methods for Air-Handling Units, ASHRAE 1312-RP, 2011.
[30] M. Yuwono, Y. Guo, J. Wall, J. Li, S. West, G. Platt, S.W. Su, Unsupervised feature selection using swarm intelligence and consensus clustering for automatic fault detection and diagnosis in heating ventilation and air conditioning systems, Appl. Soft Comput. 34 (2015) 402–425, https://doi.org/10.1016/j.asoc.2015.05.030.
[31] C. Zhong, K. Yan, Y. Dai, N. Jin, B. Lou, Energy efficiency solutions for buildings: automated fault diagnosis of air handling units using generative adversarial networks, Energies 12 (3) (2019) 527, https://doi.org/10.3390/en12030527.
[32] S. Li, J. Wen, A model-based fault detection and diagnostic methodology based on PCA method and wavelet transform, Energy Build. 68 (2014) 63–71, https://doi.org/10.1016/j.enbuild.2013.08.044.
[33] S. Li, J. Wen, Application of pattern matching method for detecting faults in air handling unit system, Autom. Construct. 43 (2014) 49–58, https://doi.org/10.1016/j.autcon.2014.03.002.
[34] B.A. Price, T.F. Smith, Development and Validation of Optimal Strategies for Building HVAC Systems. Dept. Mech. Eng., Univ. Iowa, Tech. Rep. ME-TEF-03-001, Iowa City, IA, USA, 2003.
[35] S. Li, J. Wen, Description of Fault Test in Summer of 2007. ASHRAE 1312 Report, 2007.
[36] S. Li, J. Wen, Description of Fault Test in Winter of 2008. ASHRAE 1312 Report, 2008.
[37] S. Ioffe, C. Szegedy, Batch normalization: accelerating deep network training by reducing internal covariate shift, Proc. 32nd Int. Confer. Machine Learn. PMLR 37 (2015) 448–456.
[38] N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, R. Salakhutdinov, Dropout: a simple way to prevent neural networks from overfitting, J. Mach. Learn. Res. 15 (1) (2014) 1929–1958.
[39] I. Goodfellow, Y. Bengio, A. Courville, Deep Learning, MIT Press, Cambridge, MA, 2016.
[40] T. Tieleman, G. Hinton, Lecture 6.5-rmsprop: Divide the Gradient by a Running Average of its Recent Magnitude. COURSERA: Neural Networks for Machine Learning, 2012.