Parallel Computing: Fibonacci, Protein Folding, Matrix Multiplication

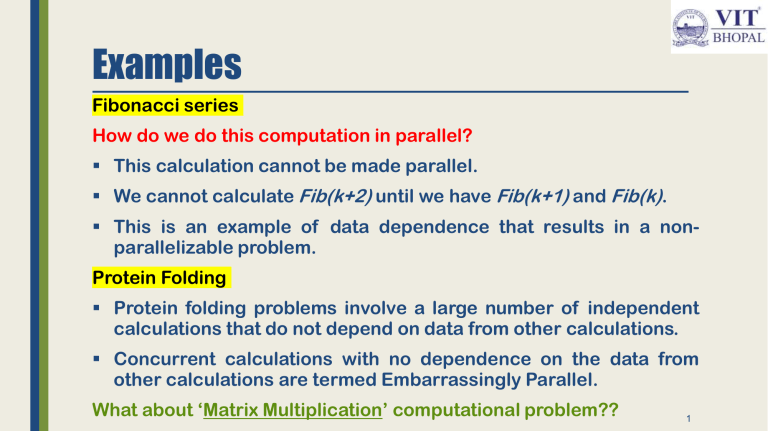

Examples

Fibonacci series

How do we do this computation in parallel?

• Computed term by term from the recurrence, this calculation cannot be made parallel: we cannot calculate Fib(k+2) until we have Fib(k+1) and Fib(k).
• This is an example of a data dependence that results in a nonparallelizable problem.

Protein Folding

• Protein folding problems involve a large number of independent calculations that do not depend on data from other calculations.
• Concurrent calculations with no dependence on the data from other calculations are termed Embarrassingly Parallel.

What about the ‘Matrix Multiplication’ computational problem?

Time complexity analysis: sequential

• Matrix addition: for two matrices A and B of size m × n, the time complexity is O(mn).
• Matrix multiplication: given A of size m × p and B of size p × n, the time complexity of standard matrix multiplication is O(mnp).

Parallel Mode - “Practical MATMUL”

• Using P processors, we can split the task so that each processor handles a fraction of the matrix multiplication. For square n × n matrices (m = n = p), the time complexity then becomes O(n^3 / P).
• Note: you cannot keep reducing the time indefinitely just by adding more processors, because of communication overhead, synchronization, and other factors.

Distributed Memory System

• Clusters (most popular)
• A collection of commodity systems.
• Connected by a commodity interconnection network.
• Nodes of a cluster are individual computers joined by a communication network.
• Also known as hybrid systems.

CPU vs GPU: A View

CPUs: Latency Oriented Design

GPUs: Throughput Oriented Design

Work Distribution Strategies

Use case: TensorFlow

1. tf.distribute.MirroredStrategy
2. tf.distribute.Strategy
3. tf.distribute.experimental.MultiWorkerMirroredStrategy
4. tf.distribute.experimental.CentralStorageStrategy
5. tf.distribute.experimental.ParameterServerStrategy
6. tf.distribute.TPUStrategy
7. tf.distribute.experimental.experimental_distribute_datasets_from_function

Task: Explore how each strategy works and what the use cases for each strategy are in ML.

Parallelism Scalability

Load Balance

• The total amount of time to complete a parallel job is limited by the thread that takes the longest to finish.
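To make the load-balance point concrete, here is a minimal Python sketch that compares two ways of assigning tasks to workers. It is an illustration under stated assumptions, not a scheduler from the slides: the task durations, the worker count, and the helper names `block_assignment` and `greedy_assignment` are all hypothetical. The job's finish time is modeled as the largest per-worker total.

```python
import heapq

def block_assignment(costs, workers):
    """Static split: contiguous chunks of tasks, one chunk per worker.

    Returns the total work assigned to each worker.
    """
    chunk = max(1, -(-len(costs) // workers))  # ceiling division
    return [sum(costs[i:i + chunk]) for i in range(0, len(costs), chunk)]

def greedy_assignment(costs, workers):
    """Hand each task, largest first, to the currently least-loaded worker.

    Returns the per-worker totals in ascending order.
    """
    loads = [0] * workers
    heapq.heapify(loads)
    for cost in sorted(costs, reverse=True):
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + cost)
    return sorted(loads)

# Hypothetical task durations (arbitrary time units) and worker count.
costs = [8, 7, 6, 5, 4, 3, 2, 1]
workers = 4

block = block_assignment(costs, workers)
greedy = greedy_assignment(costs, workers)

# The job finishes only when the slowest worker finishes.
print("block split loads: ", block, "finish time:", max(block))
print("greedy split loads:", greedy, "finish time:", max(greedy))
```

Both assignments perform the same total work (36 units), but the block split leaves one worker with 15 units while the others sit idle, whereas the greedy split gives every worker 9 units. The greedy heuristic is only one possible balancing choice and does not guarantee an optimal assignment in general.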