PACS AND IMAGING

INFORMATICS

BASIC PRINCIPLES

AND APPLICATIONS

Second Edition

H. K. Huang, D.Sc., FRCR (Hon.), FAIMBE

Professor of Radiology and Biomedical Engineering

University of Southern California

Chair Professor of Medical Informatics

The Hong Kong Polytechnic University

Honorary Professor, Shanghai Institute of Technical Physics

The Chinese Academy of Sciences

A John Wiley & Sons, Inc., Publication

Copyright © 2010 by John Wiley & Sons, Inc. All rights reserved

Published by John Wiley & Sons, Inc., Hoboken, New Jersey

Published simultaneously in Canada

No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 750-4470, or on the web at www.copyright.com. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201) 748-6008, or online at www.wiley.com/go/permissions.

Limit of Liability/Disclaimer of Warranty: While the publisher and author have used their best efforts in preparing this book, they make no representations or warranties with respect to the accuracy or completeness of the contents of this book and specifically disclaim any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created or extended by sales representatives or written sales materials. The advice and strategies contained herein may not be suitable for your situation. You should consult with a professional where appropriate. Neither the publisher nor author shall be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages.

For general information on our other products and services or for technical support, please contact our Customer Care Department within the United States at (800) 762-2974, outside the United States at (317) 572-3993 or fax (317) 572-4002.

Wiley also publishes its books in a variety of electronic formats. Some content that appears in print may not be available in electronic formats. For more information about Wiley products, visit our web site at www.wiley.com.

Library of Congress Cataloging-in-Publication Data:

Huang, H. K., 1939–

PACS and imaging informatics : basic principles and applications / H.K. Huang. – 2nd ed. rev.

p. ; cm.

Includes bibliographical references and index.

ISBN 978-0-470-37372-9 (cloth)

1. Picture archiving and communication systems in medicine. 2. Imaging systems in medicine. I. Title.

II. Title: Picture archiving and communication system and imaging informatics.

[DNLM: 1. Radiology Information Systems. 2. Diagnostic Imaging. 3. Medical Records Systems,

Computerized. WN 26.5 H874p 2010]

R857.P52H82 2010

616.07'54–dc22

2009022463

Printed in the United States of America

10 9 8 7 6 5 4 3 2 1

To my wife, Fong, for her support and understanding,

my daughter, Cammy, for her growing wisdom and ambitious spirit, and my

grandchildren Tilden and Calleigh, for their calming innocence.

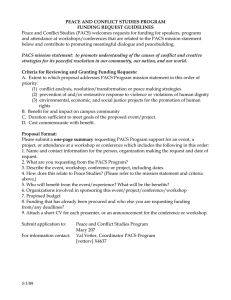

INTRODUCTION

Chapter 1: Introduction

PART I MEDICAL IMAGING PRINCIPLES

Chapter 2: Imaging Basics

Chapter 3: 2-D Images

Chapter 4: 3-D Images

Chapter 5: 4-D Images & Image Fusion

Chapter 6: Compression

PART II PACS FUNDAMENTALS

Chapter 7: PACS Fundamentals

Chapter 8: Communication & Networks

Chapter 9: DICOM, HL7 and IHE

Chapter 10: Image/Data Acquisition Gateway

Chapter 11: PACS Server & Archive

Chapter 12: Display Workstation

Chapter 13: HIS/RIS/PACS Integration & ePR

PART III PACS OPERATION

Chapter 14: Data Management & Web-Based PACS

Chapter 15: Telemedicine & Teleradiology

Chapter 16: Fault-Tolerant & Enterprise PACS

Chapter 17: Image/Data Security

Chapter 18: Implementation & Evaluation

Chapter 19: Clinical Experience/Pitfalls/Bottlenecks

PART IV PACS- AND DICOM-BASED IMAGING INFORMATICS

Chapter 20: Medical Imaging Informatics

Chapter 21: Computing & Data Grid

Chapter 22: Multimedia ePR

Chapter 23: DICOM RT ePR

Chapter 24: Image-Assisted Surgery ePR

Chapter 25: Image-Based CAD

Chapter 26: CAD-PACS Integration

Chapter 27: Biometric Tracking

Chapter 28: Education/Learning

CONTENTS IN BRIEF

FOREWORD

PREFACE

PREFACE OF THE LAST EDITION

ACKNOWLEDGMENTS

H. K. HUANG SHORT BIOGRAPHY

LIST OF ACRONYMS

1. Introduction

PART I MEDICAL IMAGING PRINCIPLES

2. Digital Medical Image Fundamentals

3. Two-Dimensional Medical Imaging

4. Three-Dimensional Medical Imaging

5. Four-Dimensionality, Multimodality, and Fusion of Medical Imaging

6. Image Compression

PART II PACS FUNDAMENTALS

7. Picture Archiving and Communication System Components and Workflow

8. Communications and Networking

9. Industrial Standards (HL7 and DICOM) and Integrating the Healthcare Enterprise (IHE)

10. Image Acquisition Gateway

11. PACS Server and Archive

12. Display Workstation

13. Integration of HIS, RIS, PACS, and ePR

PART III PACS OPERATION

14. PACS Data Management and Web-Based Image Distribution

15. Telemedicine and Teleradiology

16. Fault-Tolerant PACS and Enterprise PACS

17. Image/Data Security

18. PACS Clinical Implementation, Acceptance, and Evaluation

19. PACS Clinical Experience, Pitfalls, and Bottlenecks

PART IV PACS- AND DICOM-BASED IMAGING INFORMATICS

20. DICOM-Based Medical Imaging Informatics

21. Data Grid for PACS and Medical Imaging Informatics

22. Multimedia Electronic Patient Record (ePR) System

23. Multimedia Electronic Patient Record System in Radiation Therapy

24. Multimedia Electronic Patient Record (ePR) System for Image-Assisted Spinal Surgery

25. Computer-Aided Diagnosis (CAD) and Image-Guided Decision Support

26. Integration of Computer-Aided Diagnosis (CAD) with PACS

27. Location Tracking and Verification System in Clinical Environment

28. New Directions in PACS and Medical Imaging Informatics Training

PACS AND IMAGING INFORMATICS GLOSSARY

INDEX

CONTENTS

FOREWORD

PREFACE

PREFACE OF THE LAST EDITION

ACKNOWLEDGMENTS

H. K. HUANG SHORT BIOGRAPHY

LIST OF ACRONYMS

1. Introduction

1.1 Introduction

1.2 Some Historical Remarks on Picture Archiving and Communication Systems (PACS)

1.2.1 Concepts, Conferences, and Early Research Projects

1.2.1.1 Concepts and Conferences

1.2.1.2 Early Funded Research Projects by the U.S. Federal Government

1.2.2 PACS Evolution

1.2.2.1 In the Beginning

1.2.2.2 Large-Scale PACS

1.2.3 Standards

1.2.4 Early Key Technologies

1.2.5 Medical Imaging Modality, PACS, and Imaging Informatics R&D Progress Over Time

1.3 What is PACS?

1.3.1 PACS Design Concept

1.3.2 PACS Infrastructure Design

1.4 PACS Implementation Strategies

1.4.1 Background

1.4.2 Six PACS Implementation Models

1.4.2.1 Home-Grown Model

1.4.2.2 Two-Team Effort Model

1.4.2.3 Turnkey Model

1.4.2.4 Partnership Model

1.4.2.5 The Application Service Provider (ASP) Model

1.4.2.6 Open Source Model

1.5 A Global View of PACS Development

1.5.1 The United States

1.5.2 Europe

1.5.3 Asia

1.6 Organization of the Book

PART I MEDICAL IMAGING PRINCIPLES

2. Digital Medical Image Fundamentals

2.1 Terminology

2.1.1 Digital Image

2.1.2 Digitization and Digital Capture

2.1.3 Digital Medical Image

2.1.4 Image Size

2.1.5 Histogram

2.1.6 Image Display

2.2 Density Resolution, Spatial Resolution, and Signal-to-Noise Ratio

2.3 Test Objects and Patterns for Measurement of Image Quality

2.4 Image in the Spatial Domain and the Frequency Domain

2.4.1 Frequency Components of an Image

2.4.2 The Fourier Transform Pair

2.4.3 The Discrete Fourier Transform

2.5 Measurement of Image Quality

2.5.1 Measurement of Sharpness

2.5.1.1 Point Spread Function (PSF)

2.5.1.2 Line Spread Function (LSF)

2.5.1.3 Edge Spread Function (ESF)

2.5.1.4 Modulation Transfer Function (MTF)

2.5.1.5 Mathematical Relationship among ESF, LSF, and MTF

2.5.1.6 Relationship between Input Image, MTF, and Output Image

2.5.2 Measurement of Noise

3. Two-Dimensional Medical Imaging

3.1 Principles of Conventional Projection Radiography

3.1.1 Radiology Workflow

3.1.2 Standard Procedures Used in Conventional Projection Radiography

3.1.3 Analog Image Receptor

3.1.3.1 Image Intensifier Tube

3.1.3.2 Screen/Film Combination Cassette

3.1.3.3 X-ray Film

3.2 Digital Fluorography and Laser Film Scanner

3.2.1 Basic Principles of Analog-to-Digital Conversion

3.2.2 Video/CCD Scanning System and Digital Fluorography

3.2.3 Laser Film Scanner

3.3 Imaging Plate Technology

3.3.1 Principle of the Laser-Stimulated Luminescence Phosphor Plate

3.3.2 Computed Radiography System Block Diagram and Principle of Operation

3.3.3 Operating Characteristics of the CR System

3.3.4 Background Removal

3.3.4.1 What Is Background Removal?

3.3.4.2 Advantages of Background Removal in Digital Radiography

3.4 Digital Radiography

3.4.1 Some Disadvantages of the Computed Radiography System

3.4.2 Digital Radiography Image Capture

3.5 Full-Field Direct Digital Mammography

3.5.1 Screen/Film Cassette and Digital Mammography

3.5.2 Slot-Scanning Full-field Direct Digital Mammography

3.6 Digital Radiography and PACS

3.6.1 Integration of Digital Radiography with PACS

3.6.2 Applications of Digital Radiography in Clinical Environment

3.7 Nuclear Medicine Imaging

3.7.1 Principles of Nuclear Medicine Scanning

3.7.2 The Gamma Camera and Associated Imaging System

3.8 2-D Ultrasound Imaging

3.8.1 Principles of B-Mode Ultrasound Scanning

3.8.2 System Block Diagram and Operational Procedure

3.8.3 Sampling Modes and Image Display

3.8.4 Color Doppler Ultrasound Imaging

3.8.5 Cine Loop Ultrasound Imaging

3.9 2-D Light Imaging

3.9.1 The Digital Chain

3.9.2 Color Image and Color Memory

3.9.3 2-D Microscopic Image

3.9.3.1 Instrumentation

3.9.3.2 Resolution

3.9.3.3 Contrast

3.9.3.4 Motorized Stage Assembly

3.9.3.5 Automatic Focusing

3.9.4 2-D Endoscopic Imaging

4. Three-Dimensional Medical Imaging

4.1 2-D Image Reconstruction From 1-D Projections

4.1.1 The 2-D Fourier Projection Theorem

4.1.2 Algebraic Reconstruction Method

4.1.3 The Filtered (Convolution) Back-projection Method

4.1.3.1 A Numerical Example

4.1.3.2 Mathematical Formulation

4.2 3-D Image Reconstruction From 3-D Data Set

4.2.1 3-D Data Acquisition

4.2.2 3-D Image Reconstruction

4.3 Transmission X-ray Computed Tomography (CT)

4.3.1 Conventional X-ray CT

4.3.2 Spiral (Helical) CT

4.3.3 Multislice CT

4.3.3.1 Principles

4.3.3.2 Terminology Used in Multislice XCT

4.3.3.3 Whole Body CT Scan

4.3.4 Components and Data Flow of a CT Scanner

4.3.5 CT Image Data

4.3.5.1 Isotropic Image and Slice Thickness

4.3.5.2 Image Data File Size

4.3.5.3 Data Flow/Postprocessing

4.4 Single Photon Emission Computed Tomography

4.4.1 Emission Computed Tomography (ECT)

4.4.2 Single Photon Emission CT (SPECT)

4.5 Positron Emission Tomography (PET)

4.6 3-D Ultrasound Imaging (3-D US)

4.7 Magnetic Resonance Imaging (MRI)

4.7.1 MR Imaging Basics

4.7.2 Magnetic Resonance Image Production

4.7.3 Steps in Producing an MR Image

4.7.4 MR Imaging (MRI)

4.7.5 Other Types of Images from MR Signals

4.7.5.1 MR Angiography (MRA)

4.7.5.2 Other Pulse-Sequences Image

4.8 3-D Light Imaging

4.9 3-D Micro Imaging and Small Animal Imaging Center

5. Four-Dimensionality, Multimodality, and Fusion of Medical Imaging

5.1 Basics of 4-D, Multimodality, and Fusion of Medical Imaging

5.1.1 From 3-D to 4-D Imaging

5.1.2 Multimodality 3-D and 4-D Imaging

5.1.3 Image Registration

5.1.4 Image Fusion

5.1.5 Display of 4-D Medical Images and Fusion Images

5.2 4-D Medical Imaging

5.2.1 4-D Ultrasound Imaging

5.2.2 4-D X-ray CT Imaging

5.2.3 4-D PET Imaging

5.3 Multimodality Imaging

5.3.1 2-D and 3-D Anatomy: An Integrated Multimodality Paradigm for Cancer in Dense Breasts

5.3.1.1 2-D US, Digital Mammography, 3-D US, and 3-D MRI for Breast Exam

5.3.1.2 Use of Volumetric 3-D Automated Full-breast US (AFBUS) and Dedicated Breast MRI for Defining Extent of Breast Cancer

5.3.2 Multimodality 2-D and 3-D Imaging in Radiation Therapy (RT)

5.3.2.1 Radiation Therapy Workflow

5.3.2.2 2-D and 3-D RT Image Registration

5.3.3 Fusion of 3-D MRI and 3-D CT Images for RT Application

5.4 Fusion of High-Dimensional Medical Imaging

5.4.1 Software versus Hardware Multimodality Image Fusion

5.4.2 Combined PET-CT Scanner for Hardware Image Fusion

5.4.3 Some Examples of PET-CT Hardware Image Fusion

5.4.4 Multimodality 3-D Micro Scanners: Software Image Fusion

6. Image Compression

6.1 Terminology

6.2 Background

6.3 Error-Free Compression

6.3.1 Background Removal

6.3.2 Run-Length Coding

6.3.3 Huffman Coding

6.4 Two-Dimensional Irreversible (Lossy) Image Compression

6.4.1 Background

6.4.2 Block Compression Technique

6.4.2.1 Two-dimensional Forward Discrete Cosine Transform

6.4.2.2 Bit Allocation Table and Quantization

6.4.2.3 DCT Coding and Entropy Coding

6.4.2.4 Decoding and Inverse Transform

6.4.3 Full-frame Compression

6.5 Measurement of the Difference between the Original and the Reconstructed Image

6.5.1 Quantitative Parameters

6.5.1.1 Normalized Mean-Square Error

6.5.1.2 Peak Signal-to-Noise Ratio

6.5.2 Qualitative Measurement: Difference Image and Its Histogram

6.5.3 Acceptable Compression Ratio

6.5.4 Receiver Operating Characteristic Analysis

6.6 Three-Dimensional Image Compression

6.6.1 Background

6.6.2 Basic Wavelet Theory and Multiresolution Analysis

6.6.3 One-, Two-, and Three-dimensional Wavelet Transform

6.6.3.1 One-dimensional (1-D) Wavelet Transform

6.6.3.2 Two-dimensional (2-D) Wavelet Transform

6.6.3.3 Three-dimensional (3-D) Wavelet Transform

6.6.4 Three-dimensional Image Compression with Wavelet Transform

6.6.4.1 The Block Diagram

6.6.4.2 Mathematical Formulation of the Three-dimensional Wavelet Transform

6.6.4.3 Wavelet Filter Selection

6.6.4.4 Quantization

6.6.4.5 Entropy Coding

6.6.4.6 Some Results

6.6.4.7 Wavelet Compression in Teleradiology

6.7 Color Image Compression

6.7.1 Examples of Color Images Used in Radiology

6.7.2 The Color Space

6.7.3 Compression of Color Ultrasound Images

6.8 Four-Dimensional Image Compression and Compression of Fused Image

6.8.1 Four-dimensional Image Compression

6.8.1.1 MPEG (Motion Picture Experts Group) Compression Method

6.8.1.2 Paradigm Shift in Video Encoding and Decoding

6.8.1.3 Basic Concept of Video Compression

6.8.2 Compression of Fused Image

6.9 DICOM Standard and Food and Drug Administration (FDA) Guidelines

6.9.1 FDA Compression Requirement

6.9.2 DICOM Standard in Image Compression

PART II PACS FUNDAMENTALS

7. Picture Archiving and Communication System Components and Workflow

7.1 PACS Components

7.1.1 Data and Image Acquisition Gateways

7.1.2 PACS Server and Archive

7.1.3 Display Workstations

7.1.4 Application Servers

7.1.5 System Networks

7.2 PACS Infrastructure Design Concept

7.2.1 Industry Standards

7.2.2 Connectivity and Open Architecture

7.2.3 Reliability

7.2.4 Security

7.3 A Generic PACS Workflow

7.4 Current PACS Architectures

7.4.1 Stand-alone PACS Model and General Data Flow

7.4.2 Client-Server PACS Model and Data Flow

7.4.3 Web-Based Model

7.5 PACS and Teleradiology

7.5.1 Pure Teleradiology Model

7.5.2 PACS and Teleradiology Combined Model

7.6 Enterprise PACS and ePR System with Image Distribution

7.7 Summary of PACS Components and Workflow

8. Communications and Networking

8.1 Background

8.1.1 Terminology

8.1.2 Network Standards

8.1.3 Network Technology

8.1.3.1 Ethernet and Gigabit Ethernet

8.1.3.2 ATM (Asynchronous Transfer Mode) Technology

8.1.3.3 Ethernet and Internet

8.1.4 Network Components for Connection

8.2 Cable Plan

8.2.1 Types of Networking Cables

8.2.2 The Hub Room

8.2.3 Cables for Input Devices

8.2.4 Cables for Image Distribution

8.3 Digital Communication Networks

8.3.1 Background

8.3.2 Design Criteria

8.3.2.1 Speed of Transmission

8.3.2.2 Standardization

8.3.2.3 Fault Tolerance

8.3.2.4 Security

8.3.2.5 Costs

8.4 PACS Network Design

8.4.1 External Networks

8.4.1.1 Manufacturer’s Image Acquisition Device Network

8.4.1.2 Hospital and Radiology Information Networks

8.4.1.3 Research and Other Networks

8.4.1.4 The Internet

8.4.1.5 Imaging Workstation (WS) Networks

8.4.2 Internal Networks

8.5 Examples of PACS Networks

8.5.1 An Earlier PACS Network at UCSF

8.5.2 Network Architecture at IPILab, USC

8.6 Internet 2

8.6.1 Image Data Communication

8.6.2 What Is Internet 2 (I2)?

8.6.3 An Example of I2 Performance

8.6.3.1 Connections of Three International Sites

8.6.3.2 Some Observations

8.6.4 Enterprise Teleradiology

8.6.5 Current Status

8.7 Wireless Networks

8.7.1 Wireless LAN (WLAN)

8.7.1.1 The Technology

8.7.1.2 WLAN Security, Mobility, Data Throughput, and Site Materials

8.7.1.3 Performance

8.7.2 Wireless WAN (WWAN)

8.7.2.1 The Technology

8.7.2.2 Performance

8.8 Self-Scaling Wide Area Networks

8.8.1 Concept of Self-scaling Wide Area Networks

8.8.2 Design of Self-scaling WAN in Healthcare Environment

8.8.2.1 Healthcare Application

8.8.2.2 The Self-Scalar

9. Industrial Standards (HL7 and DICOM) and Integrating the Healthcare Enterprise (IHE)

9.1 Industrial Standards and Workflow Profiles

9.2 The Health Level 7 (HL7) Standard

9.2.1 Health Level 7

9.2.2 An Example

9.2.3 New Trend in HL7

9.3 From ACR-NEMA to DICOM and DICOM Document

9.3.1 ACR-NEMA and DICOM

9.3.2 DICOM Document

9.4 The DICOM 3.0 Standard

9.4.1 DICOM Data Format

9.4.1.1 DICOM Model of the Real World

9.4.1.2 DICOM File Format

9.4.2 Object Class and Service Class

9.4.2.1 Object Class

9.4.2.2 DICOM Services

9.4.3 DICOM Communication

9.4.4 DICOM Conformance

9.4.5 Examples of Using DICOM

9.4.5.1 Send and Receive

9.4.5.2 Query and Retrieve

9.4.6 New Features in DICOM

9.4.6.1 Visible Light (VL) Image

9.4.6.2 Mammography CAD (Computer-Aided Detection)

9.4.6.3 Waveform IOD

9.4.6.4 Structured Reporting (SR) Object

9.4.6.5 Content Mapping Resource

9.5 IHE (Integrating the Healthcare Enterprise)

9.5.1 What Is IHE?

9.5.2 IHE Technical Framework and Integration Profiles

9.5.3 IHE Profiles

9.5.3.1 Domain: Anatomic Pathology

9.5.3.2 Domain: Cardiology

9.5.3.3 Domain: Eye Care

9.5.3.4 Domain: IT Infrastructure

9.5.3.5 Domain: Laboratory

9.5.3.6 Domain: Patient Care Coordination

9.5.3.7 Domain: Patient Care Devices

9.5.3.8 Domain: Radiation Oncology

9.5.3.9 Domain: Radiology

9.5.4 Some Examples of IHE Workflow Profiles

9.5.5 The Future of IHE

9.5.5.1 Multidisciplinary Effort

9.5.5.2 International Expansion

9.6 Other Standards and Software

9.6.1 UNIX Operating System

9.6.2 Windows NT/XP Operating Systems

9.6.3 C and C++ Programming Languages

9.6.4 SQL (Structured Query Language)

9.6.5 XML (Extensible Markup Language)

9.7 Summary of HL7, DICOM, and IHE

10. Image Acquisition Gateway

10.1 Background

10.2 DICOM-Compliant Image Acquisition Gateway

10.2.1 DICOM Compliance

10.2.2 DICOM-Based PACS Image Acquisition Gateway

10.2.2.1 Gateway Computer Components and Database Management

10.2.2.2 Determination of the End of an Image Series

10.3 Automatic Image Recovery Scheme for DICOM Conformance Device

10.3.1 Missing Images

10.3.2 Automatic Image Recovery Scheme

10.3.2.1 Basis for the Image Recovery Scheme

10.3.2.2 The Image Recovery Algorithm

10.4 Interface of a PACS Module with the Gateway Computer

10.4.1 PACS Modality Gateway and HI-PACS (Hospital Integrated) Gateway

10.4.2 Example 1: Interface the US Module with the PACS Gateway

10.4.3 Example 2: Interface the 3-D Dedicated Breast MRI Module with the PACS

10.5 DICOM or PACS Broker

10.5.1 Concept of the PACS Broker

10.5.2 An Example of Implementation of a PACS Broker

10.6 Image Preprocessing

10.6.1 Computed Radiography (CR) and Digital Radiography (DR)

10.6.1.1 Reformatting

10.6.1.2 Background Removal

10.6.1.3 Automatic Orientation

10.6.1.4 Lookup Table Generation

10.6.2 Digitized X-ray Images

10.6.3 Digital Mammography

10.6.4 Sectional Images: CT, MR, and US

10.7 Clinical Operation and Reliability of the Gateway

10.7.1 The Weaknesses of the Gateway as a Single Point of Failure

10.7.2 A Fail-Safe Gateway Design

11. PACS Server and Archive

11.1 Image Management Design Concept

11.1.1 Local Storage Management via PACS Intercomponent Communication

11.1.2 PACS Server and Archive System Configuration

11.1.2.1 The Archive Server

11.1.2.2 The Database System

11.1.2.3 The Archive Storage

11.1.2.4 Backup Archive

11.1.2.5 Communication Networks

11.2 Functions of the PACS Server and Archive Server

11.2.1 Image Receiving

11.2.2 Image Stacking

11.2.3 Image Routing

11.2.4 Image Archiving

11.2.5 Study Grouping

11.2.6 RIS and HIS Interfacing

11.2.7 PACS Database Updates

11.2.8 Image Retrieving

11.2.9 Image Prefetching

11.3 PACS Archive Server System Operations

11.4 DICOM-Compliant PACS Archive Server

11.4.1 Advantages of a DICOM-Compliant PACS Archive Server

11.4.2 DICOM Communications in PACS Environment

11.4.3 DICOM-Compliant Image Acquisition Gateways

11.4.3.1 Push Mode

11.4.3.2 Pull Mode

11.5 DICOM PACS Archive Server Hardware and Software

11.5.1 Hardware Components

11.5.1.1 RAID

11.5.1.2 SAN (Storage Area Network)

11.5.1.3 DLT

11.5.2 Archive Server Software

11.5.2.1 Image Receiving

11.5.2.2 Data Insert and PACS Database

11.5.2.3 Image Routing

11.5.2.4 Image Send

11.5.2.5 Image Query/Retrieve

11.5.2.6 Retrieve/Send

11.5.3 An Example

11.6 Backup Archive Server

11.6.1 Backup Archive Using an Application Service Provider (ASP) Model

11.6.2 General Architecture

11.6.3 Recovery Procedure

11.6.4 Key Features

11.6.5 General Setup Procedures of the ASP Model

11.6.6 The Data Grid Model

12. Display Workstation

12.1 Basics of a Display Workstation

12.1.1 Image Display Board

12.1.2 Display Monitor

12.1.3 Resolution

12.1.4 Luminance and Contrast

12.1.5 Human Perception

12.1.6 Color Display

12.2 Ergonomics of Image Workstations

12.2.1 Glare

12.2.2 Ambient Illuminance

12.2.3 Acoustic Noise due to Hardware

12.3 Evolution of Medical Image Display Technologies

12.4 Types of Image Workstation

12.4.1 Diagnostic Workstation

12.4.2 Review Workstation

12.4.3 Analysis Workstation

12.4.4 Digitizing, Printing, and CD Copying Workstation

12.4.5 Interactive Teaching Workstation

12.4.6 Desktop Workstation

12.5 Image Display and Measurement Functions

12.5.1 Zoom and Scroll

12.5.2 Window and Level

12.5.3 Histogram Modification

12.5.4 Image Reverse

12.5.5 Distance, Area, and Average Gray Level Measurements

12.5.6 Optimization of Image Perception in Soft Copy Display

12.5.6.1 Background Removal

12.5.6.2 Anatomical Regions of Interest

12.5.6.3 Gamma Curve Correction

12.5.7 Montage

12.6 Workstation Graphic User Interface (GUI) and Basic Display Functions

12.6.1 Basic Software Functions in a Display Workstation

12.6.2 Workstation User Interface

12.7 DICOM PC-based Display Workstation

12.7.1 Hardware Configuration

12.7.1.1 Host Computer

12.7.1.2 Display Devices

12.7.1.3 Networking Equipment

12.7.2 Software System

12.7.2.1 Software Architecture

12.7.2.2 Software Modules in the Application Interface Layer

12.8 Recent Developments in PACS Workstations

12.8.1 Postprocessing Workflow

12.8.2 Multidimensional Image Display

12.8.3 Specialized Postprocessing Workstation

12.9 Summary of PACS Workstations

13. Integration of HIS, RIS, PACS, and ePR

13.1 Hospital Information System

13.2 Radiology Information System

13.3 Interfacing PACS with HIS and RIS

13.3.1 Workstation Emulation

13.3.2 Database-to-Database Transfer

13.3.3 Interface Engine

13.3.4 Reasons for Interfacing PACS with HIS and RIS

13.3.4.1 Diagnostic Process

13.3.4.2 PACS Image Management

13.3.4.3 RIS Administration

13.3.4.4 Research and Training

13.3.5 Some Common Guidelines

13.3.6 Common Data in HIS, RIS, and PACS

13.3.7 Implementation of RIS–PACS Interface

13.3.7.1 Trigger Mechanism between Two Databases

13.3.7.2 Query Protocol

13.3.8 An Example: The IHE Patient Information Reconciliation Profile

13.4 Interfacing PACS with Other Medical Databases

13.4.1 Multimedia Medical Data

13.4.2 Multimedia in the Radiology Environment

13.4.3 An Example: The IHE Radiology Information Integration Profile

13.4.4 Integration of Heterogeneous Databases

13.4.4.1 Interfacing Digital Voice Dictation or Recognition System with PACS

13.4.4.2 Impact of the Voice Recognition System and DICOM Structured Reporting to the Dictaphone System

13.5 Electronic Patient Record (ePR)

13.5.1 Current Status of ePR

13.5.2 ePR with Image Distribution

13.5.2.1 The Concept

13.5.2.2 The Benefits

13.5.2.3 Some Special Features and Applications in ePR with Image Distribution

PART III PACS OPERATION

14. PACS Data Management and Web-Based Image Distribution

14.1 PACS Data Management

14.1.1 Concept of the Patient Folder Manager

14.1.2 On-line Radiology Reports

14.2 Patient Folder Management

14.2.1 Archive Management

14.2.1.1 Event Triggering

14.2.1.2 Image Prefetching

14.2.1.3 Job Prioritization

14.2.1.4 Storage Allocation

14.2.2 Network Management

14.2.3 Display Server Management

14.2.3.1 IHE Presentation of Grouped Procedures (PGP) Profile

14.2.3.2 IHE Key Image Note Profile

14.2.3.3 IHE Simple Image and Numeric Report Profile

14.3 Distributed Image File Server

14.4 Web Server

14.4.1 Web Technology

14.4.2 Concept of the Web Server in PACS Environment

14.5 Component-Based Web Server for Image Distribution and Display

14.5.1 Component Technologies

14.5.2 The Architecture of Component-Based Web Server

14.5.3 The Data Flow of the Component-Based Web Server

14.5.3.1 Query/Retrieve DICOM Image/Data Resided in the Web Server

14.5.3.2 Query/Retrieve DICOM Image/Data Resided in the PACS Archive Server

14.5.4 Component-Based Architecture of Diagnostic Display Workstation

14.5.5 Performance Evaluation

14.6 Wireless Remote Management of Clinical Image Workflow

14.6.1 Remote Management of Clinical Image Workflow

14.6.2 Design of the Wireless Remote Management of Clinical Image Workflow System

14.6.2.1 Clinical Needs Assessment

14.6.2.2 PDA Server and Graphical User Interface (GUI) Development

14.6.2.3 Disaster Recovery Design

14.6.3 Clinical Experience

14.6.3.1 Lessons Learned at Saint John’s Health Center (SJHC)

14.6.3.2 DICOM Transfer of Performance Results in Disaster Recovery Scenarios

14.6.4 Summary of Wireless Remote Management of Clinical Image Workflow

14.7 Summary of PACS Data Management and Web-Based Image Distribution

15. Telemedicine and Teleradiology

15.1 Introduction

15.2 Telemedicine and Teleradiology

15.3 Teleradiology

15.3.1 Background

15.3.1.1 Why Do We Need Teleradiology?

15.3.1.2 What Is Teleradiology?

15.3.1.3 Teleradiology and PACS

15.3.2 Teleradiology Components

15.3.2.1 Image Capture

15.3.2.2 Data Reformatting

15.3.2.3 Image Storage

15.3.2.4 Display Workstation

15.3.2.5 Communication Networks

15.3.2.6 User Friendliness

15.4 Teleradiology Models

15.4.1 Off-hour Reading

15.4.2 ASP Model

15.4.3 Web-Based Teleradiology

15.4.4 PACS and Teleradiology Combined

15.5 Some Important Issues in Teleradiology

15.5.1 Image Compression

15.5.2 Image Data Privacy, Authenticity, and Integrity

15.5.3 Teleradiology Trade-off Parameters

15.5.4 Medical-Legal Issues

15.6 Tele-breast Imaging

15.6.1 Why Do We Need Tele-breast Imaging?

15.6.2 Concept of the Expert Center

CONTENTS

15.7

15.8

15.9

16.

15.6.3

Technical Issues

15.6.4

Multimodality Tele-Breast Imaging

Telemicroscopy

15.7.1

Telemicroscopy and Teleradiology

15.7.2

Telemicroscopy Applications

Real-Time Teleconsultation System

15.8.1

Background

15.8.2

System Requirements

15.8.3

Teleconsultation System Design

15.8.3.1

Image Display Workflow

15.8.3.2

Communication Requirements during

Teleconsultation

15.8.3.3

Hardware Configuration of the

Teleconsultation System

15.8.3.4

Software Architecture

15.8.4

Teleconsultation Procedure and Protocol

Summary of Teleradiology

xxiii

459

460

461

461

462

463

463

463

464

464

465

465

465

466

468

Fault-Tolerant PACS and Enterprise PACS

473

16.1 Introduction
16.2 Causes of a System Failure
16.3 No Loss of Image Data
16.3.1 Redundant Storage at Component Levels
16.3.2 The Backup Archive System
16.3.3 The Database System
16.4 No Interruption of PACS Data Flow
16.4.1 PACS Data Flow
16.4.2 Possible Failure Situations in PACS Data Flow
16.4.3 Methods to Protect Data Flow Continuity from Hardware Component Failure
16.4.3.1 Hardware Solutions and Drawbacks
16.4.3.2 Software Solutions and Drawbacks
16.5 Current PACS Technology to Address Fault Tolerance
16.6 Clinical Experiences with Archive Server Downtime: A Case Study
16.6.1 Background
16.6.2 PACS Server Downtime Experience
16.6.2.1 Hard Disk Failure
16.6.2.2 Mother Board Failure
16.6.3 Effects of Downtime
16.6.3.1 At the Management Level
16.6.3.2 At the Local Workstation Level
16.6.4 Downtime for over 24 Hours
16.6.5 Impact of the Downtime on Clinical Operation
16.7 Concept of Continuously Available PACS Design
16.8 CA PACS Server Design and Implementation
16.8.1 Hardware Components and CA Design Criteria
16.8.1.1 Hardware Components in an Image Server
16.8.1.2 Design Criteria
16.8.2 Architecture of the CA Image Server
16.8.2.1 The Triple Modular Redundant Server
16.8.2.2 The Complete System Architecture
16.8.3 Applications of the CA Image Server
16.8.3.1 PACS and Teleradiology
16.8.3.2 Off-site Backup Archive—Application Service Provider Model (ASP)
16.8.4 Summary of Fault Tolerance and Fail Over
16.8.5 The Merit of the CA Image Server
16.9 Enterprise PACS
16.9.1 Background
16.9.2 Concept of Enterprise-Level PACS
16.10 Design of Enterprise-Level PACS
16.10.1 Conceptual Design
16.10.1.1 IT Supports
16.10.1.2 Operation Options
16.10.1.3 Architectural Options
16.10.2 Financial and Business Models
16.11 The Hong Kong Hospital Authority Healthcare Enterprise PACS with Web-Based ePR Image Distribution
16.11.1 Design and Implementation Plan
16.11.2 Hospitals in a Cluster Joining the HKHA Enterprise System
16.11.2.1 Background
16.11.2.2 Data Migration
16.11.3 Case Study of ePR with Image Distribution—The Radiologist’s Point of View
16.12 Summary of Fault-Tolerant PACS and Enterprise PACS with Image Distribution

17. Image/Data Security

17.1 Introduction and Background
17.1.1 Introduction
17.1.2 Background
17.2 Image/Data Security Methods
17.2.1 Four Steps in Digital Signature and Digital Envelope
17.2.2 General Methodology
17.3 Digital Envelope
17.3.1 Image Signature, Envelope, Encryption, and Embedding
17.3.1.1 Image Preprocessing
17.3.1.2 Hashing (Image Digest)
17.3.1.3 Digital Signature
17.3.1.4 Digital Envelope
17.3.1.5 Data Embedding
17.3.2 Data Extraction and Decryption
17.3.3 Methods of Evaluation of the Digital Envelope
17.3.3.1 Robustness of the Hash Function
17.3.3.2 Percentage of the Pixel Changed in Data Embedding
17.3.3.3 Time Required to Run the Complete Image/Data Security Assurance
17.3.4 Limitations of the Digital Envelope Method
17.4 DICOM Security Profiles
17.4.1 Current DICOM Security Profiles
17.4.1.1 Secure Use Profiles
17.4.1.2 Secure Transport Connection Profiles
17.4.1.3 Digital Signature Profiles
17.4.1.4 Media Security Profiles
17.4.2 Some New DICOM Security on the Horizon
17.5 HIPAA and Its Impacts on PACS Security
17.6 PACS Security Server and Authority for Assuring Image Authenticity and Integrity
17.6.1 Comparison of Image-Embedded DE Method and DICOM Security
17.6.2 An Image Security System in a PACS Environment
17.7 Lossless Digital Signature Embedding Methods for Assuring 2-D and 3-D Medical Image Integrity
17.7.1 Lossless Digital Signature Embedding
17.7.2 Procedures and Methods
17.7.3 General LDSE Method
17.8 Two-dimensional and Three-dimensional Lossless Digital Signature Embedding Method
17.8.1 Two-dimensional LDSERS Algorithm
17.8.1.1 General 2-D Method
17.8.1.2 Signing and Embedding
17.8.1.3 Extracting and Verifying
17.8.2 Three-dimensional LDSERS Algorithm
17.8.2.1 General 3-D LDSE Method
17.8.2.2 Signing and Embedding
17.8.2.3 Extracting and Verifying
17.9 From a Three-dimensional Volume to Two-dimensional Image(s)
17.9.1 Extract Sectional Images from 3-D Volume with LDSERS
17.9.2 Two-dimensional LDSERS Results
17.9.2.1 Embedded LDSERS Pattern
17.9.2.2 Time Performance of 2-D LDSERS
17.9.3 Three-dimensional LDSERS Results
17.9.3.1 Embedded LDSERS Pattern
17.9.3.2 Time Performance of 3-D LDSERS
17.10 Application of Two-dimensional and Three-dimensional LDSE in Clinical Image Data Flow
17.10.1 Application of the LDSE Method in a Large Medical Imaging System like PACS
17.10.2 Integration of the Three-dimensional LDSE Method with Two IHE Profiles
17.10.2.1 Integration of the Three-dimensional LDSE Method with Key Image Note
17.10.2.2 Integration of Three-dimensional LDSE with Three-dimensional Postprocessing Workflow
17.11 Summary and Significance of Image Security

18. PACS Clinical Implementation, Acceptance, and Evaluation

18.1 Planning to Install a PACS
18.1.1 Cost Analysis
18.1.2 Film-Based Operation
18.1.3 Digital-Based Operation
18.1.3.1 Planning a Digital-Based Operation
18.1.3.2 Training
18.1.3.3 Checklist for Implementation of a PACS
18.2 Manufacturer’s PACS Implementation Strategy
18.2.1 System Architecture
18.2.2 Implementation Strategy
18.2.3 Integration of an Existing In-house PACS with a New Manufacturer’s PACS
18.3 Templates for PACS RFP
18.4 PACS Implementation Strategy
18.4.1 Risk Assessment Analysis
18.4.2 Phases Implementation
18.4.3 Development of Work Groups
18.4.4 Implementation Management
18.5 Implementation
18.5.1 Site Preparation
18.5.2 Define Required PACS Equipment
18.5.2.1 Workstation Requirements
18.5.2.2 Storage Capacity
18.5.2.3 Storage Types and Image Data Migration
18.5.3 Develop the Workflow
18.5.4 Develop the Training Program
18.5.5 On Line: Go Live
18.5.6 Burn-in Period and System Management
18.6 System Acceptance
18.6.1 Resources and Tools
18.6.2 Acceptance Criteria
18.6.2.1 Quality Assurance
18.6.2.2 Technical Testing
18.6.3 Implement the Acceptance Testing
18.7 Clinical PACS Implementation Case Studies
18.7.1 Saint John’s Healthcare Center, Santa Monica, California
18.7.2 University of California, Los Angeles
18.7.3 University of Southern California, Los Angeles
18.7.4 Hong Kong New Territories West Cluster (NTWC): Tuen Mun (TMH) and Pok Oi (POH) Hospitals
18.7.5 Summary of Case Studies
18.8 PACS System Evaluation
18.8.1 Subsystem Throughput Analysis
18.8.1.1 Residence Time
18.8.1.2 Prioritizing
18.8.2 System Efficiency Analysis
18.8.2.1 Image Delivery Performance
18.8.2.2 System Availability
18.8.2.3 User Acceptance
18.8.3 Image Quality Evaluation—ROC Method
18.8.3.1 Image Collection
18.8.3.2 Truth Determination
18.8.3.3 Observer Testing and Viewing Environment
18.8.3.4 Observer Viewing Sequences
18.8.3.5 Statistical Analysis
18.9 Summary of PACS Implementation, Acceptance, and Evaluation

19. PACS Clinical Experience, Pitfalls, and Bottlenecks

19.1 Clinical Experience at the Baltimore VA Medical Center
19.1.1 Benefits
19.1.2 Costs
19.1.3 Savings
19.1.3.1 Film Operation Costs
19.1.3.2 Space Costs
19.1.3.3 Personnel Costs
19.1.4 Cost-Benefit Analysis
19.2 Clinical Experience at St. John’s Healthcare Center
19.2.1 St. John’s Healthcare Center’s PACS
19.2.2 Backup Archive
19.2.2.1 The Second Copy
19.2.2.2 The Third Copy
19.2.2.3 Off-site Third Copy Archive Server
19.2.3 ASP Backup Archive and Disaster Recovery
19.3 University of California, Los Angeles
19.3.1 Legacy PACS Archive Data Migration
19.3.2 ASP Backup Archive
19.4 University of Southern California
19.4.1 The Ugly Side of PACS: Living through a PACS Divorce
19.4.2 Is Divorce Really Necessary? The Evaluation Process
19.4.3 Coping with a PACS Divorce
19.4.3.1 Preparing for the Divorce
19.4.3.2 Business Negotiation
19.4.3.3 Data Migration
19.4.3.4 Clinical Workflow Modification and New Training
19.4.4 Summary of USC and Remaining Issues
19.5 PACS Pitfalls
19.5.1 During Image Acquisition
19.5.1.1 Human Errors at Imaging Acquisition Devices
19.5.1.2 Procedure Errors at Imaging Acquisition Devices
19.5.2 At the Workstation
19.5.2.1 Human Error
19.5.2.2 Display System Deficiency
19.6 PACS Bottlenecks
19.6.1 Network Contention
19.6.1.1 Redesigning the Network Architecture
19.6.1.2 Operational Environment
19.6.2 Slow Response at Workstations
19.6.3 Slow Response from the Archive Server
19.7 DICOM Conformance
19.7.1 Incompatibility in DICOM Conformance Statement
19.7.2 Methods of Remedy
19.8 Summary of PACS Clinical Experience, Pitfalls, and Bottlenecks

PART IV PACS- AND DICOM-BASED IMAGING INFORMATICS

20. DICOM-Based Medical Imaging Informatics

20.1 The Medical Imaging Informatics Infrastructure (MIII) Platform
20.2 HIS/RIS/PACS, Medical Images, ePR, and Related Data
20.2.1 HIS/RIS/PACS and Related Data
20.2.2 Other Types of Medical Images
20.2.3 ePR and Related Data/Images
20.3 Imaging Informatics Tools
20.3.1 Image Processing, Analysis, and Statistical Methods
20.3.2 Visualization and Graphical User Interface
20.3.3 Three-dimensional Rendering and Image Fusion
20.3.3.1 3-D Rendering
20.3.3.2 Image Fusion
20.3.4 Data Security
20.3.5 Communication Networks
20.4 Database and Knowledge Base Management
20.4.1 Grid Computing and Data Grid
20.4.2 Content-Based Image Indexing
20.4.3 Data Mining
20.4.3.1 The Concept of Data Mining
20.4.3.2 An Example of Discovering the Average Hand Image
20.4.4 Computer-Aided Diagnosis (CAD)
20.4.5 Image-Intensive Radiation Therapy Planning and Treatment
20.4.6 Surgical Simulation and Modeling
20.5 Application Middleware
20.6 Customized Software and System Integration
20.6.1 Customized Software and Data
20.6.2 System Integration
20.7 Summary of Medical Image Informatics Infrastructure (MIII)

21. Data Grid for PACS and Medical Imaging Informatics

21.1 Distributed Computing
21.1.1 The Concept of Distributed Computing
21.1.2 Distributed Computing in PACS Environment
21.2 Grid Computing
21.2.1 The Concept of Grid Computing
21.2.2 Current Grid Computing Technology
21.2.3 Grid Technology and the Globus Toolkit
21.2.4 Integrating DICOM Technology with the Globus Toolkit
21.3 Data Grid
21.3.1 Data Grid Infrastructure in the Image Processing and Informatics Laboratory (IPILab)
21.3.2 Data Grid for the Enterprise PACS
21.3.3 Roles of the Data Grid in the Enterprise PACS Daily Clinical Operation
21.4 Fault-Tolerant Data Grid for PACS Archive and Backup, Query/Retrieval, and Disaster Recovery
21.4.1 Archive and Backup
21.4.2 Query/Retrieve (Q/R)
21.4.3 Disaster Recovery—Three Tasks of the Data Grid When the PACS Server or Archive Fails
21.5 Data Grid as the Image Fault-Tolerant Archive in Image-Based Clinical Trials
21.5.1 Image-Based Clinical Trial and Data Grid
21.5.2 The Role of a Radiology Core in Imaging-Based Clinical Trials
21.5.3 Data Grid for Clinical Trials—Image Storage and Backup
21.5.4 Data Migration from the Backup Archive to the Data Grid
21.5.5 Data Grid for Multiple Clinical Trials
21.6 A Specific Clinical Application—Dedicated Breast MRI Enterprise Data Grid
21.6.1 Data Grid for Dedicated Breast MRI
21.6.2 Functions of a Dedicated Breast MRI Enterprise Data Grid
21.6.3 Components in the Enterprise Breast Imaging Data Grid (BIDG)
21.6.4 Breast Imaging Data Grid (BIDG) Workflows in Archive and Backup, Query/Retrieve, and Disaster Recovery
21.7 Two Considerations in Administrating the Data Grid
21.7.1 Image/Data Security in Data Grid
21.7.2 Sociotechnical Considerations in Administrating the Data Grid
21.7.2.1 Sociotechnical Considerations
21.7.2.2 For Whom the Data Grid Tolls?
21.8 Current Research and Development Activities in Data Grid
21.8.1 IHE Cross-Enterprise Document Sharing (XDS)
21.8.2 IBM Grid Medical Archive Solution (GMAS)
21.8.3 Cancer Biomedical Informatics Grid (caBIG)
21.8.4 Medical Imaging and Computing for Unified Information Sharing (MEDICUS)
21.9 Summary of Data Grid

22. Multimedia Electronic Patient Record (ePR) System

22.1 The Electronic Patient Record (ePR) System
22.1.1 Purpose of the ePR System
22.1.2 Integrating the ePR with Images
22.1.3 The Multimedia ePR System
22.2 The Veterans Affairs Healthcare Enterprise (VAHE) ePR with Images
22.2.1 Background
22.2.2 The VistA Information System Architecture
22.2.3 The VistA Imaging
22.2.3.1 Current Status
22.2.3.2 VistA Imaging Work Flow
22.2.3.3 VistA Imaging Operation
22.3 Integrating Images into the ePR of the Hospital Authority (HA) of Hong Kong
22.3.1 Background
22.3.2 Clinical Information Systems in the HA
22.3.3 Rationales for Image Distribution
22.3.3.1 Patient Mobility
22.3.3.2 Remote Access to Images
22.3.4 Design Criteria
22.3.5 The ePR with Image Distribution Architecture and Workflow Steps
22.3.6 Implementation Experiences and Lessons Learned
22.4 The Multimedia ePR System for Patient Treatment
22.4.1 Fundamental Concepts
22.4.2 Infrastructure and Basic Components
22.5 The Multimedia ePR System for Radiation Therapy Treatment
22.5.1 Background
22.5.2 Basic Components
22.5.2.1 The ePR Software Organization
22.5.2.2 Database Schema
22.5.2.3 Data Model
22.5.2.4 Data Flow Model
22.6 The Multimedia ePR System for Minimally Invasive Spinal Surgery
22.6.1 Background
22.6.2 Basic Components
22.6.2.1 The ePR Software Organization
22.6.2.2 Database Schema
22.6.2.3 Data Model
22.6.2.4 Data Flow Model
22.7 Summary of the Multimedia ePR System

23. Multimedia Electronic Patient Record System in Radiation Therapy

23.1 Background in Radiation Therapy Planning and Treatment
23.2 Radiation Therapy Workflow
23.3 The ePR Data Model and DICOM RT Objects
23.3.1 The ePR Data Model
23.3.2 DICOM RT Objects
23.4 Infrastructure, Workflow, and Components of the Multimedia ePR in RT
23.4.1 DICOM RT Based ePR System Architectural Design
23.4.2 DICOM RT Objects’ Input
23.4.3 DICOM RT Gateway
23.4.4 DICOM RT Archive Server
23.4.5 RT Web-Based ePR Server
23.4.6 RT Web Client Workstation (WS)
23.5 Database Schema
23.5.1 Database Schema of the RT Archive Server
23.5.2 Data Schema of the RT Web Server
23.6 Graphical User Interface Design
23.7 Validation of the Concept of Multimedia ePR System in RT
23.7.1 Integration of the ePR System
23.7.1.1 The RT ePR Prototype
23.7.1.2 Hardware and Software
23.7.1.3 Graphical User Interface (GUI) in the WS
23.7.2 Data Collection
23.7.3 Multimedia Electronic Patient Record of a Sample RT Patient
23.8 Advantages of the Multimedia ePR System in RT for Daily Clinical Practice
23.8.1 Communication between Isolated Information Systems and Archival of Information
23.8.2 Information Sharing
23.8.3 A Model of Comprehensive Electronic Patient Record
23.9 Utilization of the Multimedia ePR System in RT for Image-Assisted Knowledge Discovery and Decision Support
23.10 Summary of the Multimedia ePR in Radiation Therapy

24. Multimedia Electronic Patient Record (ePR) System for Image-Assisted Spinal Surgery

24.1 Integration of Medical Diagnosis with Image-Assisted Surgery Treatment
24.1.1 Bridging the Gap between Diagnostic Images and Surgical Treatment
24.1.2 Minimally Invasive Spinal Surgery (MISS)
24.1.3 Minimally Invasive Spinal Surgery Procedure
24.1.4 The Algorithm of Spine Care
24.1.5 Rationale of the Development of the Multimedia ePR System for Image-Assisted MISS
24.1.6 The Goals of the ePR
24.2 Minimally Invasive Spinal Surgery Workflow
24.2.1 General MISS Workflow
24.2.2 Clinical Site for Developing the MISS
24.3 Multimedia ePR System for Image-Assisted MISS Workflow and Data Model
24.3.1 Data Model and Standards
24.3.2 The ePR Data Flow
24.3.2.1 Pre-Op Workflow
24.3.2.2 Intra-Op Workflow
24.3.2.3 Post-Op Workflow
24.4 Minimally Invasive Spinal Surgery ePR System Architecture
24.4.1 Overall ePR System Architecture for MISS
24.4.2 Four Major Components in the MISS ePR System
24.4.2.1 Integration Unit (IU)
24.4.2.2 The Tandem Gateway Server
24.4.2.3 The Tandem ePR Server
24.4.2.4 Visualization and Display
24.5 Pre-Op Authoring Module
24.5.1 Workflow Analysis
24.5.2 Participants in the Surgical Planning
24.5.3 Significance of Pre-Op Data Organization
24.5.3.1 Organization of the Pre-Op Data
24.5.3.2 Surgical Whiteboard Data
24.5.4 Graphical User Interface
24.5.4.1 Editing
24.5.4.2 The Neuronavigator Tool for Image Correlation
24.5.4.3 Pre-Op Display
24.5.4.4 Extraction of Clinical History for Display
24.6 Intra-Op Module
24.6.1 The Intra-Op Module
24.6.2 Participants in the OR
24.6.3 Data Acquired during Surgery
24.6.4 Internal Architecture of the Integration Unit (IU)
24.6.5 Interaction with the Gateway
24.6.6 Graphical User Interface
24.6.7 Rule-Based Alert Mechanism
24.7 Post-Op Module
24.7.1 Post-Op Module
24.7.2 Participants in the Post-Op Module Activities
24.7.3 Patient in the Recovery Area
24.7.4 Post-Op Documentation—The Graphical User Interface (GUI)
24.7.5 Follow-up Pain Surveys
24.8 System Deployment and User Training and Support
24.8.1 System Deployment
24.8.1.1 Planning and Design Phase
24.8.1.2 Hardware Installation
24.8.1.3 Software Installation
24.8.1.4 Special Software for Training
24.8.2 Training and Supports for Clinical Users
24.8.3 Experience Gained and Lessons Learned
24.9 Summary of Developing the Multimedia ePR System for Image-Assisted Spinal Surgery

25. Computer-Aided Diagnosis (CAD) and Image-Guided Decision Support

25.1 Computer-Aided Diagnosis (CAD)
25.1.1 CAD Overview
25.1.2 CAD Research and Development (R&D)
25.2 Computer-Aided Detection and Diagnosis (CAD) without PACS
25.2.1 CAD without PACS and without Digital Image
25.2.2 CAD without PACS but with Digital Image
25.3 Conceptual Methods of Integrating CAD with DICOM PACS and MIII
25.3.1 PACS WS Q/R, CAD WS Detect
25.3.2 CAD WS Q/R and Detect
25.3.3 PACS WS with CAD Software
25.3.4 Integration of CAD Server with PACS or MIII
25.4 Computer-Aided Detection of Small Acute Intracranial Hemorrhage (AIH) on Computer Tomography (CT) of Brain
25.4.1 Clinical Aspect of Small Acute Intracranial Hemorrhage (AIH) on CT of Brain
25.4.2 Development of the CAD Algorithm for AIH on CT
25.4.2.1 Data Collection and Radiologist Readings
25.4.2.2 The CAD System Development
25.5 Evaluation of the CAD for AIH
25.5.1 Rationale of Evaluation of a CAD System
25.5.2 MRMC ROC Analysis for CAD Evaluation
25.5.2.1 Data Collection
25.5.2.2 ROC Observers
25.5.2.3 ROC Procedure
25.5.2.4 ROC Data Analysis
25.5.3 Effect of CAD-Assisted Reading on Clinicians’ Performance
25.5.3.1 Clinicians Improved Performance with CAD
25.5.3.2 Sensitivity, Specificity, Positive, and Negative Predictive Values
25.5.3.3 Change of Diagnostic Decision after CAD Assistance
25.5.3.4 Some Clinical Considerations
25.6 From System Evaluation to Clinical Practice
25.6.1 Further Clinical Evaluation
25.6.2 Next Steps for Deployment of the CAD System for AIH in Clinical Environment
25.7 Summary of Computer-Assisted Diagnosis

26. Integration of Computer-Aided Diagnosis (CAD) with PACS

26.1 The Need for CAD-PACS Integration
26.2 DICOM Standard and IHE Workflow Profiles
26.2.1 DICOM Structured Reporting (DICOM SR)
26.2.2 IHE Profiles
26.3 The CAD–PACS© Integration Toolkit
26.3.1 Current CAD Workflow
26.3.2 Concept
26.3.3 The Infrastructure
26.3.4 Functions of the Three Editions
26.3.4.1 DICOM-SC™, 1st Edition
26.3.4.2 DICOM–PACS–IHE™, 2nd Edition
26.3.4.3 DICOM–CAD–IHE™, 3rd Edition
26.3.5 Data Flow of the Three Editions
26.3.5.1 DICOM-SC™, 1st Edition
26.3.5.2 DICOM–PACS–IHE™, 2nd Edition
26.3.5.3 DICOM–CAD–IHE™, 3rd Edition
26.4 Integrating Using the CAD–PACS Toolkit
26.4.1 Cases of DICOM SR Already Available
26.4.2 Integration of Commercial CADs with PACS
26.5 DICOM SR and the CAD–PACS Integration Toolkit
26.5.1 Multiple Sclerosis (MS) on MRI
26.5.2 Generation of the DICOM SR Document from a CAD Report
26.6 Integration of CAD with PACS for Multiple Sclerosis (MS) on MRI
26.6.1 Connecting the DICOM SR with the CAD–PACS Toolkit
26.6.2 Integration of PACS with CAD for MS
26.7 Integration of CAD with PACS for Bone Age Assessment of Children—The Digital Hand Atlas
26.7.1 Bone Age Assessment of Children
26.7.1.1 Classical Method of Bone Age Assessment of Children from a Hand Radiograph
26.7.1.2 Rationale for the Development of a CAD Method for Bone Age Assessment
26.7.2 Data Collection
26.7.2.1 Subject Recruitment
26.7.2.2 Case Selection Criteria
26.7.2.3 Image Acquisition
26.7.2.4 Image Interpretation
26.7.2.5 Film Digitization
26.7.2.6 Data Collection Summary
26.7.3 Methods of Analysis
26.7.3.1 Statistical Analysis
26.7.3.2 Radiologists’ Interpretation
26.7.3.3 Cross-racial Comparisons
26.7.3.4 Development of the Digital Hand Atlas for Clinical Evaluation
26.8 Integration of CAD with PACS for Bone Age Assessment of Children—The CAD System
26.8.1 The CAD System Based on Fuzzy Logic for Bone Age Assessment
26.8.2 Fuzzy System Architecture
26.8.2.1 Knowledge Base Derived from the DHA
26.8.2.2 Phalangeal Fuzzy Subsystem
26.8.2.3 Carpal Bone Fuzzy Subsystem
26.8.2.4 Wrist Joint Fuzzy Subsystem
26.8.3 Fuzzy Integration of Three Regions
26.8.4 Validation of the CAD and Comparison of the CAD Result with the Radiologists’ Assessment
26.8.4.1 Validation of the CAD
26.8.4.2 Comparison of CAD versus Radiologists’ Assessment of Bone Age
26.8.5 Clinical Evaluation of the CAD System for Bone Age Assessment
26.8.5.1 BAA Evaluation in the Clinical Environment
26.8.5.2 Clinical Evaluation Workflow Design
26.8.5.3 Web-Based BAA Clinical Evaluation System
26.8.6 Integrating CAD for Bone Age Assessment with Other Informatics Systems
26.8.6.1 DICOM Structured Reporting
26.8.6.2 Integration of Content-Based DICOM SR with CAD
26.8.6.3 Computational Services in Data Grid
26.9 Research and Development Trends in CAD–PACS Integration
26.10 Summary of CAD–PACS Integration

27. Location Tracking and Verification System in Clinical Environment

27.1

27.2

27.3

27.4

27.5

27.6

27.7

27.8

Need for the Location Tracking and Verification System in

Clinical Environment

27.1.1

HIPAA Compliance and JCAHO Mandate

27.1.2

Rationale for Developing the LTVS

Clinical Environment Workflow

27.2.1

Need for a Clinical Environment Workflow Study

27.2.2

The Healthcare Consultation Center II (HCC II)

Available Tracking and Biometric Technologies

27.3.1

Wireless Tracking

27.3.2

Biometrics Verification System

Location Tracking and Verification System (LTVS) Design

27.4.1

Design Concept

27.4.2

System Infrastructure

27.4.3

Facial Recognition System Module: Registering,

Adding, Deleting, and Verifying Facial Images

27.4.4

Location Tracking Module: Design and Methodology

27.4.5

LTVS Application Design and Functionality

27.4.5.1

Application Design

27.4.5.2

LTVS Operation

27.4.5.3

LTVS User Interface Examples

27.4.6

Lessons Learned from the LTVS Prototype

27.4.6.1

Address Clinical Needs in the Outpatient

Imaging Center

27.4.6.2