Sentiment Analysis Project Report: YouTube Comments


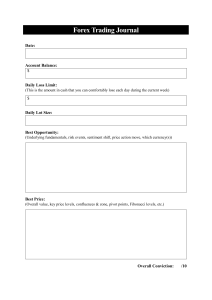

Sentiment Analysis: A project to detect sentiment from texts

Course: CSE 299

Section: 11

Faculty Name: Ishan Arefin Hossain (IAH)

Group Name: GenSolvers

Group Members: Najmun Nahar Naj(2031106642)

Asman Farhad(2031679642)

Abdullah Al Sayem(2022207042)

Declaration page

The members of Team GenSolvers, Abdullah Al Sayem (2022207042), Najmun Nahar Naj (2031106642), and Asman Farhad (2031679642), hereby declare that the project report for CSE299: Junior Design Project, titled "Sentiment Analysis of YouTube Comments," which was submitted to the course instructor, Ishan Arefin Hossain, was prepared by us as a team exclusively for academic requirements and purposes. The members of the group additionally affirm that no other entity or individual may use this project work to obtain any honors, degrees, or diplomas from any institution.

Abdullah Al Sayem(2022207042)

Najmun Nahar Naj(2031106642)

Asman Farhad(2031679642)

Approval

The CSE299 Junior Design Project report titled “Sentiment Analysis on YouTube Comments Using SVM,” prepared and submitted by Abdullah Al Sayem, Najmun Nahar Naj, and Asman Farhad, fulfills the requirements of the course, has been examined, and is hereby recommended for acceptance and approval for final submission in fulfillment of the course CSE299: Junior Design Project.

Ishan Arefin Hossain| CSE299 Faculty

Lecturer, Department of ECE

Acknowledgement

The successful completion of this project and its report can be attributed to the cooperation and

understanding among the team members who participated, as well as the allocation of tasks

involving dataset collection, model training, and web page development. Internet-based

resources, including tutorials, learning sites, and resources specific to Android app development,

machine learning, and dataset training, are also deserving of recognition. The most significant

support, in the end, came from the junior design course faculty, who offered guidance in the

form of numerous workarounds and tips, as well as constructive criticism and valuable insights

concerning the implementation of machine learning and web application features. This support

was instrumental in ensuring the successful completion of the project.

Abstract

Sentiment analysis, an essential element of text mining and natural language processing, is instrumental in understanding the sentiment and public opinion conveyed in online content. This research focuses on sentiment analysis of comments posted on videos on YouTube, the preeminent video-sharing platform. YouTube's extensive user population and diverse array of content make its comments a rich source of information about viewers' sentiments, evaluations, and concerns. The objective of this study is to construct and assess sentiment analysis models that can automatically categorize YouTube comments as positive, negative, or neutral in tone. Using natural language processing methods, this research investigates the particular obstacles that arise in YouTube comments, including informal language, slang, and a lack of contextual information. Following preprocessing operations such as text cleaning, tokenization, and stemming, machine learning algorithms and deep learning models are applied in the analysis. The results of this study hold practical significance for individuals involved in content creation, advertising, and platform management. They provide valuable insights into the sentiment of their audiences, allow for the assessment of video performance, and enhance the effectiveness of viewer engagement. This study contributes to the field of public sentiment analysis in the digital age by automating the sentiment analysis of YouTube comments. Additionally, it highlights the potential for future advancements in sentiment analysis that may be applied across diverse social media platforms.

Table of contents

1. Declaration

2. Approval

3. Acknowledgement

4. Abstract

5. Introduction

6. Literature review

7. Methodology and implementation

● Methodology

Data collection

Data processing

Feature extraction

Splitting data

Model selection

Model training

● Implementation

Model deployment

User interaction

Scraped comments

Displaying results

Model performance

8. Motivation

9. Conclusion

10. Contribution

11. Reference

12. Appendix

Introduction

In the vast landscape of the internet, YouTube stands as one of the most prominent platforms for sharing and consuming multimedia content. With billions of videos and comments, it is not only a repository of data but also a reflection of diverse human sentiments.

This report examines sentiment analysis applied to YouTube comments. Sentiment analysis is a powerful tool that allows us to recognize the feelings, opinions, and attitudes expressed by users within this bustling online community. By leveraging machine learning models, we can extract valuable insights from the sea of text-based interactions.

The significance of sentiment analysis in this context is considerable. Its applications range from content creators striving to improve audience engagement to advertisers seeking to understand customer preferences. Moreover, YouTube itself benefits by creating a more personalized and engaging experience for its users.

In this document, we explore the methodologies and models used for sentiment analysis of YouTube comments, the challenges posed by this particular dataset, and the potential benefits this analysis can yield. We describe the machine learning algorithms that allow us to classify comments as positive, negative, or neutral, allowing us to gauge the emotional pulse of this massive online community.

Literature review

Sentiment analysis studies people's opinions, emotions, appraisals, attitudes, and feelings as expressed in their comments. YouTube is a prominent video-sharing community with a huge volume of user-generated material in the form of comments. Sentiment analysis of comments left on YouTube can provide valuable insights into people's thoughts and attitudes about videos and the information they contain.

To determine the polarity of user comments, Asghar et al. (n.d.) conducted a brief survey of sentiment analysis on YouTube comments. They presented several strategies for assessing the sentiment of user comments, including the use of SentiWordNet, a sentiment lexicon. They also explored sentiment analysis applications such as comment filtering, personalized recommendations, and user profiling.

Lorentz and Singh (2021) studied the application of sentiment analysis to YouTube comments in order to predict a video's like proportion. They assessed five different classifiers trained on YouTube comments, tweets, and a mix of tweets and comments, as well as four different prediction formulae that used neutral words in various ways. They found a positive correlation between the predicted and actual like proportions.

Yafooz and Alhujaili (2021) reviewed sentiment analysis methodologies and approaches that may be applied to YouTube videos. They described and contrasted several sentiment analysis methodologies, encompassing lexicon-based, machine learning-based, and hybrid methods. They also discussed the difficulties of analyzing sentiment in YouTube comments, including humor, irony, and context.

Singh (2021) proposed a model for sentiment analysis of comments left on YouTube using an integrated method that combines lexicon-based and machine learning-based methodologies. They used a dataset of one thousand comments and achieved a sentiment classification accuracy of 86.5%.

Akhtar (2019) gave an overview of methods for analyzing user opinions on a particular video in a brief study. They examined the difficulties of analyzing sentiment in YouTube comments, mainly the use of slang, emojis, and misspellings. They also contrasted various sentiment analysis methods and tools, including lexicon-based, machine learning-based, and hybrid methods.

In research carried out by Kavitha et al. (2020), the researchers classified user comments posted on YouTube videos based on their relevance to the video content described in the video description. They used a set of 1000 comments and categorized them as relevant, slightly relevant, irrelevant, or spam. They also proposed a classification model with an accuracy of 89.5% based on a combination of naive Bayes and support vector machine techniques.

In summary, due to the enormous volume of user-generated content and the complexity of the language used in the comments, sentiment analysis of YouTube comments is a complex undertaking. It can, however, give valuable insights into viewers' ideas and attitudes regarding the videos and their content. Several methods and techniques have been explored and proposed for the sentiment analysis of YouTube comments, encompassing lexicon-based, machine learning-based, and hybrid methods.

Motivation

Sentiment analysis can assist you in gaining a deeper comprehension of your audience and their

viewpoints regarding your product or brand. This can assist you in customizing your content to

their preferences and requirements.

Enhance customer service:

By identifying negative customer comments and concerns with the aid of sentiment analysis, it is

possible to promptly respond to them and enhance customer service (2).

Remain in front of competitors:

One can utilize sentiment analysis to track emerging trends and maintain a competitive

advantage over rivals (4). You can discern your competitors' areas of proficiency and areas for

improvement through the examination of YouTube comments.

Implement data-driven strategies:

By providing you with valuable information, sentiment analysis can assist you in making well-informed decisions regarding your brand or product (1). You can gain insight into what your consumers like and dislike about your content by analyzing YouTube comments.

Strengthen the brand's reputation:

An examination of YouTube comments can provide valuable insights into the audience's perception of your brand. This can help you identify areas for improvement and bolster your brand's reputation.

Strengthen customer loyalty:

Foster a positive relationship with your audience and increase customer loyalty by responding to negative customer comments and concerns.

Strengthen product quality:

Sentiment analysis can help identify improvement opportunities for a product or service. By analyzing YouTube comments, you can gain insight into what your customers like and dislike about your product, allowing you to improve its quality and make necessary upgrades.

The goal behind this project:

1. Audience Feedback and Engagement: One of the primary goals is to gauge how the audience perceives and engages with the content. This helps content creators understand the impact of their videos and adapt their strategies accordingly.

2. Content Improvement: By analyzing sentiments, video creators can identify areas where their content can be improved. Positive feedback can be reinforced, and negative feedback can guide future changes.

3. Monitoring Brand or Product Sentiment: Companies regularly use sentiment analysis to evaluate how their brand or products are discussed in YouTube comments. This feedback can influence marketing strategies and product development.

4. Content Personalization: Sentiment analysis can be used to customize recommendations for viewers. YouTube's recommendation algorithms can leverage sentiment insights to suggest videos that align with users' preferences.

5. Community Management: For channel owners, managing comment sections effectively is critical. Sentiment analysis helps identify and moderate inappropriate or harmful comments swiftly.

6. Trend Analysis: Analyzing sentiments can reveal emerging trends or patterns within a particular niche or topic. This can be valuable for market research and content creation.

7. User Experience Enhancement: Understanding how users feel about the platform itself

helps in improving the overall user experience. Positive feedback can be reinforced, and

issues causing negative sentiments can be addressed.

8. Evaluating Public Opinion: Sentiment analysis can provide insights into public opinion

on social or political issues. It can help assess the impact of campaigns, messages, or

events discussed in videos.

9. Content Strategy and Planning: Video creators can use sentiment analysis to plan their

content strategy. They can identify which topics resonate positively with their audience

and create more content in that direction.

10. Identifying Influencers: Brands or organizations can identify influencers or key opinion

leaders within certain communities or niches by analyzing who receives positive

feedback and engagement.

11. Risk Mitigation: Brands or public figures can use sentiment analysis to identify potential

reputation risks or crises early and respond accordingly.

12. Emotion Analysis: In addition to sentiment (positive, negative, neutral), the analysis can

delve into specific emotions expressed in comments, providing a more nuanced

understanding of audience reactions.

13. Compliance and Regulation: Ensuring that content on YouTube complies with

community standards and legal regulations is essential. Sentiment analysis can assist in

content moderation and identifying violations.

14. Content Copyright: Detecting unauthorized use of copyrighted materials in video

comments can protect content creators and their intellectual property.

Specification/Features of the project

The tool collects YouTube comment data from specific video URLs, cleans and processes the text, and uses a sentiment analysis model to classify comments as positive, negative, or neutral, enabling analysis of the sentiments expressed by viewers. Users can access the analysis through a user-friendly website, allowing them to check sentiments in real time. The tool supports multiple languages and provides visual results. It can handle a large number of comments and is accessible to a wide audience. Future work may also include trend analysis and emotion detection, making it a valuable aid for social media managers, content creators, and researchers.

Application of the project in real life

The field of sentiment analysis of YouTube comments is continually evolving. One direction is extending sentiment analysis to additional languages in order to capture YouTube's diverse worldwide audience. Another is going beyond categorizing comments as positive, negative, or neutral and detecting the specific emotions expressed in them, such as happiness, anger, sadness, or excitement. This level of granularity offers a deeper understanding of viewer reactions. Additionally, custom sentiment dictionaries tailored to specific content domains are being investigated. All of these contribute to a more comprehensive understanding of user sentiment and engagement on the YouTube platform, benefiting content creators, marketers, and the platform itself.

Required tools

Software/Packages/Libraries:

1. Python: Python is the primary programming language used for building the sentiment analysis model. It offers a wide variety of libraries and tools for natural language processing and machine learning.

2. Scikit-learn: Scikit-learn is an important Python library for machine learning. It provides tools for data preprocessing, model training, and evaluation, making it suitable for implementing the Support Vector Classifier (SVC).

3. Natural Language Processing (NLP) Libraries: Libraries like NLTK (Natural Language Toolkit) and spaCy are essential for text preprocessing, tokenization, and text analysis.

4. Web Scraping Tools: Web scraping libraries such as BeautifulSoup or Scrapy can be used to fetch YouTube comments from specific URLs.

5. Web Development Tools: HTML, CSS, and JavaScript were used to create the website for model deployment. Frameworks like Flask are employed for web application development.

6. Version Control (e.g., Git): To track changes to the codebase and collaborate with team members.

7. IDE (Integrated Development Environment): Tools like PyCharm and Visual Studio Code for Python coding and development.

Brief Description:

● Python: As a versatile and powerful programming language, Python is the foundation for the sentiment analysis model. It is widely used in the fields of data science and machine learning.

● Scikit-learn: This library simplifies machine learning tasks, making it possible to build and train the Support Vector Classifier (SVC) model for sentiment analysis.

● SentimentIntensityAnalyzer: This is part of Python's Natural Language Toolkit (NLTK) package. It is used for sentiment analysis, the process of determining the sentiment or emotional tone of a piece of text and categorizing it as positive, negative, or neutral. The SentimentIntensityAnalyzer assesses the sentiment of text and assigns a sentiment score using a pre-trained model.

● NLP Libraries: NLTK and spaCy are used to handle text data. They help with preprocessing, tokenization, and other natural language processing tasks.

● Web Scraping Tools: BeautifulSoup or Scrapy make it possible to scrape YouTube comments from specific URLs, providing the data needed for analysis.

● Web Development Tools: HTML, CSS, JavaScript, and web frameworks like Flask or Django are used to create the user interface (website) for deploying the sentiment analysis model.

● Version Control: Git makes it possible to manage code versions, collaborate with others, and maintain code integrity.

● IDE: Integrated Development Environments such as PyCharm and Visual Studio Code offer a user-friendly coding environment.

Methodology and Implementation

Methodology:

In this section, we outline the steps taken to create the sentiment analysis model using the Support Vector Classifier (SVC).

Data Collection: We began by collecting a dataset of YouTube comments. This dataset is vital for training and testing our sentiment analysis model. The dataset included comments with associated sentiments: positive, negative, or neutral.

Dataset overview plus Citation of the dataset: The dataset comprises a wide range of

YouTube comments, encompassing a diverse array of video genres, subjects, and temporal

intervals. Diversity is of the utmost importance in order to guarantee that our sentiment analysis

model can effectively generalize, irrespective of the nature of the content it encounters. The

dataset was acquired from a reputable source recognised for its reputation for excellence and

dependability within the domain of sentiment analysis.

In order to ensure transparency and proper crediting of the data source, we incorporate the

necessary citation elements, such as publication information, the website or repository of the

source, or any other pertinent details pertaining to the origin of the dataset.

Data Preprocessing: Raw text data is often messy. We performed data preprocessing, which involved removing special characters, converting text to lowercase, and tokenizing the text into words. This step helps the model understand the text better. Data preprocessing was carried out using a collection of Python functions and libraries, including widely used natural language processing (NLP) libraries like spaCy and the Natural Language Toolkit (NLTK). These libraries provided the tools needed to perform operations such as tokenization and lowercasing.

Feature Extraction: To make the text data machine-readable, we used TF-IDF (Term Frequency-Inverse Document Frequency) to convert words into numerical values, producing a numerical representation of each comment. This stage is of considerable importance because it gives the SVC model numerical input on which it can perform sentiment analysis effectively.

Splitting the Data: We divided the dataset into two parts – a training set and a testing set. The

training set was used to teach our model, while the testing set was used to evaluate its

performance.
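A hedged sketch of the split is shown here, assuming scikit-learn's `train_test_split` and an 80/20 ratio. The toy comments and labels are hypothetical, and the `stratify` argument is an assumption added so that each class appears in both sets; the report does not say whether the split was stratified.

```python
from sklearn.model_selection import train_test_split

# Hypothetical toy data: 15 comments, 5 for each class (-1, 0, 1).
comments = [f"comment {i}" for i in range(15)]
labels = [-1, 0, 1] * 5

# Hold out 20% of the comments for testing; stratify keeps class proportions equal.
X_train, X_test, y_train, y_test = train_test_split(
    comments, labels, test_size=0.2, random_state=42, stratify=labels
)

print(len(X_train), len(X_test))
```

Fixing `random_state` makes the split reproducible, so that accuracy figures can be compared across runs and across models.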

Model Selection: We chose the Support Vector Classifier (SVC) as our machine learning model. SVC is known for its effectiveness in text classification tasks, including sentiment analysis, and it was selected because it matches the objectives and requirements of our sentiment analysis task. Our intention in selecting SVC is to construct a sentiment analysis system for YouTube comments that is both accurate and robust. The model provides the functionality required to categorize comments as positive, negative, or neutral in tone. Its efficacy is assessed further in the evaluation of model accuracy.

Model Training: We fed the preprocessed and transformed data into the SVC model. The model

learned to distinguish between positive, negative, and neutral sentiments by analyzing patterns in

the training data. The model undergoes training to identify and categorize comments into

positive, negative, and neutral sentiments. A label is allocated to each comment in accordance

with the features and patterns detected by the model throughout the training process.
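The training step can be sketched as follows, assuming a scikit-learn pipeline that chains TF-IDF and SVC so that raw comment strings can be passed in directly. The toy comments, the labels, and the linear kernel are assumptions made for illustration; the report does not state the SVC hyperparameters that were used.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Hypothetical labelled comments: 1 = positive, 0 = neutral, -1 = negative.
train_comments = [
    "love this video", "great content thanks", "amazing work",
    "video about cooking", "uploaded yesterday", "part two of series",
    "worst video ever", "terrible sound", "hate this channel",
]
train_labels = [1, 1, 1, 0, 0, 0, -1, -1, -1]

# Bundle vectorizer and classifier so the same transformation is applied at predict time.
model = make_pipeline(TfidfVectorizer(), SVC(kernel="linear"))
model.fit(train_comments, train_labels)

# Classify unseen comments; each prediction is one of -1, 0, or 1.
predictions = model.predict(["love the content", "terrible video"])
print(list(predictions))
```

Keeping the vectorizer and the classifier in one object is a design convenience: a deployed web page only needs to load a single fitted pipeline to label new comments.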

Model Accuracy and evaluation: The performance and practical utility of our sentiment

analysis model, which employs the Support Vector Classifier (SVC), are heavily reliant on the

accuracy and efficacy of the model when examining sentiments in YouTube remarks.

Performance Metrics Indicators:

In assessing the efficacy of the model, several performance metrics are taken into account, including the following:

Accuracy: This metric assesses the overall correctness of the predictions made by the model. It is the proportion of correctly classified comments relative to the total number of comments in the testing dataset.

Precision: This metric evaluates how reliable the model's predictions are for a given class (positive, negative, or neutral). It is the proportion of correct predictions of that class among all predictions of that class.

Recall: This metric assesses the model's ability to find all occurrences of a given class. It is the proportion of correct predictions of that class among all comments that actually belong to that class.

F1 score: The F1 score, which is calculated as the harmonic mean of precision and recall, offers a balanced evaluation of the performance of the model.
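As a worked illustration of these definitions, the sketch below computes precision, recall, and F1 for a single class from counts of true positives, false positives, and false negatives. The counts are made up for the example and are not results from this project.

```python
def precision(tp: int, fp: int) -> float:
    """Share of predictions of a class that were correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of actual members of a class that were found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts for one class: 80 correct, 20 false alarms, 10 misses.
p = precision(tp=80, fp=20)
r = recall(tp=80, fn=10)
f1 = f1_score(p, r)
print(round(p, 3), round(r, 3), round(f1, 3))
```

Because the F1 score is a harmonic mean, it is pulled toward the lower of the two values, so a class with high precision but low recall still receives a modest F1.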

Implementation

Model Deployment:

With the model successfully trained, we proceeded to deploy it on a webpage that we created.

This webpage serves as an interface for users to input a YouTube URL, and it provides the

sentiment analysis of the comments found on that URL.

User Interaction: Users can visit the webpage and enter the YouTube URL of their choice. The

model will then process the comments associated with that URL.
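Before comments can be fetched, the video has to be identified from the URL the user entered. The report does not describe how this is done, so the following is only a sketch using the Python standard library; the function name `extract_video_id` and the set of accepted hosts are assumptions.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Hosts accepted by this sketch (an assumption, not an exhaustive list).
YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "m.youtube.com"}


def extract_video_id(url: str) -> Optional[str]:
    """Return the video ID from a YouTube watch or short URL, or None."""
    parsed = urlparse(url.strip())
    host = (parsed.hostname or "").lower()
    if host == "youtu.be":
        # Short links carry the ID in the path: https://youtu.be/<id>
        return parsed.path.lstrip("/") or None
    if host in YOUTUBE_HOSTS and parsed.path == "/watch":
        # Standard links carry the ID in the "v" query parameter.
        return parse_qs(parsed.query).get("v", [None])[0]
    return None


print(extract_video_id("https://www.youtube.com/watch?v=abc123XYZ_-"))
```

Returning `None` for anything that is not a recognizable video link lets the web page show an error message instead of attempting to scrape an arbitrary site.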

Scraped Comments Sentiment Analysis: The SVC model analyzes the scraped YouTube

comments using the features and patterns it has learned during training. It classifies each

comment into one of the three sentiment categories: positive, negative, or neutral.

Preprocess the comments: HTML tags, special characters, and hyperlinks commonly found in YouTube comments are removed through text cleaning. The text is then split into individual words, or tokens, so that they can be analyzed separately. Common words such as "and," "the," or "in" that do not carry significant meaning are then eliminated. This establishes the groundwork for precise sentiment categorization. The procedure encompasses a number of tasks, such as removing stop words, stemming or lemmatization, tokenization, lowercasing, and handling usernames and emoticons. Furthermore, when conducting sentiment analysis it is important to account for subtleties such as cynicism and sarcasm, in addition to correcting spelling errors. Because comments may contain text in multiple languages, a versatile preprocessing system is needed. An effective preprocessing pipeline substantially improves the precision and dependability of sentiment analysis models for YouTube comments, enabling a deeper understanding of the sentiments and opinions of the audience.
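The cleaning steps described above can be sketched with the standard library alone. The regular expressions and the very small stop-word set below are illustrative assumptions; a real pipeline would more likely rely on NLTK's stop-word list and lemmatizer.

```python
import html
import re

# Tiny illustrative stop-word set; NLTK provides a much fuller list.
STOP_WORDS = {"a", "and", "in", "is", "of", "the", "to"}


def clean_comment(text: str) -> list:
    """Strip markup, links, and mentions, then return lowercase tokens."""
    text = html.unescape(text)                          # decode entities such as &amp;
    text = re.sub(r"<[^>]+>", " ", text)                # remove HTML tags
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)  # remove hyperlinks
    text = re.sub(r"@\w+", " ", text)                   # remove @usernames
    text = re.sub(r"[^a-z\s]", " ", text.lower())       # keep letters and spaces only
    return [token for token in text.split() if token not in STOP_WORDS]


tokens = clean_comment("<b>Great</b> video, @user! See https://example.com and the <br>rest")
print(tokens)
```

Note that this sketch discards emoticons and non-Latin text along with punctuation, which would lose information for multilingual comments; handling those cases needs additional rules.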

Displaying Results: The sentiments assigned to the comments are displayed on the webpage,

allowing users to see an overview of the sentiments expressed in the YouTube comments of their

selected URL.
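One way to produce such an overview is to count the predicted labels and convert the counts to percentages. The sketch below assumes the labels are encoded as 1, 0, and -1, as in the appendix code; the function name `summarize_sentiments` is hypothetical.

```python
from collections import Counter

# Label encoding assumed from the appendix: 1 positive, 0 neutral, -1 negative.
LABEL_NAMES = {1: "positive", 0: "neutral", -1: "negative"}


def summarize_sentiments(predictions):
    """Return the count and percentage of comments in each sentiment class."""
    counts = Counter(predictions)
    total = len(predictions)
    summary = {}
    for value, name in LABEL_NAMES.items():
        count = counts.get(value, 0)
        percent = round(100 * count / total, 1) if total else 0.0
        summary[name] = {"count": count, "percent": percent}
    return summary


summary = summarize_sentiments([1, 1, 0, -1])
print(summary)
```

A dictionary of this shape can be passed straight to a web template or serialized as JSON for a chart on the results page.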

Model Performance: We evaluated the model's performance on the deployed webpage,

ensuring that it effectively provided sentiment analysis results for various YouTube URLs.

User Interaction: We encourage users to explore and interact with the webpage, entering

different YouTube URLs to analyze the comments and understand the sentiments of the viewers.

Design Implementation

Flowchart of the project

Results Analysis and Evaluation

Model: SVC

Accuracy: 0.8704

| Sentiment | F1 score | Precision | Recall |
|---|---|---|---|
| Negative | 0.73 | 0.85 | 0.63 |
| Neutral | 0.89 | 0.81 | 0.99 |
| Positive | 0.91 | 0.93 | 0.89 |

Model: Naive Bayes

Accuracy: 0.7111

| Sentiment | F1 score | Precision | Recall |
|---|---|---|---|
| Negative | 0.65 | 0.86 | 0.52 |
| Neutral | 0.64 | 0.78 | 0.54 |
| Positive | 0.77 | 0.66 | 0.92 |

Table 1: Comparing accuracy and classification scores between models

The Support Vector Classifier (SVC) outperforms the Naive Bayes model in overall accuracy (about 0.87 versus 0.7111). For class -1.0 (negative), the SVC model achieves a higher recall (0.63 versus 0.52) and F1-score (0.73 versus 0.65), while its precision (0.85) is essentially level with that of the Naive Bayes model (0.86).

The SVC model does a substantially better job of identifying class 0.0 (neutral), as evidenced by its significantly higher recall (0.99 versus 0.54) and F1-score (0.89 versus 0.64). For class 1.0 (positive), the SVC model has a higher F1-score (0.91 versus 0.77) and precision (0.93 versus 0.66), while the recall of the Naive Bayes model is marginally higher (0.92 versus 0.89).

SVC optimizer

Naive Bayes Optimizer

Since the Multinomial Naive Bayes model is a multi-class classifier, we do not

directly employ ROC and AUC metrics.

Future work

As our model worked for English text it is also possible to train the model to detect emotions in

Bangla text or others. Detecting emotions in Bangla texts using machine learning techniques has

gained significant attention in recent years due to the increasing demand for sentiment analysis

and natural language processing in various applications. Hence, our team plans to work on

sentiment analysis in the Bangla language.

Conclusion

The sentiment analysis of YouTube remarks is an invaluable instrument for comprehending the

sentiments, viewpoints, and level of involvement exhibited by the extensive YouTube

community. By virtue of its potential implementations in content moderation, user engagement,

and content optimisation, it provides content creators, marketers, and the platform as a whole

with a multitude of advantages. The progression of researchers towards more sophisticated

methodologies augurs well for the future of sentiment analysis on YouTube comments. Further

insights will be possible with the capability of managing multilingual data, discerning subtle

emotions, identifying cynicism and irony, and adjusting to the constantly changing comment

landscape. Furthermore, the integration of transparent model explanations and the creation of

personalized sentiment dictionaries will enhance the precision of analyses and improve the

overall user experience. In the ever-expanding YouTube ecosystem, sentiment analysis continues

to be an indispensable instrument for comprehending and enhancing the platform.

Contribution

● Najmun Nahar Naj: Front-end work using basic HTML and CSS, login operation using Google OAuth 2.0 and the google-auth library for Python, Abstract, Methodology and Implementation, Results Analysis and Evaluation.

● Abdullah Al Sayem: Machine Learning, Referencing, YouTube Scraping, Introduction, Flowchart, Implementation, Possible Future Work, Results Analysis and Evaluation.

● Asman Farhad: Machine Learning, Data Preprocessing, YouTube Comment Preprocessing, Literature Review, Conclusion, Approval, Acknowledgement, Declaration Page, Abstract.

References

Akhtar, M. M. (2019). Sentiment Analysis on Youtube Comments: A brief study. ResearchGate |

Find and share research.

Asghar, M. Z., Ahmad, S., Marwat, A., & Kundi, F. M. (n.d.). Sentiment Analysis on YouTube:

A Brief Survey. Arxiv

Kavitha, K., Shetty, A., Abreo, B., D’Souza, A., & Kondana, A. (2020). Analysis and

classification of user comments on YouTube videos. Procedia Computer Science, 177, 593-598.

Lorentz, I., & Singh, G. (2021). Sentiment Analysis on Youtube Comments to Predict Youtube Video Like Proportions. Degree Project in Technology.

Singh, R. (2021). YouTube Comments Sentiment Analysis. ResearchGate.

Yafooz, W. M., & Alhujaili, R. F. (2021). Sentiment Analysis for Youtube Videos with User Comments: Review. IEEE Xplore.

Alhujaili, R. F., & Yafooz, W. (2021). Sentiment analysis for YouTube videos with user

comments: Review. 2021 International Conference on Artificial Intelligence and Smart Systems

(ICAIS). https://doi.org/10.1109/icais50930.2021.9396049

Deori, M., Kumar, V., & Verma, M. K. (2021). Analysis of YouTube video contents on Koha

and DSpace, and sentiment analysis of viewers' comments. Library Hi Tech, 41(3), 711-728.

https://doi.org/10.1108/lht-12-2020-0323

Khomsah, S. (2021). Sentiment analysis on YouTube comments using Word2Vec and random

forest. Telematika, 18(1), 61. https://doi.org/10.31315/telematika.v18i1.4493

Putri, A. M., Basya, D. A., Ardiyanto, M. T., & Sarathan, I. (2021). Sentiment analysis of

YouTube video comments with the topic of Starlink mission using long short term memory.

2021 International Conference on Artificial Intelligence and Big Data Analytics.

https://doi.org/10.1109/icaibda53487.2021.9689754

Rout, L., Acharya, M. K., & Acharya, S. (2023). Content analysis of YouTube videos regarding

natural disasters in India and analysis of users sentiment through viewer comments.

https://doi.org/10.21203/rs.3.rs-2384137/v1

Appendix

#DataPreprocessing

import pandas as pd

import matplotlib.pyplot as plt

from nltk.sentiment.vader import SentimentIntensityAnalyzer import nltk

from nltk.corpus import stopwords

from nltk.stem import WordNetLemmatizer

nltk.download('punkt')

nltk.download('wordnet')

nltk.download('stopwords')

nltk.download('vader_lexicon')

from google.colab import drive

drive.mount('/content/drive')

data = pd.read_csv('/content/drive/MyDrive/sentiment_analysis/UScomments (Preprocessed).csv')

data.head()

def preprocess_text(text):
    # Clean string comments; non-string values (e.g. NaN) are returned unchanged
    if isinstance(text, str):
        text = text.lower()
        # Strip punctuation, then digits
        text = re.sub(r'[^\w\s]', '', text)
        text = re.sub(r'\d+', '', text)
        # Drop English stop words
        stop_words = set(stopwords.words('english'))
        words = text.split()
        words = [word for word in words if word not in stop_words]
        # Reduce each remaining word to its WordNet lemma
        lemmatizer = WordNetLemmatizer()
        words = [lemmatizer.lemmatize(word) for word in words]
        text = ' '.join(words)
    return text

data['comment_text'] = data['comment_text'].apply(preprocess_text)
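As a rough illustration of what the cleaning steps do to a single comment, the following is a minimal standard-library sketch. It assumes a tiny, hypothetical stop-word set (`STOP_WORDS`) and leaves out lemmatization, so it is not equivalent to the NLTK-based `preprocess_text` in this appendix; the helper name `clean_comment` and the sample comment are illustrative only.

```python
import re

# Hypothetical, deliberately tiny stop-word set; the appendix code uses
# NLTK's full English list instead.
STOP_WORDS = {"the", "is", "a", "this", "and", "i"}


def clean_comment(text):
    """Lowercase, strip punctuation and digits, then drop stop words."""
    if not isinstance(text, str):
        return None
    text = text.lower()
    text = re.sub(r"[^\w\s]", "", text)   # remove punctuation
    text = re.sub(r"\d+", "", text)       # remove digits
    words = [w for w in text.split() if w not in STOP_WORDS]
    return " ".join(words)


print(clean_comment("This is the BEST video of 2023!!!"))  # best video of
```

Stop-word removal shortens the comment considerably, which is worth keeping in mind because VADER also uses punctuation and capitalization as intensity cues.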

sid = SentimentIntensityAnalyzer()

def get_sentiment_scores(text):
    # Return VADER's compound score in [-1, 1], or None for non-string input
    if isinstance(text, str):
        sentiment_scores = sid.polarity_scores(text)
        return sentiment_scores['compound']
    else:
        return None

data['sentiment_polarity'] = data['comment_text'].apply(get_sentiment_scores)

data.head(10)

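# Collapse the compound score into three classes: 1 (positive), 0 (neutral), -1 (negative).
# Scores equal to 0 already represent the neutral class, so only the signs are mapped.
# .loc is used instead of chained indexing, which pandas may apply to a copy.
data.loc[data['sentiment_polarity'] > 0, 'sentiment_polarity'] = 1
data.loc[data['sentiment_polarity'] < 0, 'sentiment_polarity'] = -1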
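The labelling step above assigns classes purely by the sign of the compound score, whereas `Main.py` later in this appendix uses cut-offs of 0.05 and -0.05. The sketch below puts the two rules side by side on a few made-up scores; the function names `sign_label` and `threshold_label` are hypothetical and the scores are not taken from the dataset.

```python
def sign_label(compound):
    """Three-class label by sign, mirroring the notebook's labelling step."""
    if compound is None:
        return None
    if compound > 0:
        return 1
    if compound < 0:
        return -1
    return 0


def threshold_label(compound, cutoff=0.05):
    """Three-class label using the +/- cutoff applied in Main.py."""
    if compound is None:
        return None
    if compound >= cutoff:
        return 1
    if compound <= -cutoff:
        return -1
    return 0


# Made-up compound scores, for illustration only.
for score in (0.62, 0.03, 0.0, -0.04, -0.51):
    print(score, sign_label(score), threshold_label(score))
```

Weakly polar scores such as 0.03 and -0.04 are labelled positive and negative by the sign rule but neutral by the cut-off rule, so the training labels and the web application do not necessarily agree on borderline comments.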

%pip install scikit-learn==1.3.2

#Model_Building

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.svm import SVC

from sklearn.metrics import accuracy_score, classification_report

data = data.sample(100000)

data = data.dropna(subset=['sentiment_polarity'])

X = data['comment_text'].fillna('')

y = data['sentiment_polarity']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2,

random_state=42)

tfidf_vectorizer = TfidfVectorizer(max_features=1000)

X_train_tfidf = tfidf_vectorizer.fit_transform(X_train)

X_test_tfidf = tfidf_vectorizer.transform(X_test)

model = SVC(kernel='linear')

model.fit(X_train_tfidf, y_train)

y_pred = model.predict(X_test_tfidf)

import matplotlib.pyplot as plt

import numpy as np

#Data visualization

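# Note: these accuracy values are hardcoded, not computed from the model above
# (SVC.fit does not report per-epoch accuracy).
accuracy = [0.75, 0.82, 0.88, 0.92, 0.94]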

epochs = [1, 2, 3, 4, 5]

plt.figure(figsize=(8, 6))

plt.plot(epochs, accuracy, marker='o', linestyle='-')

plt.title('Accuracy vs. Epoch')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.grid(True)

plt.show()

accuracy = accuracy_score(y_test, y_pred)

report = classification_report(y_test, y_pred)

print(f'Accuracy: {accuracy}')

print(report)

import matplotlib.pyplot as plt

from sklearn.metrics import roc_curve

y_test_binary = (y_test > 0).astype(int)

y_pred_binary = (y_pred > 0).astype(int)

fpr, tpr, _ = roc_curve(y_test_binary, y_pred_binary)

plt.figure()

plt.plot(fpr, tpr, color='darkorange', lw=2, label='ROC curve')

plt.plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Receiver Operating Characteristic (ROC) Curve')

plt.legend(loc="lower right")

plt.show()

import matplotlib.pyplot as plt

from sklearn.metrics import roc_auc_score

y_test_binary = (y_test > 0).astype(int)

y_pred_binary = (y_pred > 0).astype(int)

roc_auc = roc_auc_score(y_test_binary, y_pred_binary)

plt.figure()

plt.plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')

plt.plot(fpr, tpr, color='darkorange', lw=2, label='ROC curve (area = %0.2f)' % roc_auc)

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Area Under the Curve (AUC)')

plt.legend(loc="lower right")

plt.show()

import matplotlib.pyplot as plt

from sklearn.metrics import precision_recall_curve, average_precision_score

y_test_binary = (y_test > 0).astype(int)

y_pred_binary = (y_pred > 0).astype(int)

precision, recall, thresholds = precision_recall_curve(y_test_binary,

y_pred_binary)

average_precision = average_precision_score(y_test_binary, y_pred_binary)

plt.figure()

plt.step(recall, precision, color='b', alpha=0.2, where='post')

plt.fill_between(recall, precision, step='post', alpha=0.2, color='b')

plt.xlabel('Recall')

plt.ylabel('Precision')

plt.ylim([0.0, 1.05])

plt.xlim([0.0, 1.0])

plt.title('Precision-Recall Curve: AP={0:0.2f}'.format(average_precision))

plt.show()

from sklearn.naive_bayes import MultinomialNB

model_INB = MultinomialNB()

model_INB.fit(X_train_tfidf, y_train)

y_pred_INB = model_INB.predict(X_test_tfidf)

accuracy = accuracy_score(y_test, y_pred_INB)

report = classification_report(y_test, y_pred_INB)

print(f'Accuracy: {accuracy}')

print(report)

import matplotlib.pyplot as plt

from sklearn.metrics import classification_report, confusion_matrix

y_test_binary = (y_test > 0).astype(int)

y_pred_INB_binary = (y_pred_INB > 0).astype(int)

report = classification_report(y_test_binary, y_pred_INB_binary)

print("Classification Report:\n", report)

cm = confusion_matrix(y_test_binary, y_pred_INB_binary)

plt.figure(figsize=(8, 6))

plt.imshow(cm, interpolation='nearest', cmap=plt.cm.Blues)

plt.title('Confusion Matrix')

plt.colorbar()

plt.xticks([0, 1], ['Negative', 'Positive'])

plt.yticks([0, 1], ['Negative', 'Positive'])

plt.xlabel('Predicted')

plt.ylabel('True')

plt.show()

import matplotlib.pyplot as plt

from sklearn.metrics import precision_recall_curve

y_test_binary = (y_test > 0).astype(int)

y_pred_INB_binary = (y_pred_INB > 0).astype(int)

precision, recall, thresholds = precision_recall_curve(y_test_binary,

y_pred_INB_binary)

plt.figure()

plt.step(recall, precision, color='b', alpha=0.2, where='post')

plt.fill_between(recall, precision, step='post', alpha=0.2, color='b')

plt.xlabel('Recall')

plt.ylabel('Precision')

plt.ylim([0.0, 1.05])

plt.xlim([0.0, 1.0])

plt.title('Precision-Recall Curve')

plt.show()
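The ROC and precision-recall plots above are computed from hard 0/1 predictions, which yields only a single operating point between the two corners of the plot. A fuller curve needs continuous scores, for example the output of scikit-learn's `SVC.decision_function`; how that output would be reduced to one positive-class score for the three-class model here is left open and would be an assumption. The pure-Python sketch below, with the hypothetical helper `roc_points` and made-up scores, shows the difference in the number of points obtained.

```python
def roc_points(y_true, scores):
    """Return (fpr, tpr) pairs obtained by sweeping a threshold over the scores."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = [(0.0, 0.0)]
    # Lower the threshold one distinct score at a time, highest first.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


# Made-up ground truth and decision scores, for illustration only.
y_true = [1, 1, 0, 1, 0, 0]
scores = [2.1, 0.8, 0.3, -0.2, -0.9, -1.5]
hard = [1 if s > 0 else 0 for s in scores]  # thresholded predictions

print(len(roc_points(y_true, hard)))    # 3
print(len(roc_points(y_true, scores)))  # 7
```

With thresholded predictions the "curve" is two straight segments through one point, so the reported area reflects a single threshold and not the ranking quality of the classifier.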

#Main.py:

from flask import Flask, render_template, request

from nltk.sentiment.vader import SentimentIntensityAnalyzer

import nltk

from selenium import webdriver

from selenium.webdriver.firefox.service import Service

from webdriver_manager.firefox import GeckoDriverManager

from bs4 import BeautifulSoup

import time

import joblib

import re

from nltk import WordNetLemmatizer

from nltk.corpus import stopwords

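# preprocess_text below also needs the stop-word list and WordNet data
nltk.download('vader_lexicon')
nltk.download('stopwords')
nltk.download('wordnet')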

app = Flask(__name__)

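# Object loaded from disk; it is not used below, because the route scores
# comments with VADER through `model`.
sid = joblib.load('sentimentAn.pkl')

# VADER analyzer used for the per-comment scores
model = SentimentIntensityAnalyzer()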

def scrap_comment(url):
    # Launch headless Firefox; webdriver_manager fetches geckodriver if needed
    option = webdriver.FirefoxOptions()
    option.add_argument("--headless")
    service = Service(GeckoDriverManager().install())
    driver = webdriver.Firefox(service=service, options=option)
    driver.get(url)
    time.sleep(5)
    prev_h = 0
    # Scroll down in 300 px steps so that more comments are loaded
    while True:
        height = driver.execute_script("""
            function getActualHeight() {
                return Math.max(
                    Math.max(document.body.scrollHeight, document.documentElement.scrollHeight),
                    Math.max(document.body.offsetHeight, document.documentElement.offsetHeight),
                    Math.max(document.body.clientHeight, document.documentElement.clientHeight)
                );
            }
            return getActualHeight();
        """)
        # window.scrollTo takes (x, y): keep x at 0 and advance y
        driver.execute_script(f"window.scrollTo(0, {prev_h + 300})")
        # fix the time sleep value according to your network connection
        time.sleep(1)
        prev_h += 300
        if prev_h >= height:
            break
    soup = BeautifulSoup(driver.page_source, 'html.parser')
    driver.quit()
    # The video title is extracted but not returned
    title_text_div = soup.select_one('#container h1')
    title = title_text_div and title_text_div.text
    comment_div = soup.select("#content #content-text")
    comments = [x.text for x in comment_div]
    # print(comments)
    return comments

def preprocess_text(text):
    # Same cleaning steps as in the notebook: lowercase, strip punctuation and
    # digits, drop stop words, lemmatize
    if isinstance(text, str):
        text = text.lower()
        text = re.sub(r'[^\w\s]', '', text)
        text = re.sub(r'\d+', '', text)
        stop_words = set(stopwords.words('english'))
        words = text.split()
        words = [word for word in words if word not in stop_words]
        lemmatizer = WordNetLemmatizer()
        words = [lemmatizer.lemmatize(word) for word in words]
        text = ' '.join(words)
        return text
    else:
        return None

@app.route('/', methods=["GET", "POST"])
def main():
    if request.method == "POST":
        inp = request.form.get("yt_link")
        comments = scrap_comment(inp)
        if comments is not None and isinstance(comments, list):
            positive_count = 0
            negative_count = 0
            neutral_count = 0
            for comment in comments:
                processed_text = preprocess_text(comment)
                score = model.polarity_scores(processed_text)
                # Cut-offs: >= 0.05 positive, <= -0.05 negative, otherwise neutral
                if score["compound"] >= 0.05:
                    positive_count += 1
                elif score["compound"] <= -0.05:
                    negative_count += 1
                else:
                    neutral_count += 1
            return render_template('home.html',
                                   positive=positive_count,
                                   negative=negative_count,
                                   neutral=neutral_count)
        else:
            return render_template(
                'home.html',
                error_message="Unable to retrieve comments. Please check the YouTube link.")
    return render_template('home.html')


if __name__ == '__main__':
    app.run(debug=True)
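The route above passes whatever the user submits straight to the scraper, so a malformed link is only noticed after a browser has been started. One possible guard, sketched here with the standard library only, is to check the link first. The helper name `is_youtube_video_url` and the accepted host list are assumptions made for illustration and are not part of the project code.

```python
from urllib.parse import urlparse, parse_qs

# Assumed set of acceptable hosts; adjust as needed.
YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "m.youtube.com", "youtu.be"}


def is_youtube_video_url(url):
    """Return True if the URL looks like a link to a single YouTube video."""
    if not isinstance(url, str):
        return False
    parsed = urlparse(url.strip())
    if parsed.scheme not in ("http", "https"):
        return False
    if parsed.hostname not in YOUTUBE_HOSTS:
        return False
    if parsed.hostname == "youtu.be":
        # Short links carry the video id in the path, e.g. /abc123
        return len(parsed.path) > 1
    # Regular links carry the video id in the "v" query parameter
    return parsed.path == "/watch" and "v" in parse_qs(parsed.query)


print(is_youtube_video_url("https://www.youtube.com/watch?v=abc123"))  # True
print(is_youtube_video_url("https://example.com/watch?v=abc123"))      # False
```

In the route, such a check could run before `scrap_comment(inp)` and return the existing error message when it fails.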

#Home.html:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<link href="https://cdn.jsdelivr.net/npm/bootstrap@5.0.2/dist/css/bootstrap.min.css" rel="stylesheet" integrity="sha384-EVSTQN3/azprG1Anm3QDgpJLIm9Nao0Yz1ztcQTwFspd3yD65VohhpuuCOmLASjC" crossorigin="anonymous">

<title>Sentiment</title>

<link rel="stylesheet" type="text/css" href="{{ url_for('static',

filename='styles/style.css') }}">

</head>

<body>

<div class="container">

<div class="container-lg">

<h1>SENTIMENT ANALYSIS</h1>

<form method="POST" class="form">

<label for="yt_link">YouTube Link</label>

<input type="text" name="yt_link" placeholder="Enter YouTube Link"

id="yt_link">

<input type="submit" name="submit" id="btn" class="btn btn-primary">

</form>

{% if positive is defined and negative is defined and neutral is defined %}

<h2>Results:</h2>

<p>Positive Comments: {{ positive }}</p>

<p>Negative Comments: {{ negative }}</p>

<p>Neutral Comments: {{ neutral }}</p>

{% endif %}

{% if error_message %}

<p>{{ error_message }}</p>

{% endif %}

</div>

</div>

</body>

</html>

#style.css:

body {

background-color: rgb(3, 65, 65);

}

.container-lg {

width: 80%;

height: 550px;

color: rgb(19, 19, 19);

background-color: rgb(247, 247, 247);

border-radius: 20px;

margin: 50px auto auto;

}

.heading {

text-align: center;

padding-top: 50px;

margin-top: 50px;

font-weight: bold;

}

#yt_link {

display: flex;

height: 80px;

width: 600px;

margin-left: 135px;

margin-top: 30px;

}

#btn {

margin-top: 30px;

height: 50px;

width: 150px;

}

.form {

text-align: center;

}

.output {

text-align: center;

margin-top: 50px;

font-size: 50px;

}

#Login:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Blue Theme Login Page</title>

<link rel="stylesheet" type="text/css" href="{{ url_for('static',

filename='styles.css') }}">

<style>

/* Additional inline CSS for centering */

body {

display: flex;

flex-direction: column;

align-items: center;

justify-content: center;

height: 100vh;

margin: 0;

background-color: #abefee; /* Blue background color */

}

</style>

</head>

<body>

<div class="logo">

<img src="{{ url_for('static', filename='logo.gif') }}" alt="Logo"

width="200" height="200">

</div>

<div class="login-section">

<h1>Login</h1>

<form>

<label for="username">Username:</label>

<input type="text" id="username" name="username" required>

<br>

<label for="password">Password:</label>

<input type="password" id="password" name="password" required>

<br>

<button type="submit">Login</button>

</form>

<form>

<br>

<!-- <a href="/login"><button>Login with Google</button></a> -->

<a href="/login">Login with Google</a>

</form>

</div>

</body>

</html>

{"web":{"client_id":"598173014578dr4q4abr7mc30slkc6bsm3hq493ptcvb.apps.googleusercontent.com",

"project_id":"bionic-baton-399208",

"auth_uri":"https://accounts.google.com/o/oauth2/auth",

"token_uri":"https://oauth2.googleapis.com/token",

"auth_provider_x509_cert_url":"https://www.googleapis.com/oauth2/v1/certs",

"client_secret":"<redacted>",

"redirect_uris":["http://127.0.0.1:5000/callback"]}}