Numerical Linear Algebra Solutions Manual for Instructors

advertisement

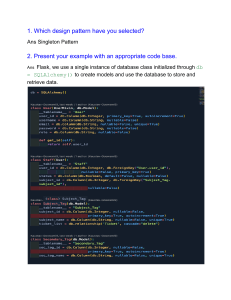

Numerical Linear Algebra

Solutions Manual for Instructors

Grégoire Allaire

Sidi Mahmoud Kaber

Contents

2

Exercises of chapter 2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3

3

Exercises of chapter 3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

4

Exercises of chapter 4 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

5

Exercises of chapter 5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

6

Exercises of chapter 6 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

7

Exercises of chapter 7 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

8

Exercises of chapter 8 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

9

Exercises of chapter 9 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

10

Exercises of chapter 10 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

Preface

This is the solution manual for the exercises of our book, “Numerical linear

algebra”.

G.A., S.M.K.

Paris, July 13, 2007.

2

Exercises of chapter 2

Solution of Exercise 2.3 The determinant of A is rigorously equal to e.

However, when this number is very small, Matlab may find a wrong value

because of rounding errors. For instance with e = 10−20 we obtain

>> e=1.e-20;n=5;p=NonsingularMat(n);

>> A=p*diag([ones(n-1,1); e])*inv(p);

>> det(A)

ans =

-1.5086e-14

Recall that the constant eps contains the floating point relative accuracy of

Matlab

>> eps

ans =

2.2204e-16

Solution of Exercise 2.4 The rank is equal to 2. We note that the multiplication of A by a nonsingular matrix does not change its rank. Assume that

A has size m × n, and let C be a non singular matrix of size m × m. Since C

is non singular, the vector spaces Im (A) and Im (CA) are in one-to-one correspondence and have thus the same dimension. Indeed, to each x ∈ Im (A),

we map Cx ∈ Im (CA) and to each x ∈ Im (CA), we map C −1 x ∈ Im (A).

Solution of Exercise 2.5 We build a matrix NR of size 2 × 10 containing

different values of n and the rank of the corresponding matrix A.

1. >>

>>

>>

>>

>>

NR

NR=[];

for n=1:10

A=rand(8,n)*rand(n,6);NR =[NR [n;rank(A)]];

end;

NR

=

1

2

3

4

5

6

7

8

9

10

4

CHAPTER 2.

EXERCISES OF CHAPTER 2

1

2

3

4

5

6

6

6

6

6

We remark that the rank of A is equal to n for n ≤ 6, and equal to 6 for

n ≥ 6.

2. With matrix BinChanceMat, we obtain

>> NR

NR =

1

1

2

2

3

3

4

4

5

4

6

5

7

6

8

6

9

6

10

6

This time around, we observe that the rank of A is always less than or

equal to 6.

3. Let us show that rk (AB) ≤ min( rk (A), rk (B)). Assume the dimensions

of A and B are compatible for the product AB to make sense. Since

Im (AB) ⊂ Im (A), we have rk (AB) ≤ rk (A). Besides, according to the

rank theorem

dim Ker B + rk B = dim Ker (AB) + rk (AB).

Since Ker (B) ⊂ Ker (AB), we have the upper bound rk (AB) ≤ rk (B),

thereby proving the result.

Matrices m × n defined by function rand have a great probability of being

of maximal rank, that is, of rank equal to min(m, n). With the random

function BinChanceMat, this probability is smaller, which explains the

observed results.

P

Solution of Exercise 2.6 Finding the rank of ni=1 ui uti .

1. Case when A is defined using the function rand. Using the following instructions

>> n=5;A=zeros(n,n);r=5;

>> for i=1:r

u=rand(n,1);A=A+u*u’;

end;

>> rank(A)

ans =

5

we “almost always” obtain that the rank of A is equal to r.

2. Case when A is defined using the function BinChanceMat. Using the following instructions

>> A=zeros(n,n);

>> for i=1:r

u=BinChanceMat(n,1);A=A+u*u’;

end;

rank(A)

ans =

4

5

we merely obtain that the rank of A is less than or equal to r.

3. Explanation. For any x ∈ Rn , we have

Ax =

r

X

(ui uti )x =

i=1

r

X

i=1

hx, ui iui .

So the vectors ui span the image of A and, consequently, the rank of A is

always less than or equal to r. If these vectors are linearly independent in

Rn (which is very likely with rand and more unlikely with BinChanceMat),

then they form a basis of the image of A. The dimension of this space is

therefore r.

Solution of Exercise 2.8

1. >> A=[1:3;4:6;7:9;10:12]; det(A’*A)

ans =

0

>> B=[-1 2 3;4:6;7:9;10:12]; det(B’*B)

ans =

216

We remark that the matrix At A is singular, while B t B is not.

2. The rank of A is equal to 2 and the rank of B is equal to 3.

3. Let X be a matrix of size m × n, with m ≥ n. The rank theorem implies

that dim Ker X + rk X = n. The following alternative holds true:

• either the rank of X is maximal, that is, equal to n, which is equivalent

to say that X is injective ( Ker X = {0}) and thus the square matrix

X ∗ X is also injective, hence non singular since

X ∗ Xx = 0 =⇒ 0 = hX ∗ Xx, xi = kXxk2 =⇒ Xx = 0 =⇒ x = 0,

• or rk (X) < n and thus X is not injective, and neither is the square

matrix X ∗ X.

4. This time, both matrices AAt and BB t are singular. Indeed, for a matrix

X of size m × n, with m < n, even if its rank is maximal, that is, equal

to m, we have dim Ker X = n − m and X is never injective. Thus the

square matrix X ∗ X is always singular.

Solution of Exercise 2.9 Rank of A + uut .

>> A=MatRank(20,20,17); Q=null(A’);

>> size(Q)

ans =

20

3

>> u=Q(:,2); rank(A+u*u’)

ans =

18

6

CHAPTER 2.

EXERCISES OF CHAPTER 2

We notice that the rank of the matrix A + uut is equal to r + 1. Indeed for

any x ∈ Rn , we have

(A + uut )x = Ax + uut x = Ax + hx, uiu,

and since u ∈ Ker (At ) = ( Im A)⊥ , hx, uiu is orthogonal to Im A and therefore the dimension of Im (A + uut ) is equal to r + 1.

Solution of Exercise 2.13 The function triu returns the upper triangular part of a matrix. Similarly, the lower triangular part is obtained by the

function tril.

>> n=5;A=rand(n,n);A=triu(A)-diag(diag(A))

A =

0

0

0

0

0

0.1942

0

0

0

0

0.0856

0.9793

0

0

0

0.7285

0.2508

0.1685

0

0

0.2183

0.9558

0.4082

0.6033

0

To get the successive powers of A, we use the variable ans as follows.

>> A*A

ans =

0

0

0

0

0

0

0

0

0

0

0.1902

0

0

0

0

0.0631

0.1650

0

0

0

0.6601

0.5511

0.1017

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0.0320

0

0

0

0

0.1157

0.0996

0

0

0

>> ans*A

ans =

We see that An is equal to the zero matrix. Such a matrix is said to be

nilpotent. Explanation: since all the eigenvalues of A are zero, its characteristic

polynomial is pA (x) = (−1)n xn and by the Cayley–Hamilton theorem, we

have indeed pA (A) = 0.

Solution of Exercise 2.14 We give the results for m = n = 6.

1. >> H=hilb(6);eig(H)

ans =

0.0000

0.0000

7

0.0006

0.0163

0.2424

1.6189

2. >> [P,D]=eig(H); P

P =

-0.0012

-0.0111

0.0622

0.2403

0.0356

0.1797

-0.4908

-0.6977

-0.2407

-0.6042

0.5355

-0.2314

0.6255

0.4436

0.4170

0.1329

-0.6898

0.4415

-0.0470

0.3627

0.2716

-0.4591

-0.5407

0.5028

3. >> i=3;u=P(:,i);l=D(i,i);norm(H*u-l*u)

ans =

1.9525e-16

-0.6145

0.2111

0.3659

0.3947

0.3882

0.3707

0.7487

0.4407

0.3207

0.2543

0.2115

0.1814

Solution of Exercise 2.15 The eigenvalues of A are those of D, that is, 2

(with multiplicity two), 3 and 4.

1. >> specA=eig(A)

specA =

4.0000

3.0000

2.0000

2.0000

2. >> n=3;eig(A^n)

ans =

64.0000

27.0000

8.0000 + 0.0000i

8.0000 - 0.0000i

Matlab makes an error by adding a (small) imaginary part to the double

eigenvalue 2

>> imag(ans)

ans =

1.0e-05 *

0

0

0.5384

-0.5384

For n = 10, we get

>> n=10;eig(A^n), imag(ans)

ans =

8

CHAPTER 2.

EXERCISES OF CHAPTER 2

1.0e+06 *

1.0486

0.0590

0.0010 + 0.0000i

0.0010 - 0.0000i

ans =

0

0

0.0185

-0.0185

This time, the error is not negligible anymore.

3. >> [P1,D1]=eig(A);P1’*P1

ans =

1.0000

0.6404

-0.9562

-0.9562

0.6404

1.0000

-0.8319

-0.8319

-0.9562

-0.8319

1.0000

1.0000

-0.9562

-0.8319

1.0000

1.0000

The matrix is not diagonalizable in an orthonormal basis of eigenvectors.

Solution of Exercise 2.16 Spectra of A and At .

>> n=10;A=rand(n,n);[eig(A) eig(A’)]

ans =

4.5713

4.5713

-0.6828

-0.6828

-0.0581 + 0.4900i -0.0581 + 0.4900i

-0.0581 - 0.4900i -0.0581 - 0.4900i

0.6038 + 0.4022i

0.6038 + 0.4022i

0.6038 - 0.4022i

0.6038 - 0.4022i

0.2680 + 0.4556i

0.2680 + 0.4556i

0.2680 - 0.4556i

0.2680 - 0.4556i

0.1435

0.5497

0.5497

0.1435

We notice that for various examples, the matrices A and At have the same

spectrum. This is indeed the case since λ ∈ σ(A) is equivalent to say that

(A − λI) is singular, which is in turn equivalent to (A − λI)t being singular,

wherefrom the result is proved.

Solution of Exercise 2.17 We notice that for several calls

u=rand(n,1);v=rand(n,1);A=eye(n,n)+u*v’;eig(A)

(where n varies) the spectrum of the matrix In + uv t consists of a simple

eigenvalue and the eigenvalue 1 with multiplicity n − 1. Let us show that

the simple eigenvalue is indeed equal to 1 + ut v. If either u or v is zero, the

result is obvious. Assume that neither one is zero. The spectrum of In + uv t

9

consists on the one hand of the eigenvalue 1 corresponding to eigenvectors in

the hyperplane V orthogonal to v since for all w ∈ V , we have

(In + uv t )w = w + uv t w = w + hv, wiu = w,

and, on the other hand, of the eigenvalue 1 + ut v corresponding to the eigenvector u since

(In + uv t )u = u + uv t u = (1 + v t u)u.

Solution of Exercise 2.19 We remark that AAt and At A have the same

nonzero eigenvalues. Explanation: assume that A is of size m × n and let

A = V Σ̃U ∗ be its SVD factorization. Since AA∗ = V Σ̃ Σ̃ ∗ V ∗ , the eigenvalues

of this matrix are those of the diagonal matrix Σ̃ Σ̃ ∗ , of size m × m. Similarly,

the eigenvalues of A∗ A are those of the diagonal matrix Σ̃ ∗ Σ̃, of size n × n.

It is clear that the eigenvalues of the largest matrix are those of the smallest

matrix plus zeros. More generally, the nonzero eigenvalues of the two matrices

AB and BA are the same (see Lemma 2.7.2 in the book).

Solution of Exercise 2.21 We fix n = 100 in the following examples (the

observations are the same whatever the value of n).

1. >> A=SymmetricMat(n);[P,D]=eig(A);

>> X=zeros(n,n);for k=1:n, X=X+D(k,k)*P(:,k)*P(:,k)’; end;

>> norm(A-X)

ans =

1.5874e-15

Pn

We notice that the symmetric matrix A is equal to k=1 λi ui uti .

2. >> D(1,2)=1;B=P*D*inv(P);[P,D]=eig(B);

>> X=zeros(n,n);for k=1:n, X=X+D(k,k)*P(:,k)*P(:,k)’; end;

>> norm(B-X)

ans =

8.0345

Pn

This time the matrices B and k=1 µi vi vit do not coincide.

3. Explanation: the symmetric matrix A is diagonalizable in an orthonormal

basis of eigenvectors. In other words, the matrix P computed by eig is

orthogonal. We therefore have A = P DP t . Calling ui the columns of P ,

we have P D = [λ1 u1 | . . . |λn un ] and A reads

t

u1

n

X

A = [λ1 u1 | . . . |λn un ] ... =

λi ui uti .

k=1

utn

Solution of Exercise 2.22 We remark that for various values of n the rank

of A is equal to n.

10

CHAPTER 2.

EXERCISES OF CHAPTER 2

1. For any x ∈ Rn , we have Ax = vut x = hx, uiv. Wherefrom we infer that

if u and v are nonzero, the image of A is the line generated by v and thus

the rank of A is equal to 1.

2. Conversely: let A ∈ Mn (R) be a matrix of rank r = 1. The SVD factorization of A reduces to A = µ1 v1 ut1 , thus the result is proved.

Solution of Exercise 2.24 For the matrix A1

>>

>>

>>

>>

A1=[1,2,3;3,2,1;4,2,1];

A1*A1

A1*ans

A1*ans

%

%

%

%

A

A2

A3

...

The limit is (seems to be!) a matrix with all entries equal to +∞. We may

also use the power function, for instance, compute A1^100.

For the matrix A2 , the limit is

ans =

0.5000

0

0.5000

0

1.0000

0

0.5000

0

0.5000

For the matrix A3 , the limit is the zero matrix. For the matrix A4 , we obtain

two accumulation points, i.e., the ’limit’ oscillates between

0.5000

0

0.5000

0

1.0000

0

0.5000

0

0.5000

-0.5000

0

-0.5000

0

1.0000

0

-0.5000

0

-0.5000

and

Each matrix Ai is diagonalizable and reads Ai = Pi Di Pi−1 . The powers of Ai

are therefore equal to

k

0

λi,1

0 Pi−1 .

Aki = Pi Dik Pi−1 = Pi 0

λki,2

0

0

λki,3

The behavior, at infinity, of the powers of these four matrices is accounted for

by studying their eigenvalues.

• The spectral radius of A1 is > 1, there exists an eigenvalue λ1,j of modulus

> 1 and such that |λk1,j | tends to +∞.

• The spectral radius of matrix A3 is < 1. For all j, |λk2,j | tends to 0, and so

is the case for Ak3 .

11

• The eigenvalues of A2 are 1/2, 1 and 1, we infer that D2k tends to

diag(0, 1, 1).

• The eigenvalues of A4 are −1, 1/2 and 1. The presence of the eigenvalue

−1 accounts for the “oscillations’ of the sequence Ak .

Solution of Exercise 2.25

>>

>>

>>

>>

>>

>>

>>

>>

>>

>>

>>

% Unit circle

t=0:0.01:2*pi;x=cos(t)’;y=sin(t)’;

A =[-1.25

0.75; 0.75

-1.25];

% Image of the unit circle by A

for i=1:length(t)

v=[x(i);y(i)]; w=A*v; Ax(i)=w(1); Ay(i)=w(2);

end

% Plot of the circle and its image; see Figure 2.2

plot(x,y,Ax,Ay,’.’,’MarkerSize’,10,’LineWidth’,3)

axis([-2 2 -2 2]);grid on; axis equal;

set(gca,’XTick’,-2:1:2,’YTick’,-2:1:2,’FontSize’,24);

We compute the singular value decomposition of the matrix

>> [V,S,U] = svd(A)

V =

-0.7071

0.7071

0.7071

0.7071

S =

2.0000

0

0

0.5000

U =

0.7071

-0.7071

-0.7071

-0.7071

% the singular values of

% the matrix are 2 and 1/2

Solution of Exercise 2.26 The matrix B may be defined by B=[zeros(m,m)

A;A’ zeros(n,n)]. Example of calculation:

>> n=5;m=3;

>> A=rand(m,n);B=[zeros(m,m) A;A’ zeros(n,n)];

>> [V S U]=svd(A);

>> diag(S)’, eig(B)’

ans =

1.7251 0.6388 0.4008

ans =

-1.7251 -0.6388 -0.4008 -0.0000 0.0000 0.4008 0.6388 1.7251

It seems that the nonzero eigenvalues of B are equal to plus and minus the singular values of A. Let us prove this is actually the case. Let λ be an eigenvalue

of the symmetric matrix B. There exist two vectors x ∈ Rm and y ∈ Rn such

that (x, y) 6= (0m , 0n ), Ay = λx and At x = λy. We infer that At Ay = λ2 y.

We note that y = 0 =⇒ λ = 0 and thus if the eigenvalue λ is nonzero, y is an

12

CHAPTER 2.

EXERCISES OF CHAPTER 2

eigenvector of At A corresponding to the eigenvalue λ2 . This means that |λ|

is a singular value of A. Reciprocally, if µ is a nonzero singular value of A,

there exists a nonzero vector u ∈ Rn such that At Au = µ2 u. We set y = u

and x = µ1 :

x

Ay

x

B

=

=µ

,

y

At x

y

which proves that µ is an eigenvalue of B.

Solution of Exercise 2.27 Pseudo-inverse matrix.

>> a =[1 -1 4; 2 -2 0;

>> p=pinv(a)

p =

-0.0822

0.1925

0.0822

-0.1925

0.1737

-0.1784

>> p*a

ans =

1.0000

-0.0000

-0.0000

1.0000

0.0000

0.0000

>> a*p

ans =

0.5305

-0.3286

-0.3286

0.7700

0.3756

0.2629

0.0000

0.0000

>> a*p*a

ans =

1.0000

-1.0000

2.0000

-2.0000

3.0000

-3.0000

-1.0000

-1.0000

>> p*a*p

ans =

-0.0822

0.1925

0.0822

-0.1925

0.1737

-0.1784

3 -3

5;-1 -1 0];

0.0657

-0.0657

0.0610

-0.5000

-0.5000

-0.0000

0.0000

0.0000

1.0000

0.3756

0.2629

0.6995

-0.0000

-0.0000

-0.0000

-0.0000

1.0000

4.0000

0.0000

5.0000

-0.0000

0.0657

-0.0657

0.0610

-0.5000

-0.5000

-0.0000

We note that A† A = I3 , which makes sense since rk (A) = 3. We also notice

that A† AA† = A† and AA† A = A (Moore-Penrose conditions).

Solution of Exercise 2.28 We note that both quantities are equal. Let us

show that it is actually the case. Let

V Σ̃U ∗ be the SVD factorization

A=

Σ 0

and Σ = diag (µ1 , . . . , µr ). By

of matrix A ∈ Mm,n (R) with Σ̃ =

0 0

13

†

†

∗

†

definition A = U Σ̃ V with Σ̃ =

we obtain the inequalities

Σ −t

0

0

. Computing the trace of AA† ,

0

tr (AA† ) = tr (V Σ̃ Σ̃ † V ∗ ) = tr (Σ̃ Σ̃ † ) = tr (ΣΣ −t ),

wherefrom we get the result tr (ΣΣ −t ) = r.

3

Exercises of chapter 3

Solution of Exercise 3.1 Numerical experiments show that each one of

these norms is larger than m = maxi,j |Ai,j |. Let us prove this result, which is

obvious for the Frobenius norm. Let i0 and j0 be indices such that m = |Ai0 ,j0 |.

The p norms being subordinate, we have

1/p

n

X

kAxkp

kAei0 kp

≥ |Ai0 ,j0 |

|Ai0 ,j |p

kAkp = max

≥

= kAei0 kp =

x6=0 kxkp

kei0 kp

j=1

where ei is the i-th vector of the canonical basis.

Solution of Exercise 3.2 Numerical experiments show that each one of

−1

these norms is larger than m = (mini |Ti,i |) . Let us prove this result: for

each of the considered norms, we have

kT −1k ≥ max |(T −1 )i,j | ≥ max |(T −1 )i,i | = max

i,j

i

i

1

1

=

= m,

|Ti,i |

mini |Ti,i |

because, for a triangular matrix, the diagonal entries of its inverse are the

inverse of its diagonal entries.

Solution of Exercise 3.4

>> n=35;u=rand(n,1);v=rand(n,1);

>> fprintf(’norm of uvt = %f norm of u = %f norm of v = %f \n’,...

norm(u*v’),norm(u),norm(v))

norm of uvt = 10.413866 norm of u = 3.011554 norm of v = 3.457971

We note that kuv t k2 = kuk2 kvk2 . Justification:

kuv t k2 = max kuv t xk2 = max khx, viuk2 = kuk2 max |hx, vi|.

kxk2 =1

kxk2 =1

kxk2 =1

We conclude by remarking that maxkxk2 =1 |hx, vi| = kvk2 , since by the

Cauchy–Schwarz inequality, we have |hx, vi| ≤ kvk2 kxk2 and the supremum

16

CHAPTER 3.

EXERCISES OF CHAPTER 3

is attained for all vectors x = αv, α ∈ Rn . We have the same relation for the

Frobenius norm:

sX X

sX X

t

t

2

|(uv )i,j | =

|ui |2 |vj |2 = kuk2 kvk2 = kukF kvkF .

kuv kF =

i

j

i

j

The same formula does not hold true for the norms k.k1 and k.k∞ because for

each of these norms, we have for u = v = (1, . . . , 1)t ,

• kuv t k∞ = n and kuk∞ = kvk∞ = 1.

• kuv t k1 = n and kuk∞ = kvk∞ = n.

Solution of Exercise 3.5 The vector v is an eigenvector of AAt associated

with the smallest singular value of A. Consider A = V ΣU ∗ the SVD factorization of matrix A. We know, on the one hand (see Remark 2.7.5) that the

columns of V are the eigenvectors of AAt , and on the other hand (see equality

(5.17)) that

kA−1 k2 = kA−1 vn k.

Solution of Exercise 3.6

1. Define ϕA (x) = kAxk for x ∈ Rn . This function obviously satisfies the

triangular inequality and the homogeneity relation. It remains to check

that ϕA (x) = 0, implies x = 0. This is true if and only if A is injective.

function y=normA(A,u)

if size(A,2)~=size(u,1)

error(’normA::incompatibility of dimensions’);

else

y=norm(A*u)

end;

2. function y=normAs(A,x)

if size(A,2)~=size(x,1)

error(’normAs::incompatible dimensions’);

else

if size(x,2)~=1

error(’normAs:: x must be a vector ’);

end;

y=sqrt(x’*A*x)

end;

p

Define ϕA (x) = hAx, xi for x ∈ Rn . This function is real valued if A

is assumed to be positive semi definite, i.e., for all x ∈ Rn , hAx, xi ≥ 0.

Under this minimal assumption let us check the three norm properties.

• The homogeneity is satisfied: ϕA (λx) = |λ|ϕA (x) for all λ ∈ R.

• Only the zero vector has zero norm: ϕA (x) = 0 =⇒ x = 0. This is true

if and only if A is positive definite.

17

• The triangular inequality ϕA (x + y) ≤ ϕA (x) + ϕA (y) is satisfied if

and only if

hAx, yi + hAy, xi ≤ 2ϕA (x)ϕA (y),

or, equivalently, if and only if

h(A + At )x, yi ≤ 2ϕA (x)ϕA (y).

(3.1)

Now, we distinguish two cases:

– A is symmetric and, then, condition (3.1) is equivalent to

hAx, yi ≤ ϕA (x)ϕA (y)

(3.2)

which is nothing but Cauchy–Schwarz inequality for the bilinear

form hAx, yi. To prove (3.2), we decompose

x and y in anP

orthonorP

mal basis ui of eigenvectors of A: x = ni=1 xi ui and y = ni=1 yi ui .

Then (3.2) is simply

v

v

u n

u n

n

X

uX

uX

λ i xi y i ≤ t

λi x2i t

λi yi2 ,

i=1

i=1

i=1

which holds true by virtue of the discrete Cauchy–Schwarz inequality.

– A is nonsymmetric, inequality ( 3.1) is nonetheless true. In fact,

using (3.2) for the symmetric matrix A + At , we have

p

p

h(A+At )x, yi ≤ h(A + At )x, xi h(A + At )y, yi = 2ϕA (x)ϕA (y),

since hAt x, xi = hx, Axi = hAx, xi.

Remark: when A is symmetric and positive definite, (x, y) 7−→ hAx, yi

defines a scalar product on Rn .

Solution of Exercise 3.9 Best k-rank approximation.

1. >> m=10;n=7;r=5;A=MatRank(m,n,r);

>> [V,S,U] = svd(A);S=diag(S);

>> for k=r-1:-1:1

>>

[v,s,u] = svds(A,k);

>>

fprintf(’For k = %i:: error^2 =

>> k,norm(v*s*u’-A,’fro’)^2,k,S(k+1))

>> end

>> For k = 4:: error^2 = 0.006658 and

>> For k = 3:: error^2 = 0.099486 and

>> For k = 2:: error^2 = 0.888564 and

>> For k = 1:: error^2 =P1.890766 and

r

We note that kA − Ak k2F = i=k+1 µ2i .

%f

and S(%i)=%f\n’,...

S(4)=0.081598

S(3)=0.304677

S(2)=0.888300

S(1)=1.001101

18

CHAPTER 3.

EXERCISES OF CHAPTER 3

2. Since in the proof of Proposition 3.2.1, we write A − Ak = V DU ∗ where

D ∈ Mm,n (R) is the diagonal matrix diag (0, . . . , 0, µk+1 , . . . , µr , 0, . . . , 0).

The Frobenius norm being invariant by unitary transformation, we have

v

u X

u r

kA − Ak kF = kDkF = t

µ2i ,

i=k+1

hence the result is proved.

Solution of Exercise 3.10

1. C is the diagonal matrix formed by entries ci .

2. We have

M ẏ(t)

M ẏ(t)

0

M ż(t) =

=

=

M ÿ(t)

−C ẏ(t) − Ky(t)

−K

M

−C

y(t)

ẏ(t)

.

Since matrix M is nonsingular, we deduce (3.14) with

0

I

A=

.

−M −1 K −M −1 C

3. With the data

1 0

M = 0 2

0 0

of the problem, we have

0

2 −1 0

1/2 0

0

0 , K = −1 2 −1 , C = 0 1/2 0 .

1

0 −1 1

0

0 1/2

The script above produces Figures 3.1-3.2. We observe that the masses go

back to their equilibrium positions and stay at rest (zero velocity).

>>

>>

>>

>>

>>

>>

>>

>>

>>

>>

>>

>>

>>

>>

>>

K=laplacian1dD(3)/16; K(3,3)=1;M=diag([1 2 1]);

C=diag([.5 .5 .5]);

A=[zeros(size(K)) eye(size(K));-inv(M)*K -inv(M)*C];

pos0=[-1 0 1];

% Initial positions

Z0=[-.1 0 .1];

% perturbation of the positions

Z0=[Z0 -1 0 1]’;

% perturbation of the velocities

velocities=[];positions=[];time=0:0.1:30;

for t=time;

% Solution (positions and velocities) at instant t

Z=expm(A*t)*Z0;

positions=[positions;Z(1:3)’+pos0];

velocities=[velocities;Z(4:6)’];

end

plot(time, positions,’MarkerSize’,10,’LineWidth’,3)

plot(time, velocities,’MarkerSize’,10,’LineWidth’,3)

19

2

1.5

1

0.5

0

−0.5

−1

−1.5

−2

0

m3

m

2

m1

5

10

15

20

25

30

Fig. 3.1. Positions of the masses as functions of time.

1

0.5

0

−0.5

−1

0

5

10

15

20

25

30

Fig. 3.2. Velocities of the masses as functions of time.

4

Exercises of chapter 4

Solution of Exercise 4.3

1. If A is a band matrix, of half-bandwidth p, there are at most 2p + 1

nonzero elements on a row of A. The scalar product of u and a row of

A is carried out in at most 2p + 1 multiplications (we shall not consider

boundary effects due to the first and last rows that contain less than

2p + 1 each. The cost of a product Au is thus, asymptotically, of (2p + 1)n

multiplications.

2. If A and B are two band matrices, AB is also a band matrix, of halfbandwidth 2p. Indeed, on the one hand we have

(AB)i,j =

n

X

k=1

ai,k bk,j =

X

ai,k bk,j ,

i−p≤k ≤i+p

j−p≤k≤j+p

and on the other hand

i − j > 2p

i−p > j +p

|i − j| > 2p ⇐⇒

or

⇐⇒

or

,

i − j < −2p

i+p<j −p

so (AB)i,j = 0 if i + p < j − p or i − p > j + p. The matrix AB having a

band structure, we do not have to determine n2 scalars but only (4p + 1)n

scalars, each one of them is equal to the scalar product of two vectors

having at most (2p+1) nonzero entries each, which is carried out in at most

(2p + 1) operations. The total cost is therefore less than (2p + 1)(4p + 1)n

operations. A more accurate computation of the scalar product of row i

of matrix A and column j of matrix B is done in

• 0 operation if j < i − p,

• 1 operation if j = i − p,

.

• ..

22

CHAPTER 4.

EXERCISES OF CHAPTER 4

• 2p + 1 operations if j = i,

.

• ..

• 1 operation if j = i + p,

• 0 operation if j > i + p,

that is, overall (2p+1)+2(1+. . .+2p) = (2p+1)2 operations. The number

of operations required by the calculation of AB is thereby equivalent to

(2p + 1)2 n.

Solution of Exercise 4.4 Matlab allowing for recursiveness (a function can

call itself), the Strassen algorithm is easily programmed.

function c=strassen(a,b)

% warning: matrices are square

% of size n × n with n = 2k

n=size(a,1);

if n==1

c=a*b;

else

m=n/2;

% we decompose the matrix a in 4 blocks

a11=a(1:m,1:m);a12=a(1:m,m+1:n);

a21=a(m+1:n,1:m);a22=a(m+1:n,m+1:n);

% same for b

b11=b(1:m,1:m);b12=b(1:m,m+1:n);

b21=b(m+1:n,1:m);b22=b(m+1:n,m+1:n);

% the Strassen calculation rule

m1=strassen(a12-a22,b21+b22);m2=strassen(a11+a22,b11+b22);

m3=strassen(a11-a21,b11+b12);m4=strassen(a11+a12,b22

);

m5=strassen(a11

,b12-b22);m6=strassen(a22

,b21-b11);

m7=strassen(a21+a22,b11

);

% we define c=a*b by blocks

c=[m1+m2-m4+m6,m4+m5;m6+m7,m2-m3+m5-m7];

end

We check on some examples that the function is indeed working

>> k=5;n=2^k;a=rand(n,n);b=rand(n,n);c=a*b;d=strassen(a,b);

>> norm(c-d)

ans =

1.9112e-13

We compare with the computation done by MatMult.

>> k=5;n=2^k;a=rand(n,n);b=rand(n,n);

tic;c=strassen(a,b);t1=toc

t1 =

0.7815

23

>> tic;d=MatMult(a,b);t2=toc

t2 =

0.0050

We remark that function strassen is much slower than function MatMult.

This is due to the recursiveness using a lot of memory.

Solution of Exercise 4.5 We may compute X −1 defined by (4.4) in six

matrix multiplications and two matrix inversions

>> A=rand(n,n);B=rand(n,n);

% matrices of size n × n

>> C=rand(n,n);D=rand(n,n);

>> tic;

% initialization of a time counter

>> Am1=inv(A);

>> M=Am1*B;

>> Delta=D-C*M;

>> N=C*Am1;

>> Deltam1=inv(Delta);

>> P=Deltam1*N;

>> inverseX=[Am1+M*P, -M*Deltam1; -P, Deltam1];

>> t1=toc;

% time elapsed since the last tic call

>> X=[A B;C D];

>> tic;invX=inv(X);t2=toc;

>> n=100;norm(inverseX-invX, ’inf’) % we check the computations

ans =

2.4219e-10

For small values of n the standard Matlab inversion of X is faster.

>> fprintf(’computation by the Schur complement = %f \n’,t1)

computation by the Schur complement = 0.012005

>> fprintf(’computation by inversion of X = %f \n’,t2)

computation by inversion of X = 0.008171

5

Exercises of chapter 5

Solution of Exercise 5.1

1. eps is the floating point relative accuracy of Matlab in double precision

>> eps

ans =

2.2204e-16

>> a=eps;b=0.5*eps;X=[2, 1;2, 1];

>> A=[2, 1;2, 1+a];norm(A-X)

>> B=[2, 1;2, 1+b];norm(X-B)

>> ans =

2.2204e-16

>> ans =

0

In simple precision the accuracy is

>> eps(’single’)

ans =

1.1921e-07

2. realmax is the largest floating point number, and realmin the smallest

one

>> rM=realmax, 1.0001*rM,

rM =

1.7977e+308

ans =

Inf

This produces an overflow.

>> rm=realmin, .0001*rm

rm =

2.2251e-308

26

CHAPTER 5.

EXERCISES OF CHAPTER 5

ans =

2.2251e-312

There is no underflow; according to the Matlab help, the number .0001*rm

is a “denormal number”. Consult a IEEE floating point documentation.

3. Infinity and “Not a number”

>> A=[1 0 0 3]; B=[5 -1 1 1]./A

Warning: Divide by zero.

B =

5.0000

-Inf

Inf

>> isinf(B)

ans =

0

1

1

0

>> C=A.*B

ans =

5

NaN

NaN

1

>> isnan(C)

ans =

0

1

1

0

0.3333

4. >> A=[1 1; 1 1+eps];inv(A), rank(A)

Warning: Matrix is close to singular or badly scaled.

Results may be inaccurate. RCOND = 5.551115e-17.

ans =

1.0e+15 *

4.5036

-4.5036

-4.5036

4.5036

ans =

1

The matrix A is invertible, but very ill conditioned, hence the response of

Matlab is false (the real rank is 2).

>> B=[1 1; 1 1+.5*eps];inv(B), rank(B)

Warning: Matrix is singular to working precision.

ans =

Inf

Inf

Inf

Inf

ans =

1

The matrix B is singular for Matlab.

Solution of Exercise 5.4 We store the upper triangular matrix A column

by column, starting with the first one, in a vector u. The mapping between

indices is Ai,j = ui+j(j−1)/2 . The program is quite similar to the previous one:

27

function aU=storeU()

fprintf(’Storage of an upper triangular matrix’)

fprintf(’the matrix is stored column by column’)

n=input(’enter the dimension n of the square matrix’)

for j=1:n

fprintf(’column %i \n’,j)

ii=j*(j-1)/2;

for i=1:j

fprintf(’enter element (%i,%i) of the matrix’,i,j)

aU(i+ii)=input(’ ’);

end;

end;

Solution of Exercise 5.5 Starting from the dimension s of the vector storing

a triangular square matrix A, one has to determine the size n of the latter.

Since n ≥ 1, we have

√

−1 + 1 + 8s

2

.

s = n(n + 1)/2 ⇐⇒ n + n − 2s = 0 =⇒ n =

2

function c=storeLpU(a,b)

% product of two triangular matrices a and b

% a: stored by storeL

% b: stored by storeU

% c=ab: full matrix

m=length(a);s=length(b);

if m~=s, then error("incompatible dimensions");end;

n=round((-1+sqrt(1+8*m))/2);

c=zeros(n,n);

for i=1:n

ii=i*(i-1)/2;

for j=1:n

jj=j*(j-1)/2;

s=0;

for k=1:min(i,j)

s=s+a(k+ii)*b(k+jj);

end;

c(i,j)=s;

end;

end;

Solution of Exercise 5.6

1. The matrix Q is obtained by

>> A=[1:5;5:9;10:14];Q=null(A’)

Q =

0.4527

28

CHAPTER 5.

EXERCISES OF CHAPTER 5

-0.8148

0.3621

2. a) > >b=[5; 9; 4];x=A\b

Warning: Rank deficient, rank = 2, tol =

1.9294e-14.

x =

-1.8443

0

0

0

1.6967

Matlab warns us of a problem: it can not compute the rank of A.

>> A*x-b

ans =

1.6393

-2.9508

1.3115

The vector x = A\b returned by Matlab is not a solution of equation

Ax = b.

>> Q’*b

ans =

-3.6214

b) For b=[1; 1; 1], x = A\b is indeed a solution of equation Ax = b

and Qt b = 0.

c) Justification. For any matrix A of size m × n and b ∈ Rm , we have

(denoting by q1 , . . . , qs the columns of Q):

Qt b = 0 ⇐⇒ hqi , bi = 0,

∀i = 1, . . . , s

t ⊥

⇐⇒ b ∈ ( Ker A ) ⇐⇒ b ∈ Im A.

d) function y=InTheImage(A,b)

kerAt=null(A’);

if (size(kerAt,1)==size(b,1))

if norm(kerAt’*b) > 1.e-6

y=’no’;

else

y=’yes’;

end;

else

error(’Dimension problem’)

end;

Some trials:

>> A=[1 2 3; 4 5 6; 7 8 9];b=[1;1;1];;InTheImage(A,b)

ans =

yes

>> b=[1;2;1];InTheImage(A,b)

29

ans =

no

Solution of Exercise 5.7 The computation of x by the Cramer formulas is

performed by the following function

function y=cramer(A,b)

n=size(A,1);d=det(A);

for i=1:n

sol(i)=det([A(:,1:i-1), b, A(:,i+1:n)])

end;

y=sol’/d;

We carry out the calculations for n = 20, 40, 60 and 80.

>> for n=20:20:80;

>> b=ones(n,1);c=1:n;A=c’*ones(size(c));A=A+A’;

>> s=norm(A,’inf’);

>> for i=1:n, A(i,i)=s;end;

>>

x=cramer(A,b);

>>

sol=A\b;

>>

norm(sol-x’);

>>

[n

norm(sol-x’)]

>> end;

ans =

20.0000

0.0000

ans =

40.0000

0.0000

ans =

60.0000

0.0000

ans =

80

NaN

Explanation: for n = 80, Matlab cannot execute the requested calculations

anymore, determinants are too large (Nan means ’not a number’)

>> det(A)

ans =

Inf

Solution of Exercise 5.8 The matrix A is always invertible ( det (A) = 1)

and

In −B

−1

.

A =

0 n In

We infer that

condF (A)2 = kAk2F kA−1 k2F = (2n + kBk2F )2 ,

i.e., condF (A) = 2n + kBk2F . The Frobenius norm and condition number of a

matrix X are given by norm(X,’fro’) and cond(X,’fro’).

30

CHAPTER 5.

EXERCISES OF CHAPTER 5

Solution of Exercise 5.9 We check that cond2 (Hn ) is very large even for

small values of n:

>> cond(hilb(5)), cond(hilb(10))

ans =

4.7661e+05

ans =

In Figure 5.1, we display the graph generated by the following instructions

>>

>>

>>

>>

n=10;x=(1:n)’;for i=1:n,y(i)=cond(hilb(i)); end;

plot(x,log(y),’-+’,x,3.45*x-4.1,’MarkerSize’,10,’LineWidth’,3)

grid on;

set(gca,’XTick’,1:2:10,’YTick’,-5:10:35,’FontSize’,24);

The curve n 7→ ln cond2 (Hn ) is almost a straight line of slope close to

3.45. Wherefrom we deduce the approximation cond2 (Hn ) ≈ e3.45n . Actually

35

25

15

5

−5

1

3

5

7

9

Fig. 5.1. Condition number of the Hilbert matrix, n 7→ ln cond2 (Hn ).

on can prove the equivalence cond2 (Hn ) ≈ e

matrices are very much ill-conditioned.

7n

2

, which implies that Hilbert

Solution of Exercise 5.10

function y=Lnorm(A)

% computes the 2-norm of a lower triangular matrix A

if norm(A-tril(A))>1.e-10

error(’the matrix is not lower triangular.’)

end;

n=size(A,1);y=zeros(n,1);x=ones(n,1);

y(1)=A(1,1)*x(1);

31

for i=2:n

s=A(i,1:i-1)*x(1:i-1);

if abs(A(i,i)+s)<abs(A(i,i)-s)

x(i)=-1;

end;

y(i)=A(i,i)*x(i)+s;

end;

y=y/sqrt(n);

y=norm(y);

Some tests:

>> n=20;a=tril(rand(n,n));[Lnorm(a), norm(a)]

ans =

5.8862

6.6578

>> n=40;a=tril(rand(n,n));[Lnorm(a), norm(a)]

ans =

11.9732

13.1621

>> n=80;a=tril(rand(n,n));[Lnorm(a), norm(a)]

ans =

23.7755

26.2176

We notice a fairly good agreement of the two computations.

Solution of Exercise 5.11

function y=LnormAm1(A)

% computes the 2-norm of A−1

n=size(A,1);y=zeros(n,1);

y(1)=1/A(1,1);

for i=2:n

s=A(i,1:i-1)*y(1:i-1);

y(i)=-(sign(s)+s)/A(i,i);

end;

y=y/sqrt(n);

y=norm(y);

Some tests:

>> n=20;a=LowNonsingularMat(n);[LnormAm1(a), norm(inv(a))]

ans =

0.1056

0.1126

>> n=40;a=LowNonsingularMat(n);[LnormAm1(a), norm(inv(a))]

ans =

0.0525

0.0552

>> n=80;a=LowNonsingularMat(n);[LnormAm1(a), norm(inv(a))]

ans =

0.0252

0.0260

32

CHAPTER 5.

EXERCISES OF CHAPTER 5

Here as well, we observe a fairly good agreement of both computations.

Solution of Exercise 5.12

function y=Lcond(A)

y=Lnorm(A)*LnormAm1(A);

Some tests:

>> n=20;a=LowNonsingularMat(n);[Lcond(a), cond(a)]

ans =

1.5809

1.6801

>> n=40;a=LowNonsingularMat(n);[Lcond(a), cond(a)]

ans =

1.5717

1.6543

>> n=80;a=LowNonsingularMat(n);[Lcond(a), cond(a)]

ans =

1.5438

1.5946

Solution of Exercise 5.14

1. We notice that for various values of n the spectrum of the matrix is made

up (or seems to be made up) of the only values 1.618 and -0.618.

2. Instead of solving system Ax = b, we solve the equivalent system

M −1 Ax = M −1 b: this may be worthwhile at least when the latter system

is better conditioned than the original one.

3. a) We have

u

u

u

u

i.e.,

= λM

or equivalently A

=λ

M −1 A

v

v

v

v

(1 − λ)Cu + Dt v = 0

Cu + Dt v = λCu

⇔

Du

= λDC −1 Dt v

Du

= λDC −1 Dt v

We deduce that Du = λDC −1 (λ − 1)Cu = λ(λ − 1)Du, so that

(λ2 − λ − 1)Du = 0.

b) The last relation shows that:

• either Du = 0 and thus DC −1 Dt v = 0, that is, v = 0. Then we

deduce that Cu = λCu, so λ = 1, since the eigenvector (u, v)t is

nonzero and accordingly u 6= 0.

√

• or λ2 − λ − 1 = 0, that is, λ = (1 ± 5)/2.

√

Thus,

the eigenvalues of M −1 A belong to the set {(1 − 5)/2, 1, (1 +

√

5)/2} independently of the dimension n.

c) If M −1 A is symmetric, we have

√

|λmax |

5+1

=√

cond2 (M −1 A) =

≈ 2.62.

|λmin |

5−1

Solution of Exercise 5.17 We put the data in an array

33

>> X=[1990

1997

1998

939

972

1047

1058

1991

1999

1006

1071

1992

1993

2000; ...

1022

1016

1083];

1994

1995

1996 ...

1011

1038 ...

Reproduction of Figure 1.2.

years=X(1,:);cost=X(2,:);

m=length(years);

t0=years;

A(1,1)=m;A(1,2)=sum(years);A(2,1)=A(1,2);A(2,2)=sum(years.*years);

b(1)=sum(cost);b(2)=years*cost’;

xx=A\b’;

pt=xx(1)+years*xx(2);

plot(years,cost,’-+’,years,pt,’MarkerSize’,10,’LineWidth’,3)

grid on

set(gca,’XTick’,1990:2:2000,’YTick’,900:50:1100,’FontSize’,24);

Reproduction of Figure 1.3. We now use the functions polyfit and polyval.

p = polyfit(years,cost,4);

f = polyval(p,years);

plot(years,cost,’-+’,years,f,’MarkerSize’,10,’LineWidth’,3)

grid on

set(gca,’XTick’,1990:2:2000,’YTick’,900:50:1100,’FontSize’,24);

Solution of Exercise 5.18

1. We define a function f

f=inline(’sin(x)-sin(2*x)’);

Let p(x) = a + bx + cx2 . The vector u = (a, b, c)t is a solution of At Au =

At b, with

1 x1 x21

x1

1 x2 x22

x2

A = . . . , b = . .

.. .. ..

..

1 xn x2n

>>

>>

>>

>>

>>

>>

xn

n=100;x=4.*rand(n,1);x=sort(x);y=f(x);

A1=[ones(n,1) x x.*x];

coef=(A1’*A1)(A’*b)(A1’*y);

sol1=coef(1)+coef(2)*x+coef(3)*x.*x;

norm(sol1-y)

ans =

4.7731

The same results are obtained with the instructions

34

CHAPTER 5.

EXERCISES OF CHAPTER 5

>> n=100;x=4.*rand(n,1);x=sort(x);y=f(x);

>> p = polyfit(x,y,2);

>> fp = polyval(p,x);norm(fp-y)

2. We write p(x) = a + b cos(x) + c sin(x). The vector u = (a, b, c)t is a

solution of system At Au = At b with

1 cos(x1 ) sin(x1 )

1 cos(x2 ) sin(x2 )

A=.

.

..

..

..

.

.

1

cos(xn )

sin(xn )

>> cx=cos(x);sx=sin(x);

>> b=y;A=[ones(n,1) cx sx];

>> coef=(A’*A)\(A’*b);

>> sol2=coef(1)+coef(2)*cx+coef(3)*sx;

>> norm(sol2-y)

ans =

4.3472

The error is smaller. This was to be expected, since in view of the representation of function f at points xi , it seemed more suitable to seek a

combination of trigonometric functions than a combination of monomials.

Both approximations p and q as well as the cloud of points X,f(X) are

displayed in Figure 5.2.

2

1.5

1

0.5

0

−0.5

−1

−1.5

−2

0 0.5 1 1.5 2 2.5 3 3.5 4

Fig. 5.2. The two approximations p (+) and q (–) in Exercise 5.18.

6

Exercises of chapter 6

Solution of Exercise 6.1 We obtain

det(A)

ans =

- 9.517E-16

instead of 0, because of rounding errors.

Solution of Exercise 6.2 Comparison of the LU and Cholesky methods.

1. function [L,U]=LUfacto(A)

[m,n]=size(A);

if m~=n, error(’the matrix is not square’), end;

small=1.e-16;

for k=1:n-1

if abs(A(k,k))<small, error(’error: zero pivot’), end;

for i=k+1:n

A(i,k)=A(i,k)/A(k,k);

A(i,k+1:n)=A(i,k+1:n)-A(i,k)*A(k,k+1:n);

end;

end;

U=triu(A);L=A-U+diag(ones(n,1));

2. function A=Cholesky(A)

[m,n]=size(A);

if m~=n, error(’the matrix is not square’), end;

small=1.e-8;

if norm(A-A’,’inf’)>small

error(’nonsymmetric matrix’)

end;

for j=1:n

A(j,j)=A(j,j)-A(j,1:j-1)*A(j,1:j-1)’;

if A(j,j)< small, error(’nonpositive matrix’), end;

if abs(A(j,j))< small, error(’nondefinite matrix’), end;

36

CHAPTER 6.

EXERCISES OF CHAPTER 6

A(j,j)=sqrt(A(j,j));

for i=j+1:n

A(i,j)=A(i,j)-A(j,1:j-1)*A(i,1:j-1)’;

A(i,j)=A(i,j)/A(j,j);

end;

end;

A=tril(A);

3. The instructions below produce Figure 6.1.

>>

>>

>>

>>

>>

>>

>>

>>

>>

for i=1:50

n=10*i;A=pdSMat(n);b=ones(n,1);

tic;[L U]=LUfacto(A);xlu=BackSub(U,ForwSub(L,b));tlu(i)=toc;

tic;B=Cholesky(A);xcho=BackSub(B’,ForwSub(B,b));tcho(i)=toc;

end;

x=10*(1:50)’; % we represent CPU(LU) and 2*CPU(Cholesky)

plot(x,tlu,x,2*tcho,’+’,’MarkerSize’,10,’LineWidth’,3)

grid on;

set(gca,’XTick’,0:100:500,’YTick’,0:1:4,’FontSize’,24);

4

3

2

1

0

0

100 200 300 400 500

Fig. 6.1. Comparison of the LU and Cholesky methods computational time.

We remark that the curves are almost superimposed and therefore the

Cholesky method is twice as fast as the LU method.

Solution of Exercise 6.4 Influence of row permutations.

>> e=1.E-16;A=[e 1 1;1 -1 1; 1 0 1];b=[2 0 1]’;

>> [L U]=LUfacto(A);[w z p]=lu(A);[l u]=LUfacto(p*A);

>> x1=BackSub(U,ForwSub(L,b));

?? Error using ==> BackSub

singular matrix

>> det(U)

ans =

0

37

>> x2=BackSub(u,ForwSub(l,p*b))

x2 =

-0.0000

1.0000

1.0000

This striking difference (the first algorithm fails while the second one is successful) can be explained by the occurrence of too large entries in L and U

>> [norm(L) norm(U) norm(l) norm(u)]

ans =

1.0e+16 *

1.4142

1.4142

0.0000

0.0000

Indeed, the permutation matrix p exchanges the first and second rows of A.

This permutation avoids dividing by too small pivots in the computation of

the LU factorization; see the importance of pivoting in Exercise 6.3.

Solution of Exercise 6.7 2D Finite difference Laplacian.

1. Approximation of the Laplacian

a) Write the Taylor expansions.

b) Idem.

c) It is clear that

−ui−1,j + 2ui,j − ui+1,j −ui,j−1 + 2ui,j − ui,j+1

+

+O(h2 )+O(k 2 ),

h2

k2

which yields the following second-order approximation of the Laplacian

−ui−1,j + 2ui,j − ui+1,j

−ui,j−1 + 2ui,j − ui,j+1

−∆u(xi , yj ) ≈

+

.

h2

k2

2. See next question.

3. We find

4 −1 0 . . . 0

..

..

..

.

.

−1 4

.

.

.

.

.

.

.

B= 0

.

.

. 0

.

.

.

.

.

.

. −1 4 −1

−∆u(xi , yj )=

0 . . . 0 −1 4

The matrix of the complete system is

B

−IN

0

...

0

..

..

.

−IN

B

−IN

.

1

..

..

..

.

A= 2

.

.

.

0

0

h .

.

. . −I

..

B

−IN

N

0

...

0

−IN

B

38

CHAPTER 6.

EXERCISES OF CHAPTER 6

We notice that this matrix is tridiagonal by blocks: each diagonal block is

tridiagonal and each out-of-diagonal block is diagonal (it is equal to minus

the identity).

a) The following function defines A by blocks.

function A=laplacian2dD(n)

% Computes the 2D Laplacian matrix

% defined on the unit square

% with Dirichlet boundary conditions

% n = number of internal points in x = number of internal points in y

I0=-eye(n,n);

B0=2*eye(n,n)-diag(ones(n-1,1),1);B0=B0+B0’;

O=zeros(n,n);

A=[B0 I0;I0 B0];

X=O;

for j=3:n

A=[A [X I0]’;X I0 B0];

X=[X O];

end;

A=A*(n+1)*(n+1);

The instruction spy(laplacian2dD(5)) gives Figure 6.2. We may also

5

10

15

20

25

5

10

15

20

25

Fig. 6.2. Display of the 2D Laplacian matrix (N = 5).

define A pointwise, without using the block structure

function A=laplacian2dDBis(n)

A=4*eye(n*n,n*n);

for i=1:n*n-1

A(i,i+1)=-1;

A(i+1,i)=-1;

39

end;

for i=1:n-1

A(n*i,n*i+1)=0;

A(n*i+1,n*i)=0;

end;

for i=1:(n-1)*(n-1)

A(i,i+n)=-1;

A(i+n,i)=-1;

end;

A=A*(n+1)*(n+1);

b) function b=laplacian2dDRHS(n,frhs)

% Computes the RHS of the 2D laplacian problem

xgrid=(1:n)/(n+1);ygrid=(1:n)/(n+1);

xx=xgrid’*ones(1,n); % each column of xx contains xgrid

yy=ones(n,1)*ygrid; % each row of yy contains ygrid

frhs=str2func(frhs);

b=frhs(xx,yy);

b=b(:);

4. Validation. Example of a right-hand side f and of a solution u to validate

the scheme indexprocRHS

function fxy=RHS(x,y)

fxy=2*(x.*(1-x) + y.*(1-y));

function uxy=exactu(x,y);

uxy=x.*(1-x).*y.*(1-y);

% f (x, y) = 2x(1 − x) + 2y(1 − y)

% u(x, y) = x(1 − x)y(1 − y)

The following function gives an approximate solution of −∆u = f on the

unit square, with Dirichlet homogeneous boundary conditions. The reader

is invited to make the necessary changes to solve the same problem in a

square ]a, b[×]c, d[ with non-homogeneous Dirichlet boundary conditions.

function sol=Solve2dLaplacian(n,f2d)

% solves the Laplacian in 2D

% in the unit square

% with homogenous Dirichlet boundary conditions

% f is the LHS

A=laplacian2dD(n);

b=laplacian2dDRHS(n,f2d);

sol=A\b;

% sol is a vector

sol=reshape(sol,n,n);

% sol is a matrix

Call example (the result is displayed in Figure 6.3)

>>

>>

>>

>>

>>

n=10;

sol=Solve2dLaplacian(n,’RHS’);

x=(1:n)/(n+1);

surf(x,x,sol,’MarkerSize’,10,’LineWidth’,3)

set(gca,’XTick’,0:.25:1,’YTick’,0:.25:1,’FontSize’,20);

40

CHAPTER 6.

EXERCISES OF CHAPTER 6

>> xx=x’*ones(1,n);yy=ones(n,1)*x;

>> exact=exactu(xx,yy);

>> norm(exact-sol)

ans =

1.0488e-016

0.06

0.04

0.02

0

1

1

0.5

0.5

0 0

Fig. 6.3. Computation of the solution (for N = 10) of problem (6.7)-(6.8) with

f (x, y) = 2x(1 − x) + 2y(1 − y) and g = 0.

5. Convergence.

a) f (x, y) = 2π 2 u(x, y)−2π[(y−1) sin(πy) cos(πx)+(x−1) sin(πx) cos(πy)]

b) The limit value N0 = 80 depends on the computer used.

c) The matrix is formed of N times block B plus 2(N − 1) times matrix

−IN , hence we have Ne = N (3N − 2) + 2(N − 1)N = 5N 2 − 4N . The

following function builds A by exploiting the sparse structure of this

matrix.

function A=laplacian2dDSparse(n)

% Computing the sparse 2D Laplacian matrix

% in the unit square

% Dirichlet boundary conditions

% n = number of internal points i

% x = numbrer of internal points in x

% construction of block 1

i=1;

ii=[1,1,1];jj=[i,i+1,i+n];uu=[4,-1,-1];

for i=2:n-1

ii=[ii,i,i,i,i];jj=[jj,i-1,i,i+1,i+n];

uu=[uu,-1,4,-1,-1];

end;

41

i=n;

ii=[ii,i,i,i];jj=[jj,i-1,i,i+n];uu=[uu,-1,4,-1];

% construction of blocks from 2 to n-1

for k=2:n-1

I=(k-1)*n;

i=I+1;

ii=[ii,i,i,i,i];jj=[jj,i-n,i,i+1,i+n];

uu=[uu, -1,4,-1,-1];

for i=I+2:I+n-1

ii=[ii,i,i,i,i,i];jj=[jj,i-n,i-1,i,i+1,i+n];

uu=[uu,-1,-1,4,-1,-1];

end;

i=I+n;

ii=[ii,i,i,i,i];jj=[jj,i-n,i-1,i,i+n];

uu=[uu,-1,-1,4,-1];

end;

% construction of block n

i=(n-1)*n+1;

ii=[ii,i,i,i];jj=[jj,i-n,i,i+1];

uu=[uu,-1,4,-1];

for i=n*(n-1)+2:n*n-1

ii=[ii,i,i,i,i];jj=[jj,i-n,i-1,i,i+1];

uu=[uu,-1,-1,4,-1];

end;

i=n*n;

ii=[ii,i,i,i];jj=[jj,i-n,i-1,i];uu=[uu,-1,-1,4];

uu=uu*(n+1)*(n+1);

A=sparse(ii,jj,uu);

Analysis of the error: the result of the instructions below is displayed

in Figure 6.4. The curve representing the logarithm of the error in

terms of the logarithm of N turns out to be a straight line whose

slope is approximately equal to −1.86. Theory predicts a slope equal

to 2.

>> for k=1:10

>>

n=5*k;

>>

sol=Solve2dLaplacianSparse(n,’RHS2’);

>>

xgrid=(1:n)/(n+1);ygrid=(1:n)/(n+1);

>>

xx=xgrid’*ones(1,n);yy=ones(n,1)*ygrid;

>>

exact=exactu2(xx,yy);

>>

err(k)=norm(exact(:)-sol(:),’inf’);

>> end;

>> plot(log(5*(1:10)),log(err),’-+’,’MarkerSize’,10,’LineWidth’,3)

6. a) Computing the spectrum of A

>> n=20;A=laplacian2dD(n);[P,D]=eig(A);Spectrum=diag(D);

b) Sorting the spectrum

42

CHAPTER 6.

EXERCISES OF CHAPTER 6

−5

−6

−7

−8

−9

1.5

2

2.5

3

3.5

4

Fig. 6.4. Convergence of the finite difference method: log(error) in terms of log(n).

>> [ArrangedSpec, I]=sort(Spectrum);

>> Nbre=4;

>> % Computing the first eigenvalues

>> vp=ArrangedSpec(1:Nbre);

>> % and corresponding eigenvectors

>> VectorsP(:,1:Nbre)=P(:,I(1:Nbre));

To represent one of the eigenvectors, for instance, the first one,

>> j=1;X1=reshape(VectorsP(:,j),n,n);x=(1:n)/(n+1);

>> surfc(x,x,X1)

The reader can check that the n2 eigenvalues of A are precisely

jπh 4

iπh

) + sin2 (

) , 1 ≤ i, j ≤ N.

λi,j = 2 sin2 (

h

2

2

In Figure 6.5, we display iso-contours of the first four eigenvectors

(we should say eigenfunctions) of the discretized Laplacian in 10 × 10

points. These eigenfunctions oscillate more and more.

c) The function ϕ(x, y) = sin(αx) sin(βy) satisfies the boundary condition if and only if there exist two integers i and i such that α = iπ and

β = jπ. We deduce that the eigenvalues of the continuous problem

are

λi,j = (i2 + j 2 )π 2 , (i, j) ∈ N2

associated with eigenfunctions

ϕi,j (x, y) = sin(iπx) sin(jπy).

Hence, the first four eigenvalues are:

• a simple eigenvalue λ1,1 = π 2 associated with eigenfunction ϕ1,1 (x, y) =

sin(πx) sin(πy). The curve displayed in Figure 6.5 (top left) is proportional to ϕ1,1 ;

43

0

0.2

−0.05

0

−0.1

1

−0.2

1 1

0.5

0 0

0.5

0.2

0.1

0

0

−0.2

1

−0.1

1 1

0.5

0 0

0.5

0.5

0.5

0 0

0 0

0.5

0.5

1

1

Fig. 6.5. The first four (from left to right and from top to bottom) eigenfunctions

of the discretized Laplacian on a 20 × 20 regular grid.

• a double eigenvalue λ2,1 = λ1,2 = 5π 2 . The corresponding eigen

subspace is spanned by ϕ1,2 (x, y) = sin(πx) sin(2πy) and ϕ2,1 (x, y) =

sin(2πx) sin(πy). The surfaces displayed on Figure 6.5 (top right

and bottom left) are proportional to ϕ1,2 + ϕ2,1 and ϕ1,2 − ϕ2,1 ;

• a simple eigenvalue λ2,2 = 8π 2 associated with eigenfunction

ϕ2,2 (x, y) = sin(2πx) sin(2πy). The surface displayed in Figure 6.5

(bottom right) is proportional to −ϕ2,2 .

Solution of Exercise 6.8

>> n=5;A=laplacian2dD(n);

>> [L,U]=lu(A);

>> figure(1);spy(L);figure(2);spy(U);

We experimentally check on Figure 6.6 that the LU factorization preserves the

band structure of matrices (which is rigorously proved in Proposition 6.2.1),

but it “fills” this band. There are more nonzero entries in L and U than

in the original matrix A. We observe the same behavior with the Cholesky

factorization.

44

CHAPTER 6.

EXERCISES OF CHAPTER 6

5

5

10

10

15

15

20

20

25

5

10

15

20

25

25

5

10

15

20

25

Fig. 6.6. Matrices L (left) and U (right) of the LU factorization for the discrete

Laplacian matrix of Figure 6.2.

Solution of Exercise 6.10 Incomplete LU preconditioning.

1. It suffices to add tests to program LUfacto

function [L,U]=ILUfacto(A,tolerance)

% Incomplete LU factorization

%nargin = number of input arguments of the function

if nargin==1, tolerance=1.E-4;end; % default tolerance

[m,n]=size(A);

if m~=n, error(’the matrix is not square’), end;

small=1.e-12;

for k=1:n-1

if abs(A(k,k))<small, error(’error: zero pivot’), end;

for i=k+1:n

if abs(A(i,k)) > tolerance

A(i,k)=A(i,k)/A(k,k);

end

for j=k+1:n

if abs(A(i,j)) > tolerance

A(i,j)=A(i,j)-A(i,k)*A(k,j);

end;

end;

end;

end;

U=triu(A);L=A-U+diag(ones(n,1));

2. Since M = L̃Ũ is an approximation of A, a preconditioning of A is M −1 =

Ũ −1 L̃−1 .

>> A=laplacian2dD(10);[LI,UI]=ILUfacto(A);

>> [cond(A), cond(inv(UI)*inv(LI)*A)]

ans =

48.3742

5.1277

7

Exercises of chapter 7

Solution of Exercise 7.1

1. To compute the minimal norm solution of the least square problem we

use the pseudo-inverse of A as follows:

>> x1=pinv(A)*b1;x2=pinv(A)*b2;

>> norm(A*x1-b1),norm(A*x2-b2)

ans =

2.9873e-015

ans =

2.7255

This shows that b1 belongs to the image of A, but not b2 .

2. All solutions are of the form xi + x, where x ∈ Ker A.

>> X=null(A);d=size(X,2)

d =

2.

>> u=X(:,1);v=X(:,2);

% searching other solutions

% dimension of the null space of A

The columns of X form a basis of the null space. The solutions of problem

minx∈R3 kAx−bi k2 are vectors x = xi +αu+βv with α and β real numbers.

Solution of Exercise 7.2

1. >>

>>

>>

>>

>>

A=reshape(1:6,3,2);A=[A eye(size(A)); -eye(size(A)) -A];

b0=[2 4 3 -2 -4 -3]’;x0=pinv(A)*b0;

e=1.E-2;b=b0+e*rand(6,1);x=pinv(A)*b;

xerror=norm(x-x0)/norm(x0)

xerror =

0.0034

>> berror=norm(b-b0)/norm(b0)

berror =

0.0022

46

CHAPTER 7.

>>

>>

>>

>>

EXERCISES OF CHAPTER 7

eta=norm(A)*norm(x0)/norm(A*x0);

costheta=norm(A*x0)/norm(b0);

Cb=cond(A)/eta/costheta

Cb =

5.3982

The amplification coefficient is moderate since b0 belongs to the image of

A:

>> norm(A*x0-b0)

ans =

3.8202e-015

2. >>

>>

>>

>>

b1=[3 0 -2 -3 0 2]’;x1=pinv(A)*b1;

e=1.E-2;b=b1+e*rand(6,1);x=pinv(A)*b;

[x1 x]

% display the solutions

ans =

-0.0000

0.0032

0.0000

-0.0004

-0.0000

0.0030

0.0000

-0.0027

>> xerror=norm(x-x1)/norm(x1)

xerror =

5.1718e+011

>> costheta=norm(A*x1)/norm(b1)

costheta =

1.5053e-015

>> eta=norm(A)*norm(x1)/norm(A*x1);

>> costheta=norm(A*x1)/norm(b1);

>> Cb=cond(A)/eta/costheta

% coefficient C b is infinite

Cb =

7.2400e+014

This is due to the fact that b1 is orthogonal to the image of A. Indeed,

>> A’*b1

ans =

0

0

0

0

we infer that b1 ∈ Ker (At ) = ( Im A)⊥ .

3. >> for i=1:100;

>>

b2=b0*i/100+b1*(1-i/100);

>>

x2=pinv(A)*b2;

>>

e=1.E-2;b=b2+e*rand(6,1);x=pinv(A)*b;

>>

eta=norm(A)*norm(x2)/norm(A*x2);

47

>>

costheta=norm(A*x2)/norm(b2);

>>

coefCb(i)=cond(A)/eta/costheta;

>> end

>> xx=(1:100)/100;

plot(xx, coefCb,’-.’,’MarkerSize’,10,’LineWidth’,3)

As can be checked on Figure 7.1 the coefficient Cb decreases as the entry

of the right-hand side b, in the “direction” of ( Im A)⊥ , gets smaller.

400

350

300

250

200

150

100

50

0

0

0.2

0.4

0.6

0.8

1

Fig. 7.1. Least squares fitting method: amplification coefficient Cb .

Solution of Exercise 7.3

1. We compute the partial derivatives

∂E

(a1 , . . . , an ) = −2

∂αk

Z

1

0

n

X

ϕk (x) f (x) −

ai ϕi (x) dx.

i=1

The matrix and the right-hand side of the system are thus defined by

Z 1

Z 1

f (x)ϕi (x)dx.

ϕi (x)ϕj (x)dx, bi =

Ai,j =

0

0

2. For the canonical basis of Pn−1 , we have

Ai,j

1

=

,

i+j −1

bi =

Z

1

xi−1 f (x)dx.

0

We recognize the Hilbert matrix of size n × n. Since this matrix is very

ill-conditioned (see Exercise 5.9); solving system Aa = b is delicate.

48

CHAPTER 7.

EXERCISES OF CHAPTER 7

R1

3. We define on the set X of functions such that 0 f 2 (x)dx < ∞, the following scalar product

Z 1

hf, gi =

f (x)g(x)dx

(7.1)

0

that makes X a Hilbert space. The sought basis is simply obtained by

applying the Gram–Schmidt orthonormalization (in the sense of scalar

n−1

product (7.1)) procedure to the canonical basis (xi )i=0

.

For an orthonormal basis, A is just the identity! The computation

of a is

R1

explicit since ai = bi . However, the coefficients bi = 0 f (x)ϕi (x)dx are

usually computed through an approximate formula (or quadrature).

Solution of Exercise 7.4 Note that the symmetric matrix At A is positive

definite, since Ker (A) = {0}.

1. We obtain the following computational times:

a) % solution of the normal equations by Cholesky

>> tic;Apb=A’*b;B=chol(A’*A);x1=B\(B’\Apb);t1=toc

t1 =

0.0017

b) % solution of the system by the QR method

tic;[n,p]=size(A);[Q,R]=qr(A);c=Q’*b;x2=R(1:p,1:p)\c(1:p);t2=toc

>> tic;[n,p]=size(A);[Q,R]=qr(A);

>> c=Q’*b;x2=R(1:p,1:p)\c(1:p);t2=toc% calculation of the solution

0.0204

c) We write

min kAx − bk2 = minn kV ΣU ∗ x − bk2 = minn kΣU ∗ x − V ∗ bk2

x∈Rn

x∈R

= minn kΣy − ck2 ,

y∈R

x∈R

setting y = U ∗ x, c = V ∗ b

% solution of the system by the SVD method

>> tic;[n,p]=size(A);

[U,S,V]=svd(A);

% A = U SV t factorization

u=U’*b;x3=V*(u(1:p)./diag(S));t3=toc

t3 =

0.0409

In view of these results, we note that the Cholesky method is the fastest

and the SVD method is the slowest. The three methods give the same

minimum (as they should)

% computation of the minimum

norm(A*x1-b)

% or norm(ApA*x1-Apb)

ans =

7.6876

norm(A*x2-b)

% or norm(c(p+1:n))

49

ans =

7.6876

norm(A*x3-b)

ans =

7.6876

We can also compare the solutions

norm(x1-x2),norm(x1-x3),norm(x2-x3)

ans =

0.0000017

ans =

0.0000017

ans =

3.468E-13

The solution obtained by the Cholesky method seems to be slightly different from the other solutions.

2. >> e=1.e-5;P=[1 1 0;0 1 -1; 1 0 -1];

>> A=P*diag([e,1,1/e])*inv(P);b=ones(3,1);

>> Apb=A’*b;B=chol(A’*A);x1=B\(B’\Apb);

>> [n,p]=size(A);[Q,R]=qr(A);c=Q’*b;

>> x2=R(1:p,1:p)\c(1:p);

>> [x1 x2]

ans =

1.0e+04 *

0.0004

5.0001

0.0001

0.0001

0.0003

5.0000

The two solutions are completely different. The correct solution is the one

given by the QR method as we now check:

>> [norm(A*x1-b) norm(A*x2-b)]

ans =

0.5773

0.0000

Explanation: the matrix A is ill-conditioned cond(A)=1.5000e+10 and

At A is even more so: cond(A’*A)=2.0118e+16. However, this latter matrix is used in solving the normal equations by the Cholesky method.

Conclusion: it is better to use the QR method.

8

Exercises of chapter 8

Solution of Exercise 8.1

A=[1 2 2 1;-1 2 1 0;0 1 -2 2;1 2 1 2];b=ones(4,1);det(A)

ans =

- 4.

This matrix A is thus nonsingular.

1. We recognize the Jacobi method.

>> A=[1 2 2 1;-1 2 1 0;0 1 -2 2;1 2 1 2];b=ones(4,1);

>> M=diag(diag(A));N=M-A; B=inv(M)*N;c=inv(M)*b;

>> x0=ones(4,1);x=x0;

>> for i=1:300, x=B*x+c; if ~(mod(i,100)) , x’,end; end;

ans =

1.0e+14 *

-3.2751

ans =

1.0e+28 *

0.6894

0.9632

-2.0558

-2.1683

-1.9569

ans =

1.0e+42 *

-1.4680

-0.0939

-1.4909

3.7985

6.4370

-0.8940

The sequence seems to diverge. And indeed, it diverges since the spectral

radius of B is larger than 1.

>> max(abs(eig(B)))

ans =

1.3846

2. We recognize the Gauss-Seidel method. Same observations.

52

CHAPTER 8.

EXERCISES OF CHAPTER 8

3. >> M=2*tril(A);N=M-A; B=inv(M)*N;c=inv(M)*b;

>> x0=ones(4,1);x=x0;

>> for i=1:300, x=B*x+c; if ~(mod(i,100)), x’,end; end;

ans =

0.5000

1.0000

-0.5000

-0.5000

ans =

0.5000

1.0000

-0.5000

-0.5000

ans =

0.5000

1.0000

-0.5000

-0.5000

The sequence converges

>> A*ans’

ans =

1.0000

1.0000

1.0000

1.0000

Explanation: this time the spectral radius of B is less than 1.

>> max(abs(eig(B)))

ans =

0.8895

Solution of Exercise 8.2 The convergence of the Jacobi method is checked

by the following program.

function cvg=JacobiCvg(A)

D=diag(A);

if min(abs(D)) <1.E-12

error(’Jacobi is not definite’);

end;

M=diag(D);N=M-A; B=inv(M)*N;

% B is also defined by

if max(abs(eig(B))) > 1

% B=eye(size(A))-inv(M)*A

cvg=0;

else

cvg=1;

end;

The convergence of the Gauss-Seidel method is checked by the following program.

function cvg=GaussSeidelCvg(A)

D=diag(A);

if min(abs(D)) <1.E-12

error(’Gauss-Seidel is not definite’);

end;

M=tril(A);N=M-A; B=inv(M)*N;

if max(abs(eig(B))) > 1

53

cvg=0;

else

cvg=1;

end;

For the matrix A1 the Gauss-Seidel method converges, but the Jacobi method

diverges:

>> A1=[1 2 3 4;4 5 6 7;4 3 2 0;0 2 3 4];

>> JacobiCvg(A1), GaussSeidelCvg(A1)

ans =

0

ans =

1

For the matrix A2 , it is the Jacobi method that converges and the Gauss-Seidel

method that diverges:

>> A2=[2 4 -4 1;2 2 2 0;2 2 1 0;2 0 0 2];

>> JacobiCvg(A2), GaussSeidelCvg(A2)

ans =

1.

ans =

0.

Conclusion: we cannot compare these two methods for an arbitrary matrix.

However, for tridiagonal matrices we have Theorem 8.3.1.

Solution of Exercise 8.3 Numerical experiments show that the Jacobi

and Gauss-Seidel methods always converge for this type of matrix. Let us

prove that these methods actually converge for all diagonally strictly dominant

matrix.

• The characteristic polynomial of the Jacobi matrix is

pJ (λ) = det [D−1 (E + F ) − λI] = − det (D −1 ) det (λD − E − F ).

If A = D − E − F is diagonally strictly dominant, then, for all λ ∈ C such

that |λ| > 1, the matrix λD − E − F is also diagonally strictly dominant

and is thus nonsingular, det (λD − E − F ) 6= 0. Hence, there exists no

λ ∈ C, |λ| > 1 such that pJ (λ) = 0, which proves that %(J ) < 1.

• Apply the same reasoning to the Gauss-Seidel method, while noting that

pG1 (λ) = − det (D−1 ) det (λD − λE − F ).

Solution of Exercise 8.4

>> A=[5 1 1 1;0 4 -1 1;2 1 5 1; -2 1 0 4];b=[8 4 9 3]’;

>> sol=A\b;M1=[3 0 0 0;0 3 0 0;2 1 3 0;-2 1 0 4];

>> N=M1-A;B1=inv(M1)*N;c=inv(M1)*b;

54

CHAPTER 8.

EXERCISES OF CHAPTER 8

>> x=zeros(4,1);for i=1:20, x=B1*x+c;end;norm(x-sol)

ans =

0.0522

>> M2=[4 0 0 0;0 4 0 0;2 1 4 0;-2 1 0 4];N=M2-A;B2=inv(M2)*N;

>> c=inv(M2)*b;x=zeros(4,1);for i=1:20, x=B2*x+c;end;norm(x-sol)

ans =

3.5548e-09

The second method converges faster to the solution. Explanation:

>> [max(abs(eig(B1))), max(abs(eig(B2)))]

ans =

0.8234

0.3560

The spectral radius of the matrix of the second method is smaller than that

of the matrix of the first method.

Solution of Exercise 8.3 Numerical experiments show that the Jacobi

and Gauss-Seidel methods always converge for this type of matrix. Let us

prove that these methods actually converge for all diagonally strictly dominant

matrix.

• The characteristic polynomial of the Jacobi matrix is

pJ (λ) = det [D−1 (E + F ) − λI] = − det (D −1 ) det (λD − E − F ).

If A = D − E − F is diagonally strictly dominant, then, for all λ ∈ C such

that |λ| > 1, the matrix λD − E − F is also diagonally strictly dominant

and is thus nonsingular, det (λD − E − F ) 6= 0. Hence, there exists no

λ ∈ C, |λ| > 1 such that pJ (λ) = 0, which proves that %(J ) < 1.

• Apply the same reasoning to the Gauss-Seidel method, while noting that

pG1 (λ) = − det (D−1 ) det (λD − λE − F ).

Solution of Exercise 8.4

>> A=[5 1 1 1;0 4 -1 1;2 1 5 1; -2 1 0 4];b=[8 4 9 3]’;

>> sol=A\b;M1=[3 0 0 0;0 3 0 0;2 1 3 0;-2 1 0 4];

>> N=M1-A;B1=inv(M1)*N;c=inv(M1)*b;

>> x=zeros(4,1);for i=1:20, x=B1*x+c;end;norm(x-sol)

ans =

0.0522

>> M2=[4 0 0 0;0 4 0 0;2 1 4 0;-2 1 0 4];N=M2-A;B2=inv(M2)*N;

>> c=inv(M2)*b;x=zeros(4,1);for i=1:20, x=B2*x+c;end;norm(x-sol)

ans =

3.5548e-09

The second method converges faster to the solution. Explanation:

55

>> [max(abs(eig(B1))), max(abs(eig(B2)))]

ans =

0.8234

0.3560

The spectral radius of the matrix of the second method is smaller than that

of the matrix of the first method.

Solution of Exercise 8.5 Example of implementation of the Jacobi method.

function [x, iter]=Jacobi(A,b,tol,MaxIter,x)

% Computes by the Jacobi method the solution of Ax=b

% tol = ε of the termination criterion

% MaxIter = maximum number of iterations

% x = x0

[m,n]=size(A);

if m~=n, error(’the matrix is not square’), end;

if abs(det(A)) < 1.e-12

error(’the matrix is singular’)

end;

if ~(JacobiCvg(A)) , error(’Jacobi will not converge’); end;

% nargin = number of input arguments of the function

% default values of the arguments

if nargin==4 , x=zeros(size(b));end;

if nargin==3 , x=zeros(size(b));MaxIter=200;end;

if nargin==2 , x=zeros(size(b));MaxIter=200;tol=1.e-4;end;

M=diag(diag(A));

% Initialization

iter=0;r=b-A*x;

% Iterations

while (norm(r)>tol)&(iter<MaxIter)

y=M\r;

x=x+y;

r=r-A*y;

iter=iter+1;

end;

We obtain the following results

>> n=20;A=laplacian1dD(n);xx=(1:n)’/(n+1);b=xx.*sin(xx);sol=A\ b;

>> tol =1.e-2;x=Jacobi(A,b,tol,1000);norm(x-sol), norm(inv(A))*tol

ans =

0.0010

ans =

0.0010

>> tol =1.e-3;x=Jacobi(A,b,tol,1000);norm(x-sol), norm(inv(A))*tol

ans =

1.0148e-04

56

CHAPTER 8.

EXERCISES OF CHAPTER 8

ans =

1.0151e-04

>> tol =1.e-4;x=Jacobi(A,b,tol,1000);norm(x-sol), norm(inv(A))*tol

ans =

1.0148e-05

ans =

1.0151e-05

which show, on the one hand, that we can approximate very accurately the

exact solution, provided tol is small enough and MaxIter is large enough,

and on the other hand that the upper bound (8.6) is very sharp.

Solution of Exercise 8.5 Example of implementation of the Jacobi method.

function [x, iter]=Jacobi(A,b,tol,MaxIter,x)

% Computes by the Jacobi method the solution of Ax=b

% tol = ε of the termination criterion

% MaxIter = maximum number of iterations

% x = x0

[m,n]=size(A);

if m~=n, error(’the matrix is not square’), end;

if abs(det(A)) < 1.e-12

error(’the matrix is singular’)

end;

if ~(JacobiCvg(A)) , error(’Jacobi will not converge’); end;

% nargin = number of input arguments of the function

% default values of the arguments

if nargin==4 , x=zeros(size(b));end;

if nargin==3 , x=zeros(size(b));MaxIter=200;end;

if nargin==2 , x=zeros(size(b));MaxIter=200;tol=1.e-4;end;

M=diag(diag(A));

% Initialization

iter=0;r=b-A*x;

% Iterations

while (norm(r)>tol)&(iter<MaxIter)

y=M\r;

x=x+y;

r=r-A*y;

iter=iter+1;

end;

We obtain the following results

>> n=20;A=laplacian1dD(n);xx=(1:n)’/(n+1);b=xx.*sin(xx);sol=A\ b;

>> tol =1.e-2;x=Jacobi(A,b,tol,1000);norm(x-sol), norm(inv(A))*tol

ans =

0.0010

57

ans =

0.0010

>> tol =1.e-3;x=Jacobi(A,b,tol,1000);norm(x-sol), norm(inv(A))*tol

ans =

1.0148e-04

ans =

1.0151e-04

>> tol =1.e-4;x=Jacobi(A,b,tol,1000);norm(x-sol), norm(inv(A))*tol

ans =

1.0148e-05

ans =

1.0151e-05

which show, on the one hand, that we can approximate very accurately the

exact solution, provided tol is small enough and MaxIter is large enough,

and on the other hand that the upper bound (8.6) is very sharp.

Solution of Exercise 8.7

1. We start by programming the method.

function [x, iter]=RelaxJacobi(A,b,w,tol,MaxIter,x)

[m,n]=size(A);

if m~=n, error(’the matrix is not suqare’), end;

if abs(det(A)) < 1.e-12

error(’the matrix is singular’)

end;

if ~w, error(’omega=0’);end;

% nargin = number of input arguments of the function

% Default values of the arguments

if nargin==5 , x=zeros(size(b));end;

if nargin==4 , x=zeros(size(b));MaxIter=200;end;

if nargin==3 , x=zeros(size(b));MaxIter=200;tol=1.e-4;end;

if nargin==2 , x=zeros(size(b));MaxIter=200;tol=1.e-4;w=1;end;

D=diag(diag(A));

M=D/w;

% Initialization

iter=0;r=b-A*x;

% Iterations

while (norm(r)>tol)&(iter<MaxIter)

y=M\r;

x=x+y;

r=r-A*y;

iter=iter+1;

end;

if isnan(norm(r))

% this test is justified later

iter=MaxIter;

58

CHAPTER 8.

EXERCISES OF CHAPTER 8

end

Figure 8.1 is obtained by the following script

>>

>>

>>

>>

>>

>>

>>

>>

>>

>>

n=10;A=laplacian1dD(n);xx=(1:n)’/(n+1);b=xx.*sin(xx);sol=A\b;

pas=0.1;

for i=1:20

omega(i)=i*pas;

[x, iter]=RelaxJacobi(A,b,omega(i),1.e-4,1000,zeros(size(b)));

itera(i)=iter;

end;

plot(omega,itera,’-+’,’MarkerSize’,10,’LineWidth’,3)

grid on

set(gca,’XTick’,0:.4:2,’YTick’,200:200:1000,’FontSize’,24);

1000

800

600

400

200

0

0.4

0.8

1.2

1.6

2

Fig. 8.1. Relaxation of the Jacobi method: number of iterations in terms of ω.

It seems that the optimal value is close to ω = 1, i.e., the Jacobi method

is optimal. For ω = 0.3 and ω = 1.7 we have

>> [x, iter]=RelaxJacobi(A,b,.3,1.e-4,1000,zeros(size(b)));

>> [iter, norm(x), norm(A*x-b)]

ans =

737.0000

0.0847

0.0001

>> [x, iter]=RelaxJacobi(A,b,1.7,1.e-4,1000,zeros(size(b)));

>> [iter, norm(x), norm(A*x-b)]

ans =

1000

NaN

NaN

If, for ω = 0.3, the computed solution is a good approximation of the exact

solution, it is not the case for ω = 1.7. The algorithm has in fact diverged,

since it computes whimsical values of order 10305 . Note that to calculate

kAxb k2 , Matlab returns the value Nan which means “not a number”. This

justifies the test placed at the end of function RelaxJacobi which checks

that the values computed are real numbers and not Nan.

59

2. Theoretical analysis.

a) The Jacobi matrix is J = D −1 (E + F ) and the relaxed Jacobi matrix

is

o

D −1 n 1 − ω

n

o

D + E + F = D−1 (1 − ω)D + ωE + ωF .

Jω =

ω

ω

They are linked by the relation