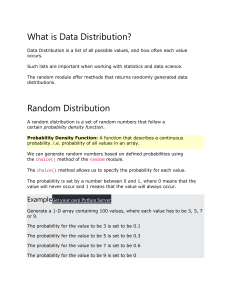

1. INTRODUCTION

MACHINE LEARNING:

Machine learning (ML) is a branch of artificial intelligence (AI) that enables computers to “self-learn” from training data and improve over time, without being explicitly programmed. Machine learning algorithms detect patterns in data and learn from them in order to make their own predictions. In short, machine learning algorithms and models learn through experience.

Machine learning is the ability of machines (i.e., computers or, ideally, computer programs) to learn from past behaviour or data and to predict future outcomes without being explicitly programmed to do so. Machine learning algorithms continually analyse and learn from data to improve their future predictions and outcomes automatically.

TYPES OF MACHINE LEARNING:

The types of machine learning algorithms differ in their approach, the type of data they take as input and produce as output, and the type of task or problem they are intended to solve. Broadly, machine learning can be divided into four categories:

I. Supervised Learning
II. Unsupervised Learning
III. Reinforcement Learning
IV. Semi-supervised Learning

Machine learning enables the analysis of massive quantities of data. While it generally delivers faster, more accurate results for identifying profitable opportunities or dangerous risks, it may also require additional time and resources to train properly.

Supervised Learning:

Supervised learning is a type of learning in which we are given a data set and already know what the correct output should look like, on the assumption that there is a relationship between the input and the output. It is the task of learning a function that maps an input to an output based on example input-output pairs. It infers a function from labeled training data consisting of a set of training examples.
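
As a minimal sketch of what "learning a function from example input-output pairs" can look like, the snippet below fits a straight line to a few labelled examples using ordinary least squares. The data (hours studied and exam scores) and the names `fit_line` and `predict` are made up for illustration; least squares is only one of many supervised techniques and is chosen here because it fits in a few lines.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalised).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labelled examples: hours studied (input) -> exam score (output).
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

slope, intercept = fit_line(hours, scores)


def predict(x):
    """Apply the learned function to a new, unseen input."""
    return slope * x + intercept


print(slope, intercept)   # learned parameters
print(predict(6))         # prediction for an input not in the training set
```

The labelled pairs play the role of the training examples, and `predict` is the inferred function that maps a new input to an output.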

Unsupervised Learning:

Unsupervised learning is a type of learning that allows us to approach problems with little or no idea of what our results should look like. We can derive structure by clustering the data based on relationships among the variables in the data. With unsupervised learning there is no feedback based on the prediction results. It is a type of self-organized learning that helps find previously unknown patterns in a data set without pre-existing labels.

Reinforcement Learning:

Reinforcement learning is a learning method in which an agent interacts with its environment by producing actions and discovering errors or rewards. Trial-and-error search and delayed reward are the most relevant characteristics of reinforcement learning. This method allows machines and software agents to automatically determine the ideal behavior within a specific context in order to maximize their performance. Simple reward feedback is required for the agent to learn which action is best.

Semi-Supervised Learning:

Semi-supervised learning falls somewhere between supervised and unsupervised learning, since it uses both labelled and unlabelled data for training, typically a small amount of labelled data and a large amount of unlabelled data. Systems that use this method can considerably improve learning accuracy. Semi-supervised learning is usually chosen when acquiring labelled data requires skilled and relevant resources, whereas acquiring unlabelled data generally does not require additional resources.

MACHINE LEARNING MODELS:

A machine learning model can be understood as a program that has been trained to find patterns within new data and make predictions. These models are represented as a mathematical function that takes requests in the form of input data, makes predictions on that data, and then provides an output in response.
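
To illustrate the idea of a model as a function from input data to a prediction, here is a toy sketch. The class `NearestNeighbourModel`, its `fit` and `predict` methods, and the fruit-weight data are all hypothetical; a nearest-neighbour rule is used only because it is short, not because this report relies on it.

```python
class NearestNeighbourModel:
    """Toy model: predicts the label of the closest training example."""

    def fit(self, inputs, labels):
        # "Training" here simply stores the labelled examples.
        self.examples = list(zip(inputs, labels))
        return self

    def predict(self, x):
        # Find the stored example whose input is closest to x.
        _, nearest_label = min(self.examples, key=lambda ex: abs(ex[0] - x))
        return nearest_label


# Hypothetical training data: fruit weight in grams -> fruit name.
weights = [110, 120, 150, 160]
names = ["lemon", "lemon", "orange", "orange"]

model = NearestNeighbourModel().fit(weights, names)

# The trained model takes input data and returns an output in response.
print(model.predict(115))
print(model.predict(158))
```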

First, a model is trained on a set of data with an algorithm that reasons over the data, extracts patterns from the data it is fed, and learns from them. Once trained, the model can be used to make predictions on unseen data.

2. DATA

INTRODUCTION:

Data is a crucial component in the field of machine learning. It refers to the set of observations or measurements that can be used to train a machine-learning model. The quality and quantity of data available for training and testing play a significant role in determining the performance of a machine-learning model. Data can take various forms, such as numerical, categorical, or time-series data, and can come from various sources such as databases, spreadsheets, or APIs. Machine learning algorithms use data to learn patterns and relationships between input variables and target outputs, which can then be used for prediction or classification tasks.

Data is typically divided into two types:

i. Labeled Data
ii. Unlabeled Data

LIMITATIONS OF TRADITIONAL DATA ANALYSIS

Data analytics is used in every industry to improve company performance, much as in a cricket match the captain and coach analyze the performance of the opposing team and then devise strategies for winning the match. A full list of the areas where data analytics is used would be very long; some of the major industries include banking, finance, insurance, telecom, healthcare, aerospace, retail, internet, and e-commerce companies. Now, let us look at the reasons why traditional analytics gave way to machine learning.

Limitations of traditional analytics:

Involved Huge Efforts, Time Consuming

Traditional data analytics involved huge effort from statisticians and was very time consuming, because it required a lot of human intervention and each job had to be done manually.
This became a liability for companies because of the additional cost of the statisticians, and the results were still not accurate.

Static Data Model

The models these statisticians created were static, so they had to be revamped and re-calibrated periodically to keep making good predictions. This was an additional cost for companies, which were already struggling with the performance of the models.

Struggle to Manage the Exponential Growth in the Volume, Velocity, and Variability of Data

With the exponential growth in the volume, velocity, and variability of data, traditional analytics struggled to manage and integrate the data using traditional methods. As a result, the models performed poorly.

Lack of Skilled Resources

A lack of skilled resources was one of the major reasons for the decline of traditional analytics. Companies found it difficult to hire skilled people, and they lacked knowledge of the advanced tools with which data analytics could be done easily.

Rise of Machine Learning:

Human Intervention Reduced, Dynamic Model

With the advent of machine learning, the challenges faced by traditional analytics were addressed. Machine learning uses complex statistical methods and new-age tools to provide better and more accurate solutions to these problems.

Using modern techniques and statistical methods, we can give machines the capability to learn and to make accurate predictions using complex machine learning algorithms. Modern machine learning algorithms help companies make better, more accurate data-driven decisions with very little human effort. The models created by these techniques are dynamic and adapt to changing variables.

For example, with traditional analytics, statisticians had to perform each step of their analysis manually using the available analytical tools.
Also, the process was not streamlined, so they had to spend too much time going back and forth over the initial steps. The models were not dynamic either: having built a model on the available data, they had to check its performance against new data every month or every quarter and make changes to the model. This was an additional cost for companies, as it meant hiring a data analyst to update and maintain the model.

But with machine learning, the steps involved in creating the model were pre-defined, and for each step there were different algorithms. The models built with these algorithms were more powerful and more accurate, as they used complex statistical techniques. Human intervention was greatly reduced and, because the process was streamlined, companies saved a lot of time and money. Additionally, machine learning used new-age tools that produced better predictions and accurate results in much less time.

Managing the Exponential Growth in the Volume, Velocity, and Variability of Data

One more area where traditional analytics faltered was working with large volumes of data. With the increase in volume, velocity, and variability of data, the older tools (e.g. Minitab, MATLAB, IBM SPSS) failed to manage the data. If the data was structured, the tools could manage and analyze it up to a certain volume, but beyond that they faltered. For unstructured data the tools had no answer at all, and very few techniques or methods were known to statisticians.

With modern tools (e.g. Python, R, KNIME, Julia) and techniques, we can manage mammoth data sets, whether structured or unstructured; machine learning has a solution for each of these problems. This is what the evolution of data analytics is all about. Computing is getting better and better with time.

We have talked about the advantages of machine learning and how it is impacting our lives today; now let us talk about the challenges in machine learning.

Limitations of Machine Learning:

Large Data Requirement

Fetching relevant data for training a model can be a challenge. For the model to make good predictions, we have to train it on enough data that is relevant to the problem. If sufficient data is not available, the model built will not perform correctly.

Lack of Trained Resources

ML techniques give us results, but the interpretation and understanding of those results vary from person to person. Not having a trained resource can become a major challenge: even if the correct algorithm was applied, a solution that rests on an untrained person's judgement and understanding might not be correct. Additionally, the selection of the algorithm depends solely on the data scientist's decision. If that person is not trained, the solution might be incorrect because the proper algorithm was not selected. Companies do face the challenge of finding people who are sound both technically and statistically.

Reliance on the Results Obtained by the ML Techniques

We tend to rely on the results obtained by ML techniques more than on our own judgement and experience. Suppose we build a model using the variables selected by the ML algorithm, and the algorithm leaves out a variable that the data scientist considers critical for the model. Situations of this kind do occur if we rely completely on ML techniques.

Treatment of Missing Fields

Lastly, when we have missing fields in our data, we use machine learning to replace the missing values with alternative values. Imputing missing values can bias the data and hence affect our models and, in turn, the results.
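
A small sketch of how imputation can distort data: the snippet below fills missing entries with the mean of the observed values and compares the spread before and after. The `ages` column, the use of `None` to mark a missing field, and the helper `impute_mean` are assumptions made for illustration; mean imputation is just one simple technique among several.

```python
from statistics import mean, pvariance


def impute_mean(values):
    """Replace None entries with the mean of the observed entries."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


# Hypothetical column with two missing readings (None marks a missing field).
ages = [22, None, 35, 41, None, 58]

observed = [v for v in ages if v is not None]
imputed = impute_mean(ages)

print(imputed)
# Mean imputation keeps the average unchanged but shrinks the variance,
# which is one way the imputed data can end up biased.
print(pvariance(observed), pvariance(imputed))
```

Every filled-in value sits exactly at the mean, so the column looks less variable than the observed data suggests, and any model trained on it inherits that distortion.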

Conclusions and inferences might change if records with missing data are used as they are, so the missing values are treated using ML techniques selected by the data scientist. Because the choice of imputation technique depends on the data scientist's judgement, it can become a disadvantage for the model.

We have talked about missing data, but what do we mean by that?

Missing data:

Whenever data is not available or not present for a field, we say that the data is missing for that field. It is sometimes represented by “Blank”, “N/A”, “n.a.” (Not Available) or even “-”. This can be the case for both structured and unstructured data.

For example, when we look at the bowling statistics for M.S. Dhoni in T20 internationals, we find missing data. The screenshot is given below for your reference. The data is not available because Dhoni hasn’t bowled in any T20 match, so when we structure the data the values for Dhoni are missing.

There can be any number of reasons why some values of a variable are missing: the data may be unavailable, or it may not have been captured. As data scientists building a model from such datasets, we always have to replace the missing values with other values in such a way that the replacement has little or no impact on the model. There are different techniques for imputing or replacing missing values, which we are going to discuss later.

After ML, what next?

As data science and computing progress, new-age technologies are making breakthroughs. Researchers are constantly working on next-level techniques that involve minimal human intervention and give better, more accurate results. Deep learning, and now AI, is a breakthrough in the world of data science. It keeps improving, and deep learning-based techniques are reducing the time and cost of data analysis. The progress can be summarized in the diagram given below.

3. INTRODUCTION TO PYTHON

INTRODUCTION

Python is a high-level programming language with high-level built-in data structures and dynamic binding. It is an interpreted, object-oriented programming language. Python distinguishes itself from other programming languages by its syntax, which is easy to write and understand and makes it appealing to beginners and experienced programmers alike. Python's wide applicability and library support allow highly versatile and scalable software and products to be built on top of it.

DATA TYPES:

ARITHMETIC OPERATORS:

Some examples are shown below:

BASIC LIBRARIES IN PYTHON:

The basic libraries in Python are as follows:

I. Pandas
II. Numpy
III. Matplotlib

PANDAS:

Pandas is a BSD (Berkeley Software Distribution) licensed open-source library. This popular library is widely used in the field of data science, primarily for data analysis, manipulation, cleaning, etc. Pandas allows simple data modeling and data analysis operations without the need to switch to another language such as R.

Pandas is typically used with the following types of data:

Data in a dataset.
Time series containing both ordered and unordered data.
Matrix data with labelled rows and columns.
Unlabelled data.
Any other type of statistical data.

Pandas can do a wide range of tasks, including:

Slicing a data frame.
Joining and merging data frames.
Concatenating columns from two data frames.
Changing index values in a data frame.
Changing column headers.
Converting data into various forms, and many more.

NUMPY:

NumPy is one of the most widely used open-source Python libraries, focusing on scientific computation. It features built-in mathematical functions for matrices and multi-dimensional data. The name NumPy stands for Numerical Python.
It can be used for linear algebra, as a multi-dimensional container for generic data, and as a random number generator, among other things. Some of the important functions in NumPy are arcsin(), arccos(), tan(), radians(), etc. A NumPy array is a Python object that defines an N-dimensional array with rows and columns. In Python, NumPy arrays are preferred over lists because they take up less memory and are faster and more convenient to use.

Features:

1. Interactive: NumPy is a very interactive and user-friendly library.
2. Mathematics: NumPy simplifies the implementation of difficult mathematical equations.
3. Intuitive: It makes coding and understanding topics a breeze.
4. A lot of Interaction: Because it is widely used, there is a lot of interaction around it and hence a lot of open-source contribution.

The NumPy interface can be used to represent images, sound waves, and other binary raw streams as an N-dimensional array of real values for visualization. Full-stack developers need NumPy knowledge to use this library for machine learning.

CONDITIONAL STATEMENTS:

if Statement

The if statement is a conditional statement in Python that is used to determine whether a block of code will be executed or not. If the program finds the condition defined in the if statement to be true, it executes the code block inside the if statement.

Syntax:

    if condition:
        # execute code block

if-else Statement

As discussed above, the if statement executes the code block when the condition is true. Similarly, the else statement works in conjunction with the if statement to execute a code block when the if condition is false.

Syntax:

    if condition:
        # execute code if condition is True
    else:
        # execute code if condition is False

if-elif-else ladder

The elif statement is used to check multiple conditions; the code block under the first condition that evaluates to true is executed.
The elif statement is similar to the else statement in that it is optional, but unlike else, there can be multiple elif statements following an if statement.

Syntax:

    if condition1:
        # execute this statement
    elif condition2:
        # execute this statement
    ..
    ..
    else:
        # if none of the above conditions evaluate to True execute this statement

Nested if Statements:

A nested if statement is an if statement inside another if statement. These are generally used to check for multiple conditions.

Syntax:

    if condition1:
        if condition2:
            # execute code if both condition1 and condition2 are True

DESCRIPTIVE STATISTICS:

Descriptive statistics is a means of describing the features of a data set by generating summaries of data samples. It is often presented as a summary that explains the contents of the data. For example, a population census may include descriptive statistics on the ratio of men to women in a specific city.

TYPES OF DESCRIPTIVE STATISTICS:

All descriptive statistics are either measures of central tendency or measures of variability, also known as measures of dispersion.

Central Tendency

Measures of central tendency focus on the average or middle values of data sets, whereas measures of variability focus on the dispersion of data. Both kinds of measure use graphs, tables and general discussion to help people understand the meaning of the analyzed data.

Measures of central tendency describe the center position of a distribution for a data set. A person analyzes the frequency of each data point in the distribution and describes it using the mean, median, or mode, which capture the most common patterns in the analyzed data set.

Measures of Variability

Measures of variability (or measures of spread) help in analyzing how dispersed the distribution of a data set is.
For example, while measures of central tendency may give a person the average of a data set, they do not describe how the data is distributed within the set. So while the average of the data may be 65 out of 100, there can still be data points at both 1 and 100. Measures of variability help communicate this by describing the shape and spread of the data set. Range, quartiles, absolute deviation, and variance are all examples of measures of variability.

Consider the following data set: 5, 19, 24, 62, 91, 100. The range of that data set is 95, which is calculated by subtracting the lowest number (5) from the highest (100).

Distribution

Distribution (or frequency distribution) refers to the number of times a data point occurs. Alternatively, it is the measurement of a data point failing to occur. Consider a data set: male, male, female, female, female, other. The distribution of this data can be classified as:

The number of males in the data set is 2.
The number of females in the data set is 3.
The number of individuals identifying as other is 1.
The number of non-males is 4.

4. DATA EXPLORATION AND PREPROCESSING

TARGET VARIABLE:

The target variable is the feature of a dataset that you want to understand more clearly. It is the variable that the user wants to predict using the rest of the dataset. In most situations, a supervised machine learning algorithm is used to predict the target variable. Such an algorithm uses historical data to learn patterns and uncover relationships between the other parts of the dataset and the target. Target variables may vary depending on the goal and the available data.

Data exploration, also known as exploratory data analysis (EDA), is a process in which users look at and understand their data with statistical and visualization methods. This step helps identify patterns and problems in the dataset and decide which model or algorithm to use in subsequent steps.
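
The range and frequency-distribution examples from the descriptive statistics discussion are the kind of summaries usually computed first during data exploration. The sketch below reproduces them with Python's standard library only; the variable names are illustrative, and in practice a library such as Pandas would typically be used for the same purpose.

```python
from collections import Counter
from statistics import mean, median

# Numeric data set taken from the range example in the text.
values = [5, 19, 24, 62, 91, 100]

value_range = max(values) - min(values)  # highest minus lowest
print("mean:", mean(values))
print("median:", median(values))
print("range:", value_range)

# Categorical data set taken from the distribution example in the text.
genders = ["male", "male", "female", "female", "female", "other"]

counts = Counter(genders)  # how many times each value occurs
non_males = sum(n for label, n in counts.items() if label != "male")

print(counts)
print("non-males:", non_males)
```

The mean and median summarise central tendency, the range summarises variability, and the counter gives the frequency distribution, including the count of a value failing to occur (non-males).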