BABU BANARSI DAS INSTITUTE OF TECHNOLOGY, GHAZIABAD

(AFFILIATED TO U.P. TECHNICAL UNIVERSITY, LUCKNOW)

(Established in 2000)

“REAL TIME SPEAKER RECOGNITION”

A project report submitted in partial fulfillment of the requirement for the

Award of Degree of

Bachelor of Technology

In

Electronics and Communication Engineering

Academic session 2008-2012

Submitted By:

Rohit Singh (0803531072)

Harish Kumar (0803531409)

Samreen Zehra (0803531074)

Project guides:

Mr. Prashant Sharma

Mr. Anil Kumar Pandey

DEPARTMENT OF ELECTRONICS AND COMMUNICATION ENGINEERING

MAY 2012


CERTIFICATE

This is to certify that Rohit Singh, Harish Kumar and Samreen Zehra of the Department of Electronics and Communication Engineering of this institute have together carried out the project work presented in this report, entitled 'Real Time Speaker Recognition', in partial fulfillment of the award of the degree of Bachelor of Technology in Electronics and Communication Engineering from UPTU, Lucknow, under our supervision. The report embodies the results of work and studies carried out by the students themselves, and its content does not form part of any other degree awarded to these candidates or to anybody else.

Project guides:

Mr. Prashant Sharma (AP, ECE)

Mr. Anil Kumar Pandey (AP, ECE)


ACKNOWLEDGEMENT

We have put considerable effort into this project. However, it would not have been possible without the kind support and help of many individuals. We would like to extend our sincere thanks to all of them.

We are highly indebted to Mr. K. L. Pursnani, Head of the Department of Electronics and Communication Engineering, BBDIT, Ghaziabad, for his guidance and constant supervision, for providing necessary information regarding the project, and for his support in completing the project.

We would like to express our special gratitude and thanks to our project guides, Mr. Prashant Sharma and Mr. Anil Kumar Pandey, for giving us so much attention and time. Both of our guides took great pains to help us in the best way possible; without them this project would never have been realized.

We would like to express our gratitude towards our parents and friends for their kind cooperation and encouragement, which helped us complete this project.

Our thanks and appreciation also go to our college for its help in developing the project and to the people who have willingly helped us with their abilities.

Rohit Singh (0803531072)

Harish Kumar (0803531409)

Samreen Zehra (0803531074)


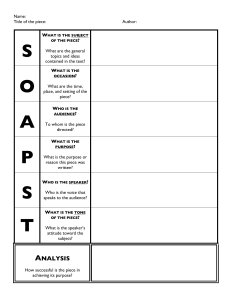

CONTENTS

1. Introduction
    1.1 Project overview
    1.2 Application
2. Methodology
    2.1 Algorithm
    2.2 Flow Chart
3. Identification background
    3.1 DSP Fundamentals
        3.1.1 Basic Definitions
        3.1.2 Convolution
        3.1.3 Discrete Fourier Transform
    3.2 Human Speech Production Model
        3.2.1 Anatomy
        3.2.2 Vocal Model
    3.3 Speaker Identification
4. Speech Feature Extraction
    4.1 Introduction
    4.2 Short term analysis
    4.3 Cepstrum
        4.3.1 Delta Cepstrum
    4.4 Mel-Frequency Cepstrum Coefficients
        4.4.1 Computing of mel cepstrum coefficient
    4.5 Framing and Windowing
5. Vector Quantization
6. K-means
    6.1 Mean shift clustering
    6.2 Bilateral filtering
    6.3 Speaker matching
7. Euclidean distance
    7.1 One dimension
    7.2 Two dimension
    7.3 Three dimension
    7.4 N dimensions
    7.5 Squared Euclidean distance
    7.6 Decision making
8. Result and Conclusion
    8.1 Result
    8.2 Pruning Basis
        8.2.1 Static Pruning
        8.2.2 Adaptive Pruning
    8.3 Conclusion

LIST OF FIGURES

1.1 Identification Taxonomy
3.1 Vocal Tract Model
3.2 Multi tube Lossless Model
3.3 Source Filter Model
3.4 Enrollment Phase
3.5 Identification Phase
4.1 Short Term Analysis
4.2 Speech Magnitude Spectrum
4.3 Cepstrum
4.4 Computing of mel-cepstrum coefficient
4.5 Triangular Filter used to compute mel-cepstrum
4.6 Quasi Stationary
5.1 Vector Quantization of Two Speakers
7.1 Decision Process
8.1 Distance stabilization
8.2 Evaluation of static variant using different pruning intervals
8.3 Examples of the matching score distribution

CHAPTER 1

INTRODUCTION

1.1 Project overview

The human speech conveys different types of information. The primary type is the meaning or

words, which speaker tries to pass to the listener. But the other types that are also included in

the speech are information about language being spoken, speaker emotions, gender and

identity of the speaker. The goal of automatic speaker recognition is to extract, characterize

and recognize the information about speaker identity. Speaker recognition is usually divided

into two different branches:

Speaker verification

Speaker identification.

Speaker verification task is to verify the claimed identity of person from his voice. This

process involves only binary decision about claimed identity. In speaker identification there is

no identity claim and the system decides who the speaking person is.

Speaker identification can be further divided into two branches. Open-set speaker

identification decides to whom of the registered speakers‟ unknown speech sample belongs or

makes a conclusion that the speech sample is unknown. In this work,

we deal with the closed-set speaker identification, which is a decision making process of who

of the registered speakers is most likely the author of the unknown speech sample. Depending

on the algorithm used for the identification, the task can also be divided into text-dependent

and text-independent identification. The difference is that in the first case the system knows

the text spoken by the person while in the second case the system must be able to recognize

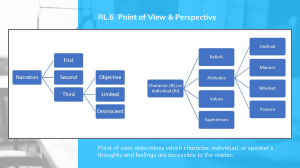

the speaker from any text. This taxonomy is

Represented in Figure 1.1

7

[Figure 1.1: tree diagram. Speaker Recognition branches into Speaker verification and Speaker identification; the identification branch shows closed-set and open-set identification, and text-dependent and text-independent identification.]

Fig. 1.1 Identification Taxonomy

Speaker recognition is broadly divided into two classes, speaker verification and speaker identification. It is the method of automatically identifying who is speaking on the basis of individual information contained in speech waves. Speaker recognition makes it possible to use a speaker's voice to verify their identity and to control access to services such as banking by telephone, database access services, voice dialing, telephone shopping, information services, voice mail, security control for confidential information areas, and remote access to computers. AT&T and TI, with Sprint, have started field tests and actual application of speaker recognition technology; many customers are already using Sprint's Voice Phone Card.

Speaker recognition technology has great potential to create new services that will make our everyday lives more secure. Another important application of speaker recognition technology is in forensics. Speaker recognition has been an appealing research field for the last few decades, and it still presents a number of unsolved problems.

The main aim of this project is speaker identification, which consists of comparing a speech signal from an unknown speaker to a database of known speakers. The system can recognize any speaker with whose speech it has been trained. The figure below shows the basic structure of speaker identification and verification systems. Speaker identification is the process of determining which registered speaker provides a given utterance. Speaker verification, on the other hand, is the process of accepting or rejecting the identity claim of a speaker. Most applications in which voice is used as the key to confirm the identity of a speaker are classified as speaker verification.

The structure of speaker identification and verification systems can also be extended with the open-set identification case, in which a reference model for the unknown speaker may not exist. This is usually the case in forensic applications. In this circumstance an additional decision alternative, that the unknown speaker does not match any of the models, is required. In both verification and identification, a further threshold test can be used to decide whether the match is close enough to accept the decision or whether more speech data are needed.

Speaker recognition methods can also be divided into text-dependent and text-independent methods. In the text-dependent method the speaker has to say key words or sentences having the same text for both training and recognition trials, whereas the text-independent method does not rely on a specific text being spoken. Formerly text-dependent methods were widely used, but text-independent methods have since come into use. Both text-dependent and text-independent methods share a problem, however.

Such a system can easily be deceived by playing back the recorded voice of a registered speaker. Different techniques are used to cope with this problem. For example, a small set of words or digits is used as input, and each user is prompted to utter a specified sequence of key words that is randomly selected every time the system is used. Still, this method is not completely reliable: it can be deceived by advanced electronic recording systems that can reproduce the secret key words in the requested order.

1.2 Application

The most obvious practical applications of automatic speaker identification are security systems of various kinds. The human voice can serve as a key to any secured object, and in general it is not easy to lose or forget. Another important property of speech is that it can be transmitted over a telephone channel, for example. This makes it possible to identify speakers automatically and to provide access to secured objects by telephone. This approach is now beginning to be used for telephone credit card purchases and bank transactions. The human voice can also be used to prove identity for access to physical facilities by storing the speaker model on a small chip, which can be used as an access tag in place of a PIN code. Another important application of speaker identification is monitoring people by their voices. For instance, it is useful in information retrieval: recorded debates or news can be indexed by speaker, and then only the speech of the speakers of interest is retrieved. It can also be used to monitor criminals in public places by identifying them by their voices.

All of these are in fact examples of real-time systems. For any identification system to be useful in practice, the response time, that is, the time spent on the identification, should be minimized. The growing size of the speaker database is the major limitation.

CHAPTER 2

METHODOLOGY

2.1 Algorithm

1. Record the test sound through a microphone.

2. Convert the recorded sound into .wav format.

3. Load the recorded sound files from the database.

4. Extract features from the test file for recognition.

5. Extract features from all the files stored in the database.

6. Find the centroids of the test file using any clustering algorithm.

7. Find the centroids of the sample files stored in the database so that codebooks can be generated.

8. Calculate the Euclidean distance between the test file and each individual sample in the database.

9. Find the sample having the minimum distance from the test file.

10. The sample corresponding to the minimum distance is most likely the author of the test sound.
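The matching steps (8 to 10) can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the project's implementation: the names `euclidean`, `average_distortion` and `identify` are hypothetical, the feature vectors and codebooks are toy two-dimensional values rather than real MFCC data, and the test features are compared directly against each stored codebook (a common vector-quantization variant) instead of first clustering the test file.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def average_distortion(features, codebook):
    """Mean distance from each feature vector to its nearest centroid."""
    total = 0.0
    for vec in features:
        total += min(euclidean(vec, centroid) for centroid in codebook)
    return total / len(features)

def identify(test_features, codebooks):
    """Return the enrolled speaker whose codebook gives the smallest distortion."""
    scores = {name: average_distortion(test_features, cb)
              for name, cb in codebooks.items()}
    best = min(scores, key=scores.get)
    return best, scores

# Toy database: one codebook (list of centroids) per enrolled speaker.
codebooks = {
    "speaker_a": [(0.0, 0.0), (1.0, 1.0)],
    "speaker_b": [(5.0, 5.0), (6.0, 4.0)],
}
# Toy feature vectors extracted from the test recording.
test_features = [(0.1, -0.1), (0.9, 1.2), (0.2, 0.1)]

best, scores = identify(test_features, codebooks)
print(best, scores)
```

Averaging the nearest-centroid distance over all test vectors makes the score independent of the length of the test recording, which is one reason this form of the distance is often preferred.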


2.2 Flowchart


CHAPTER 3

IDENTIFICATION BACKGROUND

In this chapter we discuss the theoretical background of speaker identification. We start with digital signal processing theory. Then we move to the anatomy of the human voice production organs and discuss the basic properties of the human speech production mechanism and techniques for modeling it. This model will be used in the next chapter, where we discuss techniques for extracting speaker characteristics from the speech signal.

3.1 DSP Fundamentals

As its name suggests, Digital Signal Processing (DSP) is a part of computer science that operates on a special kind of data: signals. In most cases, these signals are obtained from various sensors, such as a microphone or a camera. DSP is the mathematics, together with the algorithms and special techniques, used to manipulate these signals once they have been converted to digital form.

3.1.1 Basic Definitions

By a signal we mean here a description of how one parameter is related to another. One of these parameters is called the independent parameter (usually it is time), and the other is called the dependent parameter and represents what we are measuring. When both parameters take values from a continuous range, we call the signal a continuous signal. When a continuous signal is passed through an analog-to-digital converter (ADC), it becomes a discrete, or digitized, signal. The conversion works in the following way: at regular intervals, whose rate is called the sampling frequency, the signal value is taken and quantized by selecting an appropriate value from a finite range of possible values. The size of this range is called the quantization precision and is usually expressed as the number of bits available to store one signal value. According to the Nyquist-Shannon sampling theorem, a digital signal can contain frequency components only up to one half of the sampling rate. Generally, continuous signals are what we have in nature, while discrete signals exist mostly inside computers. Signals that use time as the independent parameter are said to be in the time domain, while signals that use frequency as the independent parameter are said to be in the frequency domain. One of the important definitions used in DSP is that of a linear system. By a system we mean here any process that produces an output signal in response to a given input signal. A system is called linear if it satisfies homogeneity and additivity; the systems considered in DSP are usually also assumed to be shift invariant.
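The sampling and quantization step described above can be illustrated with a short Python sketch. The function name `sample_and_quantize`, the 440 Hz test tone, the 8000 Hz sampling frequency and the 8-bit precision are all assumptions chosen for illustration; they are not values taken from the project.

```python
import math

def sample_and_quantize(signal, duration_s, fs_hz, bits, full_scale=1.0):
    """Sample signal(t) at fs_hz and map each sample to one of 2**bits integer levels."""
    levels = 2 ** bits
    step = 2 * full_scale / (levels - 1)      # spacing between adjacent levels
    n_samples = round(duration_s * fs_hz)
    codes = []
    for n in range(n_samples):
        t = n / fs_hz                          # time of the n-th sample
        x = max(-full_scale, min(full_scale, signal(t)))  # clip to the ADC range
        codes.append(round((x + full_scale) / step))      # integer in 0..levels-1
    return codes

def tone(t):
    """A 440 Hz sine wave standing in for the continuous input signal."""
    return math.sin(2 * math.pi * 440.0 * t)

fs_hz = 8000
codes = sample_and_quantize(tone, 0.01, fs_hz, bits=8)
nyquist_hz = fs_hz / 2   # highest frequency the sampled signal can represent
print(len(codes), min(codes), max(codes), nyquist_hz)
```

With these assumed values the 440 Hz tone lies well below the 4000 Hz limit given by the sampling theorem, so it is represented without aliasing.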

3.1.2 Convolution

An impulse is a signal composed of all zeros except for a single non-zero point. Every signal can be decomposed into a group of impulses; each impulse is passed through the linear system, and the resulting output components are added together. The resulting signal is exactly the same as the one obtained by passing the original signal through the system. Every impulse can be represented as a shifted and scaled delta function, which is a normalized impulse: sample number zero has a value of one and all other samples have a value of zero. When the delta function is passed through a linear system, the output is called the impulse response. Two different systems have different impulse responses. Because the system is linear and shift invariant, scaling and shifting the input impulse produces an identically scaled and shifted impulse response at the output. This means that if we know a system's impulse response, we know everything about the system. Convolution is a formal mathematical operation that describes the relationship between three signals of interest: the input signal, the output signal, and the impulse response of the system. It is usually said that the output signal is the input signal convolved with the system's impulse response. The mathematics behind convolution does not restrict how long the impulse response is; it only says that the length of the output signal is the length of the input signal plus the length of the impulse response minus one. Convolution is a very important concept in DSP. Based on the properties of linear systems, it provides a way of combining two signals to form a third signal, and much of the mathematics behind DSP is based on it.
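A direct implementation makes the decomposition into scaled and shifted impulse responses concrete. The sketch below is illustrative only; the function name `convolve` and the sample values are assumptions, and a practical system would use an optimized library routine.

```python
def convolve(x, h):
    """Convolve input x with impulse response h by summing scaled, shifted copies of h."""
    y = [0.0] * (len(x) + len(h) - 1)   # output length: len(x) + len(h) - 1
    for i, xi in enumerate(x):          # each input sample is an impulse of height xi
        for j, hj in enumerate(h):      # it contributes xi * h, shifted by i samples
            y[i + j] += xi * hj
    return y

x = [1.0, 2.0, 3.0]    # input signal
h = [1.0, -1.0]        # impulse response of a first-difference system
y = convolve(x, h)
print(y)               # [1.0, 1.0, 1.0, -3.0]

# Feeding the delta function through the system returns the impulse response itself.
delta = [1.0]
print(convolve(delta, h))
```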

3.1.3 Discrete Fourier Transform

The Fourier transform belongs to the family of linear transforms widely used in DSP that are based on decomposing a signal into sinusoids (sine and cosine waves). In DSP we usually use the Discrete Fourier Transform (DFT), a special kind of Fourier transform used to deal with periodic discrete signals. There are in fact infinitely many ways in which a signal can be decomposed, but sinusoids are selected because of their sinusoidal fidelity: a sinusoidal input to a linear system produces a sinusoidal output in which only the amplitude and phase may change, while the frequency and shape remain the same.
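As an illustration of the decomposition into sinusoids, the following sketch computes the DFT directly from its definition. It is a deliberately naive O(N^2) version written for clarity (practical systems use the FFT), and the function name `dft`, the 16-sample length and the 3-cycle cosine are assumptions made for the example.

```python
import cmath
import math

def dft(x):
    """Discrete Fourier Transform computed directly from its definition."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

n = 16
# A cosine that completes exactly 3 cycles within the 16-sample frame.
x = [math.cos(2 * math.pi * 3 * t / n) for t in range(n)]

magnitudes = [abs(c) for c in dft(x)]
# Only bin 3 and its mirror image, bin n - 3 = 13, carry energy.
peak_bins = [k for k, m in enumerate(magnitudes) if m > 1e-6]
print(peak_bins)
```

A single real sinusoid therefore appears as one pair of spectral lines, which is what makes the frequency domain a convenient place to describe speech.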

3.2 Human Speech Production Model

Undoubtedly, the ability to speak is the most important way for humans to communicate with each other. Speech conveys various kinds of information: essentially the meaning the speaking person wants to impart, individual information representing the speaker, and also some emotional feeling. Speech production begins with the initial formalization of the idea that the speaker wants to impart to the listener. The speaker then converts this idea into an appropriate order of words and phrases according to the language. Finally, the brain produces motor nerve commands, which move the vocal organs in an appropriate way. Understanding how humans produce sounds forms the basis of speaker identification.

3.2.1 Anatomy

Sound is an acoustic pressure wave formed of compressions and rarefactions of air molecules that originate from movements of human anatomical structures. The most important components of the human speech production system are the lungs (source of air during speech), the trachea (windpipe), the larynx, whose most important part is the vocal cords (organ of voice production), the nasal cavity (nose), the soft palate or velum (allows passage of air through the nasal cavity), the hard palate (enables consonant articulation), the tongue, the teeth and the lips. All these components, called articulators by speech scientists, move to different positions to produce various sounds. Based on their production, speech sounds can also be divided into consonants and voiced and unvoiced vowels.

From the technical point of view, it is more useful to think about the speech production system in terms of acoustic filtering operations that affect the air flowing from the lungs. Three main cavities comprise the main acoustic filter: the nasal, oral and pharyngeal cavities.

The articulators are responsible for changing the properties of the system and forming its output. The combination of these cavities and articulators is called the vocal tract. Its simplified acoustic model is represented in Figure 3.1.

Fig. 3.1 Vocal Tract Model

Speech production can be divided into three stages: the first stage is sound source production, the second stage is articulation by the vocal tract, and the third stage is sound radiation, or propagation, from the lips and/or nostrils. A voiced sound is generated by the vibratory motion of the vocal cords, powered by the airflow generated by expiration. The frequency of oscillation of the vocal cords is called the fundamental frequency. Another type of sound, unvoiced sound, is produced by turbulent airflow passing through a narrow constriction in the vocal tract.

In a speaker recognition task, we are interested in the physical properties of the human vocal tract. In general it is assumed that the vocal tract carries most of the speaker-related information. However, all parts of the human vocal tract described above can serve as speaker-dependent characteristics, from the size and power of the lungs and the length and flexibility of the trachea to the size, shape and other physical characteristics of the tongue, teeth and lips. Such characteristics are called physical distinguishing factors. Other aspects of speech production that could be useful in discriminating between speakers are called learned factors, which include speaking rate, dialect, and prosodic effects.

3.2.2 Vocal Model

In order to develop an automatic speaker identification system, we should construct a reasonable model of the human speech production system. Having such a model, we can extract its properties from the signal and use them to decide whether or not two signals belong to the same model and, as a result, to the same speaker.

The modeling process is usually divided into two parts: excitation (or source) modeling and vocal tract modeling. This approach is based on the assumption that the source and the vocal tract models are independent. Let us look first at the continuous-time vocal tract model called the multi-tube lossless model, which is based on the fact that the production of speech is characterized by changes in the vocal tract shape. Because the formalization of such a time-varying vocal tract shape model is quite complex, in practice it is simplified to a series of concatenated lossless acoustic tubes with varying cross-sectional areas, as shown in Figure 3.2. This model consists of a sequence of tubes with cross-sectional areas A_k and lengths L_k. In practice the lengths of the tubes are assumed to be equal. If a large number of short tubes is used, we can approach a continuously varying cross-sectional area, but at the cost of a more complex model. The tract model serves as a transition to the more general discrete-time model, also known as the source-filter model, which is shown in Figure 3.3.

Fig. 3.2 Multi tube Lossless Model


In this model, the voice source is either a periodic pulse stream or uncorrelated white noise, or a combination of these. This assumption is based on the evidence from human anatomy that all types of sounds that can be produced by humans fall into three general categories: voiced, unvoiced and a combination of the two. Voiced signals can be modeled as a basic, or fundamental, frequency signal filtered by the vocal tract, and unvoiced signals as white noise also filtered by the vocal tract. Here E(z) represents the excitation function, H(z) represents the transfer function, and s(n) is the output of the whole speech production system [8]. Finally, we can think of the vocal tract as a digital filter that acts on the source signal, and of the produced sound as the filter output. Then, based on digital filter theory, we can extract the parameters of the system from its output.

Fig. 3.3 Source Filter Model
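In equation form, the source-filter relation can be sketched as follows. The symbol S(z), the z-transform of the output s(n), and the time-domain sequences e(n) and h(n) corresponding to E(z) and H(z) are notation assumed here for illustration:

$$S(z) = E(z)\,H(z) \quad\Longleftrightarrow\quad s(n) = e(n) * h(n) = \sum_{k} e(k)\,h(n-k)$$

Multiplication of the excitation and the transfer function in the z-domain corresponds to convolution of the source signal with the vocal tract impulse response in the time domain, which is the form used in the cepstral analysis of Chapter 4.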

3.3 Speaker Identification

Human speech conveys different types of information. The primary type is the meaning, or words, that the speaker tries to pass to the listener. Speech also carries information about the language being spoken and about the speaker's emotions, gender and identity. The goal of automatic speaker recognition is to extract, characterize and recognize the information about speaker identity. Speaker recognition is usually divided into two branches, speaker verification and speaker identification. The task of speaker verification is to verify the claimed identity of a person from his or her voice. This process involves only a binary decision about the claimed identity. In speaker identification there is no identity claim, and the system decides who the speaking person is. Speaker identification can be further divided into two branches. Open-set speaker identification decides to which of the registered speakers an unknown speech sample belongs, or concludes that the speaker is unknown. In this work, we deal with closed-set speaker identification, which is the process of deciding which of the registered speakers is most likely the author of the unknown speech sample. Depending on the algorithm used for the identification, the task can also be divided into text-dependent and text-independent identification. The difference is that in the first case the system knows the text spoken by the person, while in the second case the system must be able to recognize the speaker from any text.

The process of speaker identification is divided into two main phases:

1) Speaker enrollment

2) Speaker identification

During the first phase, speaker enrollment, speech samples are collected from the speakers and used to train their models. Features are extracted from the samples, modeled, and stored in the speaker database; the collection of enrolled models is called the speaker database. This process is represented in the following figure.

Fig. 3.4 Enrollment Phase

The second phase of speaker recognition, the identification phase, is shown in the figure below. In this phase a test sample from an unknown speaker is compared against the speaker database. Both phases include the same first step, feature extraction, which is used to extract speaker-dependent characteristics from speech. The main purpose of this step is to reduce the amount of test data while retaining speaker-discriminative information.

Fig. 3.5 Identification Phase

The two phases are closely related. For instance, the identification algorithm usually depends on the modeling algorithm used in the enrollment phase. This work mostly concentrates on the algorithms in the identification phase and their optimization.


CHAPTER 4

SPEECH FEATURE EXTRACTION

4.1 Introduction

The acoustic speech signal contains different kinds of information about the speaker. This includes “high-level” properties such as dialect, context, speaking style, the emotional state of the speaker and many others. A great amount of work has already been done in trying to develop identification algorithms based on the methods humans use to identify a speaker, but these efforts are mostly impractical because of their complexity and the difficulty of measuring the speaker-discriminative properties used by humans. A more useful approach is based on the “low-level” properties of the speech signal, such as pitch (the fundamental frequency of the vocal cord vibrations), intensity, formant frequencies and their bandwidths, spectral correlations, the short-time spectrum and others. From the point of view of automatic speaker recognition, it is useful to think of the speech signal as a sequence of features that characterize both the speaker and the speech. An important step in the recognition process is to extract sufficient information for good discrimination in a form and size amenable to effective modeling. The amount of data generated during speech production is quite large, while the essential characteristics of the speech process change relatively slowly and therefore require less data.

Feature extraction is therefore a process of reducing data while retaining speaker-discriminative information.

When dealing with speech signals there are some criteria that the extracted features should

meet. Some of them are listed below:

discriminate between speakers while being tolerant of intra-speaker variability,

easy to measure,

stable over time,

occur naturally and frequently in speech,

change little from one speaking environment to another,

not be susceptible to mimicry.


For MFCC feature extraction, we use the melcepst function from Voicebox. By its nature, the speech signal is slowly varying, or quasi-stationary: when speech is examined over a sufficiently short period of time (20-30 milliseconds), it has quite stable acoustic characteristics. This leads to a useful way of describing the speech signal, called “short-term analysis”, in which only a portion of the signal is used to extract signal features at one time. It works in the following way: a window of predefined length (usually 20-30 milliseconds) is moved along the signal with an overlap (usually 30-50% of the window length) between adjacent frames. Overlapping is needed to avoid losing information. The parts of the signal formed in this way are called frames. To prevent an abrupt change at the end points of a frame, it is usually multiplied by a window function. The operation of dividing the signal into short intervals is called windowing, and the resulting segments are called windowed frames (or sometimes just frames). Several window functions are used in the speaker recognition area, but the most popular is the Hamming window function, which is described by the following equation:

$$W(n) = 0.54 - 0.46\cos\left(\frac{2\pi n}{N-1}\right)$$

where N is the size of the window or frame. A set of features extracted from one frame is called a feature vector.
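The framing and windowing procedure can be sketched as follows. This is an illustrative stand-in, not the Voicebox code used in the project: the function names `hamming` and `frame_signal`, the 8000 Hz sampling frequency, the 25 ms frame length and the 50% overlap are assumptions chosen within the ranges quoted above.

```python
import math

def hamming(n_points):
    """Hamming window: W(n) = 0.54 - 0.46 * cos(2*pi*n / (N - 1))."""
    if n_points == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (n_points - 1))
            for n in range(n_points)]

def frame_signal(samples, frame_len, hop):
    """Split samples into overlapping frames and apply a Hamming window to each."""
    window = hamming(frame_len)
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        chunk = samples[start:start + frame_len]
        frames.append([s * w for s, w in zip(chunk, window)])
    return frames

fs_hz = 8000
frame_len = fs_hz * 25 // 1000   # 25 ms -> 200 samples
hop = frame_len // 2             # 50% overlap between adjacent frames

# One second of a 200 Hz sine wave standing in for recorded speech.
samples = [math.sin(2 * math.pi * 200 * n / fs_hz) for n in range(fs_hz)]
frames = frame_signal(samples, frame_len, hop)
print(len(frames), len(frames[0]))
```

The window tapers each frame towards 0.08 at both ends, which reduces the abrupt change at the frame boundaries; trailing samples that do not fill a complete frame are simply dropped in this sketch.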

4.2 Short Term Analysis

The speech signal, divided into frames (Frame 1, Frame 2, Frame 3, ..., Frame N), varies slowly over time (it is quasi-stationary): when the signal is examined over a short period of time (5-100 ms), it is fairly stationary. Speech signals are therefore often analyzed in short time segments, which is referred to as short-time spectral analysis.

Fig. 4.1 Short Term Analysis

4.3 Cepstrum

The speech signal s(n) can be represented as a “quickly varying” source signal e(n) convolved
with the “slowly varying” impulse response h(n) of the vocal tract, which is modeled as a
linear filter. We have access only to the output (the speech signal), and it is often
desirable to eliminate one of the components. Separating the source and the filter parameters
from the mixed output is in general a difficult problem when the components are combined by a
non-linear operation, but various techniques exist for components that are combined linearly.
The cepstrum is a representation of the signal in which these two components are resolved
into two additive parts. It is computed by taking the inverse DFT of the logarithm of the
magnitude spectrum of the frame. This is represented in the following equation:


$$\mathrm{cepstrum}(\mathrm{frame}) = \mathrm{IDFT}\left(\log\left|\mathrm{DFT}(\mathrm{frame})\right|\right)$$

Some explanation of the algorithm is needed. By moving to the frequency domain we change the
convolution into a multiplication. By then taking the logarithm we change the multiplication
into an addition, which is the desired division into additive components. We can then apply
the inverse DFT, a linear operator, knowing that it acts on the two parts individually and
that it maps the quickly varying and the slowly varying parts to different, hopefully
separate, regions of the new axis, which is called the quefrency axis.
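The three steps (DFT, logarithm of the magnitude, inverse DFT) can be sketched in pure Python as follows. This is an illustrative, unoptimized version with a naive O(N²) DFT; the small constant `eps` is an assumption added here to avoid taking the logarithm of zero, and the function names are hypothetical.

```python
import cmath
import math

def dft(x):
    """Naive discrete Fourier transform of a real or complex sequence."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
            for k in range(N)]

def idft(X):
    """Naive inverse discrete Fourier transform."""
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

def real_cepstrum(frame, eps=1e-12):
    """Cepstrum of one frame: IDFT(log|DFT(frame)|)."""
    log_magnitude = [math.log(abs(c) + eps) for c in dft(frame)]
    # For a real frame the log-magnitude spectrum is symmetric, so the
    # inverse transform is real up to rounding error.
    return [c.real for c in idft(log_magnitude)]
```

Because the logarithm turns products into sums, scaling a frame by a constant only adds the logarithm of that constant to the zeroth cepstral value and leaves the others unchanged.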

4.3.1 Delta Cepstrum

The cepstral coefficients provide a good representation of the local spectral properties of
the framed speech. However, it is well known that a large amount of information resides in
the transitions from one segment of speech to another. An improved representation can be
obtained by extending the analysis to include the temporal derivative of the cepstrum. The
delta cepstrum captures the changes between frames and is defined as:

$$\Delta C_s(n; m) = \frac{1}{2}\left\{ C_s(n; m+1) - C_s(n; m-1) \right\}, \qquad n = 1, \dots, Q$$

where m is the frame index and n is the index of the cepstral coefficient.

The result of the feature extraction is a series of vectors characteristic of the time-varying spectral properties of the speech signal.
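The delta cepstrum definition can be illustrated with a short sketch. Here `frames[m][n]` holds coefficient n of frame m; skipping the first and last frame, which lack one neighbor, is an assumption made for this example and not something the report specifies.

```python
def delta_cepstrum(frames):
    """Half the difference between the next and the previous frame,
    computed per coefficient for every interior frame."""
    deltas = []
    for m in range(1, len(frames) - 1):
        previous_frame, next_frame = frames[m - 1], frames[m + 1]
        deltas.append([0.5 * (nxt - prev)
                       for prev, nxt in zip(previous_frame, next_frame)])
    return deltas

# Three frames with two coefficients each give one delta vector (for frame 1).
print(delta_cepstrum([[0.0, 1.0], [1.0, 3.0], [4.0, 5.0]]))  # [[2.0, 2.0]]
```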


Fig. 4.2 Speech Magnitude Spectrum

We can see that the speech magnitude spectrum is a combination of slowly and quickly varying
parts. One problem remains: the two parts are multiplied together, and multiplication is not
a linear operation. We solve this by taking the logarithm of the product, as described
earlier. Finally, the result of the inverse DFT is shown in Fig. 4.3.

Fig. 4.3 Cepstrum

We can see that the two components are now clearly distinct.

4.4 Mel-Frequency Cepstrum Coefficients

In this project we use Mel Frequency Cepstral Coefficients (MFCC), which represent audio
based on human perception. They have been very successful in speaker recognition
applications. MFCC are derived from the Fourier transform of the audio clip. In this
technique the frequency bands are positioned logarithmically, whereas in the plain Fourier
transform they are spaced linearly. Because of this logarithmic spacing, MFCC approximate the
response of the human auditory system more closely than linearly spaced bands do, which
allows better processing of the data. The Mel cepstrum is calculated in the same way as the
real cepstrum, except that its frequency scale is warped to correspond to the Mel scale.

The Mel scale was proposed by Stevens, Volkmann and Newman in 1937. It is based on a study of
the pitch perceived by human listeners, and it is divided into units called mels. In the
experiment, listeners started out hearing a tone of 1000 Hz, which was labeled 1000 mel for
reference. They were then asked to change the frequency until the pitch they perceived was
twice the reference pitch, and this frequency was labeled 2000 mel. The same procedure was
repeated for half the perceived pitch, which was labeled 500 mel, and so on. On this basis
the normal frequency is mapped onto the Mel frequency. The Mel scale is approximately linear
below 1000 Hz and logarithmically spaced above 1000 Hz. The figure below shows an example of
normal frequency mapped onto the Mel frequency.

Mel-frequency cepstrum coefficients (MFCC) are well-known features used to describe the
speech signal. They are based on the evidence that the information carried by the
low-frequency components of the speech signal is phonetically more important to humans than
that carried by the high-frequency components. The technique of computing MFCC is based on
short-term analysis, and thus one MFCC vector is computed from each frame. MFCC extraction is
similar to the cepstrum calculation except that one special step is inserted: the frequency
axis is warped according to the mel scale. The process of extracting MFCC from continuous
speech is illustrated in Fig. 4.4.

A “mel” is a unit of measure of the perceived pitch of a tone. It does not correspond
linearly to the normal frequency: it is approximately linear below 1 kHz and logarithmic
above.
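The report does not give a formula for the mel mapping. One widely used analytic approximation, assumed here purely for illustration, is mel = 2595 log10(1 + f/700); it is roughly linear at low frequencies, logarithmic at high frequencies, and maps 1000 Hz to approximately 1000 mel.

```python
import math

def hz_to_mel(f_hz):
    """Approximate mel value of a frequency in Hz (assumed formula, see lead-in)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

# Equal steps in Hz shrink on the mel axis as the frequency grows.
for f in (500, 1000, 2000, 4000):
    print(f, "Hz ->", round(hz_to_mel(f)), "mel")
```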


4.4.1 Computing of mel-cepstrum coefficients

Fig. 4.4 Computing of mel-cepstrum coefficient

The figure above shows the calculation of the mel-cepstrum coefficients. We use a filter bank
to perform the Mel frequency warping, which is convenient because the filters can simply be
centered according to the Mel frequency. The width of the triangular filters varies with the
Mel frequency, so each filter captures the log total energy in a critical band around its
center frequency. The warping yields one coefficient per filter. Finally, we use the inverse
discrete Fourier transform to calculate the cepstral coefficients. In this step we transform
the log of the mel-warped spectrum from the frequency domain to the quefrency domain. Here N
is the length of the DFT used in the cepstrum section.

To place more emphasis on the low frequencies, one special step, mel-scaling, is inserted
before the inverse DFT in the calculation of the cepstrum. This approach is based on
psychophysical studies of human perception of the frequency content of sounds. One useful way
to create the mel spectrum is to use a filter bank, with one filter for each desired
mel-frequency component. Every filter in this bank has a triangular band-pass frequency
response. Such filters compute the average spectrum around each center frequency with
increasing bandwidths, as displayed in Fig. 4.5.

Fig. 4.5 Triangular Filter used to compute mel-cepstrum

This filter bank is applied in the frequency domain, so it simply amounts to applying these
triangular weighting functions to the spectrum. In practice the last step of taking the
inverse DFT is replaced by a discrete cosine transform (DCT) for computational efficiency.
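A triangular mel filter bank of this kind can be sketched as below. This is not the Voicebox implementation: the mel formula, the choice of equally spaced mel edges from 0 Hz to half the sampling rate, and all function names are assumptions made for the example.

```python
import math

def hz_to_mel(f_hz):
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)  # assumed mel formula

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Return n_filters lists of triangular weights over DFT bins 0..n_fft//2."""
    n_bins = n_fft // 2 + 1
    top_mel = hz_to_mel(sample_rate / 2.0)
    # n_filters + 2 edge frequencies, equally spaced on the mel axis.
    edges = [mel_to_hz(top_mel * i / (n_filters + 1)) for i in range(n_filters + 2)]
    bin_width_hz = sample_rate / n_fft
    bank = []
    for i in range(1, n_filters + 1):
        low, center, high = edges[i - 1], edges[i], edges[i + 1]
        weights = []
        for k in range(n_bins):
            f = k * bin_width_hz
            if low < f <= center:
                weights.append((f - low) / (center - low))    # rising slope
            elif center < f < high:
                weights.append((high - f) / (high - center))  # falling slope
            else:
                weights.append(0.0)
        bank.append(weights)
    return bank

def log_mel_energies(power_spectrum, bank, eps=1e-12):
    """Log of the filter-weighted spectral energy, one value per filter."""
    return [math.log(sum(w * p for w, p in zip(weights, power_spectrum)) + eps)
            for weights in bank]
```

With these assumptions the filters at high frequencies span more DFT bins than those at low frequencies, which matches the increasing bandwidths described in the text.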

The number of resulting mel-frequency cepstrum coefficients is in practice chosen to be
relatively low, on the order of 12 to 20. The zeroth coefficient is usually dropped because
it represents the average log-energy of the frame and carries little speaker-specific
information.
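The final DCT step and the dropping of the zeroth coefficient might look as follows. The unnormalized DCT-II used here is one common convention and is an assumption; Voicebox's melcepst may scale its output differently.

```python
import math

def dct_ii(values):
    """Unnormalized type-II discrete cosine transform."""
    N = len(values)
    return [sum(v * math.cos(math.pi * k * (n + 0.5) / N)
                for n, v in enumerate(values))
            for k in range(N)]

def mfcc_from_log_energies(log_energies, n_coeffs=12):
    """Keep coefficients 1..n_coeffs; coefficient 0 (overall log-energy) is dropped."""
    coefficients = dct_ii(log_energies)
    return coefficients[1:n_coeffs + 1]
```

A perfectly flat log spectrum puts all of its energy into the zeroth coefficient, so every retained coefficient is zero in that case; this is a simple way to see why the zeroth coefficient mainly reflects overall level.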

However, the MFCC are not equally important in speaker identification, so some weighting of
the coefficients might be applied to obtain a more precise result. A different approach to
the computation of MFCC, simplified by omitting the filter bank analysis, has also been
described elsewhere.


4.5 Framing and Windowing

The speech signal is slowly varying over time (quasi-stationary): when it is examined over a
short period of time (5-100 ms), the signal is fairly stationary. Speech signals are
therefore often analyzed in short time segments, which is referred to as short-time spectral
analysis. In practice this means that the signal is blocked into frames of typically
20-30 ms. Adjacent frames typically overlap each other by 30-50%, so that no information is
lost due to the windowing.

Fig 4.6 Quasi Stationary

After the signal has been framed, each frame is multiplied with a window function w(n) with

length N, where N is the length of the frame. Typically the Hamming window is used:

$$w(n) = 0.54 - 0.46\cos\left(\frac{2\pi n}{N-1}\right)$$

Where 0 ≤ 𝑛 ≤ 𝑁 − 1

Windowing is done to avoid problems caused by the truncation of the signal, since the window
tapers each frame smoothly toward its boundaries.
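The framing and windowing described in this section can be sketched as follows. The parameter names, the rounding of the frame step, and the choice to discard a trailing partial frame are assumptions made for this example.

```python
import math

def frame_signal(signal, frame_len, overlap):
    """Split a signal into frames of frame_len samples.
    overlap is the fraction of a frame shared with the next one (0 <= overlap < 1).
    A trailing partial frame is discarded."""
    step = max(1, int(round(frame_len * (1.0 - overlap))))
    return [signal[start:start + frame_len]
            for start in range(0, len(signal) - frame_len + 1, step)]

def apply_hamming(frame):
    """Multiply a frame (at least two samples long) by the Hamming window."""
    N = len(frame)
    return [x * (0.54 - 0.46 * math.cos(2 * math.pi * n / (N - 1)))
            for n, x in enumerate(frame)]

# 25 ms frames at 8 kHz are 200 samples long; 50% overlap gives a step of 100.
signal = [1.0] * 1000
frames = [apply_hamming(f) for f in frame_signal(signal, 200, 0.5)]
print(len(frames))  # 9
```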


CHAPTER 5

VECTOR QUANTIZATION

A speaker recognition system must be able to estimate the probability distributions of the
computed feature vectors. Storing every single vector generated in the training mode is
impractical, since these distributions are defined over a high-dimensional space. It is often
easier to start by quantizing each feature vector to one of a relatively small number of
template vectors, a process called vector quantization (VQ). VQ takes a large set of feature
vectors and produces a smaller set of vectors that represent the centroids of the
distribution.

The technique of VQ consists of extracting a small number of representative feature vectors
as an efficient means of characterizing the speaker-specific features. With VQ, it is no
longer necessary to store every single vector generated during training.

Vector quantization (VQ) is a process of mapping vectors from a vector space to a finite

number of regions in that space. These regions are called clusters and represented by their

central vectors or centroids. A set of centroids, which represents the whole vector space, is

called a codebook. In speaker identification, VQ is applied to the set of feature vectors
extracted from the speech sample and, as a result, the speaker codebook is generated. Such a
codebook is significantly smaller than the extracted vector set and is referred to as a
speaker model.
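The mapping of a feature vector to a codebook entry can be illustrated with a small sketch. The function names and the toy two-dimensional codebook are hypothetical; real MFCC vectors have 12 or more dimensions.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def quantize(vector, codebook):
    """Return (index, centroid) of the codebook entry nearest to vector."""
    index = min(range(len(codebook)),
                key=lambda i: squared_distance(vector, codebook[i]))
    return index, codebook[index]

codebook = [(0.0, 0.0), (4.0, 4.0), (8.0, 0.0)]
print(quantize((3.5, 3.0), codebook))  # (1, (4.0, 4.0))
```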

There is some disagreement in the literature about how to classify VQ. Some authors consider
it a template matching approach because VQ ignores all temporal variations and simply uses
global averages (centroids). Other authors consider it a stochastic or probabilistic method,
because VQ uses centroids to estimate the modes of a probability distribution. Theoretically,
every cluster, defined by its centroid, could model a particular component of the speech. In
practice, however, VQ creates unrealistic clusters with rigid boundaries, in the sense that
every vector belongs to one and only one cluster. Mathematically, a VQ task is defined as
follows: given a set of feature vectors, find a partitioning of the feature vector space into
a predefined number of regions that do not overlap with each other and that together form the
whole feature vector space. Every vector inside such a region is represented by the
corresponding centroid. The process of VQ for two speakers is shown in Fig. 5.1.


Fig. 5.1 Vector Quantization of Two Speakers

There are two important design issues in VQ: the method for generating the codebook and

codebook size. Known clustering algorithms for codebook generation are:

Generalized Lloyd algorithm (GLA),

Self-organizing maps (SOM),

Pair wise nearest neighbor (PNN),

Iterative splitting technique (SPLIT),

Randomized local search (RLS),

K-means.

The iterative splitting technique should be used when running time is important, but RLS is
simpler to implement and generates better codebooks for the speaker identification task.
Codebook size is a trade-off between running time and identification accuracy: a large
codebook gives high identification accuracy at the cost of running time, and vice versa.
Experimental results indicate that accuracy saturates at a codebook size of 64 vectors. The
quantization distortion (quality of quantization) is usually computed as the sum of squared
distances between each vector and its representative (centroid). Well-known distance measures
are the Euclidean and city block distances.


CHAPTER 6

K MEANS

The K-means algorithm partitions the T feature vectors into M clusters, each represented by
its centroid. The algorithm first chooses M cluster centroids among the T feature vectors.
Then each feature vector is assigned to the nearest centroid, and the new centroids are
calculated. This procedure is repeated until a stopping criterion is met, that is, until the
mean square error between the feature vectors and the cluster centroids falls below a certain
threshold or the cluster assignments no longer change.

In data mining, k-means clustering is a method of cluster analysis which aims to partition n
observations into k clusters in which each observation belongs to the cluster with the
nearest mean. This results in a partitioning of the data space into cells. The problem is
computationally difficult (NP-hard); however, there are efficient heuristic algorithms that
are commonly employed and converge quickly to a local optimum. These are usually similar to
the expectation-maximization algorithm for mixtures of Gaussian distributions, in that both
algorithms use an iterative refinement approach. Both also use cluster centers to model the
data; however, k-means clustering tends to find clusters of comparable spatial extent, while
the expectation-maximization mechanism allows clusters to have different shapes.

The most common algorithm uses an iterative refinement technique. Due to its ubiquity it is

often called the k-means algorithm; it is also referred to as Lloyd's algorithm, particularly in

the computer science community.

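Given an initial set of k means $m_1^{(1)}, \dots, m_k^{(1)}$ (see below), the algorithm
proceeds by alternating between two steps:

Assignment step: Assign each observation to the cluster with the closest mean.

$$S_i^{(t)} = \left\{ x_p : \lVert x_p - m_i^{(t)} \rVert \le \lVert x_p - m_j^{(t)} \rVert \ \text{for all } 1 \le j \le k \right\}$$

where each $x_p$ goes into exactly one $S_i^{(t)}$, even if it could go in two of them.

Update step: Calculate the new means to be the centroids of the observations in each cluster.

$$m_i^{(t+1)} = \frac{1}{\lvert S_i^{(t)} \rvert} \sum_{x_j \in S_i^{(t)}} x_j$$

The algorithm is deemed to have converged when the assignments no longer change.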

Commonly used initialization methods are Forgy and Random Partition. The Forgy method

randomly chooses k observations from the data set and uses these as the initial means. The

Random Partition method first randomly assigns a cluster to each observation and then

proceeds to the Update step, thus computing the initial means to be the centroid of the

cluster's randomly assigned points. The Forgy method tends to spread the initial means out,

while Random Partition places all of them close to the center of the data set. According to

Hamerly et al., the Random Partition method is generally preferable for algorithms such as the

k-harmonic means and fuzzy k-means. For expectation maximization and standard k-means

algorithms, the Forgy method of initialization is preferable.
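The alternating assignment and update steps, together with Forgy initialization, can be sketched in pure Python as follows. This is a minimal illustration and not the code used in the project; keeping the old mean when a cluster becomes empty and breaking distance ties in favor of the lower cluster index are assumptions made here.

```python
import random

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm. Returns (means, assignment), where assignment[j]
    is the cluster index of points[j]."""
    rng = random.Random(seed)
    # Forgy initialization: k distinct observations become the initial means.
    means = [list(p) for p in rng.sample(points, k)]
    assignment = None
    for _ in range(max_iter):
        # Assignment step: each point goes to the cluster with the closest mean.
        new_assignment = [min(range(k), key=lambda i: squared_distance(p, means[i]))
                          for p in points]
        if new_assignment == assignment:
            break  # converged: the assignments no longer change
        assignment = new_assignment
        # Update step: each mean becomes the centroid of its cluster.
        for i in range(k):
            members = [p for p, a in zip(points, assignment) if a == i]
            if members:
                means[i] = [sum(c) / len(members) for c in zip(*members)]
    return means, assignment

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
means, assignment = kmeans(points, k=2)
print(assignment)
```

For speaker modeling, `points` would be the MFCC vectors of one speaker and the returned means would form that speaker's codebook.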

Given a set of observations (x1, x2, …, xn), where each observation is a d-dimensional real
vector, k-means clustering aims to partition the n observations into k sets (k ≤ n)
S = {S1, S2, …, Sk} so as to minimize the within-cluster sum of squares (WCSS):

$$\underset{S}{\arg\min} \sum_{i=1}^{k} \sum_{x_j \in S_i} \lVert x_j - \mu_i \rVert^2$$

where μi is the mean of the points in Si.

The two key features of k-means which make it efficient are often regarded as its biggest

drawbacks:

Euclidean distance is used as a metric and variance is used as a measure of cluster scatter.

The number of clusters k is an input parameter: an inappropriate choice of k may yield

poor results. That is why, when performing k-means, it is important to run diagnostic

checks for determining the number of clusters in the data set.

A key limitation of k-means is its cluster model. The concept is based on spherical clusters
that are separable in such a way that the mean value converges towards the cluster center.
The clusters are expected to be of similar size, so that the assignment to the nearest
cluster center is the correct assignment. For example, when k-means is applied with k = 3 to
the well-known Iris flower data set, the result often fails to separate the three Iris
species contained in the data set. With k = 2, the two visible clusters (one containing two
species) will be discovered, whereas with k = 3 one of the two clusters will be split into
two even parts. In fact, k = 2 is more appropriate for this data set, despite the data set
containing 3 classes. As with any other clustering algorithm, the k-means result relies on
the data set satisfying the assumptions made by the clustering algorithm. It works very well
on some data sets, while failing miserably on others.

The result of k-means can also be seen as the Voronoi cells of the cluster means. Since data
is split halfway between cluster means, this can lead to suboptimal splits, as can be seen in
the "mouse" example. The Gaussian models used by the expectation-maximization (EM) algorithm
(which can be seen as a generalization of k-means) are more flexible here because they have
both variances and covariances. The EM result is thus able to accommodate clusters of
variable size, as well as correlated clusters, much better than k-means.

k-means clustering, in particular when using heuristics such as Lloyd's algorithm, is rather
easy to implement and apply even on large data sets. As such, it has been successfully used
in various fields, ranging from market segmentation, computer vision, geostatistics and
astronomy to agriculture. It is often used as a preprocessing step for other algorithms, for
example to find a starting configuration.

k-means clustering, and its associated expectation-maximization algorithm, is a special case
of a Gaussian mixture model, specifically the limit in which all covariances are taken to be
diagonal, equal, and small. It is often easy to generalize a k-means problem into a Gaussian
mixture model.

6.1 Mean shift clustering

Basic mean shift clustering algorithms maintain a set of data points the same size as the
input data set. Initially, this set is copied from the input set. Then each point in this set
is iteratively replaced by the mean of those points in the set that are within a given
distance of it. By contrast, k-means restricts this updated set to k points, usually far
fewer than the number of points in the input data set, and replaces each point in this set by
the mean of all points in the input set that are closer to that point than to any other (i.e.
within the Voronoi partition of each updating point). A mean shift algorithm that is similar
to k-means, called likelihood mean shift, replaces the set of points undergoing replacement
by the mean of all points in the input set that are within a given distance of the changing
set. One of the advantages of mean shift over k-means is that there is no need to choose the
number of clusters, because mean shift is likely to find only a few clusters if indeed only a
small number exist. However, mean shift can be much slower than k-means. Mean shift has soft
variants, much as k-means does.

6.2 Bilateral filtering

k-means implicitly assumes that the ordering of the input data set does not matter.

The bilateral filter is similar to K-means and mean shift in that it maintains a set of data points

that are iteratively replaced by means. However, the bilateral filter restricts the calculation of

the (kernel weighted) mean to include only points that are close in the ordering of the input

data. This makes it applicable to problems such as image denoising, where the spatial

arrangement of pixels in an image is of critical importance.

6.3 Speaker Matching

During matching, a matching score is computed between the extracted feature vectors and every
speaker codebook enrolled in the system. Commonly this is done by partitioning the extracted
feature vectors using the centroids of the speaker codebook and calculating the matching
score as the quantization distortion.

Another choice for the matching score is the mean squared error (MSE), which is computed as
the sum of the squared distances between each vector and its nearest centroid, divided by the
number of vectors extracted from the speech sample.
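The MSE matching score can be written out as a short sketch. The function name and the toy data are illustrative assumptions; in the system described here the vectors would be MFCC vectors and the codebook would come from the training stage.

```python
def mse_matching_score(vectors, codebook):
    """Mean of the squared distances from each vector to its nearest centroid."""
    total = 0.0
    for v in vectors:
        total += min(sum((a - b) ** 2 for a, b in zip(v, c)) for c in codebook)
    return total / len(vectors)

# (0, 0) matches a centroid exactly; (2, 0) is at squared distance 1 from (3, 0).
print(mse_matching_score([(0.0, 0.0), (2.0, 0.0)], [(0.0, 0.0), (3.0, 0.0)]))  # 0.5
```

A lower score means the test vectors lie closer to that speaker's codebook.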

The quantization distortion (quality of quantization) is usually computed as the sum of
squared distances between each vector and its representative (centroid). Well-known distance
measures are the Euclidean, city block, weighted Euclidean and Mahalanobis distances. They
are represented in the following equations:

$$d_E(x, y) = \sqrt{(x - y)^T (x - y)}$$

$$d_{CB}(x, y) = \sum_{i} \lvert x_i - y_i \rvert$$

$$d_{WE}(x, y) = \sqrt{(x - y)^T D^{-1} (x - y)}$$

where x and y are multi-dimensional feature vectors and D is a weighting matrix. When D is
the covariance matrix, the weighted Euclidean distance is also called the Mahalanobis
distance. The weighted Euclidean distance in which D is a diagonal matrix consisting of the
diagonal elements of the covariance matrix is more appropriate, in the sense that it provides
a more accurate identification result. The reason is that, by their nature, not all
components of the feature vectors are equally important, so a weighted distance might give a
more precise result.
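The distance measures can be compared with a small sketch. The diagonal weighted form below assumes that each squared difference is divided by the corresponding diagonal element of D (a per-component variance); this is a common convention and is an assumption here, since the exact form is not recoverable from the text.

```python
import math

def euclidean(x, y):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def city_block(x, y):
    return sum(abs(a - b) for a, b in zip(x, y))

def weighted_euclidean(x, y, variances):
    """Diagonal weighting: variances[i] is the i-th diagonal element of D (assumed form)."""
    return math.sqrt(sum((a - b) ** 2 / v for a, b, v in zip(x, y, variances)))

x, y = (0.0, 0.0), (3.0, 4.0)
print(euclidean(x, y), city_block(x, y))  # 5.0 7.0
print(weighted_euclidean(x, y, (9.0, 16.0)))
```

With the weighting, a component with a large variance contributes less to the distance than the same raw difference in a component with a small variance.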


CHAPTER 7

EUCLIDEAN DISTANCE

In mathematics, the Euclidean distance or Euclidean metric is the "ordinary" distance between

two points that one would measure with a ruler, and is given by the Pythagorean formula. By

using this formula as distance, Euclidean space (or even any inner product space) becomes

a metric space. The associated norm is called the Euclidean norm. Older literature refers to the

metric as Pythagorean metric.

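The Euclidean distance between points p and q is the length of the line segment connecting
them ($\overline{pq}$).

In Cartesian coordinates, if p = (p1, p2,..., pn) and q = (q1, q2,..., qn) are two points in
Euclidean n-space, then the distance from p to q, or from q to p, is given by:

$$d(p, q) = d(q, p) = \sqrt{(q_1 - p_1)^2 + (q_2 - p_2)^2 + \cdots + (q_n - p_n)^2} = \sqrt{\sum_{i=1}^{n} (q_i - p_i)^2} \qquad (1)$$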

The position of a point in a Euclidean n-space is a Euclidean vector. So, p and q are Euclidean

vectors, starting from the origin of the space, and their tips indicate two points. The Euclidean

norm, or Euclidean length, or magnitude of a vector measures the length of the vector:

$$\lVert p \rVert = \sqrt{p_1^2 + p_2^2 + \cdots + p_n^2} = \sqrt{p \cdot p}$$

where the last expression involves the dot product.

A vector can be described as a directed line segment from the origin of the Euclidean space

(vector tail), to a point in that space (vector tip). If we consider that its length is actually the

distance from its tail to its tip, it becomes clear that the Euclidean norm of a vector is just a

special case of Euclidean distance: the Euclidean distance between its tail and its tip.


The distance between points p and q may have a direction (e.g. from p to q), so it may be

represented by another vector, given by:

$$q - p = (q_1 - p_1, q_2 - p_2, \dots, q_n - p_n)$$

In a three-dimensional space (n=3), this is an arrow from p to q, which can be also regarded as

the position of q relative to p. It may be also called a displacement vector if p and q represent

two positions of the same point at two successive instants of time.

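The Euclidean distance between p and q is just the Euclidean length of this distance (or
displacement) vector:

$$\lVert q - p \rVert = \sqrt{(q - p) \cdot (q - p)} \qquad (2)$$

which is equivalent to equation 1, and also to:

$$\lVert q - p \rVert = \sqrt{\lVert p \rVert^2 + \lVert q \rVert^2 - 2\, p \cdot q}$$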

7.1 One dimension

In one dimension, the distance between two points on the real line is the absolute value of

their numerical difference. Thus if x and y are two points on the real line, then the distance

between them is given by:

$$\sqrt{(x - y)^2} = \lvert x - y \rvert$$

In one dimension, there is a single homogeneous, translation-invariant metric (in other words,

a distance that is induced by a norm), up to a scale factor of length, which is the Euclidean

distance. In higher dimensions there are other possible norms.


7.2 Two dimensions

In the Euclidean plane, if p = (p1, p2) and q = (q1, q2) then the distance is given by

$$d(p, q) = \sqrt{(p_1 - q_1)^2 + (p_2 - q_2)^2}$$

This is equivalent to the Pythagorean theorem.

Alternatively, it follows from (2) that if the polar coordinates of the point p are (r1, θ1)
and those of q are (r2, θ2), then the distance between the points is

$$\sqrt{r_1^2 + r_2^2 - 2 r_1 r_2 \cos(\theta_1 - \theta_2)}$$

7.3 Three dimensions

In three-dimensional Euclidean space, the distance is

$$d(p, q) = \sqrt{(p_1 - q_1)^2 + (p_2 - q_2)^2 + (p_3 - q_3)^2}$$

7.4 N dimensions

In general, for an n-dimensional space, the distance is

$$d(p, q) = \sqrt{(p_1 - q_1)^2 + (p_2 - q_2)^2 + \cdots + (p_n - q_n)^2}$$

7.5 Squared Euclidean Distance

The standard Euclidean distance can be squared in order to place progressively greater weight
on objects that are further apart. In this case, the equation becomes

$$d^2(p, q) = (p_1 - q_1)^2 + (p_2 - q_2)^2 + \cdots + (p_n - q_n)^2$$


Squared Euclidean distance is not a metric, as it does not satisfy the triangle inequality;
however, it is frequently used in optimization problems in which distances only have to be
compared.

It is also referred to as quadrance within the field of rational trigonometry.
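A three-point example on the real line shows why the squared distance fails the triangle inequality while still being usable for comparisons. The function name is chosen for this illustration.

```python
def squared_euclidean(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

a, b, c = (0.0,), (1.0,), (2.0,)
direct = squared_euclidean(a, c)                            # 4.0
via_b = squared_euclidean(a, b) + squared_euclidean(b, c)   # 1.0 + 1.0 = 2.0
# A metric would require direct <= via_b; here the direct value is larger.
print(direct > via_b)  # True
```

Squaring is monotonic for non-negative values, so the nearest centroid under the squared distance is also the nearest under the ordinary Euclidean distance, and the square root can be skipped when only the ranking matters.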

7.6 Decision making

The next step after computing of matching scores for every speaker model enrolled in the

system is the process of assigning the exact classification mark for the input speech. This

process depends on the selected matching and modeling algorithms. In template matching,

decision is based on the computed distances, whereas in stochastic matching it is based on the

computed probabilities.

Fig. 7.1 Decision Process

In the recognition phase an unknown speaker, represented by a sequence of feature vectors is

compared with the codebooks in the database. For each codebook a distortion measure is

computed, and the speaker with the lowest distortion is chosen as the most likely speaker.
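The decision rule described above reduces to picking the minimum over the per-speaker distortions. In this sketch the speaker labels and distortion values are made up for illustration.

```python
def identify(distortions):
    """distortions maps each enrolled speaker to the distortion of the test
    sample against that speaker's codebook; the lowest distortion wins."""
    if not distortions:
        raise ValueError("no enrolled speakers")
    return min(distortions, key=distortions.get)

print(identify({"speaker_a": 3.2, "speaker_b": 1.7, "speaker_c": 2.4}))  # speaker_b
```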


CHAPTER 8

RESULT AND CONCLUSION

8.1 Result

We now present the results of our experiments. Every chart is preceded by a short explanation
of what we measured and why, and followed by a short discussion of the results.

8.2 Pruning Basis

First, we start from the basis for speaker pruning. We ran a few tests to see how the
matching function behaves during identification and when it stabilizes. The chart in Fig. 8.1
shows how the matching score varies with the number of available test vectors for 20
different speakers. One vector corresponds to one analysis frame.

Fig: 8.1 Distance stabilization

In this figure, the bold line represents the owner of the test sample. It can be seen that at
the beginning the matching score for the correct speaker lies somewhere among the other
scores. After a large enough number of new vectors has been extracted from the test speech,
it is close to only a few other scores, and at the end it becomes the smallest score. This is
the underlying reason for speaker pruning: when we have more data we can drop some of the
models from identification.

8.2.1 Static pruning

In the next experiment, we consider the trade-off between identification error rate and
average time spent on identification for static pruning. By varying the pruning interval or
the number of pruned speakers we expect different error rates and different identification
times. From several runs with different parameter combinations we can plot the error rate as
a function of average identification time. To obtain this dependency, we fixed three values
for the pruning interval and varied the number of pruned speakers. The results are shown in
Fig. 8.2.

Fig: 8.2 Evaluation of static variant using different pruning intervals

From this figure we can see that all curves follow almost the same shape. It is because in

order to have fast identification we have to choose either small pruning interval or large

number of pruned speakers. On the other hand, in order to have low error rate we have to

choose large interval or small number of pruned speakers. The main conclusion from this

figure is that these two parameters compensate each other.
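The report does not spell out the static pruning procedure, so the following is only a hypothetical sketch built from the two parameters named above: after every `interval` test vectors, the `n_prune` speakers with the highest accumulated distortion are dropped. All names and the toy data are assumptions.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def static_pruning(test_vectors, codebooks, interval, n_prune):
    """codebooks maps speaker -> list of centroids. Returns the surviving
    speaker with the lowest accumulated distortion."""
    totals = {speaker: 0.0 for speaker in codebooks}
    for count, vector in enumerate(test_vectors, start=1):
        # Only speakers that are still active are scored, which saves time.
        for speaker in totals:
            totals[speaker] += min(squared_distance(vector, c)
                                   for c in codebooks[speaker])
        if count % interval == 0 and len(totals) > 1:
            keep = max(1, len(totals) - n_prune)
            survivors = sorted(totals, key=totals.get)[:keep]
            totals = {speaker: totals[speaker] for speaker in survivors}
    return min(totals, key=totals.get)

codebooks = {"A": [(0.0, 0.0)], "B": [(5.0, 5.0)], "C": [(9.0, 9.0)]}
test_vectors = [(0.1, 0.0), (0.0, 0.2), (0.3, 0.1), (0.0, 0.0), (0.2, 0.2), (0.1, 0.1)]
print(static_pruning(test_vectors, codebooks, interval=2, n_prune=1))  # A
```

A small interval or a large `n_prune` removes speakers early and speeds up identification, at the risk of discarding the correct speaker before its score has stabilized.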


8.2.2 Adaptive pruning

The idea of adaptive pruning is based on the assumption that the distribution of matching
scores more or less follows a Gaussian curve. Fig. 8.3 shows the distributions of matching
scores for two typical identifications.

Fig: 8.3 Examples of the matching score distribution

From this figure we can see that the distribution does not exactly follow a Gaussian curve,
but its shape is almost the same. In the next experiment, we consider the trade-off between
identification error rate and average time spent on identification for adaptive pruning. By
fixing the parameter ŋ and varying the pruning interval we obtained the desired dependency.

8.3 Conclusion

The goal of this project was to create a speaker recognition system and apply it to the
speech of an unknown speaker. The system extracts features from the unknown speech and
compares them with the stored features of each enrolled speaker in order to identify the
unknown speaker.


The feature extraction is done using MFCC (Mel Frequency Cepstral Coefficients). The function
'melcepst' is used to calculate the mel cepstrum of a signal. The speaker was modeled using
Vector Quantization (VQ). A VQ codebook is generated by clustering the training feature
vectors of each speaker and is then stored in the speaker database. In this method, the
K-means algorithm is used to do the clustering. In the recognition stage, a distortion
measure based on minimizing the Euclidean distance was used when matching an unknown speaker
against the speaker database.

During this project, we found that the VQ-based clustering approach provides a faster
speaker identification process.
