Assignment 1

Due 11:59pm Monday, October 16th - to be submitted through Crowdmark

General instructions: You will be asked to answer questions using R, by hand, or a combination of the two.

It is expected that you answer the questions by hand unless otherwise requested in the question. When

using R, please present all R code you used as well as the results obtained. Someone reading your work must

be able to take your code, copy it into R, and obtain identical results. You must always show your work

unless otherwise noted.

Please note that the TAs may not be marking all questions in this assignment. The exact

questions that will be marked will not be determined until after the due date. Because of this,

all questions will say 10 marks until we start marking (this is a Crowdmark default).

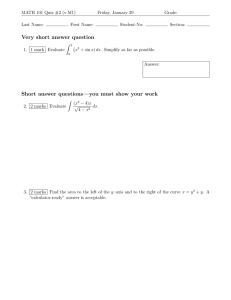

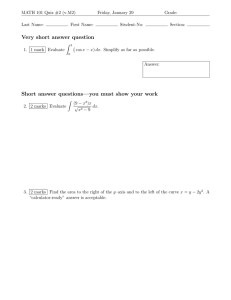

Question 1 [2 marks]

Please use R to answer this question.

Take a random sample of size 50 (n = 50) from the population of favourite numbers in the survey_data

dataset. Note that the first row of the data is a header row (i.e., it contains the names of the variables). To

do this in R, use the following code but you must replace 123456789 in set.seed() with your student ID

number. This will generate a sample uniquely attached to your ID.

data <- read.csv("survey_data.csv", header=T)

set.seed(123456789)

fav_number_sample <- sample(data$fav_number, 50)

Now complete the following steps in order for your sample of favourite numbers. Your solutions should

include the code you used to solve the problem along with the final answer.

Steps:

1. Add 10 to each data value.

2. Remove the 5th data value.

3. Divide the remaining numbers by 3 more than the standard deviation of the original data values.

4. Calculate the median of the resulting numbers.

# (fav_number_sample+10)

# (fav_number_sample+10)[-5]

# (fav_number_sample+10)[-5]/(sd(fav_number_sample)+3)

median((fav_number_sample+10)[-5]/(sd(fav_number_sample)+3))

The final answer I get is 364.4617709. Yours will be different.

2 marks for correct answer, 0 otherwise (see Excel spreadsheet for final answers by ID)
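The four steps can also be carried out one at a time, which makes each intermediate result easy to inspect. The following is a minimal sketch on a small made-up vector; `toy_sample` is a hypothetical stand-in for `fav_number_sample` and is not drawn from the survey data.

```r
# Hypothetical stand-in for fav_number_sample (NOT the survey data)
toy_sample <- c(7, 3, 13, 42, 8, 21, 5)

step1  <- toy_sample + 10               # 1. add 10 to each value
step2  <- step1[-5]                     # 2. drop the 5th value
step3  <- step2 / (sd(toy_sample) + 3)  # 3. divide by (sd of the ORIGINAL values + 3)
result <- median(step3)                 # 4. median of the resulting numbers

# The one-line version used in the solution gives the same number
one_line <- median((toy_sample + 10)[-5] / (sd(toy_sample) + 3))
```

Note that step 3 uses the standard deviation of the original sample, not of the shifted or shortened vector.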

Question 2 [6 marks]

(a) [2 marks] Using R and the survey_data dataset, create a pie chart to show the distribution of students

who were born in each month.

table(data$month_born)

##
##     April    August  December  February   January      July      June     March
##        20        11        23        17        16        17        19         9
##       May  November   October September
##        16        18        17        18

pie(c(16, 17, 9, 20, 16, 19, 17, 11, 18, 17, 18, 23),

labels=c("Jan", "Feb", "Mar", "Apr", "May", "June",

"July", "Aug", "Sept", "Oct", "Nov", "Dec"))

[Figure: pie chart of birth months, one slice per month, labelled Jan through Dec]

Deduct 1 mark for simple coding mistakes
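The solution retypes the counts by hand in calendar order because `table()` sorts month names alphabetically. One possible alternative, sketched below on a made-up vector (`toy_months` is hypothetical, not the survey column), is to convert the column to a factor whose levels follow R's built-in `month.name` constant, so the table comes out in calendar order.

```r
# Hypothetical birth months (NOT the survey data)
toy_months <- c("March", "January", "March", "December", "January", "March")

# Setting the factor levels to month.name puts the table in calendar order
# and keeps months with zero observations
counts <- table(factor(toy_months, levels = month.name))

# The counts could then be passed straight to the plotting functions, e.g.
# pie(counts, labels = month.abb)
# barplot(counts, names.arg = month.abb)
```

This avoids transcription errors when copying counts from the printed table, at the cost of assuming the month names in the data are spelled exactly as in `month.name`.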

(b) [2 marks] Using R and the survey_data dataset, create a bar chart to show the distribution of students

who were born in each month.

barplot(c(16, 17, 9, 20, 16, 19, 17, 11, 18, 17, 18, 23),

names.arg=c("Jan", "Feb", "Mar", "Apr", "May", "June",

"July", "Aug", "Sept", "Oct", "Nov", "Dec"))

[Figure: bar chart of birth months; x-axis: Jan through Dec, y-axis: counts from 0 to 20]

Deduct 1 mark for simple coding mistakes

(c) [2 marks] Compare your charts from parts (a) and (b). Which chart is better at displaying the

information? Explain your reasoning.

Most of the wedges/slices from the pie chart appear to be somewhat similar in size (the eye is bad at judging

relative areas and reading angles - both of which are needed for pie charts). The bar chart is better at

displaying this information because it is easier to see the differences.

1 mark for stating bar chart is better, 1 mark for appropriate reason

Question 3 [10 marks]

Below is a plot of how long it took 20 students to eat one cookie (in seconds). Use this data to answer the

following questions.

##
##   The decimal point is 1 digit(s) to the right of the |
##
##   0 | 24555779
##   1 | 00
##   2 | 045
##   3 | 000
##   4 | 55
##   5 | 0
##   6 | 0

(a) [4 marks] Calculate the mean, median, and mode.

Mean = (2 + 4 + 5 + ··· + 60) / 20 = 423 / 20 = 21.15, median = Q2 = 15, mode = {5, 30}.

1 mark for mean, 1 mark for median, 2 marks for mode
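Although this part is meant to be done by hand, the answers can be checked in R. The sketch below types in the 20 values read off the stem-and-leaf plot; since base R has no built-in function for the statistical mode, it tabulates the values and keeps the most frequent ones (the variable names are illustrative).

```r
# The 20 cookie-eating times (seconds) read off the stem-and-leaf plot
times <- c(2, 4, 5, 5, 5, 7, 7, 9, 10, 10,
           20, 24, 25, 30, 30, 30, 45, 45, 50, 60)

mean_time   <- mean(times)
median_time <- median(times)

# Tabulate the values and keep every value that reaches the maximum count
counts <- table(times)
modes  <- as.numeric(names(counts)[counts == max(counts)])
```

Both 5 and 30 occur three times, which is why the mode is reported as a set of two values.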

(b) [2 marks] Compare the mean and the median from part (a). What does this tell us about the shape of

the data?

The mean is larger than the median. This suggests that the data is right skewed (we also see this in the plot).

1 mark for comparison, 1 mark for right skewed

(c) [2 marks] Calculate the first and third quartiles.

Q1 = 6, Q3 = 30

1 mark for each
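The by-hand quartiles here appear to be the medians of the lower and upper halves of the sorted data. As a rough check in R, `fivenum()` returns Tukey's hinges, which agree with that convention for this sample, whereas `quantile()` interpolates by default and can give a slightly different first quartile. The sketch assumes the 20 values read off the stem-and-leaf plot.

```r
# The 20 cookie-eating times (seconds) read off the stem-and-leaf plot
times <- c(2, 4, 5, 5, 5, 7, 7, 9, 10, 10,
           20, 24, 25, 30, 30, 30, 45, 45, 50, 60)

# fivenum() returns: minimum, lower hinge, median, upper hinge, maximum
hinges <- fivenum(times)
q1 <- hinges[2]
q3 <- hinges[4]

# quantile() uses linear interpolation by default (type = 7),
# so its first quartile need not match the by-hand value
q1_default <- quantile(times, 0.25, names = FALSE)
```

For this sample the hinges are 6 and 30, while the default `quantile()` method gives 6.5 for the first quartile, so the choice of convention matters when comparing hand and software answers.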

(d) [2 marks] Determine whether 60 is an outlier.

IQR = Q3 − Q1 = 30 − 6 = 24

UL = Q3 + 1.5 × IQR = 30 + 1.5 × 24 = 66

Since 60 < 66, it is not an outlier.

1 mark for UL, 1 mark for correct answer (not outlier)
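The same 1.5 × IQR rule can be written as a short R check. This is only a sketch using the quartiles from part (c); the helper `is_outlier()` and the lower limit are illustrative additions, not part of the marked solution.

```r
# Quartiles from part (c)
q1 <- 6
q3 <- 30

iqr         <- q3 - q1          # interquartile range
lower_limit <- q1 - 1.5 * iqr   # values below this would be low outliers
upper_limit <- q3 + 1.5 * iqr   # values above this would be high outliers

# Illustrative helper: TRUE when a value falls outside either limit
is_outlier <- function(x) x < lower_limit | x > upper_limit

sixty_is_outlier <- is_outlier(60)
```

Because the lower limit is negative and times cannot be negative, only the upper limit matters for this dataset.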

Question 4 [18 marks]

The data from Question 3 was actually a random sample taken from the survey_data variable containing

the recorded times of how long it took students to eat one cookie (in seconds). Use the original dataset for

this variable, in its entirety, to answer the following questions (i.e., eat_cookie_secs).

(a) [2 marks] Using R, create a histogram of this dataset.

hist(data$eat_cookie_secs)

[Figure: "Histogram of data$eat_cookie_secs"; x-axis: data$eat_cookie_secs (0 to 600), y-axis: Frequency (0 to 120)]

Deduct 1 mark for simple coding mistakes

(b) [2 marks] What does the second bar (from the y-axis) of the histogram from part (a) represent? Explain

using non-statistical language (i.e., use language that a wide audience will understand).

The bar represents the number of students who ate one cookie in 50 to 100 seconds. It appears that about 40

students were able to do so.

Deduct 1 mark for simple mistakes (not using non-statistical language; choosing a different bar, etc.)

(c) [2 marks] Using R, create a boxplot of this dataset.

boxplot(data$eat_cookie_secs)

[Figure: boxplot of data$eat_cookie_secs; y-axis ticks at 0, 100, 300, and 500]

Deduct 1 mark for simple coding mistakes

(d) [3 marks] Describe the shape, center and spread of the boxplot from part (c) using appropriate numerical

measures.

Shape: skewed right

Center: median is hard to tell visually here (any guess between 20 and 60 seconds is fine)

Spread: IQR is about 50 and the range is 600 seconds

1 mark for shape, 1 for center, 1 for spread (IQR or range)

median(data$eat_cookie_secs)

## [1] 30

IQR(data$eat_cookie_secs)

## [1] 50

range(data$eat_cookie_secs)

## [1]   0 600

(e) [2 marks] Using R and one line of code, give the five-number summary of this dataset. What extra

information is provided?

summary(data$eat_cookie_secs)

##    Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
##    0.00   10.00   30.00   43.26   60.00  600.00

The extra information is the mean, which is not part of the five-number summary.

1 mark for summary function, 1 mark for noting the mean

(f) [2 marks] It appears that one student ate one cookie in 600 seconds. Without calculating the upper

limit, is this value an outlier? Explain your reasoning.

Yes, it is an outlier as shown in both the histogram (the bar is much further right than the rest of the bars)

and the boxplot (it is a dot above the whisker which is the largest non-outlier).

1 mark for stating yes, 1 mark for appropriate reason (using histogram OR boxplot OR both)
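The dots on a boxplot are the values flagged by a 1.5 × IQR-style rule, and `boxplot.stats()` returns them without drawing anything. The sketch below uses made-up times (`toy_times` is hypothetical, not the survey column) to show how the flagged values and the end of the upper whisker could be read off.

```r
# Hypothetical eating times in seconds (NOT the survey data)
toy_times <- c(0, 5, 10, 10, 20, 30, 30, 45, 60, 60, 90, 600)

# boxplot.stats() applies the 1.5 * (hinge spread) rule that boxplot() uses
stats <- boxplot.stats(toy_times)

flagged     <- stats$out       # values that would be drawn as individual dots
whisker_top <- stats$stats[5]  # end of the upper whisker (largest non-outlier)
```

In this toy vector only the value 600 is flagged, and the upper whisker stops at 90, mirroring the pattern described for the real boxplot.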

(g) [2 marks] Provide two possible reasons why the value from part (f) is in the dataset.

It could be a typo (perhaps they meant to type 60 seconds), a very large cookie, etc.

1 mark for each appropriate reason

(h) [3 marks] Is the random sample from Question 3 a “good” representation of the population from

Question 4? Explain your reasoning using statistics.

No, it is not a good representation. In Question 3, x̄ = 21.15 and Q2 = 15. But in Question 4, x̄ = 43.26

and Q2 = 30. The random sample failed to capture values from the right tail, so it’s under-representing the

population.

1 mark for stating no, 1 mark for comparing statistics, 1 mark for recognizing underrepresentation

Question 5 [16 marks]

To answer the following question parts, use the next line of code:

Q5_data <- data[data$exercise_per_week <=7, ]

For this question only (i.e., Question 5), you are going to use “Q5_data” instead of “data”.

(a) [2 marks] Using R, calculate the correlation between pulse rate and average coffee consumed per day.

Note that you need to include use="complete.obs" in the function to get an answer. Interpret the

number you calculate.

cor(Q5_data$pulse_rate, Q5_data$avg_coffee_per_day, use="complete.obs")

## [1] 0.1893126

The correlation is 0.1893126. It is weak and positive.

1 mark for correlation, 1 mark for correct interpretation

(b) [1 mark] Explain what the argument use="complete.obs" from part (a) does. Type help(cor) or

?cor to open the help file which provides you with more information about the function. Note that you

may need to include this argument again when calculating correlations.

The argument handles missing values by casewise deletion: any observation with a missing value in either

variable is dropped. That is, only complete observations are used to calculate the correlation.

1 mark for appropriate explanation
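A small example may make the effect of `use="complete.obs"` concrete. The sketch below uses two short made-up vectors (`pulse` and `coffee` are hypothetical, not survey columns): without the argument the result is `NA`, and with it the result matches a correlation computed only on the rows where both values are present.

```r
# Hypothetical values with some missing entries (NOT the survey data)
pulse  <- c(60, 72, NA, 80, 66)
coffee <- c(0,  2,  1,  NA, 1)

r_default  <- cor(pulse, coffee)                        # NA: missing values propagate
r_complete <- cor(pulse, coffee, use = "complete.obs")  # drops incomplete pairs first

# Doing the same deletion by hand
keep     <- complete.cases(pulse, coffee)
r_manual <- cor(pulse[keep], coffee[keep])
```

Here only three of the five pairs are complete, so the correlation is based on those three observations.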

(c) [5 marks] Using R, create side-by-side boxplots of the pulse rate (p) against the number of exercise days

per week (e) using the command boxplot(p~e). Note that using this command “as is” will not work

for you – you need to fill in the appropriate terms for p and e. Compare the pulse rates for students

who do not exercise and students who exercise every day of the week. Be sure to mention shape, center,

and spread, along with any outliers.

boxplot(Q5_data$pulse_rate ~ Q5_data$exercise_per_week)

[Figure: side-by-side boxplots of Q5_data$pulse_rate (y-axis, 40 to 140) by Q5_data$exercise_per_week (x-axis, groups 0 to 7)]

Shape: No exercise days (0) is symmetric and all exercise days (7) is skewed left.

Center: The median pulse rate for all exercise days is slightly lower than the median pulse rate for no exercise

days. The mean pulse rate for all exercise days should be lower than the mean pulse rate for no exercise days

(due to the shape).

Spread: The IQR for no exercise days is larger than the IQR for all exercise days, while the range for no

exercise days is smaller than the range for all exercise days.

Outliers: There are outliers in both groups, although the no exercise days group only has high outliers

(above the upper whisker).

1 mark for boxplot, 1 mark for shape, 1 for center, 1 for spread (IQR or range), 1 for outliers

(d) [2 marks] Explain what the line of code at the start of this question (Question 5) does and why we

needed to use it. Hint: Create the boxplot from part (c) using “data” instead of “Q5_data” to help

you.

boxplot(data$pulse_rate ~ data$exercise_per_week)

[Figure: side-by-side boxplots of data$pulse_rate (y-axis, 40 to 140) by data$exercise_per_week (x-axis, groups 0 to 7, 30, and 150)]

The line keeps only the rows where the number of exercise days per week is at most 7, which removes the

values of 30 and 150. We had to use it since there are only 7 days in a week (i.e., the maximum can only be 7).

1 mark for recognizing the removed values, 1 mark for explaining why (max of 7)
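The row filter can be tried on a tiny made-up data frame to see exactly which rows survive. The sketch below is only an illustration; `toy` and its values are hypothetical and are not taken from the survey.

```r
# Hypothetical data with two impossible values for days per week (NOT the survey data)
toy <- data.frame(
  exercise_per_week = c(0, 3, 7, 30, 150),
  pulse_rate        = c(80, 72, 60, 75, 70)
)

# Logical row indexing: keep rows where the condition is TRUE, keep all columns
toy_filtered <- toy[toy$exercise_per_week <= 7, ]

rows_removed <- nrow(toy) - nrow(toy_filtered)
```

If the column contained missing values, the comparison would be `NA` for those rows and they would show up as all-`NA` rows in the result; wrapping the condition in `which()` is one way to avoid that.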

(e) [2 marks] Using R, calculate the correlation between pulse rate and average coffee consumed per day,

for students who do not exercise.

no_exercise <- Q5_data[Q5_data$exercise_per_week=="0",]

cor(no_exercise$pulse_rate, no_exercise$avg_coffee_per_day)

## [1] 0.2869316

The correlation is 0.2869316.

1 mark for filtering data correctly, 1 mark for correlation

(f) [2 marks] Using R, calculate the correlation between pulse rate and average coffee consumed per day,

for students who exercise every day of the week.

most_exercise <- Q5_data[Q5_data$exercise_per_week=="7",]

cor(most_exercise$pulse_rate, most_exercise$avg_coffee_per_day, use="complete.obs")

## [1] 0.6251409

The correlation is 0.6251409.

1 mark for filtering data correctly, 1 mark for correlation

(g) [2 marks] Comment on your results from parts (e) and (f).

The correlation between pulse rate and average coffee consumed per day is larger (i.e., stronger) for students

who exercise every day of the week compared to students who do not exercise. Both of these are larger than

the correlation calculated in part (a).

Based on these results, it appears that coffee consumption may be affecting pulse rate more for

people who vigorously exercise (i.e., exercise every day of the week). In general, it is known that people who

exercise more (e.g., athletes) have lower pulse rates, so the coffee consumption may be "jolting" their pulse

rates. However, there could be other lurking/confounding variables that are influencing these results as well.

1 mark for comparing correlations correctly, 1 mark for recognizing/interpreting what it means contextually
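Parts (e) and (f) filter one group at a time. As a possible shortcut, the correlation for every exercise group could be computed in one pass by splitting the data frame and applying `cor()` to each piece. The sketch below assumes a made-up data frame (`toy` is hypothetical) that reuses the survey's column names.

```r
# Hypothetical data for two exercise groups (NOT the survey data)
toy <- data.frame(
  exercise_per_week  = c(0, 0, 0, 0, 7, 7, 7, 7),
  pulse_rate         = c(70, 75, 72, 80, 55, 60, 58, 66),
  avg_coffee_per_day = c(0, 2, 1, 1, 0, 1, 1, 3)
)

# One data frame per distinct value of exercise_per_week
groups <- split(toy, toy$exercise_per_week)

# Correlation within each group; result is a named vector ("0", "7")
cor_by_group <- sapply(groups, function(g) {
  cor(g$pulse_rate, g$avg_coffee_per_day, use = "complete.obs")
})
```

Groups with very few complete observations give unstable correlations, so the group sizes are worth checking before comparing values.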

Question 6 [16 marks]

Please use "data" (i.e., the original survey_data dataset) for this question. Please include the

argument xlim=c(0,250) in any plots you are asked to create for this question.

(a) [1 mark] Using R, plot a scatterplot of students’ height (response) against shoe size (explanatory

variate).

plot(data$shoe_size_cm, data$height_cm, xlim = c(0,250))

[Figure: scatterplot of data$height_cm (y-axis, 150 to 200) against data$shoe_size_cm (x-axis, 0 to 250)]

(b) [4 marks] Using R, build a linear model with height as the response variate and shoe size as the

explanatory variate. What is the intercept, slope, and coefficient of determination for this model?

summary(lm(height_cm ~ shoe_size_cm, data))

##
## Call:
## lm(formula = height_cm ~ shoe_size_cm, data = data)
##
## Residuals:
##     Min      1Q  Median      3Q     Max
## -19.575  -7.603  -1.622   7.330  30.359
##
## Coefficients:
##               Estimate Std. Error t value            Pr(>|t|)
## (Intercept)  168.43012    1.49272 112.834 <0.0000000000000002 ***
## shoe_size_cm   0.04769    0.05077   0.939               0.349
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
##
## Residual standard error: 10.16 on 199 degrees of freedom
## Multiple R-squared:  0.004413,  Adjusted R-squared:  -0.00059
## F-statistic: 0.8821 on 1 and 199 DF,  p-value: 0.3488

The intercept is a = 168.43012, the slope is b = 0.04769, and the coefficient of determination is

r² = 0.004413 (i.e., 0.4413%).

1 mark for fitting linear model correctly, 1 mark for intercept, 1 for slope, 1 for coef
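Rather than reading the three numbers off the printed summary, they can be extracted from the fitted model object. The sketch below fits a model to a small made-up data frame (`toy` is hypothetical, reusing the survey's column names) and also checks that, for a model with one explanatory variate, the coefficient of determination equals the squared correlation.

```r
# Hypothetical shoe sizes and heights in cm (NOT the survey data)
toy <- data.frame(
  shoe_size_cm = c(22, 24, 25, 27, 29),
  height_cm    = c(158, 166, 165, 175, 181)
)

fit <- lm(height_cm ~ shoe_size_cm, data = toy)

a  <- coef(fit)[["(Intercept)"]]   # intercept
b  <- coef(fit)[["shoe_size_cm"]]  # slope
r2 <- summary(fit)$r.squared       # coefficient of determination

# With a single explanatory variate, r2 is the square of the correlation
r <- cor(toy$shoe_size_cm, toy$height_cm)
```

Storing the fit in an object like `fit` also makes it easy to reuse later, for example when drawing the line or making predictions.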

(c) [1 mark] Using R, draw the least-squares regression line from part (b) on the scatterplot from part (a).

plot(data$shoe_size_cm, data$height_cm, xlim = c(0,250))

abline(168.43012, 0.04769, col="red")

[Figure: scatterplot of data$height_cm (y-axis, 150 to 200) against data$shoe_size_cm (x-axis, 0 to 250), with the least-squares regression line in red]

(d) [2 marks] Calculate (or predict) the average height of a student with a shoe size of 24.7cm.

ŷ = 168.43012 + 0.04769 × shoe size = 168.43012 + 0.04769(24.7) = 169.6081cm

Deduct 1 mark for simple calculation errors
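The arithmetic can be checked in R by plugging the rounded coefficients from part (b) into the fitted equation. This is only a sketch; the commented `predict()` call assumes the model had been stored in a hypothetical object named `fit`, which the solution does not do.

```r
# Rounded coefficients reported in part (b)
a <- 168.43012   # intercept
b <- 0.04769     # slope

shoe_size <- 24.7
y_hat <- a + b * shoe_size   # predicted average height in cm

y_hat_rounded <- round(y_hat, 4)

# With a stored model object (hypothetical name `fit`), the equivalent call would be:
# predict(fit, newdata = data.frame(shoe_size_cm = 24.7))
```

A `predict()` call uses the unrounded coefficients, so its result may differ from the hand calculation in the last decimal places.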

(e) [6 marks] Repeat parts (b) and (c) after removing the 160th row from the dataset.

Q6_data <- data[-160,]

summary(lm(height_cm ~ shoe_size_cm, Q6_data))

##
## Call:
## lm(formula = height_cm ~ shoe_size_cm, data = Q6_data)
##
## Residuals:
##     Min      1Q  Median      3Q     Max
## -19.047  -7.595  -1.031   6.845  29.869
##
## Coefficients:
##              Estimate Std. Error t value             Pr(>|t|)
## (Intercept)  150.4679     3.4475  43.645 < 0.0000000000000002 ***
## shoe_size_cm   0.7741     0.1361   5.689         0.0000000455 ***
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
##
## Residual standard error: 9.442 on 198 degrees of freedom
## Multiple R-squared:  0.1405, Adjusted R-squared:  0.1361
## F-statistic: 32.36 on 1 and 198 DF,  p-value: 0.00000004554

plot(Q6_data$shoe_size_cm, Q6_data$height_cm, xlim = c(0,250))

abline(150.4679, 0.7741, col="red")

[Figure: scatterplot of Q6_data$height_cm (y-axis, 150 to 200) against Q6_data$shoe_size_cm (x-axis, 0 to 250), with the least-squares regression line in red]

The intercept is a = 150.4679, the slope is b = 0.7741, and the coefficient of determination is r² = 0.1405 ≈ 14%.

1 mark for removing row, 1 mark for fitting linear model correctly, 1 mark for intercept, 1 for slope, 1 for

coef, 1 mark for plot with line of best fit

(f) [2 marks] Is the 160th data point an influential observation? Explain your reasoning.

Yes, it is an influential observation because when we removed it from the dataset in part (e) the line of best

fit changed markedly. It also drastically changed the coefficient of determination (i.e., the squared

correlation) and the slope.

1 mark for stating yes, 1 mark for appropriate reason
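The effect of a single influential point can be reproduced on made-up data. In the sketch below, `toy` is a hypothetical data frame in which the last row has an implausibly large shoe size (as a data-entry error might produce); the model is fitted with and without that row and the slopes and coefficients of determination are compared.

```r
# Hypothetical data; the 9th row mimics a data-entry error (NOT the survey data)
toy <- data.frame(
  shoe_size_cm = c(22, 23, 24, 25, 26, 27, 28, 29, 240),
  height_cm    = c(158, 162, 165, 169, 172, 176, 179, 183, 170)
)

fit_all     <- lm(height_cm ~ shoe_size_cm, data = toy)         # all rows
fit_trimmed <- lm(height_cm ~ shoe_size_cm, data = toy[-9, ])   # 9th row removed

slope_all     <- coef(fit_all)[["shoe_size_cm"]]
slope_trimmed <- coef(fit_trimmed)[["shoe_size_cm"]]

r2_all     <- summary(fit_all)$r.squared
r2_trimmed <- summary(fit_trimmed)$r.squared
```

With the extreme point included the fitted line is nearly flat, while removing it recovers a clearly positive slope and a much larger coefficient of determination, which is the same qualitative pattern seen between parts (b) and (e).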

Question 7 [5 marks]

The contents of this assignment illustrate how we can use the methods learned in class to analyze the data

collected in the Course Survey! Imagine that you are asked to help create the next version of the Course

Survey – that is, the survey that future STAT 202 students will fill out and analyze in their first assignment.

What questions would you incorporate? Please provide a few ideas for questions and, potentially, the questions

themselves. If you can think of more questions and want to include them as well, that would be great (but

keep in mind that we are looking for quality over quantity)!

Note that it may help you to think of how you would analyze the data collected from your questions.

Answers will vary here.
