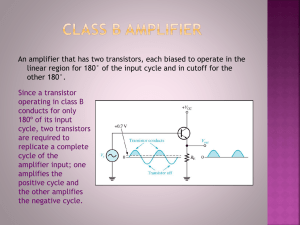

EE-940 Advanced RF Measurements

Submitted To: Dr. Nosherwan Shoaib

Submitted By: Engr. Irfan Mehmood

A SUMMARY OF TESTING RF POWER AMPLIFIER DESIGNS

The webinar focuses on the testing of power amplifiers. The process flow starts with the research and design phase, which begins with simulating and emulating the power amplifier design. Once hardware is available in the lab, characterization can start. This phase requires a highly accurate and flexible test and measurement solution. The desired RF performance is achievable only once the complete behaviour and characteristics of the device under test (DUT) are known. When a new technology emerges, for example a move to higher frequencies or other hardware upgrades, the largest possible dynamic range is required to reveal the true capabilities of that technology.

When the device is ready, you want to test how it performs over temperature and frequency variations. This is all part of characterization. Many measurements are made, not on a few devices but on a large number of devices, before the device finally goes into mass production. This ensures that all requirements are met before the design is released to production. In production, it must be ensured that each device really meets the specifications and is shipped to customers as a good device.

Starting in R&D and continuing through the different process flows, performance is optimized. This can be done with different techniques and amplifier topologies, as well as with linearization. All of this involves different testing scenarios.

In the design phase, Cadence software is used. In the demonstration, the device is shown in the middle of the top window, with different R&S blocks to the right and left. These blocks act as data sources and data sinks.
These enable signals to be sent from R&S hardware into the simulation and back again. This is possible because R&S has implemented the different digital standards. The WinIQSIM2 tool creates signals for various standards, from 5G to WiFi and many others. These signals can be transferred into the Cadence Visual System Simulator (VSS), which is used for the different blocks in the design. The result is then transferred to R&S VSE (Vector Signal Explorer), a signal analysis software in which you can look at the modulation performance after the complete simulation. It also gives an early understanding of the linearization capabilities of the design, because a direct DPD linearization can be run in conjunction with the simulation.

A key question in the simulation phase is how the results correlate with real hardware measurements. WinIQSIM2 and VSE are used in conjunction with the Cadence software, and both tools use the same calculation methods and algorithms as the instruments themselves (a vector signal generator and a vector signal analyzer). Using exactly the same methods already gives a direct correlation, so the simulated world can later be replaced with real hardware.

For real hardware verification and characterization, there are many parameters to measure. Ideally, one tool measures as many of them as possible in order to simplify the testing process. The list of RF parameters is long: frequency coverage, power consumption, gain and amplification characteristics, all the way to noise figure. All of these can be measured with just one tool, a vector network analyzer (VNA). Many of these items are derived directly from S-parameters, and S-parameters are the basic measurement a VNA makes.
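As a minimal sketch of how several amplifier figures follow directly from S-parameters, the snippet below converts one set of complex two-port S-parameters into gain, return loss, and reverse isolation. The function names and the example values are illustrative assumptions and are not taken from the webinar.

```python
import math


def db20(value: complex) -> float:
    """Magnitude of a complex voltage ratio expressed in dB."""
    return 20.0 * math.log10(abs(value))


def summarize_two_port(s11: complex, s21: complex, s12: complex, s22: complex) -> dict:
    """Derive common amplifier figures from one set of S-parameters."""
    return {
        "gain_db": db20(s21),                  # forward transmission
        "input_return_loss_db": -db20(s11),    # input match
        "output_return_loss_db": -db20(s22),   # output match
        "reverse_isolation_db": -db20(s12),    # reverse transmission
    }


# Hypothetical single-frequency measurement (assumed values).
example = summarize_two_port(s11=0.1 + 0j, s21=10 + 0j, s12=0.01 + 0j, s22=0.2 + 0j)
for name, value in example.items():
    print(f"{name}: {value:.2f}")
```

In practice the same conversion would be applied at every frequency point of the sweep.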
For the compression point and power-added efficiency, what do we want to measure? We want to understand the maximum output power versus the useful power range. The two are distinguished because the maximum output power is already deep in saturation, where very large non-linear effects appear. What is typically characterized is the 1 dB compression point, meaning how far the amplifier can be used in its linear region before it really goes into compression. A 1 dB compression is the typical threshold, although some use the 3 dB compression point. The threshold is freely definable and ultimately depends on the target application. A VNA can make this measurement easily with a power sweep, by sweeping the input power and measuring the result on the output side. Ideally, the power sweep runs both upward and downward to reveal any hysteresis in the amplifier, because the power transfer characteristic can differ between the two directions.

Another figure is power-added efficiency (PAE), which describes the power consumption at a certain output power and a certain gain. For the VNA this is a similar measurement, but it also needs the capability to measure the DC power supplied to the device. The difference between the output signal power and the input signal power is taken relative to the power provided to the amplifier by the supply.

Another important point for amplifiers is intermodulation. An amplifier has non-linearities, so intermodulation products appear whenever more than one tone is present. Typically, a two-tone scenario is used for intermodulation measurements, and there are different methods. Two tones are applied, and you then want to understand the behavior across frequency and across level.
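The compression-point and power-added-efficiency measurements described above can be sketched as follows. This is an illustration under stated assumptions: the sweep data is invented, the first sweep point is assumed to be in the small-signal region, and the helper names `find_compression_point` and `power_added_efficiency` are hypothetical.

```python
def dbm_to_watt(dbm: float) -> float:
    """Convert a power level in dBm to watts."""
    return 10 ** ((dbm - 30.0) / 10.0)


def find_compression_point(pin_dbm, pout_dbm, compression_db=1.0):
    """Return (Pin, Pout) in dBm where gain has dropped by compression_db.

    Assumes the first sweep point is in the small-signal region and
    interpolates linearly between the two points that bracket the target.
    """
    gains = [po - pi for pi, po in zip(pin_dbm, pout_dbm)]
    target = gains[0] - compression_db
    for i in range(1, len(gains)):
        if gains[i] <= target:
            g0, g1 = gains[i - 1], gains[i]
            frac = (g0 - target) / (g0 - g1)
            pin = pin_dbm[i - 1] + frac * (pin_dbm[i] - pin_dbm[i - 1])
            return pin, pin + target
    return None  # no compression reached within the sweep


def power_added_efficiency(pin_dbm: float, pout_dbm: float, pdc_watt: float) -> float:
    """PAE = (Pout - Pin) / Pdc, with all powers in watts."""
    return (dbm_to_watt(pout_dbm) - dbm_to_watt(pin_dbm)) / pdc_watt


# Hypothetical power sweep: 20 dB small-signal gain, compressing at the top.
pin_sweep = [-10.0, -5.0, 0.0, 5.0, 10.0]
pout_sweep = [10.0, 15.0, 20.0, 24.5, 27.0]
p1db = find_compression_point(pin_sweep, pout_sweep)
pae = power_added_efficiency(pin_dbm=10.0, pout_dbm=30.0, pdc_watt=2.0)
print(p1db, pae)
```

Passing `compression_db=3.0` gives the 3 dB compression point instead, and running the function separately on the upward and downward sweeps would expose any difference between the two directions.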
Typically, the two tones are stepped together over the frequency range, as shown in the plot. A second option is to keep one tone static and move only the second tone left and right to change the offset, called variable spacing here. A third option is to keep the position in the frequency range constant, with one tone fixed and the other changing in power, to see the effect of the non-linearity on the intermodulation. A network analyzer can do all of this very quickly with dedicated tools for intermodulation measurements. This requires separate sources in the analyzer to generate the two tones and, ideally, an internal combiner, so that one output port of the VNA already delivers the sum signal and no external combiner with additional calibration is needed.

Another important measurement of amplifier linearity is the intercept point. It is often called IP3 (intercept point, third order) or TOI (third-order intercept); all of these describe basically the same thing. It is a mathematical approximation that compares the linear behavior with how fast the third-order intermodulation product increases. It cannot be measured directly at the point itself. It is a theoretical point at the crossover of the wanted curve, the wanted gain translation shown in blue, and the red curve, which shows how the third-order product increases with higher power. The third-order product rises faster than the wanted signal, which creates a crossover point. The further out this point is, the better the characteristic of the amplifier.

Another topic is harmonics. You simply feed a CW signal into the PA and measure everything at its output.
Within a vector network analyzer, you can use a frequency conversion measurement because you know exactly where the harmonics are: at 2x, 3x, 4x the input frequency, and so on. The receiver is then no longer at the original input frequency but at the harmonic frequency, which is easily done with frequency conversion measurements. When you want to see whether there are any other spurs around, a spectrum analyzer mode in the VNA comes in very handy, because it gives the full spectrum view and shows whether there are any other spurs.

In the intermodulation measurement step of the demonstration, we have connected the device under test, our amplifier, and we now configure the intermodulation measurements and look at the performance of our DUT. We can see that the two tones are now at a much higher power level because of the amplification of the device under test, about 25 dB. In the top window, we add measurement quantities. Back in the measurement menu, underneath the wizard, we have the measurement parameters. We start with the main tone, where we can look at either the lower or the upper tone, referenced to the input or the output of the device under test. Here the lower tone is chosen. Next are the intermodulation products, and all the relevant products are displayed here. In this particular example, I'm just going to choose the third-order lower tone. The intercept points are then shown in exactly the same way. In the scale menu, we can auto scale all of these to a common scale. Let's maximize that. To make the values easy to read off, let's put a marker on the trace. At this particular frequency, around 3.75 gigahertz, we have a third-order lower-tone intermodulation of around -35 dB. From that we can calculate the intercept point, in this particular case around 38 dB.
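The intercept-point calculation can be sketched from the slopes involved: on a dB scale the wanted tone rises 1 dB per dB of drive and the third-order product rises 3 dB per dB, so the two lines meet half the tone-to-IM3 distance above the tone power. The tone and IM3 powers below are assumptions, chosen only so that a 35 dB tone-to-IM3 ratio lands near the value of 38 quoted in the demonstration. The text does not state the absolute tone power or the exact units used there.

```python
def output_ip3_dbm(tone_dbm: float, im3_dbm: float) -> float:
    """Extrapolated output third-order intercept point.

    Uses the 1:1 slope of the tone and the 3:1 slope of the IM3 product,
    which is only valid well below compression.
    """
    return tone_dbm + (tone_dbm - im3_dbm) / 2.0


def input_ip3_dbm(oip3_dbm: float, gain_db: float) -> float:
    """Refer the intercept point to the amplifier input."""
    return oip3_dbm - gain_db


# Assumed example: tone at 20.5 dBm, IM3 product 35 dB below it.
oip3 = output_ip3_dbm(tone_dbm=20.5, im3_dbm=-14.5)
iip3 = input_ip3_dbm(oip3, gain_db=25.0)
print(oip3, iip3)
```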
Another very important characteristic of a power amplifier, and also of a low-noise amplifier, is the noise figure, called noise factor when expressed in linear terms. The noise figure describes the noise added by the amplifier itself. Consider an example. On the bottom left of the plot is an input signal with a certain signal strength, and there is always some background noise. When this signal goes through the amplifier, everything is amplified by the gain of the amplifier: the signal as well as the wideband noise. Unfortunately, the amplifier itself also adds some wideband noise. This limits the dynamic range and the signal-to-noise ratio, as shown on the right plot, where the red arrow indicates that the signal-to-noise ratio is reduced compared to the input signal. The noise added by the amplifier should be as small as possible, especially for low-noise amplifiers, which are often used in the receive path of an RF design.

To measure the noise figure, we look at the output noise versus the input noise in conjunction with the gain, as in the formula for the noise factor in linear terms. Two different approaches can be used: one with a spectrum analyzer, the other with a vector network analyzer.

With the spectrum analyzer, we also use a noise source that is very well characterized, so we know exactly what noise comes out when it is turned on or off. The noise source is a component in the loop and is connected to the device under test, as shown on the plot. We then measure the noise coming from the device under test, once with the noise source turned on, so that its noise is amplified, and once with the noise source turned off.
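The noise-factor relation and the noise-source measurement introduced above can be sketched as follows. The code uses the standard Y-factor relation F = ENR / (Y - 1), which assumes the noise source in its off state sits at the 290 K reference temperature and ignores the analyzer's own noise contribution (no second-stage correction). The webinar formula itself is not reproduced in the text, so the function names and example values are assumptions.

```python
import math


def noise_factor_from_snr(snr_in_linear: float, snr_out_linear: float) -> float:
    """Noise factor as the degradation of signal-to-noise ratio (linear)."""
    return snr_in_linear / snr_out_linear


def noise_figure_y_factor_db(enr_db: float, n_on_dbm: float, n_off_dbm: float) -> float:
    """Noise figure from noise power with the noise source on and off.

    Assumes the 'off' source temperature equals 290 K and that the
    measuring receiver adds negligible noise.
    """
    y_linear = 10 ** ((n_on_dbm - n_off_dbm) / 10.0)
    enr_linear = 10 ** (enr_db / 10.0)
    return 10.0 * math.log10(enr_linear / (y_linear - 1.0))


# Assumed example: 15 dB ENR source, 10 dB difference between on and off.
nf_db = noise_figure_y_factor_db(enr_db=15.0, n_on_dbm=-80.0, n_off_dbm=-90.0)
f_linear = noise_factor_from_snr(snr_in_linear=1000.0, snr_out_linear=500.0)
print(round(nf_db, 3), f_linear)
```

A noise factor of 2 in the second example corresponds to a noise figure of about 3 dB.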
From those two measurements, we can draw the graph shown on the bottom right of the plot. A line through the two measured points lets us calculate back to the noise added by the device itself, the amplifier. An application note referenced in the webinar gives more background information on this.

The second method, typically used with a vector network analyzer, is called the cold source method. Here the vector network analyzer is used as a source as well as a measurement receiver: noise is measured while the source is turned off, and gain is measured while the source is turned on. The calculations are based on a noise-temperature representation, meaning we look not at the noise each component adds but at the temperature that corresponds to it. This also makes the name easy to remember: the cold source method works with a temperature representation. For accurate and repeatable results, a good calibration of the vector network analyzer is important, meaning calibration of the noise temperature of the source on the left side and of the receiver on the right side. The noise factor is then derived from the formula shown in the webinar.

How to connect physically to the device depends on the stage of the device. You probably want to do the first characterization before the wafer is sawn into separate dies and the modules are packaged. The first step is therefore typically on-wafer testing and wafer verification. The focus at first is to confirm that the wafer run really was successful and works as expected. This is typically done with DC measurements, so voltage behavior, together with some basic RF parameters. Wafer probers, manual or with different levels of automation, are used to connect to the DUT on the wafer.
An amplifier typically gets warm when it is in use. In a complete stage there is some cooling, but on a wafer there is no possibility for cooling. So we need to take care in the signal path not to overheat the device. That is why on-wafer testing focuses not on standard S-parameters but on pulsed S-parameters, which limit the self-heating of the device.

The other topic on-wafer is that there is typically no matching network around the device. So load-pull, meaning setting a certain impedance on the input and on the output, may be important. Very often, on-wafer testing is done in combination with load-pull measurements. In a classic load-pull design, tuners create a certain impedance on the input and on the output. They are typically placed between the vector network analyzer or test instrument and the device under test. There are different methods, such as passive and active load-pull. The reason for using load-pull is that the transistors, the core of our amplifier, are highly dependent on the load impedance at the input and at the output. Load-pull allows us to present any impedance we want to the device under test, to understand its behavior across different impedances, and also to match it to a 50-ohm world. Unfortunately, small-signal characterization of the PA is no longer valid in this region because non-linear effects change the behavior. But with a VNA we can still measure the wave quantities directly, which describe the performance and characteristics of the device.

As with on-wafer testing, a raw PA die and the core of an MMIC are typically not 50-ohm devices. But nearly all RF design is based on 50 ohms, so a matching network is required in order to characterize the device.
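The relationship between an impedance presented by a tuner and the 50-ohm world can be sketched with the reflection coefficient. The snippet converts between impedance and reflection coefficient, computes the mismatch loss, and builds a hypothetical grid of load impedances of the kind a load-pull campaign might step through. The grid spacing and all names are illustrative assumptions.

```python
import cmath
import math

Z0 = 50.0  # reference impedance in ohms


def gamma_from_z(z: complex, z0: float = Z0) -> complex:
    """Reflection coefficient of impedance z relative to z0."""
    return (z - z0) / (z + z0)


def z_from_gamma(gamma: complex, z0: float = Z0) -> complex:
    """Impedance that produces the given reflection coefficient."""
    return z0 * (1 + gamma) / (1 - gamma)


def mismatch_loss_db(gamma: complex) -> float:
    """Power lost to reflection, in dB, for a passive mismatch."""
    return -10.0 * math.log10(1.0 - abs(gamma) ** 2)


def load_pull_grid(magnitudes, phases_deg, z0: float = Z0):
    """Impedances on a polar grid of reflection coefficients (assumed layout)."""
    return [
        z_from_gamma(cmath.rect(m, math.radians(p)), z0)
        for m in magnitudes
        for p in phases_deg
    ]


g = gamma_from_z(25.0)       # a 25-ohm load seen from 50 ohms
loss = mismatch_loss_db(g)   # about 0.51 dB
grid = load_pull_grid([0.2, 0.5, 0.8], range(0, 360, 45))
print(g, round(loss, 3), len(grid))
```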
Another topic is the need for a device model. At the very beginning we talked about simulation in the early design phases. When you take the PA into a larger environment, a complete RF system, and start to simulate it, you need a good model of the amplifier. There are two methods to create one.

One is the compact modeling approach, where you measure small-signal effects. You look at the linear behavior, describe it, and create a model based on that behavior: there is a certain capacitance effect here, a resistance there, and so on. The result is a model such as the FET compact model shown in the webinar.

The other approach is the behavioral model. Here the PA is treated as a black box. We only want to describe the transfer function from input to output and do not go into the detail of the different effects inside the transistor. This approach also takes into account harmonics and impedance effects, that is, what was derived before from load-pull measurements. It is typically measured with a non-linear vector network analyzer approach and works not only for the linear range but also for the non-linear range of the device under test. There are different systems. Another term often used in the industry is X-parameters, which in the end is just another term for a PHD (poly-harmonic distortion) behavioral model.

Now we transition from CW-based measurements to modulated scenarios. Why? With the traditional approach of using a VNA for CW measurements, you can get a lot of information and characteristics out of the device. But consider the really wideband modulation schemes we see today with 5G, ultra-wideband, or the latest WiFi editions. These have a high bandwidth of a couple of hundred megahertz, sometimes even up to the gigahertz range.
With such signals, the behavior of the amplifier cannot be fully modeled or verified with CW testing alone. Ideally, you use the target scenario, the target signal, to understand the behavior of your device. The typical test setup uses a vector signal generator with wideband modulation capabilities on one side and a spectrum analyzer that can demodulate on the other side. To get really accurate power and gain results, this is combined with power meters on the input and on the output side.

One typical result is the EVM of the device over power level. At really high power levels, we get close to the saturation point of the amplifier and see some compression, so the performance is no longer as good and EVM increases. Below this is the ideal section with the best EVM, which should be as wide as possible. At the lower end, the signal-to-noise ratio degrades because of the lower input power and lower signal levels, and EVM increases again. That is why this is called the bathtub curve.

Last but not least are distortion measurements. We already mentioned them on the VNA side with CW approaches, where a CW tone is swept in power at the input to measure AM-to-AM or AM-to-PM distortion, that is, amplitude distortion or phase distortion. In a modulated world, we do not have to sweep the input power, because the modulation itself varies the signal level. By capturing just a certain time duration, we typically get a large variance in power levels with a good distribution and can derive the AM-to-AM and AM-to-PM curves directly from it, as shown in the webinar plots.

It is also common to use different topologies, for example envelope tracking and Doherty amplifiers.
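Deriving AM-to-AM and AM-to-PM curves from a captured modulated signal, as described above, can be sketched by comparing each output I/Q sample with its time-aligned input sample. The amplifier model `toy_pa` is an invented memoryless stand-in for a real DUT, and the sketch assumes the input and output captures are already time-aligned.

```python
import cmath
import math


def am_am_am_pm(x, y):
    """Return sorted (input level dB, gain dB, phase shift deg) per sample pair."""
    points = []
    for xi, yi in zip(x, y):
        if abs(xi) == 0.0:
            continue  # gain is undefined for a zero input sample
        ratio = yi / xi
        points.append((
            20.0 * math.log10(abs(xi)),       # instantaneous input level
            20.0 * math.log10(abs(ratio)),    # AM-to-AM: gain at that level
            math.degrees(cmath.phase(ratio)), # AM-to-PM: phase shift at that level
        ))
    return sorted(points)


def toy_pa(x: complex) -> complex:
    """Assumed amplifier: gain compresses and phase rotates with drive level."""
    a2 = abs(x) ** 2
    return 10.0 * x * cmath.exp(1j * 0.2 * a2) / (1.0 + 0.5 * a2)


# Synthetic 'modulated' capture with varying amplitude and phase.
x = [cmath.rect(0.1 * k, 0.7 * k) for k in range(1, 11)]
y = [toy_pa(v) for v in x]
curve = am_am_am_pm(x, y)
for level_db, gain_db, phase_deg in curve:
    print(f"{level_db:7.2f} dB  gain {gain_db:6.2f} dB  phase {phase_deg:6.2f} deg")
```

With a real capture, the many samples at similar levels would form a scatter cloud, and the width of that cloud indicates memory effects that this memoryless toy model does not show.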
More recently, designers have started to look more and more into load-modulated balanced amplifiers, LMBAs for short. Linearization, which can be done for example with digital predistortion, should not be forgotten either.

Waveform engineering refers to the different amplifier classes, such as Class A, Class B, and so on. A class is defined by the fraction of the time the device conducts, the conduction angle. The longer the conduction angle, the more linearly the amplifier behaves, but the lower its efficiency. The table in the webinar shows this, and the diagram beside it shows the different classes and their conduction angles for a sinusoidal input.

Another optimization method is crest factor reduction. Crest factor describes the ratio between the peak and the average power of the signal. As the CCDF shows, the peaks are pretty rare. But to transmit the whole signal linearly, those peaks still need to stay within the linear range of the amplifier.

For envelope tracking, the challenge is that a high-speed tracker is needed, typically with about three to five times the signal bandwidth. So for a high-speed signal with 100 megahertz bandwidth, we need a tracker that can follow at a rate of 300 to 500 megahertz.

Very commonly used, especially in infrastructure scenarios, is the Doherty amplifier. It was invented a long time ago and is a very well-known and well-researched efficiency enhancement method. It combines two amplifiers. One is the main amplifier, used basically for the RMS power, and the other is used for the peak power. Because the main amplifier handles only the RMS power and not the high peaks discussed under crest factor reduction, it can be operated closer to saturation to get more efficiency, leaving the auxiliary amplifier with more headroom for the peaks.
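The three quantities discussed above (efficiency versus conduction angle, crest factor, and tracker bandwidth) can be illustrated with a short sketch. The efficiency function is the textbook result for an ideal device with a truncated-sinusoid current, shorted harmonics, and full voltage swing. It is an idealization and not data from the webinar table. The function names are hypothetical.

```python
import math


def ideal_drain_efficiency(conduction_angle_rad: float) -> float:
    """Ideal efficiency for a given conduction angle (2*pi = Class A, pi = Class B)."""
    a = conduction_angle_rad
    return (a - math.sin(a)) / (4.0 * (math.sin(a / 2.0) - (a / 2.0) * math.cos(a / 2.0)))


def crest_factor_db(samples) -> float:
    """Peak-to-average power ratio of a sampled signal, in dB."""
    powers = [abs(s) ** 2 for s in samples]
    return 10.0 * math.log10(max(powers) / (sum(powers) / len(powers)))


def tracker_bandwidth_hz(signal_bandwidth_hz: float, factor: float) -> float:
    """Tracker bandwidth as a multiple (about 3 to 5) of the signal bandwidth."""
    return factor * signal_bandwidth_hz


class_a = ideal_drain_efficiency(2.0 * math.pi)   # 50 %
class_b = ideal_drain_efficiency(math.pi)         # about 78.5 %
sine = [math.cos(2.0 * math.pi * k / 64) for k in range(64)]
sine_cf = crest_factor_db(sine)                   # about 3 dB for a single tone
low = tracker_bandwidth_hz(100e6, 3)
high = tracker_bandwidth_hz(100e6, 5)
print(class_a, class_b, sine_cf, low, high)
```

Angles between pi and 2*pi correspond to Class AB and give efficiencies between the two limits, which is the linearity-versus-efficiency trade-off described in the text.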
The auxiliary amplifier is then also trimmed for more linearity. In this way, different amplifier classes are typically combined, using differently biased devices with different behaviors.

The other variant is the digital Doherty. Here not one but two different channels drive the amplifier. The signal is split not in the analog domain but already in the digital domain, so that two separate signals drive the main and the auxiliary amplifier. This allows the signal behavior to be optimized better and the two amplifiers to be aligned better with each other, which improves efficiency. The signal is split digitally, the phase and amplitude between the two channels are manipulated, and measurements show where the two amplifiers work together best. This can be done not only for saturated power but also for efficiency, ACLR, and any other metric of interest. Unfortunately, what is best for efficiency is typically not best for saturated power and typically not best for ACLR. But with these measurement campaigns, you know exactly what you get when you place a splitter at a certain position. You can then optimize the overall Doherty design by selecting from all the measurement data, knowing exactly what you are getting.

A possible outcome of a Doherty design is shown in the webinar, with the conventional mode of operation on the left and a dual-input mode of operation, where the Doherty design is used in the best possible way, on the right. The two plots show the gain compression on top and the phase variation at the bottom. Ideally, the phase variation is as flat as possible, and the gain compression is pushed out as far as possible. So you want linear behavior for as long as possible.
The dual-input mode extends this capability by more than a dB. This lets you either specify the device better or achieve the same performance with a smaller device in the same setup, saving energy, power, and cost. Another possibility is to leave the performance specifications as they are but extend the bandwidth over which they are achieved.

Increasing the bandwidth over which a certain performance is possible is also one reason why more and more designers are looking at load-modulated balanced amplifiers. This is a new topology in which, again, two amplifiers are used in a balanced configuration, plus a control signal power (CSP) that is injected at the output and creates the load modulation. This concept offers really large bandwidth coverage, even all the way up to 100 %. Unfortunately, the power coming in at the CSP port goes directly through to the output and needs to be very linear, so the requirements on the CSP input are high. A variant of the LMBA is already being investigated, called the orthogonal LMBA. It injects the control signal not on the load side but on the input side, and adds a fixed or tuned load on the output. This enables a more efficient use of the LMBA structure. The tuned load on the output can also be varied widely in a measurement campaign to find out what should be used there, and it can also be created by active load modulation.

So much for the different topologies. Next are linearization methods, where again a lot of research is going on. The target of linearization is to keep the amplifier linear for as long as possible. AM-to-AM should be a flat line until we hit something like a brick wall, where it goes no further.
At that point we are really at the saturation point of the amplifier. For AM-to-PM there should also be no distortion, so again a flat line. Different methods exist: Cartesian and polar feedback, analog and digital predistortion, and feed-forward. Many of them have been well researched. The focus here is on digital predistortion because it is very commonly used nowadays.

A further benefit of digital predistortion is that it allows more variants and more flexibility in the topology. Some of those topologies, including the LMBA, show certain special behavior when transmitting a signal, and this can be compensated with digital predistortion. Ideally, topology and predistortion work closely together to get the best efficiency. Predistortion is applied in a way that compensates the characteristics of the DUT. In the webinar plot, blue is the classic compression curve of an amplifier, red is what we would like to see, and the orange dotted lines are the DPD-manipulated input signal that compensates for the distortion and saturation of the amplifier. If we want to use an amplifier close to compression, where efficiency is highest but non-linearity is also highest, we need to compensate with some form of linearization.

When designing a PA, you ideally want to understand how well it can be linearized. How good can the PA be in terms of EVM or ACLR with proper predistortion? Predistortion is typically not the main skill set of amplifier designers, but it needs to be understood.
Direct DPD, as offered in the R&S instruments, gives an easy way to understand how well an amplifier can behave with an ideal predistortion. The input signal is manipulated in an iterative process: the output signal from the device under test is compared with the input signal, the deviation is determined, and the input is adjusted sample by sample to create a pre-distorted signal. This is repeated iteratively to optimize the signal. There can also be hard clipping, which can have two causes: it can be set in the direct DPD configuration, or it can simply be the saturation point of the power amplifier.

Now let's move on to device characterization. In R&D, we were testing in order to reach the design goals. In characterization, we want to ensure that not only one device or a small sample meets the design goals, but a larger sample, before the product is released for production. The aim is to verify the data sheet performance figures on a larger number of samples, and more: to better understand the characteristics of the device over frequency, over level, and over temperature. It is a larger measurement campaign that is typically fully automated. It also includes a certain amount of lifecycle testing to understand the durability of the design.

The test requirements for the RF characteristics are very similar to those in research and design. In characterization, we look at the same RF metrics as in research and development, so a similar test setup is needed in terms of function blocks. The plot shows a network analyzer for CW measurements and also for matching measurements.
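The iterative, sample-by-sample idea behind the direct DPD described earlier in this section can be illustrated with a toy example. This is not the algorithm used in the R&S instruments, which the text does not specify. It is a simple fixed-step correction loop, and `toy_pa` is an invented memoryless compression model standing in for a real measurement of the DUT.

```python
import cmath
import math


def toy_pa(x: complex, gain: float = 10.0, sat: float = 10.0) -> complex:
    """Assumed memoryless amplifier: linear gain that compresses toward 'sat'."""
    a = abs(x)
    if a == 0.0:
        return 0j
    out_amp = gain * a / math.sqrt(1.0 + (gain * a / sat) ** 2)
    return cmath.rect(out_amp, cmath.phase(x))


def direct_dpd(reference, pa, target_gain, iterations=30, step=0.5):
    """Iteratively adjust each drive sample until pa(drive) matches the target."""
    drive = list(reference)  # start from the undistorted signal
    for _ in range(iterations):
        for n, ref in enumerate(reference):
            error = target_gain * ref - pa(drive[n])  # deviation at the output
            drive[n] += step * error / target_gain    # correct the input sample
    return drive


def rms_error(reference, drive, pa, target_gain):
    """Relative RMS deviation of the amplifier output from the ideal output."""
    num = sum(abs(pa(d) - target_gain * r) ** 2 for r, d in zip(reference, drive))
    den = sum(abs(target_gain * r) ** 2 for r in reference)
    return math.sqrt(num / den)


reference = [cmath.rect(0.06 * k, 0.5 * k) for k in range(1, 11)]
before = rms_error(reference, reference, toy_pa, target_gain=10.0)
predistorted = direct_dpd(reference, toy_pa, target_gain=10.0)
after = rms_error(reference, predistorted, toy_pa, target_gain=10.0)
print(f"relative error before: {before:.4f}, after: {after:.6f}")
```

The pre-distorted samples end up larger than the reference at high levels, mirroring the expanded input curve described for the DPD plot. If the target output exceeded `sat`, no drive level could reach it, which corresponds to the saturation case of hard clipping mentioned in the text.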
On the other side are a vector signal generator and a vector signal analyzer for the modulation measurements, and also a power supply that can measure the power consumed by the device for efficiency measurements.

Active devices call for a diversified characterization using CW and modulated tests. As shown on the previous slide, these are typically done with different instrument setups: a vector network analyzer on one side, or a vector signal generator with a vector signal analyzer on the other. Both have their pros and cons and their individual strengths. In a complete characterization setup, both are often used. That may mean changing connections manually or switching between the setups, which is not ideal. The goal should be to have one connection and run all the different tests. That also makes testing faster and smoother, with a higher measurement speed, which matters because a large measurement campaign can take quite some time. Using a calibration for the modulation part, as is done in the VNA, also gives more accuracy on EVM, with what is called a vector-corrected EVM. A single connection is also better suited to automated testing because no manual intervention is needed.

What could this look like? The proposal is a combined setup with the signal generator on the left, the signal analyzer on the right, and the VNA at the bottom, connected through a coupler. Once everything is calibrated, all the different measurements can run in an automated setup, each on the instrument with the best characterization capabilities, because the effect of the coupler has been calibrated out all the way down to the DUT port, not only for the VNA but also for the signal generator and analyzer.
In other words, the effect of the measurement setup is de-embedded using the de-embedding functions inside the signal generator and analyzer.

Lifecycle testing typically also includes stress testing using defined HALT techniques (highly accelerated life tests), applying stress through vibration, temperature, and humidity, but also through high input power. The stress is increased gradually while the performance of the PA is checked. On one side, this shows what the device can withstand and for how long. On the other side, it shows how the PA behaves before it breaks. That makes it possible to add a warning or monitoring function to the overall system: if, for example, a biasing point changes with high power or over time, this indicates the end of the product's life cycle.

The next step is the production requirements. Production is not really about measuring each component accurately. It is about ensuring that the components function properly and meet the target specifications, with the focus on throughput and measurement speed in order to reduce cost. Repeatability from one sample to the next is therefore more important than absolute level accuracy or absolute measurement accuracy.

Testing in production is typically done in two stages. First, testing is done at wafer level to make sure that the wafer run was successful before additional money is spent on dicing it and packaging the dice for final testing. Then the full RF testing is typically conducted on the packaged device. The test procedures are often based on the characterization testing.
In production, we obviously do not want to go through the full process, so only a very limited subset of the characterization tests is used. It looks at critical performance points as well as the classic analog and digital behavior of, for example, an MMIC. For instance, we want to know the power consumption at a certain output power. If those two values match what characterization showed for a good device, we know that this device works and meets the specifications.

Parallel testing is an important way to increase throughput by testing multiple devices at the same time. Multiport test instruments, such as the multiport vector network analyzer shown here, enable true multisite testing in parallel because they offer multiple receive paths or multiple transmit paths in one instrument. This is not a switched approach where one device is tested after the other; the devices are really tested in parallel to improve throughput.

In contrast to characterization, production typically uses CW-only or modulation-only approaches. There is typically no combination of a VNA and a modulation setup, just one or the other, to simplify the test setup and reduce cost.

An important point in production is that there are typically load boards and more signal conditioning between the device and the test equipment, both toward the input and from the output to the measurement receiver. Calibration all the way down to the DUT is therefore important, as is de-embedding of those effects. The absolute accuracy depends entirely on the calibration of the whole system. One downside of longer cables and longer signal routing is that power is lost along the way. Signal attenuation means lost dynamic range, so it is a balance.
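The effect of routing loss on dynamic range can be shown with simple dB arithmetic. The sketch below assumes a linear DUT with fixed gain and a receiver with a fixed noise floor. All levels and losses are hypothetical values, and `received_snr_db` is a helper introduced only for this example.

```python
def received_snr_db(source_dbm, input_loss_db, dut_gain_db, output_loss_db, noise_floor_dbm):
    """Signal-to-noise ratio at the measurement receiver, in dB.

    Assumes a linear DUT (fixed gain) and a fixed receiver noise floor.
    """
    level_at_receiver_dbm = source_dbm - input_loss_db + dut_gain_db - output_loss_db
    return level_at_receiver_dbm - noise_floor_dbm


# Short bench cables: 1 dB of loss on each side of the DUT (hypothetical).
snr_bench_db = received_snr_db(-10.0, 1.0, 20.0, 1.0, -90.0)

# Load board and longer routing: 4 dB of loss on each side (hypothetical).
snr_production_db = received_snr_db(-10.0, 4.0, 20.0, 4.0, -90.0)

lost_dynamic_range_db = snr_bench_db - snr_production_db
print(snr_bench_db, snr_production_db, lost_dynamic_range_db)
```

With these numbers the extra 3 dB of loss on each side costs 6 dB of signal-to-noise ratio at the receiver, which has to be recovered through higher source power or a more sensitive receiver.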
The setup has to deliver the measurement accuracy that is needed, with enough signal-to-noise ratio to reach that accuracy, while on the other side minimizing cost by using, say, a more economical version of what was used in R&D. That is why repeatability and reduced measurement uncertainty are so important here, and why higher output power or better sensitivity helps to cope with the losses.

There are various amplifier topologies, but in general the process steps in research and development, in characterization, and in production are much the same, no matter whether we are talking about a CMOS-based amplifier or a gallium nitride (GaN) based amplifier. The combination of CW and modulated testing gives the most comprehensive understanding of the performance of the device. In addition, special test scenarios based on the application of the device typically come in to ensure proper functioning, best efficiency, and the best fit to the target application.

Last but not least, linearization, for example digital predistortion (DPD), is an important point. It helps to get more efficient designs by operating amplifiers closer to their compression point, and it offers a wider range of possibilities for optimizing amplifier topologies with different linearization techniques. This gives more freedom in the PA design.

Reference: Webinar: A summary of testing RF power amplifier designs https://www.rohde-schwarz.com/us/knowledge-center/webinars/webinar-a-summaryof-testing-rf-power-amplifier-designs-register_255375.html