FRAUDULENT SIGNATURE DETECTION

Sayak Mukherjee, Yash Sinha, Aakriti Jain, Tamizharasi T

Abstract – The widespread use of digital signatures has led to an increase in counterfeit digital signatures. Because signatures are key to authenticating a person's important documents, forged signatures are primarily used to perpetrate fraud. As technology continues to advance, manual verification methods are becoming obsolete and inefficient. Machine learning is gaining importance in every discipline, including the detection of fraudulent signatures. This project focuses primarily on deep learning techniques and considers ten distinct classification algorithms to determine accurately whether a digital signature is authentic or forged. We have also conducted exploratory data analysis, constructed our own dataset from the signatures of VIT students, and documented the models' output. The results are analysed and compared using various metrics and hyperparameters, and the analysis also includes comparisons with existing models.

Index Terms— exploratory data analysis, EDA, machine learning, deep learning, CNN models, model layering


1 INTRODUCTION

We are well aware that advancing technologies bring with them an equal number of hazards. Cyberattacks are one such extensive and vital area that requires attention, and digital signature fraud is a further subdomain within it. Signatures have long been a crucial form of authentication. With the growing significance of digitalization in our daily lives, signatures have shifted to a digital format. They are used in nearly every industry today, from daily transactions and document verification to emerging technologies such as blockchain. It is therefore essential to preserve the integrity and dependability of digital signatures and to prevent any form of deception or abuse. The objective of this project is to identify, among the convolutional neural network models we have developed, the most effective one for autonomously classifying a digital signature as authentic or forged based on the characteristics of the dataset.

This paper consists of two major sections. In the first, we construct a model using digital image processing and deep learning technologies, specifically convolutional neural networks (CNNs). This requires extensive research and experimentation until we arrive at a model that classifies the wide range of signatures in our dataset as accurately as possible. In the second, we analyse and compare the results, taking into account a variety of metrics and hyperparameter tuning in order to obtain the best outcomes for various scenarios. In addition to precision and recall, we attempted to maximise the true positive and true negative rates. For a complete analysis, the comparison also incorporates existing models and studies.
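As a minimal sketch of the metrics named above, the following Python computes precision, recall and the true negative rate from binary labels. It assumes, as an illustrative convention not stated in the paper, that 1 marks a forged signature (the positive class) and 0 a genuine one; under this convention recall and the true positive rate are the same quantity. The function names are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN with 1 = forged (positive) and 0 = genuine (negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # forged, flagged as forged
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # genuine, accepted as genuine
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # genuine, wrongly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # forged, wrongly accepted
    return tp, tn, fp, fn


def safe_ratio(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def signature_metrics(y_true, y_pred):
    """Precision, recall (identical to the true positive rate) and true negative rate."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return {
        "precision": safe_ratio(tp, tp + fp),
        "recall_tpr": safe_ratio(tp, tp + fn),
        "tnr": safe_ratio(tn, tn + fp),
    }
```

For example, with true labels [1, 1, 1, 0, 0, 0, 0, 1] and predictions [1, 0, 1, 0, 1, 1, 0, 1], there are 3 true positives, 2 true negatives, 2 false positives and 1 false negative, giving a precision of 0.6, a recall of 0.75 and a true negative rate of 0.5.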

Sayak Mukherjee is currently pursuing a bachelor’s degree program in Computer Science and Engineering at VIT University, India.

Yash Sinha is currently pursuing a bachelor’s degree program in Computer Science and Engineering at VIT University, India.

Aakriti Jain is currently pursuing a bachelor’s degree program in Computer Science and Engineering at VIT University, India.

Dr. Tamizharasi T is an Assistant Professor Sr. in the School of Computer Science and Engineering at VIT University, India.

IJSER © 2017

http://www.ijser.org

2 LITERATURE SURVEY

In [1], the authors propose a method of image forgery detection based on determining the rescaling or rotation operations that have been performed on the image. The authors first show that rotation produces a rescaling by a factor of 1/cos x, where x is the rotation angle. They then extract edges using the Laplacian operator and compute the 1D discrete Fourier transform of each extracted line. From this they obtain the frequency at which the rotation-induced peak occurs, and the rotation angle is calculated from it. To detect forgery, the steps followed are edge detection, blocking, Fourier transformation, and checking whether each image block is rotated or rescaled. A histogram of peak frequencies is then built by binning; non-zero bins are likely to be forged components. The advantage of this algorithm is that it supports blind detection of image rotation and rescaling without any additional features or parameters of the image. The research gap is that when post-processing such as JPEG coding with a low quality factor is applied, detection becomes more difficult. Moreover, to evade rescaling/rotation detection, more sophisticated interpolation methods can be used, and image manipulations may be performed to make the rescaling traces undetectable.

In [2], a Siamese Neural Network (SNN) is used to make predictions when very little training data is available, as in the case of forgery detection. The authors train an SNN with a CNN as a subnetwork. In the SNN, the input image is given to the first convolutional layer, where different filters are applied to it. Local Response Normalization is applied to normalize local input regions, and the normalized output is passed to the next pooling layer. The output of the first convolutional layer, local normalization and pooling layer is used as the input to the second convolutional layer, which has 256 different filters. In addition, a dropout layer is used to avoid overfitting. To enhance the resulting feature vector, the authors propose using statistical measures such as the mean and standard deviation, which greatly improves detection accuracy. The main advantage is that the method requires little training data and no preprocessing. The disadvantage is that the output is the distance of an image from a class rather than a probability value.

In [3], the authors aim to develop an offline signature verification process based on a single genuine signature of a candidate. To address the challenge of detecting fraudulent signatures from a single sample, the authors convert a single signature into a set of overlapping sub-images and then use CNN-based feature extraction to expand the number of features. They also generate several forged samples to train the algorithm to identify forgery features in different signatures. The main advantage of this method is that the use of sub-images prevents a small number of local features from dominating the outcome. The authors also generate a color-coded saliency map over each image sub-block to make the classification results easier to interpret for professionals, such as forensic police, who use the application. The entire methodology can therefore be summed up as data cleaning and noise removal, followed by data augmentation and classification. This is followed by rotating the image sub-blocks clockwise by predefined angles and repeating the entire process. The rotation helps eliminate rotation-dependent features of handwriting, such as slant, and increases classification accuracy. The only disadvantage of this method is its relatively poor performance on Chinese signatures, owing to the complex structure of Chinese characters.

In [4], the authors propose a deep learning-based data augmentation method to solve the problem of having a limited number of forged and genuine signatures. The method is based on Cycle-GAN: a GAN approach is taken to learn the mapping between input and target images that are similar to each other, using a combination of supervised regression and adversarial loss. An additional advantage of this method is that it counters adversarial attacks during CNN training by using a CapsNet verifier to model spatial relations accurately. An adversarial attack manipulates image vectors in a specific manner to intentionally cause the model to misclassify the instance; the CapsNet vector approach reduces this threat considerably. The steps in this methodology can therefore be summed up as preprocessing (background clearing, noise removal, centering, resizing and normalization), followed by data augmentation using Cycle-GAN and verification using CapsNet. The main disadvantage of this methodology is that it gave comparatively poorer results with the writer-independent approach than with the writer-dependent approach.

The paper [5] proposes the use of convolutional neural networks (CNNs) supported by additional feature extraction methods to classify forged offline signatures. Offline signatures are harder to verify because of external factors such as the age and health condition of the person signing. In this system, the input layer of the CNN contains the image data. The image is passed to the convolutional layer, which performs feature extraction by computing dot products between the filters and local receptive fields. The output volume of this layer is sent to the pooling layer, which reduces the spatial size of the representation after convolution. This is followed by a fully connected layer and a softmax layer before the final output layer. The authors have also deployed this model using the Django framework for ease of use. The main advantage of this method is its use of transfer learning, with AlexNet and GoogLeNet as base pretrained models. The main disadvantage is that, unlike the previous papers, the authors have not used any custom feature extraction methods, so the accuracy scores are lower.
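The convolution and pooling steps summarized for [5] (and the alternating convolution and max pooling stacks in [9], [11] and [16]) can be illustrated with a dependency-free Python sketch. It is not the authors' implementation: a single hand-written kernel, "valid" padding, a convolution stride of 1 and non-overlapping 2 x 2 max pooling are assumptions chosen for brevity, and the function names are hypothetical. As in common deep learning libraries, the "convolution" is computed as a cross-correlation, i.e. a dot product between the kernel and each local receptive field.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation: each output value is the dot product of the
    kernel with one image patch (the operation a CNN convolution layer computes)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Element-wise rectified linear unit."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]
```

On a 5 x 5 input, a 2 x 2 kernel produces a 4 x 4 feature map and 2 x 2 pooling reduces it to 2 x 2, which is the reduction in spatial size that the pooling layer provides.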

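The per-line spectral analysis described for [1] can be sketched as follows. This is a simplified illustration under stated assumptions: a 1D second difference along one image row stands in for the Laplacian operator, a naive DFT replaces an FFT library, and the helper names are hypothetical. The survey does not give the mapping from peak frequency to rescaling factor, so the sketch only inverts the stated relation s = 1/cos x to recover the angle x from a known factor s.

```python
import cmath
import math
from collections import Counter


def laplacian_1d(line):
    """Second difference of one row of pixels (a 1D stand-in for the Laplacian)."""
    return [line[i - 1] - 2 * line[i] + line[i + 1] for i in range(1, len(line) - 1)]


def dft_magnitudes(signal):
    """Naive O(N^2) discrete Fourier transform; returns |X[k]| for k = 0..N-1."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)))
        for k in range(n)
    ]


def peak_frequency(line):
    """Strongest non-DC frequency index (1..N/2) in the edge response of one line."""
    mags = dft_magnitudes(laplacian_1d(line))
    half = len(mags) // 2
    return max(range(1, half + 1), key=lambda k: mags[k])


def peak_histogram(lines):
    """Bin the per-line peak frequencies into a histogram (frequency index -> count)."""
    return Counter(peak_frequency(line) for line in lines)


def rotation_angle_from_rescale(factor):
    """Invert factor = 1 / cos(x) to get the rotation angle x in radians (factor >= 1)."""
    return math.acos(1.0 / factor)
```

For instance, a row holding a cosine with 5 cycles over the 64 samples of its second difference yields a peak at frequency index 5, and rotation_angle_from_rescale(1 / math.cos(0.3)) returns approximately 0.3.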

The approach in [6] is based on a CNN (Convolutional Neural Network) that is trained in a supervised manner to learn the representation of offline manuscripts from the online representation given as input. The model is expected to predict the corresponding static sample. The neural network can thus learn how online information is transformed into offline information, so that the synthetic signatures can be used to improve recognition rates. The images are inverted so that the white background corresponds to pixel intensity 0. The input is also normalized so that the model can predict the data more accurately: each pixel is divided by the standard deviation of all pixel intensities. Leaky rectified linear units are used as the activation function for all the convolutional networks. The model was trained using the Adam optimiser to minimize the mean squared error loss for 100 epochs. Two experiments were performed. The first measures the similarity between synthetic and real signatures, using two different training protocols, mono-session and multi-session. The second tests whether synthetically increasing the enrolment dataset leads to better recognition performance. The results are compared in terms of the Equal Error Rate (EER) and the Detection Error Tradeoff (DET).

In [7], a Convolutional Neural Network with many different layers and neurons is used to predict whether a given handwritten signature is forged. The authors experimented with two different filter sizes, 3x3 and 5x5, in their CNN architectures. For each filter size, three different numbers of convolutional layers are used, i.e. 2, 4 and 6 convolutional layers with alternating Rectified Linear Units and varying filters. The Leaky Rectified Linear Unit is used as the activation function. Each CNN architecture is separately trained and tested for blue and black inks. Appropriate architectures and training parameters were selected for the Convolutional Neural Network. The CNN classifies the ink pixels to detect ink mismatch in an input hyperspectral image. The ink pixels in the hyperspectral image are labeled to visualize any potential ink mismatch in the document. Blue and black inks can be distinguished by visual inspection using color image processing, which is why color-based classification is not dealt with in the hyperspectral analysis-based approach.

In [8], several types of algorithms were used, such as Support Vector Machines (SVM), Multi Layer Perceptron (MLP) and Convolutional Neural Network (CNN) models, and their accuracy and running time are compared to find the best model for digit recognition. The images in the input data are reshaped into 2D images of shape (28, 28, 1). The pixel values have been normalized so that the input features range between 0 and 1. For the MLP, different activation functions, dense layers of different specifications and dropout layers were used. A neural network with 4 hidden layers and an output layer with 10 units is used. To introduce non-linearity into the model, the Rectified Linear Unit activation function was used apart from the dense layer. For the CNN, different layers were used together with a softmax layer. The Leaky Rectified Linear Unit activation function has been used to increase the non-linearity. The dropout layer drops randomly chosen neurons so that the model is simplified. The flattening layer generates a column vector from the 2-dimensional matrix. The last layer returns a probability distribution over all 10 classes, and the class with the maximum probability is the output.

In [9], handwritten signatures are collected and unique features are extracted to create a knowledge base for each individual. A standard database of signatures for every individual is needed to evaluate the performance of the signature verification system and to compare its results with those of other techniques. Several preprocessing techniques were used. First, the image was converted from RGB to grayscale, as required for the digital image processing (DIP) steps; this drastically decreases the computational complexity of DIP and helps the image processing algorithms run smoothly. For noise removal, a known form of noise is introduced in very small quantities; this raises the noise level in the image above the threshold, so that it is easily detected by the denoising filters. The grayscale image is then converted into a bitmap, an image file format used to store digital images (raster images are in general also referred to as bitmaps). The system must maintain high performance regardless of image size and must be sufficiently insensitive to corrections in the signature image. In this case, the image is rescaled to 256 x 256. The authors used the Keras Python library to implement the Convolutional Neural Network and verified the resulting model using accuracy and loss metrics to see how well it fits the data. The image goes through 3 convolution and max pooling layers in an alternating fashion. The dataset was made by the authors, with one person making the originals and another making the forgeries. They used TensorFlow as the model backend and split the dataset in an 8:2 ratio for training and testing, obtaining an accuracy of 98%. As future enhancements, the authors suggest comprehensive research on loss functions and deriving a custom loss function that would predict which user a signature belongs to and whether it is a forgery.

In [10], the researchers prepared synthetic signature databases that allow the integration of a large number of users under a wide variety of situations. Synthetic databases can be useful until an equivalent real database is developed. They took a corpus of datasets from the internet, most of which were collected in laboratories and contain digital signatures in different languages. There are different verification systems
such as Western Signature Verification, Chinese Signature Verification, Japanese Signature Verification, Arabic Signature Verification and Signature Verification in Indic Languages. In a multiscript signature verification system, signatures in several different scripts can be verified using the same automatic signature verification (ASV) system. Signatures are typically executed in the original script and can be aggregated into a common database. The authors presented two offline signature verification experiments, the first without initial script identification and the second with initial script identification, for English, Hindi and Bengali. The system developed for this study used chain code features and signature gradients together with support vector-based classification. The study covered eight offline public signature datasets in five different scripts. The authors showed that similar results are obtained whether the datasets are merged or kept separate, which suggests that multiscript verification can be treated as a generalized problem. High accuracies of 99.41%, 98.45% and 97.75% were obtained with different techniques. The authors applied a foreground and background technique for the identification stage and achieved an accuracy of 97.70% in identification. An analysis of the weaknesses and strengths of the most common publicly available offline and online signature databases has also been carried out. ASV systems have traditionally been developed to cope with Western signatures.

The images in [11] are stored in a directory structure compatible with the Python Keras library. The researchers preprocessed the images by resizing them to a resolution of 512 x 512. JPEG images, if any, had their content decoded to RGB grids of pixels with channels and were sent in RGB form to the CNN model. The CNN was implemented in Python using the Keras library with the TensorFlow backend, and the model learns the patterns associated with the signatures. The image passes through a continuous sequence of convolution and max pooling layers: a predefined number of feature maps is fed into a max pooling layer, which in turn produces feature maps from those generated by the previous layers. This process continues until the fourth max pooling layer. The feature map is then flattened and directed to the fully connected layers, where, after multiple iterations of forward and backpropagation, a trained model is obtained that can be used to make predictions. The dataset used by the researchers is a collection of 10 signatures, with 5 real and 5 forged signatures per person. In addition, they used a Kaggle dataset with 30 real signatures.

The researchers in [12] developed the system using the WingWare Python IDE and the Anaconda IDE, together with the required library modules. In the data acquisition phase, the researchers used the signature dataset from the SigComp 2011 database, which comprises 239 genuine signatures and 123 forged signatures. Of the 239 genuine signatures, 219 were used for training and 20 for testing. Similarly, of the 123 forged signatures, 103 were used for training and the remaining 20 for testing. In the image processing phase, the researchers concluded that image pre-processing and feature selection techniques were unnecessary; only data augmentation is needed. In the training stage, an image is retrieved from the signature database and sent to the neural network. A 6-layer multilayer perceptron (MLP) neural network was used. The image pixels are first fed to the input layer. The first hidden layer has 13 nodes, the second 7, the third 5 and the fourth 4. These nodes are further sent to the first hidden layer. The hidden layers use the sigmoid activation function. The input pixels eventually reach the output layer, where the sigmoid activation function is applied one last time, and this becomes the output of the neural network. This is followed by backpropagation, which is used to calculate the errors. The output layer has 2 units. A gradient descent algorithm was used to minimize the errors.

The researchers in [13] extensively reviewed the Learned Iterative Shrinkage and Thresholding Algorithm (LISTA). Each iteration of ISTA consists of one linear operation followed by a nonlinear soft-thresholding operation, which mimics the ReLU activation function. A deep network can be constructed by mapping each iteration to a network layer and stacking the layers together, which is equivalent to executing an ISTA iteration multiple times. After the ISTA is unrolled into a network, the network is trained on training samples through backpropagation. The authors further explored other unrolling algorithms, such as DURR (dynamically unfolding recurrent restorer), which is capable of providing high-quality reconstruction of images with much sharper details. Furthermore, it generalizes well even when the image is highly degraded.

Traditional data augmentation approaches discussed in [14] are based on a mix of affine image transformations and color changes; they are quick and simple to apply and have been shown to be effective in expanding the training set. Even though such approaches have been implemented effectively in a variety of industries, they are subject to adversarial attacks. Furthermore, they do not add new visual elements to the images that might considerably increase the algorithm's learning ability and the networks' generalization ability. Methods based on deep learning models, on the other hand, frequently produce results that look novel even to the human eye. GANs can synthesize images from scratch in any category, and combining GANs with other approaches can provide gratifying
results (for example, combining random erasing with image inpainting through a GAN). However, GANs have limitations: computation time is long, and there are evident difficulties with counting, a lack of perspective, and difficulty coordinating global structure. The texture and color transfer issues are intertwined with the style transfer issue. Classic style transfer methods are comparable to traditional texture transfer methods, but they also include color transfer. Transfer learning, which, like GANs, employs deep learning models, has recently gained prominence. An algorithm developed for creating artistic-style photographs allows the content and style of an image to be separated and recombined. When the content of one image is combined with the style of another, the result is an entirely new image that combines the characteristics of both. However, these methods also have limitations and are highly dependent on the structure of the image.

The analysis in [15] focuses on the influence of various pre- and post-processing approaches used in deep learning frameworks to deal with the extremely complex patterns found in histology images. Many of the techniques discussed in the paper, particularly the post-processing approaches, are not confined to histological image analysis but are used in practically every discipline of image analysis. In the scope of pre-processing, the algorithm the researchers analyzed was stain normalization, which standardizes the stain color appearance of a source image with respect to a reference image. The approaches range from global color normalization to color transfer using generative adversarial networks (GANs). Another crucial preprocessing step during CNN training is patch selection; several algorithms based on thresholding and color deconvolution were used to extract pixels from the regions of interest. The main post-processing method applied was patch aggregation. A patch aggregation approach takes into consideration the characteristics and labels of all the patches extracted by the CNN to predict the final image label.

In [16], the problems and inconsistencies related to manual static signatures are specified. These inconsistencies may arise from the ever-changing behavioral traits of the person in question and may lead to inconsistent data. Hence, verification and authentication of the signature may take a long time, as the errors are comparatively higher. Inconsistent signatures thus lead to higher false rejection rates for an individual who does not sign in a consistent way. In the proposed implementation, an image goes through a series of convolution and max pooling layers in an alternating fashion. When the image goes through the convolution process, a predefined number of feature maps are created; these are fed into a max pooling layer, which creates pooled feature maps from the feature maps received from the preceding convolution layer. The pooled feature map is sent into the next convolution layer, and this process continues until the fourth max pooling layer is reached. The pooled feature map from the last max pooling layer is flattened and sent into the fully connected layers. After several rounds of forward and backward propagation, the model is trained and predictions can be made.

In [17], the authors propose an offline handwritten signature verification method based on an explainable deep learning method (a deep convolutional neural network, DCNN) and a unique local feature extraction approach. The open-source International Conference on Document Analysis and Recognition (ICDAR) 2011 SigComp dataset has been used to train the system and to verify a questioned signature as genuine or forged. The testing and training datasets were kept separate to test the out-of-sample accuracy. In the data acquisition phase, the researchers used the signature dataset from the SigComp 2011 database, which comprises 239 genuine signatures and 123 forged signatures. Of the 239 genuine signatures, 219 were used for training and 20 for testing. Similarly, of the 123 forged signatures, 103 were used for training and the remaining 20 for testing. In the image processing phase, the researchers concluded that image pre-processing and feature selection techniques were unnecessary; only data augmentation is needed. In the training stage, an image is retrieved from the signature database and sent to the neural network. A 6-layer multilayer perceptron (MLP) neural network was used. The image pixels are first fed to the input layer. The first hidden layer has 13 nodes, the second 7, the third 5 and the fourth 4. These nodes are further sent to the first hidden layer. The hidden layers use the sigmoid activation function. The input pixels eventually reach the output layer, where the sigmoid activation function is applied one last time, and this becomes the output of the neural network. This is followed by backpropagation, which is used to calculate the errors. The output layer has 2 units. A gradient descent algorithm was used to minimize the errors.

The paper numbered [18] proposes a method for pre-processing signatures to simplify verification. It also proposes a novel method for signature recognition and signature forgery detection with verification, using a Convolutional Neural Network (CNN), the Crest-Trough method, the SURF algorithm and the Harris corner detection algorithm. After all the training examples are converted into the desired format, pre-processing algorithms on CC are applied. A signature verification system typically needs to address five sub-problems: data retrieval, pre-processing, feature extraction, identification method, and performance analysis. These were generally classified and applied to all the images, and each image is finally classified into one of the existing classes. The proposed system then detects forgery by comparing the chosen
options against the on the market set of pictures for the signature and produces a binary

The authors of paper [19] have proposed a new descreening technique for Frequency

result if it’s cast or not. Here two Algorithms are used for Forgery Detection: Harris

Domainfilters. It is based on the idea that the original screen pattern is easy to detect in the

Algorithm and Surf Algorithm. The proposed system attains an accuracy of 85-89% for

Fourier domain. So, the authors propose a new filter and how to create it in order to tackle

forgery detection and 90-94% for signature recognition.

this issue.

The descreen algorithm proposed in this paper works in the frequency domain. The basic

machine classifier to classify the images based on the features extract by the CNN. The

idea is that the screen pattern can be detected and properly removed because it is

datasets used in this paper are the LG2200 dataset, it contains 116,564 images from 676

intrinsically regular and periodic. In some sense, the screen signal can be associated with

people, and CASIA-Iris-Thousand dataset which contains 20,000 images from 1000 people.

some kinds of periodical noise. The main differences are that such "noisy" values cannot be

70% of the data is used for training and 30% for classification. The different

completely removed because it also carries out the original signal. Preserving, of course, the

softwares/libraries used in this paper are USIT v2.2 for image segmentation and

right amountof low frequency components it is possible to properly search the anomalous

normalization, PyTorch for training the CNN and LIBSVM for the SVM classifier.

peaks and delete them in a suitable way. These peaks are characterized by their location at a given distance from the DC component. In this manner the main low-pass component is preserved and the final recovered image is not too blurry.

In [20], the authors applied frequency domain filters to generate enhanced images. The simulation outputs show noise reduction, contrast enhancement, smoothening and sharpening of the enhanced image. In frequency domain methods, the image is first transferred into the frequency domain, and all enhancement operations are performed on the Fourier transform of the image. The procedure to enhance an image using a frequency domain technique is: transform the input image into the Fourier domain, multiply the Fourier-transformed image by a filter, and finally take the inverse Fourier transform to obtain the enhanced image.

The steps employed by the authors of [21] in training the iris classifier are: preprocessing the dataset, which includes image segmentation and normalization; using CNNs to extract features from the images; and finally using a support vector machine (SVM) for classification. In the first step, image segmentation is performed using one of the most commonly used circle detectors, the integro-differential operator. This operator detects circular edges by iteratively searching over the parameters (x0, y0, r), which isolates the iris region of the eye from the eyelashes, eyelids and sclera. Because the pupil contracts and dilates, the size of the iris can vary from image to image; this variation is accounted for in the normalization step, where the segmented iris region is mapped to a fixed rectangular region using the rubber sheet model. This is done by mapping the iris region from raw Cartesian coordinates to dimensionless polar coordinates. Normalization has the additional benefit of removing the rotations of the eye.

For feature extraction, the normalized iris images are fed into the CNN feature extraction module, where five CNNs (AlexNet, VGG, Google Inception, ResNet and DenseNet) are used to extract features. Different layers in the CNN models represent the image differently, with later layers encoding finer and more abstract information and earlier layers retaining coarser information. The feature vectors extracted by the CNNs are fed into the SVM classifier; a multi-class SVM is used with a "one-against-all" strategy.

The performance of the system is reported using the Recognition Rate, calculated as the proportion of correctly classified samples at a predefined False Acceptance Rate of 0.1%. The Gabor phase-quadrant feature descriptor is used as the baseline; its accuracy is 91.1% on LG2200 and 90.7% on CASIA-Iris-Thousand. The authors found that among the five CNNs, DenseNet achieved the highest accuracy, 98.7% on LG2200 and 98.8% on CASIA-Iris-Thousand. ResNet and Inception achieved accuracies of 98% and 98.2% on LG2200 and CASIA-Iris-Thousand, while VGG achieved the lowest, 92.7% and 93.1%, due to its simple architecture. Advantages: The authors achieved higher accuracy than traditional methods. CNN-based classifiers are easier to use in large-scale applications and are more secure for use as biometric locks. Disadvantages: The computational cost of training CNNs is much higher than that of traditional methods, and in real-time applications traditional methods are faster than CNN-based methods. Future works: Other neural network architectures, such as Deep Belief Networks (DBN) and Stacked Auto-Encoders (SAE), can be used for feature extraction in iris recognition.
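The rubber sheet normalization described for [21] can be sketched in pure Python. The sketch assumes that segmentation has already produced the pupil and iris boundaries as circles (x, y, r); the function name `rubber_sheet`, the 8 × 32 output grid and nearest-pixel sampling are illustrative choices, not details reported in [21].

```python
import math


def rubber_sheet(image, pupil, iris, radial_res=8, angular_res=32):
    """Unwrap the annulus between the pupil and iris circles into a
    radial_res x angular_res grid (Daugman-style rubber sheet model).

    image: list of rows of pixel values; pupil, iris: (x, y, r) circles.
    """
    (xp, yp, rp), (xi, yi, ri) = pupil, iris
    height, width = len(image), len(image[0])
    sheet = []
    for i in range(radial_res):
        r = i / (radial_res - 1)  # dimensionless radius in [0, 1]
        row = []
        for j in range(angular_res):
            theta = 2 * math.pi * j / angular_res  # angle in [0, 2*pi)
            # Points on the pupil and iris boundaries at this angle.
            x_in, y_in = xp + rp * math.cos(theta), yp + rp * math.sin(theta)
            x_out, y_out = xi + ri * math.cos(theta), yi + ri * math.sin(theta)
            # Linear interpolation between the two boundaries.
            x = (1 - r) * x_in + r * x_out
            y = (1 - r) * y_in + r * y_out
            # Nearest-pixel sampling, clipped to the image borders.
            col = min(max(int(round(x)), 0), width - 1)
            row_idx = min(max(int(round(y)), 0), height - 1)
            row.append(image[row_idx][col])
        sheet.append(row)
    return sheet


# Synthetic 21 x 21 "eye": value 1 near the centre, 2 further out.
eye = [[1 if math.hypot(x - 10, y - 10) < 5 else 2 for x in range(21)] for y in range(21)]
sheet = rubber_sheet(eye, pupil=(10, 10, 2), iris=(10, 10, 8))
```

Each row of the output corresponds to one dimensionless radius between the pupil boundary (row 0) and the iris boundary (last row), so irises of different sizes map to grids of the same shape.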

IJSER © 2017

http://www.ijser.org
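The three-step frequency-domain procedure summarized for [20] (forward transform, multiplication by a filter, inverse transform) can be sketched in pure Python. The naive O(N⁴) DFT is used only to keep the example dependency-free (in practice `numpy.fft.fft2` and `numpy.fft.ifft2` would be used), and the ideal low-pass mask is an illustrative assumption; the text above does not state which filters [20] used.

```python
import cmath


def dft2(img):
    """Naive 2-D discrete Fourier transform of a list-of-rows image."""
    rows, cols = len(img), len(img[0])
    return [[sum(img[x][y] * cmath.exp(-2j * cmath.pi * (u * x / rows + v * y / cols))
                 for x in range(rows) for y in range(cols))
             for v in range(cols)]
            for u in range(rows)]


def idft2(spec):
    """Inverse of dft2, including the 1/(rows*cols) normalisation."""
    rows, cols = len(spec), len(spec[0])
    return [[sum(spec[u][v] * cmath.exp(2j * cmath.pi * (u * x / rows + v * y / cols))
                 for u in range(rows) for v in range(cols)) / (rows * cols)
             for y in range(cols)]
            for x in range(rows)]


def ideal_lowpass(rows, cols, cutoff):
    """1 for frequencies within `cutoff` of the DC term (index (0, 0)), else 0."""
    def signed(k, n):  # index k -> signed frequency, so high indices wrap to negatives
        return k if k < n / 2 else k - n
    return [[1.0 if (signed(u, rows) ** 2 + signed(v, cols) ** 2) ** 0.5 <= cutoff else 0.0
             for v in range(cols)]
            for u in range(rows)]


def frequency_filter(img, mask):
    spectrum = dft2(img)                                   # 1. to the Fourier domain
    filtered = [[s * m for s, m in zip(s_row, m_row)]      # 2. multiply by the filter
                for s_row, m_row in zip(spectrum, mask)]
    return [[value.real for value in row] for row in idft2(filtered)]  # 3. inverse transform


image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
smoothed = frequency_filter(image, ideal_lowpass(4, 4, cutoff=1))
```

Replacing each mask entry m by 1 - m gives the corresponding ideal high-pass (sharpening) filter.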

The authors of [22] constructed a dataset by first gathering 20 signatures from 20 different individuals; a month later, another batch of 20 signatures was gathered from 10 individuals. Each signature was given to a forger, who produced five imitations. The signatures are preprocessed by normalizing their alignment, size and length before feature extraction. First, each signature is aligned so that its starting point is set to (0, 0, 0, 0, 0); then an equation is used to normalize the alignment of the signatures; finally, the length and width of the signatures are normalized.

CNNs have two parts, an automatic feature extractor and a trainable classifier; in this paper only the feature extractor is used. In the CNN feature extractor, the convolution layer takes the normalized signature as its input, and the output of the CNN is a vector called the S-vector. The training data for the CNN is of four types: genuine and forged signatures (the S-vector in this case is able to detect forgery but is at risk of misclassifying genuine signatures); the subject's signatures and others' signatures; all original signatures; and the subject's original and forged signatures. The output of the CNN is used as the input of an autoencoder (AE). The scheme used in the paper is a five-layer AE trained with the same data as input and output. To test the AE, a new signature is given to it and the resulting vector is compared with the vector of the original signature; if the difference between them is less than a predefined threshold, the signature is accepted as valid.

The experimental results show a 13.7% increase in accuracy when CNN-based feature extraction is compared with other methods. Advantages: A CNN trained with forged and genuine signatures could generate an S-vector that achieved better results than other methods, and the trained model can be used in many different applications without retraining. Disadvantages: The computational cost of training CNNs is much higher than that of traditional methods. The signatures were captured on a smartphone, so the method cannot be used with images of signatures on paper. Future works: Identifying forged signatures drawn on paper.

In [23], the original hyperspectral image is first reduced using the PCA algorithm, which reduces the number of dimensions required to represent the image by keeping only its useful features. A probability weight distribution mechanism, called the attention mechanism, is used to improve the quality of hidden layer features: it calculates the features of remote sensing images input at different times and focuses on the features relevant to target recognition. Next, a multiscale convolution kernel is used to alleviate the loss of features caused by traditional convolution kernels; this multiscale kernel mines features at multiple scales simultaneously. A Bi-LSTM is then used to improve the correlation between deep features, which also mitigates the problems of exploding and vanishing gradients. The Bi-LSTM uses two LSTMs, one running forward and one backward, to strengthen the semantic information between deep features.

Three datasets were used in this paper: Indian Pines (145 × 145 pixels, reduced to 100 dimensions with PCA), Pavia University scene (610 × 340 pixels, reduced to 50 dimensions with PCA) and Salinas (512 × 217 pixels, reduced to 29 dimensions with PCA). Overall accuracy, average accuracy and the kappa coefficient are used as the performance metrics. The experiment was repeated 10 times and the highest value was taken each time. The proposed AML algorithm was compared with AlexNet, ResNet, DenseNet, FSSNET, SAGP and PRAN. On the Indian Pines dataset AML was the best algorithm, although all of the algorithms were within 1% to 8% of each other on the performance metrics. On the Pavia University dataset, AML was closely followed by PRAN, and on the Salinas dataset AML again obtained the best results. Advantages: The proposed algorithm performs better than all other tested algorithms on all the tested datasets. Disadvantages: The proposed algorithm is more complex than other methods due to the required multistep processing. Future works: Test the algorithm on other datasets and try to reduce the complexity of the involved steps.

In [24], the authors note that point-based methods have efficient memory requirements, but their irregular memory access patterns introduce computation overheads. In point-based methods, neighboring points are not laid out contiguously in memory, and since the 3D points are scattered in R³, the neighbors of a point must be explicitly computed and cannot be directly indexed. Together, these two factors cost 30% to 50% of the total runtime. Voxel-based models have regular memory access and good memory locality, but they require high resolution in order not to lose information. On a GPU with 12 GB of memory, the largest possible resolution is 64, which leads to 42% information loss, while retaining more than 90% of the information requires at least 82 GB of memory, roughly 7 GPUs. This is why voxel-based models are prohibitive for deployment.
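The resolution problem of voxel-based models follows from the fact that the memory of a dense grid grows with the cube of its resolution. The sketch below assumes a single float32 feature map with 64 channels per voxel (an illustrative choice); it does not reproduce the 12 GB and 82 GB figures quoted from [24], which cover a full network, but it shows that each doubling of the resolution multiplies memory by eight.

```python
def dense_voxel_bytes(resolution, channels, bytes_per_value=4):
    """Bytes needed to store one dense resolution**3 grid with `channels`
    values per voxel (float32 by default)."""
    return resolution ** 3 * channels * bytes_per_value


MIB = 2 ** 20
for res in (32, 64, 128):
    print(res, dense_voxel_bytes(res, channels=64) / MIB, "MiB")
# 32 8.0 MiB
# 64 64.0 MiB
# 128 512.0 MiB
```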

The model proposed by the authors combines the advantages of point-based and voxel-based methods. It does so by disentangling fine-grained feature transformation from coarse-grained neighbor aggregation, so that each branch can be implemented efficiently and effectively. Points are transformed into low-resolution voxel grids, voxel-based convolutions are then performed, and finally the result is devoxelized to get the points back.

The method was tested on the following tasks: object part segmentation, indoor scene segmentation and 3D object detection. The proposed methodology was found to be superior in all the tested tasks, with lower latency and GPU memory consumption. Advantages: Better results with lower latency and memory consumption. Disadvantages: More testing is needed to further confirm the results. Future works: Testing on more sophisticated tasks to better understand the advantages and disadvantages of the proposed model.
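The voxelize, convolve and devoxelize path described above can be sketched in pure Python. This is an illustration under simplifying assumptions, not the authors' implementation: features are scalars, coordinates are pre-normalized to [0, 1), a 3 × 3 × 3 mean over occupied neighbours (the hypothetical `neighbourhood_mean`) stands in for a learned 3D convolution, devoxelization uses nearest-voxel lookup rather than the trilinear interpolation used in [24], and the fine-grained point branch that the model fuses with this output is omitted.

```python
from collections import defaultdict


def voxel_index(point, resolution):
    """Integer voxel cell of a point whose coordinates lie in [0, 1)."""
    return tuple(min(int(c * resolution), resolution - 1) for c in point)


def voxelize(points, features, resolution):
    """Average the features of all points that fall into the same voxel."""
    sums, counts = defaultdict(float), defaultdict(int)
    for point, feature in zip(points, features):
        key = voxel_index(point, resolution)
        sums[key] += feature
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


def neighbourhood_mean(grid):
    """Stand-in for a 3x3x3 voxel convolution: mean over occupied neighbours."""
    out = {}
    for (i, j, k) in grid:
        values = [grid[(i + di, j + dj, k + dk)]
                  for di in (-1, 0, 1) for dj in (-1, 0, 1) for dk in (-1, 0, 1)
                  if (i + di, j + dj, k + dk) in grid]
        out[(i, j, k)] = sum(values) / len(values)
    return out


def devoxelize(points, grid, resolution):
    """Map voxel values back to points by nearest-voxel lookup."""
    return [grid[voxel_index(point, resolution)] for point in points]


points = [(0.10, 0.10, 0.10), (0.12, 0.10, 0.10), (0.90, 0.90, 0.90)]
features = [1.0, 3.0, 10.0]
grid = neighbourhood_mean(voxelize(points, features, resolution=4))
print(devoxelize(points, grid, resolution=4))  # [2.0, 2.0, 10.0]
```

The first two points share a voxel and therefore receive the same averaged value, which is exactly the loss of fine detail that the separate point branch is meant to compensate for.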


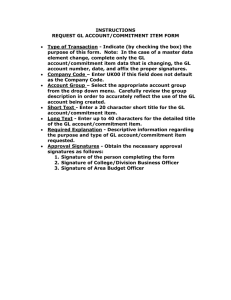

the most pivotal steps in any Machine Learning

model as the performance of the model greatly

3 CUSTOM DATASET

depends on how well processed the dataset is. For

In this final part of the project we have conducted

an exploratory data analysis and created a dataset

from VIT students whose signatures we have

acquired.

this purpose, we plan to have the following image

processing pipeline:

The raw image shall be read from the dataset

and converted to a grayscale image forapplying

morphological transformations.

These are few samples of the custom dataset that

we have created.

The rest of the raw dataset can be viewed from

the link given below:

https://drive.google.com/drive/folders/16tyIeylDF

19E4OjiJzwoUMrFGfQPHoq5?usp=sharing

1.

Noise removal can be achieved by

incorporating morphological

transformations.

2.

The image is then converted to a

binary image to have just pixel

values in the set{0,1} where 0 stands

for a black pixel and 1 stands for a

white one. (binarization)

4 IMAGE ACQUISITION

3.

The resultant pepper noise is removed

using a median filter. (median)

4.

The image is then inverted and

forwarded to the ML model.

The first step for any deep learning model is to

gather data and a lot of it. To do the same, we

researched datasets which were already prepared

and annotated, but we couldn’t find any such

6 PROPOSED METHOD

relevant datasets for our model. Hence, we

acquired 3 datasets in raw format which had to be

We have considered 10 deep learning models

processed and annotated later on.

having separate characteristics to train and

compare their output on our custom dataset

The primary purpose to use 3 datasets was to

have the required variety in our training data for

the model. These 3 datasets comprise of the

They are as follows :

VGG16

following features:

Dataset 1 has signatures in English without much

background noise.

VGG-16 is a deep convolutional neural network

architecture that was developed by the Visual

Dataset 2 has signatures in English with

background noise.

Geometry Group at the University of Oxford. It

Dataset 3 has signatures in Hindi and Bengali.

Large-Scale

was designed to participate in the ImageNet

Visual

Recognition

Challenge

(ILSVRC) in 2014, and it achieved outstanding

This gave us sufficient variety required to

performance

achieve a good enough accuracy and enable the

architecture consists of 16 layers, including 13

model to predict if a particular signature is genuine

convolutional layers, 5 maxpooling layers, and 3

or not in any language.

fully connected layers. The convolutional layers

on

this

task.

The

VGG-16

have small 3x3 filters, and they are arranged in a

way that makes the network deeper and narrower

5 IMAGE ENHANCEMENT

than previous architectures. VGG-16 has around

Pre-processing the data is considered to be one of

138 million parameters, making it a very large

IJSER © 2017

http://www.ijser.org

network that requires significant computational

layers

resources to train. However, it has proven to be

architecture of ResNet-50 is based on a series of

very effective at tasks such as image classification,

residual blocks, each of which contains two or

object detection, and image segmentation. VGG-

more

16 has been widely used as a pre-trained model

connection that bypasses the convolutional layers.

for transfer learning, where it is finetuned on new

The shortcut connections enable the information

datasets to perform specific tasks. It has also

to flow directly from the input of one block to the

served as a benchmark for comparing the

output of another block, which helps to alleviate

performance of newer architectures, such as

the vanishing gradient problem that can occur in

ResNet and Inception.

deep networks. This allows ResNet-50 to be much

VGG19

deeper than previous architectures, while still

VGG-19 is a deeper version of the VGG-16

maintaining good performance. ResNet-50 has

architecture, also developed by the Visual

been pre-trained on large-scale datasets, such as

Geometry Group at the University of Oxford. It

ImageNet, and has been shown to achieve state-

was introduced in the same paper as VGG16, and

of-the-art performance on a wide range of image

it consists of 19 layers, including 16 convolutional

classification tasks. It has also been used for

layers, 5 max-pooling layers, and 3 fully

transfer learning in a variety of computer vision

connected layers. The architecture of VGG-19 is

applications,

including

similar to VGG-16, with the only difference being

segmentation,

and

the number of convolutional layers. VGG-19 has

approximately 23 million parameters, ResNet-50

three additional convolutional layers compared to

is significantly smaller than VGG-16 and VGG-19,

VGG-16, which makes it even deeper and more

making it more computationally efficient.

complex. Similar to VGG-16, VGG-19 has 3x3

RESNET101

filters in all of its convolutional layers, which

ResNet-101 is a deeper version of the ResNet

makes

in

architecture, introduced by Microsoft Research in

capturing spatial features in images. However,

2016. Like ResNet-50, ResNet-101 is based on a

due to its increased depth, VGG-19 requires even

series of residual blocks, each of which contains

more computational resources to train and has

two or more convolutional layers and a shortcut

approximately 143 million parameters. VGG-19

connection. The main difference between ResNet-

has also been widely used as a pre-trained model

50 and ResNet-101 is the number of layers.

for transfer learning, especially in tasks such as

ResNet101 consists of 101 layers, including 100

image classification and object detection. Despite

convolutional layers and 1 fully connected layer.

its

This deeper architecture allows ResNet-101 to

the

architecture highly

complexity,

excellent

VGG-19

performance

has

demonstrated

fully

convolutional

connected

layers

image

and

object

layer.

a

The

shortcut

detection,

captioning.

With

capture more complex patterns and features in

classification benchmarks, and it remains a

images, and it has been shown to achieve even

popular

better performance than ResNet-50 on various

for

many

various

1

image

choice

on

effective

and

deep

learning

applications.

image classification tasks. ResNet-101 has been

RESNET50

pre-trained on large-scale datasets such as

ResNet-50 is a deep convolutional neural network

ImageNet and has been used as a pre-trained

architecture that was introduced by Microsoft

model for transfer learning in various computer

Research in 2015. The name ResNet comes from

vision applications, including object detection,

"residual network, " which refers to the use of

segmentation,

residual connections between layers to enable the

approximately 44 million parameters, ResNet-101

training of much deeper networks. ResNet-50

is larger and more computationally expensive

consists of 50 layers, including 49 convolutional

than ResNet-50, but it can provide even better

IJSER © 2017

http://www.ijser.org

and

image

captioning.

With

performance for more challenging tasks. Overall,

image classification and object recognition tasks.

ResNet-101

learning

Inception-v3 has also introduced several new

architecture that has demonstrated outstanding

techniques to improve the performance of the

performance on many image recognition and

network. For example, it uses factorized 7x7

computer vision tasks. Its ability to capture and

convolutions,

represent complex features has made it a popular

convolution into two smaller convolutions,

choice for a variety of deep learning applications.

reducing

RESNET152

improving

ResNet-152 is a deeper version of the ResNet

normalization and RMSprop optimization to

architecture,

Microsoft

improve training stability and speed. Inception-v3

Research in 2016. Like ResNet-50 and ResNet-101,

has been pre-trained on large-scale datasets such

ResNet-152 is based on residual blocks and

as ImageNet and has been shown to achieve state-

utilizes shortcut connections to enable the training

of-the-art

of very deep neural networks. The main difference

classification tasks. It has also been used for

between ResNet-152 and the other ResNet

transfer learning in a variety of computer vision

architectures is its depth. ResNet-152 consists of

applications,

152 layers, including 151 convolutional layers and

segmentation, and image captioning. Overall,

1 fully connected layer. This makes it one of the

Inception-v3

deepest neural networks that have been proposed

architecture that has demonstrated outstanding

for computer vision tasks. The additional layers in

performance on many computer vision tasks. Its

ResNet-152 enable it to capture even more

ability to capture both local features and global

complex features and patterns than ResNet-101,

patterns,

making it suitable for more challenging image

computational resources, has made it a popular

classification and object recognition tasks. It has

choice for a wide range of deep learning

been

applications.

is

a

also

shown

performance

powerful

introduced

to

on

achieve

various

deep

by

state-of-the-art

image

recognition

which

the

break

number

efficiency.

of

also

on

including

along

a

uses

various

object

powerful

with

its

a

7x7

parameters

It

performance

is

down

deep

efficient

and

batch

image

detection,

learning

use

of

INCEPTIONRESNETV2

benchmarks, including the ImageNet Large Scale

Inception-ResNet-v2 combines the multi-scale

Visual Recognition Challenge (ILSVRC). ResNet-

feature extraction of Inception with the residual

152 has also been widely used as a pre-trained

connections of ResNet. It consists of a series of

model for transfer learning in computer vision

Inception modules, each of which includes

applications

such

as

multiple parallel convolutional operations at

segmentation,

and

image

object

detection,

captioning.

With

different scales, along with residual connections

approximately 60 million parameters, ResNet-152

that bypass the convolutional layers. This

is larger and more computationally expensive

combination of techniques helps to improve the

than ResNet-50 and ResNet-101. However, its

accuracy of the network while maintaining its

ability to capture complex features and patterns

computational efficiency. Inception-ResNet-v2

makes it a powerful deep learning architecture for

also introduces new techniques to improve the

a wide range of computer vision tasks.

performance of the network, such as "dynamic

INCEPTIONV3

scaling" of the input images, which allows the

The Inception-v3 architecture consists of a series

network to adapt to images of different sizes. It

of Inception modules, each of which performs

also uses "label smoothing" during training, which

multiple parallel convolutional operations at

helps to reduce overfitting and improve the

different scales. The Inception module is designed

generalization ability of the network. Inception-

to capture both local features and global patterns

ResNet-v2 has been pre-trained on large-scale

in images, which makes it highly effective in

datasets such as ImageNet and has been shown to

IJSER © 2017

http://www.ijser.org

achieve state-of-the-art performance on various

MOBILENETV2

image classification and object recognition tasks.

One of the key improvements of MobileNetV2 is

It has also been used for transfer learning in a

the use of a new block structure called the

variety of computer vision applications, including

"inverted residual with linear bottleneck". This

object

image

block structure consists of a linear bottleneck

captioning. Overall, Inception-ResNet-v2 is a

layer, followed by a depthwise convolution layer,

powerful

that

and then a linear projection layer. This structure

combines the strengths of Inception and ResNet.

allows MobileNetV2 to capture both low-level

Its ability to capture both local features and global

and high-level features of the data, while still

patterns,

maintaining a high degree of computational

detection,

segmentation,

deep

learning

along

with

its

and

architecture

efficient

use

of

computational resources, has made it a popular

efficiency.

choice for a wide range of deep learning

activation function

applications.

convolutional activation", which reduces the

MOBILENET

computational cost of the pointwise convolution

MobileNet achieves this by using depthwise

by removing the need for an additional non-linear

separable convolutions, which consist of a

activation function. In addition, MobileNetV2

depthwise convolution followed by a pointwise

introduces several new techniques to further

convolution. The depthwise convolution applies a

improve its performance, including "shortcut

single filter to each input channel separately,

connections" between the bottleneck layers, which

while the pointwise convolution applies a 1x1

helps to reduce the vanishing gradient problem

convolution to combine the output of the

and improve the training stability of the network.

depthwise convolution. This approach reduces

It also uses "channel pruning" to reduce the

the number of parameters and computations

number of channels in the network without

required, while still allowing the network to

significantly impacting its accuracy. Overall,

capture complex patterns in the data. MobileNet

MobileNetV2 is a powerful deep learning

also uses "bottleneck" layers to further reduce the

architecture that has further improved the

computational complexity of the network. These

efficiency and performance of the MobileNet

layers use 1x1 convolutions to reduce the number

architecture. Its ability to capture both low-level

of input channels before applying the depthwise

and high-level features of the data, while still

separable

1x1

maintaining a high degree of computational

output

efficiency, makes it well-suited for a wide range of

convolution,

convolutions

again

to

and

then

expand

use

the

MobileNetV2

also

uses

a

new

called "linear pointwise

channels. MobileNet has been pre-trained on

mobile and embedded vision applications.

large-scale datasets such as ImageNet and has

NASNETLARGE

been

state-of-the-art

The NasNetLarge architecture consists of a series

performance on various image classification tasks.

of "normal" and "reduction" cells, each of which

It has also been used for transfer learning in a

contains multiple convolutional layers with

variety of computer vision applications, including

different filter sizes and dilation rates. The normal

object

facial

cells use skip connections to allow information to

recognition. Overall, MobileNet is a powerful

flow directly from earlier layers to later layers,

deep

been

while the reduction cells use max pooling to

optimized for mobile and embedded devices. Its

reduce the spatial size of the feature maps.

ability to achieve high accuracy with fewer

NasNetLarge

parameters and computational resources makes it

regularization" during training, which encourages

well-suited for a wide range of mobile and

diversity in the architectures produced by the

embedded vision applications.

search algorithm. This helps to prevent the

shown

to

detection,

learning

achieve

segmentation,

architecture

that

and

has

IJSER © 2017

http://www.ijser.org

also

includes

"path-level

algorithm from converging to a single, overfit

architecture and encourages the exploration of a

5

VGG16

wide range of architectures. NasNetLarge has

been pre-trained on large-scale datasets such as

ImageNet and has been shown to achieve state-ofthe-art

performance

on

various

image

classification tasks. It has also been used for

transfer learning in a variety of computer vision

applications,

including

object

detection,

VGG19

segmentation, and facial recognition. Overall,

NasNetLarge is a powerful deep learning

architecture that demonstrates the potential of

neural architecture search for automating the

process of architecture design. Its ability to

capture complex patterns in the data, along with

its high degree of computational efficiency, makes

it well-suited for a wide range of computer vision

RESNET50

applications.

RESNET101

RESNET152
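The saving from the depthwise separable convolutions described for MobileNet can be checked with a short parameter count. Ignoring biases, a standard k × k convolution from C_in to C_out channels has k²·C_in·C_out weights, while the depthwise-plus-pointwise pair has k²·C_in + C_in·C_out, a reduction by a factor of 1/C_out + 1/k². The 3 × 3, 256-to-256 layer below is an arbitrary example, not a layer taken from the models we trained.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k convolution mapping c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Depthwise k x k (one filter per input channel) + pointwise 1 x 1 (no bias)."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


k, c_in, c_out = 3, 256, 256
standard = standard_conv_params(k, c_in, c_out)          # 589,824 weights
separable = depthwise_separable_params(k, c_in, c_out)   # 67,840 weights
print(standard, separable, round(standard / separable, 2))  # 589824 67840 8.69
```

For 3 × 3 kernels the saving approaches a factor of 9 as the number of output channels grows, which is why the depthwise separable design dominates MobileNet's efficiency.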

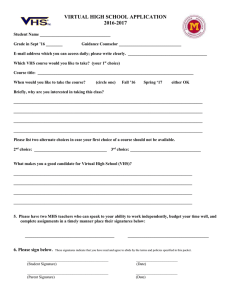
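The image processing pipeline listed in Section 5 (grayscale conversion, morphological noise removal, binarization, median filtering and inversion) can be sketched in pure Python on a list-of-rows image. The specific operations are assumptions made for illustration, since Section 5 does not specify them: a 3 × 3 grayscale opening for the morphological step, a fixed threshold of 128 for binarization and a 3 × 3 median filter. A real implementation would more likely use OpenCV or scikit-image.

```python
from statistics import median_low


def to_grayscale(rgb):
    """Convert rows of (r, g, b) tuples to luma using ITU-R BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def window(img, i, j):
    """Values in the 3x3 neighbourhood of (i, j), clipped at the borders."""
    h, w = len(img), len(img[0])
    return [img[y][x]
            for y in range(max(i - 1, 0), min(i + 2, h))
            for x in range(max(j - 1, 0), min(j + 2, w))]


def filter3x3(img, reducer):
    """Replace every pixel by reducer(its 3x3 neighbourhood)."""
    return [[reducer(window(img, i, j)) for j in range(len(img[0]))]
            for i in range(len(img))]


def preprocess(rgb, threshold=128):
    gray = to_grayscale(rgb)
    # 1. Noise removal: grayscale opening (erosion = min, then dilation = max).
    opened = filter3x3(filter3x3(gray, min), max)
    # 2. Binarization: 0 = black pixel, 1 = white pixel.
    binary = [[1 if v >= threshold else 0 for v in row] for row in opened]
    # 3. Median filter removes isolated black (pepper) pixels; median_low
    #    always returns an actual pixel value, so the image stays binary.
    cleaned = filter3x3(binary, median_low)
    # 4. Inversion: ink becomes 1, background becomes 0.
    return [[1 - v for v in row] for row in cleaned]


WHITE, BLACK = (255, 255, 255), (0, 0, 0)
# Synthetic 7x7 page with a 3-pixel-thick horizontal stroke (rows 2-4).
stroke_page = [[BLACK if 2 <= i <= 4 else WHITE for _ in range(7)] for i in range(7)]
# Synthetic 5x5 page with a single isolated black speck.
speck_page = [[BLACK if (i, j) == (2, 2) else WHITE for j in range(5)] for i in range(5)]
print(preprocess(speck_page))  # all zeros: the speck is removed
```

The opening leaves dark ink intact, and the median filter then removes isolated dark specks while keeping strokes thicker than one pixel.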

7 RESULTS AND DISCUSSION

The confusion matrix and the ROC curve of each model are presented below.

Confusion matrix and ROC curve panels: VGG16, VGG19, RESNET50, RESNET101, RESNET152, INCEPTIONV3, INCEPTIONRESNETV2, MOBILENET, MOBILENETV2, NASNETLARGE.

Using MobileNet, we try to distinguish fraudulent signatures from real signatures:

Identified fraud signature

Identified real signature

PLOT OF MODEL VS. MODEL ACCURACY
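The confusion matrices and ROC curves in this section can be computed from a model's predicted probabilities as sketched below. This is a minimal pure-Python illustration, not the evaluation code used for the figures: label 1 is assumed to mean "forged", the 0.5 decision threshold is an assumption, and the four-sample example is synthetic. scikit-learn provides equivalent `confusion_matrix`, `roc_curve` and `roc_auc_score` functions in `sklearn.metrics`.

```python
def confusion_counts(labels, scores, threshold=0.5):
    """Return (tp, fp, tn, fn), treating label 1 ("forged") as the positive class."""
    tp = fp = tn = fn = 0
    for label, score in zip(labels, scores):
        predicted = 1 if score >= threshold else 0
        if predicted and label:
            tp += 1
        elif predicted:
            fp += 1
        elif label:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def roc_points(labels, scores):
    """(false positive rate, true positive rate) for every distinct threshold."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp, fp, _, _ = confusion_counts(labels, scores, threshold)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the ROC curve using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


labels = [1, 0, 1, 0]            # synthetic ground truth (1 = forged)
scores = [0.9, 0.8, 0.4, 0.2]    # synthetic model probabilities of "forged"
print(confusion_counts(labels, scores))   # (1, 1, 1, 1)
print(auc(roc_points(labels, scores)))    # 0.75
```

An AUC of 1.0 means every forged signature is scored above every genuine one; 0.5 corresponds to random guessing.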

8 CONCLUSIONS

In this project, by utilizing various image processing methods such as image acquisition, image enhancement, binarization, noise removal, image inversion, image restoration, cropping and resizing, we observed how deep learning enables the development of highly capable machine learning models. We compared 10 models on a custom dataset made from the signatures of VIT students and recorded the results. From the obtained results, the MobileNet model performs best on our custom dataset. Finally, with a working knowledge of the Python programming language and of deep learning concepts, we were able to develop a fairly accurate model that can distinguish between real and fraudulent signatures.

Thus, with this project we conclude that image processing is an advanced field of computer vision and deep learning and can be used extensively in many modern applications. With proper utilization, this field has huge potential to transform the way humans interact with their surroundings and with each other. This project has opened new horizons for us and has given us strong motivation to further explore the field of deep learning and image processing.

REFERENCES

1. Wei, W., et al., 2010. Estimation of image rotation angle using interpolation-related spectral signatures with application to blind detection of image forgery. IEEE Transactions on Information Forensics and Security, 5(3), pp.507-517.

2. Jagtap, A.B., et al., 2020. Verification of genuine and forged offline signatures using Siamese Neural Network (SNN). Multimedia Tools and Applications, 79(47), pp.35109-35123.

3. Kao, H.-H. and Wen, C.-Y., 2020. An offline signature verification and forgery detection method based on a single known sample and an explainable deep learning approach. Applied Sciences, 10(11), p.3716.

4. Yapıcı, M.M., Tekerek, A. and Topaloğlu, N., 2021. Deep learning-based data augmentation method and signature verification system for offline handwritten signature. Pattern Analysis and Applications, 24(1), pp.165-179.

5. Berlin, M.A., et al., 2021. Human signature verification using CNN with deployment using RoughDjango framework. Annals of the Romanian Society for Cell Biology, pp.2506-2516.

6. Melo, V.K., Bezerra, B.L.D., Impedovo, D., Pirlo, G. and Lundgren, A., 2019. Deep learning approach to generate offline handwritten signatures based on online samples. IET Biometrics, 8(3), pp.215-220.

7. Khan, M.J., Yousaf, A., Abbas, A. and Khurshid, K., 2018. Deep learning for automated forgery detection in hyperspectral document images. Journal of Electronic Imaging, 27(5), p.053001.

8. Pashine, S., Dixit, R. and Kushwah, R., 2021. Handwritten digit recognition using machine and deep learning algorithms. arXiv preprint arXiv:2106.12614.

9. Gideon, S.J., Kandulna, A., Kujur, A.A., Diana, A. and Raimond, K., 2018. Handwritten signature forgery detection using convolutional neural networks. Procedia Computer Science, 143, pp.978-987.

10. Diaz, M., Ferrer, M.A., Impedovo, D., Malik, M.I., Pirlo, G. and Plamondon, R., 2019. A perspective analysis of handwritten signature technology. ACM Computing Surveys (CSUR), 51(6), pp.1-39.

11. Alajrami, E., Ashqar, B.A., Abu-Nasser, B.S., Khalil, A.J., Musleh, M.M., Barhoom, A.M. and Abu-Naser, S.S., 2020. Handwritten signature verification using deep learning. International Journal of Academic Multidisciplinary Research (IJAMR), 3(12).

12. Tahir, N.M., Ausat, A.N., Bature, U.I., Abubakar, K.A. and Gambo, I., 2021. Off-line handwritten signature verification system: artificial neural network approach. International Journal of Intelligent Systems and Applications, 13, pp.45-57.

13. Monga, V., Li, Y. and Eldar, Y.C., 2021. Algorithm unrolling: Interpretable, efficient deep learning for signal and image processing. IEEE Signal Processing Magazine, 38(2), pp.18-44.

14. Mikołajczyk, A. and Grochowski, M., 2018, May. Data augmentation for improving deep learning in image classification problem. In 2018 International Interdisciplinary PhD Workshop (IIPhDW) (pp. 117-122). IEEE.

15. Salvi, M., Acharya, U.R., Molinari, F. and Meiburger, K.M., 2021. The impact of pre- and post-image processing techniques on deep learning frameworks: A comprehensive review for digital pathology image analysis. Computers in Biology and Medicine, 128, p.104129.

16. Alajrami, E., Ashqar, B.A.M., Abu-Nasser, B.S., Khalil, A.J., Musleh, M.M., Barhoom, A.M. and Abu-Naser, S.S. Handwritten signature verification using deep learning.

17. Kao, H.-H. and Wen, C.-Y. An offline signature verification and forgery detection method based on a single known sample and an explainable deep learning approach.

18. Poddar, J., Parikh, V. and Bharti, S.K. Offline signature recognition and forgery detection using deep learning.

19. Battiato, S. and Stanco, F. A new de-screening technique in the frequency domain.

20. Dewangan, S. and Sharma, A.K. Image smoothening and sharpening using frequency domain filtering technique.

21. Nguyen, K., Fookes, C., Ross, A. and Sridharan, S. Iris recognition with off-the-shelf CNN features: A deep learning perspective.

22. Nam, S., Park, H., Seo, C. and Choi, D. Forged signature distinction using convolutional neural network for feature extraction.

23. Wang, Z., Zou, C. and Cai, W. Small sample classification of hyperspectral remote sensing images based on sequential joint deeping learning model.

24. Liu, Z., Tang, H., Lin, Y. and Han, S. Point-Voxel CNN for efficient 3D deep learning.

25. Jiao, L. and Zhao, J. A survey on the new generation of deep learning in image processing.