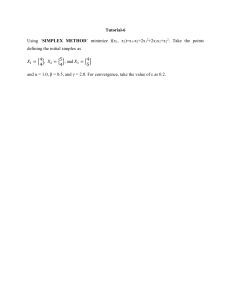

Introduction to Operations Research Textbook, 11th Edition
