Transportation Research Part C

Towards Efficient Airline Disruption Recovery with Deep Learning

--Manuscript Draft--

Manuscript Number:

TRC-22-01050R1

Article Type:

Research Paper

Keywords:

Airline Scheduling; Disruptions; Deep Reinforcement Learning

Corresponding Author:

Xiaoqian Sun

Beijing, China

First Author:

Yida Ding

Order of Authors:

Yida Ding

Sebastian Wandelt

Guohua Wu

Yifan Xu

Xiaoqian Sun

Abstract:

Disruptions to airline schedules precipitate flight delays/cancellations and significant

losses for airline operations. The goal of the integrated airline recovery problem is to

develop an operational tool that provides the airline with an instant and cost-effective

solution concerning aircraft, crew members and passengers in the face of emerging

disruptions. In this paper, we formulate a decision recommendation framework which

incorporates various recovery decisions including aircraft and crew rerouting,

passenger reaccommodation, departure holding, flight cancellation and cruise speed

control. Given the computational hardness of solving the mixed-integer nonlinear

programming (MINP) model by the commercial solver (e.g., CPLEX), we establish a

novel solution framework by incorporating Deep Reinforcement Learning (DRL) into the

Variable Neighborhood Search (VNS) algorithm with well-designed neighborhood

structures and state evaluator. We utilize Proximal Policy Optimization (PPO) to train

the stochastic policy exploited to select neighborhood operations given the current

state throughout the Markov Decision Process (MDP). Experimental results show that

the objective value generated by our approach is within a 1.5% gap with respect to the

optimal/close-to-optimal objective of the CPLEX solver for the small-scale instances,

with significant improvement regarding runtime. The pre-trained DRL agent can

leverage features/weights obtained from the training process to accelerate the arrival

of objective convergence and further improve solution quality, which exhibits the

potential of achieving Transfer Learning (TL). Given the inherent intractability of the

problem on practical size instances, we propose a method to control the size of the

DRL agent’s action space to allow for an efficient training process. We believe our study

contributes to the efforts of airlines in seeking efficient and cost-effective recovery

solutions.

Suggested Reviewers:

Henning Preis

TU Dresden

henning.preis@tu-dresden.de

Dr. Preis recently published in TRC on airline schedule recoveries in aviation.

Luis Cadarso

Rey Juan Carlos University

luis.cadarso@urjc.es

Dr. Cadarso has recently published in TRC on robust integrated airline planning.

Sameer Alam

Nanyang Technological University

sameeralam@ntu.edu.sg

Dr. Alam has recently published in TRC on machine learning in aviation.

Yassine Yaakoubi

McGill University

Powered by Editorial Manager® and ProduXion Manager® from Aries Systems Corporation

yassine.yaakoubi@mcgill.ca

Dr. Yaakoubi is an expert in Machine Learning for aviation optimization problems,

particularly crew pairing.

Response to Reviewers:

Please see our attached author response document.

Cover Letter

Dear editors,

This is the revision of our manuscript “Towards Efficient Airline Disruption Recovery with Reinforcement Learning”

(Manuscript ID: TRC-22-01050R1). We thank you and the two reviewers for the time spent on helping us to improve our

manuscript. In our author response, we describe in detail how we addressed the comments of the reviewers. We hope that these

changes allow you to accept our submission for your journal Transportation Research Part C.

Best regards,

Yida Ding, Sebastian Wandelt, Guohua Wu, Yifan Xu, and Xiaoqian Sun,

Response to Reviewers

Authors’ Response to Reviews of

Towards Efficient Airline Disruption Recovery with Reinforcement Learning

RC: Reviewers’ Comment,

AR: Authors’ Response,

Manuscript Text

Reviewer #1

General comments to the authors

RC:

Disruptions to airline schedules is a cause of significant losses for airline operations. In this regard,

the current manuscript proposes a decision recommendation framework for the airline recovery problem.

The underlying model is able to incorporate aircraft and crew rerouting, passenger re-accommodation,

departure holding, flight cancellation, while considering aircraft cruise speed decisions. While the research aims to solve a problem of high operational value, there are the following questions/ points which

should be addressed, in order to better understand this research work:

AR: Thank you for taking the time to review our manuscript and for the very constructive feedback. We have

addressed your concerns carefully in this revised version; the details are listed in our responses below:

Major comment #1

RC:

The literature review is documentative but does not include any discussions on the short comings of the

existing literature.

AR: Thank you for your suggestion. We have provided a discussion on the shortcomings of the existing literature

on the aircraft recovery problem and the integrated airline recovery problem. An excerpt from Section 2 (Literature

Review) is shown below:

The modelling and computational techniques for airline disruption management have been rapidly

developed in the literature. Previous efforts typically addressed irregular operations in a sequential

manner, such that issues related to the aircraft fleet, crew, and passengers are resolved respectively

(Barnhart, 2009). Section 2.1 first discusses research on the aircraft recovery problem, after which

Section 2.2 reviews the airline recovery literature covering multiple resources, and Section 2.3 discusses

the literature on the reinforcement learning approach for air transport management.

2.1. Aircraft recovery

As aircraft are the most constraining and expensive resource in aviation management, previous efforts

mainly focused on aircraft recovery which can be formulated as follows: given a flight schedule and

a set of disruptions, determine which flights to be cancelled or delayed, and re-assign the available

aircraft to the flights such that the disruption cost is minimized (Hassan et al., 2021).

Teodorović and Guberinić (1984) were the first authors that discussed the minimization of passenger

delays in the Aircraft Recovery Problem (ARP). The problem is formulated as a network flow problem

in which flights are represented by nodes and arcs indicate time losses per flight. However, aircraft

maintenance constraints are ignored and they assume that all itineraries contain only a single flight

leg. They utilized the branch & bound method to solve the problem with a network of eight flights

operated by three aircraft. This work was further extended in Teodorović and Stojković (1995) in which

constraints including crew and aircraft maintenance were incorporated into the model. Thengvall et al.

(2003) presented a multi-commodity flow model that considers flight delay, flight cancellation and

through flights with flight copies and extra arcs. A tailored heuristic is adopted to solve the Lagrangian

relaxation model. However, as the authors pointed out, a number of practical constraints cannot be

embedded in the model due to their scenario and time-dependent nature.

In Eggenberg et al. (2010), a general modeling approach based on the time-band network was proposed.

Each entity (i.e., an aircraft, a crew member or a passenger) is assigned with a specific recovery network

and the model considers entity-specific constraints. The column generation algorithm is utilized to

solve the majority of instances within 100 s, but the paper reports that the runtime exceeds one hour for

the worst case, which may not meet the demand of airlines in seeking instant recovery solutions. Liang

et al. (2018) incorporated exact delay decisions (instead of flight copies) based on airport slot resources

constraints, which is similar to our approach concerning the modeling of recovery decisions. Aircraft

maintenance swaps and several other recovery decisions are also implemented through the pricing

problem of column generation. However, realistic factors such as maintenance capacity at airports are

not considered in the proposed model.

2.2. Integrated recovery

In the recent decade, there has been a trend towards integrating more than one resource in recovery

models. The interdependencies between aircraft, crew, and passengers cannot be fully captured by

sequential optimization approaches, leading to sub-optimal recovery solutions. In this section, we

discuss some of these integrated models.

Petersen et al. (2012) proposed an integrated optimization model in which four different stages are

distinguished throughout the recovery horizon: schedule recovery, aircraft assignment, crew pairing,

and passenger assignment. The schedule is repaired by flying, delaying or cancelling flights, and

aircraft are assigned to the new schedule. Then, the crew is assigned to the aircraft rotations and the

passenger recovery ensures that all passengers reach their final destination. Upon testing their model on

data from a regional US carrier with 800 daily flights, the authors found that their integrated approach

always guarantees a feasible solution. However, the runtime of the proposed solution ranges between

20–30 min, which may not meet the demand of airlines seeking efficient recovery solutions. Besides,

the framework still works in a sequential manner rather than a fully integrated one, which may lead to

sub-optimal recovery solutions.

The time-space network representation has been frequently adopted in the integrated recovery problem

(Bratu and Barnhart, 2006; Lee et al., 2020; Marla et al., 2017), where the nodes in time-space networks

are associated with airport and time, and an arc between two nodes indicates either a flight or a ground

arc. Marla et al. (2017) incorporated flight planning (i.e., cruise speed control) to the set of traditional

recovery decisions, which is also considered in our approach. Departure time decisions are modelled

by creating copies of flight arcs, while the cruise speed decisions are modelled by creating a second set

of flight copies for different cruise speed alternatives for each departure time alternative. This approach

requires discretization of cruise speed options and greatly increases the size of generated networks,

leading to computationally intractable issues when dealing with large-scale instances. Accordingly, the

authors proposed an approximation model and tested their approach on data from a European airline

with 250 daily flights, concluding that their approach reduces total costs and passenger-related delay

costs for the airline.

In an attempt to avoid discretization of cruise speed options and reduce the size of generated networks,

Arıkan et al. (2017) managed to formulate the recovery decisions (e.g., flight timing, aircraft rerouting,

and cruise speed control) over a much smaller flight network with flight timing decisions in continuous

settings. Since all entities (aircraft, crew members and passengers) are transported through a common

flight network, the interdependencies among different entity types can be clearly captured. Based

on Aktürk et al. (2014), the authors implemented aircraft cruise speed control and proposed a conic

quadratic mixed-integer programming formulation. The model can evaluate the passenger delay costs

more realistically since it explicitly models each individual passenger. The authors test the model on a

network of a major U.S. airline and the optimality gap and runtime results show the feasibility of their

approach. In this paper, we propose our integrated recovery model based on Arıkan et al. (2017), and

we further introduce a set of realistic constraints regarding crew pairing process and passenger itinerary

generation. In general, the approach greatly enhances efficiency and scalability as compared to other

state-of-the-art studies.

In Hu et al. (2016), aircraft and passenger recovery decisions were integrated and a mixed-integer

programming model was developed based on the passenger reassignment relationship. A randomized

adaptive search algorithm was proposed accordingly to derive a recovery solution with reduced cost

and passenger disruption. Cadarso and Vaze (2021) took cruise speed as a decision variable with a

linear extra fuel cost in the objective function. Additionally, potential passenger losses are taken into

account and related airfare revenue is optimized as well in the presented integrated model.

2.3. Reinforcement learning approach for air transport management

In recent years, there has been growing interest in the use of Reinforcement Learning (RL) for solving

Air Transport Management (ATM) problems, which involves decision-making under uncertainty. RL

algorithms can learn from past experiences and make decisions in real time, allowing them to quickly

adapt to changing circumstances. Besides, RL algorithms can identify and exploit patterns in the data

to make more informed decisions, leading to improved efficiency compared to rule-based or heuristic

methods.

Ruan et al. (2021) used the RL-based Q-learning algorithm to solve the operational aircraft maintenance

routing problem. Upon benchmarking with several meta-heuristics, they concluded that the proposed

RL-based algorithm outperforms these meta-heuristics. Hu et al. (2021) proposed an RL-driven strategy

for solving the long-term aircraft maintenance problem, which learned the maintenance decision policies

for different aircraft maintenance scenarios. Experimental results on several simulated scenarios show

that the RL-driven strategy performs well in adjusting its decision principle for handling the variations

of mission reward. Kravaris et al. (2022) proposed a deep multi-agent RL method to handle demand

and capacity imbalances in actual air traffic management settings with many agents, which addressed

the challenges of scalability and complexity in the proposed problem. Pham et al. (2022) utilized the

RL approach to solve the conflict resolution problem with surrounding traffic and uncertainty in air

traffic management. The conflict resolution problem is formulated as a sequential decision-making

problem and they used the deterministic policy gradient algorithm to deal with a complex and large

action space.

To overcome the limitations of previous integrated recovery studies, we propose the RL approach in

which we interpret the problem as a sequential decision-making process and improve the behavior of

the agent by trial and error. Based on the aforementioned literature on applying RL to ATM problems,

we summarize three main advantages of using RL for the integrated recovery problem. First, since

RL is a typical agent-based model, the policy that the agent learned for a set of disruptions can be

reused for other sets of disruptions. Reapplying the learned policy could accelerate the convergence

process as compared to learning the policy from scratch (Lee et al., 2022; Šemrov et al., 2016), which

is also in accordance with our experimental results. Second, since RL is a simulation-based method,

it can handle complex assumptions in the integrated recovery problem, where many realistic conditions

and factors could affect airline operations (Lee et al., 2022). By incorporating these conditions in the

simulation (i.e., environment), RL could solve the integrated recovery problem under realistic situations.

Third, RL offers more flexibility in designing reward functions, which can accommodate a wider range of objectives than

traditional operations research methods. In our study, we define the reward as the change of objective

value after state transition. This function turns into a penalty if the next-state objective is higher than

the current-state objective.

Major comment #2

RC:

Is there any assumption in the type of aircraft considered and what model is used to calculate the fuel

burn (additional fuel cost)?

AR: Thank you for your question. We have clarified your point in our revised manuscript and listed our explanation

below:

Our approach considers the following types of commercial aircraft: single-aisle commercial jets, wide-body

commercial jets and regional jets. We adopt the cruise stage fuel flow model developed by the Base of

Aircraft Data (BADA) project of EUROCONTROL, the air traffic management organization of Europe (Poles,

2009). For a given mass and altitude, an aircraft’s cruise stage fuel burn rate (kg/min) as a function of speed $v$ (km/min) can be calculated as

$$f_{cr}(v) = c_1 v^3 + c_2 v^2 + \frac{c_3}{v} + \frac{c_4}{v^2}$$

where the coefficients $c_i > 0$, $i = 1, \dots, 4$, are expressed in terms of aircraft-specific drag and fuel consumption coefficients as well as the mass of aircraft, air density at a given altitude, and gravitational acceleration. These coefficients can be obtained from the BADA user manual for 399 aircraft types (Nuic, 2010). Assuming that the distance flown at cruise stage is fixed at $d_{cr}$, the total fuel consumption at cruise stage is formulated as

$$F(v) = \frac{d_{cr}}{v} \cdot f_{cr}(v) = d_{cr} \left( c_1 v^2 + c_2 v + \frac{c_3}{v^2} + \frac{c_4}{v^3} \right)$$

Note that $F(v)$ is a strictly convex function and increases for speeds higher than its minimizer, the maximum range cruise (MRC) speed. Finally, the additional fuel cost can be computed based on fuel price and the difference between scheduled fuel consumption and realized fuel consumption.
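As an illustration only, the fuel model above could be evaluated along the following lines. This is a minimal sketch: the coefficient values, the cruise distance, the fuel price and the search interval are hypothetical placeholders rather than BADA data, and the ternary search is just one simple way to locate the minimizer of a strictly convex function.

```python
# Hypothetical coefficients c1..c4 (NOT taken from BADA); speeds in km/min.
COEFFS = (0.02, 0.1, 300.0, 500.0)

def cruise_fuel_rate(v, c):
    """Fuel burn rate f_cr(v) in kg/min at cruise speed v."""
    c1, c2, c3, c4 = c
    return c1 * v**3 + c2 * v**2 + c3 / v + c4 / v**2

def cruise_fuel(v, c, d_cr):
    """Total cruise fuel F(v) = (d_cr / v) * f_cr(v) in kg."""
    return d_cr / v * cruise_fuel_rate(v, c)

def mrc_speed(c, d_cr, lo=1.0, hi=30.0, tol=1e-6):
    """Ternary search for the minimizer of the strictly convex F(v).

    The interval [lo, hi] is an assumed bracket around the minimizer.
    """
    while hi - lo > tol:
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if cruise_fuel(m1, c, d_cr) < cruise_fuel(m2, c, d_cr):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2

def additional_fuel_cost(v_sched, v_real, c, d_cr, fuel_price):
    """Fuel price times (realized fuel minus scheduled fuel)."""
    return fuel_price * (cruise_fuel(v_real, c, d_cr) - cruise_fuel(v_sched, c, d_cr))

v_star = mrc_speed(COEFFS, d_cr=1000.0)
extra = additional_fuel_cost(v_star, v_star + 1.0, COEFFS, d_cr=1000.0, fuel_price=0.8)
print(f"MRC speed: {v_star:.2f} km/min, extra cost when flying 1 km/min faster: {extra:.1f}")
```

Because F(v) is convex, any speed above the MRC speed costs more fuel than the MRC speed itself, which is the cost that the recovery model weighs against delay.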

Major comment #3

RC:

What is the basis for setting the minimum required connection time for all entities to 30 minutes (page

13)?

AR: Thank you for your question. We set the minimum required connection time to be 30 minutes based on the

state-of-the-art literature (Barnhart et al., 2014; Wang and Jacquillat, 2020), with the following three reasons:

• Safety. A minimum connection time of 30 minutes is considered sufficient to allow for the safe transfer

of passengers between flights, taking into account factors such as time to disembark, time to go through

security and immigration, and time to reach the gate for the next flight.

• Operational efficiency. A minimum connection time of 30 minutes is sufficient to allow for the

smooth and efficient operation of the airport/the airline’s flight schedules and the safe transfer of crew

members/passengers between flights. For aircraft, this includes time for the aircraft to park, time to

unload baggage and cargo, time to refuel and prepare the aircraft for the next flight, and time for ground

handling and maintenance activities. For crew members, this includes time for them to get to and from

their assigned aircraft, as well as time for any necessary rest and preparation before their next flight.

• Customer experience. A minimum connection time of 30 minutes is sufficient to provide passengers

with a reasonable level of comfort and convenience. This includes time to grab a bite to eat, use the

restroom, and make any necessary purchases.

Besides, we can also set this minimum connection time to be airport-dependent with a slight modification of

the parameter $MMCT_{fg}$.

Major comment #4

RC:

Is would provide more information about the generated synthetic dataset if overall statistics on the assumed delays are available?

AR: Thank you for your suggestion. We have provided more information on the generated synthetic dataset in our

revised manuscript and discussed below:

Based on similar synthetic dataset generations in the literature (Arıkan et al., 2017; Barnhart et al., 2014), the

synthetic datasets in this study are generated according to a set of well-designed algorithms, which satisfy

most of the restrictions for entities (aircraft, crew members and passengers) in airline operations. Each

synthetic dataset contains a flight schedule, a passenger itinerary table, and a disruption scenario. The flight

schedule table contains the following information for each flight of the airline: tail number, flight, crew team,

departure airport, destination airport, scheduled time of departure, scheduled time of arrival, flight time, cruise

time, flight distance, tail capacity and number of passengers on the flight. The passenger itinerary table

contains the following information for each itinerary of the airline: flight(s) taken by each itinerary, departure

airport, destination airport, scheduled time of departure for the itinerary, scheduled time of arrival for the

itinerary, number of passengers of the itinerary. In our revised manuscript, we discuss how flight schedules,

crew routing and passenger itineraries are generated through Algorithms 4–6 in the Appendix.

As for disruption scenarios, we consider two types of scenarios based on the severity of disruption: a mild disruption scenario (denoted as DS−) represents a scenario where 10% of the flights are disrupted/delayed, while a severe disruption scenario (denoted as DS+) represents a scenario where 30% of the flights are disrupted/delayed. This delay of scheduled departure time is assumed to be a random variable that follows a uniform distribution over [0, 240] minutes. The overall statistics of the small/medium-scale synthetic datasets are listed in the following Table 1.
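The scenario generation described above can be sketched as follows. This is an illustrative sketch, not the authors' generator: the function name, the flight identifiers and the seed are hypothetical, and rounding the number of disrupted flights down is an assumption (it happens to agree with the |D_f| counts reported in Table 1).

```python
import random

def sample_disruption(flight_ids, share, max_delay_min=240.0, seed=None):
    """Pick a share of flights and assign each a uniform departure delay.

    flight_ids: list of flight identifiers (hypothetical format).
    share: fraction of disrupted flights, e.g. 0.1 (mild) or 0.3 (severe).
    Returns a dict mapping each disrupted flight to its delay in minutes.
    """
    rng = random.Random(seed)
    n_disrupted = int(share * len(flight_ids))  # assumption: round down
    disrupted = rng.sample(flight_ids, n_disrupted)
    return {f: rng.uniform(0.0, max_delay_min) for f in disrupted}

flights = [f"F{i:02d}" for i in range(16)]  # 16 flights, as in dataset AC05
mild = sample_disruption(flights, share=0.1, seed=0)
severe = sample_disruption(flights, share=0.3, seed=0)
print(len(mild), len(severe))
```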

Major comment #5

RC:

The manuscript provides an airline specific recovery model to handle disruptions and provides a recovery

framework. Are there any comments on how does this approach affect or potentially disrupt the schedules

of other airlines?

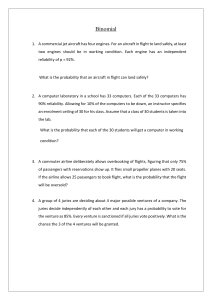

Table 1: Statistics of small/medium-scale datasets and disruption scenarios.

          Dataset features                                  DS− features           DS+ features
Dataset   |F|   |A|   |U^ac|   |U^cr|   |U^it|   |U^ps|     ≤ 2h   > 2h   |D_f|    ≤ 2h   > 2h   |D_f|
AC05      16    4     5        6        17       2020       0      1      1        2      2      4
AC10      28    8     10       12       30       3731       1      1      2        7      1      8
AC15      42    12    15       21       47       5359       3      1      4        4      8      12
AC20      62    20    20       26       67       7416       4      2      6        10     8      18
AC25      73    21    25       34       88       8638       4      3      7        10     11     21
AC30      94    28    30       37       112      11415      3      6      9        16     12     28

AR: Thank you for your excellent comment. We have provided some discussion on how our approach affects or

potentially disrupts the schedules of other airlines below:

Overall, our approach provides a recovery framework for an airline to handle disruptions to its own operations,

with a focus on optimizing the recovery plan for the affected airline. However, our approach could potentially

have an impact on the schedules of other airlines in the following ways:

• Shared resources. Since airlines often share resources such as runways, gates, and air traffic control

systems, if one airline experiences a disruption that requires the use of these shared resources for a

longer period than expected, it can lead to delays and disruptions for other airlines that share these

resources.

• Flight delays/cancellations. If one airline experiences significant delays or cancellations due to

disruptions, it can lead to cascading effects on other airlines. For instance, if a flight is canceled,

passengers may be rerouted to other airlines’ flights, leading to an increase in demand and potentially

overbooking of other flights, causing further delays or disruptions.

Therefore, the disruption management of one airline could have an indirect impact on the operations of

other airlines, and this is an area that could be further explored in future research. We have added the above

discussion in the conclusion section in our revised manuscript.

Major comment #6

RC:

Usually, depending on the duration of the flight, the delays can be absorbed en-route. Is there any

consideration on this practical aspect of flight delays and delay absorption?

AR: Thank you for your excellent comment. The consideration of en-route delay absorption is a practical aspect

of the integrated recovery problem, which we have already considered in our modelling approach. We list the

related aspects below:

First, an airline can absorb delay en-route by cruise speed control. This is realized through constraints (17)–(21) in the revised manuscript,

$$\alpha_{fu} = \sum_{g:(f,g) \in E} \beta_{fg}^{u}, \quad f \in F,\ u \in U^{ac}$$

$$\alpha_{fu} \underline{V}_{fu} \le v_{fu} \le \alpha_{fu} \overline{V}_{fu}, \quad f \in F,\ u \in U^{ac}$$

$$\delta t_f = Dis_f / V_{fu} - \sum_{u \in U^{ac}} Dis_f / v_{fu}, \quad f \in F$$

$$\delta t_f \le \sum_{u \in U^{ac}} \sum_{g:(f,g) \in E} \beta_{fg}^{u} \, \Delta T_f^{u}, \quad f \in F$$

$$fc_{fu} = \alpha_{fu} \, Dis_f \left( \Lambda_1^{u} v_{fu}^2 + \Lambda_2^{u} v_{fu} + \frac{\Lambda_3^{u}}{v_{fu}^2} + \frac{\Lambda_4^{u}}{v_{fu}^3} \right), \quad f \in F,\ u \in U^{ac}$$

These constraints present expressions for calculating the cruise time compression $\Delta T_f^{u}$ and the fuel consumption $fc_{fu}$ of a flight $f$ operated by aircraft $u$, in an attempt to model the trade-off between disruption cost and extra fuel cost due to increased cruise speed (Arıkan, 2014).
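To make the trade-off concrete, the sketch below evaluates, for a single flight already assigned to one aircraft, the minutes absorbed at cruise (Dis/V − Dis/v) and the resulting extra fuel for a few candidate speeds between the scheduled speed and an upper speed bound. All numeric values, including the four fuel coefficients, are hypothetical placeholders, and the helper names are not from the manuscript.

```python
# Hypothetical fuel coefficients for one aircraft (NOT real BADA values).
LAMBDA = (0.02, 0.1, 300.0, 500.0)

def cruise_time_compression(dist_km, v_sched, v_new):
    """Minutes absorbed at cruise: Dis / V - Dis / v (speeds in km/min)."""
    return dist_km / v_sched - dist_km / v_new

def cruise_fuel(dist_km, v, lam):
    """Cruise fuel in kg: Dis * (L1 v^2 + L2 v + L3 / v^2 + L4 / v^3)."""
    l1, l2, l3, l4 = lam
    return dist_km * (l1 * v**2 + l2 * v + l3 / v**2 + l4 / v**3)

def speed_tradeoff(dist_km, v_sched, v_max, lam, steps=5):
    """Return (speed, minutes absorbed, extra fuel in kg) on a speed grid."""
    base_fuel = cruise_fuel(dist_km, v_sched, lam)
    rows = []
    for k in range(steps + 1):
        v = v_sched + (v_max - v_sched) * k / steps
        rows.append((
            v,
            cruise_time_compression(dist_km, v_sched, v),
            cruise_fuel(dist_km, v, lam) - base_fuel,
        ))
    return rows

rows = speed_tradeoff(dist_km=1000.0, v_sched=13.0, v_max=15.0, lam=LAMBDA)
for v, minutes, extra_fuel in rows:
    print(f"v={v:5.2f} km/min  absorbed={minutes:5.2f} min  extra fuel={extra_fuel:8.1f} kg")
```

With these placeholder values the scheduled speed lies above the fuel-minimizing speed, so every additional minute absorbed is paid for with additional fuel, which is the trade-off the constraints are meant to capture.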

Besides, the airline can take an alternative route for a disrupted flight, or hold the affected aircraft at an

intermediate airport. Additionally, the airline can also use reserve aircraft and crew to manage the delay. By

taking these measures, airlines can minimize the impact of the delay on the rest of the flight schedule, and

avoid cascading disruptions.

Major comment #7

RC:

What does one episode mean in context of the application of RL to the research problem?

AR: Thank you for your question. In our approach, an "episode" refers to a complete sequence of interactions between the agent and its environment. An episode begins when the agent receives the initial state $s_0$ of the environment (characterized by the aircraft assignment, crew team assignment and distance/degree metrics for each flight string in our approach). Then the agent iteratively picks an action $a_t$ according to the current stochastic policy $\pi_\theta$ and receives the reward $r_{t+1}$ until reaching the step limit $T_s$. This forms a trajectory $\tau = \{s_0, a_0, r_1, \dots, s_{T_s - 1}, a_{T_s - 1}, r_{T_s}\}$ for the current episode. Finally, the PPO training algorithm is utilized to optimize the loss function with respect to the trainable parameters $\theta$ and $\phi$ based on the series of rewards. To sum up, we train the RL agent once per episode.
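The structure of one episode can be sketched as below. This is a toy illustration under stated assumptions: the environment (an integer state with objective |s|), the uniform policy and all function names are hypothetical stand-ins, the reward follows the definition quoted from Section 2.3 (decrease in objective value after the state transition), and the PPO parameter update itself is omitted.

```python
import random

def run_episode(initial_state, actions, policy, step, objective, step_limit, rng):
    """Collect one trajectory of (state, action, reward) up to the step limit."""
    state = initial_state
    trajectory = []
    for _ in range(step_limit):
        probs = policy(state)  # stochastic policy: one probability per action
        action = rng.choices(actions, weights=probs, k=1)[0]
        next_state = step(state, action)
        # Reward is the change in objective; it is negative (a penalty)
        # when the next-state objective is higher than the current one.
        reward = objective(state) - objective(next_state)
        trajectory.append((state, action, reward))
        state = next_state
    return trajectory, state

# Toy stand-ins for the recovery environment (hypothetical).
ACTIONS = [-1, 1]
trajectory, final_state = run_episode(
    initial_state=5,
    actions=ACTIONS,
    policy=lambda s: [0.5, 0.5],
    step=lambda s, a: s + a,
    objective=lambda s: abs(s),
    step_limit=20,
    rng=random.Random(0),
)
print(len(trajectory), final_state)
```

In a full training loop, the collected trajectory would then be handed to the PPO update once, after which the next episode starts again from the initial state.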

Major comment #8

RC:

How significant are the differences between the PPO-VNS model and the best solution generated by

CPLEX the results (Figure 7) for AC10DS + and AC15DS + ?

AR: Thank you for your comment. The objective values of PPO-VNS and CPLEX and the gap between them are

reported in the following Table 2.

For AC10DS+, the objective obtained by CPLEX is 55493.6 and the objective obtained by PPO-VNS is 55558.7, with a gap of (55558.7 − 55493.6)/55493.6 × 100% = 0.12%. For AC15DS+, the objective obtained by CPLEX is 194630.3 and the objective obtained by PPO-VNS is 194732.6, with a gap of (194732.6 − 194630.3)/194630.3 × 100% = 0.05%. The runtime of using CPLEX to solve either instance is over 1000 s, while the PPO-VNS method solves each instance in less than 0.05 s.
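The gap computation used here is simple enough to check directly. The short sketch below recomputes both gaps from the objective values quoted in this response; the function name is a hypothetical helper.

```python
def relative_gap(obj_heuristic, obj_reference):
    """Gap of a heuristic objective relative to a reference objective, in percent."""
    return (obj_heuristic - obj_reference) / obj_reference * 100.0

gap_ac10 = relative_gap(55558.7, 55493.6)    # AC10DS+: PPO-VNS vs. CPLEX
gap_ac15 = relative_gap(194732.6, 194630.3)  # AC15DS+: PPO-VNS vs. CPLEX
print(f"{gap_ac10:.2f}% {gap_ac15:.2f}%")
```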

Table 2: Comparison of the objective and runtime among PPO-VNS, CPLEX and VNS baselines in small/medium-scale instances. The CPLEX relative optimality gaps (cgap) output by the solver are reported.

          PPO-VNS                     U-VNS                       DT-VNS                      DG-VNS                      CPLEX
Instance  obj.               time (s) obj.               time (s) obj.               time (s) obj.               time (s) obj.      time (s) cgap
AC05DS−   23534.7 (0.57%)    0.022    24449.6 (4.48%)    0.028    24205.0 (3.43%)    0.029    24352.4 (4.06%)    0.029    23401.2   79       0.00%
AC05DS+   46975.5 (0.00%)    0.016    52586.8 (11.95%)   0.028    53110.2 (13.06%)   0.028    51270.2 (9.14%)    0.029    46975.5   1001     0.01%
AC10DS−   30973.3 (0.66%)    0.019    61968.1 (101.38%)  0.037    51554.0 (67.54%)   0.039    61895.4 (101.15%)  0.037    30771.2   22       0.00%
AC10DS+   55558.7 (0.12%)    0.022    55925.1 (0.78%)    0.036    55899.2 (0.73%)    0.038    55920.8 (0.77%)    0.038    55493.6   1003     0.02%
AC15DS−   44557.9 (0.62%)    0.047    46124.2 (4.16%)    0.053    46124.2 (4.16%)    0.054    46219.7 (4.38%)    0.055    44281.1   1002     0.01%
AC15DS+   194732.6 (0.05%)   0.049    195358.6 (0.37%)   0.052    195358.6 (0.37%)   0.057    195396.7 (0.39%)   0.055    194630.3  1004     0.03%
AC20DS−   94751.1 (0.00%)    0.091    96580.7 (1.93%)    0.076    96702.9 (2.06%)    0.076    96479.0 (1.82%)    0.075    94751.1   53       0.00%
AC20DS+   263584.7 (1.08%)   0.094    265366.9 (1.76%)   0.074    265464.5 (1.80%)   0.085    265562.1 (1.83%)   0.076    260781.2  111      0.00%
AC25DS−   89638.7 (1.46%)    0.125    91458.2 (3.52%)    0.100    91458.2 (3.52%)    0.102    91325.8 (3.37%)    0.101    88348.0   24       0.00%
AC25DS+   308576.0 (0.37%)   0.094    327379.0 (6.49%)   0.085    322679.8 (4.96%)   0.090    322469.4 (4.89%)   0.074    307424.4  1009     0.07%
AC30DS−   191884.8 (0.04%)   0.147    191884.8 (0.64%)   0.137    191884.8 (0.64%)   0.136    190586.8 (0.64%)   0.133    190662.1  1010     0.02%
AC30DS+   357254.8 (0.03%)   0.052    368408.5 (3.15%)   0.130    368687.5 (3.23%)   0.141    368579.2 (3.20%)   0.133    357154.7  1011     0.04%

Major comment #9

RC:

How is the reliability of the PPO-VNS model evaluated?

AR: Thank you for your comment. In Section 5, we perform a rich set of computational experiments to highlight the reliability of the PPO-VNS approach. An excerpt of Section 5.3 (Comparison with exact method) is shown below.

We compare our proposed heuristic solutions with the exact CPLEX solution regarding objective value and runtime to demonstrate the efficiency and effectiveness of our proposed methodology. The experimental results regarding the comparison of PPO-VNS, CPLEX and VNS baselines are reported in Table 2. As for the PPO-VNS method, the agent is pre-trained and we report the average testing objective and runtime in Table 2. The number of training episodes ranges from 400 for the smaller instance AC05DS− to 1300 for the larger instance AC30DS+. The training time ranges from ten seconds for the smaller instance AC05DS− to 70 seconds for the larger instance AC30DS+. We compute the objective gap of each method with respect to the (close-to) optimal objective obtained by CPLEX. Compared to the CPLEX results, the PPO-VNS method greatly reduces the runtime, which meets the need of airlines seeking instant solutions; on the other hand, the gap between PPO-VNS and CPLEX stays within 1.5% and is smaller than the gap between the VNS baselines and CPLEX, which demonstrates the accuracy and reliability of the deep reinforcement learning-based approach. Due to the potential limitation of the evaluator embedded in all VNS-based methods, the objective values of VNS-based methods cannot fully reach the (close-to) optimal objective value generated by CPLEX, but the gap is small for the majority of instances and exactly zero for a minority of instances (e.g., AC05DS+ and AC20DS−).

In the training process of our experiments, PPO demonstrates great capability in efficiently exploring the search space and avoiding getting trapped in a local minimum. We visualize the convergence process of objective values of the PPO-VNS method for each instance in Figure 1, as compared with the levels of objective values generated by CPLEX and VNS baseline methods. For each instance, the abscissa is the training episode while the ordinate is the moving average of objective values over 100 episodes.
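For reference, a moving average over a fixed window of episodes can be computed as in the sketch below. How the first episodes (before a full window is available) are treated is not stated in the text, so averaging over the episodes seen so far is an assumption here, and the function name is a hypothetical helper.

```python
def moving_average(values, window=100):
    """Trailing moving average; early entries average over what is available."""
    averages = []
    running_sum = 0.0
    for i, value in enumerate(values):
        running_sum += value
        if i >= window:
            running_sum -= values[i - window]  # drop the value leaving the window
        averages.append(running_sum / min(i + 1, window))
    return averages

smoothed = moving_average([1, 2, 3, 4], window=2)
print(smoothed)
```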

[Figure 1 plots: six panels, one per instance (AC05, AC10 and AC15, each under DS− and DS+). x-axis: Episode; y-axis: Mov. avg. of obj. in 100 episodes; legend: U-VNS, DT-VNS, DG-VNS, PPO-VNS, CPLEX.]

Figure 1: The convergence process of objective values of PPO-VNS method in each instance, with comparison

to the levels of objective values generated by CPLEX and VNS baseline methods.

The PPO agent is uninitialized at the first episode (i.e., the initial weights and biases of the actor and critic networks are randomly determined), leading to a relatively weaker performance compared to the VNS baselines. As training proceeds, the PPO agent learns the critical trajectories that substantially decrease the objective value, while the VNS baselines remain at the same levels since they do not learn. Eventually, the objective values of the PPO-VNS method stay close to the optimal objective obtained by CPLEX or even coincide with it.

Reviewer #2

General comments to the authors

RC:

This manuscript focuses on an integrated airline recovery problem which is formulated as a mixed-integer

nonlinear optimization problem and solved using a combination of variable neighborhood search and

deep reinforcement learning. The topic of the paper is very important and the computational work is

competently done. The results are interesting enough to be published and the paper is written reasonably

well. However, the paper also suffers from some problems that should be addressed. The strength of the

paper is the computational results and the algorithmic development supporting that work. Below I list the

main issues.

AR: Thank you for your positive evaluation and the effort to review our submission. In addition, we would like to

thank you for your very constructive feedback. We have addressed your concerns carefully in this revised

version; the details are listed in our responses below.

Major comment #1

RC:

First, the paper makes many claims that are not accurate or can be misinterpreted by the readers. For

example, the abstract claims that "Experimental results show that the objective value generated by our

approach is within a 1.5% gap with respect to the optimal objective of the CPLEX solver, with significant

improvement regarding runtime.". But upon reading the results section, we see that this is only true for

the toy problems (those with less than 100 flights). And, as expected, the results section also shows that

there is no way to compare the performance to true optimal for realistic sized problems (Table 11 shows

the so-called "large-scale" instances, which really are small-to-medium sized airlines and for them there

is no optimality gap information available). This needs to be clarified. In the same vein, the authors find

that the DRL approach is hard to scale for these instances and resort to heuristic trimming of solution

space. This needs to be stated up front.

AR: Thanks for your excellent comments. We have carefully rewritten the claims on experimental results in the

abstract and introduction. An excerpt from the abstract is shown below:

Experimental results show that the objective value generated by our approach is within a 1.5% gap with

respect to the optimal/close-to-optimal objective of the CPLEX solver for the small-scale instances, with

significant improvement regarding runtime. The pre-trained DRL agent can leverage features/weights

obtained from the training process to accelerate the arrival of objective convergence and further improve

solution quality, which exhibits the potential of achieving Transfer Learning (TL). Given the inherent

intractability of the problem on practical size instances, we propose a method to control the size of the

DRL agent’s action space to allow for an efficient training process. We believe our study contributes to the

efforts of airlines in seeking efficient and cost-effective recovery solutions.

Major comment #2

RC:

In an effort to simplify the model, many key practical factors are being ignored. For example, it seems

to me that by only focusing on passenger flow on each link, the passenger connections are being ignored.

Moreover, the passenger rebooking is completely being omitted, unless it is through the exact same route

as originally planned. Aircraft maintenance opportunities are modeled as must-nodes. In reality, that

is not a good way of modeling them since they are not must. The aircraft need to undergo periodic

maintenance, but the exact location or timing can be quite flexible (and cannot be enforced using must-nodes). Similarly, and even more importantly, the link-based model of crew flow completely ignores

perhaps the biggest reason why crew recovery is hard: crews timing out due to delays is being ignored.

All in all, the model is weak as it ignores many practical realities that other studies (especially those based

on composite variables) already account for. By claiming to perform integrated airline recovery and by

allowing for all of these modeling simplifications, the paper comes across as trying to oversell the work.

A very careful acknowledgement of these limitations is essential.

AR: Thank you for your excellent and constructive comment. We have carefully considered the key practical

factors and acknowledged model limitations in our revised manuscript. We demonstrate our efforts on the

aforementioned four practical factors below:

1. Passenger connections. Passenger connection refers to the process by which an airline facilitates the

transfer of a passenger from one flight to another, typically as part of a multi-leg journey. In our revised

model, Constraints (12)

\[
dt_g \ge at_f + \mathit{MinCT}^{u}_{fg}\,\beta^{u}_{fg} - \mathit{LAT}_f\,\bigl(1 - \beta^{u}_{fg}\bigr), \qquad u \in U,\ (f,g) \in CC^{u}
\]

ensure that if there is a positive flow of entity u (including aircraft, crew members and individual passengers) between flight nodes f and g, the minimum connection time $\mathit{MinCT}^{u}_{fg}$ is provided between these flights. In our revised VNS-based heuristic, the minimum connection time between consecutive flights for passengers is also guaranteed.

2. Passenger rebooking. Passenger rebooking refers to the process by which an airline reassigns a

passenger to a recovered itinerary in response to a disrupted itinerary. Our flight network-based model does capture passenger rebooking. The source node $s^u$ and sink node $t^u$ of passenger $u \in U^{ps} \subset U$ contain information including the origin/destination airport, earliest departure time and latest arrival time of the passenger. In our manuscript, the flow balance constraints for each passenger $u \in U$ (i.e., Constraints (3))

\[
\sum_{f:(f,g)\in E} \beta^{u}_{fg} - \sum_{h:(g,h)\in E} \beta^{u}_{gh} = D^{u}_{g}, \qquad u \in U,\ g \in V
\]

ensure that the passenger takes a feasible recovered itinerary, i.e., a sequence of one or more non-stop

flights traversed from the passenger’s origin to the passenger’s destination. Note that the recovered

itinerary of a passenger may not be the scheduled itinerary of a passenger in case of disruptions, and

the actual reassigned flight(s) taken by the passenger may be completely different from the scheduled

flight(s) of the passenger. We also demonstrate the effect of passenger rebooking in the synthesized

example in Section 3.3, and we show an excerpt below:

As for the recovery itinerary, a large proportion of passengers of I11 (the itinerary with ID 11)

are rerouted to I00, since I11 is the itinerary with disrupted flight F09. Furthermore, a new

itinerary I16 is created to accommodate the passengers of I01 regarding the first flight leg, since

the original first flight leg F00 is fully seated in the recovery itinerary.

Our modelling approach regarding passenger rerouting/rebooking is in accordance with the state-of-the-art studies on passenger recovery (Cadarso and Vaze, 2022; Hu et al., 2016; Maher, 2015). Besides, our

VNS-based heuristic is also able to reroute passengers of disrupted flights through alternative flights to

reach their destination.

3. Aircraft maintenance. We model aircraft maintenance as a must-node in the network based on (Arıkan

et al., 2017; Gürkan et al., 2016), indicating that it is a mandatory event that must occur in the schedule.

A must-node might represent a maintenance activity of an aircraft at a specific airport at a certain time

period. This must-node approach can be useful for modelling preventive maintenance, where aircraft

must be taken out of service for a scheduled maintenance check. As you have pointed out, in the

aircraft recovery literature, researchers also use flexible time windows approach to model maintenance

activities. This method can be used when the maintenance activities are complex and the location

or timing of the maintenance activities is uncertain. We choose the former approach based on the

consideration of scheduled and preventive maintenance.

4. Crew duty constraints. We have modified our model to incorporate a set of realistic constraints

(Constraints (12) - (16)) regarding crew pairing, including minimum/maximum sit-times between

consecutive flights,

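\[
dt_g \ge at_f + \mathit{MinCT}^{u}_{fg}\,\beta^{u}_{fg} - \mathit{LAT}_f\,\bigl(1 - \beta^{u}_{fg}\bigr), \qquad u \in U,\ (f,g) \in CC^{u}
\]

\[
dt_g \le at_f + \mathit{MaxCT}^{u}_{fg}\,\beta^{u}_{fg} + \mathit{LDT}_f\,\bigl(1 - \beta^{u}_{fg}\bigr), \qquad u \in U,\ (f,g) \in CC^{u}
\]

maximum total flying time, maximum duty time and maximum number of landings,

\[
\sum_{f \in F} \sum_{g:(f,g)\in E} \beta^{u}_{fg}\,(at_f - dt_f) \le \mathit{MFT}^{u}, \qquad u \in U^{cr}
\]

\[
\sum_{(f,t^u)\in E} at_f\,\beta^{u}_{f t^u} - \sum_{(s^u,g)\in E} dt_g\,\beta^{u}_{s^u g} \le \mathit{MDT}^{u}, \qquad u \in U^{cr}
\]

\[
\sum_{(f,g)\in E} \beta^{u}_{fg} - 1 \le \mathit{MNL}^{u}, \qquad u \in U^{cr} \cup U^{ps}
\]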

Crews timing out due to delays refers to the situation in which an airline crew member exceeds the

maximum allowed duty time as a result of a flight delay, which is considered in our revised model

by incorporating Constraints (15). We also perform feasibility checks on crew duty constraints in the

VNS-based heuristics accordingly.
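The crew-related feasibility checks described in item 4 can be illustrated with a minimal Python sketch. It is not the evaluator of the manuscript: the names `Flight` and `crew_duty_violations` are hypothetical, connection-time limits are simplified to a single value per crew member rather than one per flight pair, and all times are assumed to be in minutes. The landing count equals the number of flights in the sequence, which mirrors the "number of arcs minus one" form of the last constraint above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flight:
    dep: float  # actual departure time dt_f, in minutes
    arr: float  # actual arrival time at_f, in minutes


def crew_duty_violations(flights, min_ct, max_ct, max_flying, max_duty, max_landings):
    """Return the names of duty rules violated by an ordered flight sequence."""
    violations = []
    if not flights:
        return violations
    # Minimum/maximum sit time between consecutive flights.
    for prev, nxt in zip(flights, flights[1:]):
        sit = nxt.dep - prev.arr
        if sit < min_ct:
            violations.append("min_sit_time")
        if sit > max_ct:
            violations.append("max_sit_time")
    # Maximum total flying time.
    if sum(f.arr - f.dep for f in flights) > max_flying:
        violations.append("max_flying_time")
    # Maximum duty time: last arrival minus first departure.
    if flights[-1].arr - flights[0].dep > max_duty:
        violations.append("max_duty_time")
    # Maximum number of landings: one landing per flight.
    if len(flights) > max_landings:
        violations.append("max_landings")
    return violations


limits = dict(min_ct=30, max_ct=240, max_flying=480, max_duty=600, max_landings=4)
on_time = [Flight(480, 600), Flight(645, 780)]
delayed = [Flight(480, 600), Flight(965, 1100)]  # second flight delayed by 320 minutes
print(crew_duty_violations(on_time, **limits))   # []
print(crew_duty_violations(delayed, **limits))   # ['max_sit_time', 'max_duty_time']
```

The delayed sequence shows a crew member timing out: the delay pushes the last arrival beyond the assumed maximum duty time, so the sequence would be rejected.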

Major comment #3

RC:

The paper is incredibly long and hard to read as a result. Many parts can be eliminated with ease. For

example, the incremental contribution of Section 3.3 is minimal, yet it takes up 4 pages worth of valuable

space. There are other such opportunities to cut space as well (e.g., Tables 1 and 2 do not contribute much

and can go easily).

AR: Thank you for your comment. We have shortened Section 3.3 and removed Tables 1 and 2 as you suggested. Besides, several parts including the algorithms to generate datasets and the DRL training algorithm have been moved to the Appendix. While our original submission had 37 pages, the revised version has been shortened significantly to 29 pages.

Major comment #4

RC:

The paper’s positioning vis-à-vis the literature needs work. For example, the literature review is poorly

structured. It reviews airline scheduling studies that use machine learning first. Then it reviews recovery

studies and then discusses integrated scheduling. I did not understand the reason why this ordering was

chosen and nor did I understand why this particular set of studies in recovery and integrated scheduling

were specifically considered to be relevant. Rather than giving a laundry-list of studies, some effort should

be put into identifying key literature themes and writing the review around those themes.

AR:

Thank you for your excellent comment. We have significantly improved the literature review by identifying

key literature themes and writing the review around those themes. An excerpt from Section 2 (Literature

Review) is shown below:

The modelling and computational techniques for airline disruption management have been rapidly

developed in the literature. Previous efforts typically addressed irregular operations in a sequential

manner, such that issues related to the aircraft fleet, crew, and passengers are resolved respectively

(Barnhart, 2009). Section 2.1 first discusses research on the aircraft recovery problem, after which

Section 2.2 reviews the airline recovery literature covering multiple resources, and Section 2.3 discusses

the literature on the reinforcement learning approach for air transport management.

2.1. Aircraft recovery

As aircraft are the most constraining and expensive resource in aviation management, previous efforts

mainly focused on aircraft recovery which can be formulated as follows: given a flight schedule and

a set of disruptions, determine which flights are to be cancelled or delayed, and re-assign the available

aircraft to the flights such that the disruption cost is minimized (Hassan et al., 2021).

Teodorović and Guberinić (1984) were the first to discuss the minimization of passenger

delays in the Aircraft Recovery Problem (ARP). The problem is formulated as a network flow problem

in which flights are represented by nodes and arcs indicate time losses per flight. However, aircraft

maintenance constraints are ignored and they assume that all itineraries contain only a single flight

leg. They utilized the branch & bound method to solve the problem with a network of eight flights

operated by three aircraft. This work was further extended in Teodorović and Stojković (1995) in which

constraints including crew and aircraft maintenance were incorporated into the model. Thengvall et al.

(2003) presented a multi-commodity flow model that considers flight delay, flight cancellation and

through flights with flight copies and extra arcs. A tailored heuristic is adopted to solve the Lagrangian

relaxation model. However, as the authors pointed out, a number of practical constraints cannot be

embedded in the model due to their scenario and time-dependent nature.

In Eggenberg et al. (2010), a general modeling approach based on the time-band network was proposed.

Each entity (i.e., an aircraft, a crew member or a passenger) is assigned with a specific recovery network

and the model considers entity-specific constraints. The column generation algorithm is utilized to

solve the majority of instances within 100 s, but the paper reports that the runtime exceeds one hour for

the worst case, which may not meet the demand of airlines in seeking instant recovery solutions. Liang

et al. (2018) incorporated exact delay decisions (instead of flight copies) based on airport slot resources

constraints, which is similar to our approach concerning the modeling of recovery decisions. Aircraft

maintenance swaps and several other recovery decisions are also implemented through the pricing

problem of column generation. However, realistic factors such as maintenance capacity at airports are

not considered in the proposed model.

2.2. Integrated recovery

In the recent decade, there has been a trend towards integrating more than one resource in recovery

models. The interdependencies between aircraft, crew, and passengers cannot be fully captured by

sequential optimization approaches, leading to sub-optimal recovery solutions. In this section, we

discuss some of these integrated models.

Petersen et al. (2012) proposed an integrated optimization model in which four different stages are

distinguished throughout the recovery horizon: schedule recovery, aircraft assignment, crew pairing,

and passenger assignment. The schedule is repaired by flying, delaying or cancelling flights, and

aircraft are assigned to the new schedule. Then, the crew is assigned to the aircraft rotations and the

passenger recovery ensures that all passengers reach their final destination. Upon testing their model on

data from a regional US carrier with 800 daily flights, the authors found that their integrated approach

always guarantees a feasible solution. However, the runtime of the proposed solution ranges between

20–30 min, which may not meet the demand of airlines seeking efficient recovery solutions. Besides,

the framework still works in a sequential manner rather than a fully integrated one, which may lead to

sub-optimal recovery solutions.

The time-space network representation has been frequently adopted in the integrated recovery problem

(Bratu and Barnhart, 2006; Lee et al., 2020; Marla et al., 2017), where the nodes in time-space networks

are associated with airport and time, and an arc between two nodes indicates either a flight or a ground

arc. Marla et al. (2017) incorporated flight planning (i.e., cruise speed control) to the set of traditional

recovery decisions, which is also considered in our approach. Departure time decisions are modelled

by creating copies of flight arcs, while the cruise speed decisions are modelled by creating a second set

of flight copies for different cruise speed alternatives for each departure time alternative. This approach

requires discretization of cruise speed options and greatly increases the size of generated networks,

leading to computationally intractable issues when dealing with large-scale instances. Accordingly, the

authors proposed an approximation model and tested their approach on data from a European airline

with 250 daily flights, concluding that their approach reduces total costs and passenger-related delay

costs for the airline.

In an attempt to avoid discretization of cruise speed options and reduce the size of generated networks,

Arıkan et al. (2017) managed to formulate the recovery decisions (e.g., flight timing, aircraft rerouting,

and cruise speed control) over a much smaller flight network with flight timing decisions in continuous

settings. Since all entities (aircraft, crew members and passengers) are transported through a common

flight network, the interdependencies among different entity types can be clearly captured. Based

on Aktürk et al. (2014), the authors implemented aircraft cruise speed control and proposed a conic

quadratic mixed-integer programming formulation. The model can evaluate the passenger delay costs

more realistically since it explicitly models each individual passenger. The authors test the model on a

network of a major U.S. airline and the optimality gap and runtime results show the feasibility of their

approach. In this paper, we propose our integrated recovery model based on Arıkan et al. (2017), and

we further introduce a set of realistic constraints regarding crew pairing process and passenger itinerary

generation. In general, the approach greatly enhances efficiency and scalability as compared to other

state-of-the-art studies.

In Hu et al. (2016), aircraft and passenger recovery decisions were integrated and a mixed-integer

programming model was developed based on the passenger reassignment relationship. A randomized

adaptive search algorithm was proposed accordingly to derive a recovery solution with reduced cost

and passenger disruption. Cadarso and Vaze (2021) took cruise speed as a decision variable with a

linear extra fuel cost in the objective function. Additionally, potential passenger losses are taken into

account and related airfare revenue is optimized as well in the presented integrated model.

2.3. Reinforcement learning approach for air transport management

In recent years, there has been growing interest in the use of Reinforcement Learning (RL) for solving

Air Transport Management (ATM) problems, which involve decision-making under uncertainty. RL

algorithms can learn from past experiences and make decisions in real time, allowing them to quickly

adapt to changing circumstances. Besides, RL algorithms can identify and exploit patterns in the data

to make more informed decisions, leading to improved efficiency compared to rule-based or heuristic

methods.

Ruan et al. (2021) used the RL-based Q-learning algorithm to solve the operational aircraft maintenance

routing problem. Upon benchmarking with several meta-heuristics, they concluded that the proposed

RL-based algorithm outperforms these meta-heuristics. Hu et al. (2021) proposed an RL-driven strategy

for solving the long-term aircraft maintenance problem, which learned the maintenance decision policies

for different aircraft maintenance scenarios. Experimental results on several simulated scenarios show

that the RL-driven strategy performs well in adjusting its decision principle for handling the variations

of mission reward. Kravaris et al. (2022) proposed a deep multi-agent RL method to handle demand

and capacity imbalances in actual air traffic management settings with many agents, which addressed

the challenges of scalability and complexity in the proposed problem. Pham et al. (2022) utilized the

RL approach to solve the conflict resolution problem with surrounding traffic and uncertainty in air

traffic management. The conflict resolution problem is formulated as a sequential decision-making

problem and they used the deterministic policy gradient algorithm to deal with a complex and large

action space.

To overcome the limitations of previous integrated recovery studies, we propose the RL approach in

which we interpret the problem as a sequential decision-making process and improve the behavior of

the agent by trial and error. Based on the aforementioned literature on applying RL to ATM problems,

we summarize three main advantages of using RL for the integrated recovery problem. First, since

RL is a typical agent-based model, the policy that the agent learned for a set of disruptions can be

reused for other sets of disruptions. Reapplying the learned policy could accelerate the convergence

process as compared to learning the policy from scratch (Lee et al., 2022; Šemrov et al., 2016), which

is also in accordance with our experimental results. Second, since RL is a simulation-based method,

it can handle complex assumptions in the integrated recovery problem, where many realistic conditions

and factors could affect airline operations (Lee et al., 2022). By incorporating these conditions in the

simulation (i.e., environment), RL could solve the integrated recovery problem under realistic situations.

Third, RL offers more flexibility in designing reward functions than traditional operations research methods, so it can accommodate various objectives. In our study, we define the reward as the change of objective value after state transition. This function turns into a penalty if the next-state objective is higher than the current-state objective.
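The reward definition quoted above can be written as a one-line function. The sketch below assumes a minimization objective and the sign convention implied by the text (a decrease in the objective yields a positive reward); any scaling or clipping used in the actual implementation is not stated, and the function name `transition_reward` is hypothetical.

```python
def transition_reward(current_obj: float, next_obj: float) -> float:
    """Reward for a state transition: positive if the objective decreases,
    negative (a penalty) if the next-state objective is higher."""
    return current_obj - next_obj


print(transition_reward(100.0, 90.0))   # 10.0, improvement
print(transition_reward(100.0, 120.0))  # -20.0, penalty
```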

Major comment #5

RC:

More details should be provided on the extent of the offline DRL model training effort. That can be

computationally intensive.

AR: Thank you for your comments. We have provided the statistics on training time and episodes in our revised

manuscript. An excerpt from Section 5.5 (Transfer learning) of our revised manuscript is shown below:

In the following, we perform a set of experiments to verify the feasibility and advantage of transfer

learning. For each small/medium-scale dataset AC05 to AC20, we pre-train the PPO-VNS agent

with ten artificial disruption scenarios. Note that these artificial disruption scenarios are built on top of the same airline operational dataset (flight schedule and passenger itinerary); they therefore share the same flight network structure and can be handled by the same PPO-VNS agent, i.e., the PPO-VNS agent is network-specific in this case. These ten artificial disruption scenarios are sampled from the same distribution, i.e., 10% of the flights experience a delay that is uniformly distributed over [0, 240] minutes.

We train the PPO-VNS agent for 500 episodes in each artificial disruption scenario in a sequential

manner, in an attempt to avoid overfitting on specific training scenarios and enhance generalization.

The training time ranges from 110 seconds for the smaller dataset AC05 to 260 seconds for the larger dataset AC30. In the testing stage, we transfer the pre-trained agent to three testing scenarios (denoted as DS0, DS1 and DS2), which are sampled from the same distribution as the training scenarios, and keep optimizing the objective until convergence.
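The scenario distribution described in this excerpt can be sketched in Python as follows. This is an illustration under stated assumptions rather than the dataset generator of the manuscript: the function name `sample_delay_scenario`, the rounding of the 10% share to a whole number of flights, and the flight identifiers are hypothetical.

```python
import random


def sample_delay_scenario(flight_ids, share=0.10, max_delay=240.0, seed=None):
    """Pick a share of the flights and assign each a delay drawn uniformly
    from [0, max_delay] minutes. Returns a dict mapping flight ID to delay."""
    rng = random.Random(seed)
    n_disrupted = round(share * len(flight_ids))
    disrupted = rng.sample(list(flight_ids), n_disrupted)
    return {f: rng.uniform(0.0, max_delay) for f in disrupted}


flights = [f"F{i:02d}" for i in range(50)]
# Ten training scenarios drawn from the same distribution, one per seed.
training_scenarios = [sample_delay_scenario(flights, seed=k) for k in range(10)]
print(len(training_scenarios), len(training_scenarios[0]))  # 10 5
```

Fixing the seed per scenario keeps the training and testing scenarios reproducible while still drawing them from one common distribution.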

Major comment #6

RC:

Finally, there are a few typos (such as the examples given below in the minor comments) that need to be

fixed.

AR:

Thank you. All authors have proofread the revised manuscript and all typos have been fixed.

Minor comment #1

RC:

"Runway congest" in the first paragraph of the introduction seems like a typo.

AR:

Thank you. We have changed this phrase to "runway congestion".

Minor comment #2

RC:

Second extension mentioned at the bottom of page 2 seems to eliminate lots of feasible solutions in a

heuristic way. How do you justify imposing these constraints on crew and passenger itineraries? It seems

that constraining the problem sufficiently makes it much easier to solve than that in reality. Am I right?

AR: Thank you for your comment. The constraints regarding crew and passenger itineraries are imposed on the

evaluator in Section 4.2.1, where we discuss the implementation of connection time constraints for crew and

passengers, crew duty constraints and passenger rerouting constraints. These efforts to constrain the problem

ensure feasibility of the heuristic solution and also reduce the problem complexity as compared with the

MINP formulation.

Minor comment #3

RC:

Is the optimal objective of the CPLEX supposed to be the true optimal or not? This is unclear from the

introduction and abstract.

AR: Thank you for your comment. The objective reported by CPLEX is not always the true optimum, since optimality is not always reached within the runtime limit of 1000 s; we report the optimality gap statistics of CPLEX in the last column of Table 8. We have clarified this claim in the revised manuscript.

Minor comment #4

RC:

Claiming that the section review is comprehensive (as stated right before section 2) is misleading. It would

not be possible to provide comprehensive review of such vast topics like airline scheduling and disruption

management in such small space. I suggest toning down the big claim.

AR: Thank you. We have changed the claim into "Section 2 reviews the literature concerning airline disruption

recovery." in the revised manuscript.

Minor comment #5

RC:

"flights and preselected" in the paragraph after Table 1 seems like another typos.

AR: Thank you. We have corrected the sentence to "While the highly integrated model is also complex to solve,

flights are preselected to restrict the model size and a column and row generation algorithm is used to derive

solutions efficiently".

Minor comment #6

RC:

"Jiang and Barnhart (2009) develops" should be "Jiang and Barnhart (2009) develop" on page 6.

AR: Thank you. We have corrected the sentence to "Jiang and Barnhart (2009) develop a schedule re-optimization

model incorporating re-fleeting and re-timing decisions".

References

Aktürk, M.S., Atamtürk, A., Gürel, S., 2014. Aircraft rescheduling with cruise speed control. Operations

Research 62, 829–845.

Arıkan, U., 2014. Airline disruption management.

Arıkan, U., Gürel, S., Aktürk, M.S., 2017. Flight network-based approach for integrated airline recovery with

cruise speed control. Transportation Science 51, 1259–1287.

Barnhart, C., 2009. Irregular operations: Schedule recovery and robustness. The Global Airline Industry, 253–274.

Barnhart, C., Fearing, D., Vaze, V., 2014. Modeling passenger travel and delays in the national air transportation system. Operations Research 62, 580–601.

Bratu, S., Barnhart, C., 2006. Flight operations recovery: New approaches considering passenger recovery.

Journal of Scheduling 9, 279–298.

Cadarso, L., Vaze, V., 2021. Passenger-centric integrated airline schedule and aircraft recovery. Available at

SSRN 3814705.

Cadarso, L., Vaze, V., 2022. Passenger-centric integrated airline schedule and aircraft recovery. Transportation

Science.

Eggenberg, N., Salani, M., Bierlaire, M., 2010. Constraint-specific recovery network for solving airline

recovery problems. Computers & Operations Research 37, 1014–1026.

Gürkan, H., Gürel, S., Aktürk, M.S., 2016. An integrated approach for airline scheduling, aircraft fleeting and

routing with cruise speed control. Transportation Research Part C: Emerging Technologies 68, 38–57.

Hassan, L., Santos, B.F., Vink, J., 2021. Airline disruption management: A literature review and practical

challenges. Computers & Operations Research 127, 105137.

Hu, Y., Miao, X., Zhang, J., Liu, J., Pan, E., 2021. Reinforcement learning-driven maintenance strategy:

A novel solution for long-term aircraft maintenance decision optimization. Computers & Industrial

Engineering 153, 107056.

Hu, Y., Song, Y., Zhao, K., Xu, B., 2016. Integrated recovery of aircraft and passengers after airline operation

disruption based on a GRASP algorithm. Transportation Research Part E: Logistics and Transportation Review

87, 97–112.

Kravaris, T., Lentzos, K., Santipantakis, G., Vouros, G.A., Andrienko, G., Andrienko, N., Crook, I., Garcia,

J.M.C., Martinez, E.I., 2022. Explaining deep reinforcement learning decisions in complex multiagent

settings: towards enabling automation in air traffic flow management. Applied Intelligence, 1–36.

Lee, J., Lee, K., Moon, I., 2022. A reinforcement learning approach for multi-fleet aircraft recovery under

airline disruption. Applied Soft Computing 129, 109556.

Lee, J., Marla, L., Jacquillat, A., 2020. Dynamic disruption management in airline networks under airport

operating uncertainty. Transportation Science 54, 973–997.

Liang, Z., Xiao, F., Qian, X., Zhou, L., Jin, X., Lu, X., Karichery, S., 2018. A column generation-based heuristic for aircraft recovery problem with airport capacity constraints and maintenance flexibility.

Transportation Research Part B: Methodological 113, 70–90.

Maher, S., 2015. Solving the integrated airline recovery problem using column-and-row generation. Transportation Science 50, 150310112838004.

Marla, L., Vaaben, B., Barnhart, C., 2017. Integrated disruption management and flight planning to trade off

delays and fuel burn. Transportation Science 51, 88–111.

Nuic, A., 2010. User manual for the Base of Aircraft Data (BADA) revision 3.10. Atmosphere 2010, 001.

Petersen, J.D., Sölveling, G., Clarke, J.P., Johnson, E.L., Shebalov, S., 2012. An optimization approach to

airline integrated recovery. Transportation Science 46, 482–500.

Pham, D.T., Tran, P.N., Alam, S., Duong, V., Delahaye, D., 2022. Deep reinforcement learning based path

stretch vector resolution in dense traffic with uncertainties. Transportation Research Part C: Emerging Technologies 135, 103463.

Poles, D., 2009. Base of Aircraft Data (BADA) aircraft performance modelling report. EEC Technical/Scientific

Report 9, 1–68.

Ruan, J., Wang, Z., Chan, F.T., Patnaik, S., Tiwari, M.K., 2021. A reinforcement learning-based algorithm for

the aircraft maintenance routing problem. Expert Systems with Applications 169, 114399.

Šemrov, D., Marsetič, R., Žura, M., Todorovski, L., Srdic, A., 2016. Reinforcement learning approach for

train rescheduling on a single-track railway. Transportation Research Part B: Methodological 86, 250–267.

Teodorović, D., Guberinić, S., 1984. Optimal dispatching strategy on an airline network after a schedule

perturbation. European Journal of Operational Research 15, 178–182.

Teodorović, D., Stojković, G., 1995. Model to reduce airline schedule disturbances. Journal of Transportation

Engineering 121, 324–331.

Thengvall, B.G., Bard, J.F., Yu, G., 2003. A bundle algorithm approach for the aircraft schedule recovery

problem during hub closures. Transportation Science 37, 392–407.

Wang, K., Jacquillat, A., 2020. A stochastic integer programming approach to air traffic scheduling and

operations. Operations Research 68, 1375–1402.

Highlights

1) A decision recommendation framework for efficient airline disruption recovery is proposed.

2) We develop a solution framework combining Deep RL with Variable Neighborhood Search.

3) Experiments on synthetic datasets and real airline data are reported.

4) Our heuristic shows significant reductions in runtime at acceptable solution qualities.

5) We contribute to efforts of airlines in seeking efficient and cost-effective recovery solutions.

Towards Efficient Airline Disruption Recovery with Reinforcement Learning

Abstract

Disruptions to airline schedules precipitate flight delays/cancellations and significant losses for

airline operations. The goal of the integrated airline recovery problem is to develop an operational

tool that provides the airline with an instant and cost-effective solution concerning aircraft, crew

members and passengers in the face of emerging disruptions. In this paper, we formulate a decision

recommendation framework which incorporates various recovery decisions including aircraft and

crew rerouting, passenger reaccommodation, departure holding, flight cancellation and cruise speed

control. Given the computational hardness of solving the mixed-integer nonlinear programming

(MINP) model with a commercial solver (e.g., CPLEX), we establish a novel solution framework

by incorporating Deep Reinforcement Learning (DRL) into the Variable Neighborhood Search (VNS)

algorithm with well-designed neighborhood structures and a state evaluator. We utilize Proximal

Policy Optimization (PPO) to train the stochastic policy used to select neighborhood operations given the current state throughout the Markov Decision Process (MDP). Experimental results

show that the objective value generated by our approach is within a 1.5% gap with respect to the

optimal/close-to-optimal objective of the CPLEX solver for the small-scale instances, with a significant improvement in runtime. The pre-trained DRL agent can leverage features/weights

obtained from the training process to accelerate convergence of the objective and further

improve solution quality, which exhibits the potential of achieving Transfer Learning (TL). Given

the inherent intractability of the problem on practical size instances, we propose a method to control the size of the DRL agent’s action space to allow for an efficient training process. We believe our

study contributes to the efforts of airlines in seeking efficient and cost-effective recovery solutions.

Keywords: Airline Scheduling, Disruptions, Deep Reinforcement Learning

1. Introduction

Deviation from an optimized airline schedule leads to significant losses for airlines. While causes

for such deviations are manifold, we discuss a few of these here. One of the major drivers of airline

delays and cancellations is adverse weather conditions, which prevent aircraft from being operated in a

normal environment. A second factor for disruption is congestion, particularly at hub airports operated under tight operational constraints. Such congestion may appear across the entire terminal

layout, including passenger security lanes, boarding, runway congestion, and others. Third, human

and mechanical failures can lead to an inability to operate aircraft as planned. For instance, such

cases include the sudden unavailability of sufficient crew members for a flight or the malfunction of

parts of an aircraft. These examples of disruption scenarios can all be described as rather local in

their initial effect. Nevertheless, the ultimate extent of such a local phenomenon can reach far beyond

the initial scale, as failures and delays easily propagate downstream in the aviation system. This

propagation can possibly be explained by the combination of two major factors. First, most traditional airlines operate according to a hub-and-spoke model, where aircraft are routed through a set

March 3, 2023


of centralized airports in a small number of cities. Such aggregation leads to so-called economies of

scale and allows airlines to cost-effectively serve a wider range of markets (O’Kelly and Bryan, 1998;

Campbell and O’Kelly, 2012). The presence of these single points of failure leads to a high degree

of vulnerability (O’Kelly, 2015). Given that hubs are under high operational pressure and have

significant potential for congestion, the system is inherently susceptible to disruptions. Second,

given rising fuel costs, increasing competition from low-cost carriers, and various other adverse

factors, the airline business is under high economic pressure (Dennis, 2007; Zuidberg, 2014).

Accordingly, airline schedules are typically highly optimized with the goal of maximizing the airline’s

profit under ideal conditions. Deviations from these ideal conditions inevitably lead to mismatches

across the global usage of the airline’s resources.

Given that disruptions are extremely costly for airlines, with an estimated cost of 60 billion

per year - equivalent to eight percent of the worldwide airline revenue (Serrano and Kazda, 2017),

recent studies on airline scheduling have increasingly investigated the problems arising from disruptions (Janić, 2005; Kohl et al., 2007; Clausen et al., 2010; Sun and Wandelt, 2018; Rapajic,

2018; Ogunsina and Okolo, 2021). Conceptually, a disruption can be divided into two phases from

the perspective of an airline. The first phase covers the emergency-like event of the disruption taking place and the

initial response of an airline. For example, such an event can be the closure of a runway, which

reduces the airport’s capacity if multiple runways are present. During the emergence phase, the

impact of the disruptions begins to turn from local to global. Accordingly, this phase is followed by

a time of recovery, in which the airline makes decisions to reduce the overall impact on the airline

and its passengers, involving passenger rebooking, aircraft rescheduling, and other actions.

In this study, we formulate a problem for integrated airline recovery and perform a thorough

investigation of how to solve the problem effectively for large-scale instances. The underlying model

is expressive in that it incorporates aircraft and crew rerouting, passenger reaccommodation,

departure holding, and flight cancellation, while also considering aircraft cruise speed decisions. Given the

computational hardness of the model, we develop a set of solution techniques. First, following the

existing literature on related problems, we develop an exact solution technique using a programming

solver (CPLEX) with adequate preprocessing techniques. Second, we design a heuristic based on

Variable Neighborhood Search (VNS) which iteratively improves the best-known solution through

the enumeration and exploration of neighborhoods. Third, we develop a novel VNS guidance

framework based on Deep Reinforcement Learning (DRL), where neighborhood operations are

selected based on the policy obtained from reinforcement learning. These policies are learned ad hoc

for the problem instance at hand. We perform a rich set of computational experiments, which

highlight the efficiency and effectiveness of the reinforcement learning-based solution technique.
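
The idea of letting a stochastic policy choose the neighborhood operation in each VNS iteration can be illustrated with a minimal sketch. Everything below is an assumption made for illustration only: the toy objective (counting inversions in a sequence), the two operators `swap` and `reinsert`, and the fixed preference vector `prefs`, which merely stands in for a policy that would in practice be trained (e.g., with PPO) and conditioned on the current state. It is not the implementation used in this study.

```python
import math
import random


def softmax(prefs):
    """Turn raw operator preferences into selection probabilities."""
    peak = max(prefs)
    exps = [math.exp(p - peak) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def swap(seq, rng):
    """Hypothetical neighborhood operator: exchange two positions."""
    i, j = rng.sample(range(len(seq)), 2)
    out = list(seq)
    out[i], out[j] = out[j], out[i]
    return out


def reinsert(seq, rng):
    """Hypothetical neighborhood operator: move one element elsewhere."""
    out = list(seq)
    item = out.pop(rng.randrange(len(out)))
    out.insert(rng.randrange(len(out) + 1), item)
    return out


def inversions(seq):
    """Toy objective: number of out-of-order pairs (0 means sorted)."""
    n = len(seq)
    return sum(1 for i in range(n) for j in range(i + 1, n) if seq[i] > seq[j])


def guided_vns(initial, cost, operators, prefs, iterations=200, seed=0):
    """Sample an operator from the policy, apply it to the incumbent,
    and accept the candidate only if it strictly lowers the cost."""
    rng = random.Random(seed)
    probs = softmax(prefs)  # fixed here; a trained policy would depend on the state
    best, best_cost = list(initial), cost(initial)
    for _ in range(iterations):
        op = rng.choices(operators, weights=probs, k=1)[0]
        candidate = op(best, rng)
        candidate_cost = cost(candidate)
        if candidate_cost < best_cost:
            best, best_cost = candidate, candidate_cost
    return best, best_cost
```

Calling `guided_vns([5, 3, 4, 1, 2, 0], inversions, [swap, reinsert], prefs=[0.0, 1.0])` returns an incumbent whose cost is never worse than that of the starting sequence. Replacing the fixed `prefs` with the output of a learned, state-dependent policy is the step that a DRL-guided variant would add.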

While the primary focus of this study is related to the algorithmic approach to solving integrated

airline recovery problems, it also provides multiple extensions on existing literature regarding data

acquisition and model formulation, summarized below. First, we develop a set of compact algorithms to generate simulated airline operational datasets in an integrated manner, including flight

schedule, crew pairing and passenger itinerary. Despite the relatively low resolution of these simulated datasets as compared with the existing literature on airline scheduling, these datasets are

well-tailored for the airline disruption recovery model and subject to most of the regular constraints

in airline operations. These algorithms can also generate datasets of various scales, which supports

the scalability analysis of our proposed solution methodology. Second, we further introduce a set of

realistic constraints regarding the crew pairing process and passenger itinerary generation, supplementing the work of Arıkan et al. (2017). The introduced constraints related to crew duty include


minimum/maximum sit-times between consecutive flights, maximum total flying time, maximum

duty time (i.e., flying times and the sit-times in duty) and maximum number of landings. The

introduced constraints related to passenger itinerary include minimum/maximum transfer time between consecutive flights and maximum number of connecting flights. The major contributions of

this study are summarized as follows:

• We develop a set of compact algorithms to generate realistic datasets tailored to the integrated

airline disruption recovery problem.

• We design a VNS-based heuristic which iteratively improves the best-known solution through

the enumeration and exploration of neighborhoods.

• We develop a novel DRL-based VNS guidance framework which selects neighborhood operations based on the pre-trained policy, and we demonstrate its efficiency and accuracy through

comparison with CPLEX results and VNS baseline results.
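
The crew-duty constraints listed earlier in this section (minimum/maximum sit-times, maximum total flying time, maximum duty time and maximum number of landings) can be made concrete with a small feasibility check. The sketch below is illustrative only: the class names, the minute-based time representation and all numeric limits are hypothetical placeholders and are not the parameter values used in this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flight:
    dep: int  # departure time in minutes (assumed representation)
    arr: int  # arrival time in minutes


@dataclass(frozen=True)
class DutyLimits:
    # All limits are hypothetical placeholder values, in minutes or counts.
    min_sit: int = 30
    max_sit: int = 240
    max_fly: int = 480
    max_duty: int = 720
    max_landings: int = 4


def duty_violations(flights, limits=DutyLimits()):
    """Return the names of the crew-duty limits violated by a
    chronologically ordered list of flights (empty list if feasible)."""
    violations = []
    if len(flights) > limits.max_landings:
        violations.append("max_landings")
    # Total flying time over all flights in the duty.
    fly = sum(f.arr - f.dep for f in flights)
    if fly > limits.max_fly:
        violations.append("max_fly")
    # Sit-time is the ground time between consecutive flights.
    sits = [nxt.dep - cur.arr for cur, nxt in zip(flights, flights[1:])]
    if any(s < limits.min_sit for s in sits):
        violations.append("min_sit")
    if any(s > limits.max_sit for s in sits):
        violations.append("max_sit")
    # Duty time is flying time plus the sit-times within the duty.
    if fly + sum(sits) > limits.max_duty:
        violations.append("max_duty")
    return violations
```

For example, `duty_violations([Flight(480, 600), Flight(610, 730)])` reports `["min_sit"]`, because the 10-minute connection is shorter than the assumed 30-minute minimum. The passenger-itinerary constraints (transfer-time bounds and a cap on connecting flights) have the same structure and could be checked analogously.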

The remainder of this study is structured as follows. Section 2 reviews the literature concerning

airline disruption recovery. Section 3 introduces the model formulation and provides the relevant

intuition for the model through a synthesized example. Section 4 develops the three solution

techniques compared in this study, including an exact algorithm and two heuristics. Section 5

reports on a wide range of computational experiments which explore the trade-off between solution

optimality and computation time. Section 6 concludes this study and discusses a set of directions

for future work.

2. Literature Review

The modelling and computational techniques for airline disruption management have developed

rapidly in the literature. Previous efforts typically addressed irregular operations in

a sequential manner, such that issues related to the aircraft fleet, crew, and passengers are resolved one after another (Barnhart, 2009). Section 2.1 first discusses research on the aircraft recovery