Uploaded by

Boitumelo Rethabile

Data-Intensive Applications: Reliability & Scalability


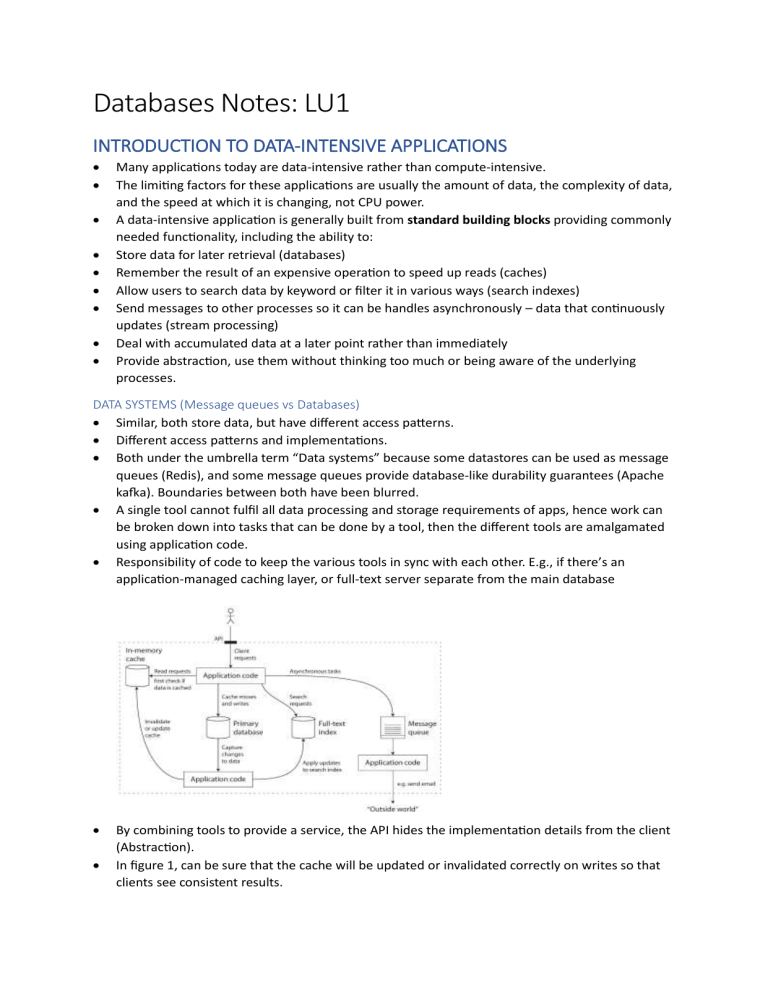

Databases Notes: LU1

INTRODUCTION TO DATA-INTENSIVE APPLICATIONS
Many applications today are data-intensive rather than compute-intensive. The limiting factors for these applications are usually the amount of data, the complexity of the data and the speed at which it is changing, not CPU power.
A data-intensive application is generally built from standard building blocks that provide commonly needed functionality, including the ability to:
o Store data so that it can be retrieved later (databases).
o Remember the result of an expensive operation to speed up reads (caches).
o Allow users to search data by keyword or filter it in various ways (search indexes).
o Send messages to other processes so that they can be handled asynchronously, as data continuously arrives (stream processing).
o Periodically deal with a large amount of accumulated data at a later point rather than immediately (batch processing).
These building blocks are good abstractions: we use them without thinking too much about, or even being aware of, the underlying processes.

DATA SYSTEMS (Message queues vs Databases)
Message queues and databases are similar in that both store data, but they have very different access patterns, and therefore different implementations.
Both fall under the umbrella term "data systems" because the boundaries between them have blurred: some datastores can be used as message queues (Redis), and some message queues provide database-like durability guarantees (Apache Kafka).
A single tool cannot fulfil all of an application's data processing and storage requirements. Instead, the work is broken down into tasks that a single tool can perform well, and the different tools are stitched together using application code.
It is then the responsibility of the application code to keep the various tools in sync with each other, e.g., when there is an application-managed caching layer, or a full-text search server separate from the main database.
When tools are combined to provide a service, the service's API hides the implementation details from the client (abstraction). In Figure 1, the combined system can guarantee that the cache is updated or invalidated correctly on writes, so that clients see consistent results.
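A minimal sketch of the last point, assuming plain Python dicts stand in for the main database and an application-managed cache: one service API hides both stores and invalidates the cache on every write, so clients see consistent results. The class name `UserService` and the sample keys are hypothetical, and this shows one common approach (cache-aside with invalidation on write), not the only way to keep the tools in sync.

```python
from typing import Dict, Optional


class UserService:
    """Hypothetical service hiding a database and a cache behind one API.

    Both stores are plain dicts here; in a real system they would be
    separate tools, e.g. a database server and an in-memory cache.
    """

    def __init__(self) -> None:
        self._db: Dict[str, str] = {}     # stand-in for the main database
        self._cache: Dict[str, str] = {}  # stand-in for the caching layer
        self.cache_hits = 0

    def get(self, key: str) -> Optional[str]:
        # Read path: try the cache first, fall back to the database.
        if key in self._cache:
            self.cache_hits += 1
            return self._cache[key]
        value = self._db.get(key)
        if value is not None:
            self._cache[key] = value  # populate the cache for later reads
        return value

    def put(self, key: str, value: str) -> None:
        # Write path: update the database, then invalidate the cached copy
        # so the next read fetches the new value instead of a stale one.
        self._db[key] = value
        self._cache.pop(key, None)


service = UserService()
service.put("alice", "Cape Town")
print(service.get("alice"))  # cache miss: loaded from the database
print(service.get("alice"))  # cache hit
service.put("alice", "Johannesburg")
print(service.get("alice"))  # entry was invalidated, so the new value is read
```

The client never touches `_db` or `_cache` directly, so the invalidation rule lives in one place instead of being repeated in every caller.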
FACTORS THAT INFLUENCE THE DESIGN OF A DATA SYSTEM
o Skills and experience of the people involved
o Legacy system dependencies
o Time scale for delivery
o Organisation's tolerance for risk

RELIABILITY
The system should continue to work correctly (performing the correct function at the desired level of performance) even in the face of adversity (hardware or software faults, and human error).

EXPECTATIONS (Working Correctly)
o The application performs the functions that the user expects.
o It can tolerate the user making mistakes or using it in unexpected ways.
o Its performance is good enough for the required use case, under the expected load and data volume.
o The system prevents unauthorised access.

FAULTS (What could go wrong)
Fault: one component of the system deviating from its spec.
Failure: the system as a whole stops providing the required service.
A resilient system anticipates faults and can cope with them. The term "fault-tolerant" can suggest that a system tolerates every possible fault, but in reality that is not feasible.
The probability of a fault can never be zero, so fault-tolerance mechanisms must be designed to prevent faults from causing failures.
Faults can be triggered deliberately to find bugs in error handling. This ensures that the fault-tolerance machinery is continually exercised and tested, which increases confidence that faults will be handled correctly when they occur naturally.

HARDWARE FAULTS
o Hard disks crash, RAM becomes faulty, someone unplugs the wrong network cable, there is a power outage, etc.
o Add redundancy to individual hardware components to reduce the failure rate of the system. When one component dies, the redundant component takes its place while the broken component is being repaired. Examples (for apps where availability is essential):
- Set up disks in a RAID configuration.
- Dual power supplies for servers.
- Hot-swappable CPUs.
- Backup power and generators for data centres.
o Apps now use larger numbers of machines (because of increased data volumes and computing demands), which increases the rate of hardware faults. Use software fault-tolerance techniques in addition to hardware redundancy, so that the system can tolerate the loss of entire machines.
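The redundancy idea above can also be applied in software: keep several copies and fall back to another one when a copy fails. In this sketch (all names hypothetical), each replica is just a function, and faults are injected deliberately through a `fail_rate` parameter, echoing the advice to trigger faults on purpose so that the fault-tolerance path is actually exercised. It assumes any copy can serve a read; keeping the copies consistent with each other is ignored here.

```python
import random
from typing import Callable, List


class DiskFailure(Exception):
    """Raised by a replica that has (deliberately or really) failed."""


def make_replica(name: str, fail_rate: float, rng: random.Random) -> Callable[[], str]:
    # Hypothetical replica: returns the stored value, or raises to simulate
    # a fault. fail_rate is the probability of an injected fault per read.
    def read() -> str:
        if rng.random() < fail_rate:
            raise DiskFailure(f"{name} failed")
        return f"value from {name}"
    return read


def read_with_failover(replicas: List[Callable[[], str]]) -> str:
    # Try each redundant copy in turn; the read only fails if every copy fails.
    errors = []
    for replica in replicas:
        try:
            return replica()
        except DiskFailure as exc:
            errors.append(str(exc))
    raise RuntimeError("all replicas failed: " + "; ".join(errors))


rng = random.Random(42)  # fixed seed so runs are repeatable
replicas = [
    make_replica("primary", fail_rate=1.0, rng=rng),  # always faulty
    make_replica("backup", fail_rate=0.0, rng=rng),   # always healthy
]
print(read_with_failover(replicas))  # the fault in "primary" is tolerated
```

Setting `fail_rate` between 0 and 1 on every replica turns the same code into a small randomised fault-injection test.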
SOFTWARE ERRORS
o Systematic errors within the system. They are harder to anticipate than hardware faults, and because they are correlated across nodes, they tend to cause more system failures than uncorrelated hardware faults. E.g.:
- A software bug that causes every instance of an application server to crash when given a particular bad input.
- A runaway process that uses up some shared resource (memory, disk space or network bandwidth).
- A service that the system depends on slows down and/or becomes unresponsive.
o Cascading failures: a small fault in one component triggers a fault in another component, which in turn triggers further faults.
Solutions:
- Thorough testing.
- Process isolation.
- Allowing processes to crash and restart.
- Measuring, monitoring and analysing system behaviour.

HUMAN ERRORS
Humans are unreliable, so the system must be made reliable in spite of this:
o Design systems in a way that minimises opportunities for error. Well-designed abstractions (APIs) make it easy to do the right thing. However, if the interfaces are too restrictive, people will find ways to work around them, nullifying their benefit.
o Decouple the places where people make the most mistakes from the places where they can cause failures. Provide a non-production environment (sandbox) where people can experiment safely, using real data, without affecting the running system.
o Test thoroughly at all levels. Automated testing is especially useful for covering corner cases that rarely arise in normal operation.
o Allow quick and easy recovery from human errors to minimise their impact. Make rolling back configuration changes fast, and roll out new code gradually so that any unexpected bugs affect only a small subset of users.
o Set up detailed and clear monitoring, e.g., performance metrics and error rates. Monitoring can show early warning signals so that problems can be attended to as soon as possible.
o Implement good management practices and training.
Bugs in applications cause lost productivity, which harms organisations; developers therefore have a responsibility to ensure that user data is not corrupted.
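Rolling out new code gradually is often done by enabling it for a fixed percentage of users. A minimal sketch, assuming users have string ids and that a stable hash is an acceptable way to assign them to buckets; the function name `in_rollout` is hypothetical and not taken from any particular feature-flag library.

```python
import hashlib


def in_rollout(user_id: str, percent: int) -> bool:
    """Return True if this user should get the new code path.

    Hashing the user id gives each user a stable bucket in 0..99, so the
    same user keeps seeing the same version while the percentage grows.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < percent


users = [f"user-{i}" for i in range(10_000)]
for percent in (1, 10, 50, 100):
    enabled = sum(in_rollout(u, percent) for u in users)
    print(f"{percent:>3}% rollout -> {enabled} of {len(users)} users on new code")
```

Because a user's bucket never changes, raising the percentage only adds users to the new version, and setting it back to 0 acts as a quick rollback.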
SCALABILITY
There should be reasonable ways of dealing with the growth of the system (in data volume, traffic volume or complexity). Scalability is the ability of a system to cope with increased load. The questions to ask are: if the system grows in a particular way, what are the options for coping with the growth, and how can computing resources be added to handle the additional load?

LOAD
Load is described by load parameters; the best choice of parameters depends on the system's architecture. Examples:
- Requests per second to a web server
- Ratio of reads to writes in a database
- Number of simultaneously active users in a chat room

PERFORMANCE
Investigate what happens when load increases:
- When a load parameter increases and system resources are kept unchanged, what happens to performance?
- When a load parameter increases, how much do resources need to increase to keep performance the same?
In a batch processing system, performance usually means throughput: the number of records processed per second, or the total time taken to run a job on a dataset of a certain size.
In online systems, what matters is the service's response time: the time between a client sending a request and receiving a response. Note that the same request does not get the same response time every time:
- Response time is therefore a distribution of values that can be measured, not a single number.
- It varies because of random additional latency, e.g., a context switch to a background process, the loss of a network packet, etc.
- The average response time is often reported, but it is not a good measure of the "typical" response time, because it does not tell you how many users actually experienced a delay.
- Percentiles are better. Sort the response times from fastest to slowest: if the median (50th percentile) response time is 200 ms, half of the requests return in less than 200 ms and the other half take longer.
- Look at higher percentiles (95th, 99th, 99.9th), known as tail latencies, to see how bad the outliers are. If the 95th percentile is 1.5 s, then 95 out of 100 requests take less than 1.5 s, and 5 out of 100 take 1.5 s or more.
- Tail latencies are important because they directly affect users' experience. Amazon describes the response time requirements for its internal services in terms of the 99.9th percentile.
- The customers with the slowest requests are often those with the most data on their accounts because they have made many purchases, i.e., the most valuable customers.
- Reducing response times at very high percentiles is difficult because they are easily affected by random events outside your control.
- Percentiles are used in Service Level Objectives (SLOs) and Service Level Agreements (SLAs), which define the expected performance and availability of a service.
Queueing delays often account for a large part of the response time at high percentiles. A server can only process a small number of things in parallel, so it only takes a small number of slow requests to hold up the processing of subsequent requests, an effect known as head-of-line blocking. Even if the subsequent requests are fast to process, the client will see a slow overall response time because of the time spent waiting for the prior requests to complete. This is why response times must be measured on the client side.

COPING WITH LOAD
o Scaling up (vertical scaling): moving to a more powerful machine.
o Scaling out (horizontal scaling): distributing the load across multiple smaller machines, also known as a shared-nothing architecture.
Taking a stateful data system from a single node to a distributed setup can introduce a lot of complexity. Therefore, scale up until scaling cost (or high-availability requirements) forces the system to be distributed.
o Elastic systems automatically add computing resources when they detect an increase in load. This is useful if the load is unpredictable.
o Scaling manually involves a human analysing the system's capacity and deciding to add more machines. This is simpler.
An architecture that scales well is built around assumptions about which operations will be common and which will be rare. There is no one-size-fits-all scalable architecture: scaling is specific to a particular application.

MAINTAINABILITY
Other people should be able to work on the system productively, both maintaining it and adapting it to new use cases. Design software in a way that minimises problems during maintenance, thus avoiding the creation of legacy software.

OPERABILITY
Make it easy for operations teams to keep the system running smoothly.
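Keeping a system running smoothly depends on good monitoring. As a sketch, assuming the service records each request's response time and whether it failed, the hypothetical `ServiceMonitor` below keeps a sliding window of recent requests and reports the error rate alongside the mean and the response-time percentiles discussed under PERFORMANCE. Percentiles use the simple nearest-rank method; real monitoring systems often use approximate structures (e.g., HdrHistogram or t-digest) instead of sorting every sample. The traffic numbers are made up for illustration.

```python
import math
from collections import deque
from statistics import mean
from typing import Deque, Tuple


class ServiceMonitor:
    """Keep the most recent `window` requests and summarise them.

    Each entry is (response_time_ms, failed). Old entries drop out
    automatically, so the summary reflects recent behaviour only.
    """

    def __init__(self, window: int = 1000) -> None:
        self._samples: Deque[Tuple[float, bool]] = deque(maxlen=window)

    def record(self, response_ms: float, failed: bool = False) -> None:
        self._samples.append((response_ms, failed))

    def percentile(self, p: float) -> float:
        # Nearest-rank method: sort, then take the value at rank ceil(p% of n).
        ordered = sorted(ms for ms, _ in self._samples)
        if not ordered:
            raise ValueError("no samples recorded")
        rank = max(1, math.ceil(p * len(ordered) / 100))
        return ordered[rank - 1]

    def mean_ms(self) -> float:
        return mean(ms for ms, _ in self._samples)

    def error_rate(self) -> float:
        if not self._samples:
            return 0.0
        return sum(failed for _, failed in self._samples) / len(self._samples)


monitor = ServiceMonitor(window=100)
# Hypothetical traffic: 90 fast, 5 medium and 4 slow requests,
# plus 1 very slow request that also failed.
for ms in [100.0] * 90 + [200.0] * 5 + [1500.0] * 4:
    monitor.record(ms)
monitor.record(5000.0, failed=True)

print("mean  :", monitor.mean_ms())       # 210.0, pulled up by the outliers
print("p50   :", monitor.percentile(50))  # 100.0
print("p95   :", monitor.percentile(95))  # 200.0
print("p99   :", monitor.percentile(99))  # 1500.0
print("errors:", monitor.error_rate())    # 0.01
```

Here the mean (210 ms) is more than double the median (100 ms), while the p99 exposes the slow tail that the mean hides.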
A good operations team is typically responsible for:
o Monitoring the health of the system and quickly restoring service when it goes into a bad state.
o Tracking down the cause of problems, such as system failures and/or degraded performance.
o Keeping software and platforms up to date.
o Keeping tabs on how different systems affect each other.
Good operability means making routine tasks easy, allowing the operations team to focus their efforts on high-value activities.
Things data systems can do to make routine tasks easy:
o Provide good visibility into the runtime behaviour and internals of the system, with good monitoring.
o Avoid dependency on individual machines (so that machines can be taken down for maintenance while the system as a whole keeps running).
o Provide good support for automation and integration with standard tools.
o Exhibit predictable behaviour, minimising surprises.
o Self-heal where appropriate, while also giving administrators manual control over the system state when needed.

SIMPLICITY
Make it easy for new engineers to understand the system by removing complexity.
Complexity in large projects slows down everyone who has to work on the system and increases the cost of maintenance.
Symptoms of complexity:
- Explosion of the state space
- Tight coupling of modules
- Tangled dependencies
- Inconsistent naming and terminology
More complexity means a greater risk of introducing bugs when making a change, because new developers do not understand the system's hidden assumptions.
Simplicity does not mean reducing functionality; it means removing accidental complexity (complexity that is not inherent in the problem the software solves, but arises only from the implementation).
Tools for removing accidental complexity:
- Abstraction: hide implementation details behind a simple-to-understand façade. The abstraction can then be reused instead of implementing a similar thing multiple times, which leads to higher-quality applications.
- High-level programming languages are abstractions that hide machine code, CPU registers and syscalls.

EVOLVABILITY
Making change easy, also known as agility. Agile working patterns provide a framework for adapting to change.
The Agile community has developed technical tools and patterns that help when developing software in a frequently changing environment, such as TDD (test-driven development) and refactoring.
The ease with which a data system can be modified and adapted to change is closely linked to its simplicity and its abstractions: simple, easy-to-understand systems are easier to modify.
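The link between abstraction and ease of change can be illustrated with a small façade. In this sketch, the `KeyValueStore` protocol, both implementations and `rename_user` are hypothetical: application code talks only to `get()` and `put()`, so the storage implementation can be swapped without touching the caller.

```python
from typing import Dict, List, Optional, Protocol, Tuple


class KeyValueStore(Protocol):
    """Hypothetical storage façade: callers only see get() and put()."""

    def get(self, key: str) -> Optional[str]: ...

    def put(self, key: str, value: str) -> None: ...


class InMemoryStore:
    """Implementation 1: a plain dictionary, latest value per key."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def put(self, key: str, value: str) -> None:
        self._data[key] = value


class AppendOnlyLogStore:
    """Implementation 2: every write is appended to a log, and a read scans
    backwards for the most recent entry. Slower reads, full history kept."""

    def __init__(self) -> None:
        self._log: List[Tuple[str, str]] = []

    def get(self, key: str) -> Optional[str]:
        for k, v in reversed(self._log):
            if k == key:
                return v
        return None

    def put(self, key: str, value: str) -> None:
        self._log.append((key, value))


def rename_user(store: KeyValueStore, user_id: str, new_name: str) -> str:
    # Application code depends only on the façade, not on an implementation.
    old = store.get(user_id) or "<unknown>"
    store.put(user_id, new_name)
    return f"{old} -> {new_name}"


for store in (InMemoryStore(), AppendOnlyLogStore()):
    store.put("u1", "Alice")
    print(type(store).__name__, rename_user(store, "u1", "Alicia"))
```

Switching from one store to the other changes only the line that constructs it; `rename_user` stays the same, which is the kind of evolvability the notes attribute to good abstractions.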