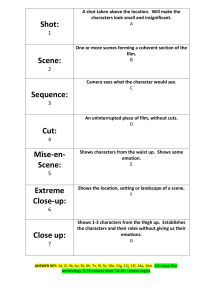

The Visual Story: Creating the Visual Structure of Film, TV and Digital Media

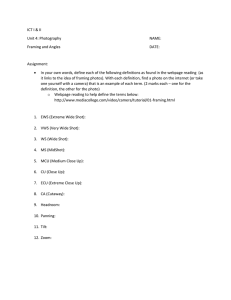
