Learning with Graphs - CS768

Lecture 1: Review of Machine Learning

5 August 2023

Lecturer: Abir De

Scribe: JV Aditya, Vaibhav

This lecture starts with an overview of Machine Learning and discusses how the basic principles apply (or don’t) to graphs. It proposes the basic task of Link Prediction and presents the challenge of correctly demarcating a train-test split given one large ground-truth graph.

1 The Task

The standard Machine Learning task involves processing a set of features to obtain a desired result according to a given specification. Concretely, given a test dataset of features $D_{\text{test}} = \{x_i\}$, we need to predict $f(x_i)$, where $f$ is the specification. Often, it is not possible to encode $f$ directly in a standard functional form, so we resort to learning $f$ from additional data that follows the same specification. In other words, we use a training dataset $D_{\text{train}} = \{(x_i, y_i)\}$ to learn $f$. The $y_i$'s are the expected outputs when the respective $x_i$'s are used as inputs, and are referred to as labels.

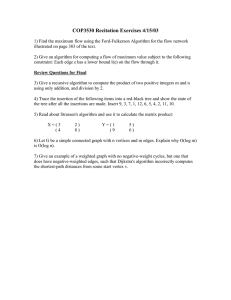

Figure 1: Machine Learning Pipeline

The model, objective and optimizer need to be carefully designed to learn the function $f$ accurately from the training data. The model is usually domain-specific: LSTM or Transformer for text, CNN for images, or GNN for graphs.
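As a minimal sketch of how the three components fit together, the following Python snippet fits a one-dimensional linear model with a mean squared error objective and plain gradient descent. The toy data, the squared-error objective, and all function names are assumptions made for illustration; they are not taken from the lecture.

```python
# Toy training data following the (assumed) specification y = 2x + 1.
train = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

def model(params, x):
    """Model: a linear function with parameters (w, b)."""
    w, b = params
    return w * x + b

def objective(params, data):
    """Objective: mean squared error over the dataset (an assumed choice)."""
    return sum((model(params, x) - y) ** 2 for x, y in data) / len(data)

def gradient(params, data):
    """Gradient of the objective with respect to (w, b)."""
    n = len(data)
    gw = sum(2 * (model(params, x) - y) * x for x, y in data) / n
    gb = sum(2 * (model(params, x) - y) for x, y in data) / n
    return gw, gb

def fit(data, lr=0.05, steps=2000):
    """Optimizer: plain gradient descent starting from (0, 0)."""
    params = (0.0, 0.0)
    for _ in range(steps):
        gw, gb = gradient(params, data)
        params = (params[0] - lr * gw, params[1] - lr * gb)
    return params

params = fit(train)
prediction = model(params, 4.0)  # prediction on an unseen input
print(params, prediction)
```

Swapping any one of the three functions (for example, a different model family) leaves the other two untouched, which is the separation the pipeline figure suggests.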

2 Designing the Objective

Our aim is to design a suitable loss function $L$ whose minimization leads to accurate performance on the test dataset. The optimization problem can be framed as

$$\min_{f} \sum_{(x,y) \in D_{\text{test}}} L(f(x), y) \tag{1}$$

This optimization is over a space of functions parametrized as per the architecture being used. The function $f$ is expected to be continuous and well-behaved in general.

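The most desirable objective is the fraction of test inputs that map exactly to the desired output; equivalently, we minimize the number of inputs for which $f(x)$ differs from $y$:

$$\min_{f} \sum_{(x,y) \in D_{\text{train}}} \mathbb{I}[y \neq f(x)] \tag{2}$$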

Because we don’t have access to the $y_i$'s from the test dataset, we can try optimizing the same objective on a training dataset. However, that is not realistic: the indicator is discontinuous, so changing $f$ slightly can change the objective’s value abruptly. In fact, it is possible to make the problem NP-hard for adversarially constructed datasets. This hardness arises from the function space being too large. The following directions can help us mitigate this issue:

• $f$ should be parametrized with a finite number of parameters.

• $\mathbb{I}[y \neq f(x)]$ should be replaced by a suitable continuous and tractable surrogate.
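The difficulty with optimizing the indicator objective directly can be seen on a tiny example. The sketch below uses a made-up one-dimensional dataset and a hypothetical one-parameter threshold classifier (neither comes from the lecture) and evaluates the objective in (2) for several parameter values.

```python
# Made-up 1-D training set with labels in {-1, +1}.
train = [(-2.0, -1), (-1.0, -1), (-0.2, 1), (0.5, 1), (1.5, 1)]

def predict(threshold, x):
    """Hypothetical one-parameter classifier: +1 if x > threshold, else -1."""
    return 1 if x > threshold else -1

def zero_one_objective(threshold, data):
    """Number of training points with y != f(x), as in objective (2)."""
    return sum(1 for x, y in data if predict(threshold, x) != y)

# Moving the threshold inside a gap between data points changes nothing,
# so the objective gives no signal about which direction to move in...
plateau = [zero_one_objective(t, train) for t in (-0.9, -0.6, -0.3)]

# ...but nudging it across the data point x = -0.2 makes the objective jump.
jump = (zero_one_objective(-0.21, train), zero_one_objective(-0.19, train))

print(plateau, jump)  # prints: [0, 0, 0] (0, 1)
```

The objective is piecewise constant in the parameter: flat almost everywhere and discontinuous at a few points, which is why a continuous surrogate is preferred.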

Let us limit our scope to the classification task. Specifically, assume that $y_i \in \{-1, 1\}$. We also wish to similarly constrain $f(x)$ to $[-1, 1]$. Because $f$ is supposed to be continuous, we can’t constrain it to the binary range $\{-1, 1\}$. The following candidates can be considered:

1. Mean Absolute Error - $\sum_{(x,y) \in D_{\text{train}}} |y - f(x)|$

The motivation for this loss is that we want to penalize an instance by how far the predicted label is from the expected label on the real line. Whenever $f(x) > 0$, we classify the input with the positive label $+1$; otherwise we assign it the negative label $-1$.

Certain properties of this objective make it undesirable. In particular, suppose that for some input $x$, the true label is $y = +1$.

• If $f(x) = 0^+$ (slightly above 0), the loss is $1^-$ (slightly below 1), while the ideal loss according to the identity (0-1 indicator) objective specified in (2) above would be 0, because the prediction is correct.

• If $f(x) = 0^-$ (slightly below 0), the loss is $1^+$ (slightly above 1), while the ideal loss according to the identity objective is 1, because the prediction is incorrect.

The ideal loss jumps from 0 to 1 between these two cases, while the MAE objective treats them as virtually the same. The MAE objective doesn’t simulate the identity objective well and hence isn’t a good surrogate.

2. Hinge Loss - $\sum_{(x,y) \in D_{\text{train}}} \max(0, 1 - y f(x))$

This loss tries to enforce a margin on the predictions. For an input with label $+1$, it requires the prediction to be made with a score of $+1$ or higher, which leads to a loss of 0. Otherwise, the loss increases linearly as $f(x)$ moves towards negative values. The case of label $-1$ is symmetric.

Because this loss simulates the jump in the identity loss using the margin, it acts as a great surrogate.
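To make the comparison concrete, the following sketch evaluates the three per-example losses for a single input with true label $y = +1$ at a few scores. The function names and the grid of scores are chosen here for illustration only.

```python
def zero_one(y, score):
    """Identity objective from (2), with the rule: predict +1 iff score > 0."""
    predicted = 1 if score > 0 else -1
    return 0 if predicted == y else 1

def mae(y, score):
    """Absolute error between the label and the real-valued score."""
    return abs(y - score)

def hinge(y, score):
    """Hinge loss: zero once y * score reaches the margin of 1."""
    return max(0.0, 1.0 - y * score)

y = 1  # true label
rows = [(s, zero_one(y, s), mae(y, s), hinge(y, s))
        for s in (-1.0, -0.01, 0.01, 1.0, 2.0)]

for score, ideal, l_mae, l_hinge in rows:
    print(f"f(x)={score:+.2f}  0-1={ideal}  MAE={l_mae:.2f}  hinge={l_hinge:.2f}")
```

In this sketch the 0-1 loss flips between the scores $-0.01$ and $+0.01$, while the MAE value only moves from about 1.01 to 0.99. The hinge loss is exactly 0 for scores of $+1$ or more and grows linearly as the score decreases. On this particular grid it differs from MAE only at the score 2.0, beyond the margin, where MAE charges 1 and hinge charges 0.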

3 Machine Learning on Graphs

For an arbitrary graph $G = (V, E)$, one of the key challenges is to encode it from a discrete space into a continuous one so that the standard machine learning pipeline can be used. For a systematic treatment of this and other challenges, we consider the task of Link Prediction.

3.1 Link Prediction

Consider the use case of Facebook: given a huge graph with nodes representing users and edges representing friendships, how do we recommend further "possible friends" to a user? In particular, given a graph $G = (V, E)$, we need to find a set of edges $E_{\text{future}} \subseteq (V \times V) \setminus E$ which we expect to form in the future. We can easily model this as the classification task discussed before, with the positive class corresponding to a "possible friendship". $x(u, v)$ refers to the features for the node pair $(u, v)$ and $y(u, v)$ is the label representing friendship. This reduces to the following objective:

$$\sum_{(u,v) \in S} \max\big(0,\, 1 - y(u, v)\, f(x(u, v))\big) \tag{3}$$
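As a rough illustration of objective (3), the sketch below evaluates it on a four-node toy graph. The graph, the single pair feature (number of common neighbours), the linear scorer with fixed weights, and the choice of unordered pairs for $S$ are all assumptions made for this example, not part of the lecture.

```python
from itertools import combinations

# Toy undirected friendship graph (made up for illustration).
V = ["a", "b", "c", "d"]
E = {frozenset(p) for p in [("a", "b"), ("b", "c"), ("a", "c"), ("c", "d")]}

def neighbors(u):
    """All nodes sharing an edge with u."""
    return {w for e in E if u in e for w in e if w != u}

def x(u, v):
    """Hypothetical pair feature: number of common neighbours of u and v."""
    return len(neighbors(u) & neighbors(v))

def y(u, v):
    """Label: +1 if (u, v) is an edge, -1 otherwise."""
    return 1 if frozenset((u, v)) in E else -1

def f(feature, w=1.0, b=-0.5):
    """Hypothetical scorer: a linear function of the pair feature."""
    return w * feature + b

def link_objective(S):
    """Sum of hinge losses over the node pairs in S, as in (3)."""
    return sum(max(0.0, 1.0 - y(u, v) * f(x(u, v))) for u, v in S)

S = list(combinations(V, 2))  # here: every unordered pair of distinct nodes
print(link_objective(S))
```

With these fixed weights the objective evaluates to 6.0; a training procedure would adjust `w` and `b` to reduce it.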

The primary challenges in dealing with this objective are summarized below:

• One would naively expect $S$ (the support of the summation of losses) to be $V \times V$, the set of all pairs of nodes. Suppose we go with this choice. A strong enough model that fits the data perfectly would learn the exact mapping from node pairs to labels. For all pairs in $(V \times V) \setminus E$, the set from which future edges are supposed to come, the perfect model would predict $-1$. This corresponds to predicting zero future edges, which is not useful. Hence, $S$ needs to be chosen carefully. This challenge is quite specific to graphs; in other settings there is a clear demarcation between the training and test sets.

• We simply added the individual hinge losses over all node pairs. However, this step doesn’t have strong mathematical foundations here. The assumption that allows us to do so in classical machine learning is that the individual $x_i$'s are sampled i.i.d. (independent and identically distributed) from the data distribution.

For a graph, this assumption is naturally violated, as nodes in the same graph have a certain degree of co-occurrence (as a pair or not). This issue can’t be easily fixed in practice. One way to make the representation $x_{uv}$ conditionally i.i.d. with respect to the rest of the graph is to model it as $[z_{uv}; \{z_{uu'}\}; \{z_{vv'}\}]$, where $z_{ij}$ represents raw features for the pair $(i, j)$. In reality, we go with the formulation in (3) because it works well in practice.
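The first challenge can be reproduced in a few lines. The sketch below takes $S$ to be all unordered node pairs of a made-up path graph and uses a lookup table as a stand-in for a "strong enough" model that fits every label perfectly; both are assumptions for illustration.

```python
from itertools import combinations

# Made-up path graph 0 - 1 - 2 - 3.
V = [0, 1, 2, 3]
E = {frozenset(p) for p in [(0, 1), (1, 2), (2, 3)]}
all_pairs = [frozenset(p) for p in combinations(V, 2)]

def label(pair):
    """+1 for existing edges, -1 for every other pair."""
    return 1 if pair in E else -1

def memorizer(pair):
    """Stand-in for a perfectly fitted model: it reproduces each label."""
    return float(label(pair))

# Hinge objective with S = all pairs: the memorizer drives it to zero.
loss = sum(max(0.0, 1.0 - label(p) * memorizer(p)) for p in all_pairs)

# Candidate future edges are the current non-edges scored as positive.
non_edges = [p for p in all_pairs if p not in E]
predicted_future = [p for p in non_edges if memorizer(p) > 0]

print(loss, predicted_future)  # prints: 0.0 []
```

The objective is minimized, yet no future edge is ever recommended, which is why the support $S$ cannot simply be the set of all pairs.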

3.2 Refining the objective’s support

The first challenge above requires serious consideration. In particular, we need to ensure that we are training on the already existing edges with a positive label to learn what kinds of friendships appear in reality.

We first fix some notation:

• $NE := (V \times V) \setminus E$; non-edges in the original graph

• $E_{\text{tr}}$ := training edges; must be in the original set $E$

• $NE_{\text{tr}}$ := non-edges used for training; correspond to the $-1$ label

• $E_{\text{test}}$ := test pairs to be classified as edges; feature as non-edges during training (if at all)

To extract some information from existing friendships, one possible strategy is to artificially erase some edges from $E$. In that case, $E_{\text{tr}} \cup E_{\text{test}} = E$ and $E_{\text{tr}} \cap E_{\text{test}} = \emptyset$. We don’t want to feed incorrect labels to the model, hence $E_{\text{test}}$ is not used directly during training. We allow the flipped edge status (an erased edge appearing as a non-edge) to show up in the features of neighboring edges, if required.
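A minimal sketch of such a split is given below. It holds out a uniformly random fraction of edges as the test set and draws training non-edges uniformly from the true non-edges; uniform sampling, the `test_fraction` and `seed` parameters, and the function name `split_edges` are assumptions for illustration, not the strategies covered in the course.

```python
import random
from itertools import combinations

def split_edges(V, E, test_fraction=0.2, seed=0):
    """Split E into E_tr and E_test and sample training non-edges NE_tr.

    Edges are tuples (u, v) with u < v. Sampling is uniform (an assumption).
    """
    rng = random.Random(seed)
    edges = sorted(E)
    rng.shuffle(edges)
    n_test = max(1, int(len(edges) * test_fraction))
    E_test = set(edges[:n_test])   # erased edges, never labelled -1 in training
    E_tr = set(edges[n_test:])     # remaining edges, labelled +1
    # True non-edges of the original graph; E_test pairs are excluded by design.
    NE = [p for p in combinations(sorted(V), 2) if p not in E]
    NE_tr = set(rng.sample(NE, min(len(E_tr), len(NE))))  # labelled -1
    return E_tr, E_test, NE_tr

V = range(6)
E = {(0, 1), (0, 2), (1, 2), (2, 3), (3, 4),
     (4, 5), (1, 4), (0, 5), (2, 5), (3, 5)}
E_tr, E_test, NE_tr = split_edges(V, E)
print(len(E_tr), len(E_test), len(NE_tr))
```

By construction $E_{\text{tr}}$ and $E_{\text{test}}$ partition $E$, and no erased edge is fed to the model with a $-1$ label.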

Another important task is the sampling of such test edges from $E$. We want the sample to be representative of the entire edge set; otherwise the model won’t generalize well. Strategies to sample train/test (non-)edges effectively are discussed in later lectures.
