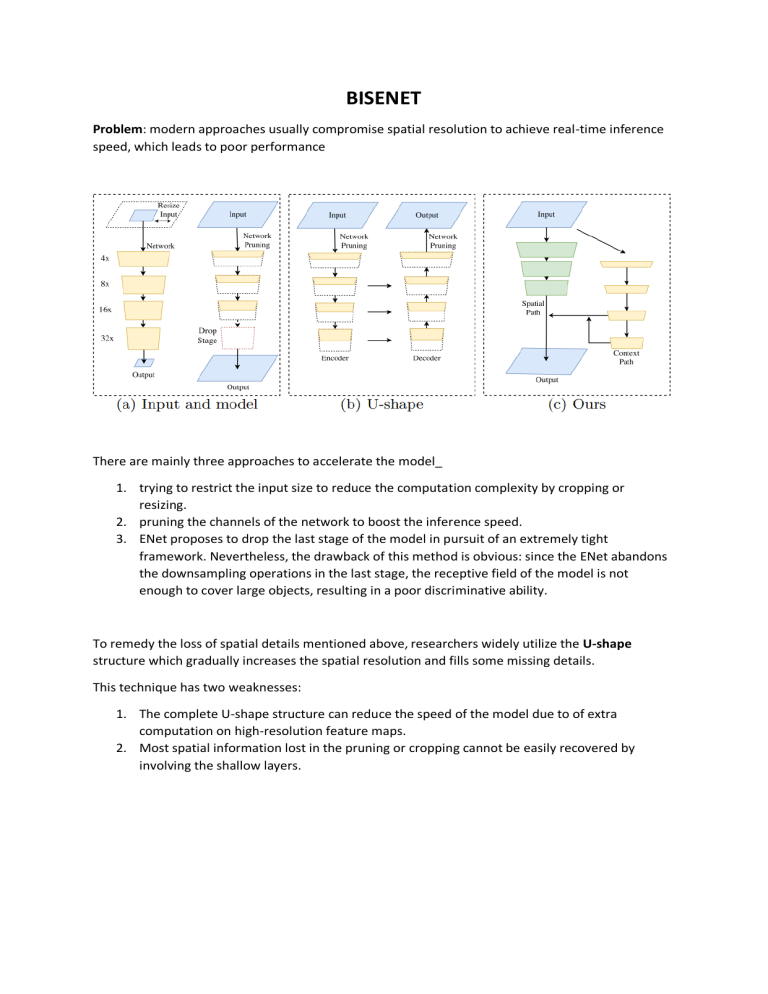

BISENET

Problem: to achieve real-time inference speed, modern approaches usually compromise spatial resolution, which leads to poor accuracy. There are mainly three approaches to accelerating the model:
1. Restricting the input size by cropping or resizing to reduce the computational complexity.
2. Pruning the channels of the network to boost the inference speed.
3. Dropping the last stage of the model in pursuit of an extremely compact framework, as proposed by ENet. The drawback of this method is obvious: since ENet abandons the downsampling operations in the last stage, the receptive field of the model is not large enough to cover large objects, resulting in poor discriminative ability.

To remedy the loss of spatial details described above, researchers widely use the U-shape structure, which gradually increases the spatial resolution and fills in some of the missing details. This technique has two weaknesses:
1. The complete U-shape structure can reduce the speed of the model because of the extra computation on high-resolution feature maps.
2. Most of the spatial information lost through pruning or cropping cannot easily be recovered by involving the shallow layers.

Solution: a novel Bilateral Segmentation Network (BiSeNet). We first design a Spatial Path with a small stride to preserve the spatial information and generate high-resolution features. It stacks only 3 convolution layers, each consisting of a convolution with stride 2 followed by batch normalization and ReLU. Since each layer halves the height and width, this path outputs feature maps at 1/8 of the original image resolution, which retain rich spatial details.

Meanwhile, a Context Path with a fast-downsampling strategy is employed to obtain a sufficient receptive field. The Context Path uses a lightweight model, such as Xception (a model with few parameters), which downsamples the feature map quickly to obtain a large receptive field that encodes high-level semantic context information.
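The following arithmetic check confirms the 1/8 figure for the Spatial Path. It applies the standard convolution output-size formula to a stack of stride-2 layers. It assumes 3×3 kernels with padding 1 (the notes only fix the stride) and an example 1024×2048 input. The five-layer case is a hypothetical stand-in for a Context Path backbone with output stride 32; that figure is not stated in these notes.

```python
from typing import Tuple


def conv_out_size(size: int, kernel: int = 3, stride: int = 2, padding: int = 1) -> int:
    """Output length of one convolution along a spatial axis (floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def path_output_size(height: int, width: int, num_stride2_layers: int) -> Tuple[int, int]:
    """Spatial size after a sequence of stride-2 convolutions (assumed 3x3, padding 1)."""
    for _ in range(num_stride2_layers):
        height, width = conv_out_size(height), conv_out_size(width)
    return height, width


# Spatial Path: three stride-2 conv-BN-ReLU layers -> 1/8 of the input.
spatial_hw = path_output_size(1024, 2048, num_stride2_layers=3)
# Hypothetical Context Path backbone with five stride-2 stages -> 1/32.
context_hw = path_output_size(1024, 2048, num_stride2_layers=5)
print(spatial_hw, context_hw)  # (128, 256) (32, 64)
```

Three stride-2 layers give 2³ = 8× downsampling per side. The Spatial Path therefore keeps 16 times as many spatial positions as a 1/32 feature map would (128·256 versus 32·64).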
In the Context Path, we then add a global average pooling on the tail of the lightweight model, which provides the maximum receptive field with global context information. Finally, we combine the up-sampled output feature of the global pooling with the features of the lightweight model. We deploy a U-shape structure to fuse the features of the last two stages only, which is an incomplete U-shape style.

N.B. The two paths are computed concurrently, which considerably increases efficiency.

On top of the two paths, in pursuit of better accuracy without loss of speed, we introduce:
1. Attention Refinement Module (ARM), which refines features efficiently by computing an attention vector to guide the feature learning.
2. Feature Fusion Module (FFM), which fuses the output feature of the Spatial Path (low level) with the output feature of the Context Path (high level). We first concatenate the output features of the Spatial Path and the Context Path, and then use batch normalization to balance the scales of the features. Next, we pool the concatenated feature into a feature vector and compute a weight vector, as in SENet (which relies on a squeeze-and-excitation mechanism that focuses on the most important channels and reduces the relevance of the less important ones). This weight vector re-weights the features, which amounts to feature selection and combination.

BISENET V2

Problem_1: the high accuracy of semantic segmentation methods depends on their backbone networks. There are two main backbone architectures:
1. Dilation backbone: removes the downsampling operations and upsamples the corresponding filter kernels to maintain a high-resolution feature representation.
2. Encoder-decoder backbone: adds extra top-down and lateral connections to recover the high-resolution feature representation in the decoder part.

The problems of these architectures are:
1. In the dilation backbone, dilated convolution is time-consuming, and removing the downsampling operations brings heavy computational complexity and memory footprint.
2. The numerous connections in the encoder-decoder architecture are less friendly to memory access cost.

However, real-time semantic segmentation applications demand efficient inference speed.

Solution_1:
1. Input restricting: a smaller input resolution results in less computation cost with the same network architecture.
2. Channel pruning: a straightforward acceleration method, especially pruning channels in the early stages to boost inference speed.

Problem_2: these methods sacrifice low-level details and spatial capacity, leading to a dramatic decrease in accuracy.

Solution_2: to achieve high accuracy and high efficiency simultaneously, we design the Bilateral Segmentation backbone network (BiSeNet V2), composed of:
1. A pathway designed to capture the spatial details with wide channels and shallow layers, called the Detail Branch.
   a. How it works: it contains three stages, each layer of which is a convolution layer followed by batch normalization (Ioffe and Szegedy, 2015) and an activation function. The first layer of each stage has stride s = 2, while the other layers in the same stage have the same number of filters and the same output feature map size. Therefore, this branch extracts output feature maps at 1/8 of the original input resolution.
2. A pathway introduced to extract the categorical semantics with narrow channels and deep layers, called the Semantic Branch. The Semantic Branch only requires a large receptive field to capture the semantic context, so it can be made very lightweight with fewer channels and a fast-downsampling strategy: it has a ratio λ (λ < 1) of the channels of the Detail Branch.
   a. We adopt the Stem Block as the first stage of the Semantic Branch, as illustrated in Figure 4.
      It uses two different downsampling manners to shrink the feature representation, and the output features of both branches are then concatenated as the output.
   b. The context embedding block uses global average pooling and a residual connection to embed the global contextual information efficiently.
   c. The Gather-and-Expansion Layer consists of:
      i. a 3×3 convolution to efficiently aggregate feature responses and expand them to a higher-dimensional space;
      ii. a 3×3 depth-wise convolution performed independently over each individual output channel of the expansion layer;
      iii. a 1×1 convolution as the projection layer, which projects the output of the depth-wise convolution into a low-channel-capacity space.
      When stride = 2, we adopt two 3×3 depth-wise convolutions, which further enlarge the receptive field, and one 3×3 separable convolution as the shortcut.
3. Due to the fast-downsampling strategy, the spatial dimensions of the Semantic Branch's output are smaller than those of the Detail Branch, so the output feature map of the Semantic Branch must be upsampled to match the output of the Detail Branch. We adopt a bidirectional aggregation method: specifically, we design a Guided Aggregation Layer to merge both types of features effectively.
   a. Bilateral guided aggregation employs the contextual information of the Semantic Branch to guide the feature response of the Detail Branch. With guidance at different scales, we can capture feature representations at different scales, which inherently encodes multi-scale information.
4. To improve performance without increasing the inference complexity, we present a booster training strategy with a series of auxiliary prediction heads. It enhances the feature representation in the training phase, and the heads can be discarded in the inference phase; therefore, it adds little computational complexity at inference.
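The Stem Block and the context embedding block of BiSeNet V2 can be sketched with NumPy on a single (C, H, W) feature map. This is an illustrative forward pass with random weights, not the authors' implementation. Batch normalization is omitted; at inference it reduces to a per-channel affine transform that can be folded into the preceding convolution. The layer arrangement is an assumption loosely following the paper's figures:

- **Stem Block:** a stride-2 3×3 convolution, then a strided-convolution branch and a max-pooling branch. Their outputs are concatenated and fused by a 3×3 convolution.
- **Context embedding block:** global average pooling and a 1×1 convolution, followed by a broadcast residual addition and a 3×3 convolution.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def conv1x1(x, w):
    """Pointwise convolution. x: (C_in, H, W), w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def conv3x3(x, w, stride=1):
    """3x3 convolution with zero padding 1. x: (C_in, H, W), w: (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    # A stride-2 convolution equals the stride-1 result sampled at every other position.
    return out[:, ::stride, ::stride]


def max_pool3x3(x, stride=2):
    """3x3 max pooling with padding 1 (padded with -inf so padding never wins)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)), constant_values=-np.inf)
    out = np.full(x.shape, -np.inf)
    for dy in range(3):
        for dx in range(3):
            out = np.maximum(out, xp[:, dy:dy + h, dx:dx + wd])
    return out[:, ::stride, ::stride]


def stem_block(img, c, rng):
    """Hypothetical Stem Block: 1/2 downsample, then two parallel 1/2 downsamples -> 1/4."""
    x = relu(conv3x3(img, rng.standard_normal((c, img.shape[0], 3, 3)) * 0.1, stride=2))
    # Branch 1: downsampling by a strided convolution (after a 1x1 channel reduction).
    left = relu(conv1x1(x, rng.standard_normal((c // 2, c)) * 0.1))
    left = relu(conv3x3(left, rng.standard_normal((c, c // 2, 3, 3)) * 0.1, stride=2))
    # Branch 2: downsampling by max pooling.
    right = max_pool3x3(x, stride=2)
    # Concatenate both branches along channels, then fuse back to c channels.
    merged = np.concatenate([left, right], axis=0)
    return relu(conv3x3(merged, rng.standard_normal((c, 2 * c, 3, 3)) * 0.1))


def context_embedding(x, w_1x1, w_3x3):
    """Hypothetical context embedding block: GAP + 1x1 conv, residual add, 3x3 conv."""
    pooled = x.mean(axis=(1, 2), keepdims=True)  # (C, 1, 1) global context
    context = relu(conv1x1(pooled, w_1x1))       # (C, 1, 1)
    return conv3x3(x + context, w_3x3)           # context broadcast-added at every pixel


rng = np.random.default_rng(0)
image = rng.standard_normal((3, 64, 128))
stem_out = stem_block(image, c=16, rng=rng)
print(stem_out.shape)  # (16, 16, 32)
c = stem_out.shape[0]
ce_out = context_embedding(stem_out, rng.standard_normal((c, c)) * 0.1,
                           rng.standard_normal((c, c, 3, 3)) * 0.1)
print(ce_out.shape)  # (16, 16, 32)
```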
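The Gather-and-Expansion Layer can be sketched in the same NumPy style. This is an illustrative forward pass with random weights, not the authors' code. Batch normalization is omitted, and the expansion ratio of 6 is an assumed default. The ReLU placement (after the expansion convolution and after the residual addition) is also an assumption. The stride-2 variant follows the description above: two depth-wise convolutions in the main path, and a separable convolution (depth-wise 3×3 followed by pointwise 1×1) as the shortcut.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def conv1x1(x, w):
    """Pointwise convolution. x: (C_in, H, W), w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def conv3x3(x, w, stride=1):
    """Dense 3x3 convolution, padding 1. w: (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out[:, ::stride, ::stride]


def depthwise3x3(x, w, stride=1):
    """Depth-wise 3x3 convolution, padding 1: one filter per channel. w: (C, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros(x.shape)
    for dy in range(3):
        for dx in range(3):
            out += w[:, dy, dx][:, None, None] * xp[:, dy:dy + h, dx:dx + wd]
    return out[:, ::stride, ::stride]


def ge_layer(x, c_out, stride=1, expansion=6, rng=None):
    """Hypothetical Gather-and-Expansion layer with random weights (BN omitted)."""
    if stride not in (1, 2):
        raise ValueError("stride must be 1 or 2")
    rng = np.random.default_rng(0) if rng is None else rng
    c_in = x.shape[0]
    c_mid = c_in * expansion

    def weights(*shape):
        return rng.standard_normal(shape) * 0.1

    # i. 3x3 conv gathers local responses and expands to c_mid channels.
    y = relu(conv3x3(x, weights(c_mid, c_in, 3, 3)))
    # ii. 3x3 depth-wise conv on each expanded channel (downsamples when stride=2).
    y = depthwise3x3(y, weights(c_mid, 3, 3), stride=stride)
    if stride == 2:
        # Second depth-wise conv further enlarges the receptive field.
        y = depthwise3x3(y, weights(c_mid, 3, 3))
    # iii. 1x1 projection back to a low channel count.
    y = conv1x1(y, weights(c_out, c_mid))

    if stride == 1:
        if c_out != c_in:
            raise ValueError("identity shortcut requires c_out == c_in")
        shortcut = x
    else:
        # 3x3 separable conv shortcut: depth-wise (stride 2), then pointwise 1x1.
        shortcut = conv1x1(depthwise3x3(x, weights(c_in, 3, 3), stride=2), weights(c_out, c_in))
    return relu(y + shortcut)


x = np.random.default_rng(1).standard_normal((16, 32, 64))
print(ge_layer(x, c_out=16).shape)            # (16, 32, 64)
print(ge_layer(x, c_out=32, stride=2).shape)  # (32, 16, 32)
```

The expensive per-pixel work happens in the depth-wise convolution, which costs one 3×3 filter per channel. The dense 3×3 and 1×1 convolutions handle the channel mixing.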
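The bilateral guided aggregation idea can be illustrated with a deliberately simplified NumPy sketch. Semantic features produce a sigmoid gate, and that gate modulates the detail features twice: once at the detail resolution and once at the semantic resolution. The two results are then summed.

The sketch rests on these illustrative assumptions:

- The Semantic Branch output is at 1/32, a factor of 4 below the Detail Branch's 1/8.
- Learned convolutions are replaced by one 1×1 projection per branch.
- Downsampling uses average pooling, and upsampling uses nearest-neighbour repetition.
- One gate is shared across both scales, whereas the layer in the paper applies separate convolutional transforms per scale.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def avg_pool(x, k):
    """Non-overlapping k x k average pooling. x: (C, H, W), H and W divisible by k."""
    c, h, w = x.shape
    return x.reshape(c, h // k, k, w // k, k).mean(axis=(2, 4))


def upsample(x, k):
    """Nearest-neighbour upsampling by an integer factor k."""
    return x.repeat(k, axis=1).repeat(k, axis=2)


def guided_aggregation(detail, semantic, w_detail, w_semantic):
    """Simplified bilateral guided aggregation (hypothetical, illustration only).

    detail:   (C, H, W)       Detail Branch output (assumed 1/8 resolution)
    semantic: (C, H/k, W/k)   Semantic Branch output (assumed 1/32, so k = 4)
    """
    k = detail.shape[1] // semantic.shape[1]
    gate = sigmoid(np.einsum("oc,chw->ohw", w_semantic, semantic))  # semantic guidance
    detail_feat = np.einsum("oc,chw->ohw", w_detail, detail)
    # High-resolution path: the upsampled semantic gate modulates detail responses.
    high = detail_feat * upsample(gate, k)
    # Low-resolution path: detail is downsampled and modulated at the semantic scale.
    low = avg_pool(detail_feat, k) * gate
    # Bring the low-resolution result back up and merge both scales.
    return high + upsample(low, k)


rng = np.random.default_rng(0)
detail = rng.standard_normal((8, 32, 64))   # e.g. 1/8 of a 256x512 input
semantic = rng.standard_normal((8, 8, 16))  # 1/32 of the same input
w_d, w_s = rng.standard_normal((8, 8)), rng.standard_normal((8, 8))
print(guided_aggregation(detail, semantic, w_d, w_s).shape)  # (8, 32, 64)
```

When the gate saturates near 0, the semantic context suppresses the detail response at both scales. When it saturates near 1, detail passes through at full resolution plus its pooled, coarse-scale copy.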
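The booster training strategy amounts to adding auxiliary losses during training only. The sketch below rests on these assumptions:

- Every head (main and auxiliary) outputs per-pixel class logits that are already resized to the label resolution.
- The loss is pixel-wise softmax cross-entropy.
- Every auxiliary term is weighted by a hypothetical coefficient `alpha`.
- The number of classes and of auxiliary heads are arbitrary choices.

At inference only the main head's logits are used, which is why the auxiliary heads can be discarded.

```python
import numpy as np


def pixel_cross_entropy(logits, labels):
    """Mean softmax cross-entropy over pixels. logits: (K, H, W), labels: (H, W) ints."""
    z = logits - logits.max(axis=0, keepdims=True)  # subtract max for numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=0, keepdims=True))
    h, w = labels.shape
    rows, cols = np.arange(h)[:, None], np.arange(w)[None, :]
    # Pick the log-probability of the true class at every pixel.
    return -log_probs[labels, rows, cols].mean()


def booster_loss(main_logits, aux_logits, labels, alpha=1.0):
    """Training loss: main head plus alpha-weighted auxiliary heads (alpha is hypothetical)."""
    loss = pixel_cross_entropy(main_logits, labels)
    for logits in aux_logits:
        loss += alpha * pixel_cross_entropy(logits, labels)
    return loss


def predict(main_logits):
    """Inference uses only the main head; auxiliary heads are discarded."""
    return main_logits.argmax(axis=0)


rng = np.random.default_rng(0)
num_classes, h, w = 19, 16, 32  # arbitrary example sizes
labels = rng.integers(0, num_classes, size=(h, w))
main = rng.standard_normal((num_classes, h, w))
aux = [rng.standard_normal((num_classes, h, w)) for _ in range(4)]  # 4 heads, arbitrary
print(booster_loss(main, aux, labels))
print(predict(main).shape)  # (16, 32)
```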