
Statistical Distributions Tutorial: Inverse Gaussian, Normal, Exponential Family

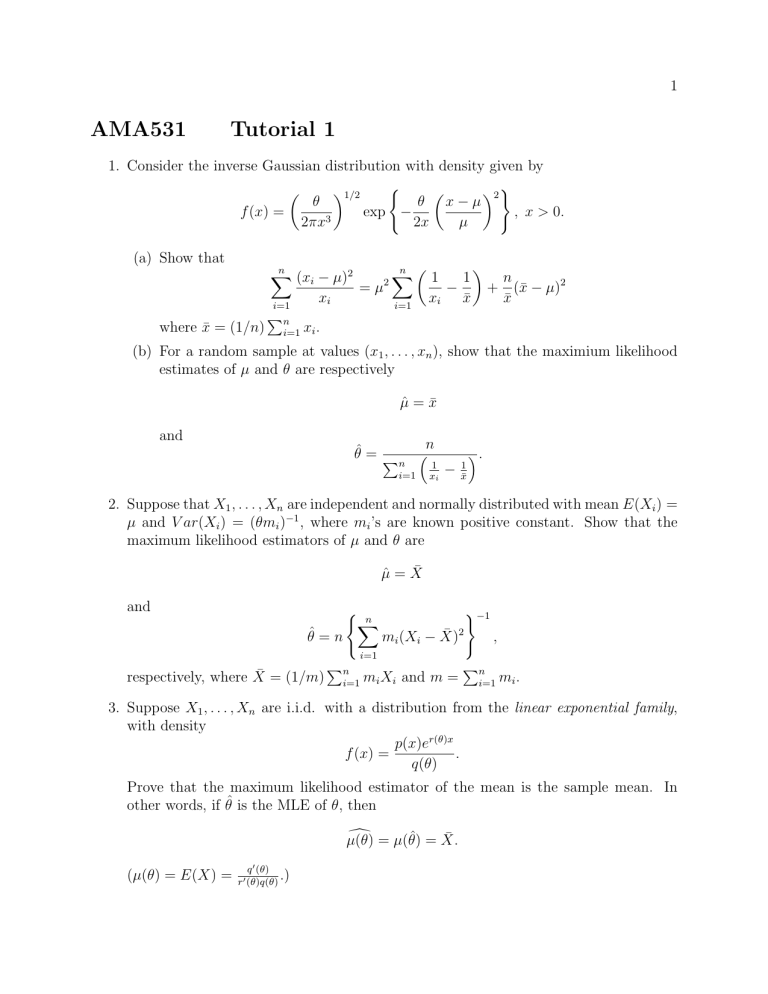

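AMA531 Tutorial 1

1. Consider the inverse Gaussian distribution with density

$$f(x) = \left(\frac{\theta}{2\pi x^3}\right)^{1/2} \exp\left\{-\frac{\theta}{2x}\left(\frac{x-\mu}{\mu}\right)^2\right\}, \qquad x > 0.$$

(a) Show that

$$\sum_{i=1}^{n} \frac{(x_i-\mu)^2}{x_i} = \mu^2 \sum_{i=1}^{n} \left(\frac{1}{x_i} - \frac{1}{\bar{x}}\right) + \frac{n(\bar{x}-\mu)^2}{\bar{x}},$$

where $\bar{x} = (1/n)\sum_{i=1}^{n} x_i$.

(b) For a random sample with values $(x_1, \dots, x_n)$, show that the maximum likelihood estimates of $\mu$ and $\theta$ are, respectively,

$$\hat{\mu} = \bar{x} \qquad \text{and} \qquad \hat{\theta} = \frac{n}{\sum_{i=1}^{n} \left(\dfrac{1}{x_i} - \dfrac{1}{\bar{x}}\right)}.$$

2. Suppose that $X_1, \dots, X_n$ are independent and normally distributed with mean $E(X_i) = \mu$ and variance $\operatorname{Var}(X_i) = (\theta m_i)^{-1}$, where the $m_i$ are known positive constants. Show that the maximum likelihood estimators of $\mu$ and $\theta$ are

$$\hat{\mu} = \bar{X} \qquad \text{and} \qquad \hat{\theta} = n\left\{\sum_{i=1}^{n} m_i (X_i - \bar{X})^2\right\}^{-1},$$

respectively, where $\bar{X} = (1/m)\sum_{i=1}^{n} m_i X_i$ and $m = \sum_{i=1}^{n} m_i$.

3. Suppose $X_1, \dots, X_n$ are i.i.d. with a distribution from the linear exponential family, with density

$$f(x) = \frac{p(x)\, e^{r(\theta) x}}{q(\theta)}.$$

Prove that the maximum likelihood estimator of the mean is the sample mean. In other words, if $\hat{\theta}$ is the MLE of $\theta$, then

$$\widehat{\mu(\theta)} = \mu(\hat{\theta}) = \bar{X},$$

where $\mu(\theta) = E(X) = q'(\theta) / \{r'(\theta)\, q(\theta)\}$.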
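Every result on this sheet is either an algebraic identity or a closed-form maximiser of a log-likelihood, so each can be sanity-checked numerically. The Python sketch below is one way to do that; it is not a proof and is not part of the original tutorial. All function names (`ig_loglik`, `identity_sides`, `ig_mle`, `weighted_normal_loglik`, `weighted_normal_mle`, `is_local_max`, `golden_max`, `expfam_mle_mean`) are hypothetical helpers. Assumptions: for Problem 1 the sample is lognormal rather than inverse Gaussian, since 1(a) and 1(b) hold for any positive sample; for Problem 3 the exponential distribution, written as $p(x) = 1$ for $x > 0$, $r(\theta) = -\theta$, $q(\theta) = 1/\theta$, serves as an illustrative member of the family, and its likelihood is maximised numerically by golden-section search rather than in closed form.

```python
# Hypothetical helpers for numerically checking AMA531 Tutorial 1 (not from the sheet).
import math
import random

def ig_loglik(xs, mu, theta):
    """Inverse Gaussian log-likelihood for the density in Problem 1."""
    return sum(0.5 * math.log(theta / (2 * math.pi * x**3))
               - theta * (x - mu) ** 2 / (2 * mu**2 * x) for x in xs)

def identity_sides(xs, mu):
    """Left- and right-hand sides of the identity in Problem 1(a)."""
    n = len(xs)
    xbar = sum(xs) / n
    lhs = sum((x - mu) ** 2 / x for x in xs)
    rhs = mu**2 * sum(1 / x - 1 / xbar for x in xs) + n * (xbar - mu) ** 2 / xbar
    return lhs, rhs

def ig_mle(xs):
    """Closed-form MLEs from Problem 1(b): (xbar, n / sum(1/x_i - 1/xbar))."""
    n = len(xs)
    xbar = sum(xs) / n
    return xbar, n / sum(1 / x - 1 / xbar for x in xs)

def weighted_normal_loglik(xs, ms, mu, theta):
    """Log-likelihood for X_i ~ N(mu, 1 / (theta * m_i)) from Problem 2."""
    return sum(0.5 * math.log(theta * m / (2 * math.pi)) - 0.5 * theta * m * (x - mu) ** 2
               for x, m in zip(xs, ms))

def weighted_normal_mle(xs, ms):
    """Closed-form MLEs from Problem 2: weighted mean and n / sum(m_i (X_i - Xbar)^2)."""
    xbar = sum(m * x for x, m in zip(xs, ms)) / sum(ms)
    return xbar, len(xs) / sum(m * (x - xbar) ** 2 for x, m in zip(xs, ms))

def is_local_max(f, point, h=1e-4):
    """True if f(*point) is not exceeded when one coordinate is nudged by a relative +/- h."""
    base = f(*point)
    for i in range(len(point)):
        for step in (-h, h):
            p = list(point)
            p[i] += step * max(1.0, abs(p[i]))
            if f(*p) > base + 1e-12:
                return False
    return True

def golden_max(f, lo, hi, tol=1e-10):
    """Maximise a unimodal function f on [lo, hi] by golden-section search."""
    g = (math.sqrt(5) - 1) / 2  # 1/phi, about 0.618
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = f(c), f(d)
    while b - a > tol:
        if fc > fd:  # maximum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = f(c)
        else:  # maximum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = f(d)
    return (a + b) / 2

def expfam_mle_mean(xs, r, q, dr, dq, lo, hi):
    """Maximise r(t)*sum(x) - n*log q(t) (log p terms dropped), return (theta_hat, mu(theta_hat))."""
    n, s = len(xs), sum(xs)
    theta_hat = golden_max(lambda t: r(t) * s - n * math.log(q(t)), lo, hi)
    return theta_hat, dq(theta_hat) / (dr(theta_hat) * q(theta_hat))

random.seed(531)

# Problem 1. Assumption: lognormal data stand in for inverse Gaussian draws,
# which is fine because 1(a) and 1(b) hold for any positive sample.
xs = [random.lognormvariate(0.0, 0.5) for _ in range(50)]
lhs, rhs = identity_sides(xs, mu=1.3)
mu_hat, theta_hat = ig_mle(xs)

# Problem 2: simulate X_i ~ N(2, 1 / (theta * m_i)) with theta = 0.8.
ms = [random.uniform(0.5, 2.0) for _ in range(40)]
xs2 = [random.gauss(2.0, 1 / math.sqrt(0.8 * m)) for m in ms]
mu2, theta2 = weighted_normal_mle(xs2, ms)

# Problem 3. Assumption: exponential distribution as p(x) = 1, r(t) = -t, q(t) = 1/t.
xs3 = [random.expovariate(2.0) for _ in range(50)]
theta3, mu3 = expfam_mle_mean(xs3, r=lambda t: -t, q=lambda t: 1 / t,
                              dr=lambda t: -1.0, dq=lambda t: -1 / t**2,
                              lo=1e-3, hi=100.0)

print("1(a) lhs, rhs:", lhs, rhs)
print("1(b) local max:", is_local_max(lambda m, t: ig_loglik(xs, m, t), (mu_hat, theta_hat)))
print("2    local max:", is_local_max(lambda m, t: weighted_normal_loglik(xs2, ms, m, t), (mu2, theta2)))
print("3    mu(theta_hat), sample mean:", mu3, sum(xs3) / len(xs3))
```

If the closed forms are correct, the two sides in 1(a) should agree to rounding error, neither coordinate nudge should increase the log-likelihood in 1(b) or Problem 2, and $\mu(\hat{\theta})$ should match the sample mean in Problem 3 to about six significant figures. The coordinate-nudge test is only a local check along each axis, which is why it complements rather than replaces the analytic argument.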