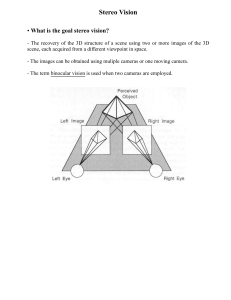

Towards a multimodal stereo rig for ADAS: State of art and algorithms jfbarrera@cvc.uab.cat Barrera Campo Fernando Centre de Visió per Computador Universitat Autònoma de Barcelona (UAB) 08193 Bellaterra (Barcelona). Catalonia, Spain Advisors: felipe.lumbreras@cvc.uab.cat angel.sappa@cvc.uab.cat Lumbreras Felipe Sappa Angel D. Abstract The current work presents a survey on state of the art on multimodal stereo rig for ADAS applications. The proposed survey is organized following a functional modular architecture. The basic requirements for each module have been identified trying to adapt current stereo algorithms to the proposed multimodal framework. All the implemented algorithms have been validated using ground truth data (classical stereo data). Preliminary results on a multimodal stereo rig are presented and main challenges still open are identified. Keywords: Multimodal, stereo vision, infrared imagery, and 3D reconstruction. 1. Introduction In the most recent study published by the World Health Organization (WHO, 2009) about road security, it has been concluded that “More than 1.2 million people die on the world roads every year, and as many as 50 million others are injured”. Mainly, the deaths occur in low-income and middle-income countries. In an attempt to remedy this situation the WHO periodically issues reports on road traffic injury prevention; it is a set of recommendations on how countries could improve road safety and reduce the death toll on their roads. The WHO aims to help emerging and developing economies to beat this situation. However, all these efforts have not been completely effective, and it still has much work to do. According to projections of the WHO for the 2030, the road traffic injuries will be the fifth leading cause of death in the world, around 3.6%, going up from ninth position in 2004 with 2.2%. This means that the number of injured in roads may overcome the amount of sick with either stomach cancer or HIV/AIDS. For this reason, during the last few years the automotive industry and many research centers are trying to develop Advanced Driver Assistance Systems or ADAS that increase road safety. The first step to accomplish this goal is to identify risky factors that influence crash involvement; these were classified by WHO (2004) in 10 categories: speed, pedestrian and c 2009 Fernando Barrera, Felipe Lumbreras, and Angel D. Sappa. cyclist, young drivers and riders, alcohol, medicinal and recreational drugs, drive fatigue, hand-held mobile phones, inadequate visibility, road-related factors, and vehicle-related risk factors, which are generally out of control. The above factors have different repercussions in the road safety, notice that, the statistics vary according to the country, as well as cultural, social, and economic aspects. These situations lead to many solutions and proposals in accordance with the problem to face, though atmospheric conditions like: precipitation, fog, rain, snow, wind, and other. These contribute to poor visibility, and therefore, it is considered a cause of crash involvement. Statistics show that driving at night is riskier in terms of crash involvements per distance traveled than driving during the day. This is due to the more prevalent use of alcohol by drivers at night, the effect of fatigue on the driving task, and the risk associated with the visibility reduction (Keall et al., 2005). In Europe nearly 50% of fatal car accidents take place at the night. Then, the risk of an accident is twice for nocturnal driving in comparison to do it during the day. The drivers could not see other road users, the darkness, raining, or fog prevents it react in time for avoiding the collision, even under high visibility the road traffic incident continues happening. Recent advances in Infra-Red (IR) imaging technology has allowed its use in applications beyond either military or law enforcement domain. Additionally, new IR commercial vision systems have been included in diverse technical and scientific applications. For instance, it is used in airports, coastal, and park safety. This type of sensor offers features that facilitate tasks such as detection of pedestrians, hot spots, differences in temperature, among others. This can significantly improve the performance of those systems where the persons are expected to play the principal role, for example: video surveillance applications, monitoring, and pedestrian detection. Nowadays, the literature about recovery of 3D scene structure suggests many models and techniques, its selection depends on different factors. Gheissari and Bab-Hadiashar (2008) proposed a set of criteria that affect the suitability of a model to the application, for instance: the nature of physical constrains, performance, scale and distribution of noise, complexity, among others. A relation undeniable is the established between the model and the kind of sensor, because it provides the initial information. In this sense, the automotive industry has selected Electro-Optical sensors (EO)1 as preferred ones, mainly, due to its low costs. Between these devices the IR cameras offers best capabilities, thus they will became the de facto standard for imagery at night time. This novel technology in the ADAS domain requires new methods or adaptation of previous ones, supposing a challenge and an opportunity. So a classic dichotomy in 3D reconstruction arises, stereo versus monocular systems. Although, during the last decade many valuable academic researches were published in journals, such as Saxena et al. (2009) that considered the problem of estimating 3D structure from a single image, this was possible joining Markov Random Field (MRF) and supervised learning theories. This enable to monocular systems to drive autonomous vehicles through unstructured in/outdoor environments; it is a complex task that requires a prior knowledge of the environment (Michels et al., 2005). A drawback is the way to estimate the relative 1. Electro-Optical sensors or EO includes night vision, infrared cameras, CCD/CMOS cameras, and proximity sensor. In general, it is a technology involving components, devices and systems, which operate by modification of the optical properties of a material by an electric field. 2 depths, while a well calibrated stereo rig can directly compute it from the pair, a monocular system must detect and track features, and then estimate the depth from their motion. Similar discussion was formulated in (Sappa et al., 2008). In conclusion, each one has its own advantages and disadvantages but today stereo systems offer a balance between accuracy, speed, and performance. At this point, it is possible to state the next question: Could a couple of sensors measuring different bands of the electromagnetic spectrum, as the visible and infrared, form a part of an Advanced Driver Assistance System, and help to reduce the amount of injuries and deaths in the roads? It is not an easy question but, the current work will show an approach toward a system of these characteristics; the main objective is to find advantages drawbacks, pitfalls, and potential opportunities. The fusion of data coming from different sensors, as the emissions registered at visible and infrared band, represents a special problem because it has been showed that the signals are weak correlated. Indeed, mild anti-correlation between visible and Long-Wave Infrared (LWIR) (Scribner et al., 1999). Therefore, many traditional techniques of image processing are not helpful, and require adjustments for correctly performing in each modality. Night vision systems started out as a military technology and its usefulness was always related to defense and national security topics. But, this conception changed due to good results reached in other knowledge area, for instance, ADAS. Nowadays, engineers have to simplify the IR devices for typical ADAS, thus several vehicles includes IR systems, and other Electro Optical sensors (EO) directly from manufactures. Notice that, this capacity has been installed, but it is not exploited beyond of simple thermal visualization of path. In order to address the above question the current work study the state of art in stereo vision compounds of visible and infrared spectrum sensors, and propose a framework to evaluate the best algorithms, towards a multi modal stereo system. This is organized as follows. We introduce the state of art, early researches in multimodal stereo. Next, it is summarized the main theoretical concepts about infrared technology, development, and its applications. As well as the formulation and equations that model a stereo system. Finally, it is presented a multimodal stereo system and its constitutive stages. The developmrnt of a multimodal stereo system is a complex task, for this reason, a traditional stereo system, based on color images, is initially analysed. Next, this framework is extended, giving support to another modality, thermal information. This incremental methodology was followed because the functions and approaches implemented need validation, then the metrics and protocols previously published are reused for measure the error, until a ground truth for multimodal is developed. 2. State of art The three-dimensional scene structure recovery has been an intense area of research for decades, but to our knowledge, there are a few works on depth estimation from infrared and visible spectrum imagery. This section reviews latest advances in multimodal stereo. Also, it is proposed a coarse grained classification of the reviewed multimodal systems. A review of the cited methods allows us to identify two types of data fusion: raw fusion, also referred as early, and high level fusion (late fusion). These concepts are commonly used in other computer vision fields. The raw fusion directly uses the sensing measurements to 3 evaluate a dissimilarity function. On the contrary, in the case of a high level fusion the data are not immediately processed. They are transformed to another representation of higher level of abstraction. Hence, this scheme needs more pre-processing than raw fusion. Several implementations of multimodal stereo systems with raw fusion could be found. Though, they share a common drawback. Until the moment, it has not been proposed a suitable function of dis/similarity that matches IR emission or thermal information with intensity. In order to face this problem several methods bounded the searching space into the images. The most frequent way is to define regions of interest (ROI); a slicing window with fixed size is moved over the region. Then, a similarity function is evaluated and its result stored for future operations. The idea is to measure the quality of the matching over regions, for instance: it is hoped that hot spots in the infrared images correspond to pedestrians. If the segmentation of images is stable on both modalities (infrared and visible spectrum), the pedestrian shapes are detected in it (those seem like equal), and its value of similarity will be maximum over corresponding regions. The last one is a conceptual example, but not always is necessary segmentation, and it could be replaced by a feature detector, as the blob detector, or whatever key point detector. The only restriction is that inputs of stereo algorithm must be raw values. The strategies discussed above increase the matching precision, but also the prior knowledge of scene improves the overall performance. For instance, algorithms that search certain shapes (heads, pedestrians, or vehicles) allow a voting schema, the regions with highest vote count are considered the best matching (Krotosky and Trivedi, 2007a). The multimodal fusion schemes, with a high level of abstraction, delay the data association until is pre-processed. In general, these approaches process each modality, as if they were independent streams; the motivation is simple, it is wished to avoid the registration or correspondence stage on the sensing data, for which it transforms the measurements to a common representation, for example, vectors of optical flow, shape descriptors, or regions. In recent years significant progress has been made in multimodal stereo for ADAS applications, in spite of the difficulty of acquire infrared devices2 . The papers based on two modalities (infrared and visible spectrum) are summarized in Table 1. Tasks such as registration and sensor fusion largely concentrate the researcher interest because they are required in whatever application. Notice that these are the first challenges to solve. The table 1 shows a list of researches in multimodal stereo; the most relevant and recent are discussed below. Mainly, those related to registration because are close to the aim of the current work. Krotosky and Trivedi (2007a) report a multimodal stereo system for pedestrian detection consisting of three different stereo pairs: (i) two color cameras and two IR cameras; (ii) two color and a single IR cameras; and (iii) a couple color and infrared cameras. The stereo rig, among color and infrared cameras are aligned in pitch, roll, and yaw. (i )—A configuration of two color and two infrared cameras is analyzed. In order to get a dense disparity map from both color-stereo and infrared-stereo imageries the correspondence algorithm by Konolige (1997) has been used. This approach is based on area correlation, so a Laplacian Of Gaussian (LOG) filter transforms the gray levels in the image to directed edge intensities over some smoothed region, the degree of similarity between the filtered imagery is measured with the Absolute Difference (AD) function. In 2. Infrered cameras can be bought only after being registered in U.S Department of Commerce, Bureau of Industry and Security. 4 Table 1: Review of multimodal stereo IR/VS rig applications. Applications Description References Registration Geometrically align images of the same scene taken by different sensors (modalities). (Kim et al., 2008),(Morin et al., 2008),(Zheng and Laganiere, 2007),(Istenic et al., 2007),(Zhang and Cui, 2006),(Krotosky and Trivedi, 2006),(Hild and Umeda, 2005),(Segvic, 2005),(Kyoung et al., 2005), and (Li and Zhou, 1995) Detection Detection of human shape into the visible spectrum and infrared imageries. (Krotosky and Trivedi, 2007a) (Han and Bhanu, 2007),(Bertozzi et al., 2005), and (Krotosky and Trivedi, 2007a). Tracking Detection and location of moving people (pedestrian, hot spots, or other body parts) in time using multiple modalities. (Trivedi et al., 2004), (Krotosky et al., 2004), (Krotosky and Trivedi, 2008), and (Krotosky and Trivedi, 2007b). 3D extraction Thermal mapping. (Prakash et al., 2006) order to optimize results the disparity range is quantized into 64 levels, the areas with low texture are detected with an interest operator (Moravec, 1979), and rejected. Finally, the unfeasible matches are detected and suppressed under left-right consistency constraint. So, the above algorithm for disparity computation is applied as much color as infrared pair. The author’s aim is getting a first approximation to the problem because it used a wellknown real time algorithm. (ii)—A multimodal stereo setup is presented; it is composed of a color-stereo rig paired with a single infrared camera. A dense stereo matching algorithm corresponding the color imageries is considered. For this purpose, the above explained method (Konolige, 1997) is used. Next, it is used a trifocal framework to register the color and infrared imageries with the disparity map. (iii)—The last multimodal stereo approach to pedestrian detection is a system with raw fusion in opposition to the ones introduced above. The authors use a previous implementation which is based in Mutual Information (Krotosky and Trivedi, 2007b). They propose to match regions in cross-spectral stereo images. The matching occurs by fixing a correspondence window along one reference image in the pair and sliding the window along the second image. The quality of the match between the two correspondence windows is obtained by measuring the mutual information between them. The weak correlation between the images implies high bad matching rate, then an additional restriction must be included. Assuming that the pedestrian’s shape belongs to at a specific plane. Hence, it is possible to define a voting matrix where a poor match would be widely distributed across a large number of different disparity values, whereas the disparity variation is small for a good matching. 5 Prakash et al. (2006) introduce a method that recover the 3D temperature map from a pair of thermal calibrated cameras. Firstly, the cameras are calibrated with a Matlab toolbox (Bouguet, 2000), hence intrinsic and extrinsic parameters are computed. The next task is to solve the correspondence problem. A surface with isotherms3 lines is assumed in order to match points. The main drawback of this approach is to suppose different temperature gradient on the object surface, otherwise the similarity measure will not be enough distinctive to detect correct matching. The used cost function is sum of squared difference or SAD. 3. Background The study of the nature of light has always been the most important source of progress in physics, as well as for the computer vision. This section introduces the basic concepts to understand infrared sensors, its evolution, physic properties, and its usefulness for ADAS. Later, also it is presented some ways for classifying of stereo systems, components, geometry models derived from camera location, and depth computation. 3.1 Infrared sensors: history, theory and evolution In 1800 the astronomer Sir Frederick William Herschel experimented with a new form of electromagnetic radiation, which was called infrared radiation. He built a crude monochromator, and measured the distribution of energy in sunlight. The Herschel’s experiment additionally showed the existence of a relation between temperature and color. In order to show this, he let that the sunlight passing through a glass prism. Clearly, it was dispersed into its constituent spectrum of colors. Next, an array of thermometers with blackened bulbs was used for measuring various color temperatures. It is hoped that the equipments used by Herschel’s have been improved several times due to new alloys and materials. Now there are two general classes of detectors: photon (or quantum) and thermal. In the first class of detectors the radiation is absorbed within the material by interaction with electrons. The observed electrical output signal results from the changed electronic energy distribution. These electrical property variations are measured to determine the amount of incidents optical power. Depending on the nature of interaction the photon detectors are divided into different types, the most important: intrinsic detector, extrinsic detectors, photoemissive detectors, and quantum. They have high performance but require cryogenic cooling. Therefore, IR systems based on semiconductor photodetectors are heavy, expensive and inconvenient to many applications, specially ADAS. In a thermal detector the incident radiation is absorbed by a material semiconductor, causing a change in the temperature of the material, or other physical property, its resultant change is used to generate an electrical output proportional to incident radiation. In this kind of sensor is necessary that at least one inherent electrical property change with temperature, and it could be measured. A traditional device used for thermal detector of low-cost are bolometers, they turn an incoming photon flux into heat, changing the electrical resistance of the detector element, whereas in a pyroelectric detector, for example, this flux changes the internal spontaneous polarization. Currently, thermal detectors are avail3. Points of equal temperature. 6 able for commercial applications, opposite to the based on photons, which are restricted to military uses. In contrast to photon detectors, the thermal detectors do not require cooling. In spite of it, the photon detectors are popularly believed to be rather speed, and selective wavelength in comparison with another type of detectors, this fact was exploited by the military industry. But, later in the ’90s, advances in micro miniaturization allowed arrays of bolometers or thermal detectors. It compensated the moderate sensitivity and low frame rate of thermal detectors. Large arrays let high quality imagery and good response time, also the manufacture cost to drop quickly (an extensive review in Rogalski (2002)). Infrared by definition refers to that part of the electromagnetic spectrum between the visible and microwave region, and their behavior is modeled for the next equations: υ= c , λ (1) where c is the speed of light, 3 × 1012 (m/sg); υ is the frequency (hz), and λ its wavelength (m). The energy is related to wavelength and frequency by the following equation: E = hυ = hc , λ (2) where, h is Planck constant, equal to 6.6 × 10−34 (joules sg). Notice that, light and electromagnetic waves of any frequency will heat surfaces that absorb them, and the infrared detectors measure the emissivity in this band, but it could occur in other bands, depending on physical properties of the objects (constitutive material). Humans at normal body temperature mainly radiate at wavelengths around 10μm, it corresponds to Long-Wave InfraRed band or LWIR (see the table 2). Table 2: General spectral bands based on atmospheric transmission and sensor technology Spectral Band Spectral Wavelenght (μm) Visible 0.4 - 0.7 Near InfraRed (NIR) 0.78 - 1.0 Short-Wave InfraRed (SWIR) 1-3 Mid-Wave InfraRed (MWIR) 3-5 Long-Wave InfraRed (LWIR) 8 - 12 The use of night vision devices should not be confused with thermal imaging. While, night vision devices convert ambient light photons into electrons, which are then amplified by a chemical and electrical process and then converted back into a visible ray of light. The thermal sensors create images detecting radiation that emits the objects. 3.2 Night vision in ADAS Night vision is a technology that was originated in military applications for producing a clear image on the darkest of night. As it was explained above, they need no light whatsoever to operate, and also have the ability to see through special conditions such as: fog, rain, haze, or smoke. Thus, it would be interesting for ADAS since road users can avoid potentially hazards. 7 Researchers have always been convinced that thermal imaging is an extremely useful technology. Nowadays, it can be found vehicles with IR equipment. This tendency is being followed by different car manufacturer. Then, new technical requirements have been formulated. Today, there are two different technologies on the market: One is called active, using near infrared laser and detectors, and the other passive, which only uses thermal infrared detector (Ahmed et al., 1994). The difference is notorious. Active systems beams infrared radiation into the area in front of the vehicle, for this purpose, usually it involves laser sources or just a light bulb in the near infrared range (NIR). Then, infrared radiation is reflected by objects, the road, humans and other road users. Later, the reflections are captured using a camera sensitive to a same region of the spectrum that was emitted, for example, a NIR camera. Whereas passive systems register relatives differences in heat, or infrared radiation emitted in the far infrared band (FIR), and it does not need a separate light source. The selection of the best night vision system for ADAS is not easy, different factors must be considered. Although, both systems are technically and economically feasible, the passive systems based on FIR offer advantages. It is not dependent on the power of the infrared beams, because those are not necessary. Then, it contains less components so it is less susceptible to breakdowns. FIR detects people and hot spot at a longer range. The major advantage of FIR is that it is not sensitive to the headlight of oncoming traffic, street lights and powerfully reflecting surfaces such as traffic signs. Since NIR systems (or passives) are based on the use of light beams with wavelength close to visible spectrum, two facts can happen. Firstly, the driver can be blinded for light ray reflection or dispel. Or, if an object is illuminated by two or more infrared beams, this could appear brightly on the screen. The worse case is when an infrared source directly illuminates a detector, situation frequent by the glare of oncoming cars (FLIR, 2008) (Schreiner, 1999). The setup of IR systems, in the context of ADAS, is another interesting topic to be mentioned. It includes camera position, display, and applications. They are discussed in more detail in next section: 3.2.1 Camera position The location of the sensor or camera is critical to obtain an acceptable image of the road. If the sensor or camera is positioned low (e.g., in the grill), the perspective of the road will be less than ideal, especially when driving on vertical curves. It is acceptable to position the sensor at the driver eye height, and it is preferable to place it above the driver’s eyes. Another aspect of camera position is that a lower position is more exposed to dirt. Glass interferes with the FIR wavelengths and cannot be placed in front of the sensor. Thus, the FIR sensor cannot be placed behind the windshield. However, early research, as the performed by BMW, concluded that the best position is at the left of the front bumper. This result could be contradictory but a new generation of FIR sensors is being developed, especially for ADAS. Table 3 presents the key points of researches performed by five car manufacturer. Other examples are: Renault NIR-contact analogue, which is placed at the inside rear view mirror, and the Daimler-Chrysler camera (NIR), which is placed high above the driver’s eyes (rear mirror). 8 Manufacturer General Motors and Volvo Fiat and Jaguar Autoliv Table 3: IR systems. Technology and setup FIR camera mounted behind the front grill and cover by a protective window. NIR camera placed just above the head of the driver (rear mirror) and light source is over the bar at the front of the car. FIR camera placed at the lower end of the windshield. Camera specification Raytheon IR-camera. Maximum sensitivity at 35◦ C. Field of view horizontally 11.25◦ and vertically 4◦ . The detection range for a pedestrian is 300 m. Active system NIR. Field of view - horizontally 45◦ . The detection range for a pedestrian is 150 m. Active system NIR. Field of view - horizontally 45◦ . The detection range for a pedestrian is 150 m. 3.2.2 Display and applications Initially, it is explored the feasibility that the systems of night vision use a mirror and projector over the dashboard and lower part of the driver’s windshield, this unit project real-time thermal images, which appears to float above the hood and below the driver’s line of sight. Perhaps, this visualization of the system is good, but to include these devices in the vehicles demand the development of expensive technologies and the users will not pay by them. A more realistic system is currently used in many vehicles; it consists of a liquid crystal display (LCD) embedded in the middle dashboard. The driver check the thermal images and other applications supplied by the vehicle computer. The current commercial applications, based on infrared images, are limited to display a stream of images, which correspond to events registered in real time by sensors. Although, to develop a system in real time is not simple task, the only operation of image processing is contrast enhancement. Recently, new research lines in night vision develop software that can identify pedestrian or critical situations. 3.3 Stereo vision Computational stereo refers to the problem of determining three-dimensional structure of a scene from two or more images taken from distinct viewpoints. It is a well-known technique to obtain depth information by optical triangulation. Other examples are: stereoscopy, active triangulation, depth from focus, and confocal microscopy. Stereo algorithms could be classified according to different criteria. A taxonomy for stereo matching is presented by Scharstein et al. (2001); they propose to categorize them into two groups, which will be explained briefly. Local methods attempt to match a pixel with its corresponding one in the other image. These algorithms find similarities between connected pixels through its neighborhood, surrounding pixels provide the information to 9 identify matches. Local methods are sensitive to noise, and ambiguities, such as: occluded regions, regions with uniform texture, repeated patterns, changes of view point or illumination. Global methods can be less sensitive to the mentioned problems since high-level descriptors provide additional information for ambiguous regions. These methods formulate the problem of matching in mathematical terms, more than local methods, which allows to introduce restrictions that model surfaces or maps of disparity. For instance: smoothness, continuity, among others. Nowadays, it is a still open research topic to find the best conditions, restrictions, or primitives to decrease the percentage of bad matching pixels. Some methods use heuristic rules, or functional to do it. Their main advantage is that scattered maps of disparity can be completed. This is performed by techniques such as: dynamic programming, intrinsic curve, graph cuts, nonlinear diffusion, belief propagation, deform model, and any other optimization or search procedure4 . The existing algorithms also are categorized into different groups, for instance, depending on the number of input images: multiple images or single image. In the first case, the images could be taken either by multiple sensors with different view points or by a single moving camera (or moving the scene, and holding the sensor fixed). Another classification could be obtained according to number of used sensors: monocular, bifocal, trifocal, and multi-ocular. The figure 1 shows a generic binocular system with nonverged geometry5 . The fundamental basis for stereo is the fact that every point in three-dimensional space is projected to a unique location in the images (see figure 1). Therefore, if it is possible to correspond the projections of a scene point in the images (IL and IR ), then it is certain that its spatial location on a world coordinate system O will be recovered. IR IL yR’ Y PL xL PR’ xR’ CR X O yL CL fR Z fL P Figure 1: A stereo camera setup. Assuming that: PL and PR are the projections of the 3D point P on the left and right images, and OL and OR are the optical center of cameras, on which two reference coordinate systems are centered (see figure 2). If also, a pinhole model for the cameras are supposed, and that the image plane arrays are made up of a perfect rectangular grid aligned. Then, the line segment CL CR is parallel to the x coordinate axis of both cameras. Under this 4. It refers to choosing the best element from some set of available alternatives. 5. Camera principal axes are parallel. 10 particular configuration the point P is defined by the intersecting ray from the optical centers OL and OR through their respective images P : PL and PR . P Z CL x x’ PL PR’ CR K OL T OR Figure 2: The geometry of nonvenged stereo. The depth Z is defined by a relationship of similarity between the triangles ΔOL CL PL with ΔP KOL , and ΔOR CR PR with ΔP KOR . CL PL CL OL = OL K KP By similar triangles- ΔOL CL PL with ΔP KOL , = OR K KP By similar triangles- ΔOR CR PR with ΔP KOR , CR PR CR OR ∴ KP = CL OL × Or Z = f OL K + OR K CL PL + CR PR T , d from 3 and 4, with CL OL = CR OR , (3) (4) (5) (6) where d is the disparity or displacement of a projected point in one image with respect to the other; in the nonverged geometry depicted in figure 2 it is the difference between the x coordinates: d = x − x (the last one is valid when the pixels x and x are indexes of a matrix). The baseline is defined as the line segment joining the optical centers OR and OL . 4. Problem formulation As it is mentioned in previous sections large number of accidents take place at night, and the IR sensor could help to improve the safety in those situations as well as during extreme weather conditions. Therefore, the research of new systems that exploit these novel technologies, which are available at market, and could help to road users avoid potential hazards is a open issue. In this way, it is proposed a framework for stereo correspondence that matches images of different modalities: thermal and color imagery. In order to obtain it, a first attempt is matching two images of same modality; color/color, because the 11 functions and methods require debugging. Afterwards, the framework will be extended to infrared images. Different stereo algorithms are briefly reviewed in the previous section, it could be concluded that dense stereo algorithms are the best choice, due to their fast response, easy hardware implementation, and suitability to ADAS. The disparity maps supplied by them are useful in several applications, such as navigation, detection, tracking, and others. In this section, the different modules that define the proposed multimodal framework are introduced. Figure 3 shows a flow chart of the modules, which are described in detail in next sections. • Image acquisition: the cameras were arranged as shown in figure 4, simulating their real position in a vehicle; and a dataset composed of several color and infrared images was generated. • Calibration: the next step is the cameras calibration, which compute the intrinsic and extrinsic parameters. This is an important stage, since the stereo algorithm is based on epipolar constraint. Therefore, the stability of system depends on the estimation of epipolar geometry, since, an error on the estimation, would prevent finding the right correspondence. • Rectification: is a transformation process used to project multiple images onto a common world reference system. It is used by the framework to simplify the problem of finding matching points between images. • Stereo Algorithm: consist of several steps to find the depth from a stereo pair of images; initially, in the visible spectrum, later, a multimodal pair (infrared and color). • Triangulation: the 3D position [X, Y, Z]T of a point P, can be recovered from its perspective projection on the image planes of the cameras, once the relative position and orientation of the two cameras are known and the projections are corresponded. Two approaches were studied to face the problem of sweep image. Sweep image is the strategy used to slice the correspondence window; this can be done in two ways: scan over epipolar lines or horizontally after rectification. In most camera configurations, finding correspondences requires a search in two dimensions. However, if the two cameras are aligned to have a common image plane, referred in the literature as rectification, the search is simplified to one dimension. The rectification stage builds a virtual system of aligned cameras, independently whether their base line are parallel to the horizontal axis of the images or not. Notice that, working with rectified images is computationally efficient because the slider window is moved in one direction (row direction). Unfortunately, ours stereo rig has the cameras far away (wide baseline), and the rectification stage, by effect of distance, changes the pixel values, in consequence the matching is more complex. We will show the cases where each ones is valid. The core of the framework is the stereo algorithm, which is composed of four stages: Matching cost computation, cost aggregation, disparity computation/optimization, and disparity refinement. The goal of these steps is to produce a univalued function in disparity space d(x, y) that best describes the shape of the surfaces in the scene. This can be viewed 12 as finding a surface embedded in the disparity space image that has some optimal property, such as lowest cost. Finally, the triangulation is the process that determines the location of a point an Euclidean space, knows their projections, intrinsic, and extrinsic camera parameters. Plane sweep IR Horizontal Calibration Rectification VS Epipolar Matching hi cost computation Cost (support) aggregation Disparity computation / optimization Disparity refinement Stereo Algorithm Triangulation Figure 3: Multimodal framework. 5. Images acquisition The image acquisition system is shown in figure 4. It consist of four cameras. A pair of cameras corresponding to a on the shelf stereo vision system (Bumblebee from Point Gray). Also, a conventional black and white (b/w) camera (VS1), and a IR camera (IR1)(Photon 160 of FLIR). ( 0 , y0, z0 ) RIR + tIR (x BIR VS1 VS2 VS3 BB (x0 , y0, z0 ) Bumblebee RBB andd TBB Figure 4: Camera setup. Bumblebee camera is used as a validation tool, providing the ground truth of the scene. It is related to multimodal stereo by a rotation RBB and a translation TBB . RBB and TBB are unknown parameters during the acquisition time and they need to be computed. The 13 main multimodal system is conformed of the b/w and infrared camera (VS1 and IR1). This is not the unique configuration, permuting the cameras is possible to have other multimodal stereo systems, for instance: VS2 with IR1 or VS3 with IR1. The main specifications of cameras used in current work are summarized in table 4. Table 4: Camera specifications. Specifications IR1 VS1 VS2 Image sensor type Thermal CCD CCD Resolution 164×129 752×480 640×480 Focal length 18 mm 6 mm Pixel size 51 μm 7.4 μm Image properties 14 bits 8 bits RGB VS3 CCD 640×480 6 mm 7.4 μm RGB 5.1 Experimental Results Next sections present in details both, the image acquisition system and the recorded data sets. 5.1.1 Image acquisition system and hardware The acquisition system was assembled with the cameras detailed in the table 4, simulating their real position on a vehicle. It was followed a schema like the one shown in fig. 4, its hardware implementation is depicted in figure 5(a). Since each camera delivers its own video stream and they were recorded in different PC. A mark videos synchronization was used. Furthermore, note that cameras record at different frame rate. The VS1 camera has a frame rate almost two times faster than the infrared camera (IR1). The mark was made with a blade and a pair of screws on the border; this helix was attached to a drill like a fan. Then, the 4 streams are synchronized using the position of the blade. In the infrared images, the screws are hot spots and shine points in color, due to the thermal differences and reflected light (see figure 5(b)). 5.1.2 Image dataset The image acquisition system presented above has been used for recording 4 sequences (2 indoor and 2 outdoor). These video sequences were used for validation the different algorithms developed thought the current work. Furthermore, the dataset (Scharstein and Szeliski, 2003), which consists of high-resolution stereo sequences with a complex geometry and a pixel-accurate ground-truth disparity data was concidered. In particular, the dataset called Teddy was used for validating the disparity maps generated by implementing different stereo algorithm (section 8). A couple the images of example in figures 6 and 8. The Bumblebee is a device speciality designed for computational modelling of scenes in 3-D from two images (fig. 7(a) and 7(b)). In the figure 4, this is represented by the VS2 and VS3 cameras, which are mechanically coupled. The Bumblebee is offered together with a framework coded in visual C++, allowing its modification for general proposes. Initially, the model 3-D of the scene (fig. 7(c) and 7(d)) was planned to be uses as ground 14 (a) Stereo rig. (b) Stream synchronizer. Figure 5: Image acquisition system and hardware. (b) IR camera. (a) VS1 camera. Figure 6: Multimodal dataset. truth for validating the result of the proposed algorithm. However, it is a better option to measure the error at a previous stage , and to avoid the additive effect of noise. Therefore, the validation is performed after the triangulation, over the disparity map, which is an intermediary representation. Nevertheless, the model generated by our algorithm is shown only for visual inspection. 6. Calibration Camera calibration is a necessary step in 3D computer vision in order to extract metric information from 2D images. Many works have been published by photogrammetry and computer vision communities, but none formally about calibration of multimodal systems. The multimodal stereo rig in the current work is built with an IR and color camera. Infrared calibration is tackled by two different procedures. Firstly, a thermal calibration must be performed, it means that sensed temperatures are equal to real, several approaches could be found, but generally the camera provider supply the software and calibration targets. Mainly, thermal calibration is useful in applications where temperature is a process variable, for example, thermal analysis of material, food, heat transfer, simulation, among others. The other one is camera calibration in the sense of computer vision. It is stated as follow: given one or more images estimate the intrinsic parameters, extrinsic parameters, or both. 15 (a) VS2 camera. (b) VS3 camera. (d) Corridor 3-D model. (c) Corridor 3-D model. Figure 7: Ground truth (Bumblebee). (a) Image 2. (b) Image 6. (c) Disparity Image 2. (d) Disparity image 6. Figure 8: Ground truth (Teddy). It is interesting to mention that previous researches avoid the camera calibration problem (intrinsic/extrinsic) by applying constrains on the scene. For instance, assuming cameras perfectly aligned or strategical camera set-up that cover the scene (Krotosky and Trivedi, 2007b), (Zheng and Laganiere, 2007), among other. These restrictions on the camera configuration are not considered in the current work because they are not valid in a real 16 environment (on board vision system, see 3.2.1). So, in this work it will not consider these restrictions or presumptions. Multimodal image calibration is summarized below. Firstly, Bouguet (2000) calibration software has been used, this toolbox assumes input images from each modality where a calibration board is visible in the scene. In typical visual setup, this is simply a matter of placing a checkerboard pattern in front of the camera. However, due to the large differences in visual and thermal imagery, some extra care needs to be taken to ensure the calibration board looks similar in each modality. A simple solution is to use a standard board and illuminate the scene with intensity halogen bulbs placed behind the cameras. This effectively warms the checkerboard pattern, making the visually dark checkers appear brighter in the thermal imagery. Placing the board under constant illumination reduces the blurring associated with thermal diffusion and keeps the checkerboard edge sharp, allowing to use whichever calibration toolbox, freely distributed on Internet. The previous procedure is well-known by the computer vision community. It uses available calibration tools and good results have been reported with them, but our experience showed that it is necessary some variations to reach optimal results, especially when the application depends on calibration results, such as in 3-d reconstruction. Furthermore, the IR cameras need a special attention, because their intrinsic parameters are slightly different to conventional cameras. For example, IR cameras have a small focal lengths, low resolution, and reduced field of view (FOV). 6.1 Experimental Results In order to compute the intrinsic and extrinsic camera parameter of the proposed multimodal stereo rig, the next procedures was followed. 6.1.1 Intrinsic parameters The procedure presented below is the standard method for calibrating a camera; this must be performed 3 times. The aim is to get as accurate as possible intrinsic parameters by extracting images of the initial sequence or video (see procedure 1). Procedure 1 Visible spectrum calibration. 1. Build a subset SV S1, V S2, V S3 of images for each sequence, where the calibration pattern is clearly defined. 2. Follow the standard calibration procedure (Bouguet, 2000). 3. Save the intrinsic camera parameter. For the calibration of IR cameras few changes were introduces to calibration toolbox. Firstly, the graphic interface is changed for points selection, now this shows two images: the edges detected and the original infrared image. The user could change the color map of infrared to enhance its contrast, thus easy identify the board from the background (see fig. 9(a)). 17 The temperature variation of objects that do not emit radiation is weak. Therefore, the magnitude of the gradient is small and edge detection is complicated. For this reason, a system where the users manually select the edges is develop. Also, it offers the possibility of undo and clear until edges are correctly marked. The user selects two points and the calibration tool join these points with a line. When the 4 edges are marked (see fig. 9(a)), the toolbox computes the intersection between the lines and the obtained points intersecting are used for calibration. Initially, the nails that join the calibration pattern to board (see fig. 9(c)) was used, however this approach does not work properly, since nail detection was not stable in sequence. finally, the calibration is performed with 4 points and using the procedure 2. Procedure 2 Infrared spectrum calibration. 1. Build a subset SIR1 of images where the edges of calibration board are differentiable of background. 2. Manually select 2 points for each edge, in total 8 points that describe 4 lines, the extended functions compute the intersection points and use them for the calibration (see fig 9(b)). 3. Follow the standard calibration procedure (Bouguet, 2000). 4. Save the intrinsic camera parameter. 6.1.2 Extrinsic parameters The procedure 3 to compute the extrinsic parameters of a multimodal stereo rig is similar to calibration of a general stereo rig. The Bouguet calibration toolbox have functions for calibrating a stereo system, which have been used. These functions return two results for the rotation (R) and translation (T ) values, (i) with optimization and (ii) without optimization. The best values for R and T were obtained without optimization and using the intrinsic parameters computed in the procedure 1 and 2. Procedure 3 Infrared and Visible spectrum calibration. 1. Load into the calibration toolbox the intrinsic parameter previously computed from procedures 1 and 2. 2. Build a subset Sm such that SmV S1 ⊆ SV S1 and SmIR1 ⊆ SIR1 given SmV S1 = SmIR1 . 3. Apply the calibration procedure 2; step 2, on the each subset (SmV S1 and SmIR1 ), using the extension of toolbox. 4. Compute the Rotation and Translation, combining the intrinsic parameters loaded in the step 1 and the points obtained in the previous step. 18 (a) IR points. (b) IR edges selection. (c) Initial approach. Figure 9: Infrared spectrum calibration. The table 5 and 6 show the intrinsic and extrinsic parameters of multimodal stereo rig shown in the figs. 4 and . Table 5: Camera calibration - Intrinsic parameters. Specifications Focal length Principal point Skew coefficient Distortion coefficients IR1 244.23 [81.50, 64.00] 0 [-0.40, 7.96, 0.09, -0.01, 0 ] VS1 954.94 [375.50, 239.50] 0 [-0.33 -0.02 0 0 0] VS2 836.80 [306.63, 240] 0 [-0.36 0.21 0 0 0] VS3 835.88 [322, 225.80] 0 [-0.34 0.20 0 0 0] 7. Rectification Given a pair of stereo images, the rectification procedure determines a transformation of each image plane such that pairs of conjugate epipolar lines become collinear and parallel to one of the image axes (usually the horizontal one). The rectified images could be interpreted as a new stereo rig, obtained by rotating the original cameras. It is assumed that the stereo rig is calibrated. In other words, the cameras intrinsic parameters of each camera and the extrinsic parameters of the acquisition system (R and 19 T ) are known. The rectification basically converts a general stereo configuration to a simple stereo configuration. The main advantage of rectification is to make simpler the computation of stereo correspondence, since the search is done along the horizontal lines into rectified images. Also, frequently dense stereo correspondence algorithms assume a simple stereo configuration as shown in the figure 2. In this section, an extension to the Fusiello et al. (2000) method, is proposed and implemented, to tackle the multimodal stereo system. 7.1 Camera model The camera model at section 3.3 describes the geometry of two views. Now, a projective camera model is assumed for infrared and spectrum visible cameras, see figure 10. So, a projective camera is modelled by its optical center C and its retinal plane or image plane (), at Z = f . A 3D point X is projected into an image point x given by the intersection of with the line containing C and X. The line containing C and orthogonal to is called the optical axis and its intersection with is the principal point p. The distance between C and p is the focal length (Hartley and Zisserman, 2004). Y X C X Y X x Z p Figure 10: Camera model. Let X = [X Y Z]T be the coordinates of X in the world reference frame and x = [x y]T the projection of X in the image plane (pixels). If the world and image points are represented by homogeneous vectors, then central projection is simply expressed as a linear mapping between their homogeneous coordinates. Let X̃ = [x y z 1]T and x̃ = [x y 1]T be the homogeneous coordinates of X and x respectively, then: x̃ = P X̃. (7) Table 6: Camera calibration - Extrinsic parameters. Parameter Rotation Translation Multimodal V S1 → IR1 V S1 ← IR1 ⎤ ⎡ ⎤ 0.996 −0.007 −0.080 0.997 0.016 0.065 ⎣ 0.018 0.990 0.133 ⎦ ⎣ −0.007 0.991 −0.129 ⎦ 0.078 −0.134 0.987 −0.066 0.128 0.989 ⎡ ⎤ ⎡ ⎤ 0.115 −0.122 ⎣ 0.005 ⎦ ⎣ 0.006 ⎦ −0.103 0.089 ⎡ 20 Bumblebee V S2 → V S3 V S2 ← V S3 ⎤ ⎡ ⎤ 0.999 0.004 0.000 0.999 −0.004 0.00 ⎣ −0.004 0.999 −0.006 ⎦ ⎣ 0.004 0.999 0.006 ⎦ −0.000 0.006 0.999 0.000 −0.006 0.999 ⎡ ⎤ ⎡ ⎤ −0.119 0.119 ⎣ 0.000 ⎦ ⎣ −0.000 ⎦ 0.002 −0.002 ⎡ The camera is therefore modeled by its perspective projection matrix P , which can be decomposed, using the QR factorization, into the product: P = K[R | t]. (8) The matrix K or camera calibration matrix depends on the intrinsic parameters only, and has the following form: ⎤ αx γ x0 K = ⎣ 0 αy x0 ⎦ , 0 0 1 ⎡ (9) where: αx = f mx and αy = f my represent the focal length of the camera in terms of pixel dimensions in the x and y direction respectively; f is the focal length; mx and my are the effective number of pixels per millimetre along the x and y axes; (x0 , y0 ) are the coordinates of the principal point; finally γ is the skew factor. In general, points in space will be expressed in terms of a different Euclidean coordinate frame, known as the world coordinate frame. Two coordinate frames are related via a rotation and a translation. If X is an inhomogeneous 3-vector representing the coordinates of a point in the world coordinate frame, and Xcam represents the same point in the camera coordinate frame, then it may write Xcam = R(X − C̃), where C̃ represents the coordinates of the camera centre in the world coordinate frame, and R is a 3 × 3 rotation matrix representing the orientation of the camera coordinate frame. This equation may be written in homogeneous coordinates as: Xcam = R −RC̃ 0 1 ⎛ ⎞ X ⎜ Y ⎟ ⎜ ⎟ = R −RC̃ X̃. ⎝ Z ⎠ 0 1 1 (10) Putting (10) together with (8) and (7) leads to the following equation: x = KR[I| − C̃]X, (11) where x is now in a world coordinate frame. It is often convenient not to make the camera centre explicit, and instead to represent the world to image transformation as Xcam = RX̃ + t. In this case the camera matrix is simply x = K[R|t]X, where t = −RC̃. (12) 7.2 Rectification of cameras matrices It is assumed that the stereo rig is calibrated, and the perspectives projection matrix P1 and P2 are known. The rectification process defines two new projective matrices P1 and P2 obtained by rotating the old ones around their optical centers until focal planes becomes coplanar, thereby containing the baseline. This ensures that epipoles are at infinity; hence, epipolar lines are parallel. To have horizontal epipolar lines, the baseline must be parallel to 21 the new X axis of both cameras. In addition, to have a proper rectification, corresponding points must have the same vertical coordinate. The calibration procedure explained in section 6 returns the following values: K1 , R1 , t1 , K2 , R2 , t2 to each cameras. Notice that, according to where the world reference is placed, either t1 or t2 is equal to [0 0 0]T ; the same happen for rotation results, one of them is equal to the identity matrix. In general, the equation (8) can be written as follow, since a general projective camera may be decomposed into blocks. ⎡ ⎤ ⎡ T ⎤ q11 q12 q13 q14 q1 q14 (13) Pn = ⎣ q21 q22 q23 q24 ⎦ = ⎣ q2T q24 ⎦ = [M m |pm 4 ], q31 q32 q33 q34 q3T q34 where M m is a 3 × 3 matrix and pm 4 a column vector of camera m. The coordinates of optical centres C1,2 are given by: c1 = −R1 K1−1 p14 , (14) c2 = (15) −R2 K2−1 p24 . In order to rectified the images is necessary to compute the new principal axes X n , Y n , and Z n . A special constrain is applied for computing X n , since theses axes must be parallel to the baseline, and to have horizontal epipolar lines, then: x̂n = (c2 − c1 ) . c2 − c1 (16) The new Y n axis is orthogonal to X n and old Z: ŷ n = (ẑ × x̂n ) , ẑ × x̂n (17) where old Z axis is third vector (r3T ) of rotation matrix R1 (camera 1): ⎡ T ⎤ r1 ⎣ R1 = r2T ⎦ . rT 3 (18) The new Z n axis is orthogonal to X n and Y n : ẑ n = (x̂n × ŷ n ) . x̂n × ŷ n (19) The previous procedure shows the steps for computing the new axes, but none image has been rectified. In order to do this image rectification, the new projective matrices P1n and P2n should be expressed in terms of their factorization. P1n = K1 [R∗ | − R∗ c1 ] = [M n1 |pn1 4 ], P2n ∗ ∗ = K1 [R | − R c1 ] = [M 22 n2 |pn2 4 ]. (20) (21) From eqs. (13), (20) and (21). It is needed to compute the transformation that mapping the image plane of P1 = [M 1 |p14 ] onto the image plane P1n = [M n1 |pn1 4 ]. In this point the problem can be seen in different ways: (i) it follows the original formulation (Fusiello et al., T T 1 1 P1n and P2 −→ P2n 2000), or (ii) Compute a linear transformation T1 such that P1 −→ are true (out approach). This transformation corresponds to the matrix R∗ , because the optical center of cameras C1 and C2 are not translated. Then, R∗ is bases, which spans the points of rectified images, and the vectors X n , Y n , and Z n their basis. They are linearly independent and can be written as the linear combination. ⎡ ⎤ x̂n R∗ = ⎣ ŷ n ⎦ , ẑ n (22) Finally, the image transformations T1 and T2 are expressed as follow: −1 T1 = M n1 (M 1 ) T2 = M n2 1 −1 (M ) (23) (24) The transformations are applied to the original images in order rectify them (see figure 11). It is not common that the value of rectified pixel, after the transformation, corresponds to its initial values. Therefore, the gray levels of the rectified image are computed by a bilinear interpolation. 7.3 Experimental Results The previous image rectification formulation has been coded in Matlab. Figure 11 shows some results. In the figures 11(a) and 11(b) a couple of examples, which were taken by the bumblebee (VS2 and VS3). It can be seen that these images are almost aligned, because the bumblebee cameras are calibrated and its principal rays parallel. Notice that, this can be appreciated by looking and comparing intrinsic and extrinsic camera parameters VS2 and VS3 (see tables 5 and 6). For example, the table 6 describes a base line horizontal, cameras aligned, while the row, yaw and pitch component indicates small rotations (V S2 → V S3 or V S2 ← V S3). In the figures 11(c) and 11(d), thin black bands surrounding the images, are visible. These bands are due to rotation, and mainly the displacement needed to align the camera planes until their final position. The figures 11(e) and 11(f) correspond to multimodal acquisition system (VS1 and IR1). As it was mentioned, their baseline (BIR ) is wide and the rotation marked. Hence, the band in the figures 11(g), and 11(h) is bigger than in the previous case. Notice that the size of the IR image is a quarter of the color one and for visualization purposes the bands were removed. So, a point from the rectified IR image could be found in the corresponding rectified one (VS1), only searching at a horizontal line that includes the initial point. 23 (a) Left bumblebee image. (b) Right bumblebee image. (c) Rectified left bumblebee image. (d) Rectified right bumblebee image. (e) IR image. (f) VS1 image. (g) Rectified IR image. (h) Rectified VS1 image. Figure 11: Rectification results. 8. Stereo algorithm In this section, the main stages of a stereo algorithm will be discussed. The Block matching approach has been followed for image correspondence. It is explained in detail below. The 24 implemented stereo algorithm was split up into: matching cost computation, aggregation, disparity computation, and disparity refinement, using as criteria the taxonomy presented by Scharstein et al. (2001). In order to evaluate the performance of each studied and implemented function images from Middlebury stereo dataset have been used, then images from our dataset have been tested. In general, the block matching methods seek to estimate disparity at a point in one image by comparing a small region about that point (the template), with a series of small regions extracted from the other image (the search region), This process is inefficient due to redundant computations; for example, it has been reported that a naive implementation of a block matching algorithm for an image of N pixels, a template size of n pixels, and a disparity search range of D pixels, has a complexity of O(NDn) operations. If some optimizations are included, the complexity is reduced to O(ND) operations, making it independent of the template size. These properties enable to block matching algorithms its hardware implementation, and they are attractive in real-time applications such as ADAS. 8.1 Matching cost computation For each pixel into the reference image and its putative matches into the second image, the degree of equality, trough a dissimilarity function is evaluated, these values are called matching cost, and they establishes a relation: f : Iref erence(i, j) → I2 (x, y) |x, y∈ search space of i, j , where it is weighted the cost that a pixel (i, j) in the reference image corresponds to other into the second image. If it is known the risk of matching pixels, then the correspondence problem could be formulated as an optimization problem (see fig. 12). The matching cost is computed by sliding a window of n × n (where n = 2k + 1 and k ∈ Z ∧ k > 1). The window center is slid on all the image, left to right and top to down. By each change at their position, a second window of size n × n is slid over the second image; in horizontal direction (rectified images), or epipolar direction when the images are not rectified. A dissimilarity function, as shown in the table 7, is used in order to detect potential matching. In general whatever relation could be used as dissimilarity function, whereas it has a minimum; formally: if f (x∗ ) f (x) when |x∗ − x| < ε then the point x∗ is the best match because the blocks are similar. The most common pixel-based matching costs for real time applications include squared intensity differences (SSD ) and absolute intensity differences (SAD ). Other traditional matching costs functions include normalized cross-correlation (NCC ), Pearson’s correlation, and znSSD. The statistical correlations are standard methods for matching two windows around a pixel of interest. Notice two facts, the normalization of a window, like performed by znSSD, compensates differences in gain and bias, improving the matching score, and the NCC function is statistically the optimal method for compensating Gaussian noise. The search for possible matches over whole epipolar line, or row, is inefficient and time consuming. Then, it is taken a set the pixel (collinear) that covers a small region, this action bounded the search space on an interval [dmin , dmax ]. These limits depend on scene and base line, For example, high values of dmax for indoors applications, it could be incorrect, because it is hopped that the objects are placed close. On the contrary, this could be correct in outdoor applications. 25 Table 7: Block-Matching functions Match metric Definition Absolute Differences (AD) CAD(x,y,d) = I1 (i, j) − I2 (i + d, j) (i,j)∈N (x,y) Sum of Absolute Differences (SAD) CSAD(x,y,d) = |I1 (i, j) − I2 (i + d, j)| (i,j)∈N (x,y) Sum of Squared Differences (SSD) CSSD(x,y,d) = (I1 (i, j) − I2 (i + d, j))2 (i,j)∈N (x,y) Zero normalized SSD (znSSD) CznSSD(x,y,d) = 1 (2n + 1)2 (i,j)∈N (x,y) Normalized Cross-Correlation (NCC) I1 (i, j) − I1 (N (x, y)) I2 (i, j) − I2 (N (x, y)) − σ1 σ2 (I1 (i, j) − I1 (N (x, y))) · (I2 (i + d, j) − I2 (N (x, y)) (i,j)∈N (x,y) CN CC(x,y,d) = (I1 (i, j) − I1 (N (x, y)))2 · (I2 (i + d, j) − I2 (N (x, y))2 (i,j)∈N (x,y) Pearson’s correlation (PC) CP C(x,y,d) = (i,j)∈N (x,y) (i,j)∈N (x,y) ⎛ 1 ⎝ I1 (x, y)I2 (x, y) − m∗n ⎛ 1 ⎝ I1 (x, y)2 − m∗n ⎞2 I1 (x, y)⎠ (i,j)∈N (x,y) I1 (x, y) (i,j)∈N (x,y) (i,j)∈N (x,y) ⎞ I2 (x, y)⎠ (i,j)∈N (x,y) ⎛ 1 ⎝ I2 (x, y)2 − m∗n ⎞2 I2 (x, y)⎠ (i,j)∈N (x,y) The set of cost values over all pixels and possible disparities shape the initial disparity space C0 (x, y, d), a example in figure 12(d). 8.2 Aggregation In the previous stage the cost of corresponding every pixel in reference image with another pixel in a search space in the second image. Then, a pixel has associated several cost values. Then, the aggregation step reduces this multidimensional space to only two dimensions (or matrix), simplifying the storage of cost matching registers. A review of the published literature on aggregation shows that it could be done, as a 2D or 3D convolution C(x, y, d) = w(x, y, d) ∗ C0 (x, y, d). In the case of rectangular windows: using box-filters (Scharstein et al., 2001); shiftable windows implemented using a separable sliding min-filter (Kimura et al., 1999); truncating the results of dissimilarity function (Yoon and Kweon, 2006). Different approaches were studied. Especially, the reported as the best: shiftable windows, summing the cost values, or take the maximum or minimum. Previously, It is shown that these approaches improve the result in a color stereo pair, but testing them in our framework, it does not obtains significant improvements, for this reason, these approaches finally are not followed. The results showed below was computed from the C0 (x, y, d) (without aggregation). Although, it required more time and disk space because all costs associated to a pixel into the reference image are kept. 26 (a) Left image (Iref erence (i = 333, j = 320)). (b) Right image (c) Matching cost of Iref erence (i = 333, j = 320) against I2 (i = 333, j = x) (d) Cost surface Iref erence (i = 333, j = x1 ) against I2 (i = 333, j = x2 ) Figure 12: Cost computation. 8.3 Disparity computation It remembers that at the previous subsection the matching cost of pixels in the reference image was computed. A dissimilarity function was used to evaluate the equality of a point and its surrounding. This operation is time consuming due to two facts: The testbed or framework was designed for an academic purpose, hence high performance and low latency were not the main aim. Secondly, it is needed debug and specially to understand the effect of dissimilarity function on the systems. For these reasons, every outcome of cost computation stage was storage. The disparity computation is fashioned as an optimization problem, where the cost C0 (x, y, d) is the variable to optimize, the disparity of each pixel is selected by the WTA (Winner-Takes-All) method without any global reasoning. The correct match of a pixel 27 is determined by position or coordinate where the dissimilarity function gets its minimum value (see figure 12(c)). The cost function not always has a global minimum, as the depicted in figure 12(c). This corresponds to an edge point, which is highly discriminative. The main problem in stereo is matching of points with a low salience. A textureless region produces a matching cost with a similar values for all pixels (see fig. 13(a)), then the cost function will be like a flat line. On the contrary, a textured region has a several of minimums (see fig. 13(b)). In the current work 3 rules are considered for to face these problems: • Matching cost threshold: if the minimum cost value is under a given threshold Tmin the matching is accepted. For instance figure 13(c) shows a valid minimum (or correspondence). • Matching cost average: in this rule, the area under the curve is computed, if this value is below of a threshold; then, the minimum is rejected and the point is marked as unmatched (see fig. 13(a)). • Matching cost interpolation: a variation of the sampling-insensitive calculation of Birchfield and Tomasi (1998) for sub-pixel interpolation was implemented, which is presented below. When a point in the world is imaged by two cameras, the intensity values of the corresponding pixels are in general different. Different factors contribute to this; the light reflected is not the same; the two cameras have different parameter; the intensities of the pixels are quantized, and noisy. Then, it is necessary to model this behaviour, Birchfield and Tomasi (1998) propose a measure of pixel dissimilarity that compares two pixels using the linearly interpolated intensity functions surrounding them. In this current work a similar approach has been explored, instead of operating over the intensity values, three values of matching cost are extracted: the minimum and two neighbours (left and right), see figure 13(d). These points Cm−1 , Cm , and Cm+1 are used to fit a quadratic function. The quadratic regression process return the coefficients a, b, and c of function fr (x) = ax2 + bx + c that fit the initial points with the minimum error. Next, it is minimized the function fr (x) over the domain [Cm−1 , Cm+1 ]. fr (x) is minimum where the cost function reach a inflexion point, indicating the position, coordinate, or index of conjugate point (match). Other models of regression different to ordinary least-squares regression could be used, but the computational cost becomes an important problem. Models based on splines, no lineal regression, among other generally fits better the tendency of data, however this operation must be performed for each pixel into the reference image, given that the size of image is n rows by m columns, the previous procedure is executed n × m times. 8.4 Disparity refinement computation A complete algorithm of stereo vision would have to include some procedure that refines the disparity map, early advances in computer vision elegantly express this problem in the language of Markov Random Fields (MRFs), resulting energy minimization problems. Recently, algorithms such as graph cuts and loopy belief propagation (LBP) have proven to 28 [Cost] [Cost] [Cost C ] Threshold Threshold [disparity] [disparity] (a) Deviation of cost curve. (b) Deviation of cost curve. [Cost] [Cost] In Interpolation Threshold Thre Minimum [disparity] Cm-1 Cm (c) Valid minimum Cm+1 [disparity] (d) Interpolation. Figure 13: Special cost matching conditions. be very powerful (Szeliski et al., 2008). For the current work only a left to right consistency checking and uniqueness validation are used to eliminate bad correspondences. Left-Right Consistency (LRC) check is performed to get rid of the half-occluded pixels. It is better to reject uncertain matches that to accept bad disparities. The LRC procedure is simple and effective for reducing the rate of bad matching. It consists of executing the previous steps by exchanging the reference image; thus two different disparity map are computed (dRight to lef t and dLef t to right ). Next, both maps are checked, if |dRight to lef t (x, y)− dLef t to right (x, y)| dmax varition , then the pair is rejected (Experimentally dmax varition = 3pixel). The points IR (x, y) and IL (x, y) with large variation in the right and left images are marked as does not match. Notice that at this point the correspondences are known, therefore this operation is valid. 8.5 Experimental Results This section, describes the quality metrics for evaluating the performance of stereo correspondence algorithms. Two measures proposed by Scharstein et al. (2001) have been used for evaluating the accuracy of the computed disparity maps. In order to evaluate the performance of current framework, the most relevant parameters will be varied. In this way, is quantitatively measured the proposed solution. In the figure 14 the different masks, which segment the ground truth (see in the the figure 8 the disparity map or ground truth), over each region are used to measured the error, using the equations (25) and (26). The regions considered are: occluded, no occluded, textured, textureless, near discontinuities, and all. As the table 8 shows. It compute the following two quality measures: 1. RMS (root-mean-squared) error (measured in disparity units) between the computed disparity map dC (x, y) and the ground truth map dT (x, y) , 29 (a) Occluded and discontinued regions and edges. (b) Occluded and textured regions (c) Occluded regions. Figure 14: Segmented regions. ⎞1 2 1 2 |dC (x, y) − dT (x, y)| ⎠ , R=⎝ N ⎛ (25) (x,y) where N is the total number of pixels. 2. Percentage of bad matching pixels, B= 1 (|dC (x, y) − dT (x, y)|) > δd ), N (26) (x,y) where δd is a disparity error tolerance. For these experiments δd = 1.0. Notices that the methodology followed for measuring the error or quality of proposed approach, is a common way to evaluate this kind of algorithms and ranking them. The regions cited in the table 8 are computed through simple binary operations between the reference image, figures 14(a), 14(b), and 14(c) with their ground truth (fig. 8(c)). The regions are defined as: textureless regions T where the squared horizontal intensity gradient averaged over a square window of a given size is below a given threshold; occluded regions O that are occluded in the matching image, and depth discontinuity regions D, edges dilated by a window of width 9 × 9. The table 9 shows the results of implemented dissimilarities functions: AD, SAD, SSD, znSSD, NCC, NMSDSSD, and pearson correlation. The window size was varied, late, measured their performance over each interest region. This result was obtained from the images shown in figures 8(a) and 8(b). The best results obtains for each dissimilarity function are shown in figure 15. 9. Discussion and concluding remarks As a general conclusion it could be stated that night vision represents a novel research field where the classical image processing techniques cannot be directly applied since the nature of the signal is different. The initially formulated question: “Could a couple of sensors measuring different bands of the electromagnetic spectrum, as the visible and infrared, form 30 Table 8: Quality metrics Name Symbol Description rms error all R RMS disparity error rms error nonocc RO RMS no occlucions rms error occ RO RMS at occlusions rms error textured RT RMS textured rms error textureless RT RMS textureless rms error discont RD RMS near discontinuities bad pixel all B Bad pixel percentage bad pixel nonocc BO Bad no occlucions bad pixel occ BO Bad at occlusions bad pixel textured BT Bad textured bad pixel textureless BT Bad textureless bad pixel discont BD Bad near discontinuities Figure 15: Dissimilarity function comparison. a part of an Advanced Driver Assistance System, and help to reduce the amount of injuries and deaths in the roads? ”can be affirmatively answered. Although the problem is really complex the research shows that it is feasible to develop a system that help the driver based on visible and infrared cameras. In other words, in the ADAS context is possible to register in a unique representation information from different sources: light reflected by an object together with its corresponding thermal emission. Several topics have been identified as future work. By sure that these topics will be the motivation of the research during the next years. The most important ones are summarized below: 31 Function Window size rms error all rms error nonocc rms error occ rms error textured rms error textredless rms error discont bad pixel all bad pixel nonocc bad pixel occ bad pixel textured bad pixel textredless bad pixel discont 3x3 23.86 22.73 30.64 22.40 24.95 21.27 97.21 97.06 98.23 96.90 98.16 96.11 9x9 24.31 23.06 31.75 23.01 23.37 22.32 97.29 97.18 98.07 97.27 96.53 96.96 AD 15x15 24.87 23.49 32.95 23.58 22.82 23.07 97.42 97.28 98.39 97.46 96.00 97.87 21x21 25.47 23.97 34.15 24.16 22.54 23.93 97.77 97.63 98.73 97.81 96.28 98.26 3x3 12.01 8.42 25.63 8.26 9.46 8.29 41.69 33.67 97.82 30.29 57.88 39.21 SAD 9x9 15x15 10.94 11.01 6.36 6.32 25.96 26.27 6.05 5.95 8.30 8.50 6.22 6.56 30.74 31.57 21.26 22.24 97.13 96.91 19.08 20.04 36.84 37.95 37.78 43.64 21x21 11.17 6.52 26.47 6.19 8.48 7.13 33.10 23.96 97.03 21.65 40.50 47.14 3x3 11.99 8.36 25.70 8.22 9.35 8.36 40.49 32.28 97.91 29.00 55.77 38.56 21x21 11.26 6.55 26.73 6.26 8.34 7.76 35.44 26.58 97.37 24.42 42.09 52.23 3x3 14.15 11.01 27.44 10.79 12.49 11.02 43.68 35.95 97.77 32.99 57.21 42.82 znSSD 9x9 15x15 10.94 11.20 5.54 5.70 27.25 27.84 5.43 5.52 6.26 6.85 8.01 8.23 26.38 29.97 16.24 20.34 97.31 97.30 15.59 19.21 20.86 28.48 43.32 49.49 21x21 11.55 6.18 28.26 5.91 7.86 8.57 33.64 24.52 97.45 22.76 37.12 51.85 Table 9: Quality results. SSD 9x9 15x15 11.03 11.11 6.49 6.42 26.06 26.43 6.22 6.12 8.17 8.26 6.86 7.29 31.11 32.92 21.66 23.73 97.27 97.20 19.72 21.78 35.52 37.72 42.80 49.23 3x3 16.52 14.29 27.47 13.67 18.14 13.54 54.80 48.61 98.06 45.25 72.73 53.48 NCC 9x9 15x15 11.00 10.84 6.21 5.81 26.42 26.52 5.90 5.53 8.07 7.54 8.49 8.15 26.54 29.59 16.47 20.03 97.07 96.50 15.70 18.88 21.97 28.25 42.80 48.82 21x21 11.09 6.26 26.63 5.96 8.12 8.47 33.26 24.16 96.94 22.35 37.16 51.26 3x3 15.57 13.06 27.29 12.42 16.99 12.42 49.07 42.11 97.75 38.76 66.14 47.95 NMSDSSD 9x9 15x15 10.97 10.91 5.91 5.66 26.81 26.97 5.61 5.35 7.71 7.55 8.03 7.82 26.15 29.40 16.01 19.78 97.06 96.68 15.32 18.63 21.01 28.02 41.75 48.06 21x21 11.20 6.11 27.24 5.77 8.12 8.06 33.12 23.99 97.00 22.16 37.09 50.73 3x3 16.52 14.28 27.47 13.66 18.12 13.54 54.80 48.62 98.06 45.26 72.73 53.50 Pearson 9x9 15x15 11.00 10.84 6.21 5.81 26.42 26.52 5.90 5.53 8.07 7.54 8.49 8.15 26.54 29.59 16.47 20.03 97.07 96.50 15.70 18.88 21.97 28.25 42.80 48.82 21x21 11.09 6.26 26.63 5.96 8.12 8.47 33.26 24.16 96.94 22.35 37.16 51.26 32 1. During this research the row fusion methodology has been fully explored; thus, according to the obtained results it is expected that a better performance could be reached if a high level fusion where used. This fusion should include high level data representations from both modalities; as examples it could be mentioned: segmented images, optical flow registration; mutual information; 2. Study the possibility to exploit the camera hardware in order to have a proper video synchronization (the IR video sequence has been recorded at 9 fps, while the VS at 20 fps). This asynchrony represents an additional source of error since moving objects in the scene are not captured at the same instant. 3. The use of 3D data, computed from the Bumblebee camera, will be considered in order to validate the obtained results. Furthermore, it should be mentioned that an additional validation process, that measure the performance of each individual stage, is needed. 4. A particular problem of the proposed multimodal system is related to the difference between camera resolution. The the IR camera has a lower resolution than the VS one, hence current triangulation algorithms should be extended to consider this challenge. In this work, it is performed a experimental study that compares different dissimilarity function, algorithms and approach, in order to build a multimodal system. Also, it is identified common problem areas for stereo algorithms in different modalities, special in the ADAS. A important contribution is the isolation achieved, between the different stage that compose a multimodal stereo system. Our framework summarizes the architecture of a generic stereo algorithm, at different levels: computational, functional, and structural, which is successful because in short term this can be extended to a complete dense multimodal testbed, that until the moment some stages support two modalities. References S.A. Ahmed, T.M. Hussain, and T.N. Saadawi. Active and passive infrared sensors for vehicular traffic control. In IEEE 44th Vehicular Technology Conference, pages 1393– 1397 vol.2, Jun 1994. M. Bertozzi, E. Binelli, A. Broggi, and M.D. Rose. Stereo vision-based approaches for pedestrian detection. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pages 15–16, June 2005. S. Birchfield and C. Tomasi. A pixel dissimilarity measure that is insensitive to image sampling. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20(4):401– 406, Apr 1998. J. Bouguet. Matlab camera calibration toolbox. 2000. FLIR. Bmw incorporates thermal imaging cameras in its cars lowering the risk of nocturnal driving. Technical report, FLIR Commercial Vision Systems B.V., 2008. 33 A. Fusiello, E. Trucco, and A. Verri. A compact algorithm for rectification of stereo pairs. Machine Vision and Applications, 12(1):16–22, 2000. N. Gheissari and A. Bab-Hadiashar. A comparative study of model selection criteria for computer vision applications. Image and Vision Computing, 26(12):1636 – 1649, 2008. J. Han and B. Bhanu. Fusion of color and infrared video for moving human detection. Pattern Recognition, 40(6):1771 – 1784, 2007. R. Hartley and A. Zisserman. Multiple View Geometry in Computer Vision. Cambridge University Press, ISBN: 0521540518, second edition, 2004. M. Hild and G. Umeda. Image registration in stereo-based multi-modal imaging systems. In The 4th International Symposium on Image and Signal Processing and Analysis, pages 70–75, Sept. 2005. R. Istenic, D. Heric, S. Ribaric, and D. Zazula. Thermal and visual image registration in hough parameter space. In Systems, Signals and Image Processing, pages 106–109, June 2007. M.D. Keall, W.J. Frith, and T.L. Patterson. The contribution of alcohol to night time crash risk and other risks of night driving. Accident Analysis & Prevention, 37(5):816 – 824, 2005. ISSN 0001-4575. Y.S. Kim, J.H. Lee, and J.B. Ra. Multi-sensor image registration based on intensity and edge orientation information. Pattern Recognition, 41(11):3356 – 3365, 2008. S. Kimura, K. Nakano, Sinbo T., H. Yamaguchi, and E. Kawamura. A convolverbased real-time stereo machine (sazan). IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 1:1457, 1999. ISSN 1063-6919. doi: http://doi.ieeecomputersociety.org/10.1109/CVPR.1999.786978. K. Konolige. Small vision systems: Hardware and implementation. Eighth International Symposium Robotics Research, pages 111–116, 1997. S.J. Krotosky and M.M. Trivedi. On color-, infrared-, and multimodal-stereo approaches to pedestriandetection. IEEE Transactions on Intelligent Transportation Systems, 8(4): 619–629, Dec. 2007a. ISSN 1524-9050. S.J. Krotosky and M.M. Trivedi. Person surveillance using visual and infrared imagery. IEEE Transactions on Circuits and Systems for Video Technology, 18(8):1096–1105, Aug. 2008. S.J. Krotosky and M.M. Trivedi. Registration of multimodal stereo images using disparity voting from correspondence windows. In IEEE International Conference on Video and Signal Based Surveillance, pages 91–97, Nov. 2006. S.J. Krotosky and M.M. Trivedi. Mutual information based registration of multimodal stereo videos for person tracking. Computer Vision and Image Understanding, 106(2-3): 270 – 287, 2007b. Special issue on Advances in Vision Algorithms and Systems beyondthe Visible Spectrum. 34 S.J. Krotosky, S.Y. Cheng, and M.M. Trivedi. Face detection and head tracking using stereo and thermal infrared cameras for “smart ”airbags: A comparative analysis. In The 7th International IEEE Conference on Intelligent Transportation Systems, pages 17–22, Oct. 2004. S.K. Kyoung, J.H. Lee, and J.B. Ra. Robust multi-sensor image registration by enhancing statistical correlation. In 8th International Conference on Information Fusion, volume 1, pages 7 pp.–, July 2005. H. Li and Y. Zhou. Automatic eo/ir sensor image registration. In International Conference on Image Processing, volume 3, pages 240–243 vol.3, Oct 1995. J. Michels, A. Saxena, and A.Y. Ng. High speed obstacle avoidance using monocular vision and reinforcement learning. In Proceedings of the 22nd international conference on Machine learning, pages 593–600, New York, NY, USA, 2005. ACM. ISBN 1-59593-180-5. H. K. Moravec. Visual mapping by a robot rover. In Proceedings of the 6th International Joint Conference on Artificial Intelligence, pages 598–600, Tokyo, 1979. F. Morin, A. Torabi, and G.A. Bilodeau. Automatic registration of color and infrared videos using trajectories obtained from a multiple object tracking algorithm. In Canadian Conference on Computer and Robot Vision, pages 311–318, May 2008. S. Prakash, Lee P.Y., and T. Caelli. 3d mapping of surface temperature using thermal stereo. In 9th International Conference on Control, Automation, Robotics and Vision, pages 1–4, Dec. 2006. A. Rogalski. Infrared detectors: An overview. Infrared Physics & Technology, 43(3-5):187 – 210, 2002. A.D. Sappa, F. Dornaika, D. Ponsa, D. Geronimo, and A. Lopez. An efficient approach to onboard stereo vision system pose estimation. IEEE Transactions on Intelligent Transportation Systems, 9(3):476–490, Sept. 2008. A. Saxena, M. Sun, and A.Y. Ng. Make3d: Learning 3d scene structure from a single still image. IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(5):824–840, 2009. ISSN 0162-8828. D. Scharstein and R. Szeliski. High-accuracy stereo depth maps using structured light. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 1:195–202, June 2003. D. Scharstein, R. Szeliski, and R. Zabih. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. IEEE Workshop on Stereo and Multi-Baseline Vision, pages 131–140, 2001. K. Schreiner. Night vision: Infrared takes to the road. IEEE Computer Graphics and Applications, 19(5):6–10, 1999. 35 D. Scribner, P. Warren, and J. Schuler. Extending color vision methods to bands beyond the visible. In IEEE Workshop on Computer Vision Beyond the Visible Spectrum: Methods and Applications, pages 33–40, 1999. doi: 10.1109/CVBVS.1999.781092. S. Segvic. A multimodal image registration technique for structured polygonal scenes. In Proceedings of the 4th International Symposium on Image and Signal Processing and Analysis, pages 500–505, Sept. 2005. R. Szeliski, R. Zabih, D. Scharstein, O. Veksler, V. Kolmogorov, A. Agarwala, Tappen M.F., and Rother C. A comparative study of energy minimization methods for markov random fields with smoothness-based priors. IEEE Transactions on Pattern Analysis and Machine Intelligence, 30(6):1068–1080, 2008. M.M. Trivedi, Y. C. Shinko, E.M.C. Childers, and S.J. Krotosky. Occupant posture analysis with stereo and thermal infrared video: Algorithms and experimental evaluation. IEEE Transactions on Vehicular Technology, 53(6):1698–1712, Nov. 2004. ISSN 0018-9545. doi: 10.1109/TVT.2004.835526. World Health Organization WHO. World report on road traffic injury prevention. Technical report, Department of Violence & Injury Prevention & Disability (VIP), Geneva, 2004. World Health Organization WHO. Global status report on road safety. Technical report, Department of Violence & Injury Prevention & Disability (VIP), 20 Avenue Appia, 2009. K.J. Yoon and I.S. Kweon. Adaptive support-weight approach for correspondence search. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28(4):650–656, April 2006. ISSN 0162-8828. doi: 10.1109/TPAMI.2006.70. Z.X. Zhang and P.Y. Cui. Information fusion based on optical flow field and feature extraction for solving registration problems. In International Conference on Machine Learning and Cybernetics, pages 4002–4007, Aug. 2006. L. Zheng and R. Laganiere. Registration of ir and eo video sequences based on frame difference. Canadian Conference on Computer and Robot Vision, pages 459–464, 2007. 36