
Publisher: Taylor & Francis


Communications in Statistics - Simulation and Computation

Publication details, including instructions for authors and subscription information: http://www.tandfonline.com/loi/lssp20

A New Algorithm in Bayesian Model Averaging in Regression Models

Tsai-Hung Fan (a), Guo-Tzau Wang (b) & Jenn-Hwa Yu (c)

(a) Institute of Statistics, National Central University, Jhongli, Taiwan

(b) National Center for High-performance Computing, Hsinchu, Taiwan

(c) Department of Mathematics, National Central University, Jhongli, Taiwan

Published online: 17 Sep 2013.

To cite this article: Tsai-Hung Fan , Guo-Tzau Wang & Jenn-Hwa Yu (2014) A New Algorithm in

Bayesian Model Averaging in Regression Models, Communications in Statistics - Simulation and

Computation, 43:2, 315-328, DOI: 10.1080/03610918.2012.700750

To link to this article: http://dx.doi.org/10.1080/03610918.2012.700750


Communications in Statistics - Simulation and Computation®, 43: 315–328, 2014

Copyright © Taylor & Francis Group, LLC

ISSN: 0361-0918 print / 1532-4141 online

DOI: 10.1080/03610918.2012.700750

A New Algorithm in Bayesian Model Averaging in Regression Models

TSAI-HUNG FAN,1 GUO-TZAU WANG,2 AND JENN-HWA YU3

1 Institute of Statistics, National Central University, Jhongli, Taiwan

2 National Center for High-performance Computing, Hsinchu, Taiwan

3 Department of Mathematics, National Central University, Jhongli, Taiwan

We propose a new iterative algorithm, called the model walking algorithm, to modify the widely used Occam’s window method in the Bayesian model averaging procedure. It is verified, by simulation, that in regression models the proposed algorithm is much more efficient in terms of both computing time and the selected candidate models. Moreover, it is not sensitive to the initial models.

Keywords Bayesian model averaging; Occam’s window; Regression models.

Mathematics Subject Classification Primary 62F15; 62J05; 65C60; Secondary 65C05.

1. Introduction

One major concern in regression analysis is to determine the “best” subset of predictor variables. Here, “best” means the model providing the most accurate predictions for new cases. Traditionally, one is more interested in choosing a single “best” model based on some model selection criterion or procedure, such as Mallows’ Cp, forward selection, backward selection, or stepwise selection (cf. Montgomery et al. (2006)), and making predictions as if the selected model were the true model. However, in many situations, there may not exist a “best” model, or there may be several appropriate models. Using only one model ignores model uncertainty and may lead to inaccurate predictions. (See Draper (1995), Kass and Raftery (1995), Madigan and York (1995), and the references therein.) Intuitively, it is therefore reasonable to consider the average result over all possible combinations of predictors. The idea of model combination was first raised by Bernard (1963). Madigan and Raftery (1994) first proposed a Bayesian approach that takes model uncertainty into account in graphical models using Occam’s window. However, when the number of predictors is large, the number of all possible models becomes huge, and yet many of them contribute little to explaining the response variable. Alternatively, Raftery et al. (1997) consider the Bayesian model averaging method in which the average is taken over a reduced set of models according to their posterior probabilities. They apply

the “Occam’s window” criterion in regression models to exclude models with too small posterior probabilities to increase the computational efficiency. We refer to their approach as “Occam’s window”.

Received October 28, 2011; Accepted June 4, 2012

Address correspondence to Prof. Tsai-Hung Fan, Ph.D., Institute of Statistics, National Central University, Jhongli, Taiwan; E-mail: thfan@stat.ncu.edu.tw

The “Occam’s window” algorithm consists of a “down-algorithm” and an “up-algorithm” that search for appropriate models. The up- and down-algorithms depend essentially on the size of the “window”. With a larger window, more models are selected and the search takes longer; the result is more conservative in the sense that it usually includes unimportant models. A small window, on the other hand, speeds up the search but is very likely to exclude influential models. Because of the computational burden, both algorithms are performed only once, which usually means that important models are missed while unnecessary models are retained. Moreover, the “Occam’s window” algorithm starts from a specified initial subset of possible models, which has some effect on either the final result or the cost of computation. In this article, we modify the up- and down-algorithms in “Occam’s window” by an iterative searching procedure that uses relative probabilities among neighborhood models to determine the searching direction. It is verified, by simulation, that in the usual regression models, the proposed algorithm, called the model walking algorithm hereafter, is much more efficient in terms of computing time as well as the selected candidate models. Moreover, it is not sensitive to the initial models.

In the next section, we briefly outline the Occam’s window algorithm of Raftery et al.

(1997) and the proposed model walking algorithm is introduced in Section 3. Then, in

Section 4, we compare both algorithms via simulation based on regression models. Finally,

Section 5 closes with conclusions.

2. Bayesian Model Averaging in Linear Regression

In this section, we will give a brief review of the Bayesian model averaging method proposed

by Raftery et al. (1997). The original algorithm is applied to the regression models. Let

X1 , . . . , Xp be all the possible predictive variables in a multiple regression model. Typically,

one important and practical concern is to select variables that are of significant effect on the

response into the model. Therefore, each predictor could be or could not be included in the

model. Let $M_1, \dots, M_K$ denote all the $K = 2^p$ possible models and suppose that the set of the predictive variables in model $M_k$ is $X^{(k)} = \{X_1^{(k)}, \dots, X_{p_k}^{(k)}\} \subseteq \{X_1, \dots, X_p\}$. Then, the regression model in $M_k$ is written as

$$Y = \beta_{k0} + \sum_{j=1}^{p_k} \beta_{kj} X_j^{(k)} + \epsilon_k,$$

where $Y$ is the response variable, $\beta_k = (\beta_{k0}, \beta_{k1}, \dots, \beta_{kp_k})^t$ is the regression coefficient vector under $M_k$, and $\epsilon_k$ is the random error. Let $\theta_k$ be the set of all unknown parameters in $M_k$ and $f(y|\theta_k, M_k)$ be the associated sampling distribution of $Y$ given $X^{(k)}$. (We assume the predictors are all given throughout the article.) If $\pi(\theta_k|M_k)$ denotes the prior density of $\theta_k$ under $M_k$, then the posterior density of $\theta_k$ given $Y = y$ is

$$\pi(\theta_k|y, M_k) = \frac{f(y|\theta_k, M_k)\,\pi(\theta_k|M_k)}{f(y|M_k)},$$

where

$$f(y|M_k) = \int f(y|\theta_k, M_k)\,\pi(\theta_k|M_k)\,d\theta_k$$

is the marginal density of the response $Y$ in model $M_k$, which is the true distribution of $Y$ if model $M_k$ is the true model. Let $P(M_k)$ be the prior probability that model $M_k$ is the true model; then, given $Y = y$ and all predictors,

$$P(M_k|y) = \frac{f(y|M_k)\,P(M_k)}{\sum_{l=1}^{K} f(y|M_l)\,P(M_l)}$$

is the posterior probability of $M_k$. The higher this probability, the more plausible $M_k$ is. Denote the quantity of interest, such as future observation(s), by $\Delta$, and let $f(\Delta|M_k, y, \theta_k)$ be the conditional density of $\Delta$ given $y$ and $\theta_k$ under $M_k$. Then, the predictive density of $\Delta$ in $M_k$ is

$$f(\Delta|y, M_k) = \int f(\Delta|y, \theta_k, M_k)\,\pi(\theta_k|y, M_k)\,d\theta_k.$$

Under $M_k$, the Bayesian predictive analysis of $\Delta$ can then be derived from this distribution. Thus, one can get the overall marginal predictive density, by integrating the results from all possible models, as

$$f(\Delta|y) = \sum_{k=1}^{K} f(\Delta|y, M_k)\,P(M_k|y). \tag{1}$$

Equation (1) is the weighted average of all the predictive densities with respect to the model posterior probabilities.

Note that computing (1) over all possible models is time consuming. Madigan and Raftery (1994) use Occam’s window to eliminate models with lower posterior probabilities so that the final predictive distribution only takes the average over a smaller set of models, say $A$. Hence, (1) is replaced by

$$f(\Delta|y, A) = \sum_{M_k \in A} f(\Delta|y, M_k)\,P(M_k|y, A),$$

where

$$P(M_k|y, A) = \frac{P(M_k|y)}{\sum_{M_l \in A} P(M_l|y)}.$$
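The weighting in (1) and its restriction to a reduced set of models can be illustrated with a minimal Python sketch. The function names (`posterior_model_probs`, `restrict_to`, `bma_mean`) and the toy numbers are hypothetical and are not part of the original article; the sketch only assumes that the log marginal densities log f(y|M_k) are already available and, by default, that all models have equal prior probability.

```python
import math

def posterior_model_probs(log_marginals, log_priors=None):
    """Return P(M_k | y) from log f(y | M_k) and log P(M_k).

    Uses a log-sum-exp shift so that very small marginal densities
    do not underflow. Equal priors are assumed when none are given.
    """
    k = len(log_marginals)
    if log_priors is None:
        log_priors = [-math.log(k)] * k
    scores = [lm + lp for lm, lp in zip(log_marginals, log_priors)]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]

def restrict_to(probs, selected):
    """Return P(M_k | y, A): renormalise over the model indices in `selected`."""
    mass = sum(probs[k] for k in selected)
    return {k: probs[k] / mass for k in selected}

def bma_mean(pred_means, weights):
    """Mean of the averaged predictive density: weighted mean of per-model means."""
    return sum(w * pred_means[k] for k, w in weights.items())

# Toy example with three models whose marginal densities are in ratio 6:3:1.
probs = posterior_model_probs([math.log(6.0), math.log(3.0), math.log(1.0)])
weights_in_a = restrict_to(probs, [0, 1])          # drop the third model
averaged = bma_mean([10.0, 13.0, 100.0], weights_in_a)
```

Only ratios of posterior probabilities matter after renormalisation, which is why the reduced set can be handled without evaluating all 2^p models.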

To determine A, they screen all models based on the following principles:

1. A model with posterior probability far below the “best” model should be eliminated.

2. A model containing “better” submodel(s) should be eliminated.

Here, the best model means the model with the highest posterior probability and a submodel means the one whose set of predictors is a subset of those in another model. More

precisely, Raftery et al. (1997) propose the down- and up-algorithm to implement the

Occam’s razor in regression models. Given a set of initial models I, the algorithm begins

with the down-algorithm by examining all submodels of each model in I based on Occam’s

razor. Assume M0 ⊂ M1 ∈ I. The Occam’s razor chooses the model(s) by the posterior

odds, P (M1 |y)/P (M0 |y), through a predetermined interval [OL , OR ]. The initial M1 is

selected and M0 is deleted if P (M1 |y)/P (M0 |y) > OR ; while it keeps M0 but eliminates

M1 if P (M1 |y)/P (M0 |y) < OL . Both models are retained when the posterior odds falls

into $[O_L, O_R]$. Furthermore, if $M_1$ has already been deleted (in any previous step), $M_0$ is eliminated automatically. The up-algorithm then proceeds, again using Occam’s razor, to examine all the super-models (models that contain the original one) of each model retained by the down-algorithm. The resulting models form the set $A$ of candidate models. Because this method performs the down- and up-algorithm only once, it may easily miss some probable models unless $O_L$ and $O_R$ are adjusted to enlarge the window or more models are included in the initial set, either of which heavily increases the computational cost. Details can be found in Madigan and Raftery (1994) and Raftery et al. (1997).
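The posterior-odds comparison between a model $M_1$ and one of its submodels $M_0$ described above can be sketched as a small Python function. The name `occams_razor` and the returned pair of flags are illustrative choices, not the implementation of Raftery et al. (1997); the sketch covers only the three-way decision on the odds, not the bookkeeping of the full down- and up-algorithms.

```python
def occams_razor(p_m1, p_m0, o_l, o_r):
    """Compare M1 with its submodel M0 using the posterior odds P(M1|y)/P(M0|y).

    Returns a pair (keep_m1, keep_m0):
      odds > o_r        -> keep M1, delete M0
      odds < o_l        -> keep M0, delete M1
      o_l <= odds <= o_r -> keep both
    Unnormalised posterior probabilities are fine because only the ratio is used.
    """
    odds = p_m1 / p_m0
    if odds > o_r:
        return True, False
    if odds < o_l:
        return False, True
    return True, True

# Window [1/20, 20]: strong evidence for the larger model removes the submodel.
strong_for_m1 = occams_razor(0.60, 0.01, 1 / 20, 20)
strong_for_m0 = occams_razor(0.01, 0.60, 1 / 20, 20)
undecided = occams_razor(0.30, 0.20, 1 / 20, 20)
```

A wider window (smaller `o_l`, larger `o_r`) makes the "keep both" branch more frequent, which matches the remark that larger windows retain more models.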


3. Model Walking Algorithm

To increase the computational efficiency, we propose a new algorithm to decide a better set of models over which the average is taken. The basic idea is to begin with one single model and only examine its neighborhood models with relatively higher posterior probabilities. The neighborhood models of a model $M$ are defined by its sub-neighborhood, $N^-(M)$, and super-neighborhood, $N^+(M)$, where $N^-(M)$ contains all sub-models of $M$ with exactly one predictive variable fewer than $M$, and $N^+(M)$ contains all super-models of $M$ with exactly one predictive variable more than $M$. Define $N^-(M) = \emptyset$ if $M$ has only one predictor, and $N^+(M) = \emptyset$ if $M$ includes all predictors.

The procedure is iterative. Beginning with a prespecified initial model $M$, we first examine its sub-neighborhood $N^-(M)$. For each $M^- \in N^-(M)$, we compute the posterior odds $R^- = P(M|y)/P(M^-|y)$ and $R_A^- = P_M/P(M^-|y)$, where $P_M$ is the maximum posterior probability among all models considered so far and is updated at each iteration. For predetermined threshold values $B^-$ and $B_A^-$, if $R^- < B^-$ and $R_A^- < B_A^-$, then $M^-$ is a “good” model and we put it into $\mathcal{C}$ so that its neighborhood models will be examined in turn; otherwise, the search from $M^-$ is stopped and $M^-$ is put into $\mathcal{D}$. Repeat this procedure for each model in $N^+(M)$, and move $M$ into $\mathcal{D}$ afterwards. Therefore, $\mathcal{C}$ contains the good models whose neighborhood models are yet to be examined, and $\mathcal{D}$ includes models that either are not good (which should be eliminated in the end) or are good models whose neighborhood models have already been checked. The procedure continues to check the neighborhood models of all models in $\mathcal{C}$ until no good model is left, i.e., $\mathcal{C} = \emptyset$. The resulting $\mathcal{D}$ is the set of final candidate models. The final task is to delete those models that are not good. We follow the first principle of Madigan and Raftery (1994) above to exclude

$$\mathcal{D}' = \left\{ M \in \mathcal{D} : \frac{\max_{M' \in \mathcal{D}} P(M'|y)}{P(M|y)} > C \right\} \tag{2}$$

from $\mathcal{D}$ and get $A = \mathcal{D} - \mathcal{D}'$, where $C$ is a predetermined threshold value. The following is the algorithm.

Step 1: Specification: specify the initial model $M$ and the positive threshold values $B^-$, $B^+$, $B_A^-$, $B_A^+$, and $C$.

Step 2: Initialization: let $\mathcal{C} = \emptyset$ and $P_M = P(M|y)$.

Step 3: Searching in $N^-(M)$: for each $M^- \in N^-(M)$, replace $P_M$ by $\max\{P_M, P(M^-|y)\}$. Move $M^-$ to $\mathcal{C}$ if $P(M|y)/P(M^-|y) < B^-$ and $P_M/P(M^-|y) < B_A^-$; otherwise, move $M^-$ to $\mathcal{D}$.

Step 4: Searching in $N^+(M)$: for each $M^+ \in N^+(M)$, repeat Step 3 with $M^-$, $N^-(M)$, $B^-$, and $B_A^-$ replaced by $M^+$, $N^+(M)$, $B^+$, and $B_A^+$, respectively. Move $M$ to $\mathcal{D}$ after the search.

Step 5: Iterative search: if $\mathcal{C} \neq \emptyset$, take $M$ to be any model in $\mathcal{C}$ and repeat Step 3 and Step 4.

Step 6: Finalization: define $A = \mathcal{D} - \mathcal{D}'$, where $\mathcal{D}'$ is given by (2).
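Steps 1 to 6 can be sketched in Python as follows. This is an illustrative reading of the steps, not the authors' code. Models are represented as frozensets of predictor indices, `post` is a user-supplied function returning a posterior probability that may be unnormalised (only ratios are used), and all function and parameter names are hypothetical. Two details are assumptions that the steps leave open: a model is classified only the first time it is encountered, so the walk terminates, and "any model" in Step 5 is taken in first-in, first-out order.

```python
from collections import deque

def sub_neighbors(model):
    """N^-(M): drop one predictor; empty when M has a single predictor."""
    if len(model) <= 1:
        return []
    return [model - {j} for j in sorted(model)]

def super_neighbors(model, p):
    """N^+(M): add one of the p predictors; empty when M contains them all."""
    return [model | {j} for j in range(p) if j not in model]

def model_walk(post, init, p, b_minus, b_plus, ba_minus, ba_plus, c):
    """Return the candidate set A = D - D' of the model walking algorithm."""
    m = frozenset(init)
    queue = deque()   # good models still to be examined (the set C in the text)
    done = []         # rejected or already examined models (the set D)
    seen = {m}        # assumption: each model is classified at most once
    p_max = post(m)   # Step 2: running maximum P_M
    while True:
        searches = ((sub_neighbors(m), b_minus, ba_minus),       # Step 3
                    (super_neighbors(m, p), b_plus, ba_plus))    # Step 4
        for neighbors, b, ba in searches:
            for nb in neighbors:
                if nb in seen:
                    continue
                seen.add(nb)
                p_nb = post(nb)
                p_max = max(p_max, p_nb)
                if post(m) / p_nb < b and p_max / p_nb < ba:
                    queue.append(nb)
                else:
                    done.append(nb)
        done.append(m)
        if not queue:             # Step 5: stop when C is empty
            break
        m = queue.popleft()
    best = max(post(x) for x in done)
    return {x for x in done if best / post(x) <= c}   # Step 6, using (2)
```

In practice `post` would evaluate the marginal density of each model (with equal prior probabilities the posterior ratios reduce to ratios of marginal densities), and caching its values would avoid recomputing them for repeated lookups.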


It is recommended in Step 1 to choose a model whose predictive variables are highly

correlated with the response variable. This can be determined by choosing variables with

relatively higher sample correlation coefficients from the data, for example.
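The recommendation for Step 1 can be sketched as a simple screening rule. The helper names `pearson` and `initial_model` are hypothetical, and the use of the absolute value of the correlation is an assumption made here so that strongly negatively correlated predictors are also picked up; the article only speaks of "relatively higher" sample correlations. The default cut-off of 0.3 is the value used later in the simulation study.

```python
import math

def pearson(x, y):
    """Sample correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def initial_model(columns, y, threshold=0.3):
    """Indices of predictors whose |correlation| with y is at least `threshold`.

    `columns` holds one sequence of observations per predictor.
    """
    return frozenset(j for j, col in enumerate(columns)
                     if abs(pearson(col, y)) >= threshold)

# Toy data: predictors 0 and 2 track the response, predictor 1 barely does.
columns = [[1, 2, 3, 4, 5], [2, 1, 2, 1, 2], [5, 4, 3, 2, 1]]
response = [1.1, 1.9, 3.2, 3.9, 5.0]
start = initial_model(columns, response)
```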

The model walking algorithm begins with only one single model but enlarges the searching area by “walking” around its neighborhood models, unlike the Occam’s window, which begins with several models but only searches over their sub- and super-models once. The threshold values limit the search: $B^-$ and $B^+$ control the relative difference of posterior probabilities, while $B_A^-$ and $B_A^+$ restrict the absolute difference from the most probable model. The algorithm therefore makes detailed searches in an area that is considerably reduced from all possible models.


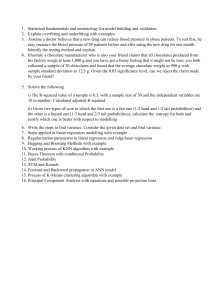

4. Comparison and Simulation

We compare the two algorithms via simulation in linear regression models. Each comparison

study is based on the same simulated datasets. In what follows, there are 10 possible

predictive variables (p = 10) and the data are simulated from

$$Y = \beta_0 \mathbf{1} + \sum_{j=1}^{p} \beta_j X_j + \epsilon, \tag{3}$$

where $\mathbf{1} = (1, \dots, 1)^t$ is an $n$-vector of 1’s, $Y = (Y_1, \dots, Y_n)^t$, $X_j = (X_{1j}, \dots, X_{nj})^t$, and the random error vector $\epsilon = (\epsilon_1, \dots, \epsilon_n)^t$ follows the $n$-variate normal distribution $N_n(0, \sigma^2 I)$. Assume that the prior distribution of $\beta = (\beta_0, \beta_1, \dots, \beta_{10})^t$ is $N_{11}(\mu, \sigma^2 V)$ and that of $\sigma^2$ is inverse-gamma, $IG(\frac{\nu}{2}, \frac{\nu\lambda}{2})$, where $\mu$, $V$, $\nu$, and $\lambda$ are hyperparameters. Following Raftery et al. (1997), let $\nu = 2.58$, $\lambda = 0.28$, and $\mu = (\hat\beta_0, 0, \dots, 0)^t$, in which $\hat\beta_0$ is the usual least squares estimate of the constant term $\beta_0$. The variance-covariance matrix $V$ is a diagonal matrix with diagonal elements $(\sigma^2 s_Y^2, \sigma^2\phi^2 s_1^{-2}, \sigma^2\phi^2 s_2^{-2}, \dots, \sigma^2\phi^2 s_{10}^{-2})$, where $s_Y^2$ and $s_i^2$ are the sample variances of the response variable and the predictive variables correspondingly and $\phi = 2.85$. Under model $M_k$, let $X_{(k)} = (X_{k1}, \dots, X_{kp_k})$ be the corresponding design matrix; then, given $\sigma^2$, $X_{(k)}$, and all the above hyperparameters, the conditional marginal distribution of $Y|\sigma^2$ is $N_n(X_{(k)}\mu, \sigma^2\Sigma_k)$, where $\Sigma_k = I_n + X_{(k)} V X_{(k)}^t$. Therefore, after integrating out $\sigma^2$ with respect to the $IG(\frac{\nu}{2}, \frac{\nu\lambda}{2})$ prior distribution, the marginal density of $Y$ under $M_k$ is

$$f(y|M_k) = \frac{\Gamma\!\left(\frac{\nu+n}{2}\right)(\nu\lambda)^{\nu/2}}{\pi^{n/2}\,\Gamma\!\left(\frac{\nu}{2}\right)|\Sigma_k|^{1/2}} \left[(y - X_{(k)}\mu)^t\,\Sigma_k^{-1}\,(y - X_{(k)}\mu) + \nu\lambda\right]^{-\frac{\nu+n}{2}}. \tag{4}$$

(See Raftery et al. (1997) for details.) Suppose each model has equal prior probability, $2^{-10}$.
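The marginal density of $Y$ under a model is usually evaluated on the log scale, since its value underflows quickly as $n$ grows. The following is a minimal sketch using NumPy, with $\Sigma_k = I_n + X_{(k)} V X_{(k)}^t$ as the scale matrix. The function name `log_marginal_likelihood` and the toy data are hypothetical. The caller is assumed to pass the sub-vector of the prior mean and the sub-matrix of $V$ that match the columns of the design matrix, and the toy example uses the sample mean of the response as a stand-in for the least squares estimate of the constant term.

```python
import math
import numpy as np

def log_marginal_likelihood(y, X, mu, V, nu=2.58, lam=0.28):
    """Log marginal density of y for design matrix X (n x q).

    mu (length q) and V (q x q) are the prior mean and prior scale matrix
    restricted to the columns of X; nu and lam are the inverse-gamma
    hyperparameters.
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    mu = np.asarray(mu, dtype=float)
    V = np.asarray(V, dtype=float)
    n = y.shape[0]
    sigma_k = np.eye(n) + X @ V @ X.T
    resid = y - X @ mu
    _, logdet = np.linalg.slogdet(sigma_k)              # log |Sigma_k|
    quad = float(resid @ np.linalg.solve(sigma_k, resid))
    return (math.lgamma((nu + n) / 2) + (nu / 2) * math.log(nu * lam)
            - (n / 2) * math.log(math.pi) - math.lgamma(nu / 2)
            - 0.5 * logdet
            - ((nu + n) / 2) * math.log(quad + nu * lam))

# Toy comparison: the response depends linearly on x, so the model that
# contains x should receive the larger marginal density.
phi = 2.85
x = np.arange(20.0)
y_toy = 2.0 * x
X_small = np.ones((20, 1))                       # intercept only
X_big = np.column_stack([np.ones(20), x])        # intercept and x
V_small = np.diag([y_toy.var(ddof=1)])
V_big = np.diag([y_toy.var(ddof=1), phi ** 2 / x.var(ddof=1)])
log_small = log_marginal_likelihood(y_toy, X_small, [y_toy.mean()], V_small)
log_big = log_marginal_likelihood(y_toy, X_big, [y_toy.mean(), 0.0], V_big)
```

With equal prior probabilities, the posterior odds of the two toy models are `math.exp(log_big - log_small)`, so differences of these log values are all that a search algorithm needs.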

To compare P (Mk |y), k = 1, . . . , K, we only need to compute (4). We consider various

values of n and σ in the simulation study. The true value of β is given in Table 1 from which

we see that variables $X_1$ and $X_2$ have no effect at all on $Y$.

Table 1
True values of the regression coefficients

| β0 | β1 | β2 | β3 | β4 | β5 | β6 | β7 | β8 | β9 | β10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 5.1 | 0 | 0 | 2.459 | 1.456 | 0.734 | 0.456 | 0.231 | 0.1342 | 0.052 | 0.00321 |

The predictive variables $\{x_{ij}\}$, $i = 1, \dots, n$, $j = 1, \dots, 10$, are generated independently from $U(0, 30)$ for each combination of $n$ and $\sigma$, and the response variables $\{y_i\}_{i=1}^{n}$ are then simulated from (3). We apply

both Occam’s window and model walking algorithms to determine the final candidate sets of

models, denoted by $A_{old}$ and $A_{new}$, respectively. Five hundred simulation runs are conducted in each case, and the average total probabilities of models in $A_{old}^c$ and $A_{new}^c$ are recorded (denoted by L). These two sets contain the models not selected by the Occam’s window and the proposed algorithm, respectively, and we refer to this probability as the missing probability from now on.

Table 2
Average numbers of examined models (V) and missing probabilities (L) using model walking algorithm with good initial model (columns indexed by $B^+/B^-$)

| n | σ | V (1/1) | L (1/1) | V (2/2) | L (2/2) | V (3/3) | L (3/3) | V (5/5) | L (5/5) | V (7.5/7.5) | L (7.5/7.5) | V (10/10) | L (10/10) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 40 | 3 | 34.244 | 0.12978 | 38.632 | 0.08470 | 42.852 | 0.06431 | 52.786 | 0.04051 | 65.912 | 0.02529 | 78.592 | 0.01695 |
| 40 | 5 | 31.928 | 0.14802 | 36.962 | 0.10112 | 42.356 | 0.07602 | 54.69 | 0.04582 | 70.104 | 0.02848 | 85.444 | 0.01828 |
| 40 | 10 | 27.414 | 0.20324 | 34.864 | 0.13180 | 43.936 | 0.09382 | 63.144 | 0.05775 | 85.088 | 0.03468 | 103.052 | 0.02274 |
| 40 | 15 | 30.492 | 0.19570 | 37.824 | 0.13441 | 44.936 | 0.10043 | 63.328 | 0.06216 | 89.226 | 0.03773 | 112.56 | 0.02377 |
| 40 | 20 | 29.97 | 0.21166 | 37.034 | 0.14686 | 45.314 | 0.11190 | 65.738 | 0.06946 | 91.896 | 0.04270 | 117.67 | 0.02755 |
| 60 | 3 | 36.506 | 0.07941 | 39.344 | 0.05509 | 42.558 | 0.04066 | 49.342 | 0.02814 | 58.078 | 0.01879 | 66.532 | 0.01306 |
| 60 | 5 | 32.56 | 0.10580 | 36.658 | 0.06761 | 40.648 | 0.05134 | 49.238 | 0.03219 | 59.564 | 0.02090 | 69.46 | 0.01466 |
| 60 | 10 | 26.86 | 0.14416 | 31.716 | 0.09420 | 36.714 | 0.07227 | 48.462 | 0.04450 | 60.76 | 0.02994 | 72.404 | 0.02124 |
| 60 | 15 | 26.634 | 0.15511 | 32.324 | 0.10577 | 38.03 | 0.07944 | 50.898 | 0.05178 | 68.482 | 0.03271 | 85.338 | 0.02230 |
| 60 | 20 | 24.666 | 0.17049 | 30.764 | 0.11376 | 37.1 | 0.08593 | 50.534 | 0.05703 | 68.418 | 0.03781 | 87.072 | 0.02548 |
| 80 | 3 | 38.392 | 0.05996 | 41.35 | 0.03689 | 43.536 | 0.02922 | 48.476 | 0.01897 | 55.184 | 0.01375 | 61.91 | 0.01047 |
| 80 | 5 | 34.344 | 0.08607 | 38.258 | 0.05401 | 42.086 | 0.03902 | 49.794 | 0.02391 | 57.092 | 0.01700 | 64.734 | 0.01229 |
| 80 | 10 | 27.782 | 0.10889 | 31.262 | 0.07632 | 35.38 | 0.05428 | 44.326 | 0.03516 | 54.036 | 0.02431 | 61.434 | 0.01854 |
| 80 | 15 | 23.086 | 0.13463 | 27.714 | 0.09326 | 32.832 | 0.06821 | 44.164 | 0.04466 | 58.7426 | 0.02910 | 71.726 | 0.02044 |
| 80 | 20 | 20.612 | 0.13897 | 24.848 | 0.09955 | 29.402 | 0.07799 | 39.14 | 0.05246 | 54.636 | 0.03331 | 67.934 | 0.02387 |
| 100 | 15 | 21.678 | 0.11906 | 25.73 | 0.07985 | 29.968 | 0.06177 | 39.258 | 0.03945 | 50.454 | 0.02635 | 61.962 | 0.01842 |
| 100 | 20 | 19.324 | 0.13135 | 23.494 | 0.08902 | 28.358 | 0.06382 | 37.2 | 0.04361 | 48.082 | 0.03178 | 61.55 | 0.02203 |

The average number of models searched by each algorithm is also recorded (denoted by V), which can roughly

represent the computational cost. For simplicity, we use $C = 20$, $B^+ = B^-$, and $B_A^+ = B_A^- = 5C$ in the model walking algorithm and some popular choices of $O_L$ and $O_R$ for the Occam’s window.

Table 3
Average numbers of examined models (V) and missing probabilities (L) using up- and down-algorithm with good initial model (columns indexed by $O_L^{-1}/O_R$)

| n | σ | V (5/5) | L (5/5) | V (10/5) | L (10/5) | V (10/10) | L (10/10) | V (15/10) | L (15/10) | V (15/15) | L (15/15) | V (20/15) | L (20/15) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 40 | 3 | 67.594 | 0.28011 | 68.262 | 0.26847 | 121.488 | 0.17138 | 122.094 | 0.16660 | 198.894 | 0.07027 | 201.478 | 0.06810 |
| 40 | 5 | 63.066 | 0.30386 | 64.162 | 0.29090 | 119.972 | 0.18235 | 120.83 | 0.17705 | 201.774 | 0.07291 | 202.476 | 0.06998 |
| 40 | 10 | 58.104 | 0.35044 | 59.96 | 0.33432 | 125.418 | 0.20981 | 127.782 | 0.20275 | 229.108 | 0.08698 | 230.8 | 0.08309 |
| 40 | 15 | 51.612 | 0.37831 | 55.804 | 0.36611 | 110.67 | 0.24235 | 115.484 | 0.23720 | 230.896 | 0.11252 | 241.72 | 0.10915 |
| 40 | 20 | 55.6 | 0.39800 | 62.304 | 0.38259 | 136.148 | 0.25404 | 146.446 | 0.24705 | 306.692 | 0.12059 | 321.204 | 0.11663 |
| 60 | 3 | 67.15 | 0.24025 | 67.532 | 0.22667 | 105.592 | 0.15791 | 106.264 | 0.15223 | 157.858 | 0.09760 | 157.858 | 0.09497 |
| 60 | 5 | 59.668 | 0.26067 | 60.176 | 0.24742 | 98.962 | 0.16460 | 99.294 | 0.16071 | 150.014 | 0.10031 | 150.148 | 0.09773 |
| 60 | 10 | 49.964 | 0.31177 | 50.562 | 0.29659 | 87.398 | 0.20517 | 88.294 | 0.20024 | 147.306 | 0.12902 | 148.242 | 0.12663 |
| 60 | 15 | 41.374 | 0.34818 | 43.882 | 0.33114 | 77.226 | 0.22982 | 79.274 | 0.22409 | 135.314 | 0.14841 | 137.546 | 0.14510 |
| 60 | 20 | 40.148 | 0.36042 | 43.268 | 0.35022 | 80.44 | 0.24696 | 83.462 | 0.24225 | 148.448 | 0.16468 | 153.136 | 0.16049 |
| 80 | 3 | 69.268 | 0.20147 | 69.334 | 0.19267 | 100.738 | 0.14164 | 100.932 | 0.13712 | 136.582 | 0.09930 | 136.832 | 0.09697 |
| 80 | 5 | 63.794 | 0.23043 | 63.916 | 0.21748 | 93.89 | 0.15354 | 94.184 | 0.14874 | 132.898 | 0.10282 | 133.34 | 0.10038 |
| 80 | 10 | 49.004 | 0.27585 | 49.49 | 0.26183 | 78.884 | 0.19165 | 79.214 | 0.18722 | 121.774 | 0.13375 | 121.774 | 0.13198 |
| 80 | 15 | 34.748 | 0.32236 | 36.456 | 0.30685 | 61.482 | 0.21879 | 62.92 | 0.21410 | 98.848 | 0.15713 | 100.446 | 0.15362 |
| 80 | 20 | 31.27 | 0.34055 | 33.416 | 0.32687 | 58.58 | 0.23541 | 61.13 | 0.22984 | 100.292 | 0.16942 | 1001.762 | 0.16712 |
| 100 | 15 | 33.234 | 0.29871 | 34.75 | 0.28532 | 53.134 | 0.20785 | 54.696 | 0.20203 | 79.648 | 0.15644 | 80.73 | 0.15383 |
| 100 | 20 | 30.196 | 0.31463 | 31.732 | 0.30155 | 51.284 | 0.22572 | 53.01 | 0.21984 | 82.482 | 0.16492 | 83.81 | 0.16258 |

We first select the initial model to be the one which includes all the predictive variables that have sample correlation coefficients at least 0.3 with the response variable

and the results of L and V of the two algorithms are listed in Tables 2 and 3, respectively.

Table 4
Average numbers of examined models (V) and missing probabilities (L) using model walking algorithm with inappropriate initial model (columns indexed by $B^+/B^-$)

| n | σ | V (1/1) | L (1/1) | V (2/2) | L (2/2) | V (3/3) | L (3/3) | V (5/5) | L (5/5) | V (7.5/7.5) | L (7.5/7.5) | V (10/10) | L (10/10) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 40 | 3 | 67.562 | 0.12988 | 73.092 | 0.08274 | 78.196 | 0.05988 | 89.736 | 0.03875 | 105.482 | 0.02480 | 122.022 | 0.01597 |
| 40 | 5 | 64.754 | 0.14400 | 70.122 | 0.10193 | 76.512 | 0.07462 | 90.218 | 0.04610 | 107.898 | 0.02874 | 125.682 | 0.01819 |
| 40 | 10 | 58.824 | 0.19595 | 66.346 | 0.12872 | 75.846 | 0.09470 | 94.622 | 0.05909 | 117.122 | 0.03460 | 134.938 | 0.02300 |
| 40 | 15 | 53.348 | 0.22335 | 61.364 | 0.14962 | 71.734 | 0.11041 | 95.33 | 0.06610 | 123.656 | 0.03861 | 148.946 | 0.03409 |
| 40 | 20 | 50.654 | 0.23925 | 57.962 | 0.16863 | 69.854 | 0.12196 | 94.524 | 0.07358 | 125.71 | 0.04289 | 151.956 | 0.02714 |
| 60 | 3 | 71.07 | 0.07973 | 75.27 | 0.05135 | 78.866 | 0.03936 | 85.78 | 0.02734 | 97.036 | 0.01849 | 107.534 | 0.01360 |
| 60 | 5 | 66.874 | 0.10301 | 70.794 | 0.07424 | 75.622 | 0.05437 | 84.914 | 0.03408 | 97.32 | 0.02179 | 108.76 | 0.01524 |
| 60 | 10 | 60.634 | 0.14656 | 66.456 | 0.09549 | 72.766 | 0.06876 | 85.118 | 0.04329 | 99.706 | 0.02856 | 112.656 | 0.02057 |
| 60 | 15 | 54.54 | 0.17880 | 60.98 | 0.11569 | 68.276 | 0.08352 | 83.144 | 0.05251 | 101.53 | 0.03309 | 119.888 | 0.02234 |
| 60 | 20 | 50.954 | 0.17862 | 56.358 | 0.12415 | 62.596 | 0.09372 | 76.62 | 0.06068 | 94.906 | 0.03929 | 112.65 | 0.02707 |
| 80 | 3 | 73.688 | 0.05504 | 76.792 | 0.03541 | 79.538 | 0.02762 | 85.048 | 0.02009 | 93.708 | 0.01414 | 101.23 | 0.01068 |
| 80 | 5 | 69.69 | 0.08219 | 73.94 | 0.05170 | 77.938 | 0.03671 | 85.736 | 0.02422 | 95.814 | 0.01637 | 104.588 | 0.01254 |
| 80 | 10 | 62.318 | 0.10697 | 65.988 | 0.07685 | 70.364 | 0.05581 | 79.718 | 0.03611 | 90.972 | 0.02410 | 101.486 | 0.01693 |
| 80 | 15 | 56.652 | 0.13749 | 62.096 | 0.09203 | 67.368 | 0.06886 | 78.784 | 0.04500 | 94.004 | 0.02933 | 107.82 | 0.02062 |
| 80 | 20 | 522.392 | 0.15773 | 57.248 | 0.10515 | 62.754 | 0.7829 | 75.966 | 0.04949 | 91.086 | 0.03273 | 105.33 | 0.02292 |
| 100 | 15 | 57.834 | 0.11592 | 62.29 | 0.07490 | 66.582 | 0.05615 | 75.438 | 0.03823 | 86.912 | 0.02603 | 98.214 | 0.02200 |
| 100 | 10 | 53.794 | 0.12997 | 58.472 | 0.08486 | 63.384 | 0.06416 | 72.792 | 0.04300 | 84.97 | 0.03010 | 96.122 | 0.02200 |

In contrast, we also consider the initial model to be the least appropriate one that only contains variables $X_1$ and $X_2$, and the corresponding results are shown in Tables 4 and 5.
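One run of the simulation design can be sketched with the Python standard library. The coefficients are the true values from Table 1, the predictors are drawn from U(0, 30), and the errors are normal with standard deviation σ. The function names, the seed handling, and the dictionary representation of posterior probabilities are illustrative assumptions; the original study's code is not available here.

```python
import random

# True coefficients from Table 1: beta_0 followed by beta_1, ..., beta_10.
BETA = [5.1, 0, 0, 2.459, 1.456, 0.734, 0.456, 0.231, 0.1342, 0.052, 0.00321]

def simulate(n, sigma, seed=0):
    """Draw n rows of 10 predictors from U(0, 30) and responses from model (3)."""
    rng = random.Random(seed)
    X = [[rng.uniform(0, 30) for _ in range(10)] for _ in range(n)]
    y = [BETA[0] + sum(b * x for b, x in zip(BETA[1:], row)) + rng.gauss(0, sigma)
         for row in X]
    return X, y

def missing_probability(post_probs, selected):
    """L: total posterior probability of the models outside the selected set.

    `post_probs` maps each model to its posterior probability over all models.
    """
    return sum(p for model, p in post_probs.items() if model not in selected)

X_sim, y_sim = simulate(n=40, sigma=3.0)
toy_missing = missing_probability({"a": 0.5, "b": 0.3, "c": 0.2}, {"a", "b"})
```

Averaging `missing_probability` and the number of examined models over repeated calls to `simulate` with different seeds would mirror how L and V are described in the tables.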

We see from the tables that, in both algorithms, the missing probabilities increase in a longer searching procedure as $\sigma$ increases for each $n$. Conversely, for fixed $\sigma$ they all decrease as the sample size increases.

Table 5
Average numbers of examined models (V) and missing probabilities (L) using up- and down-algorithm with inappropriate initial model (columns indexed by $O_L^{-1}/O_R$)

| n | σ | V (5/5) | L (5/5) | V (10/5) | L (10/5) | V (10/10) | L (10/10) | V (15/10) | L (15/10) | V (15/15) | L (15/15) | V (20/15) | L (20/15) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 40 | 3 | 248.048 | 0.23420 | 248.762 | 0.22099 | 525.324 | 0.13124 | 525.324 | 0.12772 | 887.326 | 0.06049 | 887.326 | 0.05869 |
| 40 | 5 | 237.278 | 0.25844 | 237.878 | 0.24112 | 522.872 | 0.13363 | 522.872 | 0.12868 | 891.446 | 0.05733 | 891.446 | 0.05422 |
| 40 | 10 | 213.772 | 0.29613 | 213.772 | 0.28285 | 497.782 | 0.14950 | 497.782 | 0.14375 | 885.754 | 0.05866 | 885.754 | 0.05504 |
| 40 | 15 | 182.064 | 0.32598 | 182.064 | 0.31193 | 458.644 | 0.17472 | 458.644 | 0.16970 | 866.938 | 0.06583 | 866.938 | 0.06314 |
| 40 | 20 | 157.974 | 0.35110 | 158.604 | 0.33775 | 429.696 | 0.19222 | 429.696 | 0.18749 | 858.76 | 0.07325 | 858.76 | 0.07075 |
| 60 | 3 | 224.866 | 0.20966 | 225.508 | 0.19224 | 427.06 | 0.12905 | 427.06 | 0.12417 | 681.566 | 0.08244 | 681.566 | 0.07981 |
| 60 | 5 | 212.31 | 0.22515 | 212.31 | 0.21514 | 410.09 | 0.13238 | 410.886 | 0.12727 | 676.256 | 0.07607 | 676.256 | 0.07338 |
| 60 | 10 | 185.55 | 0.26895 | 185.636 | 0.25314 | 369.498 | 0.15731 | 369.498 | 0.15226 | 641.302 | 0.08633 | 641.302 | 0.08391 |
| 60 | 15 | 157.024 | 0.30426 | 157.244 | 0.29094 | 338.634 | 0.18463 | 339.676 | 0.17751 | 613.88 | 0.10093 | 614.434 | 0.09662 |
| 60 | 20 | 132.132 | 0.32129 | 132.216 | 0.31051 | 298.628 | 0.20177 | 298.628 | 0.19811 | 587.51 | 0.11350 | 588.194 | 0.11059 |
| 80 | 3 | 218.548 | 0.18899 | 219.276 | 0.17222 | 377.378 | 0.12042 | 377.378 | 0.11526 | 577.768 | 0.08295 | 577.768 | 0.08045 |
| 80 | 5 | 213.88 | 0.20351 | 214.02 | 0.18859 | 371.8 | 0.12455 | 371.8 | 0.12039 | 566.414 | 0.08169 | 566.414 | 0.07958 |
| 80 | 10 | 182.7 | 0.24378 | 182.7 | 0.23068 | 330.774 | 0.14862 | 330.774 | 0.14378 | 528.548 | 0.09428 | 528.548 | 0.09218 |
| 80 | 15 | 147.086 | 0.27914 | 147.392 | 0.26514 | 285.134 | 0.17571 | 285.134 | 0.17181 | 494.258 | 0.10821 | 494.258 | 0.10585 |
| 80 | 20 | 129.954 | 0.29758 | 130.004 | 0.28543 | 256.368 | 0.19325 | 256.368 | 0.18801 | 455.82 | 0.12315 | 437.48 | 0.10943 |
| 100 | 15 | 151.22 | 0.26339 | 151.412 | 0.24643 | 268.802 | 0.16842 | 268.802 | 0.16292 | 437.48 | 0.11185 | 437.48 | 0.10943 |
| 100 | 20 | 129.192 | 0.28102 | 129.192 | 0.26847 | 234.342 | 0.18811 | 234.758 | 0.18231 | 389.15 | 0.1270 | 389.15 | 0.12465 |

In the first case, when the initial model is chosen meaningfully, the proposed algorithm yields missing probabilities almost all under 10% except in small samples or in more uncertain cases with $B^+ = 1$. More models are examined for larger threshold values and therefore fewer models are missed. On the other hand, the Occam’s window cannot keep the missing probability under 10% even with

$O_L = 1/15$ and $O_R = 15$, and it must examine between 121 and 321 models on the average when $n = 40$.

Table 6
Average numbers of examined models (V) and missing probabilities (L) using model walking algorithm with maximum initial model (columns indexed by $B^+/B^-$)

| n | σ | V (1/1) | L (1/1) | V (2/2) | L (2/2) | V (3/3) | L (3/3) | V (5/5) | L (5/5) | V (7.5/7.5) | L (7.5/7.5) | V (10/10) | L (10/10) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 40 | 3 | 59.108 | 0.10650 | 64.786 | 0.06258 | 67.985 | 0.04507 | 74.396 | 0.02876 | 84.489 | 0.01804 | 94.036 | 0.01214 |
| 40 | 5 | 61.176 | 0.13057 | 68.01 | 0.07980 | 72.228 | 0.05682 | 81.656 | 0.03617 | 95.172 | 0.02155 | 106.838 | 0.01400 |
| 40 | 10 | 67.338 | 0.19582 | 77.33 | 0.11773 | 86.076 | 0.08076 | 101.784 | 0.05043 | 121.064 | 0.03020 | 136.144 | 0.01991 |
| 40 | 15 | 75.216 | 0.21923 | 86.994 | 0.13455 | 97.322 | 0.09320 | 117.428 | 0.05672 | 144.344 | 0.03302 | 168.394 | 0.02019 |
| 40 | 20 | 77.914 | 0.23237 | 91.18 | 0.14128 | 101.526 | 0.10251 | 122.41 | 0.06521 | 149.694 | 0.03986 | 174.608 | 0.02499 |
| 60 | 3 | 54.572 | 0.07535 | 58.904 | 0.04216 | 61.832 | 0.02968 | 67.114 | 0.01774 | 73.356 | 0.01190 | 79.08 | 0.00861 |
| 60 | 5 | 57.946 | 0.09837 | 63.068 | 0.05634 | 66.926 | 0.03795 | 74.07 | 0.02221 | 82.48 | 0.01424 | 90.148 | 0.00970 |
| 60 | 10 | 64.18 | 0.14572 | 70.99 | 0.08885 | 76.366 | 0.06211 | 87.308 | 0.03753 | 99.888 | 0.02325 | 110.06 | 0.01652 |
| 60 | 15 | 71.028 | 0.17477 | 80.216 | 0.10386 | 86.51 | 0.07535 | 100.406 | 0.04635 | 117.318 | 0.02883 | 132.622 | 0.01983 |
| 60 | 20 | 74.168 | 0.18092 | 82.7 | 0.11403 | 89.424 | 0.08257 | 102.846 | 0.05274 | 120.78 | 0.03395 | 136.998 | 0.01994 |
| 80 | 3 | 49.87 | 0.05197 | 52.932 | 0.03064 | 55.02 | 0.02151 | 59.176 | 0.01296 | 63.79 | 0.00876 | 67.614 | 0.00642 |
| 80 | 5 | 55.774 | 0.08195 | 60.6 | 0.04160 | 63.748 | 0.02804 | 69.022 | 0.01787 | 75.672 | 0.01139 | 81.234 | 0.00805 |
| 80 | 10 | 62.068 | 0.11696 | 68.326 | 0.06840 | 71.812 | 0.05055 | 80.65 | 0.02939 | 90.344 | 0.01882 | 98.458 | 0.01362 |
| 80 | 15 | 68.29 | 0.15184 | 76.03 | 0.08995 | 82.152 | 0.06116 | 93.326 | 0.03713 | 106.16 | 0.02456 | 118.232 | 0.01725 |
| 80 | 20 | 72.022 | 0.16170 | 80.026 | 0.09551 | 85.902 | 0.07064 | 97.71 | 0.04298 | 113.002 | 0.02780 | 124.756 | 0.01994 |
| 100 | 15 | 66.05 | 0.12989 | 72.868 | 0.07369 | 77.666 | 0.05216 | 86.7 | 0.03221 | 97.764 | 0.02106 | 106.792 | 0.01994 |
| 100 | 20 | 69.758 | 0.14975 | 77.598 | 0.08687 | 83.506 | 0.05804 | 93.282 | 0.03680 | 103.704 | 0.02593 | 115.27 | 0.01812 |

However, the model walking algorithm searches at most 117 models with less

than 3% missing probability in such an extreme case. It performs much better than Occam’s window when the initial model is poorly selected, as shown in Tables 4 and 5. Again almost all settings of $B^-$ yield missing probabilities under 10% (in fact only 4 are slightly above 10% when $B^+/B^- \geq 2$). The corresponding searching procedures are relatively longer but

Table 7

Average numbers of examined models (V) and missing probabilities (L) using up- and

down-algorithm with maximum initial model

OL−1 /OR


n

40

σ

3

5

10

15

20

60

3

5

10

15

20

80

3

5

10

15

20

100

15

20

V

L

V

L

V

L

V

L

V

L

V

L

V

L

V

L

V

L

V

L

V

L

V

L

V

L

V

L

V

L

V

L

V

L

5/5

10/5

10/10

15/10

15/15

20/15

293.016

0.31055

343.512

0.32831

528.434

0.35868

664.596

0.37069

774.768

0.39011

229.32

0.25990

278.816

0.28134

449.434

0.32147

609.868

0.34335

705.598

0.36000

180.128

0.23036

248.984

0.25331

387.436

0.29592

550.41

0.31705

662.914

0.33015

498.464

0.29722

625.626

0.30846

313.242

0.29577

372.353

0.31185

572.438

0.34236

707.718

0.35566

819.124

0.37608

248.666

0.24404

297.336

0.26694

483.616

0.30745

650.204

0.32732

742.426

0.34731

192.59

0.21832

265.116

0.24256

416.206

0.28276

585.792

0.30213

695.08

0.31711

535.42

0.28104

661.12

0.29447

313.286

0.20430

372.452

0.20319

572.916

0.21803

708.252

0.22798

820.222

0.24465

248.666

0.18507

297.342

0.18603

483.82

0.21313

650.316

0.23190

742.782

0.24655

192.59

0.16973

265.126

0.17713

416.296

0.20140

585.856

0.21948

695.158

0.23029

535.442

0.21065

661.144

0.22128

322.874

0.19955

389.134

0.19630

595.132

0.21088

731.3

0.21961

842.342

0.23844

257.56

0.17897

305.692

0.18199

500.094

0.20814

668.392

0.22594

761.002

0.24178

200.96

0.16371

273.614

0.17212

432.946

0.19555

605.288

0.21432

711.986

0.22560

551.79

0.20536

678.366

0.21622

322.932

0.09286

389.512

0.08936

595.056

0.09496

732.926

0.09820

844.912

0.11184

257.56

0.12729

305.714

0.12227

500.326

0.13628

668.612

0.14753

761.536

0.16071

200.976

0.12678

273.63

0.12736

433.108

0.14045

605.43

0.15578

712.094

0.16675

551.868

0.15577

678.4

0.16722

328.91

0.09072

401.422

0.08537

610.508

0.09009

748.134

0.09400

859.116

0.10689

263.092

0.12488

312.33

0.11950

510.99

0.13337

681.082

0.14456

774.04

0.16387

206.304

0.12397

280.222

0.12471

444.092

0.13748

618.888

0.52215

724.09

0.16387

563.792

0.15266

689.386

0.16445

still much shorter than those of Occam’s window, which searches as many as 891 models. Overall, the computational cost of Occam’s window is between 4 and 5 times that of the model walking algorithm, and it misses more models on average. Moreover, it seems that the proposed algorithm is less sensitive to the choice of the initial model.
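
The two quantities reported in the tables can be illustrated with a minimal sketch. It assumes that the missing probability L is the total posterior probability of the models that the search never examines, and that V is the number of distinct models examined. The function name, the representation of a model as a set of predictor indices, and the toy posterior probabilities are all hypothetical and are not taken from the simulation study.

```python
from typing import Dict, FrozenSet, Iterable

# A model is represented by the set of predictor indices it includes.
Model = FrozenSet[int]


def missing_probability(posterior: Dict[Model, float],
                        visited: Iterable[Model]) -> float:
    """Posterior mass of the models that the search never examined.

    Assumes `posterior` holds normalized posterior probabilities over the
    full model space (a hypothetical input, not data from the paper).
    """
    seen = set(visited)
    covered = sum(p for m, p in posterior.items() if m in seen)
    return 1.0 - covered


# Toy model space with two predictors (illustrative numbers only).
posterior = {
    frozenset(): 0.05,
    frozenset({0}): 0.60,
    frozenset({1}): 0.10,
    frozenset({0, 1}): 0.25,
}
visited = [frozenset({0}), frozenset({0, 1})]

V = len(set(visited))                         # number of examined models
L = missing_probability(posterior, visited)   # posterior mass that was missed
print(V, round(L, 2))
```

Under this reading, a search is efficient when V is small relative to the size of the model space while L stays close to zero.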

Table 8
Average numbers of examined models (V) and missing probabilities (L) using the model walking algorithm with the minimum initial model

                                    B^+/B^-
  n   σ          1/1       2/2       3/3       5/5   7.5/7.5     10/10
 40   3  V    51.806    56.672    61.942    73.922    90.882   109.174
         L   0.11750   0.07940   0.06254   0.04111   0.02657   0.01686
      5  V    49.202     55.68     62.78    76.702     96.58   113.858
         L   0.15417   0.09872   0.06916   0.04616   0.02779   0.01867
     10  V    43.406    51.554    61.218    80.412   105.462   126.066
         L   0.20128   0.12835   0.09225   0.05814   0.03405   0.02220
     15  V     38.19    46.744    56.638    79.854   109.182   136.048
         L   0.21734   0.14651   0.10802   0.06760   0.03925   0.02421
     20  V     34.12    42.356    54.116    81.196   113.266   140.416
         L   0.24117   0.16898   0.12317   0.07324   0.04284   0.02710
 60   3  V     55.95    59.866      63.7    71.532    82.434    93.946
         L   0.07823   0.04970   0.03776   0.02604   0.01819   0.01325
      5  V    51.858    56.274     61.33     72.14    86.796    99.406
         L   0.10665   0.07204   0.05323   0.03353   0.02120   0.01466
     10  V    45.142    51.044    56.712    69.876    85.756   100.392
         L   0.14406   0.09166   0.07034   0.04484   0.02908   0.02036
     15  V    39.416    45.696    52.558    69.936     90.77   109.504
         L   0.17280   0.11601   0.08526   0.05177   0.03307   0.02241
     20  V    35.626    41.752    49.138    65.706    86.646    95.112
         L   0.18278   0.12588   0.09394   0.06056   0.03825   0.02631
 80   3  V    58.226     61.41    64.582    71.108    79.842     89.38
         L   0.05573   0.03540   0.02772   0.01927   0.01420   0.01067
      5  V    53.544    57.718    61.768     69.71     79.46     90.01
         L   0.08181   0.04994   0.03598   0.02413   0.01654   0.01222
     10  V    47.074    51.654    56.158    66.544    78.586     89.61
         L   0.11202   0.07696   0.05692   0.03659   0.02481   0.01798
     15  V    40.962    46.084    51.958    64.422    79.522    95.112
         L   0.14380   0.09478   0.06917   0.04419   0.02957   0.02058
     20  V    36.992    42.204    47.764    61.538    78.078    93.142
         L   0.15426   0.10549   0.08122   0.05012   0.03304   0.02330
100  15  V    42.592    47.308    52.322    63.508    75.768    87.974
         L   0.11700   0.07858   0.05822   0.03679   0.02603   0.01904
     20  V    37.874    42.246     47.27    57.094    70.304      83.1
         L   0.13140   0.08629   0.06436   0.04363    0.0287   0.02039

To further examine the sensitivity to the initial model, we additionally consider the two most extreme initial models, namely the one with all 10 predictor variables (the maximum model) and the one without any predictors (the minimum model). Tables 6–9 present the corresponding results. We see once again that the results in Tables 6 and 8 do not differ much. This confirms that the proposed method is indeed not influenced much by the specified

Table 9
Average numbers of examined models (V) and missing probabilities (L) using the up- and down-algorithm with the minimum initial model

                                  O_L^{-1}/O_R
  n   σ          5/5      10/5     10/10     15/10     15/15     20/15
 40   3  V    246.04    246.04   525.806   525.806   886.788   886.788
         L   0.23243   0.22267   0.13172   0.12769   0.05628   0.05411
      5  V   236.666   236.666   522.346   522.346   886.956   886.956
         L   0.25037   0.23812   0.13148   0.12727   0.05440   0.05166
     10  V    213.55    213.55   500.814   500.814   886.424   886.424
         L   0.29414   0.27842   0.14667   0.14058   0.05437   0.05158
     15  V   185.952   185.952   460.214   460.214   869.598   869.598
         L   0.32357   0.31147   0.17002   0.16552   0.06414   0.02421
     20  V   162.008   162.008   436.834   436.834   864.152   864.152
         L   0.35031   0.33892   0.19139   0.18686   0.07178 (missing)
 60   3  V   220.686   220.686    412.27    412.27   665.526   665.526
         L   0.21143   0.19254   0.12626   0.12158   0.08150   0.07913
      5  V   210.428   210.428   412.026   412.026   675.022   675.022
         L   0.22620   0.21209   0.12937   0.12445   0.07473   0.07305
     10  V   184.044   184.044   378.278   378.278    649.65    649.65
         L   0.26907   0.25347   0.15398   0.14863   0.08502   0.08175
     15  V    156.49    156.49   345.606   345.606    623.14    623.14
         L   0.29563   0.28152   0.17538   0.17092   0.09767   0.09558
     20  V    143.44    143.44   315.502   315.502   588.818   588.818
         L   0.31488   0.30366   0.19648   0.19260   0.11300   0.11076
 80   3  V   226.874   226.874    387.16    387.16   584.034   584.034
         L   0.18316   0.17140   0.11941   0.11476   0.08227   0.07998
      5  V   206.234   206.234    359.69    359.69   569.504   569.504
         L   0.20389   0.19039   0.12670   0.12372   0.08370   0.08182
     10  V   179.046   179.046   337.254   337.254    543.95    543.95
         L   0.24765   0.23340   0.15022   0.14597   0.09314   0.09023
     15  V   154.592   154.592   294.214   294.214   500.766   500.766
         L   0.27309   0.25945   0.17446   0.16941   0.10718   0.10530
     20  V   131.624   131.624    262.42    262.42   461.122   461.122
         L   0.29611   0.28350   0.19008   0.18615   0.11977   0.11771
100  15  V    152.15    152.15   270.342   270.342   433.338   433.338
         L   0.26082   0.24644   0.16966   0.16449   0.11296   0.10969
     20  V   128.368   128.368   234.288   234.288    393.43    393.43
         L   0.27584   0.26427   0.18635   0.18336   0.12724   0.12509

initial model. Tables 7 and 9 also show that when the predetermined window O_L^{-1}/O_R is small, the up- and down-algorithm seems less sensitive to the two extreme initial models, although both the computational costs and the missing probabilities remain substantial; for larger O_L^{-1}/O_R, starting from the maximum initial model requires scanning considerably more models. We also note that when σ increases for fixed n, the up- and down-algorithm seems to search fewer models with higher missing probabilities, particularly for large n, whereas when the sample size increases with σ fixed, neither the number of examined models nor the missing probability improves. The number of models searched by the proposed algorithm may not be reduced much, but the missing probabilities are all within acceptable ranges.
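
As a small check of the trend in σ described above, the sketch below copies one column from Table 9 (n = 40, O_L^{-1}/O_R = 5/5) and one from Table 8 (n = 40, B^+/B^- = 10/10), both obtained with the minimum initial model. The choice of these two columns is an assumption made only for illustration; the two tuning settings are not directly comparable, and the helper names are hypothetical.

```python
# Values copied from Table 9 (n = 40, O_L^{-1}/O_R = 5/5) and
# Table 8 (n = 40, B^+/B^- = 10/10); both use the minimum initial model.
sigma = [3, 5, 10, 15, 20]
V_updown = [246.04, 236.666, 213.55, 185.952, 162.008]
L_updown = [0.23243, 0.25037, 0.29414, 0.32357, 0.35031]
V_walk = [109.174, 113.858, 126.066, 136.048, 140.416]
L_walk = [0.01686, 0.01867, 0.02220, 0.02421, 0.02710]


def strictly_decreasing(xs):
    """True if every value is smaller than the one before it."""
    return all(a > b for a, b in zip(xs, xs[1:]))


def strictly_increasing(xs):
    """True if every value is larger than the one before it."""
    return all(a < b for a, b in zip(xs, xs[1:]))


# Up- and down-algorithm: fewer models examined but more mass missed as sigma grows.
fewer_models = strictly_decreasing(V_updown)
more_missing = strictly_increasing(L_updown)

# Model walking algorithm: largest missing probability over the same sigma values.
worst_walk_L = max(L_walk)

print(fewer_models, more_missing, worst_walk_L)
```

For these columns, the up- and down-algorithm examines fewer models and misses more posterior mass as σ grows, while the missing probability of the model walking algorithm stays below 3%.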

5. Concluding Remark

Variable selection is one of the important issues in regression analysis. In practice, however, a single best model may not exist, or there may be many plausible models. Using only one model ignores model uncertainty and may lead to inaccurate predictions. Bayesian model averaging is an alternative from the prediction perspective. However, averaging over all possible models is computationally too expensive when the number of predictors is large. We propose a model walking algorithm that excludes models with small posterior probabilities to increase computational efficiency. Simulation results show that the proposed method compares favorably with Occam’s window using the up- and down-algorithms in terms of both missing probabilities and computational time, and that it is less sensitive to the choice of the initial model. The proposed algorithm can be extended to more sophisticated models such as longitudinal regression models or time series regression models. More general models, such as regression models with t-distributed errors or generalized linear models, may also be considered in combination with Markov chain Monte Carlo methods.
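
The trade-off summarized above, averaging over fewer models at the price of discarding some posterior mass, can be sketched as follows. This is plain thresholding on posterior model probabilities under a uniform model prior, and it is not the model walking algorithm itself. The function names, the `min_prob` parameter, the log marginal likelihoods, and the per-model predictions are all hypothetical.

```python
from math import exp
from typing import Dict, Tuple


def posterior_probs(log_marginal: Dict[str, float]) -> Dict[str, float]:
    """Posterior model probabilities, assuming a uniform prior over models."""
    top = max(log_marginal.values())  # subtract the maximum for numerical stability
    weights = {m: exp(v - top) for m, v in log_marginal.items()}
    total = sum(weights.values())
    return {m: w / total for m, w in weights.items()}


def averaged_prediction(probs: Dict[str, float],
                        preds: Dict[str, float],
                        min_prob: float = 0.0) -> Tuple[float, float]:
    """Model-averaged prediction over models with probability >= min_prob.

    Returns the renormalized prediction and the discarded posterior mass.
    """
    kept = {m: p for m, p in probs.items() if p >= min_prob}
    if not kept:
        raise ValueError("min_prob excludes every model")
    mass = sum(kept.values())
    pred = sum(p * preds[m] for m, p in kept.items()) / mass
    return pred, 1.0 - mass


# With 10 candidate predictors the full model space has 2**10 = 1024 models.
n_models = 2 ** 10

# Toy example with two predictors (illustrative numbers only).
log_marginal = {"{}": -105.0, "{x1}": -100.0, "{x2}": -103.0, "{x1,x2}": -100.7}
preds = {"{}": 2.0, "{x1}": 3.0, "{x2}": 2.4, "{x1,x2}": 3.2}

probs = posterior_probs(log_marginal)
full_pred, full_lost = averaged_prediction(probs, preds)
pruned_pred, pruned_lost = averaged_prediction(probs, preds, min_prob=0.05)
print(n_models, full_pred, pruned_pred, pruned_lost)
```

In this toy case the two low-probability models are dropped, the prediction changes only slightly, and the discarded mass plays the role of the missing probability.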

References

Barnard, G. A. (1963). New methods of quality control. Journal of the Royal Statistical Society, Series A 126:255–258.

Draper, D. (1995). Assessment and propagation of model uncertainty (with discussion). Journal of the Royal Statistical Society, Series B 57:45–97.

Kass, R. E., Raftery, A. E. (1995). Bayes factors. Journal of the American Statistical Association 90:773–795.

Madigan, D., Raftery, A. E. (1994). Model selection and accounting for model uncertainty in graphical models using Occam’s window. Journal of the American Statistical Association 89:1535–1546.

Madigan, D., York, J. (1995). Bayesian graphical models for discrete data. International Statistical Review 63:215–232.

Montgomery, D. C., Peck, E. A., Vining, G. G. (2006). Introduction to Linear Regression Analysis. 4th ed. New York: Wiley.

Raftery, A. E., Madigan, D., Hoeting, J. A. (1997). Bayesian model averaging for linear regression models. Journal of the American Statistical Association 92:179–191.