Lawrence J. Raphael, Gloria J. Borden, Katherine S. Harris - Speech Science Primer: Physiology, Acoustics, and Perception of Speech, Sixth Edition (2011, Lippincott Williams & Wilkins)

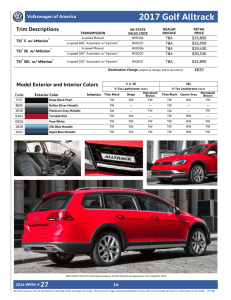
