High fidelity, physics-based sensor simulation for military and civil applications

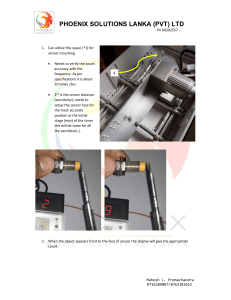

Feature

Chris Blasband, Jim Bleak and Gus Schultz

The authors
Chris Blasband, Jim Bleak and Gus Schultz are all based at Evans and Sutherland Computer Corporation, 600 Komas Drive, Salt Lake City, UT 84108, USA. Tel: (801) 588 1000; E-mail: cblasban@es.com

Keywords
Sensors, Simulation, Training

Abstract
As real-time, high-fidelity visual scene simulation has become ubiquitous in the training, modeling and simulation community, a growing need for more than “out-the-window” scene simulation has developed. A strong requirement has developed for the ability to simulate the output of different types of sensors, especially electro-optical (EO), infrared (IR), night vision goggle (NVG) and radar systems. To satisfy the need for advanced sensor simulation, Evans & Sutherland (E&S) has developed a physics-based, dynamic, real-time sensor simulation which allows users to model advanced EO, IR and NVG devices that are fully correlated with the “out-the-window” visual view. In this paper, the unique sensor simulation capabilities of E&S are described. A brief description of the physics employed and of the input and output is presented, along with example images.

Electronic access
The Emerald Research Register for this journal is available at www.emeraldinsight.com/researchregister
The current issue and full text archive of this journal is available at www.emeraldinsight.com/0260-2288.htm

Sensor Review
Volume 24 · Number 2 · 2004 · pp. 151–155
© Emerald Group Publishing Limited · ISSN 0260-2288
DOI 10.1108/02602280410525940

Introduction
Until recently, the training experience for civil and military personnel has been focused on providing a realistic visual view or “out-the-window” view as seen by a pilot, tank operator or soldier on the ground. The world is relying more and more heavily on sensors in everyday life. The military utilizes sensors in almost all operations, as most battles are fought at night.
Civil airliners have sophisticated radar onboard for weather avoidance and terrain avoidance. Currently, many civil aircraft are being equipped with advanced infrared (IR) sensors to aid in landing under adverse weather conditions. As such, the requirement for real-time simulation (60 Hz update rates) of advanced sensors has become critical and will continue to grow over time. Real-time sensor simulation has become a reality because of advances in custom and commercial off-the-shelf (COTS) graphics hardware and software.

The goal of simulating a passive sensor (electro-optical (EO), night vision goggle (NVG), IR) display in real-time is to provide the sensor operator or pilot with a realistic, accurate scene brightness distribution that correlates fully with the out-the-window view. The simulation of a passive sensor has two main components:
(1) calculation of the “at-aperture” radiance scene, and
(2) generation of sensor effects.

The “at-aperture” radiance scene represents the amount of energy being received from all objects in the scene, through the atmosphere and into the sensor aperture. It is dependent upon the time of day, time of year, position on earth, material composition of the objects and terrain, weather, atmosphere, sensor waveband and other phenomenological components. Passive imaging sensors utilize optics, detectors, and signal and image processing techniques to process the at-aperture energy into a grayscale radiance image that can be displayed on a monitor or other display. The components of a sensor tend to blur, distort, and degrade the image, creating sensor effects. To properly simulate the final sensor display, both at-aperture radiance and sensor effects must be accurately modeled.
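The two-component structure described above can be illustrated with a small, purely schematic sketch. It takes an already computed at-aperture radiance image and applies stand-in sensor effects before mapping the result to gray levels. Everything specific here is an assumption made for illustration and is not taken from the E&S implementation: the 3x3 box blur, the additive Gaussian noise, the min/max scaling to 8-bit gray, and all function and parameter names.

```python
import random
from typing import List

Image = List[List[float]]


def box_blur(img: Image) -> Image:
    """3x3 mean filter; border pixels average over the neighbours that exist."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total, count = 0.0, 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        total += img[rr][cc]
                        count += 1
            out[r][c] = total / count
    return out


def add_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise (an assumed, simplified noise model)."""
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in img]


def to_gray(img: Image, levels: int = 256) -> List[List[int]]:
    """Linearly map the image's min..max range onto 0..levels-1 gray values."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:
        return [[0 for _ in row] for row in img]
    scale = (levels - 1) / (hi - lo)
    return [[int(round((v - lo) * scale)) for v in row] for row in img]


def simulate_display(radiance: Image, noise_sigma: float = 0.0, seed: int = 0) -> List[List[int]]:
    """Stage 2 only: at-aperture radiance in, degraded grayscale display out."""
    rng = random.Random(seed)
    return to_gray(add_noise(box_blur(radiance), noise_sigma, rng))


# Hypothetical 5x5 radiance scene with a single bright pixel in the centre.
scene = [[0.0] * 5 for _ in range(5)]
scene[2][2] = 9.0
display = simulate_display(scene, noise_sigma=0.0)
```

In this toy scene the blur spreads the single bright pixel over its 3x3 neighbourhood, which is the kind of degradation the text attributes to sensor components. A real-time system would perform such steps in dedicated hardware rather than in interpreted code.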
To satisfy the need for advanced passive sensor simulation in real-time, E&S has developed, as part of its EPX technology [1], state-of-the-art software and hardware to generate very accurate, realistic EO, IR and NVG displays.

Material classification of visual textures
In general, the database required for sensors is no different than the visual database. However, sensors require knowledge of what real materials exist in the scene. This knowledge is critical for generating accurate quantitative rendering for all sensor simulations. The remote sensing community has advanced tools for material-classifying imagery; however, these tools are typically not useful for the real-time community, which requires quick turnaround time and utilizes visual RGB textures in its real-time databases. To accurately material-classify large areas in a reasonable amount of time, E&S has developed a semi-automated tool for generating material-classified maps that are fully correlated with the visual texture. That is, the user specifies that a certain shade of green represents grass, whereas another shade of green is fallen leaves, and so on, for all visual textures in the database. The classified textures then provide the additional information needed by sensor simulation products. The user needs to set this up only once for any particular texture. Once each texture has been mapped to real materials, these mapping assignments can be used for any sensor simulation (EO, IR, NVG, radar, etc.) using those textures. Figure 1 shows a visual texture of a desert scene along with a material map generated with the E&S tool. Each gray value in the material map represents a unique material.

Figure 1 Visual texture and material maps of terrain area

Calculation of the “at-aperture” radiance scene
Advanced physics computations must be employed to accurately calculate the amount of energy received at the sensor aperture. These physics computations must include detailed calculations of the reflectance and thermal emittance of all objects in a scene based on time of day, time of year, position on earth, observer/sensor geometry, heat transfer boundary conditions and the specific sensor wavelength band of interest (e.g. mid-wave IR (MWIR), long-wave IR (LWIR), etc.). Real-time simulation requires “intelligent” implementation of the physics equations that govern energy generation and propagation.

Proper modeling of the at-aperture radiance requires a full physics treatment of the terrain, man-made cultural features, targets, atmosphere, environment, and weather effects. In other words, everything in the scene must be considered. E&S has developed robust, real-time algorithms to calculate scene radiance based on complex scene dynamics and specific sensor parameters. Contributions from the sun, moon, stars, sky shine and man-made light/heat sources are all considered in the calculation of the at-aperture radiance. The interaction of these light/heat sources with natural and man-made materials is calculated for each pixel of the display.

Radiance calculations
Once the material of each texture element (texel) is known, the radiance is typically calculated by real-time toolkits with the following general equation:

$$
R_{\text{pixel}} =
\underbrace{L_{\text{direct}}\,\rho\,\cos\theta_i\,\tau_{\text{path}}}_{\text{diffuse solar/lunar reflections}}
+ \underbrace{L_{\text{ambient}}\,\rho\,\tau_{\text{path}}}_{\text{ambient/skyshine reflections}}
+ \underbrace{L_{\text{bb}}\,(1-\rho)\,\tau_{\text{path}}}_{\text{thermal emission}}
+ \underbrace{L_{\text{path}}}_{\text{path emission and scattering}}
$$

where $R_{\text{pixel}}$ is the pixel apparent radiance (at observer), $L_{\text{direct}}$ the solar/lunar reflected radiance at surface, $L_{\text{ambient}}$ the skyshine reflected radiance at surface, $L_{\text{bb}}$ the blackbody radiance at surface, $L_{\text{path}}$ the atmospheric path radiance between the surface and observer, $\theta_i$ the angle between the surface normal and direction to sun/moon, $\rho$ the total hemispherical reflectance, and $\tau_{\text{path}}$ the atmospheric transmission between the surface and observer. All parameters are integrated over the user-specified wavelength band. It should be noted that this equation can be used for EO, IR and NVG simulations since all parameters are integrated over the proper band.

The most difficult computation in the radiance equation is the calculation of the thermal emission. While the blackbody radiance term is a good first approximation, it does not consider the heat transfer that occurs in nature. As such, this equation tends to produce very blocky images that do not accurately represent a true LWIR sensor. Heat transfer calculations are critical to generating a realistic thermal image.

Surface properties
Surface properties include reflectance (and/or emissivity) and solar absorptance for each material. For the purposes of real-time sensor simulations, all materials are assumed to be opaque, i.e. their transmissivity is assumed to be zero. With this assumption, the surface emissivity is always one minus the surface reflectivity.

Reflectance/emissivity
A set of spectral reflectance values is maintained for each material type. Spectral reflectance values in the waveband being simulated for a particular material type are used in the thermal calculation.
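The general radiance equation given under “Radiance calculations”, together with the opaque-surface assumption (emissivity equals one minus reflectance), can be sketched in a few lines. This is a minimal illustration only, not the E&S algorithm: the class and function names are hypothetical, clamping the cosine term to zero for surfaces facing away from the source is an added assumption, and the in-band blackbody radiance is obtained by integrating the standard Planck function numerically, which ignores the heat transfer effects the text identifies as essential for realistic thermal imagery.

```python
import math
from dataclasses import dataclass

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def blackbody_band_radiance(temp_k: float, lam_lo_um: float, lam_hi_um: float,
                            steps: int = 400) -> float:
    """In-band blackbody radiance (W/m^2/sr): trapezoidal integral of Planck's law."""
    def planck(lam_m: float) -> float:
        # Spectral radiance per unit wavelength, W/m^2/sr/m.
        return (2.0 * H * C ** 2 / lam_m ** 5) / math.expm1(H * C / (lam_m * KB * temp_k))

    lo, hi = lam_lo_um * 1e-6, lam_hi_um * 1e-6
    step = (hi - lo) / steps
    total = 0.5 * (planck(lo) + planck(hi))
    for i in range(1, steps):
        total += planck(lo + i * step)
    return total * step


@dataclass(frozen=True)
class RadianceTerms:
    """In-band inputs for one pixel (names are illustrative, not from the paper)."""
    l_direct: float     # solar/lunar radiance at the surface
    l_ambient: float    # skyshine radiance at the surface
    l_bb: float         # blackbody radiance at the surface temperature
    l_path: float       # atmospheric path radiance, surface to observer
    rho: float          # total hemispherical reflectance, 0..1
    tau_path: float     # atmospheric transmission, 0..1
    theta_i_deg: float  # angle between surface normal and sun/moon direction


def pixel_radiance(t: RadianceTerms) -> float:
    """Evaluate the four-term apparent radiance equation for one pixel."""
    if not (0.0 <= t.rho <= 1.0 and 0.0 <= t.tau_path <= 1.0):
        raise ValueError("rho and tau_path must lie in [0, 1]")
    # Assumption: a surface facing away from the source receives no direct term.
    cos_i = max(0.0, math.cos(math.radians(t.theta_i_deg)))
    direct = t.l_direct * t.rho * cos_i * t.tau_path
    ambient = t.l_ambient * t.rho * t.tau_path
    thermal = t.l_bb * (1.0 - t.rho) * t.tau_path   # emissivity = 1 - rho (opaque)
    return direct + ambient + thermal + t.l_path


# Hypothetical LWIR pixel: a 300 K surface viewed in the 8-12 micrometre band at night.
lwir_bb = blackbody_band_radiance(300.0, 8.0, 12.0)
night_pixel = RadianceTerms(l_direct=0.0, l_ambient=5.0, l_bb=lwir_bb, l_path=2.0,
                            rho=0.1, tau_path=0.8, theta_i_deg=0.0)
print(f"apparent in-band radiance: {pixel_radiance(night_pixel):.2f} W/m^2/sr")
```

Because every input is already band-integrated, the same function applies to EO, NVG and IR bands; only the values fed into it change.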
Solar absorptance
A single solar absorptance value is maintained for each material type. Spectral absorptance values have been averaged over the significant solar waveband into a single value. This average solar absorptance is used in the thermal calculation.

Thermal calculations
To satisfy the need for accurate thermal calculations, E&S has developed proprietary algorithms that solve the true heat transfer differential equation in real-time. These algorithms include a full solar and lunar ephemeris model that considers time of day, time of year and position on the earth. The result is that objects in simulated thermal images have a proper gradation across the surface, as seen in real sensor imagery. As a part of the thermal calculations, specific parameters are calculated and stored for use by the real-time system. These parameters are defined below.

Boundary conditions
In addition to the material properties and the surface properties, boundary conditions must be specified for each particular material usage. These boundary conditions specify how that material type is used in the simulated environment (e.g. whether it is thick or thin, whether it is heated by its surrounding ambient temperature or by the current ground temperature, whether or not it is insulated from or highly conductive to its supporting temperature, its orientation, and a specific temperature if its supporting environment is at a fixed temperature). Additionally, the orientation of the object is considered to account for solar loading, shadows falling on the material, etc.

Diffusivity
Diffusivity is defined as a material’s thermal conductivity divided by the product of its specific heat and density. A material’s diffusivity determines how efficiently it transfers thermal energy internally.
$$
\alpha = \frac{k}{\rho\,C_p}
$$

where $\alpha$ is the diffusivity (m²/s), $k$ is the thermal conductivity (W/m/°C), $\rho$ is the density (kg/m³), and $C_p$ is the specific heat (J/kg/°C).

Support temperature
The EPX sensor simulation allows one of four different support temperature types to be specified: ambient air temperature, ambient ground temperature, a fixed temperature, or a host-controlled temperature.

Secondary conductive heat transfer coefficient
For each material, a secondary conductive heat transfer coefficient is defined that represents the ability of the support temperature to affect the temperature at the back side of the material usage. High secondary conductive heat transfer coefficients represent materials in “wet” contact and will force the temperature at the back side of the material usage to be very close to its defined support temperature. Material usages that are more insulated from their support temperature will have low secondary conductive heat transfer coefficients (1 or lower).

Thickness
For each material, a representative thickness is defined. This thickness represents the thickness of the material from its surface to where its back side support temperature is applied. Thickness ranges from less than 1 mm for leaves, other vegetation, or very thin metal to over 0.5 m for thick concrete or the turret on a tank. Thicknesses that are specified to be greater than a material’s diurnal depth will show no appreciable change in the sensor image.

Diurnal depth
Diurnal depth is defined as:

$$
DD = \sqrt{\frac{2k}{\omega\,\rho\,C_p}} \quad \text{(m)}
$$

where $k$ is the thermal conductivity (W/m/°C); $\omega = 2\pi f$, with $f = 1/(24\ \text{h})$; $C_p$ is the specific heat (J/kg/°C); and $\rho$ is the density (kg/m³).

Atmospheric and meteorological calculations
To properly generate a sensor scene, atmospheric and meteorological models must be incorporated into the calculation of the pixel radiance. E&S has proprietary algorithms to calculate the path transmission, path radiance and skyshine terms. Additionally, the real-time system can make use of data generated from government-standard atmospheric codes such as MODTRAN. The sensor simulation software also predicts direct and diffuse solar, down-welling IR, air temperature, aerodynamic heating, convection, precipitation, and additional terms required in thermal modeling.

Putting it all together
As described above, true physics computations are utilized to generate accurate at-aperture radiance scenes in real-time for each pixel. Based on complex sensor/scene interactions, advanced algorithms are implemented to generate very accurate, in-band, at-aperture scene radiance values in real-time. Figure 2 shows an at-aperture, LWIR (8-12 µm) simulated image of Fresno, CA, under clear atmospheric conditions for a summer day at noon.

Figure 2 Simulated thermal image of Fresno, CA

Generation of sensor effects
Modern imaging sensors utilize optics, detectors, and signal and image processing techniques to render a spatial image that can be displayed for a human operator. The optics, detectors, etc., tend to blur, distort, and degrade the image, creating sensor effects. Most sensor effects cannot be modeled in software in real-time. Complex processes such as blur, AC-coupling and automatic gain control (AGC) require a tremendous amount of processing. Simulating these sensor effects in software alone tends to reduce the frame rate to 15 Hz or below, which is unacceptable for most training applications.

Real-time video processor
In order to simulate the sensor effects in real-time, E&S has developed a unique hardware technology called the real-time video processor (RVP). The RVP is a sensor post processor which allows users to model the advanced sensor effects found on modern EO, IR and NVG devices. Users have complete control of the RVP card through a user-friendly graphical user interface (GUI). Users can control the amount of noise, blur, AC-coupling, etc., through simple sliders and input controls. This allows users to model their exact sensor for real-time applications. The result is a very realistic image that accurately portrays the sensor being modeled. Figure 3 shows the Fresno, CA scene presented in Figure 2, now side-by-side with blur and noise added.

Figure 3 Simulated thermal image of Fresno, CA with and without sensor effects

Applications
E&S’ real-time sensor simulation package can be used for a variety of applications, including sensors found on unmanned aerial vehicles (UAVs), thermal imagers such as forward looking infrared (FLIR) devices, and intensified imagers/night vision devices such as night vision goggles (NVGs). Additional applications include:
- sensor design,
- pilot training,
- operator training, and
- system analysis, etc.

Conclusions
Sensors have become a critical part of today’s world. From commercial airliners to most military vehicles, sensors are employed for a variety of applications including landing under adverse weather conditions, “seeing” through smoke, fog, etc., viewing the surrounding area at night under very low light conditions and guiding missiles to their targets. Because sensors are so important to the success of these types of missions and scenarios, it is critical that pilots and sensor operators be fully trained on the use of these sensors. To satisfy the needs of the training and simulation community, E&S has developed, as part of its EPX technology, complete end-to-end sensor simulation software and hardware that allow users to simulate a true sensor display in real-time.
E&S employs novel techniques and algorithms to accurately model the physics required to simulate modern day sensors. This includes accurate modeling of the terrain, man-made cultural features, targets, atmosphere, environment, and weather effects. The result is a very immersive, realistic experience for anyone who requires training on today’s advanced civil and military sensors.

Further reading
Mayer, N. (2000), Texel Based Material Encoding for Infrared Simulation, Evans & Sutherland Computer Corporation.
Shumaker, D., Wood, J. and Thacker, C. (1988), Infrared Imaging Systems Analysis, Environmental Research Institute of Michigan.

Note
1 For more information on EPX, please visit the Web site: www.es.com