PSI: Computational Architecture of Cognition, Motivation, Emotion

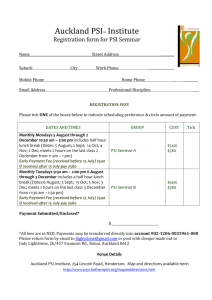
