Jerry R. Thomas - Research Methods in Physical Activity-Human Kinetics Publishers (2015)

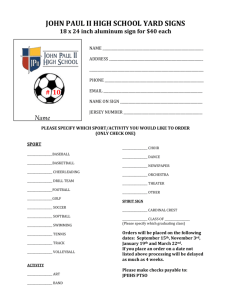
