Eigenfaces By Turk and Pentland

Sayantika Sengupta

Shankadip Bhattacharjee

Amitava Ghosh

Shashi Bhushan Singh

May 4, 2023

1 Abstract

The goal is to develop a near-real-time computer system that can locate and track a subject's head and then recognise the person by comparing characteristics of the face to those of known individuals. This approach treats face recognition as an intrinsically two-dimensional recognition problem. The system works by projecting face images onto a feature space that spans the significant variations among the known face images. These significant features are called eigenfaces because they are the eigenvectors of the set of faces; they do not necessarily correspond to features such as eyes, ears or noses. An individual face can then be characterised by a weighted sum of the eigenfaces, and a particular face is recognised by comparing these weights to those of the known individuals.

2 Introduction

Computational models of face recognition are interesting because they can contribute not only to theoretical insights but also to practical applications. Computers that recognize faces could be applied to a wide variety of problems, including criminal identification, security systems, image and film processing, and human-computer interaction. The ability to detect faces in photographs can also be extremely important. Unfortunately, developing a computational model of face recognition is quite difficult, because faces are a complex, multidimensional class of objects and stand in stark contrast to the artificial stimuli commonly used in human and computer vision research.

Hence, researchers have tried to develop an early, pre-attentive pattern recognition capability that does not depend on three-dimensional information or detailed geometry. Following this idea, a computational model of face recognition has been developed that is fast, simple and accurate in constrained environments such as an office or a household.

This approach decomposes face images into a small set of characteristic image features called eigenfaces, which may be thought of as the principal components of the initial training set of face images. Recognition is performed by projecting a new image onto the subspace spanned by the eigenfaces (known as the face space) and then classifying the face by comparing its position in face space with the positions of the known individuals. Recognition under widely varying conditions can be achieved by training on a limited number of characteristic views, such as a straight-on view, a 45° view and a profile view. This approach has advantages over other face recognition schemes in its speed and simplicity, its learning capacity, and its insensitivity to small or gradual changes in the face image.

3 The Eigenface Approach

In this approach, the relevant information in a face image is extracted, encoded as efficiently as possible, and compared to a database of similarly encoded models. A simple way to extract the information contained in an image of a face is to capture the variation in a collection of face images and use this information to encode and compare individual face images.

The approach to face recognition involves the following initialisation operations:

(i) Acquire an initial set of face images (the training set). This is shown in Figure 1.

(ii) Calculate the eigenfaces from the training set (shown in Figure 3), keeping only the P eigenfaces that correspond to the highest eigenvalues. These P eigenfaces define the face space. As new faces are experienced, the eigenfaces can be updated or recalculated.

(iii) Calculate the corresponding distribution in P-dimensional weight space for each known individual by projecting their face images onto the face space.

Having initialized the system, the following steps are then used to recognize new face images:

(i) Calculate a set of weights based on the input image and the P eigenfaces by projecting the input image onto each of the eigenfaces.

(ii) Determine whether the image is a face at all (whether known or unknown) by checking whether the image is sufficiently close to the face space.

(iii) If it is a face, classify the weight pattern as either a known person or unknown.

(iv) (Optional) Update the eigenfaces and the weight patterns.

(v) (Optional) If the same unknown face is seen several times, calculate its characteristic weight pattern and incorporate it into the known faces.

Figure 1: The face images used as the training set

Figure 2: The average face g

Figure 3: Seven eigenfaces calculated from the training set of Figure 1

4 Calculating the Eigenfaces

A face image is a two-dimensional $N \times N$ array of intensity values (here $N = 256$). It can therefore also be considered as a vector of dimension $N^2$, so an ensemble of images maps to a collection of points in this $256 \times 256 = 65536$-dimensional space. Because images of faces are similar in overall configuration, they are not randomly distributed in this huge space and can instead be described by a relatively low-dimensional subspace. Principal component analysis (PCA) can be used to find the vectors that best account for the distribution of face images within the entire image space; these vectors define the subspace of face images. Each of these vectors is a linear combination of the original images and is an eigenvector of the covariance matrix of the original face images. These vectors are known as eigenfaces. Some examples of eigenfaces are shown in Figure 3.
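As a rough numerical illustration of the low-dimensional-subspace argument, the Python sketch below uses synthetic data rather than face images: 50 points in a 100-dimensional space are generated close to a 3-dimensional subspace (an assumption chosen purely for illustration), and the hypothetical helper `explained_variance_ratio` measures how much of the total variance the leading principal components capture.

```python
import numpy as np

def explained_variance_ratio(X, p):
    """Fraction of the total variance captured by the top-p principal components of X.

    X holds one sample per row; the mean sample is subtracted first.
    """
    B = X - X.mean(axis=0)
    # Squared singular values of B are the eigenvalues of B^T B, in descending order
    eig = np.linalg.svd(B, compute_uv=False) ** 2
    return eig[:p].sum() / eig.sum()

# Synthetic stand-in for face vectors (assumption): 50 points in 100 dimensions
# lying close to a 3-dimensional subspace spanned by the columns of Q.
rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.normal(size=(100, 3)))             # orthonormal 100 x 3 basis
coeffs = rng.normal(scale=10.0, size=(50, 3))              # large spread inside the subspace
X = coeffs @ Q.T + rng.normal(scale=0.01, size=(50, 100))  # tiny spread outside it

ratio = explained_variance_ratio(X, 3)
print(f"top 3 components explain {100 * ratio:.3f}% of the variance")
```

On data built this way, three components account for almost all of the variance; real face images are less ideal, which is why more eigenfaces are kept in practice.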

Let the training set of face images be $a_1, a_2, \ldots, a_M$. The average face of the set is

$$g = \frac{1}{M} \sum_{n=1}^{M} a_n .$$

Each face differs from the average face by the vector $b_i = a_i - g$. An example training set is shown in Figure 1 and the average face $g$ is shown in Figure 2. Principal component analysis is applied to this set of large vectors; it seeks a set of $M$ orthonormal vectors $u_i$.
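A minimal NumPy sketch of the average face and the difference vectors, assuming each training image has already been flattened into one row of a 2-D array; `mean_and_differences` is a hypothetical name and the 2 × 2 "images" are toy data. The returned `B` holds the difference vectors $b_n$ as columns, matching the matrix $B$ defined below.

```python
import numpy as np

def mean_and_differences(faces):
    """faces: (M, N*N) array holding one flattened training image a_n per row.

    Returns the average face g and B = [b_1 ... b_M] with columns b_n = a_n - g.
    """
    g = faces.mean(axis=0)     # g = (1/M) * sum_n a_n
    B = (faces - g).T          # subtract g from every image, then put each b_n in a column
    return g, B

# Toy training set (assumption): M = 3 "images" of 2 x 2 pixels, flattened to length 4.
faces = np.array([[1., 2., 3., 4.],
                  [3., 2., 1., 0.],
                  [2., 5., 2., 2.]])
g, B = mean_and_differences(faces)
print(g)               # the average face
print(B.sum(axis=1))   # the differences b_n always sum to the zero vector
```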

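The $i$-th vector, $u_i$, is chosen such that

$$\lambda_i = \sum_{n=1}^{M} \left(u_i^{T} b_n\right)^2$$

is a maximum, subject to

$$u_i^{T} u_j = \delta_{ij} = \begin{cases} 1 & \text{if } i = j \\ 0 & \text{if } i \neq j \end{cases}$$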
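The maximisation can be checked numerically. The sketch below (random toy data; `projected_energy` is a hypothetical helper) evaluates $\lambda(u) = \sum_n (u^T b_n)^2$ at the top eigenvector of $BB^T$, which is the result stated next in the text, and confirms that no random unit vector does better and that the eigenvectors returned by `numpy.linalg.eigh` satisfy the orthonormality condition.

```python
import numpy as np

def projected_energy(u, B):
    """lambda(u) = sum_n (u^T b_n)^2 for a unit vector u and B = [b_1 ... b_M]."""
    return float(np.sum((u @ B) ** 2))

rng = np.random.default_rng(1)
B = rng.normal(size=(6, 4))              # toy difference vectors b_n as columns (assumption)
B -= B.mean(axis=1, keepdims=True)       # make the b_n sum to zero, like b_n = a_n - g

# Candidate maximiser: eigenvector of B B^T with the largest eigenvalue.
eigvals, eigvecs = np.linalg.eigh(B @ B.T)   # eigenvalues in ascending order
u1 = eigvecs[:, -1]

# No random unit vector achieves a larger value of lambda(u).
for _ in range(1000):
    u = rng.normal(size=6)
    u /= np.linalg.norm(u)
    assert projected_energy(u, B) <= projected_energy(u1, B) + 1e-9

print(projected_energy(u1, B), eigvals[-1])  # lambda at the maximiser equals the top eigenvalue
```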

The vectors $u_i$ and scalars $\lambda_i$ are the eigenvectors and eigenvalues, respectively, of the covariance matrix

$$C = \sum_{n=1}^{M} b_n b_n^{T} = B B^{T}, \qquad \text{where } B = \begin{bmatrix} b_1 & b_2 & \cdots & b_M \end{bmatrix}.$$

The matrix $C$ is $N^2 \times N^2$, so calculating its eigenvectors directly is extremely inconvenient.
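To put "extremely inconvenient" in numbers, here is a back-of-the-envelope calculation, assuming the matrices are stored densely as 8-byte float64 values and using $M = 16$ training images as in Section 5.

```python
# Back-of-the-envelope sizes (assumption: dense float64 storage, 8 bytes per entry).
N = 256                        # image side length in pixels
M = 16                         # number of training images, as in Section 5
pixels = N * N                 # N^2 = 65536
entries_C = pixels ** 2        # C = B B^T is N^2 x N^2
gib_C = entries_C * 8 / 2**30  # memory for C in GiB
entries_L = M * M              # L = B^T B is only M x M
print(pixels, entries_C, gib_C, entries_L)   # 65536 4294967296 32.0 256
```

Storing $C$ alone would take 32 GiB, while the $M \times M$ matrix used below has only 256 entries.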

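Since we have $M \ll N^2$ training faces, the dimension of the face space can be at most $M$ (in fact at most $M - 1$, because the vectors $b_i$ sum to zero). We can therefore avoid the problem by working with an $M \times M$ matrix instead. Consider the eigenvectors $v_i$ of $B^{T} B$ such that

$$B^{T} B\, v_i = \mu_i v_i .$$

Multiplying both sides on the left by $B$ gives

$$B B^{T} B\, v_i = \mu_i B v_i ,$$

so the vectors $B v_i$ are eigenvectors of $B B^{T} = C$ with eigenvalues $\mu_i$. Thus we only need the eigenvectors $v_i$ of the $M \times M$ matrix $L = B^{T} B$. Since $B = \begin{bmatrix} b_1 & b_2 & \cdots & b_M \end{bmatrix}$, its entries are $L_{ij} = b_i^{T} b_j$. After finding the $M$ eigenvectors $v_i$ of $L$, we have

$$u_i = B v_i = \begin{bmatrix} b_1 & b_2 & \cdots & b_M \end{bmatrix} v_i = \sum_{j=1}^{M} v_{ij}\, b_j ,$$

where $v_{ij}$ is the $j$-th component of $v_i$. Because $\|B v_i\|^2 = v_i^{T} B^{T} B v_i = \mu_i$ for a unit vector $v_i$, each $u_i$ with $\mu_i > 0$ is rescaled by $1/\sqrt{\mu_i}$ so that it has unit length, as the orthonormality condition requires.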

Thus the eigenfaces $u_i$ of the face space are calculated. The computation is greatly reduced: instead of diagonalising a matrix whose size is set by the number of pixels ($256 \times 256$ pixels, i.e. a $65536 \times 65536$ matrix), we diagonalise a matrix whose size is set by the number of images in the training set, which is far smaller. Figure 3 shows the top 7 eigenfaces derived from the input images of Figure 1.
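The reduction can be sketched in NumPy as follows. This is an illustrative implementation under stated assumptions (random data in place of face images, a hypothetical function name `eigenfaces_via_L`), not the authors' code. The image space is kept small (50 pixels) so that the full covariance $C$ can also be formed, to check that each rescaled $u_i = Bv_i$ really is an eigenvector of $C$.

```python
import numpy as np

def eigenfaces_via_L(B, p):
    """Top-p eigenfaces from B = [b_1 ... b_M] (shape N^2 x M) via the M x M matrix L = B^T B.

    Returns (U, mu): U holds the unit-length eigenfaces u_i = B v_i / ||B v_i|| as columns,
    mu holds the matching eigenvalues in descending order.
    """
    L = B.T @ B                           # L_ij = b_i^T b_j
    mu, V = np.linalg.eigh(L)             # eigen-decomposition of the symmetric L (ascending)
    order = np.argsort(mu)[::-1][:p]      # indices of the p largest eigenvalues
    mu, V = mu[order], V[:, order]
    U = B @ V                             # u_i = B v_i = sum_j v_ij b_j
    U /= np.linalg.norm(U, axis=0)        # ||B v_i||^2 = mu_i, so rescale to unit length
    return U, mu

# Small synthetic check (assumption: random data stands in for face images).
rng = np.random.default_rng(2)
faces = rng.normal(size=(8, 50))          # M = 8 images with N^2 = 50 pixels
g = faces.mean(axis=0)
B = (faces - g).T                         # columns b_i = a_i - g
U, mu = eigenfaces_via_L(B, p=5)

C = B @ B.T                               # the N^2 x N^2 covariance, affordable at this size
print(np.allclose(C @ U, U * mu))         # each u_i is an eigenvector of C with eigenvalue mu_i
print(np.allclose(U.T @ U, np.eye(5)))    # the eigenfaces are orthonormal
```

Only the $8 \times 8$ matrix $L$ is diagonalised; forming $C$ here serves purely as a check and would be skipped for real images.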

Figure 4: An original image and its projection onto the face space defined by the eigenfaces of Figure 3

Figure 5: Three images and their projections onto the face space defined by the eigenfaces of Figure 3. The relative measures of distance from the face space of the images are 29.8, 58.5 and 5217.4, respectively. The first two images are from the original training set.

5 Using Eigenfaces to Classify a Face Image

The eigenface images calculated from the eigenvectors of $L$ span a $P$-dimensional subspace ($P \le M$) of the original $N^2$-dimensional image space. The test cases were based on $M = 16$ face images and $P = 7$ eigenfaces. A new face image $f$ is transformed into its eigenface components by the operation

$$w_i = u_i^{T} (f - g), \qquad 1 \le i \le P .$$

These components form a weight vector $W^{T} = \begin{bmatrix} w_1 & w_2 & \cdots & w_P \end{bmatrix}$ that describes the contribution of each eigenface in representing the input face image, treating the $P$ eigenfaces as a basis set for face images. This vector may be used to find which of the predefined face classes, if any, best describes the face. The face classes $F_i$ are calculated by averaging the results of the eigenface representation over a small number of training images of each individual (possibly a single image). The face class that best describes an input face image is the class $i$ that minimizes the Euclidean distance

$$d_i^2 = \left\| W - F_i \right\|^2 .$$

A face is classified as belonging to class $i$ when the minimum $d_i$ is below some chosen threshold $\epsilon$. Otherwise, the face is classified as unknown and can optionally be used to create a new face class.
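The projection and nearest-class rule can be written in a few lines. This sketch assumes the eigenfaces are stored as orthonormal columns of a matrix `U` and the face classes as rows of `classes`; these names, the function name `classify_weights` and the toy 3-pixel data are illustrative assumptions. The function returns the index of the nearest class, or -1 when even the nearest class is farther than the threshold.

```python
import numpy as np

def classify_weights(f, g, U, classes, eps):
    """Project f onto the eigenfaces; return (index of nearest class or -1, its distance).

    U: (N^2, P) orthonormal eigenfaces as columns; classes: (K, P) face classes F_k.
    """
    W = U.T @ (f - g)                          # w_i = u_i^T (f - g), i = 1..P
    d = np.linalg.norm(classes - W, axis=1)    # d_k = ||W - F_k|| for every class k
    k = int(np.argmin(d))                      # class minimising the Euclidean distance
    return (k if d[k] < eps else -1), float(d[k])

# Toy setup (assumption): 3-pixel images, P = 2 eigenfaces, two face classes.
U = np.array([[1., 0.], [0., 1.], [0., 0.]])
g = np.zeros(3)
classes = np.array([[0., 0.], [5., 5.]])
print(classify_weights(np.array([4.8, 5.1, 0.3]), g, U, classes, eps=1.0))  # near class 1
print(classify_weights(np.array([2.5, 2.5, 0.0]), g, U, classes, eps=1.0))  # unknown: -1
```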

The projection of the mean-subtracted image $f - g$ onto the face space is $f_p = \sum_{i=1}^{P} w_i u_i$. Hence, the distance $d$ of $f$ from the face space is given by

$$d^2 = \left\| (f - g) - f_p \right\|^2 .$$

Comparing $\min_i d_i$ and $d$, there are three cases:

(i) $f$ is near face space and near a face class.

(ii) $f$ is near face space but distant from all face classes.

(iii) $f$ is distant from face space and from all face classes.

In case (i), the person is recognized and identified. In case (ii), the image is of an unknown person. In case (iii), the image is not a face image. Figure 5 shows some images, their projections into face space and a measure of the distance from face space for each image.
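The three cases can be sketched as a small decision function. It assumes orthonormal eigenfaces in the columns of `U` and uses two thresholds, one on the distance from face space and one on the distance to the nearest class; the function name, argument names and toy data are hypothetical. An image far from face space is reported as case (iii) regardless of its class distance, which matches how the thresholds are applied in the summary procedure of Section 6.

```python
import numpy as np

def face_space_case(f, g, U, classes, eps_class, eps_face):
    """Return 1, 2 or 3 for cases (i), (ii), (iii) of the text.

    U: (N^2, P) orthonormal eigenfaces as columns; classes: (K, P) face classes F_k.
    """
    phi = f - g                                            # mean-subtracted image f - g
    W = U.T @ phi                                          # weights w_i = u_i^T (f - g)
    f_p = U @ W                                            # projection onto face space
    d = np.linalg.norm(phi - f_p)                          # distance from face space
    d_min = np.min(np.linalg.norm(classes - W, axis=1))    # distance to nearest class
    if d >= eps_face:
        return 3          # (iii) far from face space: not a face
    if d_min < eps_class:
        return 1          # (i) near face space and near a class: known person
    return 2              # (ii) near face space, far from all classes: unknown person

# Toy setup (assumption): 3-pixel images, P = 2 eigenfaces, two face classes.
U = np.array([[1., 0.], [0., 1.], [0., 0.]])
g = np.zeros(3)
classes = np.array([[0., 0.], [5., 5.]])
for f in ([5., 5., 0.1], [2.5, 2.5, 0.1], [0., 0., 10.]):
    print(f, face_space_case(np.array(f), g, U, classes, eps_class=1.0, eps_face=1.0))
```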


6 Summary of the Eigenface Recognition Procedure

In short, the eigenface recognition procedure involves the following steps:

(i) Collect a set of face images of known persons, including multiple images of each person with some variation in expression and lighting (say 4 images of each of 10 people, so $M = 40$).

(ii) Calculate the $40 \times 40$ matrix $L$ and find its eigenvectors $v_i$ and eigenvalues. Then choose the $P$ eigenvectors corresponding to the highest eigenvalues (let $P = 10$ for this example).

(iii) Compute the corresponding eigenfaces $u_i$ from the eigenvectors $v_i$ of $L$.

(iv) For each known person, calculate the face class $F_i$ by averaging the eigenface representations of that person's 4 original images. Choose a threshold $\epsilon_1$ that sets the maximum allowable distance from any face class, and a threshold $\epsilon_2$ that sets the maximum allowable distance from the face space.

(v) For each new input face, calculate its weight vector $W$, the distances $d_i$ to each face class, and the distance $d$ from the face space. Let $d_k = \min_i d_i$. If $d_k < \epsilon_1$ and $d < \epsilon_2$, classify the input face as the person associated with face class $F_k$. If $d_k > \epsilon_1$ and $d < \epsilon_2$, classify the image as unknown and optionally begin a new face class. If $d \ge \epsilon_2$, the image is not a face.

(vi) If the new input face is classified as a known person, it can be added to the original set of familiar face images and the eigenfaces recalculated (steps (i) to (iv)). This allows the face space to adapt as the system encounters more images of known faces.
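The summary procedure can be run end to end on synthetic data. The sketch below follows steps (i) to (v) with the example sizes from the text (10 people, 4 images each, so $M = 40$, and $P = 10$); the image size (400 pixels) and the way the "faces" are generated (a random prototype per person plus noise) are assumptions made purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
people, per_person, pixels, P = 10, 4, 400, 10     # M = 40 images; image size is an assumption

# Step (i): synthetic "faces" = one random prototype per person plus small noise (assumption).
prototypes = rng.normal(scale=10.0, size=(people, pixels))
labels = np.repeat(np.arange(people), per_person)  # 0,0,0,0,1,1,1,1,...
faces = prototypes[labels] + rng.normal(size=(people * per_person, pixels))

# Steps (ii)-(iii): eigenfaces from the 40 x 40 matrix L.
g = faces.mean(axis=0)
B = (faces - g).T                                  # pixels x M, columns b_i
L = B.T @ B                                        # 40 x 40
mu, V = np.linalg.eigh(L)
top = np.argsort(mu)[::-1][:P]                     # indices of the P largest eigenvalues
U = B @ V[:, top]
U /= np.linalg.norm(U, axis=0)                     # unit-length eigenfaces u_i

# Step (iv): face class F_k = mean weight vector of person k's 4 images.
weights = (U.T @ B).T                              # 40 x P
F = np.array([weights[labels == k].mean(axis=0) for k in range(people)])

# Step (v): classify a new image of person 3 by its nearest face class.
new_face = prototypes[3] + rng.normal(size=pixels)
W = U.T @ (new_face - g)
predicted = int(np.argmin(np.linalg.norm(F - W, axis=1)))
print(L.shape, F.shape, predicted)
```

Thresholds $\epsilon_1$ and $\epsilon_2$ are omitted here for brevity; they would be applied to the class distance and to the distance from face space exactly as in step (v).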


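7 Mathematical Proof of PCA

Let $X$ be an $n$-dimensional random vector such that

$$X = \sum_{i=1}^{n} y_i \varphi_i ,$$

where $\{\varphi_1, \ldots, \varphi_n\}$ forms an orthonormal basis of the $n$-dimensional space ($\varphi_i^{T} \varphi_j = \delta_{ij}$), and

$$y_i = \langle X, \varphi_i \rangle = X^{T} \varphi_i , \qquad i = 1, 2, \ldots, n .$$

Now suppose we want to represent $X$ with fewer basis vectors, say $m < n$. We can do this by replacing the coordinates $y_{m+1}, y_{m+2}, \ldots, y_n$ with pre-selected constants $b_i$:

$$\hat{X}(m) = \sum_{i=1}^{m} y_i \varphi_i + \sum_{i=m+1}^{n} b_i \varphi_i .$$

The representation error is

$$\Delta X(m) = X - \hat{X}(m) = \sum_{i=m+1}^{n} (y_i - b_i)\, \varphi_i .$$

We measure this representation error by the mean squared magnitude of $\Delta X$:

$$\begin{aligned}
E\left(|\Delta X|^2\right) &= E\left[ \Big( \sum_{i=m+1}^{n} (y_i - b_i)\varphi_i \Big)^{T} \Big( \sum_{j=m+1}^{n} (y_j - b_j)\varphi_j \Big) \right] \\
&= E\left[ \sum_{i=m+1}^{n} \sum_{j=m+1}^{n} (y_i - b_i)(y_j - b_j)\, \varphi_i^{T} \varphi_j \right] \\
&= \sum_{i=m+1}^{n} E\left[ (y_i - b_i)^2 \right],
\end{aligned}$$

where the last step uses $\varphi_i^{T} \varphi_j = \delta_{ij}$.

We now find the $b_i$ for which $E(|\Delta X|^2)$ is minimum:

$$\frac{\partial}{\partial b_i} E\left(|\Delta X|^2\right) = -2\, E(y_i - b_i) = 0 \quad \Longrightarrow \quad b_i = E(y_i) .$$

Substituting this back, the error becomes

$$\begin{aligned}
E\left(|\Delta X|^2\right) &= \sum_{i=m+1}^{n} E\left[ \big(y_i - E(y_i)\big)^2 \right] \\
&= \sum_{i=m+1}^{n} E\left[ \big(X^{T}\varphi_i - E(X^{T}\varphi_i)\big)^2 \right] \qquad (\text{using } y_i = X^{T}\varphi_i) \\
&= \sum_{i=m+1}^{n} \varphi_i^{T} S \varphi_i ,
\end{aligned}$$

where $S = E\left[ (X - E(X))(X - E(X))^{T} \right]$ is the covariance matrix.

Next we find the $\varphi_i$ for which $E(|\Delta X|^2)$ is minimised, subject to $\varphi_i^{T} \varphi_i = 1$. Introducing Lagrange multipliers $\lambda_i$,

$$\mathcal{L} = \sum_{i=m+1}^{n} \varphi_i^{T} S \varphi_i + \sum_{i=m+1}^{n} \lambda_i \left(1 - \varphi_i^{T} \varphi_i\right),$$

and setting the derivative to zero gives

$$\frac{\partial \mathcal{L}}{\partial \varphi_i} = 2 S \varphi_i - 2 \lambda_i \varphi_i = 0 \quad \Longrightarrow \quad S \varphi_i = \lambda_i \varphi_i ,$$

so $\varphi_i$ is an eigenvector of the covariance matrix $S$ and $\lambda_i$ is the corresponding eigenvalue. Substituting into the expression for the error,

$$E\left(|\Delta X|^2\right) = \sum_{i=m+1}^{n} \varphi_i^{T} S \varphi_i = \sum_{i=m+1}^{n} \varphi_i^{T} \lambda_i \varphi_i = \sum_{i=m+1}^{n} \lambda_i .$$

So, to minimise the representation error, the discarded $\lambda_i$ must be the smallest eigenvalues of the covariance matrix. In other words, we keep the $m$ eigenvectors corresponding to the $m$ largest eigenvalues.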
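The final result, that the expected squared error equals the sum of the discarded eigenvalues, can be checked numerically. In this sketch the expectation is replaced by an average over synthetic samples and $S$ by the sample covariance normalised by the number of samples; the dimensions and data are assumptions. With these choices the identity holds exactly, up to floating-point error.

```python
import numpy as np

rng = np.random.default_rng(4)
n, m, K = 6, 2, 20000                         # dimension, kept components, samples (assumptions)
mix = rng.normal(size=(n, n))
X = rng.normal(size=(K, n)) @ mix.T + 3.0     # correlated samples with a non-zero mean

mean = X.mean(axis=0)
S = (X - mean).T @ (X - mean) / K             # sample covariance S
lam, Phi = np.linalg.eigh(S)                  # ascending eigenvalues, orthonormal eigenvectors
lam, Phi = lam[::-1], Phi[:, ::-1]            # reorder so the largest come first

Y = X @ Phi                                   # coordinates y_i = X^T phi_i for every sample
b = Y[:, m:].mean(axis=0)                     # optimal constants b_i = E(y_i) for i > m
X_hat = Y[:, :m] @ Phi[:, :m].T + b @ Phi[:, m:].T
mse = np.mean(np.sum((X - X_hat) ** 2, axis=1))   # sample estimate of E(|Delta X|^2)

print(mse, lam[m:].sum())                     # error equals the sum of the discarded eigenvalues
```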

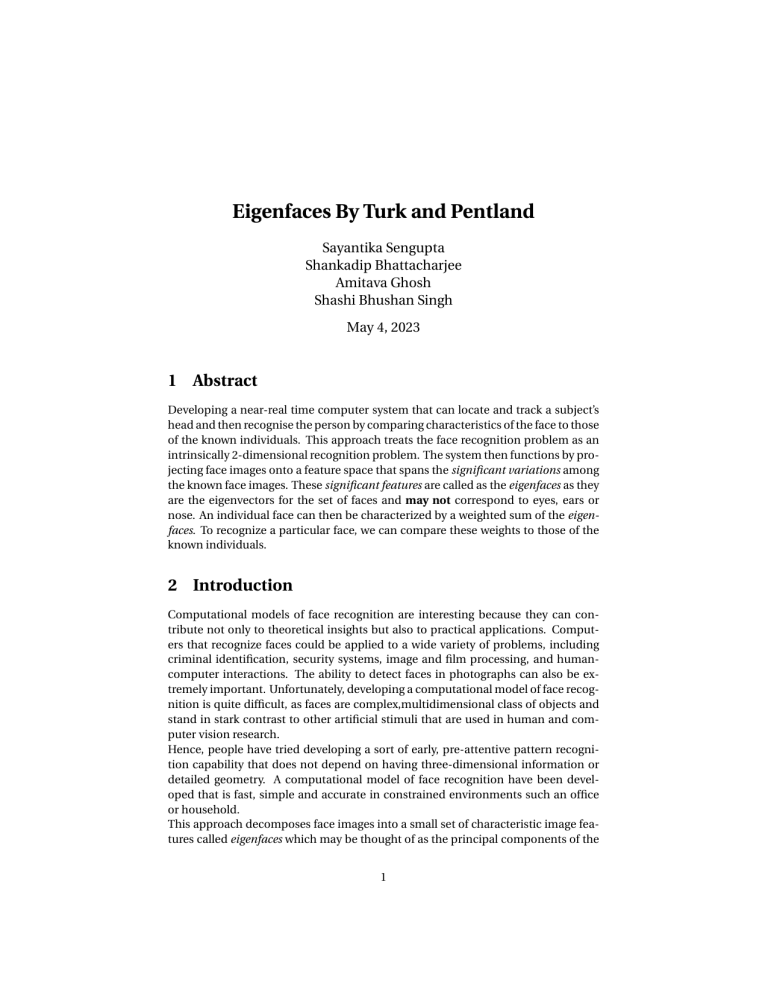

8 Experiments with Eigenfaces

To assess the viability of this approach to face recognition, we performed experiments on a facial dataset of 15 individuals with 12 images per individual, covering different facial expressions and conditions (for example happy, sad, sleepy, surprised and winking, with and without glasses, and under different lighting directions). To verify how reliably this approach identifies faces, we randomly selected 3 facial images of each individual and set them aside as a test dataset. The remaining facial images were used to train the model. The performance of the model on the training and test datasets for different values of epsilon is shown below:

At small values of epsilon, the model showed a high rate of false negatives (known faces not recognized) and a very low rate of false positives (faces attributed to the wrong person). As epsilon increases, the rate of false negatives decreases while the rate of false positives increases. The same behavior is observed on the test dataset, indicating that the model's performance is consistent across the two datasets.

The appropriate value of epsilon for face recognition can be chosen based on the specific needs of the application, as there is a trade-off between false negatives and misclassifications. A lower value of epsilon may result in more false negatives but fewer misclassifications, while a higher value of epsilon may reduce false negatives but increase misclassifications. The choice of epsilon should therefore reflect the relative importance, for the application at hand, of minimizing false negatives versus misclassifications.
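The trade-off can be illustrated without any images. The hypothetical helper `sweep` below takes, for each test image, the distance to its nearest face class and whether that class is the correct person (made-up numbers here, not results from our experiments), and reports the outcome percentages as epsilon grows.

```python
import numpy as np

def sweep(nearest_dist, nearest_is_correct, eps_values):
    """For each threshold eps, return (eps, % correct, % wrong, % not recognised).

    nearest_dist[j] is min_i d_i for test image j; nearest_is_correct[j] says whether
    that nearest class is the right person (both are hypothetical inputs).
    """
    nearest_dist = np.asarray(nearest_dist, dtype=float)
    ok = np.asarray(nearest_is_correct, dtype=bool)
    rows = []
    for eps in eps_values:
        accepted = nearest_dist < eps                         # images assigned to some class
        rows.append((eps,
                     float(100 * np.mean(accepted & ok)),     # correct identifications
                     float(100 * np.mean(accepted & ~ok)),    # misclassifications
                     float(100 * np.mean(~accepted))))        # false negatives: not recognised
    return rows

# Made-up distances: correct matches tend to be closer than wrong ones.
dist = [1200, 2500, 3100, 3900, 4200, 4700, 5200, 5800]
correct = [True, True, True, False, True, False, True, False]
for row in sweep(dist, correct, range(3000, 6001, 1000)):
    print(row)
```

As epsilon rises from 3000 to 6000, the "not recognised" share falls while the misclassification share rises, which is the pattern described above.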


9 Conclusion

The eigenface approach to face recognition was motivated by information theory, which led to the idea of basing face recognition on a small set of image features that best approximate the set of known face images, without requiring that they correspond to our intuitive notion of facial parts and features. It is not an elegant solution to the general recognition problem, but it does provide a practical solution that is well suited to the problem of face recognition. It is fast, simple and has been shown to work well in a constrained environment. The approach can be applied in security systems or human-computer interaction, and experiments have shown that the eigenface technique can be made to achieve very high accuracy, making it potentially well suited to these applications. Beyond recognition, related work aims to determine the gender of the subject and to interpret facial expressions, two important face processing problems that complement face recognition. The eigenface approach is a successful and widely used approach for face recognition.

10 My Jupyter Notebook


5/4/23, 4:23 PM

Face_recognition1

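In [1]:
import random
import numpy as np
import cv2
from PIL import Image
import matplotlib.pyplot as plt
# Load the frontal-face Haar cascade bundled with opencv-python (cv2.data.haarcascades is its folder)
face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')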

In [2]:
def face_vector(image, detect=0):
    """Return a 1 x (128*128) grayscale face vector for a PIL image.

    detect=0: crop the first face found by the Haar cascade (whole image if none is found).
    detect=1: skip face detection and use the whole image.
    """
    # Convert to grayscale, then to a NumPy array
    gray_image_array = np.asarray(image.convert('L'))
    roi = gray_image_array
    if detect == 0:
        # Detect faces in the grayscale image
        faces = face_cascade.detectMultiScale(gray_image_array, scaleFactor=1.1, minNeighbors=5)
        if len(faces) > 0:
            # Use the first detected face as the region of interest
            x, y, w, h = faces[0]
            roi = gray_image_array[y:y+h, x:x+w]
    # Resize to 128 x 128 and flatten into a single row vector
    roi = cv2.resize(roi, (128, 128), interpolation=cv2.INTER_LANCZOS4)
    return np.ravel(roi).astype(np.float64).reshape(1, -1)

In [3]:
num = [f"{i:02d}" for i in range(1, 16)]   # subject ids "01" ... "15"
# "sad" appears twice in this list, so each subject's "sad" image is loaded twice:
# 12 rows per subject, from 11 distinct file names
state = ["happy", "sad", "centerlight", "glasses", "leftlight", "noglasses", "normal", "rightlight", "sad", "sleepy", "surprised", "wink"]
rows = []
for n in num:
    for st in state:
        image = Image.open(f"data/subject{n}.{st}")
        # One 1 x (128*128) face vector per image
        rows.append(face_vector(image))
# Stack into a (15*12) x (128*128) matrix, one image per row
face_matrix = np.vstack(rows)

In [4]:
# Label of each row of face_matrix: subject index 0..14, 12 images each
label = [i for i in range(15) for _ in range(12)]
# Seeded generator so that the train/test split is reproducible
rng = random.Random(50)
test_list, train_list = [], []
test_label, train_label = [], []
for i in range(15):
    # Hold out 3 of the 12 images of each subject for testing
    k = rng.sample(range(12), 3)
    for j in range(12):
        if j in k:
            test_list.append(face_matrix[12*i + j])
            test_label.append(label[12*i + j])
        else:
            train_list.append(face_matrix[12*i + j])
            train_label.append(label[12*i + j])
test_face = np.array(test_list)
train_face = np.array(train_list)
# Average face g and the mean-subtracted training matrix (one b_i per row)
mean_face_matrix = np.mean(train_face, axis=0).reshape(1, -1)
A = train_face - mean_face_matrix

In [5]:
fig, axes = plt.subplots(nrows=3, ncols=4, figsize=(15, 7))
# Show the 12 images loaded for the first subject (rows 0-11 of face_matrix)
for i in range(12):
    row, col = i // 4, i % 4
    face = face_matrix[i].reshape(128, 128)
    face_norm = cv2.normalize(face, dst=None, alpha=0, beta=255, norm_type=cv2.NORM_MINMAX, dtype=cv2.CV_8U)
    axes[row, col].imshow(face_norm, cmap='gray')
    axes[row, col].axis('off')
    axes[row, col].set_title("Image {}".format(i + 1))
fig.suptitle('Images of subject 01', fontsize=16)
plt.show()

In [6]:
# Display the average face g
img = cv2.normalize(mean_face_matrix.reshape(128, 128), None, 0, 255, cv2.NORM_MINMAX, cv2.CV_8U)
plt.imshow(img, cmap='gray')
plt.show()

Covariance matrix, its eigenvalues and eigenvectors

In [7]:
def feature_vec(n, A, i=0):
    """Return the top-n eigenfaces (one per row) of the mean-subtracted matrix A.

    A has one training image per row, so A @ A.T is the small M x M matrix L.
    i=0 prints the percentage of variance explained by the n eigenfaces.
    """
    # M x M matrix L = A A^T (cheap to diagonalise, unlike the N^2 x N^2 covariance)
    cov = np.matmul(A, A.T)
    # Eigenvalues (ascending) and eigenvectors v_i of the symmetric matrix L
    eigenvalues, eigenvectors = np.linalg.eigh(cov)
    # Map each v_i to u_i = A^T v_i, an eigenvector of the covariance A^T A
    eigenface_all = np.matmul(A.T, eigenvectors)
    # Sort eigenvalues and eigenvectors in descending order
    sorted_indices = np.argsort(eigenvalues)[::-1]
    sorted_eigenvalues = eigenvalues[sorted_indices]
    sorted_eigenvectors = eigenface_all[:, sorted_indices]
    # Normalise each eigenface to unit length
    norms = np.linalg.norm(sorted_eigenvectors, axis=0)
    sorted_eigenvectors = sorted_eigenvectors / norms
    eigenface = sorted_eigenvectors[:, :n].T
    var = (np.sum(sorted_eigenvalues[:n]) / np.sum(sorted_eigenvalues)) * 100
    if i == 0:
        print("explained variance : ", var)
    return eigenface

def weight_cal(arr, eigenface):
    # Project each mean-subtracted row of arr onto the eigenfaces: w_i = u_i^T (f - g)
    weight = np.matmul(eigenface, arr.T).T
    return weight

def recast_cal(weight, eigenface):
    # Reconstruct the mean-subtracted image f_p = sum_i w_i u_i from its weights
    recast = np.matmul(weight, eigenface)
    return recast.reshape(1, -1)

Calculating the weights of the training faces

In [8]:
n = 25
eigenface = feature_vec(n, A)
weight = weight_cal(A, eigenface)   # weights of all training images
weight = np.real(weight)

explained variance :  87.50957896305898

In [9]:
fig, axes = plt.subplots(nrows=3, ncols=7, figsize=(15, 7))
# Display the first 21 of the 25 eigenfaces as images
for i in range(21):
    row, col = i // 7, i % 7
    eig = np.real(eigenface[i].reshape(128, 128))
    eig_norm = cv2.normalize(eig, dst=None, alpha=0, beta=255, norm_type=cv2.NORM_MINMAX, dtype=cv2.CV_8U)
    axes[row, col].imshow(eig_norm, cmap='gray')
    axes[row, col].axis('off')
    axes[row, col].set_title("Eigenface {}".format(i + 1))
fig.suptitle('Top eigenfaces', fontsize=16)
plt.show()


In [10]:
indiv = 1
# Show the original training image that will be reconstructed
img1 = cv2.normalize(train_face[indiv].reshape(128, 128), None, 0, 255, cv2.NORM_MINMAX, cv2.CV_8U)
plt.imshow(img1, cmap='gray')
plt.show()
fig, axes = plt.subplots(nrows=3, ncols=3, figsize=(10, 10))
# n = 19, 39, ..., 179; the 135 training images give at most 135 eigenvectors,
# so the largest values of n all use every available eigenface
for i, feature in enumerate(range(19, 180, 20)):
    # Local names so that the global `eigenface` and `weight` are not overwritten
    eigenface_k = feature_vec(feature, A, 1)
    weight_k = weight_cal(A, eigenface_k)   # weights of all training images
    w = weight_k[indiv].reshape(1, -1)
    # Reconstruction: weighted sum of eigenfaces plus the average face
    ab = np.matmul(w, eigenface_k) + mean_face_matrix
    img = cv2.normalize(ab.reshape(128, 128), None, 0, 255, cv2.NORM_MINMAX, cv2.CV_8U)
    row, col = i // 3, i % 3
    axes[row, col].imshow(img, cmap='gray')
    axes[row, col].set_title(f"n = {feature}")
fig.suptitle('Reconstructed face with different number of eigenfaces', fontsize=16)
plt.show()


Face prediction


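In [11]:
def centroid_fun(weight, label, n):
    """Average the weight vectors of each person to obtain the face classes F_i."""
    label = np.asarray(label)
    target = sorted(set(label.tolist()))
    centroid = np.zeros((len(target), n))
    for k, lab_cent in enumerate(target):
        # Mean weight vector over all training images of this person
        centroid[k, :] = np.mean(weight[label == lab_cent, :], axis=0)
    return centroid, target

def face_prediction(face, mean_face, center, label, eigenface, eps=4000, eps_face=5000):
    """Classify one image vector.

    Returns (label, distance) for a recognised person, (-1, min d_i) for a face
    that matches no class, and (-2, d) when the image is not a face.
    """
    # Mean-subtracted image f - g and its weight vector W
    A1 = face - mean_face
    W = weight_cal(A1, eigenface)
    # Distance d from face space: ||(f - g) - f_p||
    reimg = recast_cal(W, eigenface)
    face_norm = np.linalg.norm(A1 - reimg)
    if face_norm >= eps_face:
        return -2, face_norm            # -2: it is not a face
    # Distances d_i to every face class; pick the nearest one
    dists = np.linalg.norm(W - center, axis=1)
    k = int(np.argmin(dists))
    if dists[k] < eps:
        return label[k], dists[k]       # recognised as person label[k]
    return -1, dists[k]                 # -1: face, but not recognised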


In [12]:
# Calculate the eigenfaces, the training weights and the face classes
n = 25
eigenface = feature_vec(n, A)
weight = weight_cal(A, eigenface)
weight = np.real(weight)
centroid, target = centroid_fun(weight, train_label, n)

explained variance :  87.50957896305898

In [13]:
import pandas as pd

columns = ["epsilon", "correct", "wrong_pred", "not_recognized", "not_face"]

def evaluate(faces, labels, ep, eps_face):
    """Percentages of correct, wrong, not recognised and not-a-face predictions."""
    correct = wrong_pre = not_rec = not_face = 0
    for face, true_label in zip(faces, labels):
        pred, _ = face_prediction(face, mean_face_matrix, centroid, target, eigenface, ep, eps_face)
        if pred == true_label:
            correct += 1
        elif pred == -1:
            not_rec += 1        # a face, but not recognised
        elif pred == -2:
            not_face += 1       # judged not to be a face
        else:
            wrong_pre += 1      # assigned to the wrong person
    size = len(faces)
    return [ep, correct * 100 / size, wrong_pre * 100 / size, not_rec * 100 / size, not_face * 100 / size]

eps1 = 5400   # threshold on the distance from face space
train_results, test_results = [], []
# Sweep the face-class threshold epsilon from 3000 to 5800 in steps of 200
for ep in range(3000, 6000, 200):
    train_results.append(evaluate(train_face, train_label, ep, eps1))
    test_results.append(evaluate(test_face, test_label, ep, eps1))
train_df = pd.DataFrame(train_results, columns=columns)
test_df = pd.DataFrame(test_results, columns=columns)


In [14]:
fig, axes = plt.subplots(nrows=1, ncols=2, figsize=(16, 6))
series = [('correct', 'blue', 'Correct'),
          ('wrong_pred', 'orange', 'Wrong Predictions'),
          ('not_recognized', 'green', 'Not Recognized'),
          ('not_face', 'red', 'Not Face')]
# Plot each outcome percentage against epsilon, for the train and the test dataset
for ax, df, title in zip(axes, [train_df, test_df], ['Train Dataset', 'Test Dataset']):
    for col, color, name in series:
        ax.plot(df['epsilon'], df[col], color=color, label=name)
    ax.legend()
    ax.set_xlabel('Epsilon')
    ax.set_ylabel('Percentage')
    ax.set_title(title)
fig.suptitle('Model Performance', fontsize=16)
plt.show()


In [15]: fig.savefig('performance.png')

In [16]:
eps = 4800     # threshold on the distance to the nearest face class
eps1 = 5300    # threshold on the distance from face space
chair_l = Image.open("chair.jpg")
plt.imshow(chair_l)
plt.show()
# Use the whole image (no face detection) as the input vector
chair = face_vector(chair_l, 1)
detect, nor = face_prediction(chair, mean_face_matrix, centroid, target, eigenface, eps, eps1)
if detect == -1:
    print("face detected but not recognized")
elif detect == -2:
    print("It is not a face")
else:
    print("person is :", detect)

It is not a face

In [17]:
flower_I = Image.open("flower.jpg")
plt.imshow(flower_I)
plt.show()
flower1 = face_vector(flower_I, 1)
# Project the mean-subtracted image onto face space and show its reconstruction
flower = flower1 - mean_face_matrix
w_flo = weight_cal(flower, eigenface)
a = recast_cal(w_flo, eigenface) + mean_face_matrix
plt.imshow(a.reshape(128, 128), cmap="gray")
plt.show()
detect, nor = face_prediction(flower1, mean_face_matrix, centroid, target, eigenface, eps, eps1)
if detect == -1:
    print("face detected but not recognized")
elif detect == -2:
    print("It is not a face")
else:
    print("person is :", detect)

It is not a face
