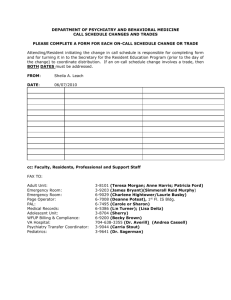

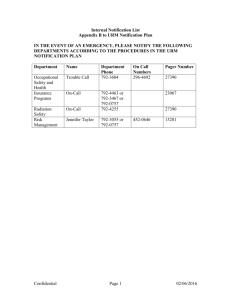

Uber Blog Sign up Engineering Eats Safety Team On-Call Overview 19 August 2021 / Global Introduction Our engineers have the responsibility of ensuring a consistent and positive experience for our riders, drivers, eaters, and delivery/restaurant partners. Ensuring such an experience requires reliable systems: our apps have to work when anyone needs them. A major component of reliability is having engineers on call to deal with problems immediately as they arise. We set up our on-call engineers for success through training, tools, and processes. In this article we will provide an overview of how we at the Eats Safety team ensure that our engineers are fully equipped to provide prompt, high-quality service—anywhere in the world, 24/7. The Eats Safety Team Created in late 2019, the Eats Safety team is based in the São Paulo Tech Center. We are responsible for building safety features and products for the UberEats marketplace. The team is in a constant state of action: implementing and deploying systems, and combining different types of technology at massive scale. Mobile applications, cloud-based services, and web applications all need to interoperate effectively to achieve our desired business goals. We have a lot of autonomy to build and deploy our own services and features. At the same time, we are responsible for ensuring that everything we built is performing as intended in production, and for that we have an on-call rotation. On-Call Process Roles Being on call means being available for a period of time to respond to alerts with the appropriate level of urgency. In addition to mitigating incidents, on-call engineers also invest their time in calibrating alerts, updating runbooks, improving dashboards, automating operational processes, and providing support to other teams. On-call engineers often fall into 3 different roles, with the following responsibilities: Primary: Acknowledge alerts Address alerts (assess, mitigate, communicate, and resolve) Annotate alerts (we will cover alert annotation later in this article) Work on improvements to the on-call experience (alert tuning, automation) Should not be responsible for a large number of regular sprint tasks—they should have a dedicated time slot during sprint to work on on-call improvement tasks Some teams’ responsibilities also includes monitoring chat, ticket queues, or other internal support forums Bug triaging Secondary: Nobody should be on-call alone. The secondary on-call is available to support the primary whenever needed. Supports the primary, when necessary If necessary, assist the primary in addressing alerts Shadow: The safest and most effective way to onboard new on-call engineers includes rigorous shadow training, which provides an “in the trenches” experience. We require that every new engineer on the team shadow another more experienced engineer before entering the rotation. A new on-call engineer will be reverse shadowed by an experienced engineer as well. Don’t acknowledge alerts—the purpose of shadowing is just learning and getting familiar with tools and processes Follow along all pages, and communicate with the primary Read annotations and call out anything that doesn’t make sense Alert Notification Policy Alerts are triggered by monitoring platforms and depending how critical an alert is, the on-call engineers can be notified in different ways. Critical Alerts: Immediate: Email and push notification 1 minute: Text message 3 minutes: Phone call Non-Critical Alerts: 2 minutes: Email If the primary on-call doesn’t acknowledge a critical alert within ten minutes, the alert will be escalated to the secondary in the current rotation. Rotation The on-call rotation is the schedule used to map who will be on call, and when. Every rotation should have at least a primary and a secondary. Some teams might also have a tertiary to cover the secondary, if necessary. Defining an on-call rotation involves answering questions such as: How long does a rotation last? How many engineers must be on call simultaneously? Which engineers should be on a shift? What happens when an on-call engineer does not respond to an alert? The answer can vary a lot according to the characteristics of each team, such as: Size Whether the team is distributed across different time zones Example Setup Eats Safety is a cross-functional team, composed of 4 mobile and 5 backend engineers. Traditionally, different functional groups have their own rotation, even when they’re part of the same product team. In our case, that would mean having one on-call rotation for mobile and another for backend. However, assuming that we have a weekly rotation, it would cause a mobile pair to go on call every 2 weeks and we wanted: Our engineers to go on-call less often Cross-functional shifts by having both a mobile and a backend engineer Because of that, we decided to experiment with a merged mobile and backend on-call. It allowed us to reduce how often any given engineer goes on-call, and made it possible to draw from the expertise of both specialties at each shift, alternating between primary and secondary. Here is what we learned from having a merged on-call rotation: Besides providing a better quality of life, the merged on-call also proved to be an excellent opportunity for all engineers to develop new skills, as mobile engineers need to have a better understanding of backend and vice versa Less context change and more time to focus on regular sprint work Easier to set up and maintain, since it’s a single rotation for the whole team The engineers get to know the product better over time Better knowledge sharing among the team A crucial factor in the success of merged on-call is to have easy-to-follow runbooks for all alerts, saving engineers from needing to think too much when an alert comes in Before On-Call – The Preparation Checklist The preparation checklist consists of steps an on-call engineer should take to be fully prepared for an upcoming on-call shift, such as: Ensuring that paging tooling has up to date information (phone number, email, etc.) Ensuring availability to be paged Having a laptop and charger ready at all times Knowing where to find their team runbooks Ensuring access to their team’s tooling Reading shift reports and alerts from the previous on-call shift After On-Call – The Handoff Meeting The handoff meeting happens at the end of a shift. In this meeting, the whole context of the previous shift is passed to the engineers entering the new shift, including: Alert metrics analysis Triggered alerts Completed on-call action items and follow-ups Action item prioritization Ongoing operational procedures The reports based on alert annotation are a great source of insights for future alert improvements and runbook updates. The participants in this meeting vary from one team to another. It can be the whole team, or just the engineers directly involved in the relevant shifts. Monitoring In order to know whether or not our services are performing as intended in production, they need to be monitored. Monitoring refers to our ability to quickly detect and understand problems, via system metrics or testing. Monitoring can be grouped in 2 general categories: White-box monitoring is applied to systems that are running on a server. It consists of processing metrics exposed by each system, through code instrumentation, profiling, or HTTP interfaces. Black-box monitoring comprises external tests that aim to verify the experience from the user’s perspective. Monitoring allows: Tracking system metrics trends over time, such as the number of requests per second at a given endpoint, or the growth rate of a database Creating dashboards that help illustrate the impact of changes and the results of mitigating a problem Creating alerts that notify engineers when something is broken, or about to break Good Alerts Alerts are notifications sent to the on-call engineers that report a problem with a service/product. The type of notification will depend on how critical an alert is. Here are our guidelines for what makes an alert good: High Signal: It represents a real problem Low Noise: Should not be triggered extraneously Actionable: The on-call can do something to address it Rare: Frequent, non-actionable alerts lead to alert fatigue, and increase the chance that people will ignore a real problem Frequent, actionable alerts indicate that the system or service does not have a satisfactory level of reliability, and needs to be updated To guarantee the on-call load and quality, we track all alerts by annotating them, and then generating reports so we can understand what needs to be improved. Our Monitoring Tools uMonitor is an alerting system and front end for bringing together all the different observability metrics, with the goal of enabling engineers to maintain visibility on their systems, detect issues as they arise, and quickly fix them. Blackbox is designed to monitor the availability and accuracy of flows that are exposed externally to our customers, by calling the same APIs used for our mobile applications. You can read more in Observability at Scale: Building Uber’s Alerting Ecosystem. On-Call Quality Metrics We use an internal platform developed by the Production Engineering team, called On-Call Dashboard. It offers a seamless experience for our on-call engineers by compiling everything necessary to respond to an alert and recording a history of both alerts and the actions taken in response. This data gives us high level insight into alert trends which can be used to improve our software development and on-call response practices. Annotating an alert comprises keeping track of the following information: Was it a real problem? Was it actionable? What actions did we take? What was the root cause? Tasks for follow-up work Any notes deemed relevant by the on-call engineer, typically describing the actions taken including debugging steps, observations about related metrics, links to relevant documentation, and outcomes. These notes can help future on-call engineers with similar alerts to debug outages faster by leveraging past history and best practices. Metrics We derive the following metrics from the alerts annotations, which help us understand the overall quality of the on-call shift: Alerts: Alert count during the shift. Annotations: The fraction of annotated alerts over total alerts. A high annotation rate indicates more complete information about the shift. Signal-to-Noise Ratio Accurate: The fraction of alerts that represents a real problem over the total number of alerts. Noisy alerts should be calibrated in future on-call shifts. Signal-to-Noise Ratio Actionable: The fraction of alerts that required an action to be taken by the on-call engineer or other teammate to resolve the alert. Orphaned Alerts: Alerts that are still open by the end of the shift, but have not been annotated, indicating poor on-call engagement. Poor Runbooks: Every alert should have an associated runbook. This metric represents the runbooks lack of accuracy based on a quality score assigned by on-call engineers on the shifts. Disturbance: This indicates on-call action distribution along the shift. High disturbance score indicates a heavy shift with several incidents or noisy alerts. Mean Time To Acknowledge Alert (MTTA): The average time it takes from when an alert is triggered to when it is acknowledged by the on-call engineer. Mean Time To Resolution (MTTR): The average length of time needed to resolve alerts. These metrics help engineers analyze and prioritize action items that should be addressed during the next on-call shift. Some of the insights we can take from these metrics are: What alerts need to be calibrated? What runbooks need to be improved? High-level stability/reliability information, and patterns of failure Services that are failing too often, and need engineering investment such as: Paying technical debts Re-architecture More redundancy Higher capacity Training As mentioned throughout the article, our on-call process involves tools, metrics, definition of roles, alert notification policies, etc. We conduct regular training sessions covering on-call and incident management as part of Engucation (Engineering + Education) for new engineers. Having a structured education process is important for ramping up new engineers, building confidence, and maintaining process standardization across teams. During Engucation sessions, besides theory, new engineers also have hands-on experience that involves the first contact with many of the tools that we use on a daily basis. Prior to joining an on-call rotation, all engineers should attend this training session, review their team’s runbooks, and complete one or more shadow rotations. Final Considerations The on-call process requires constant improvement. At Eats Safety we are continually evaluating and discussing what is working and what can be improved as part of our basic process during the shifts. The standards presented here that we use at Eats Safety represent decades of collective experience and best practices from teams across the company. By teaching and following these processes, we hope to reduce chaos, maintain organization in the face of uncertainty, and improve outcomes for both engineers and customers. We are hiring at the São Paulo Tech Center. Click here to see our positions. Engineering Eduardo David Eduardo David is an Engineering Manager, Software Engineer, and product enthusiast. He is responsible for creating Delivery Safety engineering roadmaps based on the delivery business strategy, supporting execution and developing high performing teams. He is one of the On-Call and Incident Response instructors and has trained hundreds of engineers across Uber's tech centers. Josh Kline Josh Kline is a Sr Software Engineer II working on Uber's Core Security Engineering team. Josh leads the Incident Management Instructors Group responsible for teaching reliability best practices around on-call, incident response, postmortems, and incident review. Posted by Eduardo David, Josh Kline Category: Engineering Related articles Engineering, Data / ML Fast Copy-On-Write within Apache Parquet for Data Lakehouse ACID Upserts 29 June / Global