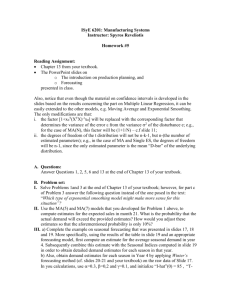

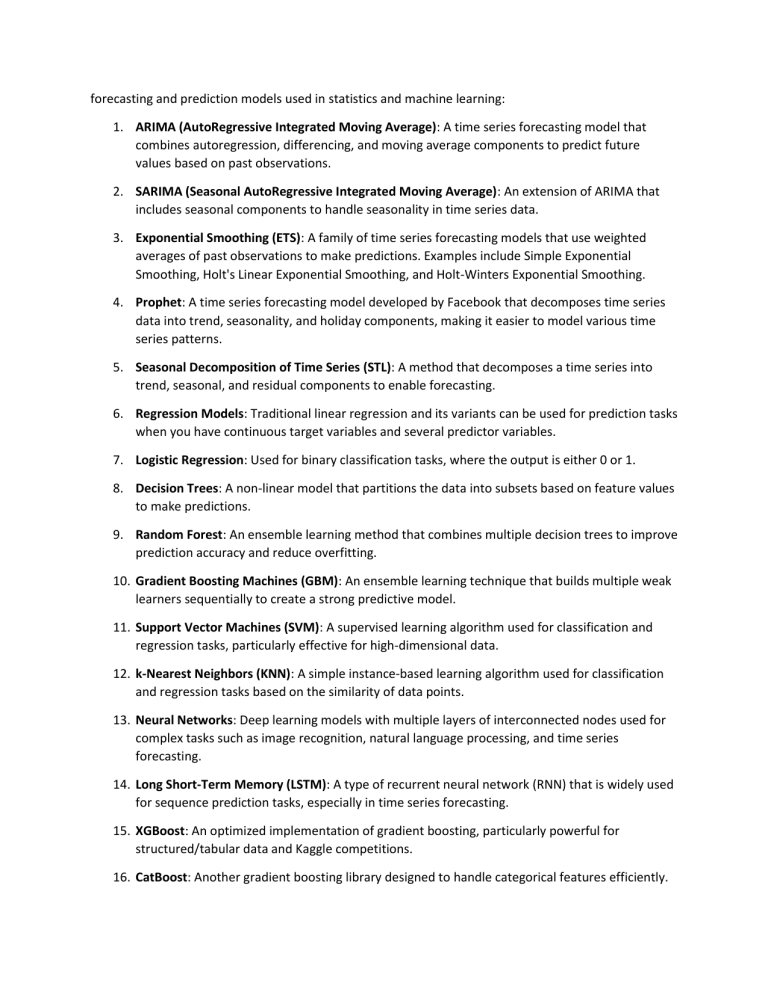

Forecasting and prediction models used in statistics and machine learning:

1. ARIMA (AutoRegressive Integrated Moving Average): A time series forecasting model that combines autoregression, differencing, and moving average components to predict future values from past observations.
2. SARIMA (Seasonal AutoRegressive Integrated Moving Average): An extension of ARIMA that adds seasonal components to handle seasonality in time series data.
3. Exponential Smoothing (ETS): A family of time series forecasting models that predict from weighted averages of past observations, with weights that decay exponentially as observations get older. Examples include Simple Exponential Smoothing, Holt's Linear Exponential Smoothing, and Holt-Winters Exponential Smoothing.
4. Prophet: A time series forecasting model developed by Facebook that decomposes time series data into trend, seasonality, and holiday components, making it easier to model various time series patterns.
5. STL (Seasonal and Trend decomposition using Loess): A method that decomposes a time series into trend, seasonal, and residual components. It is not a forecaster by itself; the components are forecast separately and then recombined.
6. Regression Models: Linear regression and its variants can be used for prediction tasks when the target variable is continuous and there are one or more predictor variables.
7. Logistic Regression: Used for binary classification tasks. It estimates the probability that the output is 1 rather than 0.
8. Decision Trees: A non-linear model that recursively partitions the data into subsets based on feature values to make predictions.
9. Random Forest: An ensemble learning method that combines multiple decision trees to improve prediction accuracy and reduce overfitting.
10. Gradient Boosting Machines (GBM): An ensemble learning technique that builds weak learners sequentially, each one correcting the errors of those before it, to create a strong predictive model.
11. Support Vector Machines (SVM): A supervised learning algorithm used for classification and regression tasks, particularly effective for high-dimensional data.
12. k-Nearest Neighbors (KNN): A simple instance-based learning algorithm used for classification and regression, which predicts from the most similar (nearest) data points.
13. Neural Networks: Models built from layers of interconnected nodes. Deep networks with many layers are used for complex tasks such as image recognition, natural language processing, and time series forecasting.
14. Long Short-Term Memory (LSTM): A type of recurrent neural network (RNN) that is widely used for sequence prediction tasks, especially in time series forecasting.
15. XGBoost: An optimized implementation of gradient boosting, particularly strong on structured/tabular data and popular in Kaggle competitions.
16. CatBoost: Another gradient boosting library, designed to handle categorical features efficiently.
17. ARIMAX: An extension of the ARIMA model that includes exogenous variables to improve forecasting accuracy.
18. VAR (Vector Autoregression): A model used for multivariate time series forecasting, where multiple time series variables influence each other.
19. State Space Models: A general class of time series models that represent the underlying process as a hidden (latent) state, which also makes them well suited to handling missing data.
20. Gaussian Processes: A probabilistic model that can be used for regression tasks, particularly useful for quantifying uncertainty in predictions.
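
To make the "weighted averages of past observations" idea behind exponential smoothing concrete, here is a minimal sketch of Simple Exponential Smoothing in plain Python. The function name, the `demand` series, and the choice to initialise the level with the first observation are all assumptions made for illustration. Libraries such as statsmodels offer fuller implementations that also estimate the smoothing parameter.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level after each observation.

    The last level doubles as the one-step-ahead forecast.
    `alpha` (0 < alpha <= 1) controls how fast old observations are forgotten.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    if not series:
        raise ValueError("series must not be empty")

    # Assumption: initialise the level with the first observation.
    level = series[0]
    levels = [level]
    for y in series[1:]:
        # New level = weighted average of the latest observation and the old level.
        level = alpha * y + (1 - alpha) * level
        levels.append(level)
    return levels


# Hypothetical demand series, used only to illustrate the update rule.
demand = [10.0, 12.0, 11.0, 13.0]
levels = simple_exponential_smoothing(demand, alpha=0.5)
forecast = levels[-1]  # one-step-ahead forecast
print(levels, forecast)
```

A larger `alpha` makes the forecast react faster to recent changes, while a smaller `alpha` gives a smoother, slower-moving forecast. Holt's and Holt-Winters methods extend the same update with trend and seasonal terms.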