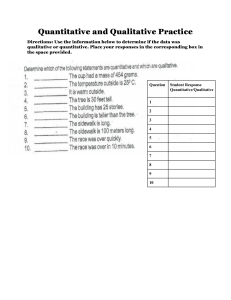

Educational Research Textbook: Quantitative, Qualitative, Mixed Methods
