L. D. College of Engineering

Opp Gujarat University, Navrangpura, Ahmedabad - 380015

LAB MANUAL

Minor Degree: Artificial Intelligence and Machine Learning

Branch: Computer Engineering

Introduction to AI and Machine Learning (114AG01)

Semester: IV

Faculty Details:

1. Prof. M. K. Shah

2. Prof. P. G. Patel

3. Prof. H. D. Rajput

Introduction to AI and Machine Learning (114AG01)

CERTIFICATE

This is to certify that Mr./Ms. __________________________,

Enrollment Number________________ has satisfactorily completed the practical work

in _______________ subject at L D College of Engineering, Ahmedabad-380015.

Date of Submission:

_______________

Sign of Faculty:

_______________

Head of Department: _______________

Computer Engineering Department,

L. D. College of Engineering, Ahmedabad-15


L. D. College of Engineering, Ahmedabad

Department of Computer Engineering

Practical List

Subject Name: Introduction to AI and Machine Learning (114AG01)

Term: 2022-2023

Sr. No. | Title | Date | Page No. | Marks (10) | Sign
1 | Basics of Python Programming | | | |
2 | Study about numpy, pandas, Scikit-learn and matplotlib libraries. | | | |
3 | Getting Started with Python Logic Programming using Kanren and SymPy packages | | | |
4 | Write the code in Kanren to demonstrate the following: a) The use of logical variables. b) The use of membero goal constructor | | | |
5 | Write the code in Kanren to create parent and grandparent relationships and use it to state facts and query based on the facts. | | | |
6 | Write the code in Kanren to demonstrate the constraint system. | | | |
7 | Write the code in Kanren to match the mathematical expressions | | | |
8 | Write the code in python to implement linear regression for one variable. | | | |
9 | Write the code in python to implement linear regression using gradient descent for one variable | | | |
10 | Write the code in python to implement logistic regression for single class classification. | | | |
11 | Write the code in python to implement logistic regression for multi class classification | | | |


L. D. College of Engineering, Ahmedabad

Department of Computer Engineering

Practical Rubrics

Subject Name: Introduction to AI and Machine Learning Subject Code: (114AG01)

Term: 2022-2023

Rubrics ID | Criteria | Marks | Good (2) | Satisfactory (1) | Need Improvement (0)
RB1 | Regularity | 02 | High (>70%) | Moderate (40-70%) | Poor (0-40%)
RB2 | Problem Analysis and Development of Solution | 03 | Appropriate & Full Identification of the Problem & Complete Solution for the Problem | Limited Identification of the Problem / Incomplete Solution for the Problem | Very Less Identification of the Problem / Very Less Solution for the Problem
RB3 | Concept Clarity and Understanding | 03 | Concept is very clear with proper understanding | Concept is clear up to some extent. | Concept is not clear
RB4 | Documentation | 02 | Documentation completed neatly. | Not up to standard. | Proper format not followed, incomplete.

SIGN OF FACULTY


L. D. College of Engineering, Ahmedabad

Department of Computer Engineering

LABORATORY PRACTICALS ASSESSMENT

Subject Name: Introduction to AI and Machine Learning (114AG01)

Term: 2022-2023

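Enroll. No.:

Name:

Pract. No. | RB1 | RB2 | RB3 | RB4 | Total | Date | Faculty Sign
1 | | | | | | |
2 | | | | | | |
3 | | | | | | |
4 | | | | | | |
5 | | | | | | |
6 | | | | | | |
7 | | | | | | |
8 | | | | | | |
9 | | | | | | |
10 | | | | | | |
11 | | | | | | |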


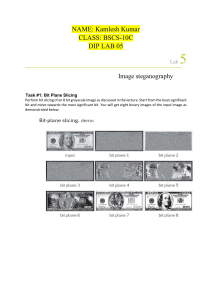

Practical - 1

Aim: Basics of Python Programming

1. Write a program to convert Celsius to Fahrenheit and vice versa based on the

choice of user.

Code :

print("For Celsius to Fahrenheit enter (1) and for Fahrenheit to Celsius enter (2)")
choice = int(input())
if choice == 1:
    # Celsius -> Fahrenheit
    celsius = float(input())
    fahrenheit = (9 * celsius) / 5 + 32
    print(fahrenheit)
else:
    # Fahrenheit -> Celsius
    fahrenheit = float(input())
    celsius = (fahrenheit - 32) * (5 / 9)
    print(celsius)

Output:

210280107065

Jethva Bhargavi

BE/LDCE/CE/SEM-4

2. Write a program in python to implement menu driven simple calculator.

Code :

print("Calculator:")
print("Enter (1) for Addition \nEnter (2) for Subtraction \nEnter (3) for Multiplication \nEnter (4) for Division")
choice = int(input())
numOne = int(input())
numTwo = int(input())
if choice == 1:
    Sum = numOne + numTwo
    print(Sum)
elif choice == 2:
    Sub = numOne - numTwo
    print(Sub)
elif choice == 3:
    Multiplication = numOne * numTwo
    print(Multiplication)
elif choice == 4:
    # Guard against division by zero
    if numTwo == 0:
        print("Cannot divide by zero")
    else:
        division = numOne / numTwo
        print(division)
else:
    print("Invalid choice")

Output :


3. Write a program which will allow user to enter 10 numbers and display largest odd

and even number from them. Also display the count of odd and even numbers.

Code :

import array as arr

numbers = [1,2,3,4,5,6,7,8,9,10]
print(numbers)
print(type(numbers))

# Read ten integers from the user into a typed array
a = arr.array('i', [])
for i in range(0, 10):
    n = int(input())
    a.append(n)

even = None      # largest even number seen so far
odd = None       # largest odd number seen so far
countEven = 0
countOdd = 0
for n in a:
    if n % 2 == 0:
        countEven += 1            # count every even number
        if even is None or n > even:
            even = n
    else:
        countOdd += 1             # count every odd number
        if odd is None or n > odd:
            odd = n

print(even)
print(odd)
print(countEven)
print(countOdd)

Output :


4. Write a Python program to check if the number provided by the user is a Prime

number. Perform it with and without user defined function.

Code :

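number = int(input())
count = 0
# Count the divisors of number between 2 and number - 1
for i in range(2, number):
    if number % i == 0:
        count += 1
# Numbers below 2 are not prime by definition
if number >= 2 and count == 0:
    print("This is a prime number")
else:
    print("This is not a prime number")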
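The task also asks for a version with a user-defined function. The following is a minimal sketch of one way to write it; the function name is_prime is an assumption chosen for illustration, and fixed sample values are used in place of user input so that the block runs on its own.

```python
def is_prime(number):
    """Return True if number is prime (is_prime is a hypothetical helper name)."""
    if number < 2:
        return False
    # A composite number always has a divisor no larger than its square root
    i = 2
    while i * i <= number:
        if number % i == 0:
            return False
        i += 1
    return True


# Sample values instead of input(), so the sketch is self-contained
for n in (1, 2, 9, 13):
    if is_prime(n):
        print(n, "is a prime number")
    else:
        print(n, "is not a prime number")
```

Stopping the loop at the square root is a design choice that reduces the number of divisions; checking every value up to number - 1, as in the program without a function, gives the same answer.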

Output :

5. Write a program to create a list of ten numbers entered by user and find smallest

and largest number from the list and display it.


Code :

lst = []
for i in range(0, 10):
    n = int(input())
    lst.append(n)

# Start from the first element so negative or very large inputs are handled
large = lst[0]
small = lst[0]
for n in lst:
    if n > large:
        large = n
    if n < small:
        small = n

print(large)
print(small)

Output :

6. Write a program to create a tuple of students' CPI and display it.


Code :

cpi = (7.8,8,8.9, 8.5,9,9.1,9.7,8,9,10)

print(cpi)

Output :

7. Write a program to create a set of students’ enrolment number and sort them and

perform addition and deletion operation on it.

Code :

enrollment = {1,22,344,123,12}
print(enrollment)
print("Choose 1 for addition and choose 2 for deletion")
choice = int(input())
if choice == 1:
    number = int(input())
    enrollment.add(number)
    print(enrollment)
elif choice == 2:
    # pop() removes and returns an arbitrary element of the set
    enrollment.pop()
    print(enrollment)

# A set is unordered, so sort a list copy of it
print("Sorted Set")
l = list(enrollment)
l.sort()
print(l)


Output :

8. Write a program to create a dictionary of student details (EnrollmentNo, Name,

Branch) and perform addition and deletion of a key-value pair from it.

Code :

student = {'12': {'bhargavi': 'computer'}}

print(student)

student['14'] = {'meet': 'computer'}

print(student)

student['11'] = {'hasti': 'it'}

student['16'] = {'neeta': 'computer'}

print(student)

student.pop('11')

print(student)

Output :


9. Write a program to read and display content of the file. Also display number of

lines, words in the given file.

Code :
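No code was recorded for this program. The following is a minimal sketch of one possible solution, not the submitted answer: the helper name file_stats and the sample text are assumptions made for illustration, and a temporary file is created so that the block runs without any external file.

```python
import os
import tempfile


def file_stats(path):
    """Return (content, number of lines, number of words) for a text file.

    file_stats is a hypothetical helper name used only in this sketch.
    """
    with open(path, "r", encoding="utf-8") as f:
        content = f.read()
    lines = content.splitlines()   # one entry per line
    words = content.split()        # split on any whitespace
    return content, len(lines), len(words)


# Create a small sample file so the sketch is self-contained
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False,
                                 encoding="utf-8") as tmp:
    tmp.write("Python is fun\nFiles are easy to read\n")
    sample_path = tmp.name

content, line_count, word_count = file_stats(sample_path)
print(content)
print("Number of lines:", line_count)
print("Number of words:", word_count)

os.remove(sample_path)  # clean up the temporary file
```

To use it on a real file, pass that file's path to file_stats instead of sample_path.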

Output :


Practical - 2

Aim: Study about NumPy, pandas, Scikit-learn and matplotlib

libraries.

Code :

import numpy as np

arr_1d=np.array([1,2,3,4,5,6,7])

arr_2d=np.array([[101,90],[102,85],[103,99],[104,78],[105,80]])

print(arr_1d)

print(arr_2d)

[1 2 3 4 5 6 7]

[[101 90]

[102 85]

[103 99]

[104 78]

[105 80]]

Shape of an array-number of elements in each dimension

print(arr_1d.shape)

print(arr_2d.shape)

(7,)

(5, 2)

Size of an array-total number of elements

print(arr_1d.size)

print(arr_2d.size)

7

10

Dimension of an array

print(arr_1d.ndim)

print(arr_2d.ndim)


#to define dimension while declaring

arr_3d = np.array([1, 2, 3, 4], ndmin=3)

print(arr_3d)

1

2

[[[1 2 3 4]]]

Indexing

print(arr_1d[0])

print(arr_1d[1] + arr_1d[2])

print(arr_1d[-1])

print(arr_2d[0,1])

print(arr_2d[0][1])

print(arr_2d[1,-2])

1

5

7

90

90

102

Slicing

#arr[x:y] returns elements from index x to y-1

print(arr_1d[0:5])

print(arr_1d[-5:])

#with step

print(arr_1d[::2])

print(arr_1d[-5:-1:3])

#2d array

print(arr_2d[0:3,:])

print(arr_2d[0::2, 0:1])

[1 2 3 4 5]

[3 4 5 6 7]

[1 3 5 7]

[3 6]

[[101 90]


[102 85]

[103 99]]

[[101]

[103]

[105]]

Universal Function

#square root of an array

print(np.sqrt(arr_1d))

#absolute values

arr=np.array([-3,-2,-1,0,1,2,3])

print(np.absolute(arr))

#exponents & log

print(np.exp(arr))

print(np.log(arr))

#min/max

print(np.max(arr_1d))

print(np.min(arr_2d[0:,1:]))

#sign

print(np.sign(arr))

#trigonometry

print(np.sin(arr))

print(np.cos(arr))

Copy and View

copyarr=arr.copy()

viewarr=arr.view()

arr[0]=3

print(copyarr)

print(viewarr)

viewarr[0]=3

print(arr)

[-3 -2 -1 0 1 2 3]

[ 3 -2 -1 0 1 2 3]

[ 3 -2 -1 0 1 2 3]


Reshape - Increase and Flat

arr=np.array([1,2,3,4,5,6,7,8,9])

#1D to 2D

arr_1t2=arr.reshape(3,3)

print(arr_1t2)

#1D to 3D

arr_1t3=arr.reshape(3,1,3)

print(arr_1t3)

#3D to 1D

flatarr=arr_1t3.reshape(-1)

print(flatarr)

[[1 2 3]

[4 5 6]

[7 8 9]]

[[[1 2 3]]

[[4 5 6]]

[[7 8 9]]]

[1 2 3 4 5 6 7 8 9]

Sorting

#np.sort() - returns a sorted copy

arr = np.array([12,98,32,25,1])

print(np.sort(arr))

name = np.array(['Raj','Priya','Rahul','Aditi'])

print(np.sort(name))

[ 1 12 25 32 98]

['Aditi' 'Priya' 'Rahul' 'Raj']

Filter

filter_arr = []
for element in arr_1d:
    # if the element is higher than 3, set the value to True, otherwise False:
    if element > 3:
        filter_arr.append(True)
    else:
        filter_arr.append(False)

newarr = arr_1d[filter_arr]
print(filter_arr)
print(newarr)

#another way

filtered = arr_1d > 3

print(filtered)

print(arr_1d[filtered])

[False, False, False, True, True, True, True]

[4 5 6 7]

[False False False True True True True]

[4 5 6 7]

Pandas:

Pandas is a Python library for data manipulation and analysis.

It simplifies working with large datasets, enabling data cleaning, transformation,

and statistical analysis.

Its powerful data structures like Series and DataFrame make data readable and

relevant for decision-making.

import pandas as pd

import numpy as np

from numpy.random import randn

Series

A Series is a one-dimensional labeled array that can hold data of any type.

It is similar to a Python list but provides additional functionalities for data

analysis and manipulation.

result = [9.5,9.3,8.5,9.7,8.5,9,8.7,7,8,7.8]

res_series = pd.Series(result) #series a column in table

res_series


0 9.5

1 9.3

2 8.5

3 9.7

4 8.5

5 9.0

6 8.7

7 7.0

8 8.0

9 7.8

dtype: float64

#adding labels

sid = [101,102,103,104,105,106,107,108,109,110]

res_series = pd.Series(result,sid)

res_series

101 9.5

102 9.3

103 8.5

104 9.7

105 8.5

106 9.0

107 8.7

108 7.0

109 8.0

110 7.8

dtype: float64

res_series[102]

9.3

#from dictionary

grade = {101:"AA",102:"AB",103:"BC",104:"AA",105:"BC",106:"AB",107:"",108:"",109:"",110:""}

grade_series = pd.Series(grade)


grade_series

101 AA

102 AB

103 BC

104 AA

105 BC

106 AB

107

108

109

110

dtype: object

DataFrame

random_data = randn(3,3) #3rows&3cols

rows = ["A","B","C"]

cols = ["X","Y","Z"]

random_df = pd.DataFrame(random_data,rows,cols)

random_df

#import a csv file

df = pd.read_csv('iris.csv')

df


#head(x) - returns first x rows (default 5)

df.head()

#tail(x) - returns last x rows (default 5)

df.tail(3)


#information about data

df.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 150 entries, 0 to 149

Data columns (total 6 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Id 150 non-null int64

1 SepalLengthCm 150 non-null float64

2 SepalWidthCm 150 non-null float64

3 PetalLengthCm 150 non-null float64

4 PetalWidthCm 150 non-null float64

5 Species 150 non-null object

dtypes: float64(4), int64(1), object(1)

memory usage: 7.2+ KB

#statistics of data

df.describe()


df['Species'].value_counts()

Iris-setosa 50

Iris-versicolor 50

Iris-virginica 50

Name: Species, dtype: int64

df['Species'].value_counts(normalize=True)

Iris-setosa 0.333333

Iris-versicolor 0.333333

Iris-virginica 0.333333

Name: Species, dtype: float64

#add columns in data

age=np.zeros(150)

df['Age']=age

df.head(3)

df.insert(1,"Color",[np.nan]*len(df))


df.tail(3)

#conditional selection

df[df['SepalLengthCm']>5.85].count()

Id 70

SepalLengthCm 70

SepalWidthCm 70

PetalLengthCm 70

PetalWidthCm 70

Species 70

dtype: int64

#multiple condition - &(and) |(or)

df[(df['PetalLengthCm']>3.75) & (df['PetalWidthCm']<1.2)]

#function

def cm_to_inch(x):
    return x*0.394

usdf=df.copy()

columns_to_convert = ['SepalLengthCm', 'SepalWidthCm', 'PetalLengthCm',

'PetalWidthCm']

usdf[columns_to_convert] = df[columns_to_convert].apply(cm_to_inch)

usdf.rename(columns={col: col.replace('Cm', 'In') for col in

columns_to_convert}, inplace=True)


usdf.head(3)

#sorting

df.sort_values("SepalLengthCm",ascending=False)


MatPlotLib:

Matplotlib is a low-level graph plotting library in Python that serves as a

visualization utility.

import numpy as np

import pandas as pd

from numpy.random import randn

import matplotlib.pyplot as plt

random_data = pd.DataFrame(randn(50,3),columns=["A","B","C"])

random_data.tail(3)

Histograms:

plt.figure(figsize=(4,4))

plt.hist(random_data["B"])

plt.title("Histogram Example")

plt.show()


#with grid

plt.figure(figsize=(4,4))

plt.hist(random_data)

plt.title("Histogram Example with Grid")

plt.grid()

plt.show()


Areaplot:

plt.figure(figsize=(4,4))

plt.stackplot(range(len(random_data)),random_data["C"].values.T)

plt.title("Areaplot Example")

plt.show()


plt.figure(figsize=(4,4))

plt.stackplot(range(len(random_data)),random_data.abs().values.T)

plt.title("Areaplot Example with absolute value")

plt.show()


BarChart:

random_data["B"].plot.bar(figsize=(8,3))

plt.title("Barchart Example")

plt.show()

random_data.abs().plot.bar(figsize=(6,4),stacked=True)

plt.title("Barchart Example with stacked abs. data")

plt.show()


Line Charts:

random_data["A"].plot.line(figsize=(4,4))

plt.title("LineChart Example")

plt.show()


Scatterplot:

random_data.plot.scatter(x="A", y="B",figsize=(4,3))

plt.title("ScatterPlot Example")

plt.show()


random_data.plot.scatter(x="A", y="B",c="C",cmap="cool",figsize=(5,3))

plt.title("ScatterPlot Example with color")

plt.show()


BoxPlot:

#Presents the summary statistics (such as quartiles, median, and outliers)

random_data.plot.box(cmap="jet",figsize=(5,3))

plt.title("BoxPlot Example")

plt.grid()

plt.show()


HexPlots:

#used to visualize the density of points in a scatter plot

random_data.plot.hexbin(x="A",y="B",gridsize=15,figsize=(5,3))

plt.title("HexPlot Example")

plt.show()

KDE chart:

#Kernel Density Estimation - used to estimate the probability density function (PDF)

random_data.plot.kde(figsize=(5,3))

plt.title("KDE Chart")

plt.show()


ScikitLearn:

Scikit-learn provides a wide range of tools and algorithms for various machine

learning tasks.

import numpy as np

import pandas as pd

Dataset Loading

from sklearn.datasets import load_iris

iris = load_iris()

X = iris.data

y = iris.target

print("\nFirst 5 rows of X:\n", X[:5])


Splitting the data into training and testing sets

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3,

random_state=42)

print(X.shape,end=" = ")

print(X_train.shape,end=" + ")

print(X_test.shape)

print(y.shape,end=" = ")

print(y_train.shape,end=" + ")

print(y_test.shape)

(150, 4) = (105, 4) + (45, 4)

(150,) = (105,) + (45,)

Preprocessing the data

#scaling the data

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

print(X[0],end=" -> ")

print(X_scaled[0])

[5.1 3.5 1.4 0.2] -> [-0.90068117 1.01900435 -1.34022653 -1.3154443 ]

#normalization

from sklearn import preprocessing

#L1

data_normalized_l1 = preprocessing.normalize(X, norm='l1')

print("\nL1 normalized data:", data_normalized_l1[0])

#L2

data_normalized_l2 = preprocessing.normalize(X, norm='l2')

print("\nL2 normalized data:", data_normalized_l2[0])

L1 normalized data: [0.5 0.34313725 0.1372549 0.01960784]

L2 normalized data: [0.80377277 0.55160877 0.22064351 0.0315205 ]
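To make the two norms concrete, the following sketch recomputes the normalization of the first Iris sample by hand using only the standard library. It is an illustration of the arithmetic, not of how scikit-learn implements normalize; the variable names are assumptions chosen for this example.

```python
import math

# First Iris sample, as printed earlier in this practical
row = [5.1, 3.5, 1.4, 0.2]

# L1 norm: sum of absolute values; L2 norm: square root of the sum of squares
l1_norm = sum(abs(v) for v in row)
l2_norm = math.sqrt(sum(v * v for v in row))

# Dividing each value by the norm rescales the row to unit length
l1_row = [v / l1_norm for v in row]
l2_row = [v / l2_norm for v in row]

print("L1 normalized row:", l1_row)
print("L2 normalized row:", l2_row)
```

After L1 normalization the absolute values of the row sum to 1, and after L2 normalization the squares of the values sum to 1.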

Choosing a machine learning algorithm

from sklearn.linear_model import LogisticRegression

model = LogisticRegression()

model.fit(X_train, y_train)

Evaluating the model

from sklearn.metrics import accuracy_score

y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

accuracy

1.0


Practical - 3

Aim: Getting Started with Python Logic Programming using Kanren and

SymPy packages.

Code :

Kanren:

Kanren enables the expression of relations and the search for values which satisfy

them. The following code is the "Hello, world!" of logic programming. It asks for

`1` number, `x`, such that `x == 5`.

Code:

from kanren import run, eq, membero, var, conde

x = var()

run(1, x, eq(x, 5))

(5,)

Sympy:

SymPy is a Python library for symbolic mathematics that provides a wide range of

capabilities, including solving algebraic equations, differentiation,

integration, and more. The following code uses SymPy to solve the equation 2*x + 1

= 5 for the variable x and prints the solution.

Code :

from sympy import symbols, Eq, solve

# Define the variables

x = symbols('x')

# Define the equation


equation = Eq(2*x + 1, 5)

# Solve the equation

solution = solve(equation, x)

print(solution)

Output:

[2]


Practical - 4

Aim: Write the code in Kanren to demonstrate the following: a) The use of

logical variables. b) The use of membero goal constructor.

Logical Variables in Kanren:

Logical variables in Kanren represent unknown values or entities in logical relationships. They

act as placeholders for values that need to be determined during the execution of the logic

program, allowing exploration of multiple possible solutions efficiently.

Membero Goal Constructor:

The membero goal in Kanren checks if an element belongs to a list or collection. It finds a value

for the logical variable representing the element, binding it to a valid value present in the list, or it

fails if no valid value is found.

Code :

from kanren import run, var, eq, conde, membero

# Define a goal to find an element in a list using membero
def find_elemento(element, lst):
    return membero(element, lst)

# Define some sample data and a logical variable

list_data = [1, 2, 3, 4, 5]

element_var = var()

# Find the logical variable that satisfies the goal

result = run(1, element_var, find_elemento(element_var, list_data))

print(result[0])

Output :


Practical - 5

Aim: Write the code in Kanren to create parent and grandparent relationships

and use it to state facts and query based on the facts.

Code :

from kanren import run, var, eq, conde

# Define the facts: each conde branch states one (father, child) pair
def parent_relation(father, child):
    return conde((eq(father, 'John'), eq(child, 'Alice')),
                 (eq(father, 'John'), eq(child, 'Bob')),
                 (eq(father, 'Bob'), eq(child, 'Charlie')),
                 (eq(father, 'Bob'), eq(child, 'David')),
                 (eq(father, 'David'), eq(child, 'Eva')))

# Define the grandparent relationship: a grandparent is a parent of a parent
def grandparent_relation(grandparent, grandchild):
    parent = var()
    return conde((parent_relation(grandparent, parent),
                  parent_relation(parent, grandchild)))

# Example facts (conde expects each branch to be a tuple of goals)
facts = conde((parent_relation('John', 'Alice'),),
              (parent_relation('John', 'Bob'),),
              (parent_relation('Bob', 'Charlie'),),
              (parent_relation('Bob', 'David'),),
              (parent_relation('David', 'Eva'),))


x = var()

john_children = run(0, x, parent_relation('John', x))

print("Children of John:", john_children)

y = var()

john_grandchildren = run(0, y, grandparent_relation('John', y))

print("Grandchildren of John:", john_grandchildren)

Output :


Practical - 6

Aim: Write the code in Kanren to demonstrate the constraint system.

Code :

def Sum(input_list, iterator, num, counter):
    if num <= iterator:
        return counter
    counter += input_list[iterator]
    counter = Sum(input_list, iterator + 1, num, counter)
    return counter

input_list = [6, 4, 8, 2, 9]
counter = 0
num = len(input_list)
print(Sum(input_list, 0, num, counter))

Output :


Practical - 7

Aim: Write the code in Kanren to match the mathematical expressions.

Code :

from kanren import run, eq, var, conde

def expressiono(x):
    a, b = var(), var()
    # Each conde branch must be a tuple of goals, hence the trailing commas
    return conde(
        (eq(x, 'number'),),
        (eq(x, 'variable'),),
        (eq(x, ('sum', a, b)),),
        (eq(x, ('product', a, b)),)
    )

expression1 = ('sum', 2, 3)                    # 2 + 3
expression2 = ('product', 5, 'x')              # 5 * x
expression3 = ('sum', 'x', ('product', 2, 4))  # x + (2 * 4)

x = var()  # logic variable to query
result = run(1, x, expressiono(x))
print(result[0])

Output :


Practical - 8

Aim: Write the code in python to implement linear regression for one variable.
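For reference, the program in this practical fits the line $\hat{y} = b_0 + b_1 x$ by ordinary least squares. The formulas below restate what the function estimate_coef computes, using the same names as the code ($n$ observations, means $\bar{x}$ and $\bar{y}$); they are a summary of the standard closed-form solution rather than an additional method.

```latex
SS_{xy} = \sum_{i=1}^{n} x_i y_i - n\,\bar{x}\,\bar{y}, \qquad
SS_{xx} = \sum_{i=1}^{n} x_i^{2} - n\,\bar{x}^{2}

b_1 = \frac{SS_{xy}}{SS_{xx}}, \qquad
b_0 = \bar{y} - b_1\,\bar{x}
```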

Code :

import numpy as np
import matplotlib.pyplot as plt

def estimate_coef(x, y):
    # number of observations/points
    n = np.size(x)

    # mean of x and y vector
    m_x = np.mean(x)
    m_y = np.mean(y)

    # calculating cross-deviation and deviation about x
    SS_xy = np.sum(y*x) - n*m_y*m_x
    SS_xx = np.sum(x*x) - n*m_x*m_x

    # calculating regression coefficients
    b_1 = SS_xy / SS_xx
    b_0 = m_y - b_1*m_x

    return (b_0, b_1)

def plot_regression_line(x, y, b):
    # plotting the actual points as scatter plot
    plt.scatter(x, y, color = "m", marker = "o", s = 30)

    # predicted response vector
    y_pred = b[0] + b[1]*x

    # plotting the regression line
    plt.plot(x, y_pred, color = "g")

    # putting labels
    plt.xlabel('x')
    plt.ylabel('y')

    # function to show plot
    plt.show()

def main():
    # observations / data
    x = np.array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])
    y = np.array([1, 3, 2, 5, 7, 8, 8, 9, 10, 12])

    # estimating coefficients
    b = estimate_coef(x, y)
    print("Estimated coefficients:\nb_0 = {} \nb_1 = {}".format(b[0], b[1]))

    # plotting regression line
    plot_regression_line(x, y, b)

if __name__ == "__main__":
    main()

Output :


Practical - 9

Aim: Write the code in python to implement linear regression using gradient

descent for one variable.
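The update rule used by the program in this practical can be written out as follows. Here $\theta$ is the slope, $b$ is the intercept, $m$ is the number of data points, and $\alpha$ is the learning rate (learning_rate in the code); the symbols $b$ and $\alpha$ are notation introduced for this summary.

```latex
J(\theta, b) = \frac{1}{2m} \sum_{i=1}^{m} \left( \theta x_i + b - y_i \right)^{2}

\frac{\partial J}{\partial \theta} = \frac{1}{m} \sum_{i=1}^{m} \left( \theta x_i + b - y_i \right) x_i,
\qquad
\frac{\partial J}{\partial b} = \frac{1}{m} \sum_{i=1}^{m} \left( \theta x_i + b - y_i \right)

\theta \leftarrow \theta - \alpha \frac{\partial J}{\partial \theta},
\qquad
b \leftarrow b - \alpha \frac{\partial J}{\partial b}
```

Each iteration evaluates both gradients with the current parameters and then updates $\theta$ and $b$ together.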

Code :

import numpy as np
import matplotlib.pyplot as plt

def linear_regression_gradient_descent(X, y, learning_rate=0.01,
                                       num_iterations=1000):
    """
    Perform linear regression using gradient descent for one variable.

    Parameters:
    X (array-like): Input features (independent variable).
    y (array-like): Target values (dependent variable).
    learning_rate (float): The learning rate for gradient descent. Default is 0.01.
    num_iterations (int): The number of iterations for gradient descent. Default is 1000.

    Returns:
    theta (float): The learned slope of the linear regression line.
    intercept (float): The learned intercept of the linear regression line.
    cost_history (list): The history of cost (loss) values during gradient descent.
    """
    m = len(y)  # Number of data points
    theta = 0.0
    intercept = 0.0
    cost_history = []

    for _ in range(num_iterations):
        # Make predictions
        y_pred = theta * X + intercept

        # Calculate the cost (half the mean squared error)
        cost = np.sum((y_pred - y) ** 2) / (2 * m)
        cost_history.append(cost)

        # Compute the gradients
        d_theta = np.sum((y_pred - y) * X) / m
        d_intercept = np.sum(y_pred - y) / m

        # Update parameters using gradient descent
        theta -= learning_rate * d_theta
        intercept -= learning_rate * d_intercept

    return theta, intercept, cost_history

# Sample data for demonstration
X = np.array([1, 2, 3, 4, 5])
y = np.array([3, 6, 4, 8, 7])

# Perform linear regression
theta, intercept, cost_history = linear_regression_gradient_descent(X, y)

# Print the learned parameters
print(f"Slope (Theta): {theta}")
print(f"Intercept: {intercept}")

# Plot the cost history
plt.plot(range(len(cost_history)), cost_history)
plt.xlabel('Iterations')
plt.ylabel('Cost')
plt.title('Cost History during Gradient Descent')
plt.show()

Output :


Practical - 10

Aim: Write the code in python to implement logistic regression for single class

classification.

Code :

Program:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score, confusion_matrix

from sklearn import metrics

Titanic Dataset

• PassengerId: A unique identifier for each passenger.

• Survived: Indicates whether the passenger survived or not (0 = No, 1 = Yes).

• Pclass: The ticket class of the passenger (1 = 1st class, 2 = 2nd class, 3 = 3rd

class).

• Name: The name of the passenger.

• Sex: The gender of the passenger (male or female).

• Age: The age of the passenger in years.

• SibSp: The number of siblings/spouses aboard the Titanic.

• Parch: The number of parents/children aboard the Titanic.

• Ticket: The ticket number.

• Fare: The fare paid for the ticket.

• Cabin: The cabin number of the passenger.

• Embarked: The port of embarkation (C = Cherbourg, Q = Queenstown, S =

Southampton).


#loading the data

df = pd.read_csv("Titanic.csv")

df.head()

df.info()

Data Preprocessing

Removing Unnecessary Columns


The "PassengerId", "Name", "Ticket", and "Embarked" columns are removed as

they are not useful for survival prediction.

df.drop(["PassengerId", "Name", "Ticket", "Embarked"], axis=1, inplace=True)

df.info()

The "Cabin" feature has a large number (327) of missing values and may not provide

significant predictive power for survival outcomes.

df.drop(["Cabin"],axis=1,inplace=True)

df.info()


Converting "sex" Column to Integer - "male" to 1 and "female" to 0

df['Sex']=df['Sex'].apply(lambda x: 1 if x=='male' else 0)

df.head(3)

Filling the missing values

The "Age" and "Fare" features have 86 and 1 null values, respectively. These values

can be replaced by mean, median, or mode.

df['Age'] = df['Age'].fillna(df['Age'].mean())

df['Age'].isna().sum()

0


df['Fare'] = df['Fare'].fillna(df['Fare'].mean())

df['Fare'].isna().sum()

0

Feature Selection

Defining dependent and independent variables

X = df.drop(['Survived'],axis=1)

y = df['Survived']

print(X.shape,y.shape)

(418, 6) (418,)

Splitting data into training set and testing set

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2,

random_state=42)

print("Training set Shape : ",X_train.shape,y_train.shape)

print("Testing set Shape : ",X_test.shape,y_test.shape)

Training set Shape : (334, 6) (334,)

Testing set Shape : (84, 6) (84,)

Model Training

Create and fit the Logistic Regression model

model = LogisticRegression()

model.fit(X_train,y_train)

Create the predictions on the testing set


y_pred = model.predict(X_test)
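As background for the prediction step, binary logistic regression passes a weighted sum of the features through the sigmoid function and labels a sample 1 when the resulting probability reaches 0.5. The sketch below illustrates that idea with the standard library only; it is not scikit-learn's implementation, and the function names, weights, and bias are hypothetical values chosen for illustration rather than fitted parameters.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_label(features, weights, bias, threshold=0.5):
    """Return 1 if the predicted probability reaches the threshold, else 0."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if sigmoid(z) >= threshold else 0


# Hypothetical weights and bias, not values learned from the Titanic data
weights = [2.0, -1.0]
bias = 0.5

print(sigmoid(0.0))
print(predict_label([1.0, 1.0], weights, bias))    # weighted sum z = 1.5
print(predict_label([-1.0, 2.0], weights, bias))   # weighted sum z = -3.5
```

A positive weighted sum gives a probability above 0.5 and a negative one gives a probability below 0.5, which is why the two sample inputs receive different labels.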

Model Evaluation

accuracy = accuracy_score(y_test,y_pred)

print("Accuracy: ",accuracy)

Accuracy: 1.0

print(metrics.classification_report(y_test,y_pred))

import seaborn as sn

plt.figure(figsize = (5,5))

sn.heatmap(confusion_matrix(y_test,y_pred), annot=True, cmap="magma")

plt.xlabel('Predicted')

plt.ylabel('Truth')

coefficients = model.coef_[0]

feature_names = X.columns

plt.barh(feature_names, coefficients)

plt.xlabel('Coefficient Value')

plt.ylabel('Feature')

plt.title('Coefficient Plot')

plt.show()


Practical - 11

Aim: Write the code in python to implement logistic regression for multi class

classification.

Code :

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn import metrics

from sklearn.metrics import accuracy_score, confusion_matrix

import seaborn as sns

Iris Dataset

• Id: A unique identifier for each entry.

• SepalLengthCm: Sepal length in centimeters.

• SepalWidthCm: Sepal width in centimeters.

• PetalLengthCm: Petal length in centimeters.

• PetalWidthCm: Petal width in centimeters.

• Species: The target variable indicating the species of Iris flowers (setosa,

versicolor, virginica).

#loading the data

df = pd.read_csv("Iris.csv")

df.head(3)

df.info()


Data Preprocessing

Removing Unnecessary Columns

The "Id" column is removed as it is not useful for species prediction.

df.drop(['Id'],axis=1,inplace=True)

df.info()

Converting "Species" Column to Integer

df['Species'].unique()

array(['Iris-setosa', 'Iris-versicolor', 'Iris-virginica'], dtype=object)

Let's set


• Iris-setosa as 1

• Iris-versicolor as 2

• Iris-virginica as 3

df['Species']=df['Species'].apply(lambda x: 1 if x=="Iris-setosa" else 2 if x=="Iris-versicolor" else 3)

df['Species'].unique()

array([1, 2, 3])

Feature Selection

Defining dependent and independent variables

X = df.drop(['Species'],axis=1)

y = df['Species']

print(X.shape,y.shape)

(150, 4) (150,)

Splitting data into training set and testing set

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2,

random_state=42)

print("Training set Shape : ",X_train.shape,y_train.shape)

print("Testing set Shape : ",X_test.shape,y_test.shape)

Training set Shape : (120, 4) (120,)

Testing set Shape : (30, 4) (30,)

Model Training

Create and fit the Logistic Regression model

model = LogisticRegression()

model.fit(X_train,y_train)


Create the predictions on the testing set

y_pred = model.predict(X_test)

Model Evaluation

accuracy = accuracy_score(y_test,y_pred)

print("Accuracy: ",accuracy)

Accuracy: 1.0

print(metrics.classification_report(y_test,y_pred))

plt.figure(figsize = (7,5))

sns.heatmap(confusion_matrix(y_test,y_pred), annot=True, cmap="magma")

plt.xlabel('Predicted')

plt.ylabel('Truth')

Text(58.222222222222214, 0.5, 'Truth')


coefficients = model.coef_[0]

feature_names = X.columns

plt.barh(feature_names, coefficients)

plt.xlabel('Coefficient Value')

plt.ylabel('Feature')

plt.title('Coefficient Plot')

plt.show()
