UNIT III

SORTING AND SEARCHING

Bubble sort – selection sort – insertion sort – merge sort – quick sort – analysis of sorting

algorithms – linear search – binary search – hashing – hash functions – collision handling –

load factors, rehashing, and efficiency

SORTING:

Sorting is arranging the given elements either in ascending order or descending order.

A Sorting Algorithm is used to rearrange a given array or list of elements according to a

comparison operator on the elements.

Sorted data can be searched quickly and easily.

A familiar example of sorting is a dictionary, in which the words are arranged in alphabetical order.

CATEGORIES OF SORTING:

The techniques of sorting can be divided into two categories. These are:

Internal Sorting

External Sorting

Internal sorting: If the input data can be accommodated in the main memory all at once, it is called internal sorting.

External sorting: If the input data cannot be accommodated in the main memory all at once, it needs to be stored on a hard disk, floppy disk, or any other storage device. This is called external sorting.

Different Sorting Algorithms

Bubble Sort

Selection Sort

Insertion Sort

Merge Sort

Quicksort

Counting Sort

Radix Sort

Bucket Sort

Heap Sort

Shell Sort

Complexity of Sorting Algorithms

The efficiency of any sorting algorithm is determined by the time complexity and space

complexity of the algorithm.

1. Time Complexity: Time complexity refers to the time taken by an algorithm to complete its

execution with respect to the size of the input. It can be represented in different forms:

Big-O notation (O)

Omega notation (Ω)

Theta notation (Θ)

2. Space Complexity: Space complexity refers to the total amount of memory used by the

algorithm for a complete execution. It includes both the auxiliary memory and the input.

BUBBLE SORT

Bubble sort is a sorting algorithm that compares two adjacent elements and swaps them

until they are in the intended order.

Bubble sort uses a straightforward logic: it repeatedly swaps adjacent elements if they are not in the right order. It compares one pair at a time and swaps the pair if the first element is greater than the second; otherwise, it moves on to the next pair of elements for comparison.

Working of Bubble Sort

Suppose we are trying to sort the elements in ascending order.

First Iteration (Compare and Swap)

1. Starting from the first index, compare the first and the second elements.

2. If the first element is greater than the second element, they are swapped.

3. Now, compare the second and the third elements. Swap them if they are not in order.

4. The above process goes on until the last element

Remaining Iteration

1. The same process goes on for the remaining iterations.

2. After each iteration, the largest element among the unsorted elements is placed at the end.

Example: We create a list of elements that stores integer numbers.

list1 = [5, 3, 8, 6, 7, 2]

The algorithm sorts the elements as follows.

First Iteration

[5, 3, 8, 6, 7, 2]

Here 5>3, so the two elements are swapped.

[3, 5, 8, 6, 7, 2]

5<8, so no swap takes place.

[3, 5, 8, 6, 7, 2]

8>6, so the two elements are swapped.

[3, 5, 6, 8, 7, 2]

8>7, so the two elements are swapped.

[3, 5, 6, 7, 8, 2]

8>2, so the two elements are swapped.

[3, 5, 6, 7, 2, 8]

Here the first iteration is complete and the largest element is at the end. Now we need to repeat the process for the remaining len(list1) - 1 elements.

Second Iteration

[3, 5, 6, 7, 2, 8] -> [3, 5, 6, 7, 2, 8] here 3<5, so no swap takes place.

[3, 5, 6, 7, 2, 8] -> [3, 5, 6, 7, 2, 8] here 5<6, so no swap takes place.

[3, 5, 6, 7, 2, 8] -> [3, 5, 6, 7, 2, 8] here 6<7, so no swap takes place.

[3, 5, 6, 7, 2, 8] -> [3, 5, 6, 2, 7, 8] here 7>2, so they swap positions.

[3, 5, 6, 2, 7, 8] -> [3, 5, 6, 2, 7, 8] here 7<8, so no swap takes place.

Third Iteration

[3, 5, 6, 2, 7, 8] -> [3, 5, 6, 2, 7, 8] here 3<5, so no swap takes place.

[3, 5, 6, 2, 7, 8] -> [3, 5, 6, 2, 7, 8] here 5<6, so no swap takes place.

[3, 5, 6, 2, 7, 8] -> [3, 5, 2, 6, 7, 8] here 6>2, so they swap positions.

[3, 5, 2, 6, 7, 8] -> [3, 5, 2, 6, 7, 8] here 6<7, so no swap takes place.

[3, 5, 2, 6, 7, 8] -> [3, 5, 2, 6, 7, 8] here 7<8, so no swap takes place.

Fourth Iteration

[3, 5, 2, 6, 7, 8] -> [3, 5, 2, 6, 7, 8] here 3<5, so no swap takes place.

[3, 5, 2, 6, 7, 8] -> [3, 2, 5, 6, 7, 8] here 5>2, so they swap positions.

[3, 2, 5, 6, 7, 8] -> [3, 2, 5, 6, 7, 8] here 5<6, so no swap takes place.

[3, 2, 5, 6, 7, 8] -> [3, 2, 5, 6, 7, 8] here 6<7, so no swap takes place.

[3, 2, 5, 6, 7, 8] -> [3, 2, 5, 6, 7, 8] here 7<8, so no swap takes place.

Fifth Iteration

[3, 2, 5, 6, 7, 8] -> [2, 3, 5, 6, 7, 8] here 3>2, so they swap positions.

Bubble sort Example:

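def bsort(array):
    # After pass i, the largest i+1 elements are in their final positions.
    for i in range(len(array) - 1):
        for j in range(0, len(array) - i - 1):
            if array[j] > array[j + 1]:
                array[j], array[j + 1] = array[j + 1], array[j]
    # Return the list so that the caller can print the result.
    return array

list1 = [5, 3, 8, 6, 7, 2]
print("The unsorted list is", list1)
print("sorted list is", bsort(list1))

OUTPUT:

The unsorted list is [5, 3, 8, 6, 7, 2]

sorted list is [2, 3, 5, 6, 7, 8]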

Time Complexities

Worst Case Complexity: O(n²)

The worst case occurs when we want to sort in ascending order and the array is in descending order.

Best Case Complexity: O(n)

If the array is already sorted, a version of bubble sort that stops when a pass makes no swap finishes after a single pass. The program above has no such check, so it always performs about n²/2 comparisons.

Average Case Complexity: O(n²)

It occurs when the elements of the array are in jumbled order (neither ascending nor descending).

2. Space Complexity

Space complexity is O(1) because only a constant amount of extra memory is used for swapping.
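The O(n) best case depends on stopping early. A minimal sketch of that variant follows; the function name bubble_sort_early_exit, the swapped flag and the returned pass count are illustrative additions, not part of the original program.

```python
def bubble_sort_early_exit(array):
    """Bubble sort that stops as soon as a full pass makes no swap.

    Returns the sorted list and the number of passes performed.
    """
    n = len(array)
    passes = 0
    for i in range(n - 1):
        swapped = False
        passes += 1
        for j in range(n - i - 1):
            if array[j] > array[j + 1]:
                array[j], array[j + 1] = array[j + 1], array[j]
                swapped = True
        if not swapped:
            # No swap in this pass: the list is already sorted.
            break
    return array, passes


print(bubble_sort_early_exit([1, 2, 3, 4, 5]))     # one pass is enough
print(bubble_sort_early_exit([5, 3, 8, 6, 7, 2]))  # needs all five passes
```

On an already sorted list the inner loop runs once (n - 1 comparisons), which is where the O(n) figure comes from.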

SELECTION SORT

Selection sort is a sorting algorithm that selects the smallest element from an unsorted list in each

iteration and places that element at the beginning of the unsorted list.

The selection sort algorithm sorts an array by repeatedly finding the minimum element (considering ascending order) from the unsorted part and putting it at the beginning. The algorithm maintains two subarrays in a given array.

1) The subarray which is already sorted.

2) The remaining subarray, which is unsorted.

In every iteration of selection sort, the minimum element (considering ascending order) from the unsorted subarray is picked and moved to the sorted subarray.

Working of Selection Sort

1. Set the first element as minimum.

2. Compare minimum with the second element. If the second element is smaller than minimum, assign the second element as minimum.

3. Compare minimum with the third element. Again, if the third element is smaller, assign it as minimum; otherwise do nothing. The process goes on until the last element.

4. After each iteration, minimum is placed at the front of the unsorted list by swapping it with the first unsorted element.

5. For each iteration, indexing starts from the first unsorted element. Steps 1 to 4 are repeated until all the elements are placed at their correct positions.

Selection Sort:

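def ssort(a):
    for i in range(len(a)):
        # Index of the smallest element found so far in a[i:].
        smallest = i
        for j in range(i + 1, len(a)):
            if a[j] < a[smallest]:
                smallest = j
        # Move the smallest element to the front of the unsorted part.
        a[i], a[smallest] = a[smallest], a[i]
    # Return the list so that the caller can print the result.
    return a

list1 = [8, 3, 9, 5, 1]
print("The unsorted list of element are", list1)
print("The sorted list of element are", ssort(list1))

OUTPUT:

The unsorted list of element are [8, 3, 9, 5, 1]

The sorted list of element are [1, 3, 5, 8, 9]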

Time Complexity

Worst Case Complexity: O(n²)

The worst case occurs when we want to sort in ascending order and the array is in descending order.

Best Case Complexity: O(n²)

It occurs when the array is already sorted. The number of comparisons is the same as in the other cases; only the swaps are saved.

Average Case Complexity: O(n²)

It occurs when the elements of the array are in jumbled order (neither ascending nor descending).

Space Complexity:

Space complexity is O(1) because only a constant amount of extra memory is used for swapping.
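To see why the best, average and worst cases are all O(n²), the comparisons can be counted. The sketch below is an illustrative variant (selection_sort_count and the counter are additions made here); for n elements it always performs n(n-1)/2 comparisons, whatever the input order.

```python
def selection_sort_count(a):
    """Selection sort that also counts element comparisons."""
    comparisons = 0
    for i in range(len(a) - 1):
        smallest = i
        for j in range(i + 1, len(a)):
            comparisons += 1
            if a[j] < a[smallest]:
                smallest = j
        a[i], a[smallest] = a[smallest], a[i]
    return a, comparisons


# Sorted, reversed and jumbled inputs of length 5 all need 10 comparisons.
for data in ([1, 3, 5, 8, 9], [9, 8, 5, 3, 1], [8, 3, 9, 5, 1]):
    print(selection_sort_count(list(data)))
```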

INSERTION SORT:

Insertion sort is a simple method of sorting an array. In this technique, the array is virtually split into a sorted part and an unsorted part.

An element from the unsorted part is picked and placed at its correct position in the sorted part. Insertion sort works the way we sort cards in our hand in a card game.

We assume that the first card is already sorted, and then we select an unsorted card. If the unsorted card is greater than the card in hand, it is placed on the right; otherwise, it is placed on the left. In the same way, the other unsorted cards are taken and put in their right place.

Working of Insertion Sort

Suppose we need to sort an array in ascending order.

The first element in the array is assumed to be sorted. Take the second element and store it separately in key.

Compare key with the first element. If the first element is greater than key, then key is placed in front of the first element.

Now, the first two elements are sorted.

Take the third element and compare it with the elements on its left. Place it just behind the element smaller than it. If there is no element smaller than it, place it at the beginning of the array.

Similarly, place every unsorted element at its correct position.

Example Program:

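def isort(array):
    for i in range(1, len(array)):
        key = array[i]
        j = i - 1
        # Shift elements of the sorted part that are greater than key
        # one position to the right.
        while j >= 0 and key < array[j]:
            array[j + 1] = array[j]
            j -= 1
        # Place key just after the element smaller than it.
        array[j + 1] = key
    # Return the list so that the caller can print the result.
    return array

list1 = [2, 6, 8, 4, 1]
print("The given list is", list1)
print("The sorted list is", isort(list1))

OUTPUT:

The given list is [2, 6, 8, 4, 1]

The sorted list is [1, 2, 4, 6, 8]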

Insertion Sort Complexity

Time Complexity

Best: O(n)

Worst: O(n²)

Average: O(n²)

Space Complexity: O(1)

MERGE SORT

Merge Sort is one of the most popular sorting algorithms that is based on the principle of Divide

and Conquer Algorithm.

Here, a problem is divided into multiple sub-problems. Each sub-problem is solved individually.

Finally, sub-problems are combined to form the final solution.

Divide and Conquer Strategy

Using the Divide and Conquer technique, we divide a problem into sub problems. When the

solution to each sub problem is ready, we 'combine' the results from the sub problems to solve the

main problem.

Suppose we had to sort an array A. A sub problem would be to sort a sub-section of this array

starting at index p and ending at index r, denoted as A[p..r].

Divide

If q is the half-way point between p and r, then we can split the subarray A[p..r] into two arrays A[p..q] and A[q+1..r].

Conquer

In the conquer step, we try to sort both the subarrays A[p..q] and A[q+1..r]. If we haven't yet reached the base case, we again divide both these subarrays and try to sort them.

Combine

When the conquer step reaches the base step and we get two sorted subarrays A[p..q] and A[q+1..r] for array A[p..r], we combine the results by creating a sorted array A[p..r] from the two sorted subarrays A[p..q] and A[q+1..r].

Merge Sort Algorithm

The Merge Sort function repeatedly divides the array into two halves until we reach a stage where

we try to perform Merge Sort on a subarray of size 1 i.e. p == r.

After that, the merge function comes into play and combines the sorted arrays into larger arrays

until the whole array is merged.

To sort an entire array, we need to call MergeSort(A, 0, length(A)-1).

In other words, the merge sort algorithm recursively divides the array into halves until it reaches the base case of an array with 1 element. After that, the merge function picks up the sorted sub-arrays and merges them to gradually sort the entire array.

The merge Step of Merge Sort

The merge step is the solution to the simple problem of merging two sorted lists(arrays) to build

one large sorted list(array).

The algorithm maintains three pointers, one for each of the two arrays and one for maintaining the

current index of the final sorted array.

The merge function works as follows:

1. Create copies of the subarrays L ← A[p..q] and M ← A[q+1..r].

2. Create three pointers i, j and k

a. i maintains the current index of L, starting at 1

b. j maintains the current index of M, starting at 1

c. k maintains the current index of A[p..r], starting at p.

3. Until we reach the end of either L or M, pick the smaller among the current elements of L and M and place it in the correct position at A[p..r]

4. When we run out of elements in either L or M, pick up the remaining elements of the other array and put them in A[p..r]
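The merge steps listed above can be sketched as a standalone function. This is an illustrative version that returns a new list instead of writing back into A, and it uses 0-based indices as Python does; the name merge and its parameters are assumptions made here.

```python
def merge(left, right):
    """Merge two already sorted lists into one sorted list."""
    merged = []
    i = j = 0
    # Repeatedly take the smaller of the two current elements.
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # One list is exhausted; copy whatever remains in the other.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge([5, 6, 12], [1, 9, 10]))  # [1, 5, 6, 9, 10, 12]
```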

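def mergesort(array):
    if len(array) > 1:
        # Divide: split the array into two halves.
        q = len(array) // 2
        l = array[:q]
        r = array[q:]
        # Conquer: sort each half recursively.
        mergesort(l)
        mergesort(r)
        # Combine: merge the two sorted halves back into array.
        i = j = k = 0
        while i < len(l) and j < len(r):
            if l[i] < r[j]:
                array[k] = l[i]
                i += 1
            else:
                array[k] = r[j]
                j += 1
            k += 1
        # Copy the elements left over in either half.
        while i < len(l):
            array[k] = l[i]
            i += 1
            k += 1
        while j < len(r):
            array[k] = r[j]
            j += 1
            k += 1

def printlist(a):
    # Print all elements on one line, separated by spaces.
    for i in range(len(a)):
        print(a[i], end=" ")
    print()

array = [6, 5, 12, 10, 9, 1]
print("The unsorted array is", array)
mergesort(array)
print("Sorted array is: ")
printlist(array)

OUTPUT:

The unsorted array is [6, 5, 12, 10, 9, 1]

Sorted array is:

1 5 6 9 10 12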

QUICKSORT ALGORITHM

Quicksort is a sorting algorithm based on the divide and conquer approach, in which an array is divided into subarrays by selecting a pivot element (an element selected from the array).

While dividing the array, the pivot element should be positioned in such a way that

elements less than pivot are kept on the left side and elements greater than pivot are on the

right side of the pivot.

The left and right subarrays are also divided using the same approach. This process

continues until each subarray contains a single element.

At this point, elements are already sorted. Finally, elements are combined to form a sorted

array.

Divide: In Divide, first pick a pivot element. After that, partition or rearrange the array into

two sub-arrays such that each element in the left sub-array is less than or equal to the pivot

element and each element in the right sub-array is larger than the pivot element.

Conquer: Recursively, sort two subarrays with Quicksort.

Combine: Combine the already sorted array.

Quicksort picks an element as pivot, and then it partitions the given array around the picked

pivot element. In quick sort, a large array is divided into two arrays in which one holds

values that are smaller than the specified value (Pivot), and another array holds the values

that are greater than the pivot.

After that, left and right sub-arrays are also partitioned using the same approach. It will

continue until the single element remains in the sub-array.

Working of Quick Sort Algorithm

To understand the working of quick sort, let's take an unsorted array.

Let the elements of the array be [24, 9, 29, 14, 19, 27].

In the given array, we consider the leftmost element as pivot. So, in this case, a[left] = 24, a[right] = 27 and a[pivot] = 24.

Since the pivot is at left, the algorithm starts from right and moves towards left.

Now, a[pivot] < a[right], so the algorithm moves one position towards left.

Now, a[left] = 24, a[right] = 19, and a[pivot] = 24.

Because a[pivot] > a[right], the algorithm swaps a[pivot] with a[right], and the pivot moves to right: [19, 9, 29, 14, 24, 27].

Now, a[left] = 19, a[right] = 24, and a[pivot] = 24. Since the pivot is at right, the algorithm starts from left and moves to right.

As a[pivot] > a[left], the algorithm moves one position to right.

Now, a[left] = 9, a[right] = 24, and a[pivot] = 24. As a[pivot] > a[left], the algorithm moves one position to right.

Now, a[left] = 29, a[right] = 24, and a[pivot] = 24. As a[pivot] < a[left], swap a[pivot] and a[left]; now the pivot is at left: [19, 9, 24, 14, 29, 27].

Since the pivot is at left, the algorithm starts from right and moves to left. Now, a[left] = 24, a[right] = 29, and a[pivot] = 24. As a[pivot] < a[right], the algorithm moves one position to left.

Now, a[pivot] = 24, a[left] = 24, and a[right] = 14. As a[pivot] > a[right], swap a[pivot] and a[right]; now the pivot is at right: [19, 9, 14, 24, 29, 27].

Now, a[pivot] = 24, a[left] = 14, and a[right] = 24. The pivot is at right, so the algorithm starts from left and moves to right.

Now, a[pivot] = 24, a[left] = 24, and a[right] = 24. So, pivot, left and right are pointing to the same element. This marks the termination of the procedure.

Element 24, which is the pivot element, is placed at its exact position.

Elements on the right side of element 24 are greater than it, and the elements on the left side of element 24 are smaller than it.

Now, in a similar manner, the quick sort algorithm is separately applied to the left and right sub-arrays. After sorting is done, the array will be [9, 14, 19, 24, 27, 29].
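The walkthrough above can be sketched in code. This is one possible reading of the procedure, not necessarily the exact scheme used by the example program later in this section; the function names are illustrative, and the sample array [24, 9, 29, 14, 19, 27] is an assumption consistent with the values quoted in the walkthrough.

```python
def partition(a, left, right):
    """Place a[left] (the pivot) at its final position and return that index."""
    pivot = left
    while left < right:
        if pivot == left:
            # Pivot is at the left end: scan from the right towards the left.
            if a[pivot] <= a[right]:
                right -= 1
            else:
                a[pivot], a[right] = a[right], a[pivot]
                pivot = right
        else:
            # Pivot is at the right end: scan from the left towards the right.
            if a[pivot] >= a[left]:
                left += 1
            else:
                a[pivot], a[left] = a[left], a[pivot]
                pivot = left
    return pivot


def quick_sort(a, left=0, right=None):
    """Sort a in place by partitioning and recursing on both sides."""
    if right is None:
        right = len(a) - 1
    if left < right:
        p = partition(a, left, right)
        quick_sort(a, left, p - 1)
        quick_sort(a, p + 1, right)
    return a


data = [24, 9, 29, 14, 19, 27]
print(partition(data, 0, len(data) - 1), data)  # 3 [19, 9, 14, 24, 29, 27]
print(quick_sort(data))                          # [9, 14, 19, 24, 27, 29]
```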

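Quicksort complexity

1. Time Complexity

| Case | Time Complexity |
|---|---|
| Best Case | O(n*logn) |
| Average Case | O(n*logn) |
| Worst Case | O(n²) |

2. Space Complexity

| Space Complexity | O(logn) on average for the recursion stack, O(n) in the worst case |
|---|---|

Example Program: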

In Quicksort, an array is split into smaller arrays around a pivot (an item taken from the array) using the divide-and-conquer strategy.

When splitting the given array, the pivot should be positioned so that items with a value greater than the pivot are located on its right side, and items with a value less than the pivot are located on its left side.

The same method is used to divide the right and left subarrays. This procedure is repeated until only one element remains in each subarray.

The elements are sorted at this point. Combining the subarrays gives the sorted array.

def quicksort(number, first, last):
    if first < last:
        pivot = first
        i = first + 1
        j = last
        while i <= j:
            # Move i right past the elements not greater than the pivot.
            while i <= last and number[i] <= number[pivot]:
                i = i + 1
            # Move j left past the elements greater than the pivot.
            while j > first and number[j] > number[pivot]:
                j = j - 1
            if i < j:
                number[i], number[j] = number[j], number[i]
        # j is now the final position of the pivot.
        number[pivot], number[j] = number[j], number[pivot]
        quicksort(number, first, j - 1)
        quicksort(number, j + 1, last)

data = [1, 7, 4, 1, 10, 9, 2]
print("Unsorted Array")
print(data)
size = len(data)
quicksort(data, 0, size - 1)
print('Sorted Array in Ascending Order:')
print(data)

OUTPUT:

Unsorted Array

[1, 7, 4, 1, 10, 9, 2]

Sorted Array in Ascending Order:

[1, 1, 2, 4, 7, 9, 10]

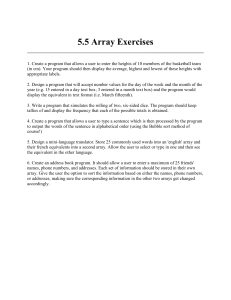

Let's see a complexity analysis of different sorting algorithms.

| Sorting Algorithm | Time Complexity - Best | Time Complexity - Worst | Time Complexity - Average | Space Complexity |
|---|---|---|---|---|
| Bubble Sort | n | n² | n² | 1 |
| Selection Sort | n² | n² | n² | 1 |
| Insertion Sort | n | n² | n² | 1 |
| Merge Sort | n log n | n log n | n log n | n |
| Quicksort | n log n | n² | n log n | log n |

SEARCHING

Searching is an operation or technique that finds the position of a given element or value in a list. A search is said to be successful or unsuccessful depending on whether the element being searched for is found or not. Some of the standard searching techniques used with data structures are listed below:

Linear Search or Sequential Search

Binary Search

Linear Search

Linear Search is a sequential search algorithm that starts at one end of a list and goes through each element until the desired element is found; if it is not found, the search continues till the end of the data set. It is the simplest searching algorithm.

Working of Linear search

Now, let's see the working of the linear search algorithm with an example.

Take an unsorted array, and let the element to be searched be K = 41.

Now, start from the first element and compare K with each element of the array.

The value of K, i.e., 41, does not match the first element of the array. So, move to the next element, and follow the same process until the element is found.

Once the element to be searched is found, the algorithm returns the index of the matched element.

Linear Search complexity

Now, let's see the time complexity of linear search in the best case, average case, and worst case. We will also see the space complexity of linear search.

Time Complexity

| Case | Time Complexity |
|---|---|
| Best Case | O(1) |
| Average Case | O(n) |
| Worst Case | O(n) |

Space Complexity

| Space Complexity | O(1) |
|---|---|

Example program:

def linear_Search(list1, n, key):
    # Compare key with each element in turn.
    for i in range(0, n):
        if list1[i] == key:
            return i
    # key is not in the list.
    return -1

list1 = [1, 3, 5, 4, 7, 9]
key = 3
n = len(list1)
res = linear_Search(list1, n, key)
if res == -1:
    print("Element not found")
else:
    print("Element found at index:", res)

OUTPUT:

Element found at index: 1

BINARY SEARCH:

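Binary search works on a sorted array: it compares the key with the middle element and continues in the left or the right half, so the search interval is halved at each step. The recursive method of binary search follows the divide and conquer approach.

Let the element to search be K = 56 in a sorted array of 9 elements.

We use the formula mid = (beg + end)/2 (integer division) to calculate the mid of the array.

So, in the given array -

beg = 0

end = 8

mid = (0 + 8)/2 = 4. So, 4 is the mid of the array.

K is compared with the element at mid. If they differ, beg or end is moved past mid and the step is repeated on the remaining half. When the element to search is found, the algorithm returns the index of the matched element.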
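A minimal sketch of the recursive method described above. The function name and the sample array are illustrative assumptions (the original figure is not reproduced here); the array is simply a sorted list of 9 values that contains 56.

```python
def binary_search_recursive(arr, key, beg=0, end=None):
    """Return the index of key in the sorted list arr, or -1 if absent."""
    if end is None:
        end = len(arr) - 1
    if beg > end:
        # Empty interval: key is not present.
        return -1
    mid = (beg + end) // 2
    if arr[mid] == key:
        return mid
    if arr[mid] < key:
        # key can only be in the right half.
        return binary_search_recursive(arr, key, mid + 1, end)
    # key can only be in the left half.
    return binary_search_recursive(arr, key, beg, mid - 1)


# Hypothetical sorted array with beg = 0 and end = 8.
sample = [10, 12, 24, 29, 39, 40, 51, 56, 69]
print(binary_search_recursive(sample, 56))  # 7
```

With this sample the search visits mid = 4, then mid = 6, then mid = 7. Note that the recursive form uses O(log n) stack space, whereas the iterative form needs O(1).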

Binary Search complexity

Now, let's see the time complexity of binary search in the best case, average case, and worst case. We will also see the space complexity of binary search.

Time Complexity

| Case | Time Complexity |
|---|---|
| Best Case | O(1) |
| Average Case | O(logn) |
| Worst Case | O(logn) |

Space Complexity

| Space Complexity | O(1) |
|---|---|

Example Program:

def binary_search(list1, key):
    low = 0
    high = len(list1) - 1
    mid = 0
    while low <= high:
        mid = (high + low) // 2
        if list1[mid] < key:
            # key can only be in the right half.
            low = mid + 1
        elif list1[mid] > key:
            # key can only be in the left half.
            high = mid - 1
        else:
            return mid
    # key is not in the list.
    return -1

# Initial list1 (must be sorted)
list1 = [12, 24, 32, 39, 45, 50, 54]
key = 45

# Function call
result = binary_search(list1, key)
if result != -1:
    print("Element is present")
else:
    print("Element is not present in list1")

OUTPUT:

Element is present

HASHING:

Hashing is a technique or process of mapping keys and values into a hash table by using a hash function. It is done for faster access to elements. The efficiency of the mapping depends on the efficiency of the hash function used.

Hash Table

The Hash table data structure stores elements in key-value pairs where

Key- unique integer that is used for indexing the values

Value - data that are associated with keys.

Key and Value in Hash table

HASH FUNCTION:

A Hash Function is a function that converts a given numeric or alphanumeric key to a

small practical integer value.

The mapped integer value is used as an index in the hash table.

In simple terms, a hash function maps a large number or string to a small integer that can be used as the index in the hash table.

The pair is of the form (key, value), where for a given key, one can find a value using some kind of a “function” that maps keys to values.

The index for a given key is calculated using a function called a hash function. For example, given an array A, if i is the index computed for the key, then we can find the value by simply looking up A[i].

Types of Hash functions

Methods to find Hash Function:

1. Folding Method.

2. Mid-Square Method.

3. Digit or Character extraction method

4. Pseudo random number generator method

5. Modulo Method.

1. Folding method

This method involves splitting keys into two or more parts each of which has the same length as

the required address and then adding the parts to form the hash function.

Two types

1. Fold shifting method

2. Fold boundary method

1.1 Fold shifting method

key = 123203241

Partition key into 3 parts of equal length.

123, 203 & 241

Add these three parts

123+203+241 = 567 is the hash value

1.2 Fold boundary method

Similar to fold shifting, except that the boundary parts (the first and the last) are reversed.

123203241 is the key

3 parts 123, 203, 241

Reverse the boundary partitions

321, 203, 142

321 + 203 + 142 = 666 is the hash value
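Both folding variants can be sketched as follows. The helper names and the part_len parameter are illustrative assumptions; in the boundary variant only the first and last parts are reversed, which is what yields the hash value 666 for the sample key.

```python
def split_parts(key, part_len):
    """Split the decimal digits of key into parts of part_len digits."""
    digits = str(key)
    return [digits[i:i + part_len] for i in range(0, len(digits), part_len)]


def fold_shift(key, part_len):
    """Fold shifting: add the parts as they are."""
    return sum(int(part) for part in split_parts(key, part_len))


def fold_boundary(key, part_len):
    """Fold boundary: reverse the first and last parts, then add."""
    parts = split_parts(key, part_len)
    if len(parts) > 1:
        parts[0] = parts[0][::-1]
        parts[-1] = parts[-1][::-1]
    return sum(int(part) for part in parts)


print(fold_shift(123203241, 3))     # 123 + 203 + 241 = 567
print(fold_boundary(123203241, 3))  # 321 + 203 + 142 = 666
```

If the sum can exceed the address range, a common follow-up step is to reduce it modulo the table size.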

2. Mid square method

The key is squared and the middle part of the result is taken as the hash value, based on the number of digits required for addressing.

H(X) = middle digits of X²

• For example:

– Map the key 2453 into a hash table of size 1000.

– Now X = 2453 and X² = 6017209

– The extracted middle value “172” is the hash value
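A small sketch of the mid-square computation. The function name and the num_digits parameter are assumptions made for illustration; for a table of size 1000, three digits are needed.

```python
def mid_square(key, num_digits):
    """Square the key and return its middle num_digits digits as an integer."""
    squared = str(key * key)
    start = (len(squared) - num_digits) // 2
    return int(squared[start:start + num_digits])


print(mid_square(2453, 3))  # 2453 * 2453 = 6017209, middle digits 172
```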

3. Digit or Character extraction method

This method extracts the selected digits from the key

Example:

Map the key 123203241 to a hash table size of 1000

Select the digits from the positions 2, 5, 8.

Now the hash value = 204
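The extraction can be sketched in a few lines. The function name is illustrative, and positions are counted from 1 starting at the leftmost digit, as in the example.

```python
def extract_digits(key, positions):
    """Build the hash value from the digits of key at the given 1-based positions."""
    digits = str(key)
    return int("".join(digits[p - 1] for p in positions))


print(extract_digits(123203241, [2, 5, 8]))  # digits 2, 0, 4 give 204
```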

4. Pseudo random number generator method

This method generates a random number using the key as the seed; the resulting random number is then scaled into the possible address range using modulo division.

In this way the random number produced is transformed into a hash value.
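A rough sketch of the idea, assuming a simple linear congruential generator y = (a * key + c) mod m as the pseudo random step. The constants a, c and m below are illustrative choices, not values given in these notes.

```python
def pseudo_random_hash(key, table_size, a=1103515245, c=12345, m=2**31):
    """Use the key as the seed of one LCG step, then scale by modulo division."""
    y = (a * key + c) % m   # pseudo random number derived from the key
    return y % table_size   # scaled into the address range 0..table_size-1


h = pseudo_random_hash(123203241, 1000)
print(h)
```

The same key always produces the same address, which is what makes a seeded generator usable as a hash function.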

5. Modulo Method:

1. Computes the hash value from the key using the % operator.

2. H(key) = Key % Table_size

If the input keys are integers, then simply Key mod TableSize is a general strategy.

For example:

– Map the key 4 into a hash table of size 8

– H(4) = 4 % 8 = 4

COLLISION HANDLING:

A collision is a situation in which the hash function returns the same hash key for more than one record/key value.

The process of finding another position for the colliding record is called the collision resolution strategy.

Example: 37, 24, 7 (table size n=5). With H(key) = key % 5, the keys 37 and 7 both hash to 2, so they collide; 24 hashes to 4.
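The example can be checked with a short sketch that groups keys by their modulo hash value. The function name is illustrative, and H(key) = key % table_size is assumed as the hash function.

```python
def find_collisions(keys, table_size):
    """Return {index: [keys]} for every index that more than one key hashes to."""
    slots = {}
    for key in keys:
        slots.setdefault(key % table_size, []).append(key)
    return {index: ks for index, ks in slots.items() if len(ks) > 1}


print(find_collisions([37, 24, 7], 5))  # {2: [37, 7]}
```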

There are various Collision Resolution Techniques:

1. Separate Chaining/Open Hashing.

2. Open Addressing/Closed Hashing.

3. Rehashing.

4. Extendible Hashing

1. OPEN HASHING/SEPARATE CHAINING

It is an open hashing technique.

The first strategy, commonly known as either open hashing or separate chaining, is to keep a list of all elements that hash to the same value.

Each bucket in the hash table is the head of a linked list. All elements that hash to the same value are linked together.

For convenience, our lists have headers.

A pointer field is added to each record location. When an overflow occurs, this pointer is set to point to overflow blocks, making a linked list.

Example:

10, 11, 81, 7, 34, 94, 17, 29,89

n=10
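A minimal sketch of separate chaining for the keys above, assuming H(key) = key % 10 and using Python lists in place of linked lists. New keys are appended at the end of a chain here; inserting at the head is an equally common choice.

```python
def build_chained_table(keys, table_size):
    """Insert every key into the chain of the bucket it hashes to."""
    table = [[] for _ in range(table_size)]
    for key in keys:
        table[key % table_size].append(key)
    return table


def chained_search(table, key):
    """Hash to the bucket, then traverse only that chain."""
    return key in table[key % len(table)]


table = build_chained_table([10, 11, 81, 7, 34, 94, 17, 29, 89], 10)
for index, chain in enumerate(table):
    print(index, chain)
print(chained_search(table, 94), chained_search(table, 44))  # True False
```

Buckets 1, 4, 7 and 9 each hold two keys (11 and 81, 34 and 94, 7 and 17, 29 and 89), while buckets 2, 3, 5, 6 and 8 stay empty.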

Advantages:

1. More elements than the table size can be inserted.

2. Collision resolution is simple and efficient.

Disadvantages:

1. It requires pointers, which occupy more space.

2. It takes more effort to perform a search, since it takes time to evaluate the hash function and also to traverse the list.

3. Elements are not evenly distributed.

2. OPEN ADDRESSING/CLOSED HASHING

Open Addressing:

It is a closed hashing technique.

In this method, if collision occurs, alternative cells are tried until an empty cell is found.

There are three common methods

1. Linear probing

2. Quadratic probing

3. Double hashing

2.1. Linear probing

Probing is the process of getting the next available hash table array cell.

Whenever a collision occurs, cells are searched sequentially for an empty cell.

When two records/key values have the same hash key, the second record is placed linearly down the table (wrapping around) at the first empty index found.

If a collision occurs, a new hash value is computed based on a linear function: Hi(X) = (Hash(X) + i) mod Tablesize

Example:

To insert 42, 39, 69, 21, 71, 55 into a hash table of size 10 using linear probing:

1. H0(42) = 42 % 10 = 2

2. H0(39) = 39 % 10 = 9

3. H0(69) = 69 % 10 = 9 collides with 39

H1(69) = (9 + 1) % 10 = 10 % 10 = 0

4. H0(21) = 21 % 10 = 1

5. H0(71) = 71 % 10 = 1 collides with 21

H1(71) = (1 + 1) % 10 = 2 % 10 = 2 collides with 42

H2(71) = (1 + 2) % 10 = 3 % 10 = 3

6. H0(55) = 55 % 10 = 5

//Closed hash table with linear probing, after each insertion
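The insertions above can be reproduced with a short sketch. The function name is illustrative; empty cells are represented by None and Hash(X) = X % Tablesize is assumed.

```python
def linear_probe_insert(table, key):
    """Insert key using Hi(X) = (Hash(X) + i) mod Tablesize; return the index used."""
    size = len(table)
    home = key % size
    for i in range(size):
        index = (home + i) % size
        if table[index] is None:
            table[index] = key
            return index
    raise RuntimeError("hash table is full")


table = [None] * 10
positions = [linear_probe_insert(table, k) for k in [42, 39, 69, 21, 71, 55]]
print(positions)  # [2, 9, 0, 1, 3, 5]
print(table)
```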

Advantages

1. It doesn't require pointers.

Disadvantages

1. It suffers from primary clustering: it forms clusters that degrade the performance of the hash table.

2. Increased search time.

2.2. QUADRATIC PROBING:

Quadratic probing is a collision resolution method that eliminates the primary clustering

problem of linear probing.

(Primary clustering is one of two major failure modes of open addressing based hash tables,

especially those using linear probing. Whenever another record is hashed to anywhere within

the cluster, it grows in size by one cell.)

If a collision occurs, a new hash value is computed based on a quadratic function, i.e., Hi(X) = (Hash(X) + i²) mod Tablesize

Example: To insert 89, 18, 49, 58, 69 into a hash table of size 10 using quadratic probing.

1. H0(89) = 89 % 10 = 9

2. H0(18) = 18 % 10 = 8

3. H0(49) = 49 % 10 = 9 collides with 89

H1(49) = (9 + 1²) % 10 = 10 % 10 = 0

4. H0(58) = 58 % 10 = 8 collides with 18

H1(58) = (8 + 1²) % 10 = 9 % 10 = 9 collides with 89

H2(58) = (8 + 2²) % 10 = 12 % 10 = 2

5. H0(69) = 69 % 10 = 9 collides with 89

H1(69) = (9 + 1²) % 10 = 10 % 10 = 0 collides with 49

H2(69) = (9 + 2²) % 10 = 13 % 10 = 3

Disadvantages:

It faces secondary clustering: keys that hash to the same initial position follow the same probe sequence.

An empty slot may be difficult to find once the table is more than half full.

Keys will not be distributed evenly.
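The quadratic probing example for 89, 18, 49, 58, 69 can be traced with a sketch like the following. The function name is illustrative, and the loop simply gives up after Tablesize probes, which is an assumption made here to keep the sketch finite.

```python
def quadratic_probe_insert(table, key):
    """Insert key using Hi(X) = (Hash(X) + i*i) mod Tablesize; return the index used."""
    size = len(table)
    home = key % size
    for i in range(size):
        index = (home + i * i) % size
        if table[index] is None:
            table[index] = key
            return index
    raise RuntimeError("no empty cell found on the probe sequence")


table = [None] * 10
positions = [quadratic_probe_insert(table, k) for k in [89, 18, 49, 58, 69]]
print(positions)  # [9, 8, 0, 2, 3]
```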

2.3. Double Hashing

1. It uses the idea of applying a second hash function to the key when a collision occurs.

2. The result of the second hash function will be the number of positions from the point of

collision to insert.

Hi(x) = (Hash(X) + i * Hash2(X) ) mod Tablesize

A popular second hash function is Hash2(X) = R – (X % R), where R is a prime number smaller than the table size

Insert: 89, 18, 49, 58, 69 using Hash2(X) = R – (X % R) and R = 7

Here Hash(X) = X % 10 & Hash2(X) = 7 – (X % 7)

1. H0(89) = 89 % 10 = 9

2. H0(18) = 18 % 10 = 8

3. H0(49) = 49 % 10 = 9 collides with 89

H1(49) = ((49 % 10 ) + 1 * (7- (49 % 7)) ) % 10 = 16 % 10 = 6

4. H0(58) = 58 % 10 = 8 collides with 18

H1(58) = ((58 % 10 ) + 1 * (7- (58 % 7)) ) % 10 = 13 % 10 = 3

5. H0(69) = 69 % 10 = 9 collides with 89

H1(69) = ((69 % 10 ) + 1 * (7- (69 % 7)) ) % 10 = 10 % 10 = 0
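The double hashing example can be sketched as below, with Hash(X) = X % Tablesize and Hash2(X) = R - (X % R) as in the text. The function name and the default r=7 parameter are illustrative.

```python
def double_hash_insert(table, key, r=7):
    """Insert key using Hi(X) = (Hash(X) + i * Hash2(X)) mod Tablesize."""
    size = len(table)
    home = key % size
    step = r - (key % r)   # Hash2(X); always between 1 and r, never 0
    for i in range(size):
        index = (home + i * step) % size
        if table[index] is None:
            table[index] = key
            return index
    raise RuntimeError("no empty cell found on the probe sequence")


table = [None] * 10
positions = [double_hash_insert(table, k) for k in [89, 18, 49, 58, 69]]
print(positions)  # [9, 8, 6, 3, 0]
```

Because the step depends on the key, 49 and 69 (which both start at index 9) follow different probe sequences, unlike in linear or quadratic probing.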

| S.No. | Separate Chaining | Open Addressing |
|---|---|---|
| 1. | Chaining is simpler to implement. | Open addressing requires more computation. |
| 2. | In chaining, the hash table never fills up; we can always add more elements to a chain. | In open addressing, the table may become full. |
| 3. | Chaining is less sensitive to the hash function or load factors. | Open addressing requires extra care to avoid clustering and a high load factor. |
| 4. | Chaining is mostly used when it is unknown how many and how frequently keys may be inserted or deleted. | Open addressing is used when the frequency and number of keys is known. |
| 5. | Cache performance of chaining is not good, as keys are stored using linked lists. | Open addressing provides better cache performance, as everything is stored in the same table. |
| 6. | Wastage of space (some parts of the hash table in chaining are never used). | In open addressing, a slot can be used even if an input doesn’t map to it. |
| 7. | Chaining uses extra space for links. | No links in open addressing. |

LOAD FACTOR AND REHASHING

Complexity and Load Factor

If there are n entries and b is the size of the array, there would be n/b entries at each index on average. This value n/b is called the load factor; it represents the load on our map.

The load factor needs to be kept low, so that the number of entries at one index stays small and the complexity remains almost constant, i.e., O(1).

REHASHING:

1. It is a closed hashing technique.

2. If the table gets too full, the rehashing method builds a new table that is about twice as big, scans down the entire original hash table, computes the new hash value for each element and inserts it in the new table.

3. Rehashing is expensive: the running time is O(N), since there are N elements to rehash and the table size is roughly 2N.

Rehashing can be implemented in several ways, such as:

1. Rehash as soon as the table is half full

2. Rehash only when an insertion fails

Example: Suppose the elements 13, 15, 24, 6 are inserted into an open addressing hash table of size 7, and linear probing is used when a collision occurs.

0 6

1 15

2

3 24

4

5

6 13

If 23 is inserted, the resulting table will be over 70 percent full.

0 6

1 15

2 23

3 24

4

5

6 13

A new table is created. The size of the new table is 17, as this is the first prime number greater than twice the old table size.
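The rehashing example can be sketched as follows, assuming Hash(X) = X % Tablesize with linear probing and the load factor n/b described earlier. All function names are illustrative.

```python
def is_prime(n):
    """Trial-division primality test, sufficient for small table sizes."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def next_prime_after(n):
    """Smallest prime strictly greater than n."""
    candidate = n + 1
    while not is_prime(candidate):
        candidate += 1
    return candidate


def insert(table, key):
    """Linear probing insert; returns the index used."""
    size = len(table)
    for i in range(size):
        index = (key % size + i) % size
        if table[index] is None:
            table[index] = key
            return index
    raise RuntimeError("hash table is full")


def load_factor(table):
    """Number of stored keys divided by the table size (n/b)."""
    return sum(cell is not None for cell in table) / len(table)


def rehash(old_table):
    """Scan the old table and reinsert every key into a table about twice as big."""
    new_table = [None] * next_prime_after(2 * len(old_table))
    for key in old_table:
        if key is not None:
            insert(new_table, key)
    return new_table


old_table = [None] * 7
for k in [13, 15, 24, 6, 23]:
    insert(old_table, k)
print(old_table, round(load_factor(old_table), 2))  # over 70 percent full
new_table = rehash(old_table)
print(len(new_table), round(load_factor(new_table), 2))
```

After rehashing, the five keys occupy a table of size 17, so the load factor drops from about 0.71 to about 0.29.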

Advantages

1. Programmer doesn’t worry about the table size

2. Simple to implement

Why rehashing?

Rehashing is done because whenever key-value pairs are inserted into the map, the load factor increases, which implies that the time complexity also increases, as explained above.

This might not give the required time complexity of O(1).

Hence, a rehash must be done, increasing the size of the bucket array so as to reduce the load factor and the time complexity.