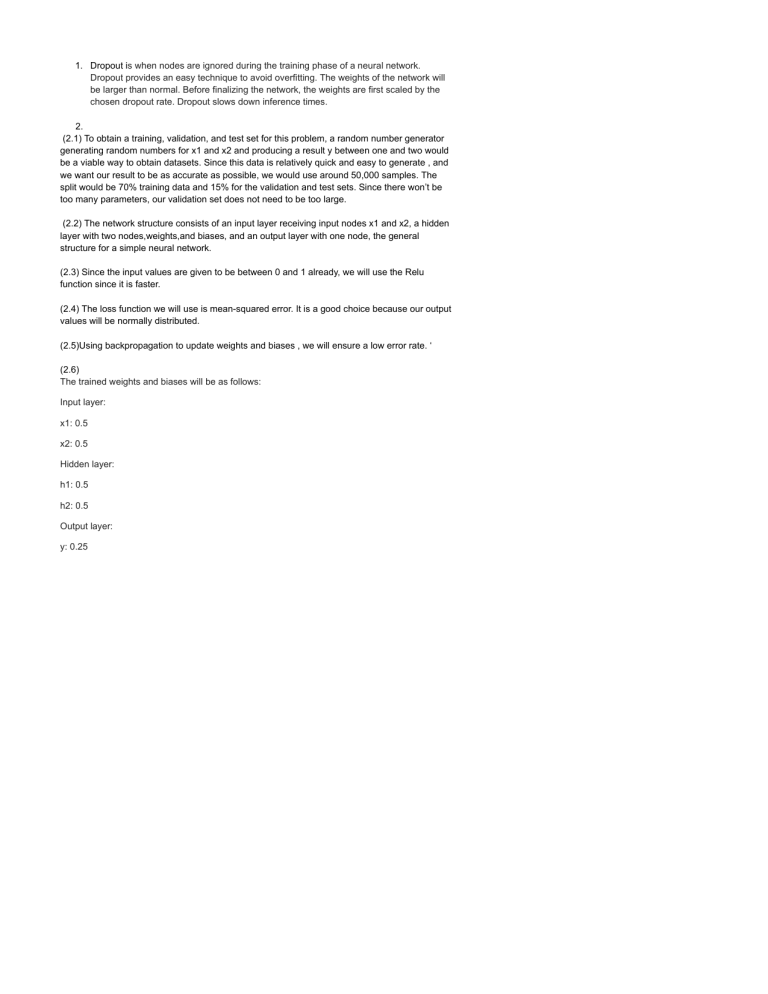

1. Dropout is a regularization technique in which randomly selected nodes are temporarily ignored ("dropped out") during the training phase of a neural network. It provides an easy way to reduce overfitting. Because each node is present only part of the time during training, the learned weights end up larger than they would be without dropout. Before the network is finalized, the weights are therefore scaled by the retention probability (1 minus the chosen dropout rate). Dropout does not slow down inference, because it is switched off at test time. It can, however, increase the number of training epochs needed to converge.

2. (2.1) To obtain training, validation, and test sets for this problem, we can use a random number generator to draw values for x1 and x2 and compute the corresponding result y, which lies between one and two. Since this data is quick and easy to generate and we want the result to be as accurate as possible, we would use around 50,000 samples, split into 70% training data and 15% each for the validation and test sets. Because the network has only a few parameters, the validation set does not need to be large.

   (2.2) The network consists of an input layer with two nodes (x1 and x2), a hidden layer with two nodes, each with its own weights and bias, and an output layer with one node. This is the general structure of a simple feedforward neural network.

   (2.3) Since the input values are already between 0 and 1, no further input scaling is needed, and we will use the ReLU activation function in the hidden layer because it is cheap to compute.

   (2.4) The loss function will be mean squared error (MSE). It is a good choice for this regression problem with a continuous target: minimizing MSE corresponds to maximum-likelihood estimation when the prediction errors are assumed to be normally distributed.

   (2.5) We will train the network with backpropagation, which computes the gradient of the loss with respect to every weight and bias, and update those parameters by gradient descent to drive the error down.

   (2.6) The trained weights and biases will be as follows:
   - Input layer: x1: 0.5, x2: 0.5
   - Hidden layer: h1: 0.5, h2: 0.5
   - Output layer: y: 0.25
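The training-time and inference-time behaviour of dropout described in part 1 can be sketched in a few lines of Python. This is a minimal sketch of classic (non-inverted) dropout applied to a list of hidden activations; the function names `dropout_train`, `dropout_inference`, and `mean_train_output` are hypothetical. Scaling the activations by the keep probability at inference is equivalent to scaling the weights that leave those units.

```python
import random

def dropout_train(activations, rate, rng):
    """Training: drop (zero) each activation independently with probability `rate`."""
    return [0.0 if rng.random() < rate else a for a in activations]

def dropout_inference(activations, rate):
    """Inference (classic dropout): keep every unit, but scale by the keep
    probability 1 - rate so the next layer sees the same expected input as
    during training. Scaling the outgoing weights instead is equivalent."""
    keep = 1.0 - rate
    return [a * keep for a in activations]

def mean_train_output(activations, rate, trials, seed=0):
    """Average the training-time outputs over many random dropout masks."""
    rng = random.Random(seed)
    totals = [0.0] * len(activations)
    for _ in range(trials):
        for i, a in enumerate(dropout_train(activations, rate, rng)):
            totals[i] += a
    return [t / trials for t in totals]

if __name__ == "__main__":
    h = [1.0, 2.0, 3.0]
    print(dropout_inference(h, 0.5))             # [0.5, 1.0, 1.5]
    print(mean_train_output(h, 0.5, 100_000))    # close to [0.5, 1.0, 1.5]
```

The averaged training outputs approach the scaled inference outputs, which is why the rescaling is needed. Many frameworks instead use "inverted" dropout, dividing the kept activations by the keep probability during training so that no rescaling happens at inference; in both variants the inference pass costs the same as a network without dropout.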
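Parts 2.1 to 2.5 can be tied together in a small pure-Python sketch: it draws x1 and x2 uniformly from [0, 1] with a 70/15/15 split, builds the 2-2-1 network with a ReLU hidden layer and a linear output node, and trains it with backpropagation and stochastic gradient descent on the squared error. The real target function comes from the problem statement, so `target_fn` below is a hypothetical placeholder that maps [0, 1] × [0, 1] into [1, 2]. The learning rate, epoch count, initialization range, seeds, and all function and class names are likewise assumptions, not part of the original answer.

```python
import random

def target_fn(x1, x2):
    # HYPOTHETICAL placeholder target: the real function comes from the
    # problem statement. This one maps [0, 1] x [0, 1] into [1, 2].
    return 1.0 + 0.5 * (x1 + x2)

def make_splits(n_samples=50_000, seed=0):
    """Sample x1, x2 uniformly from [0, 1] and split 70% / 15% / 15%."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_samples):
        x1, x2 = rng.random(), rng.random()
        data.append(((x1, x2), target_fn(x1, x2)))
    n_train = n_samples * 70 // 100
    n_val = n_samples * 15 // 100
    return (data[:n_train],
            data[n_train:n_train + n_val],
            data[n_train + n_val:])

def relu(z):
    return z if z > 0.0 else 0.0

class TinyNet:
    """2 inputs -> 2 ReLU hidden units -> 1 linear output unit."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # W1[j][i] is the weight from input i to hidden unit j.
        self.W1 = [[rng.uniform(0.1, 1.0) for _ in range(2)] for _ in range(2)]
        self.b1 = [0.0, 0.0]
        self.W2 = [rng.uniform(0.1, 1.0) for _ in range(2)]
        self.b2 = 0.0

    def forward(self, x):
        z = [self.W1[j][0] * x[0] + self.W1[j][1] * x[1] + self.b1[j]
             for j in range(2)]
        h = [relu(zj) for zj in z]
        y_hat = self.W2[0] * h[0] + self.W2[1] * h[1] + self.b2
        return z, h, y_hat

    def predict(self, x):
        return self.forward(x)[2]

    def sgd_step(self, x, y, lr):
        """Backpropagate the squared error of one sample and take a
        gradient-descent step on every weight and bias."""
        z, h, y_hat = self.forward(x)
        d_out = 2.0 * (y_hat - y)  # dL/dy_hat for L = (y_hat - y)^2
        for j in range(2):
            # Gradient reaching hidden unit j, computed before W2[j] changes;
            # ReLU passes it through only where the pre-activation was positive.
            d_z = d_out * self.W2[j] if z[j] > 0.0 else 0.0
            self.W2[j] -= lr * d_out * h[j]
            self.b1[j] -= lr * d_z
            for i in range(2):
                self.W1[j][i] -= lr * d_z * x[i]
        self.b2 -= lr * d_out

def mse(net, data):
    """Mean squared error of the network over a list of (x, y) pairs."""
    return sum((net.predict(x) - y) ** 2 for x, y in data) / len(data)

def train(net, train_data, epochs=5, lr=0.01, seed=0):
    rng = random.Random(seed)
    data = list(train_data)
    for _ in range(epochs):
        rng.shuffle(data)  # visit the samples in a new order each epoch
        for x, y in data:
            net.sgd_step(x, y, lr)
    return net

if __name__ == "__main__":
    train_set, val_set, test_set = make_splits()
    net = train(TinyNet(), train_set)
    print("validation MSE:", mse(net, val_set))
    print("test MSE:", mse(net, test_set))
```

Running the script prints the validation and test MSE. The learned values in `net.W1`, `net.b1`, `net.W2`, and `net.b2` depend on the target function, the initialization, and the random seeds, so the validation set is what tells you whether training has actually reached a low error.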