Qamrul Hasan Ansari

Advanced Functional Analysis

Advanced Functional Analysis

Qamrul Hasan Ansari

Department of Mathematics

Aligarh Muslim University, Aligarh

E-mail: qhansari@gmail.com

Page 1

SYLLABUS

M.A. / M.Sc. II SEMESTER

ADVANCED FUNCTIONAL ANALYSIS

Course Title

Course Number

Credits

Course Category

Prerequisite Courses

Contact Course

Type of Course

Course Assessment

End Semester Examination

Course Objectives

Course Outcomes

Advanced Functional Analysis

MMM-2009

4

Compulsory

Functional Analysis, Linear Algebra, Real Analysis

4 Lecture + 1 Tutorial

Theory

Sessional (1 hour) 30%

(2:30 hrs) 70%

To discuss some advanced topics from Functional Analysis, namely

orthogonality, orthonormal bases, orthogonal projections, bilinear forms,

spectral theory of continuous linear operators,

differential calculus on normed spaces, geometry of Banach spaces.

These topics play central role in research and

advancement of various topics in mathematics.

After undertaking this course, students will understand:

◮ spectral theory of continuous linear operators

◮ orthogonality, orthogonal complements, orthonormal bases

◮ orthogonal projection, bilinear form and Lax-Milgram lemma

◮ differential calculus on normed spaces

◮ geometry of Banach spaces

2

Qamrul Hasan Ansari

Advanced Functional Analysis

Syllabus

UNIT I: Orthogonality, Orthonormal Bases, Orthogonal Projection

and Bilinear Forms

Orthogonality, Orthogonal complements, Orthonormal Bases,

Orthogonal projections, Projection theorem,

Projection on convex stes, Sesquilinear forms,

Bilinear forms and their basic properties, Lax-Milgram lemma

UNIT II: Spectral Theory of Continuous Linear Operators

Eigenvalues and eigenvectors, Resolvent operators, Spectrum,

Spectral properties of bounded linear operators,

Compact linear operators on normed spaces,

Finite dimensional domain and range, Sequence of compact linear operators,

Weak convergence, Spectral theory of compact linear operators

UNIT III: Differential Calculus on Normed Spaces

Gâteaux derivative, Gradient of a function, Fréchet derivative,

Chain rule, Mean value theorem, Properties of Gâteaux and Fréchet derivatives,

Taylor’s formula, Subdifferential and its properties

UNIT IV: Geometry of Banach Spaces

Strict convexity, Modulus of convexity, Uniform convexity,

Duality mapping and its properties

Smooth Banach spaces, Modulus of smoothness

Total

Page 3

No. of Lectures

14

13

14

15

56

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 4

Recommended Books:

1. Q. H. Ansari: Topics in Nonlinear Analysis and Optimization, World Education, Delhi,

2012.

2. Q. H. Ansari, C. S. Lalitha and M. Mehta: Generalized Convexity, Nonsmooth Variational

and Nonsmooth Optimization, CRC Press, Taylor and Francis Group, Boca Raton, London,

New York, 2014.

3. C. Chidume: Geometric Properties of Banach Spaces and Nonlinear Iterations, Springer,

London, 2009.

4. M. C. Joshi and R. K. Bose: Some Topics in Nonlinear Functional Analysis, Wiley Eastern

Limited, New Delhi, 1985.

5. E. Kreyazig: Introductory Functional Analysis with Applications, John Wiley and Sons, New

York, 1989.

6. M. T. Nair: Functional Analysis: A First Course, Prentice-Hall of India Private Limited,

New Delhi, 2002.

7. A. H. Siddiqi: Applied Functional Analysis, CRC Press, London, 2003.

1

Orthogonality, Orthonormal Bases,

Orthogonal Projection and Bilinear Forms

Throughout these notes, 0 denotes the zero vector of the corresponding vector space, and

h., .i denotes the inner product on an inner product space.

1.1

1.1.1

Orthogonality and Orthonormal Bases

Orthogonality

One of the major differences between an inner product and a normed space is that in an

inner product space we can talk about the angle between two vectors.

Definition 1.1.1. The angle θ between two vectors x and y of an inner product space X is

defined by the following relation:

cos θ =

hx, yi

.

kxk kyk

(1.1)

Definition 1.1.2. Let X be an inner product space whose inner product is denoted by h., .i.

(a) Two vectors x and y in X are said to be orthogonal if hx, yi = 0. When two vectors x

and y are orthogonal, we denoted by x ⊥ y.

(b) A vector x ∈ X is said to be orthogonal to a nonempty subset A of X, denoted by

x⊥A, if hx, yi = 0 for all y ∈ A.

5

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 6

(c) Let A be a nonempty subset of X. The set of all vectors orthogonal to A, denoted by

A⊥ , is called the orthogonal complement of A, that is,

A⊥ = {x ∈ X : hx, yi = 0 for all y ∈ A}.

A⊥⊥ = (A⊥ )⊥ denotes the orthogonal complement of A⊥ , that is,

A⊥⊥ = (A⊥ )⊥ = {x ∈ X : hx, yi = 0 for all y ∈ A⊥ }.

(c) Two subsets A and B of X are said to be orthogonal, denoted by A⊥B, if hx, yi = 0

for all x ∈ A and all y ∈ B.

Clearly, x and y are orthogonal if and only if the angle θ between is 90◦ , that is, cos θ = 0

which is equivalent to (in view of (1.1)) hx, yi = 0 ⇔ x ⊥ y.

Remark 1.1.1. (a) Since hx, yi = hy, xi (conjugate of hy, xi) hx, yi = 0 implies that

hy, xi = 0 or hy, xi = 0 and vice versa. Hence, x⊥y if and only if y ⊥ x, that is, all

vectors in X are mutually orthogonal.

(b) Since hx, 0i = 0 for all x, x ⊥ 0 for every x belonging to an inner product space. By

the definition of the inner product, 0 is the only vector orthogonal to itself.

(c) Clearly, {0}⊥ = X and X ⊥ = {0}.

(d) If A ⊥ B, then A ∩ B = {0}.

(e) Nonzero mutually orthogonal vectors, x1 , x2 , x3 , . . . , xn , of an inner product space are

linearly independent (Prove it!).

Example 1.1.1. Let A = {(x, 0, 0) ∈ R3 : x ∈ R} be a line in R3 and B = {(0, y, z) ∈ R3 :

y, z ∈ R} be a plane in R3 . Then A⊥ = B and B ⊥ = A.

Example 1.1.2. Let X = R3 and A be its subspace spanned by a non-zero vector x. The

orthogonal complement of A is the plane through the origin and perpendicular to the vector

x.

Example 1.1.3. Let A be a subspace of R3 generated by the set {(1, 0, 1), (0, 2, 3)}. An

element of A can be expressed as

x = (x1, x2, x3 ) = λ(1, 0, 1) + µ(0, 2, 3)

= λi + 2µj + (λ + 3µ)k

⇒ x1 = λ, x2 = 2µ, x3 = λ + 3µ.

Thus, the element of A is of the form x1 , x2 , x1 + 23 x2 . The orthogonal complement of A

can be constructed as follows: Let x = (x1 , x2 , x3 ) ∈ A⊥ . Then for y = (y1 , y2 , y3 ) ∈ A, we

have

3

hx, yi = x1 y1 + x2 y2 + x3 y3 = x1 y1 + x2 y2 + x3 y1 + y2

2

3

= (x1 + x3 ) y1 + x2 + x3 y2 = 0.

2

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 7

Since y1 and y2 are arbitrary, we have

3

x1 + x3 = 0 and x2 + x3 = 0.

2

Therefore,

⊥

A

3

=

x = (x1 , x2 , x3 ) : x1 = −x3 , x2 = − x3

2

3

=

x ∈ R3 : x = −x3 , − x3, x3

.

2

Exercise 1.1.1. Let A be a subspace of R3 generated by the set {(1, 1, 0), (0, 1, 1)}. Find

A⊥ .

Answer. A⊥ is the straight line spanned by the vector (1, −1, 1).

Theorem 1.1.1. Let X be an inner product space and A be a subset of X. Then A⊥ is

a closed subspace of X.

Proof. Let x, y ∈ A⊥ . Then, hx, zi = 0 for all z ∈ A and hy, zi = 0 for all z ∈ A. Since for

arbitrary scalars α, β, hαx + βy, zi = αhx, zi + βhy, zi = 0, we get hαx + βy, zi = 0; that is,

αx + βy ∈ A⊥ . So A⊥ is a subspace of X.

To show that A⊥ is closed, let {xn } ∈ A⊥ such that xn → y. We need to show that y must

belongs to A⊥ . Since xn ∈ A⊥ , hx, xn i = 0 for all x ∈ X and all n. Since h., .i is a continuous

function, we have

lim hx, xn i = lim hxn , xi = h lim xn , xi = hy, xi = 0.

n→∞

n→∞

n→∞

Hence, y ∈ A⊥ .

Exercise 1.1.2. Let X be an inner product space and A and B be subsets of X. Prove the

following assertions:

(a) A ∩ A⊥ ⊆ {0}. A ∩ A⊥ = {0} if and only if A is a subspace.

(b) A ⊆ A⊥⊥ .

(c) If B ⊆ A, then B ⊥ ⊇ A⊥ .

Proof. (a) If y ∈ A ∩ A⊥ and y ∈ A⊥ , then y ∈ {0}. If A is a subspace, then 0 ∈ A and

0 ∈ A ∩ A⊥ . Hence, A ∩ A⊥ = {0}.

(b) Let y ∈ A, but y ∈

/ A⊥⊥ . Then there exists an element z ∈ A⊥ such that hy, zi =

6 0.

⊥

Since z ∈ A , hy, zi = 0 which is a contradiction. Hence, y ∈ A⊥⊥ .

(c) Let y ∈ A⊥ . Then hy, zi = 0 for all z ∈ A. Since every z ∈ B is an element of A, we

have hy, zi = 0 for all z ∈ B. Hence, y ∈ B ⊥ , and so B ⊥ ⊃ A⊥ .

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 8

Exercise 1.1.3. Let X be an inner product space and A and B be subsets of X. Prove the

following assertions:

(a) If A ⊆ B, then A⊥⊥ ⊆ B ⊥⊥ .

(b) A⊥ = A⊥⊥⊥ .

(c) If A is dense in X, that is, A = X, then A⊥ = {0}.

(d) If A is an orthogonal set and 0 ∈

/ A, then prove that A is linearly independent.

Exercise 1.1.4. Let A be a nonempty subset of a Hilbert space X. Show that

(a) A⊥⊥ = spanA;

(b) spanA is dense in X whenever A⊥ = {0}.

Hint: See [5], pp. 149.

Exercise 1.1.5. Let A be a nonempty subset of a Hilbert space X. Show that A is closed

if and only if A = A⊥⊥ .

The well-known Pythagorean theorem of plane geometry says that the sum of the squares

of the base and the perpendicular in a right-angled triangle is equal to the square of the

hypotenuse. Its infinite-dimensional analogue is as follows.

Theorem 1.1.2. Let X be an inner product space and x, y ∈ X. Then for x ⊥ y, we

have kx + yk2 = kxk2 + kyk2.

Proof. Note that kx + yk2 = hx + y, x + yi = hx, xi + hy, xi + hx, yi + hy, yi. Since x⊥y,

hx, yi = 0 and hy, xi = 0, we have kx + yk2 = kxk2 + kyk2 .

Exercise 1.1.6. Let K and D be subset of an inner product space X. Show that

(K + D)⊥ = K ⊥ ∩ D ⊥ ,

where K + D = {x + y : x ∈ K, y ∈ D}.

Exercise 1.1.7. For each i = 1, 2, . . . , n, let Ki be a subspace of a Hilbert space X. If

hxi , xj i = 0 when i 6= j for each xi ∈ Ki and yj ∈ Kj , then show that the subspace

K1 + K2 + · · · + Kn is closed. Is this property true for an incomplete inner product space?

Exercise 1.1.8. Let X be an inner product space and for a nonzero vector y ∈ X, Ky :=

{x ∈ X : hx, yi = 0}. Determine the subspace Ky⊥ .

Qamrul Hasan Ansari

1.1.2

Advanced Functional Analysis

Page 9

Orthonormal Sets and Orthonormal Bases

Definition 1.1.3. Let X be an inner product space.

(a) A subset A of nonzero vectors in X is said to orthogonal if any two distinct elements

in A are orthogonal.

(b) A set of vectors A in X is said to be orthonormal if it is orthogonal and kxk = 1 for

all x ∈ A, that is, for all x, y ∈ A,

0,

if x 6= y

(1.2)

hx, yi =

1,

if x = y.

If an orthogonal / orthonormal set in X is countable, then it can be arranged as a sequence

{xn } and in this case we call it an orthogonal sequence / orthonormal sequence, respectively.

More generally, let Λ be any index set.

(a) A family of vectors {xα }α∈Λ in an inner product space X is said to be orthogonal if

xα ⊥ xβ for all α, β ∈ Λ, α 6= β.

(b) A family of vectors {xα }α∈Λ in an inner product space X is said to be orthonormal if

it is orthogonal and kxα k = 1 for all xα , that is, for all α, β ∈ Λ, we have

0,

if α 6= β

(1.3)

hxα , yβ i = δαβ =

1,

if α = β.

1

Example 1.1.4. The standard / canonical basis for Rn (with usual inner product)

e1 = (1, 0, 0, . . . , 0),

e2 = (0, 1, 0, . . . , 0),

..

..

.

.

en = (0, 0, 0, . . . , 1),

form an orthonormal set as

hei , ej i = δij =

0,

1,

if i 6= j

if i = j.

(1.4)

Qamrul Hasan Ansari

Advanced Functional Analysis

Recall that for p ≥ 1,

(

ℓp =

c00 =

x = {xn } ⊆ K :

∞

[

∞

X

n=1

|xn |p < ∞}

Page 10

)

{{x1 , x2 , . . .} ⊆ K : xj = 0 for j ≥ k}

k=1

ℓ∞ = {{xn } ⊆ K : sup |xn | < ∞}

n∈N

C[a, b] = The space of all continuous real-valued functions defined on the interval [a, b]

P [a, b] = The space of polynomials defined on the interval [a, b]

Clearly, c00 ⊆ ℓ∞ .

P [a, b] is complete with respect to the norm kf k∞ = supx∈[a,b] |f (x)|.

However, P [a, b] is dense in C[a, b] with k · k∞ .

ℓ2 is a Hilbert space with inner product defined by

hx, yi =

∞

X

n=1

xn yn ,

for all x = {xn }, y = {yn } ∈ ℓ2 .

The norm on ℓ2 is defined by

kxk = hx, xi

1/2

=

∞

X

n=1

|xn |

2

!1/2

.

The space ℓp with p 6= 2 is not an inner product space, and hence not a Hilbert space.

However, ℓp with p 6= 2 is a Banach space.

For 0 < p < ∞,

p

L [a, b] =

f : [a, b] → K : f is measurable and

Z

b

a

p

|f | dµ < ∞

For 1 ≤ p < ∞, Lp [a, b] is a complete normed space with respect to the norm

kf kp =

Z

a

b

p

|f | dµ

1/p

.

Note that kf kp does not define a norm on Lp [a, b] for 0 < p < 1.

Example 1.1.5. Consider ℓ2 space and its subset E = {e1 , e2 , . . .} with en = δnj , that is,

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 11

en = {0, 0, . . . 0, 1, 0, . . .} (1 is at nth place). Then E forms an orthonormal set for ℓ2 , and

{en } is an orthonormal sequence.

Example 1.1.6. Consider the space c00 with the inner product

hx, yi =

∞

X

for all x = {x1 , x2 , . . .}, y = {y1 , y2, . . .} ∈ c00 .

xn yn ,

n=1

The set E = {e1 , e2 , . . .} with en = δnj , that is, en = {0, 0, . . . 0, 1, 0, . . .} (1 is at nth place)

forms an orthonormal set for c00 , and {en } is an orthonormal sequence.

Example 1.1.7. Consider the space C[0, 2π] with the inner product

Z 2π

hf, gi =

f (t)g(t) dt, for all f, g ∈ C[0, 2π].

0

Consider the sets E = {u1 , u2 , . . .} and G = {v1 , v2 , . . .} or the sequences {un } and {vn },

where

un (t) = cos nt, for all n = 0, 1, 2, . . . ,

and

vn (t) = sin nt,

for all n = 1, 2, . . . .

Then, E = {u1 , u2 , . . .} is an orthogonal set and {un } is an orthogonal sequence. Also,

G = {v1 , v2 , . . .} is an orthogonal set and {vn } is an orthogonal sequence.

Indeed, by integrating, we obtain

hum , un i =

Z

hvm , vn i =

Z

and

2π

0

2π

0,

π,

cos mt cos nt dt =

2π

sin mt sin nt dt =

0

0,

π,

if m 6= n

if m = n = 1, 2, . . .

if m = n = 0,

if m 6= n

if m = n = 1, 2, . . . .

Also, {e1 , e2 , . . .} is an orthonormal set and {en } is an orthonormal sequence, where

1

e0 (t) = √ ,

2π

en =

cos nt

un (t)

= √ ,

kun k

π

for n = 1, 2, . . . .

Similarly, {ẽ1 , ẽ2 , . . .} is an an orthonormal set and {ẽn } is an orthonormal sequence, where

ẽn =

sin nt

vn (t)

= √ ,

kvn k

π

for n = 1, 2, . . . .

Note that um ⊥ vn for all m and n (Prove it!).

Exercise 1.1.9 (Pythagorean Theorem). If {x1 , x2 , . . . , xn } is an orthogonal subset of an

inner product space X, then prove that

n

X

i=1

2

xi

=

n

X

i=1

kxi k2 .

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 12

Proof. We have

n

X

2

xi

=

i=1

* n

X

i=1

=

=

=

n

X

i=1

n

X

i=1

n

X

i=1

xi ,

n

X

xi

i=1

hxi , xi i +

+

n

X

i,j=1, 6=j

hxi , xj i

hxi , xi i

kxi k2 .

Lemma 1.1.1 (Linearly independence). An orthonormal set of non-zero vectors is linearly independent.

Proof. Let {ui } be an orthonormal set. Consider the linear combination

α1 u1 + α2 u2 + · · · + αn un = 0.

Multiply by any fixed uj 6= 0, we get

0 = h0, uj i = hα1 u1 + α2 u2 + · · · + αn un , uj i

= α1 hu1, uj i + α2 hu2 , uj i + · · · αj huj , uj i + · · · + αn hun , uj i.

Since hui , uj i = δij , we have

0 = αj huj , uj i or αj = 0 as uj 6= 0.

This shows that {ui } is a set of linearly independent vectors.

Exercise 1.1.10. Determine an orthogonal set in L2 [0, 2π].

√

Hint: (See [6], pp. 179) Consider u1 (t) = 1/ 2π, and for n ∈ N,

sin nt

u2n (t) = √ ,

π

cos nt

u2n+1 (t) = √ ,

π

and then check E = {u1 , u2, . . .} is an orthogonal set in L2 [0, 2π].

Exercise 1.1.11. Construct a set of 3 vectors in R3 and determine whether it is a basis

and, if it is, then whether it is orthogonal, orthonormal or neither.

Exercise 1.1.12. Show that every orthonormal set in a separable inner product space X is

countable.

Hint: (See [6], pp. 179)

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 13

Advantages of an Orthonormal Sequence. A great advantage of orthonormal sequences

over arbitrary linearly independent sequences is the following: If we know that a given x can

be represented as linear combination of some elements of an orthonormal sequence, then the

orthonormality makes the actual determination of the coefficients very easy.

Let {u1 , u2, . . .} be an orthonormal sequence in an inner product space X and x ∈

span{u1, u2 , . . . , un }, where n is fixed. Then x can be written as a linear combination of

u1 , u2 , . . . , un , that is,

n

X

αk uk , for scalars αk .

(1.5)

x=

k=1

Take the inner product by a fixed uj , then we obtain

+

* n

X

X

αk huk , uj i + αj huk , uj i = αj kuj k = αj ,

αk u k , u j =

hx, uj i =

k=1

k=1, k6=j

as kuj k = 1 since {u1 , u2, . . .} is an orthonormal sequence. Therefore, the unknown coefficients αk in (1.5) can be easily calculated.

The following Gram-Schmidt process provides that how to obtain an orthonormal sequence

if an arbitrary linearly independent sequence is given.

Gram-Schmidt Orthogonalization Process. Let {xn } be a linearly independent sequence in an inner product space X. Then we obtain an orthonal sequence {vn } and an

orthonormal sequence {un } with the following property for every n:

span{u1 , u2 , . . . , nn } = span{x1 , x2 , . . . , xn }.

Qamrul Hasan Ansari

Advanced Functional Analysis

1st Step

Take v1 = x1

2nd Step

Take v2 = x2 − hx2 , u1iu1

v1

v1

= x2 − x2 ,

kv1 k kv1 k

hx2 , v1 i

= x2 −

v1

hv1 , v1 i

Take v3 = x3 − hx3 , u2iu2

3rd Step

= x3 −

..

.

nth Step

..

.

Take vn = xn −

= xn −

..

.

..

.

Page 14

v1

kv1 k

v2

and u2 =

kv2 k

and u1 =

2

X

hxj , vj i

hvj , vj i

n−1

X

hxn , uj iuj

n−1

X

hxn , vj i

j=1

hvj , vj i

v3

kv3 k

vj

j=1

j=1

and u3 =

..

.

and un =

vn

kvn k

vj

..

.

Then {vn } is an orthogonal sequence of vectors in X and {un } is an orthonormal sequence

in X. Also, for every n:

span{u1 , u2 , . . . , nn } = span{x1 , x2 , . . . , xn }.

Theorem 1.1.3. Let {xn } be a linearly independent sequence in an inner product space

X. Let v1 = x1 , and

vn = xn −

n−1

X

hxn , vn i

j=1

hvj , vj i

vj ,

for n = 2, 3, . . . .

Then {v1 , v2 , . . .} is an orthogonal set, {un } is an orthonormal sequence where un =

and

span{x1 , x2 , . . . , xk } = span{u1 , u2 , . . . , uk }, for all k = 1, 2, . . . , n.

vn

,

kvn k

Proof. Since {xn } is a sequence of linearly independent vectors, so xn 6= 0 for all n. Define

v1 = x1 and

hx2 , v1 i

v2 = x2 −

v1 .

hv1 , v1 i

Clearly, v2 ∈ span{x1 , x2 } and

hv2 , v1 i = hx2 , v1 i −

hx2 , v1 i

hv1 , v1 i = 0,

hv1 , v1 i

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 15

that is, v2 and v1 are orthogonal. Since {x1 , x2 } is linearly independent, v2 6= 0. Then, by

Exercise 1.1.3 (d), {v1 , v2 } is linearly independent, and hence, it follows that

span{v1 , v2 } = span{x1 , x2 }.

Continuing in this way, we define an orthogonal set {v1 , v2 , . . . , vn−1 } such that

span{x1 , x2 , . . . , xn−1 } = span{v1 , v2 , . . . , vn−1 }.

Let

vn = xn −

n−1

X

hxn , vk i

j=1

hvj , vj i

vj .

Then we have vk ∈ span{x1 , x2 , . . . , xk } and hvk , vi i = 0 for i < k. Again, since {x1 , x2 , . . . , xk }

is linearly independent, vk 6= 0. Thus, {v1 , v2 , . . . , vn } is the required orthogonal set and

{u1 , u2, . . . , un } is the required orthonormal set

Exercise 1.1.13. Let Y be the plane in R3 spanned by the vectors x1 = (1, 2, 2) and

x2 = (−1, 0, 2), that is, Y = span{x1 , x2 }. Find orthonormal basis for Y and for R3 .

Solution. x1 , x2 is a basis for the plane Y . We can extend it to a basis for R3 by adding one

vector from the standard basis. For instance, vectors x1 , x2 and x2 = (0, 0, 1) form a basis

for R3 because

1 2 2

1 2

−1 0 2 =

= 2 6= 0.

−1 0

0 0 1

By using the Gram-Schmidt process, we orthogonalize the basis x1 = (1, 2, 2), x2 = (−1, 0, 2)

and x3 = (0, 0, 1):

v1 = x1 = (1, 2, 2),

hx2 , v1 i

v2 = x2 −

v1

hv1 , v1 i

3

= (−1, 0, 2) − (1, 2, 2) = (−4/3, −2/3, 4/3)

9

hx3 , v1 i

hx3 , v2 i

v3 = x3 −

v1 −

v2

hv1 , v1 i

hv2 , v2 i

4/3

2

(−4/3, −2/3, 4/3) = (2/9, −2/9, 1/9).

= (0, 0, 1) − (1, 2, 2) −

9

4

Now, v1 = (1, 2, 2), v2 = (−4/3, −2/3, 4/3), v3 = (2/9, −2/9, 1/9) is an orthogonal basis for

R3 , while v1 , v2 is an orthogonal basis for Y . The orthonromal basis for Y is u1 = kvv11 k =

1

(1, 2, 2), u2 = kvv22 k = 31 (−2, −1, 2).

3

The orthonromal basis for R3 is u1 =

1

(2, −2, 1).

3

v1

kv1 k

= 31 (1, 2, 2), u2 =

v2

kv2 k

= 31 (−2, −1, 2), u3 =

v3

kv3 k

=

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 16

Exercise 1.1.14. Let {un } be an orthonormal sequence in an inner product space X. Prove

the following statements (Use Pythagorean theorem).

(a) If w =

P∞

n=1 αn un , then kwk =

(b) If x ∈ X and sN =

PN

P∞

n=1 |αn |

n=1 hx, un iun ,

2

, where αn ’s are scalars.

then kxk2 = kx − sN k2 + ksN k2 .

P

(c) If x ∈ X and sN = N

n=1 hx, un iun , and XN = span{u1 , u2 , . . . uN }, then kx − sN k =

miny∈XN kx − yk (It is called best approximation property).

Theorem 1.1.4 (Bessel’s inequality). Let {uk } be an orthonormal set in an inner product space X. Then for any x ∈ X, we have

∞

X

k=1

|hx, uk i|2 ≤ kxk2 .

P

Proof. Let xn = nk=1 hx, uk iuk be the nth partial sum. Then, by using the properties of the

inner product and applying the fact that

0,

if i 6= j

hui , uj i = δij =

1,

if i = j,

we have

0 ≤ kx − xn k2 = hx − xn , x − xn i = kxk2 − hxn , xi − hx, xn i + kxn k2

* n

+ *

+

n

X

X

= kxk2 −

hx, uk iuk , x − x,

hx, uk iuk + kxn k2

k=1

= kxk2 −

2

n

X

k=1

k=1

hx, uk ihuk , xi −

2

= kxk − kxn k .

Therefore, kxn k2 ≤ kxk2 , and hence,

the conclusion.

Pn

k=1 |hx, uk i|

2

n

X

k=1

hx, uk ihx, uk i + kxn k2

≤ kxk2 . Taking limit as n → ∞, we get

Exercise 1.1.15. Let {ui } be a countably infinite orthonormal set in a Hilbert space X.

Then prove the following statements:

(a) The infinite series

∞

P

n=1

∞

P

αn un , where αn ’s are scalars, converges if and only if the series

n=1

|αn |2 converges, that is,

∞

P

n=1

|αn |2 < ∞.

Qamrul Hasan Ansari

(b) If

∞

P

Advanced Functional Analysis

Page 17

αn un converges and

n=1

x=

∞

X

αn u n =

n=1

∞

P

then αn = βn for all n and kxk2 =

Proof. (a) Let

∞

P

n=1

∞

X

βn un ,

n=1

|αn |2 .

αn un be convergent and assume that

n=1

x=

∞

X

αn u n ,

or equivalently,

lim

N →∞

n=1

x−

N

X

2

αn u n

= 0.

n=1

Now,

hx, um i =

=

*∞

X

αn u n , u m

n=1

∞

X

n=1

+

αn hun , um i,

for m = 1, 2, . . .

(as {ui } is orthonormal).

= αm

By the Bessel inequality, we get

∞

X

m=1

which shows that

∞

P

n=1

2

|hx, um i| =

m=1

|αm |2 ≤ kxk2 ,

|αn |2 converges.

To prove the converse, assume that

n

P

∞

X

∞

P

n=1

αi ui . Then, we have

|αn |2 is convergent. Consider the finite sum sn =

i=1

ksn − sm k

2

=

=

*

n

X

i=m+1

n

X

i=m+1

αi u i ,

n

X

αi u i

i=m+1

+

|αi |2 → 0 as n, m → ∞.

This means that {sn } is a Cauchy sequence. Since X is complete, the sequence of partial

∞

P

αn un converges.

sums {sn } is convergent in X, and therefore, the series

n=1

Qamrul Hasan Ansari

Advanced Functional Analysis

(b) We first prove that kxk2 =

2

kxk −

N

X

n=1

|αn |

2

∞

P

n=1

*

x, x −

x−

≤

Since

N

P

|αn |2 . We have

= hx, xi −

=

Page 18

N X

N

X

hαn un , αm um i

n=1 m=1

N

X

αn u n

n=1

N

X

αn u n

n=1

+

+

*N

X

kxk +

αn u n , x −

n=1

N

X

αn u n

n=1

!

N

X

αn u n

n=1

+

= M.

αn un converges to x, the M converges to zero, proving the result.

n=1

If x =

∞

X

αn u n =

n=1

∞

X

βn un , then

n=1

0 = lim

N →∞

" N

X

n=1

(αn − βn ) un

#

⇒

∞

X

0=

n=1

|αn − βn |2 ,

by (a),

implying that αn = βn for all n.

Exercise 1.1.16. Let {un } be an orthonormal sequence in a Hilbert space X, and

∞

X

n=1

2

|αn | < ∞ and

Prove that

u

∞

X

αn u n

∞

X

n=1

and v =

n=1

|βn |2 < ∞.

∞

X

βn un

n=1

are convergent series with respect to the norm of X and hu, vi =

P∞

n=1

αn βn .

Proof. Let

uN

N

X

αn u n

and vN =

n=1

Then for M < N, we have

N

X

βn un .

n=1

2

kuN − uM k =

N

X

n=M

|αn |2 → 0 as M → ∞,

and so, {uN } is a Cauchy sequence in a complete space X and thus converging to some

u ∈ X. Similarly, {vN } is a Cauchy sequence in a complete space X that converges to some

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 19

v ∈ X. Finally,

huN , vN i =

N

X

hαj uj , βk uk i =

j,k=1

N

X

j,k=1

αj βk huj , uk i =

N

X

αj βj ,

j=1

since huj , wk i = 0 for j 6= k and hwj , wj i = 1. Taking

P∞the limit as N → ∞ and using the

Pythagorean theorem, huN , vN i → hu, vi gives hu, vi n=1 αn βn .

Recall that if {u1 , u2 , . . . , un } is a basis of a linear space X, then for every x ∈ X, there

exists scalars α1 , α2 , . . . , αn such that x = α1 u1 + α2 u2 + · · · + αn un .

Definition 1.1.4. (a) An orthogonal set of vectors {ui } in an inner product space X is

called an orthogonal basis if for any x ∈ X, there exist scalars αi such that

x=

∞

X

αi u i .

i=1

If the set {ui } is orthonormal, then it is called an orthonormal basis.

(b) An orthonormal basis {ui } in a Hilbert space X is called maximal or complete if there

is no unit vector u0 in X such that {u0 , u1, u2 , . . .} is an orthonormal set. In other

words, the sequence {ui } of orthonormal basis in X is complete if and only if the only

vector orthogonal to each of ui ’s is the null vector.

In general, an orthonormal set E in an inner product space X is complete or maximal

if it is a maximal orthonormal set in X, that is, E is an orthonormal set, and for every

e satisfying E ⊆ E,

e we have E

e = E.

orthonormal set E

(c) Let {ui } be an orthonormal basis in a Hilbert space X, then the numbers αi = hx, ui i

are called the Fourier coefficients of the element x with respect to the system {ui } and

P

∞

i=1 αi ui is called the Fourier series of the element x.

Example 1.1.8. The set {ei : i ∈ N}, where ei = (0, 0, . . . , 0, 1, 0, . . .) with 1 lies in the ith

place, forms an orthonormal basis for ℓ2 (C).

Example 1.1.9. Let X = L2 (−π, π) be a complex Hilbert space and un be the element of

X defined by

1

un (t) = √ exp(i n t), for n = 0, ±1, ±2, . . . .

2π

Then

1 cos nt sin nt

√ , √ , √ : n = 1, 2, . . .

π

π

2π

forms an orthonormal basis for X as exp(i n t) = cos nt + i sin nt.

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 20

Theorem 1.1.5. Let {ui : i ∈ N} be an orthonormal set in a Hilbert space X. Then the

following assertions are equivalent:

(a) {ui : i ∈ N} is an orthonormal basis for X.

(b) For all x ∈ X, x =

∞

X

i=1

(c) For all x ∈ X, kxk2 =

hx, ui iui .

∞

X

i=1

|hx, ui i|2 .

(d) hx, ui i = 0 for all i implies x = 0.

Proof. (a) ⇔ (b): Let {ui : i ∈ N} be an orthonormal basis for X. Then we can write

x=

∞

X

αi u i ,

that is x = lim

n→∞

i=1

For k ≤ n in N, we have

* n

X

αi u i , u k

i=1

+

n

X

=

i=1

n

X

αi u i .

i=1

αi hui , uk i = uk .

By letting n → ∞ and using the continuity of the inner product, we obtain

hx, uk i = lim = αk ,

n→∞

and hence (b) holds.

The same argument shows that if (b) holds, then this expansion is unique and so {ui : i ∈ N}

is an orthonormal basis for X.

(b) ⇔ (c): By Pythagorean theorem and continuity of the inner product, we have

2

kxk =

∞

X

i=1

2

hx, ui iui

=

i=1

(c) ⇔ (d): Let hx, ui i = 0 for all i. Then kxk2 =

x = 0.

(d) ⇔ (b): Take any x ∈ X and let y = x −

∞

X

i=1

hy, uk i = hx, uk i − lim

n→∞

*

∞

X

|hx, ui i|2 .

P∞

i=1

|hx, ui i|2 = 0 which implies that

hx, ui iui . Then for each k ∈ N, we have

∞

X

hx, ui iui , uk

i=1

+

=0

Qamrul Hasan Ansari

Advanced Functional Analysis

since eventually n ≥ k. It follows from (d) that y = 0, and hence x =

Page 21

∞

X

i=1

hx, ui iui .

Theorem 1.1.6 (Fourier Series Representation). Let Y be the closed subspace spanned

by a countable orthonormal set {ui } in a Hilbert space X. Then every element x ∈ Y

can be written uniquely as

∞

X

x=

hx, ui iui .

(1.6)

i=1

Proof. Uniqueness of (1.6) is a consequence of Exercise 1.1.15 (b). For any x ∈ Y , we can

write

M

X

x = lim

αi ui , for M ≥ N

N →∞

i=1

as Y is closed. From Theorem 1.1.4 and Exercise 1.1.15, it follows that

x−

M

X

i=1

hx, ui iui ≤ x −

M

X

αi u i ,

i=1

and as N → ∞, we get the desired result.

Theorem 1.1.7 (Fourier Series Theorem). For any orthonormal set {un } in a separable

Hilbert space X, the following statements are equivalent:

(a) Every x ∈ X can be represented by the Fourier series in X; that is,

x=

∞

X

i=1

hx, ui iui .

(1.7)

(b) For any pair of vectors x, y ∈ X, we have

hx, yi =

∞

X

i=1

hx, ui ihy, uii =

∞

X

αi βi ,

(1.8)

i=1

where αi = hui , xi are Fourier coefficients of x, and βi = hy, ui i are Fourier coefficients of y.

(c) For any x ∈ X, one has

2

kxk =

∞

X

i=1

|hx, ui i|2 .

(1.9)

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 22

(d) Any subspace Y of X that contains {ui } is dense in X.

Proof. (a) ⇒ (b). It follows from (1.6) and the fact that {ui } is orthonormal.

(b) ⇒ (c). Put x = y in (1.8) to get (1.9).

(a) ⇒ (d). The statement (d) is equivalent to the statement that the orthogonal projection

onto S, the closure of S, is the identity. In view of Theorem 1.1.6, statement (d) is equivalent

to statement (a).

Exercise 1.1.17. Let X be a Hilbert space and E be an orthonormal basis of X. Prove

that E is countable if and only if X is separable.

Hint: See Theorem 4.10 on page 187 in [6].

Exercise 1.1.18. Let X be a Hilbert space and E be an orthonormal basis of X. Prove

that E is a basis of X if and only if X is finite dimension.

Hint: See Theorem 4.13 on page 189 in [6].

Exercise 1.1.19. Let X be a Hilbert space. Prove that E is an orthonormal basis of X if

and only if spanE is dense in X.

Exercise 1.1.20. If X is a Hilbert space, then show that E is an orthonormal basis if and

only if

X

hx, yi =

hx, ui hy, ui, for all x, y ∈ X.

u∈E

1.2

1.2.1

Orthogonal Projections and Projection Theorem

Orthogonal Projection

Let K be a nonempty subset of a normed space X. Recall that distance from an element

x ∈ X to the set K is defined by

ρ := inf kx − yk.

y∈K

(1.10)

It is important to know that whether there is a z ∈ K such that

kx − zk = inf kx − yk.

y∈K

If such point exists, whether it is unique?

(1.11)

Qamrul Hasan Ansari

Advanced Functional Analysis

b

Page 23

x

z

K

y

b

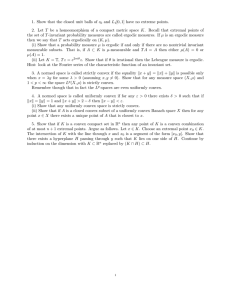

Figure 1.1: The distance from a point x to K

b

x

b

ρ

x

ρ

K is an open segment

No z in K that satisfies (1.12)

K is an open segment

z is unique that satisfies (1.12)

b

x

ρ

K is circular arc; Infinitely

many z’s which satisfy (1.12)

Figure 1.2: The distance from a point x to K

One can see in the following figures that even in the simple space R2 , there may be no z

satisfying (1.12), or precisely one such z, or more than one z.

To get the existence and uniqueness of such z, we recall the concept of a convex set.

Definition 1.2.1. A subset K of a vector space X is said to be a convex set if for all

x, y ∈ K and α, β ≥ 0 such that α + β = 1, we have αx + βy ∈ K, that is, for all x, y ∈ K

and α ∈ [0, 1], we have αx + (1 − α)y ∈ K.

Theorem 1.2.1. Let K be a nonempty closed convex subset of a Hilbert space X. Then

for any given x ∈ X, there exists a unique z ∈ K such that

kx − zk = inf kx − yk.

y∈K

(1.12)

Proof. Existence. Let ρ := inf kx − yk. By the definition of the infimum, there exists a

y∈K

sequence {yn } in K such that kx − yn k → ρ as n → ∞. We will prove that {yn } is a Cauchy

sequence.

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 24

y

x

y

x

A convex set

A nonconvex set

Figure 1.3: A convex set and a nonconvex set

b

x

z

K

y

b

Figure 1.4: Existence and uniqueness of z that minimizes the distance from K

By using parallelogram law, we have

yn + ym

kyn − ym k + 4 x −

2

2

2

= 2 kx − yn k2 + kx − ym k2 ,

for all n, m ≥ 1.

Since K is a convex subset of X and yn , ym ∈ K, we have 12 (yn + ym ) ∈ K. Therefore,

m

≥ ρ. Hence

x − yn +y

2

kyn − ym k

2

yn + ym

= 2 kx − yn k + kx − ym k − 4 x −

2

2

2

2

≤ 2 kx − yn k + kx − ym k − 4ρ .

2

2

2

Let n, m → ∞, then we have kx − yn k → ρ and kx − ym k → ρ and

0 ≤ lim kyn − ym k2 ≤ 4ρ2 − 4ρ2 = 0.

n,m→∞

Therefore, lim kyn − ym k2 = 0, and thus, {yn } is a Cauchy sequence. Since X is complete,

n,m→∞

there exists z ∈ X such that lim yn = z. Since yn ∈ K and K is closed, z ∈ K. In

n→∞

conclusion, we have

kx − zk = inf kx − yk.

y∈K

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 25

Uniqueness. Suppose that there is also ẑ ∈ K such that

kx − ẑk = inf kx − yk.

y∈K

z+ẑ

2

By using parallelogram law and x −

kz − ẑk2 + 4 x −

z + ẑ

2

that is,

2

≥ ρ (since 21 (z + ẑ) ∈ K), we have

= 2 kx − zk2 + kx − ẑk2 = 4ρ2 ,

z + ẑ

0 ≤ kz − ẑk = 4ρ − 4 x −

2

2

2

2

≤ 0.

Thus, kz − ẑk = 0, and hence, z = ẑ.

Remark 1.2.1. Theorem 1.2.1 does not hold in the setting of Banach spaces. For example, c0

is a closed subspace of ℓ∞ , but there is no closest sequence in c0 to the sequence {1, 1, 1, . . .}.

In fact, the distance between c0 and the sequence {1, 1, 1, . . .} is 1, and this is achieved by

any bounded sequence {xn } with xn ∈ [0, 2].

Theorem 1.2.2. Let K be a closed subspace of a Hilbert space X and x ∈ X be given.

There exists a unique z ∈ K which satisfies (1.12) and x − z is orthogonal to K, that is,

x − z ∈ K ⊥.

Proof. Existence. Existence of z ∈ K follows from previous theorem as every subspace is

convex.

Orthogonality. Clearly, hx − z, 0i = 0. Take y ∈ K, y 6= 0. Then we shall prove that

hx − z, yi = 0. Since z ∈ K satisfies (1.12) and z + λy ∈ K (as K is a subspace), we have

kx − zk2 ≤ kx − (z + λy)k2 = kx − zk2 + |λ|2kyk2 − λhy, x − zi − λhx − z, yi,

that is,

Putting λ =

hx−z,yi

kyk2

0 ≤ |λ|2 kyk2 − λhx − z, yi − λhx − z, yi.

in the above inequality, we obtain

|hx − z, yi|2

≤ 0,

kyk2

which is only happened when hx − z, yi = 0. Since y was arbitrary, x − z is orthogonal to K.

Uniqueness. Suppose that there is also ẑ ∈ K such that x − ẑ ∈ K ⊥ . Then z − ẑ =

(x − ẑ) − (x − z) ∈ K ⊥ . On the other hand, z − ẑ ∈ K since z, ẑ ∈ K and K is a subspace.

So, z − ẑ ∈ K ∩ K ⊥ ⊂ {0}. Therefore, z − ẑ = 0, and hence, z = ẑ.

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 26

Lemma 1.2.1. If K is a proper closed subspace of a Hilbert space X, then there exists

a nonzero vector x ∈ X such that x ⊥ K.

Proof. Let u ∈

/ K and ρ = inf ku − yk, the distance from u to K. By Theorem 1.2.1, there

y∈K

exists a unique element z ∈ K such that ku − zk = ρ. Let x = u − z. Then x 6= 0 as ρ > 0.

(If x = 0, then u − z = 0 and ku − zk = 0 implies that ρ = 0.)

Now, we show that x ⊥ K. For this, we show that for arbitrary y ∈ K, hx, yi = 0. For

any scalar α, we have kx − αyk = ku − z − αyk = ku − (z + αy)k. Since K is a subspace,

z + αy ∈ K whenever z, y ∈ K. Thus, z + αy ∈ K implies that kx − αyk ≥ ρ = kxk or

kx − αyk2 − kxk2 ≥ 0 or hx − αy, x − αyi − kxk2 ≥ 0. Since

hx − αy, x − αyi = hx, xi − αhy, xi − αhx, yi + ααhy, yi

= kxk2 − αhx, yi − αhy, xi + |α|2kyk2 ,

we have,

−αhx, yi − αhx, yi + |α|2kyk2 ≥ 0.

Putting α = βhx, yi in the above inequality, β being an arbitrary real number, we get

−2β|hx, yi|2 + β 2 |hx, yi|2kyk2 ≥ 0.

If we put a = |hx, yi|2 and b = kyk2 in the above inequality, we obtain

−2βa + β 2 ab ≥ 0,

or

βa(βb − 2) ≥ 0,

for all real β.

If a > 0, the above inequality is false for all sufficiently small positive β. Hence, a must be

zero, that is, a = |hx, yi|2 = 0 or hx, yi = 0 for all y ∈ K.

Lemma 1.2.2. If M and N are closed subspaces of a Hilbert space X such that M ⊥ N,

then the subspace M + N = {x + y ∈ X : x ∈ M and y ∈ N} is also closed.

Proof. It is a well-known result of vector spaces that M + N is a subspace of X. We show

that it is closed, that is, every limit point of M + N belongs to it. Let z be an arbitrary limit

point of M + N. Then there exists a sequence {zn } of points of M + N such that zn → z.

M ⊥ N implies that M ∩ N = {0}. So, every zn ∈ M + N can be written uniquely in the

form zn = xn + yn , where xn ∈ M and yn ∈ N.

By the Pythagorean theorem for elements (xm − xn ) and (ym − yn ), we have

kzm − zn k2 = k(xm − xn ) + (ym − yn )k2

= kxm − xn k2 + kym − yn k2

(1.13)

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 27

(It is clear that (xm − xn ) ⊥ (ym − yn ) for all m, n.) Since {zn } is convergent, it is a Cauchy

sequence and so kzm − zn k2 → 0. Hence, from (1.13), we see that kxm − xn k → 0 and

kym − yn k → 0 as m, n → ∞. Hence, {xm } and {yn } are Cauchy sequences in M and N,

respectively. Being closed subspaces of a complete space, M and N are also complete. Thus,

{xm } and {yn } are convergent in M and N, respectively, say xm → x ∈ M and yn → y ∈ N,

x + y ∈ M + N as x ∈ M and y ∈ N. Then

z =

lim zn = lim (xn + yn ) = lim xn + lim y

n→∞

n→∞

n→∞

n→∞

= x + y ∈ M + N.

This proves that an arbitrary limit point of M + N belongs to it and so it is closed.

Definition 1.2.2. A vector space X is said to be the direct sum of two subspaces Y and Z

of X, denoted by X = Y ⊕ Z, if each x ∈ X has a unique representation x = y + z for y ∈ Y

and z ∈ Z.

Theorem 1.2.3 (Orthogonal Decomposition). If K is a closed subspace of a Hilbert

space X, then every x ∈ X can be uniquely represented as x = z + y for z ∈ K and

y ∈ K ⊥ , that is, X = K ⊕ K ⊥ .

Proof. Since every subspace is a convex set, by previous two results, for every x ∈ X, there

is a z ∈ K such that x − z ∈ K ⊥ , that is, there is a y ∈ K ⊥ such that y = x − z which is

equivalently to x = z + y for z ∈ K and y ∈ K ⊥ .

To prove the uniqueness, assume that there is also ŷ ∈ K ⊥ such that x = ŷ + ẑ for ẑ ∈ K.

Then x = y + z = ŷ + ẑ, and therefore, y − ŷ = ẑ − z. Since y − ŷ ∈ K ⊥ whereas ẑ − z ∈ K,

we have y − ŷ ∈ K ∩ K ⊥ = {0}. This implies that y = ŷ, and hence also z = ẑ.

x

y = PK ⊥ (x)

z = PK (x)

K

Figure 1.5: Orthogonal decomposition

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 28

Example 1.2.1. (a) Let X = L2 (−1, 1). Then X = K ⊕ K ⊥ , where K is the space of

even functions, that is,

K = {f ∈ L2 (−1, 1) : f (−t) = f (t) for all t ∈ (−1, 1)},

and K ⊥ is the space of odd functions, that is,

K ⊥ = {f ∈ L2 (−1, 1) : f (−t) = −f (t) for all t ∈ (−1, 1)}.

(b) Let X = L2 [a, b]. For c ∈ [a, b], let

K = {f ∈ L2 [a, b] : f (t) = 0 almost everywhere in (a, c)}

and

K ⊥ = {f ∈ L2 [a, b] : f (t) = 0 almost everywhere in (c, b)}.

Then X = K ⊕ K ⊥ .

Exercise 1.2.1. Give examples of representations of R3 as a direct sum of a subspace and

its orthogonal complement.

Exercise 1.2.2. Let K be a subspace of an inner product space X. Show that x ∈ K ⊥ if

and only if kx − yk ≥ kxk for all y ∈ K.

Definition 1.2.3. Let K be a closed subspace of a Hilbert space X. A mapping PK : X → K

defined by

PK (x) = z, where x = z + y and (z, y) ∈ K × K ⊥ ,

is called the orthogonal projection of X onto K.

2

Let X and Y be normed spaces and T : X → Y be an operator.

(a) The range of T is R(T ) := {T (x) ∈ Y : x ∈ X}.

(b) The null space or kernel of T is N (T ) := {x ∈ X : T (x) = 0}.

(c) The operator T is called an idempotent if T 2 = T .

2

Let X be a vector space. A linear operator P : X → X is called projection operator if P ◦ P = P 2 = P .

Theorem 1.2.4. If P : X → X is a projection operator from a vector space X to itself, then X =

R(P ) ⊕ N (P ), where R(P ) is the range set of P and N (P ) = {x ∈: P (x) = 0} is the null space of P .

Theorem 1.2.5. If a vector space X is expressed as the directed sum of its subspaces Y and Z, then

there is a uniquely determined projection P : X → X such that Y = R(P ) and Z = N (P ) = R(I − P ),

where I be the identity mapping on X.

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 29

Theorem 1.2.6 (Existence of Projection Mapping). Let K be a closed subspace of a

Hilbert space X. Then there exists a unique mapping PK from X onto K such that

R(PK ) = K.

Proof. By Theorem 1.2.3, X = K ⊕ K ⊥ . Theorem 1.2.5 ensures the existence of a unique

projection PK such that R(PK ) = K and N (PK ) = K ⊥ . This projection is an orthogonal

projection as its null space and range are orthogonal.

Similarly, it can be verified that the orthogonal projection I − PK corresponds to the case

R(I − PK ) = K ⊥ and N (I − PK ) = K.

Exercise 1.2.3. Let K be a closed subspace of a Hilbert space X and I be the identity

mapping on X. Then prove that there exists a unique mapping PK from X onto K such

that I − PK maps X onto K ⊥ .

Such map PK is the projection mapping of X onto K.

Exercise 1.2.4 (Properties of Projection Mapping). Let K be a closed subspace of a Hilbert

space X, I be the identity mapping on X and PK is the projection mapping from X onto

K. Then prove that the following properties hold for all x, y ∈ X.

(a) Each element x ∈ X has a unique representation as a sum of an element of K and an

element of K ⊥ , that is,

x = PK (x) + (I − PK )(x).

(1.14)

(Hint: Compare with Theorem 1.2.3)

(b) kxk2 = kPK (x)k2 + k(I − PK )(x)k2 .

(c) x ∈ K if and only if PK (x) = x.

(d) x ∈ K ⊥ if and only if PK (x) = 0.

(e) If K1 and K2 are closed subspaces of X such that K1 ⊆ K2 , then PK1 (PK2 (x)) =

PK1 (x).

(f) PK is a linear mapping, that is, for all α, β ∈ R and all x, y ∈ X, PK (αx + βy) =

αPK (x) + βPK (y).

(g) PK is a continuous mapping, that is, xn −→ x (that is, kxn − xk −→ 0) implies

n→∞

PK (xn ) −→ PK (x) (that is, kPK (xn ) − PK (x) −→ 0).

n→∞

n→∞

n→∞

Exercise 1.2.5 (Properties of Projection Mapping). Let K be a closed subspace of a Hilbert

space X, I be the identity mapping on X and PK is the projection mapping from X onto

K. Then prove that the following properties hold for all x, y ∈ X.

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 30

(a) Each element z ∈ X can be written uniquely as

z = x + y,

where x ∈ R(PK ) and y ∈ N (PK ).

(b) The null space N (PK ) and the range set R(PK ) are closed subspaces of X.

(c) N (PK ) = (R(PK ))⊥ and R(PK ) = N (PK )⊥ .

(d) PK is idempotent.

Exercise 1.2.6. Let K1 and K2 be closed subspaces of a Hilbert space X and PK1 and PK2

be orthogonal projections onto K1 and K2 , respectively. If hx, yi = 0 for all x ∈ K1 and

y ∈ K2 , then prove that

(a) K1 + K2 is a closed subspace of X;

(b) PK1 + PK2 is the orthogonal projection onto K1 + K2 ;

(c) PK1 PK2 ≡ 0 ≡ PK2 PK1 .

1.2.2

Projection on Convex Sets

We discuss here the concepts of projection and projection operator on convex sets which are

of vital importance in such diverse fields as optimization, optimal control and variational

inequalities.

Definition 1.2.4. Let K be a nonempty closed convex subset of a Hilbert space X. For

x ∈ X, by projection of x on K, we mean the element z ∈ K, denoted by PK (x), such that

kx − PK (x)k ≤ kx − yk,

for all y ∈ K,

(1.15)

equivalently,

kx − zk = inf kx − yk.

y∈K

(1.16)

An operator on X into K, denoted by PK , is called the projection operator if PK (x) = z,

where z is the projection of x on K.

In view of Theorem 1.2.1, there always exists a z ∈ K which satisfies (1.16)

Theorem 1.2.7 (Variational Characterization of Projection). Let K be a nonempty

closed convex subset of a Hilbert space X. For any x ∈ X, z ∈ K is the projection of x

if and only if

hx − z, y − zi ≤ 0, for all y ∈ K.

(1.17)

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 31

Proof. Let z be the projection of x ∈ X. Then for any α, 0 ≤ α ≤ 1, since K is convex,

αy + (1 − α)z ∈ K for all y ∈ K. Define a real-valued function g : [0, 1] → R by

g(α) := kx − (αy + (1 − α)z)k2 ,

for all α ∈ [0, 1].

(1.18)

Then g is a twice continuously differentiable function of α. Moreover,

g ′ (α) = 2hx − αy − (1 − α)z, z − yi

g ′′ (α) = 2hz − y, z − yi.

b

(1.19)

x

z

K

y

b

Figure 1.6: The projection of a point x onto K

Now, for z to be the projection of x, it is clear that g ′ (0) ≥ 0, which is (1.17).

In order to prove the converse, let (1.17) be satisfied for some element z ∈ K. This implies

that g ′(0) is non-negative, and by (1.19), g ′′ (α) is non-negative. Hence, g(0) ≤ g(1) for all

y ∈ K such that (1.16) is satisfied.

Remark 1.2.2. The inequality (1.17) shows that x − z and y − z subtend a non-acute angle

between them. The projection PK (x) of x on K can be interpreted as the result of applying

to x the operator PK : X → K, which is called projection operator. Note that PK (x) = x

for all x ∈ K.

Theorem 1.2.8. The projection operator PK defined on a Hilbert space X into its

nonempty closed convex subset K has the following properties:

(a) PK is a nonexpansive, that is, kPK (x) − PK (y)k ≤ kx − yk for all x, y ∈ X; which

implies that PK is continuous.

(b) hPK (x) − PK (y), x − yi ≥ 0 for all x, y ∈ X.

Proof. (a) From (1.17), we obtain

hPK (x) − x, PK (x) − yi ≤ 0,

for all y ∈ K.

(1.20)

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 32

Put x = x1 in (1.20), we get

hPK (x1 ) − x1 , PK (x1 ) − yi ≤ 0,

for all y ∈ K.

(1.21)

for all y ∈ K.

(1.22)

Put x = x2 in (1.20), we get

hPK (x2 ) − x2 , PK (x2 ) − yi ≤ 0,

Since PK (x2 ) and PK (x1 ) ∈ K, choose y = PK (x2 ) and y = PK (x1 ), respectively, in (1.21)

and (1.22), we obtain

hPK (u1 ) − u1 , PK (u1 ) − PK (u2 )i ≤ 0

hPK (u2 ) − u2 , PK (u2 ) − PK (u1 )i ≤ 0.

From above two inequalities, we obatin

hPK (x1 ) − x1 − PK (x2 ) + x2 , PK (x1 ) − PK (x2 )i ≤ 0,

or

hPK (x1 ) − PK (x2 ), PK (x1 i − PK (x2 )i ≤ hx1 − x2 , PK (x1 ) − PK (x2 )i,

equivalently,

kPK (x1 ) − PK (x2 )k2 ≤ hx1 − x2 , PK (x1 ) − PK (x2 )i.

(1.23)

Therefore, by the Cauchy-Schwartz-Bunyakowski inequality, we get

kPK (x1 ) − PK (x2 )k2 ≤ kx1 − x2 k kPK (x1 ) − PK (x2 )k ,

(1.24)

kPK (x1 ) − PK (x2 )k ≤ kx1 − x2 k .

(1.25)

and hence,

(b) follows from (1.23).

The geometric interpretation of the nonexpansivity of PK is given in the following figure.

We observe that if strict inequality holds in (a), then the projection operator PK reduces

the distance. However, if the equality holds in (a), then the distance is conserved.

Qamrul Hasan Ansari

Advanced Functional Analysis

x̃

x

b

y

b

b

ỹ

b

Page 33

PK (x̃)

b

PK (x)

b

K

b

PK (ỹ)

PK (y)

b

Figure 1.7: The nonexpansiveness of the projection operator

1.3

Bilinear Forms and Lax-Milgram Lemma

Let X and Y be inner product spaces over the same field K (= R or C). A functional

a(·, ·) : X × Y → K will be called a form.

Definition 1.3.1. Let X and Y be inner product spaces over the same field K (= R or C).

A form a(·, ·) : X × Y → K is called a sesquilinear functional or sesquilinear form if the

following conditions are satisfied for all x, x1 , x2 ∈ X, y, y1, y2 ∈ Y and all α, β ∈ K:

(i) a(x1 + x2 , y) = a(x1 , y) + a(x2 , y).

(ii) a(αx, y) = αa(x, y).

(iii) a(x, y1 + y2 ) = a(x, y1 ) + a(x, y2 ).

(iv) a(x, βy) = βa(x, y).

Remark 1.3.1. (a) The sesquilinear functional is linear in the first variable but not so

in the second variable. A sesquilinear functional which is also linear in the second

variable is called a bilinear form or a bilinear functional. Thus, a bilinear form a(·, ·) is

a mapping defined from X × Y into K which satisfies conditions (i) - (iii) of the above

definition and a(x, βy) = βa(x, y).

(b) If X and Y are real inner product spaces, then the concepts of sesquilinear functional

and bilinear form coincide.

(c) An inner product is an example of a sesquilinear functional. The real inner product is

an example of a bilinear form.

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 34

(d) If a(·, ·) is a sesquilinear functional, then g(x, y) = a(y, x) is a sesquilinear functional.

Definition 1.3.2. Let X and Y be inner product spaces. A form a(·, ·) : X × Y → K is

called:

(a) symmetric if a(x, y) = a(y, x) for all (x, y) ∈ X × Y ;

(b) bounded or continuous if there exists a constant M > 0 such that

|a(x, y)| ≤ Mkxk kyk,

for all x ∈ X, y ∈ Y,

and the norm of a is defined as

|a(x, y)|

kak = sup

= sup a

x6=0 y6=0 kxk kyk

x6=0 y6=0

=

sup |a(x, y)|.

y

x

,

kxk kyk

kxk=kyk=1

It is clear that |a(x, y)| ≤ kak kxk kyk.

Remark 1.3.2. Let a(·, ·) : X×Y → K be a continuous form and {xn } and {yn } be sequences

in X and Y , respectively, such that xn → x and yn → y. Then a(xn , yn ) → a(x, y).

Indeed,

|a(xn , yn ) − a(x, y)| ≤ |a(xn − x, yn )| + |a(x, yn − y)|

≤ kak (kxn − xk kyn k + kxk kyn − yk) .

Definition 1.3.3. Let X be an inner product space. A form a(·, ·) : X × X → K is called:

(a) positive if a(x, x) ≥ 0 for all x ∈ X;

(b) positive definite if a(x, x) ≥ 0 for all x ∈ X and a(x, x) = 0 implies that x = 0;

(c) coercive or X-elliptic if there exists a constant α > 0 such that a(x, x) ≥ αkxk2 for all

x ∈ X.

Example 1.3.1. Let X = Rn with the usual Euclidean inner product. Then any n × n

metrix with real entries defines a continuous bilinear form.

If A = (aij ), 1 ≤ i, j ≤ n, and if we have x = (x1 , . . . , xn ) and y = (y1 , . . . , yn ), then the

bilinear form is defined as

n

X

a(x, y) :=

aij xj yi = y ⊤ Ax,

i,j=1

where x and y are considered as column vectors and y ⊤ denotes the transpose of y. By the

Cauchy-Schwarz inequality, we have

|a(x, y)| = |y ⊤ Ax| = |hy, Axi|

≤ kyk kAxk ≤ kAk kxk kyk.

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 35

If A is a symmetric and positive definite matrix, then the bilinear form is symmetric and

coercive since we know that

n

X

aij yj yi ≥ αkyk2,

i,j=1

where α > 0 is the smallest eigenvalue of the matrix A.

Theorem 1.3.1 (Extended form of Riesz Representation Theorem). Let X and Y be

Hilbert spaces and a(·, ·) : X × Y → K be a bounded sesquilinear form. Then there exists

a unique bounded linear operator T : X → Y such that

a(x, y) = hT (x), yi,

for all (x, y) ∈ X × Y,

(1.26)

and kak = kT k.

Proof. For each fixed x ∈ X, define a functional fx : Y → K by

fx (y) = a(x, y),

for all y ∈ Y.

(1.27)

Then, fx is a linear functional since for all y1 , y2 ∈ Y and all α ∈ K, we have

fx (y1 + y2 ) = a(x, y1 + y2 ) = a(x, y1 ) + a(x, y2 ) = fx (y1 ) + fx (y2 )

fx (αy) = a(x, αy) = αa(x, y) = αfx (y).

Since a(·, ·) is bounded, we have

|fx (y)| = |a(x, y)| = |a(x, y)| ≤ kak kxk kyk,

that is,

kfx k ≤ kak kxk.

Thus, fx is a bounded linear functional on Y . By Riesz representation theorem3 , there exists

a unique vector y ∗ ∈ Y such that

fx (y) = hy, y ∗i,

for all y ∈ Y.

(1.28)

The vector y ∗ depends on the choice vector x. Therefore, we can write y ∗ = T (x) where

T : X → Y . We observe that

a(x, y) = hy, T (x)i or a(x, y) = hT (x), yi,

for all x ∈ X and y ∈ Y.

Since y ∗ is unique, the operator T is uniquely determined.

3

Riesz Representation Theorem. If f is a bounded linear functional on a Hilbert space X, then

there exists a unique vector y ∈ X such that f (x) = hx, yi for all x ∈ X and kf k = kyk

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 36

The operator T is linear in view of the following relations: For all y ∈ Y , x, x1 , x2 ∈ X and

α ∈ K, we have

hT (x1 + x2 ), yi = a(x1 + x2 , y) = a(x1 , y) + a(x2 , y)

= hT (x1 ), yi + hT (x2 , yi,

hT (αx1 ), yi = a(αx, y) = αa(x, y) = αhT (x), yi.

Moreover, T is continuous as

kfx k = ky ∗ k = kT (x)k ≤ kak kxk

implies that kT k ≤ kak.

To prove that kT k = kak, it is enough to show that kT k ≥ kak which follows from the

following relation:

|a(x, y)|

|hT (x), yi|

= sup

x6=0 y6=0 kxk kyk

x6=0 y6=0 kxk kyk

kT (x)k kyk

≤ sup

= kT k.

kxk kyk

x6=0 y6=0

kak =

sup

To prove the uniqueness of T , let us assume that there is another linear operator S : X → Y

such that

a(x, y) = hS(x), yi, for all (x, y) ∈ X × Y.

Then, for every x ∈ X and y ∈ Y , we have

a(x, y) = hT (x), yi = hS(x), yi

equivalently, h(T − S)(x), yi = 0. This implies that (T − S)(x) = 0 for all x ∈ X, that

is, T ≡ S. This proves that there exists a unique bounded linear operator T such that

a(x, y) = hT (x), yi.

Remark 1.3.3 (Converse of above theorem). Let X and Y be Hilbert spaces and T : X → Y

be a bounded linear operator. Then the form a(·, ·) : X × Y → K defined by

a(x, y) = hT (x), yi,

for all (x, y) ∈ X × Y,

(1.29)

is a bounded sesquilinear form on X × Y .

Proof. Since T is a bounded linear operator on X × Y and the inner product is a sesquilinear

mapping, we have that a(x, y) = hT (x), yi is sesquilinear.

Since |a(x, y)| = |hT (x), yi| ≤ kT k kxk kyk, by the Cauchy-Schwartz-Bunyakowski inequality,

we have sup |a(x, y)| ≤ kT k, and hence a(·, ·) is bounded.

kxk=kyk=1

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 37

Corollary 1.3.1. Let X be a Hilbert space and T : X → X be a bounded linear operator.

Then the complex-valued function b(·, ·) : X × X → C defined by b(x, y) = hx, T (y)i is a

bounded bilinear form on X and kbk = kT k.

Conversely, if b(·, ·) : X × X → C is a bounded bilinear form, then there is a unique

bounded linear operator T : X → X such that b(x, y) = hx, T (y)i for all (x, y) ∈ X × X.

Proof. Define a function a(·, ·) : X × X → C by

a(x, y) = b(y, x) = hT (x), yi.

By Theorem 1.3.1, a(x, y) is a bounded bilinear form on X and kak = kT k. Since we have

b(x, y) = a(y, x); b is also bounded bilinear on X and

kbk =

sup

kxk=kyk=1

|b(x, y)| =

sup

kxk=kyk=1

|a(y, x)| = kak = kT k.

Conversely, if b is given, we define a bounded bilinear form a(·, ·) : X × X → C by

a(x, y) = b(y, x),

for all x, y ∈ X.

Again, by Theorem 1.3.1, there is a bounded linear operator T on X such that

a(x, y) = hT (x), yi,

for all (x, y) ∈ X × X.

Therefore, we have b(x, y) = a(y, x) = hT (y), xi = hx, T (y)i for all (x, y) ∈ X × X.

Corollary 1.3.2. Let X be a Hilbert space. If T is a bounded linear operator on X,

then

kT k = sup |hx, T (y)i| = sup |hT (x), yi|.

kxk=kyk=1

kxk=kyk=1

Proof. By Theorem 1.3.1, for every bounded linear operator on X, there is a bounded bilinear

form a such that a(x, y) = hT (x), yi and kak = kT k. Then,

kak =

sup

kxk=kyk=1

From this, we conclude that kT k =

|a(x, y)| =

sup

sup

kxk=kyk=1

hT (x), yi.

t|hT (x), yi|.

kxk=kyk=1

Definition 1.3.4. Let X be a Hilbert space and a(·, ·) : X × X → K be a form. Then the

operator F : X → K is called a quadratic form associated with a(·, ·) if F (x) = a(x, x) for

all x ∈ X.

A quadratic form F is called real if F (x) is real for all x ∈ X.

Qamrul Hasan Ansari

Remark 1.3.4.

Advanced Functional Analysis

Page 38

(a) We immediately observe that F (αx) = |α|2F (x) and |F (x)| ≤ kak kxk.

(b) The norm of F is defined as

kF k = sup

x6=0

|F (x)|

= sup |F (x)|.

kxk2

kxk=1

Remark 1.3.5. If a(·, ·) is any fixed sesquilinear form and F (x) is an associated quadratic

form on a Hilbert space X. Then

(a)

1

2

[a(x, y) + a(y, x)] = F

x+y

2

−F

x−y

2

;

(b) a(x, y) = 14 [F (x + y) − F (x − y) + iF (x + iy) − iF (x − iy)].

Varification. By using linearity of the bilinear form a, we have

F (x + y) = a(x + y, x + y) = a(x, x) + a(y, x) + a(x, y) + a(y, y)

and

F (x − y) = a(x − y, x − y) = a(x, x) − a(y, x) − a(x, y) + a(y, y).

By subtracting the second of the above equation from the first, we get

F (x + y) − F (x − y) = 2a(x, y) + 2a(y, x).

(1.30)

Replacing y by iy in (1.30), we obtain

F (x + iy) − F (x − iy) = 2a(x, iy) + 2a(iy, x),

or

F (x + iy) − F (x − iy) = 2ia(x, y) + 2ia(y, x).

(1.31)

Multiplying (1.31) by i and adding it to (1.30), we get the result.

Lemma 1.3.1. A bilinear form a(·, ·) : X × X → K is symmetric if and only if the

associated quadratic functional F (x) is real.

Proof. If a(x, y) is symmetric, then we have

F (x) = a(x, x)

= a(x, x)

= F (x).

This implies that F (x) is real.

Conversely, let F (x) be real, then by Remark 1.3.5 (d) and in view of the relation

F (x) = F (−x) = F (ix)

Qamrul Hasan Ansari

and

Advanced Functional Analysis

Page 39

F (x) = a(x, x), F (−x) = a(x, x) = a(−x, −x),

we obtain,

F (ix) = a(ix, ix) = iia(x, x) = a(x, x) ,

1

[F (x + y) − F (y − x) + iF (y + ix) − iF (y − ix)]

4

1

[F (x + y) − F (x − y) + iF (x − iy) − iF (x + iy)]

=

4

1

=

[F (x + y) − F (x − y) + iF (x + iy) − iF (x − iy)]

4

= a(x, y).

a(y, x) =

Hence, a(·, ·) is symmetric.

Lemma 1.3.2. A bilinear form a(·, ·) : X × X → K is bounded if and only if the

associated quadratic form F is bounded. If a(·, ·) is bounded, then kF k ≤ kak ≤ 2kF k.

Proof. Suppose that a(·, ·) is bounded. Then we have

sup |F (x)| = sup |a(x, x)| ≤

kxk=1

kxk=1

sup

kxk=kyk=1

|a(x, y)| = kak,

and, therefore, F is bounded and kF k ≤ kak.

On the other hand, suppose F is bounded. From Remark 1.3.5 (d) and the parallelogram

law, we get

1

kF k(kx + yk2 + kx − yk2 + kx + iyk2 + kx − iyk2)

4

1

kF k2 kxk2 + kyk2 + kxk2 + kyk2

=

4

= kF k kxk2 + kyk2 ,

|a(x, y)| ≤

or

sup

kxk=kyk=1

|a(x, y)| ≤ 2kF k.

Thus, a(·, ·) is bounded and kak ≤ 2kF k.

Theorem 1.3.2. Let X be a Hilbert space and T : X → X be a bounded linear operator.

Then the following statements are equivalent:

(a) T is self-adjoint.

(b) The bilinear form a(·, ·) on X defined by a(x, y) = hT (x), yi is symmetric.

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 40

Proof. (a) ⇒ (b): F (x) = hT (x), xi = hx, T (x)i = hT (x), xi = F (x). In view of Lemma

1.3.1, we obtain the result.

(b) ⇒ (a): hT (x), yi = a(x, y) = a(y, x) = hT (y), xi = hx, T (y)i. This shows that T ∗ ≡ T

that T is self-adjoint.

Theorem 1.3.3. Let X be a Hilbert space. If a bilinear form a(·, ·) : X × X → K is

bounded and symmetric, then kak = kF k, where F is the associated quadratic functional.

The following theorem, known as the Lax-Milgram lemma proved by PD Lax and AN Milgram in 1954, has important applications in different fields.

Theorem 1.3.4 (Lax-Milgram Lemma). Let X be a Hilbert space, a(·, ·) : X × X → R

be a coercive bounded bilinear form, and f : X → R be a bounded linear functional. Then

there exists a unique element x ∈ X such that

a(x, y) = f (y),

for all y ∈ X.

(1.32)

Proof. Since a(·, ·) is bounded, there exists a constant M > 0 such that

|a(x, y)| ≤ Mkxk kyk.

(1.33)

By Theorem 1.3.1, there exists a bounded linear operator T : X → X such that

a(x, y) = hT (x), yi,

for all (x, y) ∈ X × X.

By Riesz representation theorem4 , there exists a continuous linear functional f : X → R

such that equation a(x, y) = f (y) can be rewritten as, for all λ > 0,

hλT (x), yi = λhf, yi,

(1.34)

or

This implies that

hλT (x) − λf, yi = 0,

for all y ∈ X.

λT (x) = λf.

(1.35)

We will show that (1.35) has a unique solution by showing that for appropriate values of

parameter ρ > 0, the affine mapping for y ∈ X, y 7→ y − ρ(λT (y) − λf ) ∈ X is a contraction

mapping. For this, we observe that

ky − ρλT (y)k2 = hy − ρλT (y), y − ρλT (y)i

= kyk2 − 2ρhλT (y), yi + ρ2 kλT (y)k2

≤ kyk2 − 2ραkyk2 + ρ2 M 2 kyk2,

4

(by applying inner product axioms)

Riesz Representation Theorem. If f is a bounded linear functional on a Hilbert space X, then there

exists a unique vector y ∈ X such that f (x) = hx, yi for all x ∈ X and kf k = kyk

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 41

as

a(y, y) = hλT (y), yi ≥ αkyk2

(by the coercivity),

(1.36)

and

kλT (y)k ≤ Mkyk (by boundedness of T ).

Therefore,

ky − ρλT (y)k2 ≤ (1 − 2ρα + ρ2 M 2 )kyk2 ,

(1.37)

ky − ρλT (y)k ≤ (1 − 2ρα + ρ2 M 2 )1/2 kyk.

(1.38)

or

Let S(y) = y − ρ (λT (y) − λf ). Then

kS(y) − S(z)k = k(y − ρ(λT (y) − λf (u))) − (z − ρ(λT (z) − λf (u)))k

= k(y − z) − ρ(λT (y − z))k

≤ (1 − 2ρα + ρ2 M 2 )1/2 ky − zk (by (1.38).

(1.39)

This implies that S is a contraction mapping if 0 < 1−2ρα+ρ2 M 2 < 1 which is equivalent to

the condition that ρ ∈ (0, 2α/M 2). Hence, by the Banach contraction fixed point theorem,

S has a unique fixed point which is the unique solution.

Remark 1.3.6 (Abstract Variational Problem). Find an element x such that

a(x, y) = f (y),

for all y ∈ X,

where a(x, y) and f are as in Theorem 1.3.4.

This problem is known as abstract variational problem. In view of the Lax-Milgram lemma,

it has a unique solution.

2

Spectral Theory of Continuous and Compact

Linear Operators

Let X and Y be linear spaces and T : X → Y be a linear operator. Recall that the range

R(T ) and null space N (T ) of T are defined, respectively, as

R(T ) = {T (x) : x ∈ X} and N (T ) = {x ∈ X : T (x) = 0}.

The dimension of R(T ) is called the rank of T and the dimension of N (T ) is called the

nullity of T .

It can be easily seen that a linear operator T : X → Y is one-one if and only if N (T ) = {0}.

Recall that

c =

=

c0 =

=

c00

space of convergent sequences of real or complex numbers

{{xn } ⊆ K : {xn } is convergent}

space of convergent sequences of real or complex numbers that converge to zero

{{xn } ⊆ K : xn → 0 as n → ∞}

∞

[

{{x1 , x2 , . . .} ⊆ K : xj = 0 for j ≥ k}

=

k=1

ℓ∞ = {{xn } ⊆ K : sup |xn | < ∞}

n∈N

Clearly, c00 ⊆ c0 ⊆ c ⊆ ℓ∞ .

42

Qamrul Hasan Ansari

2.1

Advanced Functional Analysis

Page 43

Compact Linear Operators on Normed Spaces

Recall that the set {T (x) : kxk ≤ 1} is closed and bounded if T : X → Y is a bounded linear

operator from a normed space X to another normed space Y . However, if T : X → Y is

bounded linear operator of finite rank, then the set {T (x) : kxk ≤ 1} is compact as every

closed and bounded subset of a finite dimensional normed space is compact. But this is

not true if the rank of T (dimension of R(T ) is called rank of T ) is infinity. For example,

consider the identity operator I : X → X on an infinite dimensional normed space X, then

the above set reduces to the closed unit ball {x ∈ X : kxk ≤ 1} which is not compact.

Let T : X → Y be a bounded linear operator from a normed space X to another normed

space Y . Then for any r > 0, we have

{T (x) : kxk ≤ r} is compact ⇔ {T (x) : kxk ≤ 1} is compact

{T (x) : kxk < r} is compact ⇔ {T (x) : kxk < 1} is compact

Definition 2.1.1 (Compact linear operator). Let X and Y be normed spaces. A linear

operator T : X → Y is said be compact or completely continuous if the image T (M) of every

bounded subset M of X is relatively compact, that is, T (M) is compact for every bounded

subset M of X.

Lemma 2.1.1. Let X and Y be normed spaces.

(a) Every compact linear operator T : X → Y is bounded, and hence continuous.

(b) If dimX = ∞, then the identity operator I : X → X (which is always continuous)

is not compact.

Proof. (a) Since the unit space S = {x ∈ X : kxk = 1} is bounded and T is a compact linear

operator, T (S) is compact, and hence is bounded1 . Therefore,

sup kT (x)k < ∞.

kxk=1

Hence T is bounded and so it is continuous.

(b) Note that the closed unit ball B = {x ∈ X : kxk ≤ 1} is bounded. If dimX = ∞, then

B cannot be compact2 . Therefore, I(B) = B = B is not relatively compact.

1

2

Every compact subset of a normed space is closed and bounded

The normed space X is finite dimensional if and only if the closed unit ball is compact

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 44

Exercise 2.1.1. Let X and Y be normed spaces and T : X → Y be a linear operator. Then

prove that the following statements are equivalent.

(a) T is a compact operator.

(b) {T (x) : kxk < 1} is compact in Y .

(c) {T (x) : kxk ≤ 1} is compact in Y .

Proof. Clearly, (a) implies (b) and (c). Assume that (c) holds, that is, {T (x) : kxk ≤ 1}

is compact in Y . Let M be a bounded subset of X. Then, there exists r > 0 such that

M ⊆ {x ∈ X : kxk ≤ r}. Since

T (M) ⊆ {T (x) ∈ Y : x ∈ X, kxk < r} ⊆ {T (x) ∈ Y : x ∈ X, kxk ≤ r},

and the fact that a closed subset of a compact set is compact, it follows that (c) implies (b)

and (a), and (b) implies (a).

Theorem 2.1.1 (Compactness criterion). Let X and Y be normed spaces and T : X →

Y be a linear operator. Then T is compact if and only if it maps every bounded sequence

{xn } in X onto a sequence {T (xn )} in Y which has a convergent subsequence.

Proof. If T is compact and {xn } is bounded. Then we can assume that kxn k ≤ c for

every n ∈ N and some constant c > 0. Let M = {x ∈ X : kxk ≤ c}. Then {T (xn )} is a

sequence in the closure of {T (xn )} in Y which is compact, and hence it contains a convergent

subsequence.

Conversely, assume that every bounded sequence {xn } contains a subsequence {xnk } such

that {T (xnk )} converges in Y . Let B be a bounded subset of X. To show that T (B) is

compact, it is enough to prove that every sequence in it has a convergent subsequence.

Suppose that {yn } be any sequence in T (B). Then yn = T (xn ) for some xn ∈ B and {xn } is

bounded since B is bounded. By assumption, {T (xn )} contains a convergent subsequence.

Hence T (B) is compact3 because {yn } in T (B) was arbitrary. It shows that T is compact.

Remark 2.1.1. Sum T1 + T2 of two compact linear operators T1 , T2 : X → Y is compact.

Also, for all α scalar, αT1 is compact. Therefore the set of compact linear operators, denoted

by K(X, Y ) from a normed space X to another normed space Y forms a vector space.

Exercise 2.1.2. Let X and Y be normed spaces. Prove that K(X, Y ) is a subspace of

B(X, Y ) the space of all bounded linear operators from X to Y .

Exercise 2.1.3. Let T : X → X be a compact linear operator and S : X → X be a bounded

linear operator on a normed space X. Then prove that T S and ST are compact.

3

A set is compact if every sequence has a convergent subsequence

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 45

Proof. Let B be any bounded subset of X. Since S is bounded, S(B) is a bounded set

and T (S(B)) = T S(B) is relatively compact because T is compact. Hence T S is a linear

compact operator.

To prove ST is also compact, let {xn } be any bounded sequence in S. Then {T (xn )} has

convergent subsequence {T (xnk )} by Theorem 2.1.1 and {ST (xnk } is convergent. Hence ST

is compact again by Theorem 2.1.1.

Example 2.1.1. Let 1 ≤ p ≤ ∞ and X = ℓp . Let T : X → X be the right shift operator on

X defined by

0,

if i = 1,

(T (x)(i)) :=

x(i − 1),

if i > 1.

Since

T (en ) = en+1 ,

ken − em k =

21/p ,

1,

if 1 ≤ p < ∞,

if = ∞,

for all n, m ∈ N, n 6= m, it follows that, corresponding to the bounded sequence {en },

{A(en )} does not have a convergent subsequence. Hence, by Theorem 2.1.1, the operator T

is not compact.

Exercise 2.1.4. Prove that the left shift operator on ℓp space is not compact for any p with

1 ≤ p ≤ ∞.

Definition 2.1.2. An operator T ∈ B(X, Y ) with dimT (X) < ∞ is called an operator of

finite rank.

Theorem 2.1.2 (Finite dimensional domain or range). Let X and Y be normed spaces

and T : X → Y be a linear operator.

(a) If T is bounded and dimT (X) < ∞, then the operator T is compact. That is, every

bounded linear operator of finite rank is compact.

(b) If dim(X) < ∞, then the operator T is compact.

Proof. (a) Let {xn } be any bounded sequence in X. Then the inequality kT (xn )k ≤ kT k kxn k

shows that the sequence {T (xn )} is bounded. Hence {T (xn )} is relatively compact4 since

dimT (X) < ∞. It follows that {T (xn )} has a convergent subsequence. Since {xn } was an

arbitrary bounded sequence in X, the operator T is compact by Theorem 2.1.1.

(b) It follows from (a) by noting that dim(X) < ∞ implies the boundedness of T 5 .

Exercise 2.1.5. Prove that the identity operator on a normed space is compact if and only

if the space is of finite dimension.

4

5

In a finite dimensional space, a set is compact if and only if it is closed and bounded

Every linear operator is bounded on a finite dimensional normed space X

Qamrul Hasan Ansari

Advanced Functional Analysis

Page 46

Theorem 2.1.3 (Sequence of compact linear operators). Let {Tn } be a sequence of

compact linear operators from a normed space X to a Banach space Y . If {Tn } is

uniformly operator convergent to an operator T (that is, kTn − T k → 0), then the limit

operator T is compact.

Proof. Let {Tn } be a sequence in K(X, Y ) such that kTn − T k → 0 as n → ∞. In order to

prove that T ∈ K(X, Y ), it is enough to show that for any bounded sequence {xn } in X, the

image sequence {T (xn )} has a convergent subsequence, and then apply Theorem 2.1.1.

Let {xn } be a bounded sequence in X, and ε > 0 be given. Since {Tn } is a sequence in

K(X, Y ), there exists N ∈ N such that

kTn − T k < ε,

for all n ≥ N.