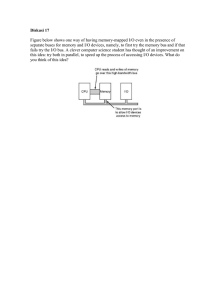

INTERCONNECTION NETWORKS

Networking provides a method for fast communication between the multiple processors of a computer and between multiple computers connected to a network. The idea behind interconnection networks is that when a computing task involving large amounts of data cannot be handled adequately by a single processor, the task is broken up into parallel tasks that are performed at the same time by different processors, so the processing time is greatly reduced. Efficient interconnection networks are therefore critical for high-speed data transfer between the different elements in parallel processing.

Interconnection networks are high-speed computer networks; multistage interconnection networks (MINs) are one widely used class. They are the connections between nodes, where each node can be a single processor, a group of processors, or a memory module. Interconnection networks can also be used internally to connect processors, memory modules, I/O devices, etc.

INTERCONNECTION NETWORK TOPOLOGY

Interconnection network topology is the layout and design of the connections and switches that constitute the interconnections among the different entities associated with processing tasks.

TYPES:

1. STATIC NETWORKS

These networks are formed of point-to-point direct connections. There are no switching elements, and the connections between processors, or between processor and memory nodes, are fixed (hard-wired) and cannot easily be changed or reconfigured. This is also called a direct connection because the processor and the memory modules are connected directly, without any other connecting elements in between. Some examples of the structures that a static network can have are pipeline, mesh, ring, star, and hypercube.

2. DYNAMIC NETWORKS

Dynamic interconnection networks are connections between processing nodes and memory nodes that are usually made through switching element nodes. This type of connection is also called an indirect connection because the connection may pass through a switch rather than going directly from processor to processor, or from memory to processor. Dynamic interconnection networks are scalable (can be expanded) because the connections can be reconfigured before or even during the execution of a parallel program. Examples of dynamic interconnection networks are bus, crossbar, and multistage networks.

What are the types of interconnection in computer architecture?
- Time-shared common bus
- Multiport memory
- Crossbar switch
- Multistage switching network
- Hypercube system

1. Time-shared / Common Bus (Interconnection structure in Multiprocessor System):

In a multiprocessor system, the time-shared bus interconnection provides a common communication path connecting all the functional units, such as processors, I/O processors, and memory units. The figure below shows multiple processors with a common communication path (single bus).

Figure: Single-Bus Multiprocessor Organization

To communicate with any functional unit, a processor needs the bus to transfer the data. The processor must first check the status of the bus to see whether it is available. If the bus is being used by some other functional unit, its status is busy; otherwise it is free. A processor can use the bus only when the bus is free. The sending processor puts the address of the destination on the bus, and the destination unit recognizes it. To communicate with a functional unit, a command is issued to tell that unit what work is to be done. The other processors at that time are either busy with internal operations or idle, waiting to get the bus. A bus controller resolves conflicts, if any.
(The bus controller can set the priority of the different functional units.)

This single-bus multiprocessor organization is simple and is the easiest to reconfigure. The interconnection structure contains only passive elements; the bus interfaces of the sender and receiver units control the transfer operation. To decide access to the common bus without conflicts, methods such as static or fixed priorities, first-in-first-out (FIFO) queues, and daisy chains can be used.

Advantages:
- Inexpensive, as no extra hardware such as a switch is required.
- Simple and easy to configure, as the functional units are directly connected to the bus.

Disadvantages:
- The major drawback of this configuration is that if a malfunction occurs in any of the bus interface circuits, the complete system fails.
- Decreased throughput: at any one time, only one processor can communicate with any other functional unit.
- Increased contention: as the number of processors and memory units increases, bus contention (two or more devices trying to send data over the bus at the same time) increases.

To address these disadvantages, two uni-directional buses can be used:

Figure: Multiprocessor System with unidirectional buses

Both buses are required in a single transfer operation. Here, the system complexity is increased and the reliability is decreased. The solution is to use multiple bi-directional buses.

Multiple bi-directional buses:

The system contains several buses, each of which is bi-directional. This permits as many simultaneous transfers as there are buses available. However, the complexity of the system is again increased.

Figure: Multiple Bi-Directional Multiprocessor System

Apart from the organization, many factors affect the performance of a bus, including:
- Number of active devices on the bus
- Data width
- Error detection method
- Synchronization of data transfer

Advantages of multiple bi-directional buses:
- Lowest hardware cost, as no extra device such as a switch is needed.
- Modifying the hardware system configuration is easy.
- Less complex than other interconnection schemes, as there are only 2 buses and all the components are connected via those buses.

Disadvantages of multiple bi-directional buses:
- System expansion degrades performance: as the number of functional units increases, more communication is required, but only 1 transfer can take place on each bus at a time.
- The overall system capacity limits the transfer rate, and if the bus fails, the whole system fails.
- Suitable for small systems only.

2. Multiport Memory:

A multiport memory system employs separate buses between each memory module and each CPU. A processor bus comprises the address, data, and control lines necessary to communicate with memory. Each memory module is connected to each processor bus, so each module must have internal control logic to determine which port has access to the memory at any given time. In a multiport memory system, the control, switching, and priority arbitration logic, which in a crossbar system is distributed throughout the switch matrix, is located at the interfaces to the memory modules.

In a system with four CPUs, each memory module has four ports, and each port accommodates one of the buses. Memory access conflicts are resolved by assigning fixed priorities to each memory port. The priority for memory access associated with each processor is established by the physical port position that its bus occupies in each module. Therefore, CPU 1 has priority over CPU 2, CPU 2 has priority over CPU 3, and CPU 4 has the lowest priority.

Advantage: A high transfer rate can be achieved because of the multiple paths.

Disadvantage: It requires expensive memory control logic and a large number of cables and connectors. It is only suitable for systems with a small number of processors.

3. Crossbar Switch

If the number of buses in a common-bus system is increased, a point is reached at which there is a separate path available for each memory module.
The crossbar switch (for multiprocessors) provides a separate path for each module. A crossbar switch system consists of a number of crosspoints placed at the intersections between the processor buses and the memory module paths. In the figure, the small square at each crosspoint represents a switch that determines the path from a processor to a memory module. Each switch point has control logic to set up the transfer path between a processor and a memory module. It examines the address placed on the bus to determine whether its particular module is being addressed. In addition, it resolves multiple requests for access to the same memory module on a predetermined priority basis.

The functional design of a crossbar switch connected to one memory module is shown in the figure. The circuit contains multiplexers that select the data, address, and control lines from one CPU for communication with the memory module. Arbitration logic establishes priority levels to select one CPU when two or more CPUs attempt to access the same memory. The multiplexers are controlled by the binary code produced by a priority encoder within the arbitration logic.

A crossbar switch system permits simultaneous transfers from all memory modules because there is a separate path associated with each module. However, the hardware needed to implement the switch may become quite large and complex.

4. Multistage Switching Network:

The basic component of the multistage network is the 2×2 crossbar switch. It has 2 inputs (A and B) and 2 outputs (0 and 1). Control inputs CA and CB, one associated with each input, establish the connection between the input and output terminals.

Figure: 2×2 Crossbar Switch

An input is connected to output 0 if its control input is 0, and to output 1 if its control input is 1. The switch can arbitrate between conflicting requests: if both A and B request the same output terminal, only one of them is connected and the other is blocked (rejected).
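The behaviour of the 2×2 switch described above can be sketched in a few lines of Python. This is a minimal illustration rather than a hardware design: the function name `route_2x2` is hypothetical, and the rule that input A wins a conflict is an assumption, since the text only says that one request is connected and the other is blocked.

```python
from typing import Dict, Optional


def route_2x2(control_a: Optional[int], control_b: Optional[int]) -> Dict[str, Optional[int]]:
    """Return the output terminal (0 or 1) granted to inputs A and B.

    A control bit of 0 requests output 0 and a control bit of 1 requests
    output 1. None means the input has no request. A granted value of
    None means the input is idle or blocked.
    """
    for name, bit in (("A", control_a), ("B", control_b)):
        if bit not in (None, 0, 1):
            raise ValueError(f"control input for {name} must be 0, 1, or None")

    granted = {"A": control_a, "B": control_b}
    # Conflict: both inputs request the same output terminal.
    # Assumption (not stated in the text): A has priority, so B is blocked.
    if control_a is not None and control_a == control_b:
        granted["B"] = None
    return granted


print(route_2x2(0, 1))  # no conflict: A -> output 0, B -> output 1
print(route_2x2(1, 1))  # conflict: A -> output 1, B is blocked
```

A real switch could equally give priority to B or alternate between the two inputs; only the fact that exactly one request succeeds comes from the description above.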
Using 2×2 switches, we can construct a multistage network to control the communication between a number of sources and destinations. Arranging the switches in a binary tree makes it possible to connect an input to one of 8 possible destinations.

Figure: 1-to-8 way switch using 2×2 switches

In the above diagram, PA and PB are 2 processors, connected through the switches to 8 memory modules labeled in binary from 000 (0) to 111 (7). There are three levels of switches between a source and a destination, and one bit of the 3-bit destination number selects the output at each level: the 1st bit determines the switch output at the 1st level, the 2nd bit at the 2nd level, and the 3rd bit at the 3rd level.

Example: If the source is PB and the destination is memory module 011 (as in the figure), a path is formed from PB to output 0 at the 1st level, output 1 at the 2nd level, and output 1 at the 3rd level.

In a tightly coupled system, the processor usually acts as the source and the memory unit acts as the destination. In a loosely coupled system, the processing units act as both source and destination.

Many patterns can be built from 2×2 switches, such as the Omega network, the Butterfly network, etc.

NOTE: The interconnection structure can determine the overall system performance in a multiprocessor environment. The multistage switching network was introduced to overcome the disadvantage of the common bus system (only 1 path is available) and to reduce the complexity of other interconnection structures (a crossbar has a complexity of O(n^2)). It uses smaller switches, i.e., 2×2 switches, to reduce the complexity. Routing algorithms can be used to set the switches. Its complexity and cost are lower than those of the crossbar interconnection network.

5. Hypercube Interconnection

The hypercube (or binary n-cube multiprocessor) structure represents a loosely coupled system made up of N = 2^n processors interconnected in an n-dimensional binary cube. Each processor forms a node of the cube. Although it is customary to refer to each node as containing a processor, in effect each node contains not only a CPU but also local memory and an I/O interface. Each processor has direct communication paths to n other neighbor processors (out of 2^n nodes in total). These paths correspond to the edges of the cube. There are 2^n distinct n-bit binary addresses, and each processor address differs from that of each of its n neighbors in exactly one bit position.

Figure: Hypercube structure for n = 1, 2 and 3

A one-cube structure has n = 1 and 2^n = 2. It has two processors interconnected by a single path. A two-cube structure has n = 2 and 2^n = 4. It has four nodes interconnected as a square. A three-cube structure has n = 3 and 2^n = 8. It has eight nodes interconnected as a cube. In general, an n-cube structure has 2^n nodes with a processor residing in each node.

Each node is assigned a binary address in such a manner that the addresses of two neighbors differ in exactly one bit position. For example, in a three-cube structure the three neighbors of the node with address 100 are 000, 110, and 101. Each of these binary numbers differs from address 100 in one bit value.

Routing a message through an n-cube structure may take from one to n links from a source node to a destination node. Example: In a three-cube structure, node 000 can reach node 011 over two links (from 000 to 010 to 011, or from 000 to 001 to 011). It must cross at least three links to communicate with node 111.

A routing procedure can be designed by computing the exclusive-OR of the source node address with the destination node address. The resulting binary value has 1 bits in the positions corresponding to the axes on which the two nodes differ. The message is then transmitted along any one of these axes. For example, in a three-cube structure, a message at node 010 going to node 001 produces an exclusive-OR of the two addresses equal to 011.
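The exclusive-OR routing procedure can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the function names (`hypercube_route`, `neighbors`, `as_bits`) are hypothetical, and the differing axes are corrected from the leftmost address bit to the rightmost, which is only one of the valid orders, since the message may be sent along any axis on which the addresses differ.

```python
from typing import List


def as_bits(node: int, n: int) -> str:
    """Format a node address as an n-bit binary string."""
    return format(node, f"0{n}b")


def neighbors(node: int, n: int) -> List[int]:
    """Return the n neighbors of a node: flip one address bit at a time."""
    return [node ^ (1 << bit) for bit in range(n - 1, -1, -1)]


def hypercube_route(source: int, destination: int, n: int) -> List[int]:
    """Return the list of nodes visited from source to destination."""
    if not (0 <= source < 2 ** n and 0 <= destination < 2 ** n):
        raise ValueError("addresses must fit in n bits")

    diff = source ^ destination  # 1 bits mark the axes on which the nodes differ
    path = [source]
    current = source
    # Assumption: treat the leftmost address bit as the first axis and
    # correct the differing axes in that order.
    for bit in range(n - 1, -1, -1):
        mask = 1 << bit
        if diff & mask:
            current ^= mask  # move one link along this axis
            path.append(current)
    return path


print([as_bits(x, 3) for x in neighbors(0b100, 3)])
# ['000', '110', '101']
print([as_bits(x, 3) for x in hypercube_route(0b010, 0b001, 3)])
# ['010', '000', '001']
```

The number of links crossed equals the number of 1 bits in the exclusive-OR, so a route in an n-cube never needs more than n links.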
The message can be transmitted along the second axis to node 000 and then along the third axis to node 001.

CLOUD COMPUTING:

Cloud computing architecture is the combination of components required for a cloud computing service. It consists of several components, such as a front-end platform, a back-end platform or servers, a network or Internet service, and a cloud-based delivery service.

The front end is the client part of a cloud computing system. It comprises the interfaces and applications that are required to access the cloud computing or cloud programming platform.

The back end refers to the cloud itself. It comprises the resources required for cloud computing services: virtual machines, servers, data storage, security mechanisms, etc. It is under the provider's control.

Cloud computing uses a distributed file system that spreads over multiple hard disks and machines. Data is never stored in only one place, and if one unit fails, another takes over automatically. User disk space is allocated on the distributed file system, and another important component is the algorithm for resource allocation. Cloud computing is a strongly distributed environment, and it depends heavily on strong algorithms.

Important Components of Cloud Computing Architecture

Here are some important components of cloud computing architecture:

1. Client Infrastructure: Client infrastructure is a front-end component that provides a GUI. It helps users to interact with the cloud.

2. Application: The application can be any software or platform that a client wants to access.

3. Service: The service component manages which type of service you can access according to the client's requirements. The three cloud computing services are:
   - Software as a Service (SaaS)
   - Platform as a Service (PaaS)
   - Infrastructure as a Service (IaaS)

4. Runtime Cloud: The runtime cloud provides the execution and runtime environment to the virtual machines.

5. Storage: Storage is another important component of cloud computing architecture. It provides a large amount of storage capacity in the cloud to store and manage data.

6. Infrastructure: It offers services at the host level, network level, and application level. Cloud infrastructure includes the hardware and software components, such as servers, storage, network devices, virtualization software, and various other storage resources, that are needed to support the cloud computing model.

7. Management: This component manages back-end components such as the application, service, runtime cloud, storage, infrastructure, and security. It also establishes coordination between them.

8. Security: Security in the back end refers to implementing different security mechanisms to secure cloud systems, resources, files, and infrastructure for the end user.

9. Internet: The Internet connection acts as the bridge or medium between the front end and the back end. It allows interaction and communication between them.

Benefits of Cloud Computing Architecture

The benefits of cloud computing architecture are as follows:
- Makes the overall cloud computing system simpler.
- Helps to enhance your data processing.
- Provides high security.
- Has better disaster recovery.
- Offers good user accessibility.
- Significantly reduces IT operating costs.

Virtualization and Cloud Computing

The main enabling technology for cloud computing is virtualization. Virtualization is the partitioning of a single physical server into multiple logical servers. Once the physical server is divided, each logical server behaves like a physical server and can run an operating system and applications independently. Many popular companies, such as VMware and Microsoft, provide virtualization services. Instead of using your PC for storage and computation, you can use their virtual servers. They are fast, cost-effective, and less time-consuming.
Network Virtualization: A method of combining the available resources in a network by splitting up the available bandwidth into channels. Each channel is independent of the others and can be assigned to a specific server or device in real time.

Storage Virtualization: The pooling of physical storage from multiple network storage devices into what appears to be a single storage device that is managed from a central console. Storage virtualization is commonly used in storage area networks (SANs).

Server Virtualization: The masking of server resources, such as processors, RAM, and the operating system, from server users. Server virtualization is intended to increase resource sharing and to reduce the burden and complexity of computation for users.